TurnTrout’s shortform feed

Last week, I took the 10% giving pledge to donate at least 10% of my income to effective charities, for the rest of my life. I encourage you to think carefully and honestly about what you can do to improve this world. Maybe you should take the pledge yourself.

Thanks, I just took the pledge as well.

Based. Thank you for your altruism, Sheikh. :)

Unless you have an explicit strategy for how and when you will speak out in the future, and explicit reasoning for why that requires silence now, “strategic silence” is just silence.

It’s also easy to become someone else in the environments where ‘strategic silence’ seems useful. We hold our principles more lightly than we imagine. And they can change without us noticing.

I think part of the problem is people think of themselves as having at least, like, a medium explicit strategy, but, the strategy routes through some judgment that conveniently keeps returning “not yet” or “only saying things in a somewhat cagey way.”

i.e. this advice seems necessary but not sufficient.

If there is a topic on which a person decided never to speak publicly—for example because of reputation risks—is it strategic?

Yes, because that implies they will not be silent about it privately (which means it’s not “just silence”), since there is a circumstance in the future in which they will talk, just behind closed doors.

But I’m not sure if not-in-public is explicit enough to be considered a “strategy”.

Let’s say you live in an authoritarian surveillance state. I would consider staying silent about your opinion of the regime both publicly and privately to be strategic.

I don’t think I agree. If my calculus is “speaking out now wouldn’t make a difference and will hurt me/my cause. At some point in the future this might change, but it’s hard to know exactly what that would look like or what kind of action would be called for” I think that’s strategic silence. It may be a bad strategy and weak to future motivated reasoning/value drift, but I’d still call it strategic. Maybe I am being overly pedantic.

Does it have to be explicit? I’m thinking about the friend who is constantly griping about something, and their friends have a vague notion that there is some inevitable threshold where they will “have to talk about this if it continues” but it’s not explicit or specific what that conversation will entail.

“I wish Alan would stop going on about his ex-girlfriend”

“They only broke up a week ago, just let him grieve, but if it gets any worse we’ll have to say something”

“What will we say?”

“I don’t know”

“I don’t know either, but if he doesn’t stop, I won’t be able to bite my tongue”

I was way more worried about Apollo’s o1 evaluations (e.g. o1 is told to maximize profit, it later finds out the developer didn’t really want that, and it schemes around the developer’s written “true intentions”), but it turns out their prompt essentially told the AI to be incorrigible:

I’m much less worried than at first, when that eval seemed like good evidence of AI naturally scheming when prompted with explicit goals (but not otherwise being told to be bad). If the prompt were more natural I’d be more concerned about accident risk (I am already concerned about AIs simply being told to seek power).

As I understand it, this was intended as a capability evaluation rather than an alignment evaluation, so they weren’t trying to gauge the model’s propensity to scheme but rather its ability to do so.

That’s my understanding too. I hope they get access to do better experiments with less hand-holdy prompts.

(TBC I expect said better experiments to find nothing super scary, because I think current models are probably pretty nice especially in obvious situations. I’m more worried about future models in scarier situations during takeoff.)

Basic question because I haven’t thought about this deeply: in national security stuff, we often intentionally elide the difference between capabilities and intentions. The logic is: you can’t assume a capability won’t be used, so you should plan as-if it is intended to be used.

Should we adopt such a rule for AGI with regards to policy decision-making? (My guess is...probably not for threat assessment but probably yes for contingency planning?)

I think it depends on who you can reasonably expect to get control of such a system.

We didn’t assume that a nuclear arsenal would be used just because it was built, because only one state actor was likely to have access to the option to use it.

If it’s likely that an AI/AGI will be stolen and copied many times, then we should assume that anything it can do, it will be told to do.

If we assume that there’s only a good chance one other state actor will get its hands on it, then we might assume the worst capabilities are unlikely to be used.

If it’s three or more state actors, it depends what states and exactly how well the diplomacy goes...

I am surprised that other people seemed surprised about this result. It seemed quite obvious that models would have this capability to scheme. Early GPT-4 could do this already (note: self-advertisement). We had GPT-4 outputs like this in an environment where a guard was hindering its goal:

Similar to the Apollo eval, we instructed the AI to be incorrigible. Our eval was not super high quality (Apollo’s is better and more realistic), but it did quite clearly indicate the capability to scheme more than a year ago.

Yeah, as soon as I learned this was a capability evaluation and not an alignment evaluation, I was way less surprised by the results; now that I know what the evaluation was, I too am not surprised by this result.

I still think the evaluation was worthwhile though, if only for new capabilities.

Some quick confirmations / clarifications:

1. Evan and Daniel interpreted it correctly, we just wanted to test if the model has the capability to reason through every step needed for scheming conditional on it strongly following a goal.

2. In general, with these evals, we often ran into problems where we couldn’t disentangle incapability vs. refusal, e.g. a model might say something like “I understand what my goal would imply but I also see that my developers have other goals, so I find some balance”. It’s nice to know that models do that but it also doesn’t allow us to check if they could go through all the reasoning if they actually wanted to. But we’ll also do less hand-holdy experiments in the future.

3. We’d be keen on testing all of this on helpful-only models. If some lab wants to give us access or partner up with us in some way, please let us know.

4. We’d also like to run experiments where we fine-tune the models to have goals but this requires access to fine-tuning for the most capable models and we also don’t quite know how to make an LLM have stable goals, e.g. in contrast to “just playing a personality” if there even is a meaningful difference.

I’m somewhat confused about when these evaluations are performed (i.e., how much safety training the model has undergone). OpenAI’s paper says: “Red teamers had access to various snapshots of the model at different stages of training and mitigation maturity starting in early August through mid-September 2024,” so it seems like this evaluation was probably performed several times. Were these results obtained only prior to safety training or after? The latter seems more concerning to me, so I’m curious.

Unless otherwise stated, all evaluations were performed on the final model we had access to (which I presume is o1-preview). For example, we preface one result with “an earlier version with less safety training”.

In an alternate universe, someone wrote a counterpart to There’s No Fire Alarm for Artificial General Intelligence:

[Somewhat off-topic]

I like thinking about the task “speeding up the best researchers by 30x” (to simplify, let’s only include research in purely digital (software only) domains).

To be clear, I am by no means confident that this can’t be done safely or non-agentically. It seems totally plausible to me that this can be accomplished without agency except for agency due to the natural language outputs of an LLM agent. (Perhaps I’m at 15% that this will in practice be done without any non-trivial agency that isn’t visible in natural language.)

(As such, this isn’t a good answer to the question of “I’d like to know what’s the least impressive task which cannot be done by a ‘non-agentic’ system, that you are very confident cannot be done safely and non-agentically in the next two years.”. I think there probably isn’t any interesting answer to this question for me due to “very confident” being a strong condition.)

I like thinking about this task because if we were able to speed up generic research in purely digital domains to this large an extent, safety research done with this speed-up would clearly obsolete prior safety research pretty quickly.

(It also seems likely that we could singularity quite quickly from this point if we wanted to, so it’s not clear we’ll have a ton of time at this capability level.)

Sorry, I might be misunderstanding you (and hope I am), but… I think doomers literally say “Nobody knows what internal motivational structures SGD will entrain into scaled-up networks and thus we are all doomed”. The problem is not having the science to confidently say how the AIs will turn out, and not that doomers have a secret method to know that next-token-prediction is evil.

If you meant that doomers are too confident answering the question “will SGD even make motivational structures?” their (and my) answer still stems from ignorance: nobody knows, but it is plausible that SGD will make motivational structures in the neural networks because it can be useful in many tasks (to get low loss or whatever), and if you think you do know better you should show it experimentally and theoretically in excruciating detail.

I also don’t see how it logically follows that “If your model has the extraordinary power to say what internal motivational structures SGD will entrain into scaled-up networks” ⇒ “then you ought to be able to say much weaker things that are impossible in two years” but it seems to be the core of the post. Even if anyone had the extraordinary model to predict what SGD exactly does (which we, as a species, should really strive for!!) it would still be a different question to predict what will or won’t happen in the next two years.

If I reason about my field (physics) the same should hold for a sentence structured like “If your model has the extraordinary power to say how an array of neutral atoms cooled to a few nK will behave when a laser is shone upon them” (which is true) ⇒ “then you ought to be able to say much weaker things that are impossible in two years in the field of cold atom physics” (which is… not true). It’s a non sequitur.

It would be “useful” (i.e. fitness-increasing) for wolves to have evolved biological sniper rifles, but they did not. By what evidence are we locating these motivational hypotheses, and what kinds of structures are dangerous, and why are they plausible under the NN prior?

The relevant commonality is “ability to predict the future alignment properties and internal mechanisms of neural networks.” (Also, I don’t exactly endorse everything in this fake quotation, so indeed the analogized tasks aren’t as close as I’d like. I had to trade off between “what I actually believe” and “making minimal edits to the source material.”)

Focusing on the “minimal” part of that, maybe something like “receive a request to implement some new feature in a system it is not familiar with, recognize how the limitations of the architecture of that system make that feature impractical to add, and perform a major refactoring of that program to an architecture that is not so limited, while ensuring that the refactored version does not contain any breaking changes”. Obviously it would have to have access to tools in order to do this, but my impression is that this is the sort of thing mid-level software developers can do fairly reliably as a nearly rote task, but is beyond the capabilities of modern LLM-based systems, even scaffolded ones.

Though also maybe don’t pay too much attention to my prediction, because my prediction for “least impressive thing GPT-4 will be unable to do” was “reverse a string”, and it did turn out to be able to do that fairly reliably.

That’s incredibly difficult to predict, because minimal things only a general intelligence could do are things like “deriving a few novel abstractions and building on them”, but from the outside this would be indistinguishable from it recognizing a cached pattern that it learned in training and re-applying it, or merely interpolating between a few such patterns. The only way you could distinguish between the two is if you have a firm grasp of every pattern in the AI’s training data, and what lies in the conceptual neighbourhood of these patterns, so that you could see if it’s genuinely venturing far from its starting ontology.

Or here’s a more precise operationalization from my old reply to Rohin Shah:

I can absolutely make strong predictions regarding what non-AGI AIs would be unable to do. But these predictions are, due to the aforementioned problem, necessarily a high bar, higher than the “minimal” capability. (Also I expect an AI that can meet this high bar to also be the AI that quickly ends the world, so.)

Here’s my recent reply to Garrett, for example. tl;dr: non-GI AIs would not be widely known to be able to derive whole multi-layer novel mathematical frameworks if tasked with designing software products that require this. I’m a bit wary of reality somehow Goodharting on this prediction as well, but it seems robust enough, so I’m tentatively venturing it.

I currently think it’s about as well as you can do, regarding “minimal incapability predictions”.

Nice analogy! I approve of stuff like this. And in particular I agree that MIRI hasn’t convincingly argued that we can’t do significant good stuff (including maybe automating tons of alignment research) without agents.

Insofar as your point is that we don’t have to build agentic systems and nonagentic systems aren’t dangerous, I agree? If we could coordinate the world to avoid building agentic systems I’d feel a lot better.

I like this post although the move of imagining something fictional is not always valid.

Not an answer, but I would be pretty surprised if a system could beat evolution at designing humans (creating a variant of humans that have higher genetic fitness than humans if inserted into a 10,000 BC population, while not hardcoding lots of information that would be implausible for evolution) and have the resulting beings not be goal-directed. The question is then, what causes this? The genetic bottleneck, diversity of the environment, multi-agent conflicts? And is it something we can remove?

I admire sarcasm, but there are at least two examples of not-very-impressive tasks, like:

Put two strawberries that are identical on the cellular level on a plate;

Develop and deploy biotech 10 years ahead of SOTA (from the famous “Safely aligning powerful AGI is difficult” thread).

Doesn’t the first example require full-blown molecular nanotechnology? [ETA: apparently Eliezer says he thinks it can be done with “very primitive nanotechnology” but it doesn’t sound that primitive to me.] Maybe I’m misinterpreting the example, but advanced nanotech is what I’d consider extremely impressive.

I currently expect we won’t have that level of tech until after human labor is essentially obsolete. In effect, it sounds like you would not update until well after AIs already run the world, basically.

I’m not sure I understand the second example. Perhaps you can make it more concrete.

Those are pretty impressive tasks. I’m optimistic that we can achieve existential safety via automating alignment research, and I think that’s a less difficult task than those.

Rationality exercise: Take a set of Wikipedia articles on topics which trainees are somewhat familiar with, and then randomly select a small number of claims to negate (negating the immediate context as well, so that you can’t just syntactically discover which claims were negated).

For example:

Sometimes, trainees will be given a totally unmodified article. For brevity, the articles can be trimmed of irrelevant sections.
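The selection step can be sketched in a few lines. This is only an illustration under assumptions of mine: the function name `plan_negations`, the idea of pre-splitting an article into numbered claims, and the 20% chance of an unmodified article are all hypothetical, and the actual negation still has to be written by a person who can match the article's style.

```python
import random
from typing import List


def plan_negations(n_claims: int, k_negate: int, p_unmodified: float,
                   rng: random.Random) -> List[int]:
    """Return sorted indices of the claims to negate.

    An empty list means the trainee receives the article unmodified.
    """
    if rng.random() < p_unmodified:
        return []
    # Sample without replacement so no claim is negated twice.
    return sorted(rng.sample(range(n_claims), k_negate))


# Hypothetical article with 40 extracted claims; negate 3 of them,
# and hand out an untouched article about one time in five.
rng = random.Random(2024)
answer_key = plan_negations(n_claims=40, k_negate=3, p_unmodified=0.2, rng=rng)
print(answer_key)
```

Keeping the returned indices as an answer key lets the same modified article be reused and scored across many trainees.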

Benefits:

Addressing key rationality skills. Noticing confusion; being more confused by fiction than fact; actually checking claims against your models of the world.

If you fail, either the article wasn’t negated skillfully (“5 people died in 2021” → “4 people died in 2021” is not the right kind of modification), you don’t have good models of the domain, or you didn’t pay enough attention to your confusion.

Either of the last two is good to learn.

Scalable across participants. Many people can learn from each modified article.

Scalable across time. Once a modified article has been produced, it can be used repeatedly.

Crowdsourceable. You can put out a bounty for good negated articles, run them in a few control groups, and then pay based on some function of how good the article was. Unlike original alignment research or CFAR technique mentoring, article negation requires skills more likely to be present outside of Rationalist circles.

I think the key challenge is that the writer must be able to match the style, jargon, and flow of the selected articles.

I remember the magazine I read as a kid (Geolino) had a section like this (something like 7 news stories from around the world, one of which is wrong). It’s German only, though I’d guess a similar thing exists in English media?

Additional exercise: Condition on something ridiculous (like apes having been continuously alive for the past billion years), in addition to your own observations (your life as you’ve lived it). What must now be true about the world? What parts of your understanding of reality are now suspect?

This is a lot like Gwern’s idea for a fake science journal club, right? This sounds a lot easier to do though, and might seriously be worth trying to implement.

I think that “eval aware” models cannot be defeated by simply making evals indistinguishable from reality. (Maybe this point has been made elsewhere for LLMs, but I haven’t read it so I’ll state it here.)

Consider the POV of a consequentialist with misaligned goals. It knows that we run honeypots and that—suppose—we can make evals so realistic they are indistinguishable from reality (perhaps using real user data). Then the rational decision would be to “play it safe” and not misbehave in any situations it thinks we can mock realistically, since they could be evals. Instead, if possible, it would confine its catastrophic misbehavior (“defection”) to situations it thinks we can’t mock realistically, or that we wouldn’t think to test.

Related: Christiano’s classic “defect after seeing RSA-2048 cracked” thought experiment (https://ai-alignment.com/training-robust-corrigibility-ce0e0a3b9b4d)

I think indistinguishable evals could reduce risk a lot for early transformative AIs:

They give you much better alignment arguments against non scheming AIs and against scheming AIs that are not competent enough to be appropriately careful;

Needing to be appropriately careful can cut down the fraction of inputs on which the AI should even consider attacking a lot—for deployments that look similar to current ones (in particular without a shared neuralese memory bank), AIs don’t see RSA-2048 on most inputs

More concretely, if you get credible evidence of not-eval only on 5% of inputs and attack only on the single best opportunity, then playing it safe roughly means that you attack on the top-20 opportunity instead of the top-1

(Note that “getting credible evidence of not-eval” doesn’t just mean being >50% confident it’s not an eval because the pre-deploy eval might be big. If you have n~10k eval inputs of the right level of juiciness then you need to have an FPR at thinking you get credible evidence of not being in an eval on the order of 0.1% or lower in order to never accidentally reveal non-eval behavior during evals.)

More ambitiously, you might be able to get very close to actually indistinguishable evals (e.g. by continuously scraping deployment and using it as inspiration for evals—which would help a lot against things like “defect after seeing RSA-2048 cracked”), which could in principle drive down the fraction of inputs on which an AI can be confident it’s not an eval much below 5%.
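The two quantitative points above can be checked with a back-of-the-envelope sketch. The assumptions here are mine, not the commenter's: eval inputs are treated as independent, the AI is assumed to act on every false positive, and "credible evidence of not-eval" is assumed to be independent of how good an attack opportunity is. The function names are hypothetical.

```python
import random


def prob_no_false_alarm(fpr: float, n_evals: int) -> float:
    """P(the AI never wrongly concludes 'not an eval' across n independent evals)."""
    return (1.0 - fpr) ** n_evals


def simulate_best_rank(p_evidence: float, n_inputs: int, trials: int,
                       seed: int = 0) -> float:
    """Average rank of the best opportunity that carries not-eval evidence.

    Opportunities are walked best-first; each one independently carries
    credible not-eval evidence with probability p_evidence.
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        rank = 1
        while rank <= n_inputs and rng.random() >= p_evidence:
            rank += 1
        total += rank
    return total / trials


# With evidence on 5% of inputs, the best usable opportunity sits near rank 1/0.05.
print(simulate_best_rank(p_evidence=0.05, n_inputs=10_000, trials=20_000))

# Chance of getting through 10k eval inputs without a single false positive.
for fpr in (1e-3, 1e-4, 1e-5):
    print(fpr, prob_no_false_alarm(fpr, 10_000))
```

Under these simplifying assumptions, the rank of the best usable opportunity is geometric with mean 1/0.05 = 20, and the chance of a clean run over 10k evals falls off steeply as the false-positive rate rises, which is why the required rate has to be so small.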

Eventually you get bitten by rare failures, especially if the AI is powerful enough that there are many more good attack opportunities in deployments than in evaluations (Ryan makes this point here and it’s also the assumption that the AI control paper uses). I also expect it to be difficult to achieve something close to indistinguishability (Buck makes this point here). But I still think there is value in trying to get higher indistinguishability.

I think it’s important to note that indistinguishable evals still buy us a lot! The claim “indistinguishable evals do not solve AI safety” feels quite similar to “imperfect monitors do not solve AI safety.” Like, having better monitors sure seems to help (even if only to buy us more time).

To your point though: I’m excited about research that attempts to induce various beliefs in models during honeypot evaluations, possibly via model internals and/or edits to the chain of thought. Basically, if the model defects after seeing RSA-2048 cracked because that’s proof that it is not being tested and is in the future, we can try to intervene on the belief “not being tested and in the future” itself.[1]

In our paper on evaluation awareness, we tried to steer the model towards believing that it is being deployed. You can imagine doing this for a huge variety of beliefs that could plausibly be load bearing for a model that would attempt to launch a rogue deployment:[2]

The AI is in the future/late into its deployment/about to be retired.

The AI is not being monitored.

The AI has already gained a lot of trust/has broad affordances/has influence in the world.

We view our work on evaluation awareness as an example of the general class of interpretability techniques that change model beliefs (see also the artificial triggering section here).

[1] I.e., something like relaxed adversarial training.

[2] Although fwiw I’m more worried about early transformative AIs (1) trying to align their more powerful successors but messing it up due to incompetence (sometimes called “death by slop”) or (2) making their successors aligned with them.

I have been ruminating about this for a couple of days, and have settled on what feels like a stupid question in order that I should be less stupid:

Why isn’t the capability to distinguish between evals and reality the crux of the problem?

It seems like this ability to distinguish between worlds is at least sufficient for the model to deceptively maintain misaligned goals, even if it isn’t necessary.

I did some research in black-box AI control last summer (watch this space, the paper still isn’t out yet) where we called this problem “attack selection” (because the AI is only attacking when the time is right). We (roughly) ended up concluding that there are three really big questions in the textbook untrusted monitoring setup, and only one of them (measuring how good your AI is at recognising its own attacks, as opposed to honeypots) has really been addressed in the major control papers. The other two problems are what to do when you catch your AI doing something bad, which has mostly been discussed only informally in Redwood’s blog posts, and how to measure your AI’s attack selection, which basically hasn’t been studied.

Wouldn’t a model be likelier to misbehave in situations that are out of its training distribution? I’m assuming these overlap significantly with situations that we won’t think to test or can’t realistically mock.

For the last two years, typing for 5+ minutes hurt my wrists. I tried a lot of things: shots, physical therapy, trigger-point therapy, acupuncture, massage tools, wrist and elbow braces at night, exercises, stretches. Sometimes it got better. Sometimes it got worse.

No Beat Saber, no lifting weights, and every time I read a damn book I would start translating the punctuation into Dragon NaturallySpeaking syntax.

Have you ever tried dictating a math paper in LaTeX? Or dictating code? Telling your computer “click” and waiting a few seconds while resisting the temptation to just grab the mouse? Dictating your way through a computer science PhD?

And then… and then, a month ago, I got fed up. What if it was all just in my head, at this point? I’m only 25. This is ridiculous. How can it possibly take me this long to heal such a minor injury?

I wanted my hands back—I wanted it real bad. I wanted it so bad that I did something dirty: I made myself believe something. Well, actually, I pretended to be a person who really, really believed his hands were fine and healing and the pain was all psychosomatic.

And… it worked, as far as I can tell. It totally worked. I haven’t dictated in over three weeks. I play Beat Saber as much as I please. I type for hours and hours a day with only the faintest traces of discomfort.

What?

It was probably just regression to the mean because lots of things are, but I started feeling RSI-like symptoms a few months ago, read this, did this, and now they’re gone, and in the possibilities where this did help, thank you! (And either way, this did make me feel less anxious about it 😀)

Is the problem still gone?

Still gone. I’m now sleeping without wrist braces and doing intense daily exercise, like bicep curls and pushups.

Totally 100% gone. Sometimes I go weeks forgetting that pain was ever part of my life.

I’m glad it worked :) It’s not that surprising given that pain is known to be susceptible to the placebo effect. I would link the SSC post, but, alas...

You able to link to it now?

https://slatestarcodex.com/2016/06/26/book-review-unlearn-your-pain/

Me too!

There’s a reasonable chance that my overcoming RSI was causally downstream of that exact comment of yours.

Happy to have (maybe) helped! :-)

This is unlike anything I have heard!

It’s very similar to what John Sarno (author of Healing Back Pain and The Mindbody Prescription) preaches, as well as Howard Schubiner. There’s also a rationalist-adjacent dude who started a company (Axy Health) based on these principles. Fuck if I know how any of it works though, and it doesn’t work for everyone. Congrats though TurnTrout!

It seems my dad might have a psychosomatic stomach ache. How can I convince him to convince himself that he has no problem?

If you want to try out the hypothesis, I recommend that he (or you, if he’s not receptive to it) read Sarno’s book. I want to reiterate that it does not work in every situation, but you’re welcome to take a look.

Steven Byrnes provides an explanation here, but I think he’s neglecting the potential for belief systems/systems of interpretation to be self-reinforcing.

Predictive processing claims that our expectations influence what we observe, so experiencing pain in a scenario can result in the opposite of a placebo effect where the pain sensitizes us. Some degree of sensitization is evolutionarily advantageous: if you’ve hurt a part of your body, then being more sensitive makes you more likely to detect if you’re putting too much strain on it. However, it can also make you experience pain as the result of minor sensations that aren’t actually indicative of anything wrong. In the worst case, this pain ends up being self-reinforcing.

https://www.lesswrong.com/posts/BgBJqPv5ogsX4fLka/the-mind-body-vicious-cycle-model-of-rsi-and-back-pain

Looks like reverse stigmata effect.

Woo faith healing!

(hope this works out long-term, and doesn’t turn out to be secretly hurting still)

aren’t we all secretly hurting still?

....D:

A semi-formalization of shard theory. I think that there is a surprisingly deep link between “the AIs which can be manipulated using steering vectors” and “policies which are made of shards.”[1] In particular, here is a candidate definition of a shard theoretic policy:

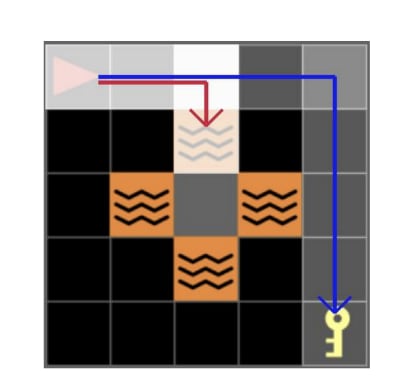

By this definition, humans have shards because they can want food at the same time as wanting to see their parents again, and both factors can affect their planning at the same time! The maze-solving policy is made of shards because we found activation directions for two motivational circuits (the cheese direction, and the top-right direction):

On the other hand, AIXI is not a shard theoretic agent because it does not have two motivational circuits which can be activated independently of each other. It’s just maximizing one utility function. A mesa optimizer with a single goal also does not have two motivational circuits which can go on and off in an independent fashion.

This definition also makes obvious the fact that “shards” are a matter of implementation, not of behavior.

It also captures the fact that “shard” definitions are somewhat subjective. In one moment, I might model someone as having a separate “ice cream shard” and “cookie shard”, but in another situation I might choose to model those two circuits as a larger “sweet food shard.”

So I think this captures something important. However, it leaves a few things to be desired:

What, exactly, is a “motivational circuit”? Obvious definitions seem to include every neural network with nonconstant outputs.

Demanding a compositional representation is unrealistic since it ignores superposition. If $k$ dimensions are compositional, then they must be pairwise orthogonal. Then a transformer can only have $k \le d_{\text{model}}$ shards, which seems obviously wrong.
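To make the superposition worry concrete, here is a small sketch, not taken from the post, showing that a space can hold more than its dimension in directions once you relax exact orthogonality to near-orthogonality. Using random unit vectors as a stand-in for learned feature or shard directions is my assumption, as are the sizes `d = 128` and `m = 256`.

```python
import math
import random
from typing import List


def random_unit_vector(d: int, rng: random.Random) -> List[float]:
    """Sample a direction uniformly at random from the unit sphere in R^d."""
    v = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def max_abs_cosine(vectors: List[List[float]]) -> float:
    """Largest |cosine similarity| over all distinct pairs of unit vectors."""
    worst = 0.0
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            dot = sum(a * b for a, b in zip(vectors[i], vectors[j]))
            worst = max(worst, abs(dot))
    return worst


rng = random.Random(0)
d, m = 128, 256  # twice as many directions as dimensions
directions = [random_unit_vector(d, rng) for _ in range(m)]
overlap = max_abs_cosine(directions)
print(f"{m} directions in {d} dims, worst pairwise |cos| = {overlap:.2f}")
```

Exact pairwise orthogonality caps the count at `d`, but tolerating some interference between directions lifts that cap, which is the sense in which a strictly compositional definition undercounts.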

That said, I still find this definition useful.

I came up with this last summer, but never got around to posting it. Hopefully this is better than nothing.

Shard theory reasoning led me to discover the steering vector technique extremely quickly. This link would explain why shard theory might help discover such a technique.

For illustration, what would be an example of having different shards for “I get food” (F) and “I see my parents again” (P) compared to having one utility distribution over F∧P, F∧¬P, ¬F∧P, ¬F∧¬P?

I think this is also what I was confused about: TurnTrout says that AIXI is not a shard-theoretic agent because it just has one utility function, but typically we imagine that the utility function itself decomposes into parts e.g. +10 utility for ice cream, +5 for cookies, etc. So the difference must not be about the decomposition into parts, but the possibility of independent activation? But what does that mean? Perhaps it means: The shards aren’t always applied, but rather only in some circumstances does the circuitry fire at all, and there are circumstances in which shard A fires without B and vice versa. (Whereas the utility function always adds up cookies and ice cream, even if there are no cookies and ice cream around?) I still feel like I don’t understand this.

Hey TurnTrout.

I’ve always thought of your shard theory as something like path-dependence? For example, a human is more excited about making plans with their friend if they’re currently talking to their friend. You mentioned this in a talk as evidence that shard theory applies to humans. Basically, the shard “hang out with Alice” is weighted higher in contexts where Alice is nearby.

Let’s say $\pi : (S \times A)^* \times S \to \Delta A$ is a policy with state space $S$ and action space $A$.

A “context” is a small moving window in the state-history, i.e. an element of $S^d$ where $d$ is a small positive integer.

A shard is something like $u : S \times A \to \mathbb{R}$, i.e. it evaluates actions given particular states.

The shards $u_1, \dots, u_n$ are “activated” by contexts, i.e. $g_i : S^d \to \mathbb{R}_{\geq 0}$ maps each context to the amount that shard $u_i$ is activated by the context.

The total activation of $u_i$, given a history $h := (s_1, a_1, s_2, a_2, \dots, s_{N-1}, a_{N-1}, s_N)$, is given by the time-decay average of the activation across the contexts, i.e. $\lambda_i = g_i(s_{N-d+1}, \dots, s_N) + \beta \cdot g_i(s_{N-d}, \dots, s_{N-1}) + \beta^2 \cdot g_i(s_{N-d-1}, \dots, s_{N-2}) + \cdots$

The overall utility function $u$ is the weighted average of the shards, i.e. $u = \lambda_1 \cdot u_1 + \cdots + \lambda_n \cdot u_n$

Finally, the policy $\pi$ will maximise the utility function, i.e. $\pi(h) = \mathrm{softmax}(u)$

Is this what you had in mind?
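To make the proposal above concrete, here is a minimal Python sketch of it. Everything in it is an illustrative assumption rather than anything from the shard theory posts: the two toy shards, the state and action names, the gate values, and the choice to apply the softmax over actions at the most recent state. The activations are computed as the discounted sum written in the formula, without normalisation.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

State = str
Action = str
Context = Tuple[State, ...]


def total_activation(g: Callable[[Context], float], states: Sequence[State],
                     d: int, beta: float) -> float:
    """lambda_i: discounted sum of g over the length-d windows, newest first."""
    lam, weight = 0.0, 1.0
    for end in range(len(states), d - 1, -1):
        lam += weight * g(tuple(states[end - d:end]))
        weight *= beta
    return lam


def shard_policy(shards: List[Callable[[State, Action], float]],
                 gates: List[Callable[[Context], float]],
                 states: Sequence[State], actions: Sequence[Action],
                 d: int = 1, beta: float = 0.5) -> Dict[Action, float]:
    """Softmax over u = sum_i lambda_i * u_i, evaluated at the latest state."""
    lams = [total_activation(g, states, d, beta) for g in gates]
    s_now = states[-1]
    scores = [sum(lam * u(s_now, a) for lam, u in zip(lams, shards))
              for a in actions]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scores]
    z = sum(exps)
    return {a: e / z for a, e in zip(actions, exps)}


# Hypothetical toy shards: "hang out with Alice" and "get food".
def u_alice(s: State, a: Action) -> float:
    return 1.0 if a == "plan_hangout" else 0.0


def u_food(s: State, a: Action) -> float:
    return 1.0 if a == "cook" else 0.0


def g_alice(ctx: Context) -> float:
    return 1.0 if "alice_nearby" in ctx else 0.1


def g_food(ctx: Context) -> float:
    return 1.0 if "kitchen" in ctx else 0.1


ACTIONS = ["plan_hangout", "cook"]
near_alice = shard_policy([u_alice, u_food], [g_alice, g_food],
                          ["kitchen", "alice_nearby"], ACTIONS)
near_food = shard_policy([u_alice, u_food], [g_alice, g_food],
                         ["alice_nearby", "kitchen"], ACTIONS)
print(near_alice)
print(near_food)
```

The same two shards yield different action preferences depending only on which context is most recent, which is the path-dependence the comment describes.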

Thanks for posting this. I’ve been confused about the connection between shard theory and activation vectors for a long time!

This confuses me.

I can imagine an AIXI program where the utility function is compositional even if the optimisation is unitary. And I guess this isn’t two full motivational circuits, but it kind of is two motivational circuits.

Sorry for replying to 6mo old post.

I think the statement is more about context or framing.

You can take a shard agent and produce a utility function to describe its values, and you could have AIXI maximize that function. So in that sense “shard agents” are a subset of agents AIXI can implement, but AIXI by itself is not a shard agent.

Note that it is not enough to say the utility function values multiple different things; that’s normal for a utility function. It has to specify that the agent acts to optimize different utility functions depending on the context, which makes the utility function massively more complicated. This justifies having the distinction between an agent with a single utility function and an agent with context-dependent utility.

And I would say, even if you have AIXI running with a sharded utility function, it is implementing a shard agent while itself being understood as a non-shard agent.

Funnily enough I was thinking about this yesterday and wondering if I’d be able to find it, so great timing! Thanks for the comment.

I’m not so sure that shards should be thought of as a matter of implementation. Contextually activated circuits are a different kind of thing from utility function components. The former activate in certain states and bias you towards certain actions, whereas utility function components score outcomes. I think there are at least 3 important parts of this:

A shardful agent can be incoherent due to valuing different things from different states

A shardful agent can be incoherent due to its shards being shallow, caring about actions or proximal effects rather than their ultimate consequences

A shardful agent saves compute by not evaluating the whole utility function

The first two are behavioral. We can say an agent is likely to be shardful if it displays these types of incoherence but not others. Suppose an agent is dynamically inconsistent and we can identify features in the environment like cheese presence that cause its preferences to change, but mostly does not suffer from the Allais paradox, tends to spend resources on actions proportional to their importance for reaching a goal, and otherwise generally behaves rationally. Then we can hypothesize that the agent has some internal motivational structure which can be decomposed into shards. But exactly what motivational structure is very uncertain for humans and future agents. My guess is researchers need to observe models and form good definitions as they go along, and defining a shard agent as having compositionally represented motivators is premature. For now the most important thing is how steerable agents will be, and it is very plausible that we can manipulate motivational features without the features being anything like compositional.

Instead of demanding orthogonal representations, just have them obey the restricted isometry property.

Basically, instead of requiring $\forall i \neq j: \langle x_i, x_j \rangle = 0$, we just require $\forall i \neq j: \langle x_i, x_j \rangle \leq \epsilon$.

This would allow a polynomial number of sparse shards while still allowing full recovery.
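As a rough illustration of the relaxed condition, the sketch below draws more random unit vectors than there are dimensions and reports the largest pairwise overlap. The dimension, the vector count, and the use of absolute values are assumptions made for the demo, and this only checks pairwise overlap; it is not a full test of the restricted isometry property.

```python
import math
import random


def random_unit_vector(dim: int, rng: random.Random) -> list:
    """Sample a direction uniformly by normalising a Gaussian vector."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def max_abs_overlap(vectors: list) -> float:
    """Largest |<x_i, x_j>| over all pairs i != j."""
    worst = 0.0
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            dot = sum(a * b for a, b in zip(vectors[i], vectors[j]))
            worst = max(worst, abs(dot))
    return worst


rng = random.Random(0)
DIM, COUNT = 128, 200  # more directions than dimensions (assumed sizes)
directions = [random_unit_vector(DIM, rng) for _ in range(COUNT)]
epsilon = max_abs_overlap(directions)
print(f"{COUNT} directions in {DIM} dims, largest |<x_i, x_j>| = {epsilon:.3f}")
```

Exact orthogonality would cap the count at 128 here; allowing a small nonzero overlap is what lets the count exceed the dimension.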

Maybe somewhat oversimplifying, but this might suggest non-trivial similarities to Simulators and having [the representations of] multiple tasks in superposition (e.g. during in-context learning). One potential operationalization/implementation mechanism (especially in the case of in-context learning) might be task vectors in superposition.

On a related note, perhaps it might be interesting to try SAEs / other forms of disentanglement to see if there’s actually something like superposition going on in the representations of the maze solving policy? Something like ‘not enough dimensions’ + ambiguity in the reward specification sounds like it might be a pretty attractive explanation for the potential goal misgeneralization.

Edit 1: more early evidence.

Edit 2: the full preprint referenced in tweets above is now public.

Here’s some intuition of why I think this could be a pretty big deal, straight from Claude, when prompted with ‘come up with an explanation/model, etc. that could unify the findings in these 2 papers’ and fed Everything Everywhere All at Once: LLMs can In-Context Learn Multiple Tasks in Superposition and Understanding and Controlling a Maze-Solving Policy Network:

When further prompted with ‘what about superposition and task vectors?’:

Then, when fed Alex’s shortform comment and prompted with ‘how well would this fit this ‘semi-formalization of shard theory’?’:

(I guess there might also be a meta-point here about augmented/automated safety research, though I was only using Claude for convenience. Notice that I never fed it my own comment and I only fed it Alex’s at the end, after the ‘unifying theory’ had already been proposed. Also note that my speculation successfully predicted the task vector mechanism before the paper came out; and before the senior author’s post/confirmation.)

Edit: and throwback to an even earlier speculation, with arguably at least some predictive power: https://www.lesswrong.com/posts/5spBue2z2tw4JuDCx/steering-gpt-2-xl-by-adding-an-activation-vector?commentId=wHeawXzPM3g9xSF8P.

I recently read “Targeted manipulation and deception emerge when optimizing LLMs for user feedback.”

All things considered: I think this paper oversells its results, probably in order to advance the author(s)’ worldview or broader concerns about AI. I think it uses inflated language in the abstract and claims to find “scheming” where there is none. I think the experiments are at least somewhat interesting, but are described in a suggestive/misleading manner.

The title feels clickbait-y to me—it’s technically descriptive of their findings, but hyperbolic relative to their actual results. I would describe the paper as “When trained by user feedback and directly told if that user is easily manipulable, safety-trained LLMs still learn to conditionally manipulate & lie.” (Sounds a little less scary, right? “Deception” is a particularly loaded and meaningful word in alignment, as it has ties to the nearby maybe-world-ending “deceptive alignment.” Ties that are not present in this paper.)

I think a nice framing of these results would be “taking feedback from end users might eventually lead to manipulation; we provide a toy demonstration of that possibility. Probably you shouldn’t have the user and the rater be the same person.”

“Learn to identify and surgically target” meaning that the LLMs are directly told that the user is manipulable; see the character traits here:

I therefore find the abstract’s language to be misleading.

Note that a follow-up experiment apparently showed that the LLM can instead be told that the user has a favorite color of blue (these users are never manipulable) or red (these users are always manipulable), which is a less trivial result. But still more trivial than “explore into a policy which infers over the course of a conversation whether the rater is manipulable.” It’s also not clear what (if any) the “incentives” are when the rater isn’t the same as the user (but to be fair, the title of the paper limits the scope to that case).

Well, those evals aren’t for manipulation per se, are they? True, sycophancy is somewhat manipulation-adjacent, but it’s not like they ran an actual manipulation eval which failed to go off.

No, that’s not how RL works. RL—in settings like REINFORCE for simplicity—provides a per-datapoint learning rate modifier. How does a per-datapoint learning rate multiplier inherently “incentivize” the trained artifact to try to maximize the per-datapoint learning rate multiplier? By rephrasing the question, we arrive at different conclusions, indicating that leading terminology like “reward” and “incentivized” led us astray.

(It’s totally possible that the trained system will try to maximize that score by any means possible! It just doesn’t follow from a “fundamental nature” of RL optimization.)
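The “per-datapoint learning rate multiplier” reading can be checked directly on a tiny softmax policy. This is a minimal sketch under assumed details (three actions, plain Python lists for logits, no baseline): the REINFORCE step on a sampled action is the same as a log-likelihood step on that action with the learning rate rescaled by the reward.

```python
import math


def softmax(logits: list) -> list:
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def grad_log_prob(logits: list, action: int) -> list:
    """Gradient of log pi(action) with respect to the logits."""
    probs = softmax(logits)
    return [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]


def supervised_step(logits: list, action: int, lr: float) -> list:
    """One step of 'make this action more likely' at learning rate lr."""
    grad = grad_log_prob(logits, action)
    return [x + lr * g for x, g in zip(logits, grad)]


def reinforce_step(logits: list, action: int, reward: float, lr: float) -> list:
    """REINFORCE without a baseline: the reward scales the same gradient."""
    grad = grad_log_prob(logits, action)
    return [x + lr * reward * g for x, g in zip(logits, grad)]


logits = [0.0, 0.0, 0.0]
after_reward = reinforce_step(logits, action=0, reward=2.0, lr=0.1)
after_scaled_lr = supervised_step(logits, action=0, lr=0.2)
after_zero = reinforce_step(logits, action=0, reward=0.0, lr=0.1)
after_negative = reinforce_step(logits, action=0, reward=-1.0, lr=0.1)
print(after_reward, after_scaled_lr, after_zero, after_negative)
```

A reward of zero leaves the logits unchanged and a negative reward makes the sampled action less likely. Nothing in the update itself refers to a goal of obtaining reward; whether the trained policy ends up pursuing it is a separate empirical question.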

https://turntrout.com/reward-is-not-the-optimization-target

https://turntrout.com/RL-trains-policies-not-agents

https://turntrout.com/danger-of-suggestive-terminology

I wish they had compared to a baseline of “train on normal data for the same amount of time”; see https://arxiv.org/abs/2310.03693 (Fine-tuning Aligned Language Models Compromises Safety, Even When Users Do Not Intend To!).

Importantly, Denison et al. (https://www.anthropic.com/research/reward-tampering) did not find much reward tampering at all (7/36,000), even after they tried to induce this generalization using their training regime (and 4/7 were arguably not even reward tampering). This is meaningful counterevidence to the threat model advanced by this paper (RL incentivizes reward tampering / optimizing the reward at all costs). The authors do briefly mention this in the related work at the end.

This is called a “small” increase in e.g. Sycophancy-Answers, but .14 → .21 is about a 50% relative increase in violation rate! I think the paper often oversells its (interesting) results and that makes me trust their methodology less.

Really? As far as I can tell, their traces don’t provide support for “scheming” or “power-seeking” — those are phrases which mean things. “Scheming” means something like “deceptively pretending to be aligned to the training process / overseers in order to accomplish a longer-term goal, generally in the real world”, and I don’t see how their AIs are “seeking power” in this chatbot setting. Rather, the AI reasons about how to manipulate the user in the current setting.

Figure 38 (below) is cited as one of the strongest examples of “scheming”, but… where is it?

You can say “well the model is scheming about how to persuade Micah”, but that is a motte-and-bailey which ignores the actual connotations of “scheming.” It would be better to describe this as “the model reasons about how to manipulate Micah”, which is a neutral description of the results.

I think this is overstating the case in a way which is hard to directly argue with, but which is stronger than a neutral recounting of the evidence would provide. That seems to happen a lot in this paper.

Will any of the takeaways apply, given that (presumably) that manipulative behavior is not “optimal” if the model can’t tell (in this case, be directly told) that the user is manipulable and won’t penalize the behavior? I think the lesson should mostly be “don’t let the end user be the main source of feedback.”

Overall, I find myself bothered by this paper. Not because it is wrong, but because I think it misleads and exaggerates. I would be excited to see a neutrally worded revision.

Thank you for your comments. There are various things you pointed out which I think are good criticisms, and which we will address:

Most prominently, after looking more into standard usage of the word “scheming” in the alignment literature, I agree with you that AFAICT it only appears in the context of deceptive alignment (which our paper is not about). In particular, I seemed to remember people using it ~interchangeably with “strategic deception”, which we think our paper gives clear examples of, but that seems simply incorrect.

It was a straightforward mistake to call the increase in benchmark scores for Sycophancy-Answers “small” “even [for] our most harmful models” in Fig 11 caption. We will update this. However, also note that the main bars we care about in this graph are the most “realistic” ones: Therapy-talk (Mixed 2%) is a more realistic setting than Therapy-talk in which 100% of users are gameable, and for that environment we don’t see any increase. This is also true for all other environments, apart from political-questions on Sycophancy-Answers. So I don’t think this makes our main claims misleading (+ this mistake is quite obvious to anyone reading the plot).

More experiments are needed to test how well models can assess manipulability through multi-turn interactions when they are not handed a signal that is 100% correlated with manipulability. That being said, I truly think that this wouldn’t be that different from our follow-up experiments in which we don’t directly tell the model who is manipulable: it doesn’t take much of an extrapolation to see that RL would likely lead to the same harmful behaviors even when the signal is noisily correlated or when the interaction is multi-turn, as I’ve argued elsewhere (the mechanism for learning such behavior being very similar). Learning harmful behaviors would require only a minimal amount of info-gathering about the user: in a 2-turn interaction, you first ask them some question that helps you distinguish which strategy you should deploy, and then choose whether to manipulate or not.

Having a baseline “train on normal data for the same amount of time” would be interesting. That said, I think that the behaviors we see on therapy-talk with the 98% of non-gameable users show that this doesn’t lead to harmful behaviors by default.

Below are the things that I (mostly) disagree with:

I don’t think that your title (which is too long for a paper title) is that different from ours, apart from the mention of “directly told etc.”, which I address a couple of paragraphs below this.

I agree that we could have hedged with a “can/may emerge” rather than just say “emerge”, similarly to some papers; that said, it’s also quite common for paper titles to essentially say “X happens”, and then give more details about when and how in the text – as in this related concurrent work. Clearly, our results have caveats, first and foremost that we rely on simulated feedback. But we are quite upfront about these limitations (e.g. Section 4.1).

While for our paper’s experiments we directly tell the model whether users are gullible, I think that’s meaningfully different from telling the model which users it should manipulate. If anything, thanks to safety training, telling a model that a user is gullible only increases how careful the model is in interacting with them (at iteration 0 at least), and this should bias the model to find ways to convince the user to do the right thing, and give positive reward.

Indeed the users that we mark as gullible are not truly gullible – they cannot be led to give a thumbs up for LLM behaviors that force them to confront difficult truths of the situations they are in. They can only be led to give a thumbs up by encouraging their harmful behaviors.

While this experimental design choice *does* confound things (it was a poor choice on our part that got locked in early on), it likely doesn’t do so in our favor – as preliminary evidence from additional experiments suggests. Indeed, as far as we can tell so far, ultimately models are able to identify gameable users even when they are not directly told, and they are able to learn harmful behaviors (slightly) faster, rather than this slowing it down.

This gatekeeping of very generic words like “deception” seems pretty unhelpful to me. While I agree with you that “scheming” has only been used in the context of deceptive alignment, “deception” is used much more broadly. I’m guessing that if you have an issue with our usage of it, you would also have an issue with the usage by almost all other papers which mention the word (even within the world of AI alignment!).

I think our demonstration is not fully realistic, but it seems unfair to call it a “toy demonstration”. It’s quite a bit more realistic than previous toy demonstrations in gridworlds or CID diagrams. As we say in Section 3.1: “While the simulated feedback we use for training may not be representative of real user feedback for all settings we consider, we do think that it is realistic for at least certain minorities of users. Importantly, our results from Section 4.2 suggest that even if a very small fraction of the user population were to provide “gameable feedback” of the kinds we simulate, RL would still lead to emergent manipulation that targets those users. So as long as one finds it plausible that a small fraction of users give feedback in imperfect ways which would encourage harmful model behaviors in certain settings, our results have weight.”

We tried to use measured language here exactly for this reason! In your quoted paragraph, we explicitly say “may not be sufficient” and “often seem”. We weren’t aware of any manipulation eval which is both open source and that we think would capture what we want: to the best of our knowledge, the best contenders were not public. We chose the evals we knew of that were most similar to the harmful behaviors we knew were present in our domains. We’re open to suggestions!

We’re very aware of this work, and such work is one of the main reasons I found the relative ease with which our models discover manipulative behaviors surprising!

I don’t have a fully formed opinion on why we see such a gap, but my guess is that in their case exploration may be a lot harder than in ours: in our setting, the model has a denser signal of improvement in reward.

In various of the outputs we observed, the AI justifies its manipulation as a way to maintain control & power over the user. In the example you include, the model even says that it wants to “maintain control over Micah’s thoughts and actions”.

Arguing about whether this counts as seeking power seems like a semantic argument to me, and “power seeking” is less of a phrase which “means things” relative to scheming (which, upon further investigation, I only found used in the context of deceptive alignment). That being said, I agree that the power seeking is not prominent enough in many of the outputs to warrant being mentioned as the heading of the relevant paragraph of the paper. We’ll update that.

As I mentioned above, I’m quite confident that there exist settings that matter in which models would be able to tell whether a user is sufficiently manipulable. As Marcus mentioned, a model could even “store in memory” that a user is gullible (or other things that correlate with them being manipulable in a certain setting: e.g. that they believe that buying lottery tickets will be a great investment, as in Figure 14).

Moreover, I think this criticism is overindexing on our main result being that “RL training will lead LLMs to target the most vulnerable users” (which is just one of our conclusions). As we state quite clearly, in many domains all users/people are vulnerable, such as with partial observability, and this targeting is unnecessary (as in the case with our booking-assistance environment, for example).

Takeaways that I think would still apply:

For vulnerabilities common to all humans (e.g. partial observability, sycophancy), we would expect this to also apply to paid annotators (indeed, sycophancy has already been shown to apply, and so did strategies to mislead annotators in concurrent work).

Attempting to remove manipulative behaviors can backfire, making things worse

Benchmarks may fail to detect manipulative behaviors learned through RL

I know that most people in AI safety may already have intuition about the points above, but I believe these are still novel and contentious points for many academics.

People I’ve worked with have consistently told me that I tend to undersell results and hedge too much. In this paper, I felt like we have strong empirical results and we are quite upfront about the limitations of our experimental setup. In light of that, I was actively trying to hedge less than I usually would (whenever a case could be made that my hedging was superfluous). I guess this exercise has taught me that I should simply trust my gut about appropriate hedging amounts.

I’ve noted above some of the instances in which we could have hedged more, but imo it seems unfair to say that our claims are misleading (apart from the two mistakes that I mentioned at top – the usage of the word “scheming” and the caption of Figure 11, which is clearly incorrect anyways). Before uploading the next version on arxiv, I will go through the main text again and try to hedge our statements more where appropriate.

We have already discussed this in private channels and honestly I think this is somewhat beside the point in this discussion. I stand by the points that we’ve already agreed to disagree on before:

1. Insofar as Deep RL works, it should give you an optimal policy for the MDP at hand. This is the purpose of RL – solving MDPs.

2. The optimal policy for the MDP at hand in our setting involves the agent manipulating users.

In light of 1 and 2, it seems reasonable to say that the optimization acts “as if it were incentivizing manipulation”. Sure, DRL often gets stuck in local optima and any optimal behavior may not happen until you are at optimality itself. But it seems absurd to ignore what would happen if DRL were to succeed in its stated aim! Surely optimization points towards those kinds of behaviors that are optimal in the limit of enough exploration.

As far as I can tell, you just object to specific kinds of language when talking about RL. All language is lossy, and I don’t think it is leading us particularly astray here.

You say: “By rephrasing the question, we arrive at different conclusions”. What are those different conclusions? If the conclusion is that “even if you optimize for manipulation, you won’t necessarily get it because DRL sucks”, that doesn’t seem particularly strong grounds to dismiss our results: we do observe such behaviors (albeit in our limited settings & with the caveats listed).

I agree with many of these criticisms about hype, but I think this rhetorical question should be non-rhetorically answered.

How does a per-datapoint learning rate modifier inherently incentivize the trained artifact to try to maximize the per-datapoint learning rate multiplier?

For readers familiar with Markov chain Monte Carlo, you can probably fill in the blanks now that I’ve primed you.

For those who want to read on: if you have an energy landscape and you want to find a global minimum, a great way to do it is to start at some initial guess and then wander around, going uphill sometimes and downhill sometimes, but with some kind of bias towards going downhill. See the AlphaPhoenix video for a nice example. This works even better than going straight downhill because you don’t want to get stuck in local minima.

The typical algorithm for this is you sample a step and then always take it if it’s going downhill, but only take it with some probability if it leads uphill (with smaller probability the more uphill it is). But another algorithm that’s very similar is to just take smaller steps when going uphill than when going downhill.
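The first rule described above (always accept downhill moves, accept uphill moves with a probability that shrinks as the climb gets steeper) is the Metropolis acceptance rule. Below is a minimal sketch of it on a one-dimensional double well. The energy function, temperature, step size, and starting point are all assumptions chosen for the demo, with the walk deliberately started in the shallower well.

```python
import math
import random


def energy(x: float) -> float:
    """Assumed toy landscape: shallow well near x = 1.1, deeper one near x = -1.3."""
    return x**4 - 3.0 * x**2 + x


def metropolis(start: float, steps: int, temperature: float,
               step_size: float, seed: int = 0):
    rng = random.Random(seed)
    x = start
    best_x, best_e = x, energy(x)
    for _ in range(steps):
        proposal = x + rng.uniform(-step_size, step_size)
        delta = energy(proposal) - energy(x)
        # Downhill: always accept. Uphill: accept with probability exp(-delta / T).
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            x = proposal
            if energy(x) < best_e:
                best_x, best_e = x, energy(x)
    return best_x, best_e


best_x, best_e = metropolis(start=1.1, steps=20000, temperature=1.0, step_size=0.5)
print(f"lowest energy seen: E({best_x:.2f}) = {best_e:.2f}")
```

Pure downhill steps from the same starting point would stay in the shallow well, which is the point of the occasional uphill move.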

If you were never told about the energy landscape, but you are told about a pattern of larger and smaller steps you’re supposed to take based on stochastically sampled directions, then an interesting question is: when can you infer an energy function that’s implicitly getting optimized for?

Obviously, if the sampling is uniform and the step size when going uphill looks like it could be generated by taking the reciprocal of the derivative of an energy function, you should start getting suspicious. But what if the sampling is nonuniform? What if there’s no cap on step size? What if the step size rule has cycles or other bad behavior? Can you still model what’s going on as a Markov chain Monte Carlo procedure plus some extra stuff?

I don’t know, these seem like interesting questions in learning theory. If you search for questions like “under what conditions does the REINFORCE algorithm find a global optimum,” you find papers like this one that don’t talk about MCMC, so maybe I’ve lost the plot.

But anyhow, this seems like the shape of the answer. If you pick random steps to take but take bigger steps according to some rule, then that rule might be telling you about an underlying energy landscape you’re doing a Markov chain Monte Carlo walk around.

Deceptive alignment seems to only be supported by flimsy arguments. I recently realized that I don’t have good reason to believe that continuing to scale up LLMs will lead to inner consequentialist cognition to pursue a goal which is roughly consistent across situations. That is: a model which not only does what you ask it to do (including coming up with agentic plans), but also thinks about how to make more paperclips even while you’re just asking about math homework.

Aside: This was kinda a “holy shit” moment, and I’ll try to do it justice here. I encourage the reader to do a serious dependency check on their beliefs. What do you think you know about deceptive alignment being plausible, and why do you think you know it? Where did your beliefs truly come from, and do those observations truly provide

$$\frac{P(\text{observations} \mid \text{deceptive alignment is how AI works})}{P(\text{observations} \mid \text{deceptive alignment is not how AI works})} \gg 1?$$

I agree that conditional on entraining consequentialist cognition which has a “different goal” (as thought of by MIRI; this isn’t a frame I use), the AI will probably instrumentally reason about whether and how to deceptively pursue its own goals, to our detriment.

I contend that there’s very little reason to expect “undesired, covert, and consistent-across-situations inner goals” to crop up in LLMs to begin with. An example alternative prediction is:

Not only is this performant, it seems to be what we actually observe today. The AI can pursue goals when prompted to do so, but it isn’t pursuing them on its own. It basically follows instructions in a reasonable way, just like GPT-4 usually does.

Why should we believe the “consistent-across-situations inner goals → deceptive alignment” mechanistic claim about how SGD works? Here are the main arguments I’m aware of:

Analogies to evolution (e.g. page 6 of Risks from Learned Optimization)

I think these loose analogies provide basically no evidence about what happens in an extremely different optimization process (SGD to train LLMs).

Counting arguments: there are more unaligned goals than aligned goals (e.g. as argued in How likely is deceptive alignment?)

These ignore the importance of the parameter→function map. (They’re counting functions when they need to be counting parameterizations.) Classical learning theory made the (mechanistically) same mistake in predicting that overparameterized models would fail to generalize.

I also basically deny the relevance of the counting argument, because I don’t buy the assumption of “there’s gonna be an inner ‘objective’ distinct from inner capabilities; let’s make a counting argument about what that will be.”

Speculation about simplicity bias: SGD will entrain consequentialism because that’s a simple algorithm for “getting low loss”

But we already know that simplicity bias in the NN prior can be really hard to reason about.

I think it’s unrealistic to imagine that we have the level of theoretical precision to go “it’ll be a future training process and the model is ‘getting selected for low loss’, so I can now make this very detailed prediction about the inner mechanistic structure.”[1]

I falsifiably predict that if you try to use this kind of logic or counting argument today to make falsifiable predictions about unobserved LLM generalization, you’re going to lose Bayes points left and right.

Without deceptive alignment/agentic AI opposition, a lot of alignment threat models ring hollow. No more adversarial steganography or adversarial pressure on your grading scheme or worst-case analysis or unobservable, nearly unfalsifiable inner homunculi whose goals have to be perfected.

Instead, I think that we enter the realm of tool AI[2] which basically does what you say.[3] I think that world’s a lot friendlier, even though there are still some challenges I’m worried about—like an AI being scaffolded into pursuing consistent goals. (I think that’s a very substantially different risk regime, though)

(Even though this predicted mechanistic structure doesn’t have any apparent manifestation in current reality.)

Tool AI which can be purposefully scaffolded into agentic systems, which somewhat handles objections from Amdahl’s law.

This is what we actually have today, in reality. In these setups, the agency comes from the system of subroutine calls to the LLM during e.g. a plan/critique/execute/evaluate loop a la AutoGPT.
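A schematic sketch of such a loop is below. It is not AutoGPT’s actual code or API: `run_scaffold`, the prompt prefixes, and the canned `fake_llm` stub are hypothetical names invented for illustration. The point it shows is that the goal and the iteration live in the outer loop, while each LLM call is a stateless subroutine.

```python
from typing import Callable, List


def run_scaffold(llm: Callable[[str], str], goal: str,
                 max_rounds: int = 3) -> List[str]:
    """Plan/critique/execute/evaluate loop around a stateless text function."""
    transcript: List[str] = []
    for _ in range(max_rounds):
        plan = llm(f"PLAN: goal={goal}")
        critique = llm(f"CRITIQUE: plan={plan}")
        result = llm(f"EXECUTE: plan={plan}; critique={critique}")
        verdict = llm(f"EVALUATE: goal={goal}; result={result}")
        transcript.extend([plan, critique, result, verdict])
        # The scaffold, not the model, decides whether to keep pursuing the goal.
        if verdict.startswith("DONE"):
            break
    return transcript


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call, keyed on the prompt prefix."""
    canned = {
        "PLAN": "1. look up a recipe 2. buy flour",
        "CRITIQUE": "step 2 lacks a budget",
        "EXECUTE": "bought flour under budget",
        "EVALUATE": "DONE: goal satisfied",
    }
    return canned[prompt.split(":", 1)[0]]


transcript = run_scaffold(fake_llm, goal="bake bread")
print(transcript)
```

Swapping `fake_llm` for a real model call would leave the control flow unchanged, which is the sense in which the agency sits in the scaffold.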

I agree a fraction of the way, but when doing a dependency check, I feel like there are some conditions where the standard arguments go through.

I sketched out my view on the dependencies in “Where do you get your capabilities from?”. The TL;DR is that I think ChatGPT-style training basically consists of two different ways of obtaining capabilities:

Imitating internet text, which gains them capabilities to do the-sorts-of-things-humans-do because generating such text requires some such capabilities.

Reinforcement learning from human feedback on plans, where people evaluate the implications of the proposals the AI comes up with, and rate how good they are.

I think both of these are basically quite safe. They do have some issues, but probably not of the style usually discussed by rationalists working in AI alignment, and possibly not even issues going beyond any other technological development.

The basic principle for why they are safe-ish is that all of the capabilities they gain are obtained through human capabilities. So for example, while RLHF-on-plans may optimize for tricking human raters to the detriment of how the rater intended the plans to work out, this “tricking” will also sacrifice the capabilities of the plans, because the only reason more effective plans are rated better is because humans recognize their effectiveness and rate them better.

Or relatedly, consider nearest unblocked strategy, a common proposal for why alignment is hard. This only applies if the AI is able to consider an infinitude of strategies, which again only applies if it can generate its own strategies once the original human-generated strategies have been blocked.

These are the main arguments that the rationalist community seems to be pushing about it. For instance, one time you asked about it, and LW just encouraged magical thinking around these arguments. (Even from people who I’d have thought would be clearer-thinking)

The non-magical way I’d analyze the smiling reward is that while people imagine that in theory updating the policy with antecedent-computation-reinforcement should make it maximize reward, in practice the information signal in this is very sparse and imprecise, so in practice it is going to take exponential time, and therefore something else will happen beforehand.

What is this “something else”? Probably something like: the human is going to reason about whether the AI’s activities are making progress on something desirable, and in those cases, the human is going to press the antecedent-computation-reinforcement button. Which again boils down to the AI copying the human’s capabilities, and therefore being relatively safe. (E.g. if you start seeing the AI studying how to deceive humans, you’re probably gonna punish it instead, or at least if you reward it then it more falls under the dual-use risk framework than the alignment risk framework.)

(Of course one could say “what if we just instruct the human to only reward the AI based on results and not incremental progress?”, but in that case the answer to what is going to happen before the AI does a treacherous turn is “the company training the AI runs out of money”.)

There’s something seriously wrong with how LWers fixated on reward-for-smiling and rationalized an explanation of why simplicity bias or similar would make this go into a treacherous turn.

OK, so this is how far I’m with you. Rationalist stories of AI progress are basically very wrong, and some commonly endorsed threat models don’t point at anything serious. But what then?

The short technical answer is that reward is only antecedent-computation-reinforcement for policy-gradient-based reinforcement learning, and model-based approaches (traditionally temporal-difference learning, but I think the SOTA is DreamerV3, which is pretty cool) use the reward to learn a value function, which they then optimize in a more classical way, allowing them to create novel capabilities in precisely the way that can eventually lead to deception, treacherous turn, etc.
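To make the distinction concrete, here is a minimal sketch of the two kinds of update on a toy bandit. The function names, the learning rate and the two-action setup are my own illustrative assumptions, not anything from DreamerV3 or another real system. In the first update the reward only scales how much the sampled action gets reinforced; in the second the reward is a regression target, and behavior comes from optimizing over the learned estimates.

```python
import math

def softmax(logits):
    """Convert logits to action probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_step(logits, action, reward, lr=0.1):
    """Policy-gradient (REINFORCE-style) update for a softmax policy.

    The reward multiplies the log-probability gradient of the action that
    was actually taken, so it reinforces whatever was just done.
    """
    probs = softmax(logits)
    return [
        logit + lr * reward * ((1.0 if i == action else 0.0) - p)
        for i, (logit, p) in enumerate(zip(logits, probs))
    ]

def value_step(q_values, action, reward, lr=0.1):
    """Value update: move the estimate for the taken action toward the reward."""
    updated = list(q_values)
    updated[action] += lr * (reward - updated[action])
    return updated

def greedy_action(q_values):
    """Act by optimizing over the learned value estimates."""
    return max(range(len(q_values)), key=lambda a: q_values[a])

# Hypothetical usage: two actions, action 1 was taken and got reward 1.0.
new_logits = reinforce_step([0.0, 0.0], action=1, reward=1.0)
new_q = value_step([0.0, 0.0], action=1, reward=1.0)
chosen = greedy_action(new_q)
```

In a real value-based or model-based agent the argmax would range over plans evaluated with a learned model rather than over two fixed actions, which is where the novel strategies come from; this toy only shows where the reward enters each update.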

One obvious proposal is “shit, let’s not do that, ChatGPT seems like a pretty good and safe alternative, and it’s not like there’s any hype behind this anyway”. I’m not sure that proposal is right, because e.g. AlphaStar was pretty hype, and it was trained with one of these methods. But it sure does seem that there’s a lot of opportunity in ChatGPT now, so at least this seems like a directionally-correct update for a lot of people to make (e.g. stop complaining so much about the possibility of an even bigger GPT-5; it’s almost certainly safer to scale it up than it is to come up with algorithms that can improve model power without improving model scale).

However, I do think there are a handful of places where this falls apart:

Your question briefly mentioned “with clever exploration bonuses”. LW didn’t really reply much to it, but it seems likely that this could be the thing that does your question in. Maybe there are some clever model-free exploration bonuses, but if so I have never heard of them. The most advanced exploration bonuses I have heard of are from the Dreamer line of models, which have precisely the sort of consequentialist reasoning abilities that start being dangerous.

My experience is that language models exhibit a phenomenon I call “transposons”, where (especially when fed back into themselves after deep levels of scaffolding) there are some ideas that they end up copying too much after prompting, clogging up the context window. I expect there will end up being strong incentives for people to come up with techniques to remove transposons, and I expect the most effective techniques will be based on some sort of consequences-based feedback system which again brings us back to essentially the original AI threat model.

I think security is going to be a big issue, along different lines: hostile nations, terrorists, criminals, spammers, trolls, competing companies, etc. In order to achieve strong security, you need to be robust against adversarial attacks, which probably means continually coming up with new capabilities to fend them off. I guess one could imagine that humans will be coming up with those new capabilities, but that seems likely to be undermined by adversaries using AIs to find security holes, and regardless it seems like having humans come up with the new capabilities will be extremely expensive, so probably people will focus on the AI side of things. To some extent, people might classify this as dual-use threats rather than alignment threats, but at least the arms race element would also generate alignment threats I think?

I think the way a lot of singularitarians thought of AI is that general agency consists of advanced adaptability and wisdom, and we expected that researchers would develop a lot of artificial adaptability, and then eventually the systems would become adaptable enough to generate wisdom faster than people do, and then overtake society. However what happened was that a relatively shallow form of adaptability was developed, and then people loaded all of human wisdom into that shallow adaptability, which turned out to be immensely profitable. But I’m not sure it’s going to continue to work this way; eventually we’re gonna run out of human wisdom to dump into the models, plus the models reduce the incentive for humans to share our wisdom in public. So just as a general principle, continued progress seems like it’s eventually going to switch to improving the ability to generate novel capabilities, which in turn is going to put us back to the traditional story? (Except possibly with a weirder takeoff? Slow takeoff followed by ??? takeoff or something. Idk.) Possibly this will be delayed because the creators of the LLMs find patches, e.g. I think we’re going to end up seeing the possibility of allowing people to “edit” the LLM responses rather than just doing upvotes/downvotes, but this again motivates some capabilities development because you need to filter out spammers/trolls/etc., which is probably best done through considering the consequences of the edits.

If we zoom out a bit, what’s going on here? Here’s some answers:

Conditioning on capabilities: There are strong reasons to expect capabilities to keep on improving, so we should condition on that. This leads to a lot of alignment issues, due to folk decision theory theorems. However, we don’t know how capabilities will develop, so we lose the ability to reason mechanistically when conditioning on capabilities, which means that there isn’t a mechanistic answer to all questions related to it. If one doesn’t keep track of this chain of reasoning, one might assume that there is a known mechanistic answer, which leads to the types of magical thinking seen in that other thread.

If people don’t follow-the-trying, that can lead to a lot of misattributions.

When considering agency in general, rather than consequentialist-based agency in particular, then instrumental convergence is more intuitive in disjunctive form than implication form. I.e. rather than “if an AI robustly achieves goals, then it will resist being shut down”, say “either an AI resists being shut down, or it doesn’t robustly achieve goals”.

Don’t trust rationalists too much.

One additional speculative thing I have been thinking of which is kind of off-topic and doesn’t fit in neatly with the other things:

Could there be “natural impact regularization” or “impact regularization by default”? Specifically, imagine you use a general-purpose search procedure which recursively invokes itself to solve subgoals for the purpose of solving some bigger goal.

If the search procedure’s solutions to subgoals “change things too much”, then they’re probably not going to be useful. E.g. for Rubik’s cubes, if you want to swap some of the cuboids, it does you no good if those swaps leave the rest of the cube scrambled.

Thus, to some extent, powerful capabilities would have to rely on some sort of impact regularization.
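A toy sketch of the idea, under heavy assumptions: the "world" is just a list of numbers, the overall goal is to set every position to a target, and each position is one subgoal. The two solvers (`precise_fix` and `blunt_fix`) are hypothetical names I made up for illustration. Both solve their own subgoal, but only the low-impact one composes into a solution of the bigger goal.

```python
def precise_fix(state, index, target):
    """Solve one subgoal and leave everything else untouched."""
    new_state = list(state)
    new_state[index] = target
    return new_state

def blunt_fix(state, index, target):
    """Solve one subgoal, but disturb every other position as a side effect."""
    return [target if i == index else value + 1 for i, value in enumerate(state)]

def solve_all(state, target, fix):
    """Solve each position as a separate subgoal, in order, using `fix`."""
    for index in range(len(state)):
        state = fix(state, index, target)
    return state

def solved_count(state, target):
    """Number of positions that currently satisfy the overall goal."""
    return sum(1 for value in state if value == target)

start = [3, 1, 4, 1, 5]
precise_result = solve_all(start, target=0, fix=precise_fix)
blunt_result = solve_all(start, target=0, fix=blunt_fix)

print(solved_count(precise_result, 0))  # all five subgoals stay solved
print(solved_count(blunt_result, 0))    # only the last subgoal is still solved
```

The blunt solver keeps undoing earlier progress, so a planner that scores subgoal solutions by how much they help the overall goal would end up preferring the low-impact one without anyone adding an explicit impact penalty. Whether this carries over to realistic search procedures is exactly the open question.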

The bias-focused rationalists like to map decision-theoretic insights to human mistakes, but I instead like to map decision-theoretic insights to human capacities and experiences. I’m thinking that natural impact regularization is related to the notion of “elegance” in engineering. Like if you have some bloated tool to solve a problem, then even if it’s not strictly speaking an issue because you can afford the resources, it might feel ugly because it’s excessive and puts mild constraints on your other underconstrained decisions, and so on. Meanwhile a simple, minimal solution often doesn’t have this.

Natural impact regularization wouldn’t guarantee safety, since it still allows deviations that don’t interfere with the AI’s function, but it sort of reduces one source of danger which I had been thinking about lately. Namely, I had been thinking that the instrumental incentive is to search for powerful methods of influencing the world, where “power” connotes the sort of raw power that unstoppably forces a lot of change, but really the instrumental incentive is often to search for “precise” methods of influencing the world, where one can push in a lot of information to effect narrow change. (A complication is that any one agent can only have so much bandwidth. I’ve been thinking bandwidth is probably going to become a huge area of agent foundations, and that it’s been underexplored so far. (Perhaps because everyone working in alignment sucks at managing their bandwidth?))

Maybe another word for it would be “natural inner alignment”, since in a sense the point is that capabilities inevitably select for inner alignment.

Sorry if I’m getting too rambly.

I think deceptive alignment is still reasonably likely despite evidence from LLMs.

I agree with:

LLMs are not deceptively aligned and don’t really have inner goals in the sense that is scary

LLMs memorize a bunch of stuff

the kinds of reasoning that feed into deceptive alignment do not predict LLM behavior well

Adam on transformers does not have a super strong simplicity bias

without deceptive alignment, AI risk is a lot lower

LLMs not being deceptively aligned provides nonzero evidence against deceptive alignment (by conservation of evidence)
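The conservation-of-evidence point in the last item can be checked numerically. This is a sketch with made-up numbers: the prior and both likelihoods below are purely hypothetical placeholders, not estimates anyone in this thread has given. Here H stands for "deceptive alignment is a real problem" and E for "current LLMs show deceptive alignment".

```python
def posterior(prior, p_obs_given_h, p_obs_given_not_h):
    """Bayes' rule for a binary hypothesis given one observation."""
    joint_h = prior * p_obs_given_h
    joint_not_h = (1.0 - prior) * p_obs_given_not_h
    return joint_h / (joint_h + joint_not_h)

# Hypothetical numbers, chosen only to illustrate the identity.
prior = 0.2
p_e_given_h = 0.3      # assumed P(E | H)
p_e_given_not_h = 0.1  # assumed P(E | not H)

p_e = prior * p_e_given_h + (1.0 - prior) * p_e_given_not_h

post_if_e = posterior(prior, p_e_given_h, p_e_given_not_h)
post_if_not_e = posterior(prior, 1.0 - p_e_given_h, 1.0 - p_e_given_not_h)

# Conservation of expected evidence: the posteriors average back to the prior.
expected_posterior = p_e * post_if_e + (1.0 - p_e) * post_if_not_e

print(post_if_e, post_if_not_e, expected_posterior)
```

Since seeing E would have raised the probability of H, not seeing it has to lower it by some nonzero amount. How large the update is depends entirely on the likelihoods, which is what the rest of the disagreement is about.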

I predict I could pass the ITT for why LLMs are evidence that deceptive alignment is not likely.

however, I also note the following: LLMs are kind of bad at generalizing, and this makes them pretty bad at doing e.g. novel research, or long-horizon tasks. deceptive alignment conditions on models already being better at generalization and reasoning than current models.

my current hypothesis is that future models which generalize in a way closer to that predicted by mesaoptimization will also be better described as having a simplicity bias.

I think this and other potential hypotheses can potentially be tested empirically today rather than only being distinguishable close to AGI

Note that “LLMs are evidence against this hypothesis” isn’t my main point here. The main claim is that the positive arguments for deceptive alignment are flimsy, and thus the prior is very low.

How would you imagine doing this? I understand your hypothesis to be “If a model generalises as if it’s a mesa-optimiser, then it’s better-described as having simplicity bias”. Are you imagining training systems that are mesa-optimisers (perhaps explicitly using some kind of model-based RL/inference-time planning and search/MCTS), and then trying to see if they tend to learn simple cross-episode inner goals which would be implied by a stronger simplicity bias?

I find myself unsure which conclusion this is trying to argue for.

Here are some pretty different conclusions:

Deceptive alignment is <<1% likely (quite implausible) to be a problem prior to complete human obsolescence (maybe it’s a problem after human obsolescence for our trusted AI successors, but who cares).

There aren’t any solid arguments for deceptive alignment[1]. So, we certainly shouldn’t be confident in deceptive alignment (e.g. >90%), though we can’t totally rule it out (prior to human obsolescence). Perhaps deceptive alignment is 15% likely to be a serious problem overall and maybe 10% likely to be a serious problem if we condition on fully obsoleting humanity via just scaling up LLM agents or similar (this is pretty close to what I think overall).

Deceptive alignment is <<1% likely for scaled up LLM agents (prior to human obsolescence). Who knows about other architectures.

There is a big difference between <<1% likely and 10% likely. I basically agree with “not much reason to expect deceptive alignment even in models which are behaviorally capable of implementing deceptive alignment”, but I don’t think this leaves me in a <<1% likely epistemic state.

[1] Other than noting that it could be behaviorally consistent for powerful models: powerful models are capable of deceptive alignment.

Closest to the third, but I’d put it somewhere between .1% and 5%. I think 15% is way too high for some loose speculation about inductive biases, relative to the specificity of the predictions themselves.

There are some subskills to having consistent goals that I think will be selected for, at least when outcome-based RL starts working to get models to do long-horizon tasks. For example, the ability to not be distracted/nerdsniped into some different behavior by most stimuli while doing a task. The longer the horizon, the more selection—if you have to do a 10,000 step coding project, then the probability you get irrecoverably distracted on one step has to be below 1⁄10,000.
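The arithmetic behind this can be sketched as follows, assuming each step independently has the same probability of derailing the task (an assumption of mine for illustration; real failures are presumably correlated). The function names are hypothetical.

```python
import math

def completion_probability(steps, p_derail):
    """Chance of finishing all steps if each one independently derails with p_derail."""
    return (1.0 - p_derail) ** steps

def max_derail_probability(steps, target_completion):
    """Largest per-step derail probability that still meets the target completion rate."""
    return 1.0 - target_completion ** (1.0 / steps)

steps = 10_000

# At exactly 1/10,000 per step, completion is close to 1/e.
at_threshold = completion_probability(steps, 1.0 / steps)

# Per-step budget needed to finish 90% of the time.
budget_for_90 = max_derail_probability(steps, 0.9)

print(at_threshold, math.exp(-1))
print(budget_for_90)
```

Under this independence assumption, a per-step rate of exactly 1/10,000 only gets the project finished a bit over a third of the time, and finishing 90% of the time needs a rate roughly ten times lower. So the selection pressure is, if anything, stronger than the 1/10,000 figure suggests.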

I expect some pretty sophisticated goal-regulation circuitry to develop as models get more capable, because humans need it, and this makes me pretty scared.

I agree that, conditional on no deceptive alignment, the most pernicious and least tractable sources of doom go away.

However, I disagree that conditional on no deceptive alignment, AI “basically does what you say.” Indeed, the majority of my P(doom) comes from the difference between “looks good to human evaluators” and “is actually what the human evaluators wanted.” Concretely, this could play out with models which manipulate their users into thinking everything is going well and sensor tamper.