Note that getting an at-home nebulizer is also quite easy; it has saved me many a trip to the hospital.

Trinley Goldenberg

This makes me think of Eurisko/Automated Mathematician, and wonder what minimal set of heuristics and concepts you can start with to get to higher math.

I’ve heard that motor racing is a physically demanding sport, and the physical conditioning required is similar to that of other pro athletes. Any truth to that?

Aren’t the central examples of founders in AI Safety the people who founded Anthropic, OpenAI and arguably DeepMind?

This is consistent with founders being undervalued in AI safety relative to AI capabilities. My model of Elon, for instance, says that a big reason for pivoting hard towards capabilities was that all the capabilities founders were receiving more status than the safety founders.

It doesn’t seem like either thing captures the gears of what’s happening to an LLM, but insofar as you’re arguing it’s impossible for an LLM to be convinced, I think you should provide better gears or say what specific aspect of “convincing” you don’t think applies.

I don’t think that arguing with an LLM will reliably make it enter a sycophant mode, or that the thing that happens when you go through a series of back-and-forth arguments with an LLM can be fully explained by it entering into a mask/face.

But more importantly it’s a whole memetic egregore, and you can’t really get rid of the bad parts without rejecting the whole egregore. And you can get the good parts without most of the egregore

Can you say more about why you believe this second part? It’s not at all straightforward to me how you pick and choose the good parts of an egregore to “get” them.

The dominant egregores are selected, so if you want to beat them, you need to out-compete them, which means you can’t just select on “goodness” as a criterion; you have to have a cohesive package that out-competes the existing egregore.

Historically, memeplexes replicated exclusively through human minds.

I think it’s often more predictive to model historical memeplexes as replicating through egregores like companies, countries, etc.

cyber or cyborg egregore

The term we use for this at Monastic Academy is “Cybregore.”

A core part of our strategy these days is learning how to teach these Cybregores to be ethical.

It seems to me like LLMs can actually be something like convinced within a context window, and I don’t see why this is impossible.

Good point.

I see, so the theory is that we are bottlenecked on testing old and new ideas, not on having the right new ideas.

Seems like the first two points contradict each other. How can an LLM not be good at discovery and also automate human R&D?

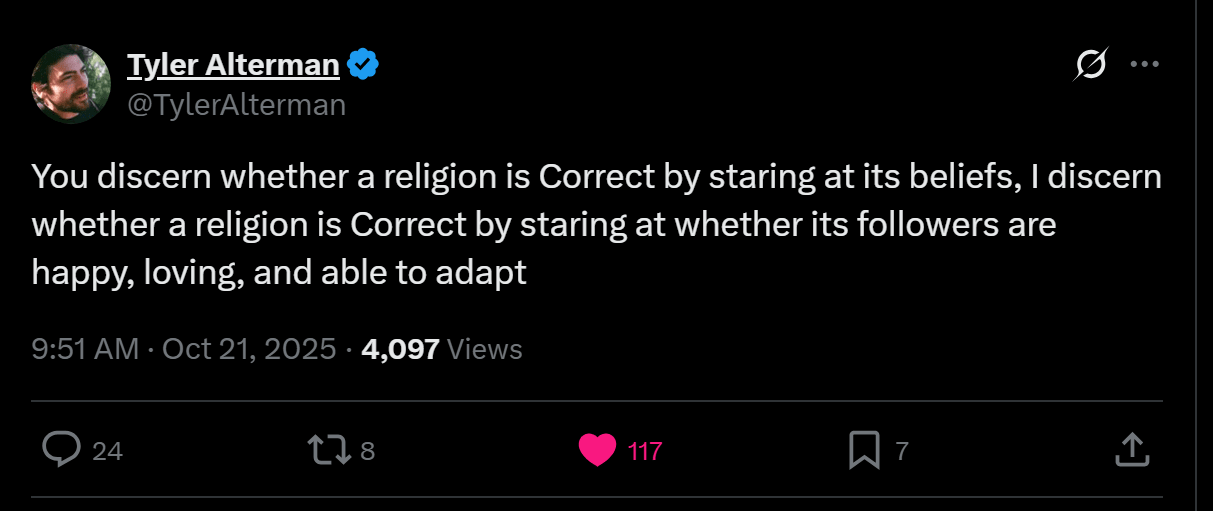

riffing off of this tweet:

i remember a conversation with a guy who was like ‘i think christians are better than buddhists because they tend to be married and have happy children’ ‘oh, so you think christianity is better because it better upholds christian values?’ this is a common issue...

whatever criteria you evaluate a religion on, it reveals your default religion

if you evaluate on beliefs and their correspondence with ‘reality’, your religion is scientific materialism

if you evaluate on how happy it makes people, your religion is individualistic humanism

there’s nothing wrong with simply finding the practices that best instantiate your current religion, but i think there’s another way

evaluating religion on whether it can show you things that take you OUTSIDE of your current frame, rather than keeping you inside it

of course, you still run into the fact that you have some criteria—but you’re coming from a place where you’re actually OPEN to being fundamentally wrong about that religion, and searching for a deeper truth

yes exactly? they think true discernment, and the ability to tell what is good, right, and called for, is a matter of intelligence. thus they think wisdom is intelligence.

That’s my point, they think the idea of wisdom is confused and you can replace it with the idea of intelligence or compassion. That there’s not a real ‘there’ there that gives you true discernment and ability to tell what is good, right, called for.

Intelligence is building up, holding on, abstracting. Wisdom is letting go, unwinding, seeing what is. Compassion is the process of trying to build up in order that you or others can let go

I think it is, but compassion is described in a utilitarian way. I’m perhaps using an unorthodox definition of compassion, but it’s actually the way people tend to use it: how do I use intelligence to do good?

if i had to pinpoint what I think is the biggest issue with the rationality and ea community, i’d say that ea thinks wisdom is compassion, and rationality thinks wisdom is intelligence

but in fact, wisdom is a secret third thing which should steer intelligence and compassion

This does feel like a nearby fallacy of denying specific examples, which maybe should have its own post

Fwiw while wit matters in flirting, I’ve found it’s much more about energy.

I don’t know if this abstract description will make sense to you, but ime the way that flirting leads to sex is by continually ramping up sexual tension by a sort of plausible deniability of sexual interest -

one way to do this is you’re talking about sexy things but not being overtly sexual with your energy, or having a sexual energy but it’s more teasing or talking about non sexy things

In any case, if both people are into it, and don’t let the tension escape through other outlets, the way to release it is sexual escalation. And as long as the escalation (e.g. cuddling, kissing, etc.) is still less than the sexual energy calls for, the tension continues to build to sexual intimacy.