Probability is in the Mind

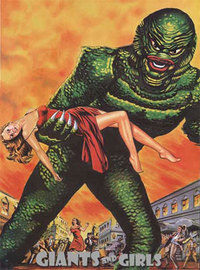

Yesterday I spoke of the Mind Projection Fallacy, giving the example of the alien monster who carries off a girl in a torn dress for intended ravishing—a mistake which I imputed to the artist’s tendency to think that a woman’s sexiness is a property of the woman herself, woman.sexiness, rather than something that exists in the mind of an observer, and probably wouldn’t exist in an alien mind.

The term “Mind Projection Fallacy” was coined by the late great Bayesian Master, E. T. Jaynes, as part of his long and hard-fought battle against the accursèd frequentists. Jaynes was of the opinion that probabilities were in the mind, not in the environment—that probabilities express ignorance, states of partial information; and if I am ignorant of a phenomenon, that is a fact about my state of mind, not a fact about the phenomenon.

I cannot do justice to this ancient war in a few words—but the classic example of the argument runs thus:

You have a coin.

The coin is biased.

You don’t know which way it’s biased or how much it’s biased. Someone just told you, “The coin is biased” and that’s all they said.

This is all the information you have, and the only information you have.

You draw the coin forth, flip it, and slap it down.

Now—before you remove your hand and look at the result—are you willing to say that you assign a 0.5 probability to the coin having come up heads?

The frequentist says, “No. Saying ‘probability 0.5’ means that the coin has an inherent propensity to come up heads as often as tails, so that if we flipped the coin infinitely many times, the ratio of heads to tails would approach 1:1. But we know that the coin is biased, so it can have any probability of coming up heads except 0.5.”

The Bayesian says, “Uncertainty exists in the map, not in the territory. In the real world, the coin has either come up heads, or come up tails. Any talk of ‘probability’ must refer to the information that I have about the coin—my state of partial ignorance and partial knowledge—not just the coin itself. Furthermore, I have all sorts of theorems showing that if I don’t treat my partial knowledge a certain way, I’ll make stupid bets. If I’ve got to plan, I’ll plan for a 50⁄50 state of uncertainty, where I don’t weigh outcomes conditional on heads any more heavily in my mind than outcomes conditional on tails. You can call that number whatever you like, but it has to obey the probability laws on pain of stupidity. So I don’t have the slightest hesitation about calling my outcome-weighting a probability.”

I side with the Bayesians. You may have noticed that about me.

Even before a fair coin is tossed, the notion that it has an inherent 50% probability of coming up heads may be just plain wrong. Maybe you’re holding the coin in such a way that it’s just about guaranteed to come up heads, or tails, given the force at which you flip it, and the air currents around you. But, if you don’t know which way the coin is biased on this one occasion, so what?

I believe there was a lawsuit where someone alleged that the draft lottery was unfair, because the slips with names on them were not being mixed thoroughly enough; and the judge replied, “To whom is it unfair?”

To make the coinflip experiment repeatable, as frequentists are wont to demand, we could build an automated coinflipper, and verify that the results were 50% heads and 50% tails. But maybe a robot with extra-sensitive eyes and a good grasp of physics, watching the autoflipper prepare to flip, could predict the coin’s fall in advance—not with certainty, but with 90% accuracy. Then what would the real probability be?

There is no “real probability”. The robot has one state of partial information. You have a different state of partial information. The coin itself has no mind, and doesn’t assign a probability to anything; it just flips into the air, rotates a few times, bounces off some air molecules, and lands either heads or tails.

So that is the Bayesian view of things, and I would now like to point out a couple of classic brainteasers that derive their brain-teasing ability from the tendency to think of probabilities as inherent properties of objects.

Let’s take the old classic: You meet a mathematician on the street, and she happens to mention that she has given birth to two children on two separate occasions. You ask: “Is at least one of your children a boy?” The mathematician says, “Yes, he is.”

What is the probability that she has two boys? If you assume that the prior probability of a child being a boy is 1⁄2, then the probability that she has two boys, on the information given, is 1⁄3. The prior probabilities were: 1⁄4 two boys, 1⁄2 one boy one girl, 1⁄4 two girls. The mathematician’s “Yes” response has probability ~1 in the first two cases, and probability ~0 in the third. Renormalizing leaves us with a 1⁄3 probability of two boys, and a 2⁄3 probability of one boy one girl.

But suppose that instead you had asked, “Is your eldest child a boy?” and the mathematician had answered “Yes.” Then the probability of the mathematician having two boys would be 1⁄2. Since the eldest child is a boy, and the younger child can be anything it pleases.

Likewise if you’d asked “Is your youngest child a boy?” The probability of their being both boys would, again, be 1⁄2.

Now, if at least one child is a boy, it must be either the oldest child who is a boy, or the youngest child who is a boy. So how can the answer in the first case be different from the answer in the latter two?

Or here’s a very similar problem: Let’s say I have four cards, the ace of hearts, the ace of spades, the two of hearts, and the two of spades. I draw two cards at random. You ask me, “Are you holding at least one ace?” and I reply “Yes.” What is the probability that I am holding a pair of aces? It is 1⁄5. There are six possible combinations of two cards, with equal prior probability, and you have just eliminated the possibility that I am holding a pair of twos. Of the five remaining combinations, only one combination is a pair of aces. So 1⁄5.

Now suppose that instead you asked me, “Are you holding the ace of spades?” If I reply “Yes”, the probability that the other card is the ace of hearts is 1⁄3. (You know I’m holding the ace of spades, and there are three possibilities for the other card, only one of which is the ace of hearts.) Likewise, if you ask me “Are you holding the ace of hearts?” and I reply “Yes”, the probability I’m holding a pair of aces is 1⁄3.

But then how can it be that if you ask me, “Are you holding at least one ace?” and I say “Yes”, the probability I have a pair is 1/5? Either I must be holding the ace of spades or the ace of hearts, as you know; and either way, the probability that I’m holding a pair of aces is 1⁄3.

How can this be? Have I miscalculated one or more of these probabilities?

If you want to figure it out for yourself, do so now, because I’m about to reveal...

That all stated calculations are correct.

As for the paradox, there isn’t one. The appearance of paradox comes from thinking that the probabilities must be properties of the cards themselves. The ace I’m holding has to be either hearts or spades; but that doesn’t mean that your knowledge about my cards must be the same as if you knew I was holding hearts, or knew I was holding spades.

It may help to think of Bayes’s Theorem:

P(H|E) = P(E|H)P(H) / P(E)

That last term, where you divide by P(E), is the part where you throw out all the possibilities that have been eliminated, and renormalize your probabilities over what remains.

Now let’s say that you ask me, “Are you holding at least one ace?” Before I answer, your probability that I say “Yes” should be 5⁄6.

But if you ask me “Are you holding the ace of spades?”, your prior probability that I say “Yes” is just 1⁄2.

So right away you can see that you’re learning something very different in the two cases. You’re going to be eliminating some different possibilities, and renormalizing using a different P(E). If you learn two different items of evidence, you shouldn’t be surprised at ending up in two different states of partial information.

Similarly, if I ask the mathematician, “Is at least one of your two children a boy?” I expect to hear “Yes” with probability 3⁄4, but if I ask “Is your eldest child a boy?” I expect to hear “Yes” with probability 1⁄2. So it shouldn’t be surprising that I end up in a different state of partial knowledge, depending on which of the two questions I ask.

The only reason for seeing a “paradox” is thinking as though the probability of holding a pair of aces is a property of cards that have at least one ace, or a property of cards that happen to contain the ace of spades. In which case, it would be paradoxical for card-sets containing at least one ace to have an inherent pair-probability of 1⁄5, while card-sets containing the ace of spades had an inherent pair-probability of 1⁄3, and card-sets containing the ace of hearts had an inherent pair-probability of 1⁄3.

Similarly, if you think a 1⁄3 probability of being both boys is an inherent property of child-sets that include at least one boy, then that is not consistent with child-sets of which the eldest is male having an inherent probability of 1⁄2 of being both boys, and child-sets of which the youngest is male having an inherent 1⁄2 probability of being both boys. It would be like saying, “All green apples weigh a pound, and all red apples weigh a pound, and all apples that are green or red weigh half a pound.”

That’s what happens when you start thinking as if probabilities are in things, rather than probabilities being states of partial information about things.

Probabilities express uncertainty, and it is only agents who can be uncertain. A blank map does not correspond to a blank territory. Ignorance is in the mind.

- Eliezer’s Sequences and Mainstream Academia by (15 Sep 2012 0:32 UTC; 248 points)

- Raising the Sanity Waterline by (12 Mar 2009 4:28 UTC; 247 points)

- A Crash Course in the Neuroscience of Human Motivation by (19 Aug 2011 21:15 UTC; 205 points)

- Joy in the Merely Real by (20 Mar 2008 6:18 UTC; 193 points)

- References & Resources for LessWrong by (10 Oct 2010 14:54 UTC; 170 points)

- On Saying the Obvious by (2 Feb 2012 5:13 UTC; 161 points)

- If a tree falls on Sleeping Beauty... by (12 Nov 2010 1:14 UTC; 148 points)

- Message Length by (20 Oct 2020 5:52 UTC; 134 points)

- Back to the Basics of Rationality by (11 Jan 2011 7:05 UTC; 117 points)

- What I’ve learned from Less Wrong by (20 Nov 2010 12:47 UTC; 116 points)

- Frequentist Statistics are Frequently Subjective by (4 Dec 2009 20:22 UTC; 87 points)

- The Quantum Physics Sequence by (11 Jun 2008 3:42 UTC; 75 points)

- Configurations and Amplitude by (11 Apr 2008 3:14 UTC; 73 points)

- Qualitatively Confused by (14 Mar 2008 17:01 UTC; 71 points)

- Possibility and Could-ness by (14 Jun 2008 4:38 UTC; 68 points)

- Chance is in the Map, not the Territory by (13 Jan 2025 19:17 UTC; 67 points)

- An Overview of Formal Epistemology (links) by (6 Jan 2011 19:57 UTC; 63 points)

- Aiming for Convergence Is Like Discouraging Betting by (1 Feb 2023 0:03 UTC; 63 points)

- About Less Wrong by (23 Feb 2009 23:30 UTC; 57 points)

- Quantum Non-Realism by (8 May 2008 5:27 UTC; 56 points)

- Heading Toward: No-Nonsense Metaethics by (24 Apr 2011 0:42 UTC; 55 points)

- Why you must maximize expected utility by (13 Dec 2012 1:11 UTC; 50 points)

- Probability is Subjectively Objective by (14 Jul 2008 9:16 UTC; 42 points)

- Bell’s Theorem: No EPR “Reality” by (4 May 2008 4:44 UTC; 40 points)

- Rudimentary Categorization of Less Wrong Topics by (5 Sep 2015 7:32 UTC; 39 points)

- A Suggested Reading Order for Less Wrong [2011] by (8 Jul 2011 1:40 UTC; 38 points)

- Book Review—Other Minds: The Octopus, the Sea, and the Deep Origins of Consciousness by (3 Dec 2018 8:00 UTC; 36 points)

- Uncertainty by (29 Nov 2011 23:12 UTC; 27 points)

- Heading Toward Morality by (20 Jun 2008 8:08 UTC; 27 points)

- Setting Up Metaethics by (28 Jul 2008 2:25 UTC; 27 points)

- Metauncertainty by (10 Apr 2009 23:41 UTC; 26 points)

- Probability is a model, frequency is an observation: Why both halfers and thirders are correct in the Sleeping Beauty problem. by (12 Jul 2018 6:52 UTC; 26 points)

- Fundamentals of kicking anthropic butt by (26 Mar 2012 6:43 UTC; 25 points)

- 's comment on Which LW / rationalist blog posts aren’t covered by my books & courses? by (5 Aug 2015 2:40 UTC; 25 points)

- Intuitive Explanation of AIXI by (12 Jun 2022 21:41 UTC; 22 points)

- MSF Theory: Another Explanation of Subjectively Objective Probability by (30 Jul 2011 19:46 UTC; 20 points)

- 's comment on Rewriting the sequences? by (13 Jun 2011 16:09 UTC; 20 points)

- Another Non-Anthropic Paradox: The Unsurprising Rareness of Rare Events by (21 Jan 2024 15:58 UTC; 19 points)

- When “HDMI-1” Lies To You by (30 Oct 2025 12:23 UTC; 17 points)

- The Graviton as Aether by (4 Mar 2010 22:13 UTC; 16 points)

- What makes a probability question “well-defined”? (Part I) by (2 Oct 2022 21:05 UTC; 14 points)

- Probability Theory Fundamentals 102: Territory that Probability is in the Map of by (26 Mar 2025 6:40 UTC; 10 points)

- How urgent is it to intuitively understand Bayesianism? by (7 Apr 2015 0:43 UTC; 9 points)

- 's comment on Rational = true? by (9 Feb 2011 11:13 UTC; 9 points)

- Map:Territory::Uncertainty::Randomness – but that doesn’t matter, value of information does. by (22 Jan 2016 19:12 UTC; 8 points)

- Rationality Reading Group: Part P: Reductionism 101 by (17 Dec 2015 3:03 UTC; 8 points)

- [SEQ RERUN] Probability is in the Mind by (24 Feb 2012 6:27 UTC; 8 points)

- 's comment on Anthropics made easy? by (14 Jun 2018 16:51 UTC; 7 points)

- 's comment on What is Bayesianism? by (26 Feb 2010 16:49 UTC; 7 points)

- 's comment on Taking Ideas Seriously by (29 Aug 2010 17:13 UTC; 7 points)

- Risk and uncertainty: A false dichotomy? by (18 Jan 2020 3:09 UTC; 6 points)

- Lighthaven Sequences Reading Group #24 (Tuesday 03/04) by (3 Mar 2025 19:13 UTC; 6 points)

- 's comment on Probability, knowledge, and meta-probability by (23 Sep 2013 9:50 UTC; 5 points)

- 's comment on Is Determinism A Special Case Of Randomness? by (4 May 2015 16:55 UTC; 5 points)

- 's comment on Take heed, for it is a trap by (17 Aug 2011 1:13 UTC; 5 points)

- What does “probability” really mean? by (28 Dec 2022 3:20 UTC; 5 points)

- 's comment on Are quantum indeterminacy and normal uncertainty meaningfully distinct? by (31 Mar 2023 10:21 UTC; 4 points)

- 's comment on Toolbox-thinking and Law-thinking by (3 Jun 2018 12:24 UTC; 4 points)

- 's comment on Ikaxas’ Shortform Feed by (31 May 2018 4:35 UTC; 4 points)

- 's comment on Am I Understanding Bayes Right? by (14 Nov 2013 3:38 UTC; 4 points)

- 's comment on New Year’s Predictions Thread (2011) by (5 Jan 2011 15:42 UTC; 4 points)

- 's comment on Is Determinism A Special Case Of Randomness? by (4 May 2015 21:04 UTC; 3 points)

- 's comment on Welcome to Less Wrong! by (12 Jun 2011 16:34 UTC; 3 points)

- 's comment on Taking Ideas Seriously by (23 Aug 2010 18:08 UTC; 3 points)

- 's comment on Taking Ideas Seriously by (23 Aug 2010 23:15 UTC; 3 points)

- 's comment on LINK: Infinity, probability and disagreement by (5 Mar 2013 14:54 UTC; 3 points)

- 's comment on Suggest short Sequence readings for my college stat class by (2 Jan 2018 12:32 UTC; 3 points)

- 's comment on Evidence and counterexample to positive relevance by (26 May 2013 17:37 UTC; 2 points)

- 's comment on Can anyone explain to me why CDT two-boxes? by (4 Jul 2012 15:04 UTC; 2 points)

- 's comment on Is Functional Decision Theory still an active area of research? by (14 Nov 2021 0:58 UTC; 2 points)

- What is a “Good” Prediction? by (3 May 2020 16:51 UTC; 2 points)

- 's comment on Bayesian Flame by (27 Jul 2009 4:29 UTC; 2 points)

- 's comment on Probability and Politics by (24 Nov 2010 19:35 UTC; 2 points)

- 's comment on A system of infinite ethics by (22 Nov 2021 11:26 UTC; 2 points)

- 's comment on Deutsch and Yudkowsky on scientific explanation by (21 Jan 2021 18:50 UTC; 2 points)

- 's comment on LW favorites by (14 Oct 2010 13:32 UTC; 2 points)

- 's comment on Morpheus’s Shortform by (14 Dec 2022 15:16 UTC; 1 point)

- 's comment on How to Be Oversurprised by (12 Jan 2013 1:22 UTC; 1 point)

- 's comment on The Curious Prisoner Puzzle by (29 Aug 2019 0:41 UTC; 1 point)

- A Bayesian Explanation of Causal Models by (27 Oct 2025 23:16 UTC; 1 point)

- 's comment on Is Equality Really about Diminishing Marginal Utility? by (5 Dec 2012 19:19 UTC; 1 point)

- 's comment on Is Rationality Maximization of Expected Value? by (23 Sep 2010 5:27 UTC; 1 point)

- 's comment on The Practical Argument for Free Will by (16 Mar 2017 23:33 UTC; 1 point)

- 's comment on A new intro to Quantum Physics, with the math fixed by (8 Nov 2023 16:24 UTC; 1 point)

- 's comment on How an alien theory of mind might be unlearnable by (3 Jan 2022 21:56 UTC; 1 point)

- 's comment on [SEQ RERUN] Pascal’s Mugging: Tiny Probabilities of Vast Utilities by (1 Oct 2011 21:13 UTC; 1 point)

- Kling, Probability, and Economics by (30 Mar 2009 5:15 UTC; 1 point)

- 's comment on Average utilitarianism must be correct? by (8 Apr 2009 13:44 UTC; 1 point)

- 's comment on Inadequacy and Modesty by (EA Forum; 31 Oct 2017 14:49 UTC; 0 points)

- 's comment on Natural Laws Are Descriptions, not Rules by (14 Aug 2012 1:43 UTC; 0 points)

- 's comment on You cannot be mistaken about (not) wanting to wirehead by (22 Jan 2013 2:12 UTC; 0 points)

- 's comment on [LINK] stats.stackexchange.com question about Shalizi’s Bayesian Backward Arrow of Time paper by (17 May 2012 16:31 UTC; 0 points)

- 's comment on Rationality Quotes August 2013 by (16 Aug 2013 20:15 UTC; 0 points)

- 's comment on Mysterious Answers to Mysterious Questions by (17 Oct 2012 16:35 UTC; 0 points)

- 's comment on What’s wrong with this picture? by (28 Jan 2016 16:04 UTC; 0 points)

- 's comment on Pascal’s wager by (23 Apr 2013 19:52 UTC; 0 points)

- 's comment on Probability is Subjectively Objective by (29 Jul 2011 20:42 UTC; 0 points)

- 's comment on On not getting a job as an option by (19 Apr 2015 21:03 UTC; 0 points)

- 's comment on Born rule or universal prior? by (2 Jul 2011 5:56 UTC; 0 points)

- 's comment on Probability is in the Mind by (6 May 2011 18:47 UTC; 0 points)

- 's comment on Baysian conundrum by (13 Oct 2014 5:30 UTC; 0 points)

- 's comment on Bet or update: fixing the will-to-wager assumption by (8 Jun 2017 3:19 UTC; 0 points)

- 's comment on The Fallacy of Gray by (14 Jan 2013 9:31 UTC; -1 points)

- 's comment on Post Your Utility Function by (11 Jun 2009 14:36 UTC; -2 points)

- 's comment on On the unpopularity of cryonics: life sucks, but at least then you die by (6 Aug 2011 22:29 UTC; -4 points)

It seems to me you’re using “perceived probability” and “probability” interchangeably. That is, you’re “defining” probability as the probability that an observer assigns based on certain pieces of information. Is it not true that when one rolls a fair 1d6, there is an actual 1⁄6 probability of getting any one specific value? Or using your biased coin example: our information may tell us to assume a 50⁄50 chance, but the man may be correct in saying that the coin has a bias—that is, the coin may really come up heads 80% of the time, but we must assume a 50% chance to make the decision, until we can be certain of the 80% chance ourselves. What am I missing? I would say that the Gomboc (http://tinyurl.com/2rffxs) has a 100% chance of righting itself, inherently. I do not understand how this is incorrect.

“Is it not true that when one rolls a fair 1d6, there is an actual 1⁄6 probability of getting any one specific value?”

No. The unpredictability of a die roll or coin flip is not due to any inherent physical property of the objects; it is simply due to lack of information. Even with quantum uncertainty, you could predict the result of a coin flip or die roll with high accuracy if you had precise enough measurements of the initial conditions.

Let’s look at the simpler case of the coin flip. As Jaynes explains it, consider the phase space for the coin’s motion at the moment it leaves your fingers. Some points in that phase space will result in the coin landing heads up; color these points black. Other points in the phase space will result in the coin landing tails up; color these points white. If you examined the phase space under a microscope (metaphorically speaking) you would see an intricate pattern of black and white, with even a small movement in the phase space crossing many boundaries between a black region and a white region.

If you knew the initial conditions precisely enough, you would know whether the coin was in a white or black region of phase space, and you would then have a probability of either 1 or 0 for it coming up heads.

It’s more typical that we don’t have such precise measurements, and so we can only pin down the coin’s location in phase space to a region that contains many, many black subregions and many, many white subregions… effectively it’s just gray, and the shade of gray is your probability for heads given your measurement of the initial conditions.

So you see that the answer to “what is the probability of the coin landing heads up” depends on what information you have available.

Of course, in practice you typically don’t even have the lesser level of information assumed above—you don’t know enough about the coin, even in principle, to compute which points in phase space are black and which are white, or what proportion of the points are black versus white in the region corresponding to what you know about the initial conditions. Here’s where symmetry arguments then give you P(heads) = 1⁄2.

Case in point:

There are dice designed with very sharp corners in order to improve their randomness.

If randomness were an inherent property of dice, simply refining the shape shouldn’t change the randomness, they are still plain balanced dice, after all.

But when you think of a “random” throw of the dice as a combination of the position of the dice in the hand, the angle of the throw, the speed and angle of the dice as they hit the table, the relative friction between the dice and the table, and the sharpness of the corners as they tumble to a stop, you realize that if you have all the relevant information you can predict the roll of the dice with high certainty.

It’s only because we don’t have the relevant information that we say the probabilities are 1⁄6.

I’m curious about how how quantum uncertainty works exactly. You can make a prediction with models and measurements, but when you observe the final result, only one thing happens. Then, even if an agent is cut off from information (i.e. observation is physically impossible), it’s still a matter of predicting/mapping out reality.

I don’t know much about the specifics of quantum uncertainty, though.

Not necessarily, because of quantum uncertainty and indeterminism—and yes, they can affect macroscopic systems.

The deeper point is, whilst there is a subjective ignorance-based kind of probability, that does not by itself mean there is not an objective, in-the-territory kind of 0<p<1 probability. The latter would be down to how the universe works, and you can’t tell how the universe works by making conceptual, philosophical-style arguments.

So the kind of probability that is in the mind is in the mind, and the other kind is a separate issue. (Of course, the existence of objective probability doesn’t follow from the existence of subjective probability any more than its non existence does).

GBM:

Q: What is the probability for a pseudo-random number generator to generate a specific number as his next output?

A: 1 or 0 because you can actually calculate the next number if you have the available information.

Q: What probability do you assign to a specific number as being it’s next output if you don’t have the information to calculate it?

Replace pseudo-random number generator with dice and repeat.

Even more important, I think, is the realization that, to decide how much you’re willing to bet on a specific outcome, all of the following are essentially the same:

you do have the information to calculate it but haven’t calculated it yet

you don’t have the information to calculate it but know how to obtain such information.

you don’t have the information to calculate it

The bottom line is that you don’t know what the next value will be, and that’s the only thing that matters.

So therefore a person with perfect knowledge would not need probability. Is this another interpretation of “God does not play dice?” :-)

I think this is the only interpretation of “God does not play dice.”

At least in its famous context, I always interpreted that quote as a metaphorical statement of aesthetic preference for a deterministic over a stochastic world, rather than an actual statement about the behavior of a hypothetical omniscient being. A lot of bullshit’s been spilled on Einstein’s religious preferences, but whatever the truth I’d be very surprised if he conditioned his response to a scientific question on something that speculative.

This is more or less what I was saying, but left (perhaps too) much of it implicit.

If there were an entity with perfect knowledge of the present (“God”), they would have perfect knowledge of the future, and thus “not need probability”, iff the universe is deterministic. (If there is an entity with perfect knowledge of the future of a nondeterministic reality, we have described our “reality” too narrowly—include that entity and it is necessarily deterministic or the perfect knowledge isn’t).

Not exactly. Even someone with perfect knowledge would still need probability, they’d just know the odds.

What Einstein meant by that was not literal god but the universe. He refused to accept some things could be random like QM.

The problem with QM is that it’s fundamentally indeterministic, perfect knowledge won’t change that (and that’s what he refused to accept). This is just Eliezer again speaking on things he has no knowledge about.

Alas, the coin was part of an erroneous stamping, and is blank on both sides.

In other words, probability is not likelihood.

Here is another example me, my dad and my brother came up with when we were discussing probability.

Suppose there are 4 card, an ace and 3 kings. They are shuffled and placed face side down. I didn’t look at the cards, my dad looked at the first card, my brother looked at the first and second cards. What is the probability of the ace being one of the last 2 cards. For me: 1⁄2 For my dad: If he saw the ace it is 0, otherwise 2⁄3. For my brother: If he saw the ace it is 0, otherwise 1.

How can there be different probabilities of the same event? It is because probability is something in the mind calculated because of imperfect knowledge. It is not a property of reality. Reality will take only a single path. We just don’t know what that path is. It is pointless to ask for “the real likelihood” of an event. The likelihood depends on how much information you have. If you had all the information, the likelihood of the event would be 100% or 0%.

The competent frequentist would presumably not be befuddled by these supposed paradoxes. Since he would not be befuddled (or so I am fairly certain), the “paradoxes” fail to prove the superiority of the Bayesian approach. Frankly, the treatment of these “paradoxes” in terms of repeated experiments seems to straightforward that I don’t know how you can possibly think there’s a problem.

Say you have a circle. On this circle you draw the inscribed equilateral triangle.

Simple, right?

Okay. For a random chord in this circle, what is the probability that the chord is longer than the side in the triangle?

So, to choose a random chord, there are three obvious methods:

Pick a point on the circle perimeter, and draw the triangle with that point as an edge. Now when you pick a second point on the circle perimeter as the other endpoint of your chord, you can plainly see that in 1⁄3 of the cases, the resulting chord will be longer than the triangles’ side.

Pick a random radius (line from center to perimeter). Rotate the triangle so one of the sides bisect this radius. Now you pick a point on the radius to be the midpoint of your chord. Apparently now, the probability of the chord being longer than the side is 1⁄2.

Pick a random point inside the circle to be the midpoint of your chord (chords are unique by midpoint). If the midpoint of a chord falls inside the circle inscribed by the triangle, it is longer than the side of the triangle. The inscribed circle has an area 1⁄4 of the circumscribing circle, and that is our probability.

WHAT NOW?!

The solution is to choose the distribution of chords that lets us be maximally indifferent/ignorant. I.e. the one that is both scale, translation and rotation invariant (i.e. invariant under Affine transformations). The second solution has those properties.

Wikipedia article)

“Probabilities express uncertainty, and it is only agents who can be uncertain. A blank map does not correspond to a blank territory. Ignorance is in the mind.”

Eliezer, in quantum mechanics, one does not say that one does not have knowledge of both position and momentum of a particle simultaneously. Rather, one says that one CANNOT have such knowledge. This contradicts your statement that ignorance is in the mind. If quantum mechanics is true, then ignorance/uncertainty is a part of nature and not just something that agents have.

Wither knowledge. It is not knowledge that causes this effect, it is the fact that momentum amplitude and position amplitude relates to one another by a fourier transform.

A narrow spike in momentum is a wide blob in position and vice versa by mathematical necessity.

Quantum mechanics’ apparent weirdness comes from wanting to measure quantum phenomena with classical terms.

Not really. The quantum weirdness isn’t due to measuring it with classical terms, it’s more like that’s just how it is at that level. Spooky action, non locality, and uncertainty.

In fact it’s fundamentally random at that level and by definition uncertain. Even mathematically that’s the case.

Eliezer is still wrong, though that wouldn’t surprise me because he doesn’t have a degree in this stuff. It’s also odd to argue something is in the mind when your other writings suggest you don’t believe in such things. I mean if you want to get technical...all of this is in the mind, even caring about being “rational” so such a statement sounds moot to me.

Are you certain? Can you know things without being certain?

“Are you certain? Can you know things without being certain?”

Because I said so.

???

There’s a mathematical definition of randomness, but it’s not the same thing causal randomness, which is what we are concerned with here. Probability is (only) in the mind if it is not in the territory.

The best evidence that it is in the territory is QM—but there are causally deterministic interpretations of QM, so it isn’t a fact that QM is indeterministic. But it also isn’t a fact that it’s deterministic , so it isn’t a fact that probability is (only) in the mind.

Yeah but we don’t know that, and the evidence today is that QM is fundamentally indeterministic, and getting moreso each day.

EY thinks there is some territory to compare to but the fact is it’s all maps and we use different ones for different things. His “multi-level reality” that he uses to dismiss anything above the elementary particles as not real isn’t true, because what something “really is” is philosophy and not science.

He doesn’t seem to acknowledge that all of it is maps and we don’t have the territory and never will.

It’s not a fact that it’s all maps. Where we.live is the territory, where the maps exist is the territory. What we lack is the ability to make direct comparisons between map and territory. “There is a territory” and ” we can mak e comparisons to the territoty” are different claims.

No. Those are maps too. We don’t have direct access or knowledge of reality, just maps. There is no “territory” as far as we know, that’s how science works.

To think you know what the territory is is hubris. But yeah, it’s all maps. It started with Plato’s allegory of the Cave and so far there is no way to get past that. Kant said the same.

It’s all maps, all we can do is just adjust them better.

Also EY says people don’t exist because they’re just “models” so by him no “one” “lives” anywhere.

What does “those” refer to?

We do have indirect access, since our empirical data come from reality.

No, empirical evidence is not a “map” as in theory. It is used to test maps. Scientific maps, theories, are not free creations.

Of course not. Science is the study of reality.

Complaints about the knowability of reality just don’t lead to the conclusion it doesn’t exist. And the conclusion is absurd.

So what? I wasn’t making that claim. Only that it is. The ontological claim.

Plato was a realist. Ontologically. The Cave is about indirect knowledge.

Kant made the exact argument, which I also made, that a non mental reality is the source of our sense data.

If “it” means our knowledge, yes. But you keep stating it as an ontological claim.

Those maps “Refer” to what we “assume” is reality, but like I said all we have is maps not reality. You say our “empirical data comes from reality” but it doesn’t, it comes from our senses, which don’t accurately reflect reality.

The “evidence” is still just a map, because we cannot be certain such a thing is reality itself. Science isn’t technically the study of reality, it’s more like just about making models that work. Even science itself cannot prove reality is real and is more concerned with explanations or what’s good enough rather than truth.

The “knowability” of reality is a big part of many people’s belief that drives them to engage with it, but every philosopher comes to the same realization that we cannot definitively know if it’s possible to know it, or if there even is one. Reminds me of the hard problem of solipsism.

“So what? I wasn’t making that claim. Only that it is. The ontological claim.”

But you don’t even have that, it’s an assumption you’re making.

“Plato was a realist. Ontologically. The Cave is about indirect knowledge.”

No he wasn’t, because he believed the “Forms” where the highest and most fundamental reality, not the material world. Also the cave is not about indirect knowledge but about the limits of our knowledge and how can we truly know reality.

“Kant made the exact argument, which I also made, that a non mental reality is the source of our sense data.”

Then he goes on to say that there is no way to know such a reality because it will only ever be as it appears to us and never as it is. Some philosophers go on to say that we might never know, and there is no way to check.

“If “it” means our knowledge, yes. But you keep stating it as an ontological claim.”

“It” refers to reality. Reality is always going to be a map, never the territory, no matter how hard you try there is nothing to support there being an actual territory. That’s a belief, an axiom we hold.

But like I said, he argues people don’t exist and are just “patterns of atoms” so it’s not like there is anyone to “know” this stuff anyway.

Any map that isn’t explicitly fictional attempts to describe some sort of reality. The fact that they intend or purport to represent a reality outside of themselves is a feature they all have in common. They are all , at least, about reality , in general, aside from how exact they are

If we believe the map, we take it’s details to be a detailed description of reality. Otherwise not. The theories we disbelieve in, we regard as failed attempts to describe reality …but still attempts, unlike fiction.

You may be hung up on a “nothing avails except perfection” assumption...that a map cannot be said to be about reality at all unless it is in perfect correspondence therewith.

Reality is whatever exists. You and I exist. The bare fact of existence can be justified without offering a completely accurate description. The dark side of the moon existed before it was mapped...how could it not?

“Empirical data comes from external reality” in the sense that external reality is the ultimate cause of them. Our senses might not accurately reflect reality, but that’s a further issue...just as accurate representation is a further issue to attempted, purported representation.

You need to justify an “either map or territory, not some third thing” premise.

I’m not convinced that many scientists are motivated to go into the lab , to make better predictions.

There has to be some kind of reality. even if unknowable, or not otherwise specified.

But science may not be able to prove a particular model of reality

Again, the ontological question and the epistemological question are separate.

Maybe. That doesn’t mean reality doesn’t exist as thing. It means perfect knowledge is impossible.

That also doesn’t mean reality doesn’t exist as thing. It means perfect knowledge is impossible.

That’s a huge leap from the previous.

Reality is whatever exists. You exist....

I exist. Even if solipsism is true there is a reality. Even if there is nothing but maps, there is a reality.

Yes, he was a realist because he thought the Forms were real. It doesn’t matter that they are supposed to be immaterial, because realism is not a synonym for materialism.

Yes. He believed that a form of direct knowledge was available to the few. Unlike Kant, who rejected noumenal knowledge entirely.

Yep. That’s what I’ve been calling the epistemological claim. He also thought that the posit of a (possibly unknowable) reality was needed … there’s a Refutation of Idealism in the first Critique.

Again, that’s the epistemological claim, which, again, I don’t dispute.

You are just repeating your claims. I have argued to the contrary several times.

Who’s he? Kant?

“Any map that isn’t explicitly fictional attempts to describe some sort of reality. The fact that they intend or purport to represent a reality outside of themselves is a feature they all have in common. They are all , at least, about reality , in general, aside from how exact they are

If we believe the map, we take it’s details to be a detailed description of reality. Otherwise not. The theories we disbelieve in, we regard as failed attempts to describe reality …but still attempts, unlike fiction.

You may be hung up on a “nothing avails except perfection” assumption...that a map cannot be said to be about reality at all unless it is in perfect correspondence therewith.”

No, I merely acknowledge that what I know might not describe reality at all, just what I observe and experience. Hence why I said it’s all maps and not really reality. Trying to describe reality leads to infinite regress.

“Reality is whatever exists. You and I exist. The bare fact of existence can be justified without offering a completely accurate description. The dark side of the moon existed before it was mapped...how could it not?”

Debatable, especially on the exist part. You say the dark side of the moon existed before being mapped but that is just an assumption you’re making. Everything you’ve said thus far is assumption, not fact. I know I exist, other people though I cannot prove (doesn’t mean they don’t). Also something doesn’t have to exist to be real.

“”Empirical data comes from external reality” in the sense that external reality is the ultimate cause of them. Our senses might not accurately reflect reality, but that’s a further issue...just as accurate representation is a further issue to attempted, purported representation.”

Again, another assumption you are making. You are assuming too many things in your replies to me.

“You need to justify an “either map or territory, not some third thing” premise.”

I don’t, you’re the one who needs to justify some territory that is making it.

“That’s a huge leap from the previous.

Reality is whatever exists. You exist....”

Well no, reality IS, that’s it. Anything else is your assumptions about it.

“I exist. Even if solipsism is true there is a reality. Even if there is nothing but maps, there is a reality.”

Not necessarily, again still more assumptions.

“Yes, he was a realist because he thought the Forms were real. It doesn’t matter that they are supposed to be immaterial, because realism is not a synonym for materialism.”

Realism is a synonym for materialism, that’s why he wasn’t one. He thought the ideas were more real than the material, so he was more of an idealist than anything else.

“Yes. He believed that a form of direct knowledge was available to the few. Unlike Kant, who rejected noumenal knowledge entirely.”

Then he was mistaken, which is unfortunate because he hit the mark with the Cave, just not how he thought.

“Yep. That’s what I’ve been calling the epistemological claim. He also thought that the posit of a (possibly unknowable) reality was needed … there’s a Refutation of Idealism in the first Critique.”

Then he was mistaken too, the only real honest person about it might have been Descartes who acknowledged he had no way of knowing if there is an external reality.

“You are just repeating your claims. I have argued to the contrary several times.”

You haven’t, you merely assert there is a territory but there is no reason to suggest that or evidence either. It’s pure belief.

“Who’s he? Kant?”

Eliezer argued that in the sequences, that humans aren’t real because they’re just a “higher level abstraction model” and not the territory, even though he’s just arbitrarily deciding what’s real and what isn’t.

But I digress, all you’ve made is assumptions about some external reality that is giving you this data, there is no evidence to suggest that. It’s merely an assumption being made, an axiom you have to hold. We all do, among many others.

There is only maps, no territory. Or rather the map is the territory I guess.

You have claimed something much less mere than that:-

The claim that it’s all maps, ontologically, doesn’t follow from the claim that we have no knowledge or reality at all, epistemically..and that doesn’t follow from “might not describe reality at all”.

You have a sort of motte-and-bailey or slippery slope going on. It’s defensible to claim that our knowledge is uncertain, but it’s not the same as claiming there is no reality .. as an ontological entity.

Why?

It’s an explanatory hypothesis. We explain things as fitting into regular pattens. Every other planet we observe is a spheroid...so why would the moon be the only hemisphere?

Yes, it’s uncertain...no, that doesn’t mean it’s necessarily false. Even random guesses can be true. Again, the strong claims you are making don’t actually follow from the weak ones.

Consider the alternative: refusing to make explanatory posits leaves you being unable to explain. What’s the advantage in that? Anti realism just leaves you being able to do less.

Note that science is based on making revisable explanatory hypotheses...not on certainty...nor on giving up and leaving everything unexplained.

Again, another explanatory hypothesis.

To repeat my previous arguments:-

i) maps require territories;

ii) the questioner has to exist somewhere;

iii) it’s a useful explanation to regard external reality as the source of our sensory input.

How can something exist, but not be part of reality?

The Platonic Forms are not ideas in an individual’s mind, they are external. So he was a realist about them...and they are not material...so realism and materialism are not the same.

Remember, you are claiming that it is nonexistent, not just unknowable.

He might be confused about reduction versus elimination. So what?

Assumptions aren’t necessarily wrong. I have never said my ontology is necessarily right.

I have been pointing out the huge leap between “we cannot be completely certain” and “there is no territory”.

“The claim that it’s all maps, ontologically, doesn’t follow from the claim that we have no knowledge or reality at all, epistemically..and that doesn’t follow from “might not describe reality at all”.

You have a sort of motte-and-bailey or slippery slope going on. It’s defensible to claim that our knowledge is uncertain, but it’s not the same as claiming there is no reality .. as an ontological entity.”

It is the same, in a sense. You could just say we have an expeirence at the most bare level, and that to posit a reality that exists beyond that is not justified. Claiming there is a reality as an ontological entity is similar to saying knowledge is uncertain because you are assuming the existence of a thing you can never truly know.

“It’s an explanatory hypothesis. We explain things as fitting into regular pattens. Every other planet we observe is a spheroid...so why would the moon be the only hemisphere?

Yes, it’s uncertain...no, that doesn’t mean it’s necessarily false. Even random guesses can be true. Again, the strong claims you are making don’t actually follow from the weak ones.

Consider the alternative: refusing to make explanatory posits leaves you being unable to explain. What’s the advantage in that? Anti realism just leaves you being able to do less.

Note that science is based on making revisable explanatory hypotheses...not on certainty...nor on giving up and leaving everything unexplained.”

Why indeed, but it does not follow that just because every other planet appears to be such that the moon is as well.

Anti-realism doesn’t leave you being able to do less, it just means that what we take to be true might not correspond to reality itself but might just be internally consistent, and some findings recently in QM might support Anti-realism, including the recent discovery that the universe is not locally real. It’s closer to instrumentalism. Given how our experience of reality is affected by what we believe, realism is shaky in terms of evidence.

Science itself does not prove independent reality or describe reality.

“To repeat my previous arguments:-

i) maps require territories;

ii) the questioner has to exist somewhere;

iii) it’s a useful explanation to regard external reality as the source of our sensory input.”

Number 1 is not necessarily true, neither is number 2, and number 3 is also not really true as there is no need to posit a source for what you are experiencing (see several eastern philosophies arguing that point.

“How can something exist, but not be part of reality?”

How indeed.

“The Platonic Forms are not ideas in an individual’s mind, they are external. So he was a realist about them...and they are not material...so realism and materialism are not the same.”

They are the same, hence why he was more an idealist and not a realist. But realism and materialism are the same thing.

“He might be confused about reduction versus elimination. So what?”

That’s more or less the main issue I have with him. He seems to think there is only one fundamental level and that is of elementary particles (which is just a map not the “territory”) and that our notions of objects beyond that are just “multi-level models” and not real. Meaning there are no people or planes, just atoms. That there isn’t separate laws of physics applying to “Separate objects” (but...technically speaking there are, in a sense).

I’m saying that if it is justified...not certain, but to some extent justified..so long as it’s it’s explanatory.

I am positing the existence of a thing with some level of justification. I don’t accept either version of all-or-nothing. I don’t believe you have to have certain knowledge of X to suppose that it exists , on the balance of evidence...and I don’t believe you have to have complete knowledge of X to suppose that it exists , on the balance of evidence.

Now, you can say I haven’t proved any of that...and I can say you haven’t proved all-or-nothing.

It doesn’t follow with certainty , but it does follow with decent plausibility. Plausibility is an epistemic level between All and Nothing

No , that’s not anti realism. Anti realism is the definitive , certain claim that there is no reality. As such, as an ontological claim, it cannot be based on a fallibilist epistemology.

Might not. That’s just fallibilism...the “you can’t be certain” claim again. It’s still not the same as the “you are wrong about everything claim”, which itself is still not the same.as the”reality doesn’t exist claim”.

What you are saying isn’t necessarily true either. So we ether give up entirely, or procede by some probabilistic balance of explanation and evidence.

Suddenly, you know how reality works!

“No , that’s not anti realism. Anti realism is the definitive , certain claim that there is no reality. As such, as an ontological claim, it cannot be based on a fallibilist epistemology.”

That isn’t anti-realism, anti-realism is against the claim that there is a definitive reality that our knowledge maps to and that knowledge is more instrumental rather than “About” anything. It’s quite compatible with fallibilism, not to mention recent findings in QM (allegedly).

“What you are saying isn’t necessarily true either. So we ether give up entirely, or procede by some probabilistic balance of explanation and evidence.”

Or just do what the Pyrrhonists do and go by appearances. But you are asserting that there is some reality with no evidence for it. It’s an axiom held, which is fine but admit that much at least.

You also avoided that bit I added about him saying people don’t exist, so by that logic I’m arguing with no one.

Heck if you really want to get down to it you could argue that we can learn/know nothing because it’s all just concepts we made up and not reality.

Again that’s ambiguous between “reality doesn’t exist” and “we don’t know reality”.

It happens to be the case that in English the word “reality” can be used both ways. You can use it in a territory sense, to mean an object of knowledge—this book is non-fiction, so it is about reality; and you can use it in a map sense, to label a successful representation—this portrait is highly realiastic.

But that’s only a quirk of English, not a deep insight!

So is realism. But anti realism needs to be supported somehow, because it’s not the default...the default is that theories are explicitly held to be about reality.

Whatever that means. It’s not a fact that QM has to be interpreted subjectively. QM describes a reality, but a non classical reality. You said so your self:

That’s a string of statements that purport to be about reality.

It’s an explanatory hypothesis.

https://en.m.wikipedia.org/wiki/Abductive_reasoning

Explanatory hypotheses are justified by the work they do in explaining observations. The problem with instrumentalism and antirealism is that the same work is not done in another way...you have to settle for less.

Yudkowsky? I don’t think he really means that , and I’m not defending him anyway. Just because his version of realism is broken—if it is—doesn’t mean mine is.

I wouldnt, because its an error to suppose that no concept can refer, and no proposition can correspond. Its just missing the very basic fact that maps are intentional.

https://en.m.wikipedia.org/wiki/Intentionality

“Again that’s ambiguous between “reality doesn’t exist” and “we don’t know reality”.

It happens to be the case that in English the word “reality” can be used both ways. You can use it in a territory sense, to mean an object of knowledge—this book is non-fiction, so it is about reality; and you can use it in a map sense, to label a successful representation—this portrait is highly realiastic.

But that’s only a quirk of English, not a deep insight!”

It’s only ambiguous if you don’t understand it. It’s pretty clear to me. It’s saying that knowledge doesn’t really correlate to some independent reality “out there” more like it just has to be internally consistent. It’s not what you’re saying.

But no, reality isn’t used that way in English, it usually refers to “what is” absent any limiting factors. It’s not used to mean a successful representation, that’s a different word. But of course the problem is that you can’t really know what “is”, as that question is just turtles all the way down.

I’d also call that a deep insight, not a quirk of English, especially since language is what we have to navigate and make sense of all this stuff (well I’d scratch the insight part since it’s not really accurate).

“So is realism. But anti realism needs to be supported somehow, because it’s not the default...the default is that theories are explicitly held to be about reality.”

Ironically no, it’s realism that has to be supported, but everyone just assumes it’s the case when really the burden is on it not anti-realism. Anti-realism isn’t making any additional claims while realism is.

“Whatever that means. It’s not a fact that QM has to be interpreted subjectively. QM describes a reality, but a non classical reality. You said so your self:”

You misread what I said, QM actually might suggest that there is no “objective and independent” reality. That means all reality.

“It’s an explanatory hypothesis.

https://en.m.wikipedia.org/wiki/Abductive_reasoning

Explanatory hypotheses are justified by the work they do in explaining observations. The problem with instrumentalism and antirealism is that the same work is not done in another way...you have to settle for less.”

It’s not, it’s an axiom that you hold. Also “plausible” seems weak given the host of philosophical arguments made that undermined abductive reasoning. There is no real “problem” with instrumentalism and anti-realism, they’re honest in their claims about the world and the limits of human ability.

Again you’re asserting something without evidence, and what is plausible can very from person to person, it’s not really an objective standard for what is likely. Descartes pretty much blew a hole through that one too.

“I wouldnt, because its an error to suppose that no concept can refer, and no proposition can correspond. Its just missing the very basic fact that maps are intentional.

https://en.m.wikipedia.org/wiki/Intentionality″

Or maybe they’re not, like I said it could refer to nothing outside, just that it has to be internally consistent, in which case anything could work.

“Yudkowsky? I don’t think he really means that , and I’m not defending him anyway. Just because his version of realism is broken—if it is—doesn’t mean mine is.”

Most folks here seem to, some even saying people are biased just because they want patterns of atoms to be people, whatever the hell that means.

Explain why scientists conduct experiments. How can that be relevant to internal consistency?

Explain why it is impossible for human knowledge to correlate to reality, even by accident. Is it because there is no external reality , as you sometimes say?

https://en.m.wikipedia.org/wiki/Realism_(arts)

it’s ambiguous to me if you don’t explain it.

Expand on that please?

That’s a common fallacy. It’s still an experimental science.

That’s just contradiction. You need to argue your points.

What is subjective judged plausible can vary. That’s a far cry from no realists.

They obviously are. A map of Sweden represents sweden.

Whatever.

“Explain why scientists conduct experiments. How can that be relevant to internal consistency?

Explain why it is impossible for human knowledge to correlate to reality, even by accident. Is it because there is no external reality , as you sometimes say?”

It’s how science is. Science itself admits that it doesn’t prove anything and that our knowledge might be more instrumental than about reality itself. It’s the first thing you learn and something each of them keeps in mind, that it could all be wrong, but it works.

As for external reality, I cannot say for sure, and there are plenty of arguments that show that skepticism about external reality cannot be refuted.

“https://en.m.wikipedia.org/wiki/Realism_(arts)″

Do I really have to spell out why that doesn’t meant much? It just reflects how humans perceive it, not that it corresponds to reality itself.

“Expand on that please?”

It’s mostly based on Kant’s idea that reality is only as it ever appears to us and there is no way to really know if you are at the ground level or if there is another one underneath it. Even if you get out of the simulation you only know that world wasn’t real, you can’t say that about the new one. It’s just turtles the whole way down.

“That’s a common fallacy. It’s still an experimental science.”

It’s actually not.

“That’s just contradiction. You need to argue your points.”

There is nothing to argue when you assert something without evidence. External reality is an axiom we hold, it cannot be proven.

“They obviously are. A map of Sweden represents sweden.”

Well no, it represents the idea of Sweden.

Constant: The competent frequentist would presumably not be befuddled by these supposed paradoxes.

Not the last two paradoxes, no. But the first case given, the biased coin whose bias is not known, is indeed a classic example of the difference between Bayesians and frequentists. The frequentist says:

According to the frequentist, apparently there is no rational way to manage your uncertainty about a single flip of a coin of unknown bias, since whatever you do, someone else will be able to criticize your belief as “subjective”—such a devastating criticism that you may as well, um, flip a coin. Or consult a magic 8-ball.

Sudeep: If quantum mechanics is true, then ignorance/uncertainty is a part of nature and not just something that agents have.

A common misconception—Jaynes railed against that idea too, and he wasn’t even equipped with the modern understanding of decoherence. In quantum mechanics, it’s an objective fact that the blobs of amplitude making up reality sometimes split in two, and you can’t predict what “you” will see, when that happens, because it is an objective fact that different versions of you will see different things. But all this is completely mechanical, causal, and deterministic—the splitting of observers just introduces an element of anthropic pseudo-uncertainty, if you happen to be one of those observers. The splitting is not inherently related to the act of measurement by a conscious agent, or any kind of agent; it happens just as much when a system is “measured” by a photon bouncing off and interacting with a rock.

There are other interpretations of quantum mechanics, but they don’t make any sense. Making this fully clear will require more prerequisite posts first, though.

I always found it really strange that EY believes in Bayesianism when it comes to probability theory but many worlds when it comes to quantum physics. Mathematically, probability theory and quantum physics are close analogues (of which quantum statistical physics is the common generalisation), and this extends to their interpretations. (This doesn’t apply to those interpretations of quantum physics that rely on a distinction between classical and quantum worlds, such as the Copenhagen interpretation, but I agree with EY that these don’t ultimately make any sense.) There is a many-worlds interpretation of probability theory, and there is a Bayesian interpretation of quantum physics (to which I subscribe).

I need to write a post about this some time.

Both of these are false. Consider the trillionth binary digit of pi. I do not know what it is, so I will accept bets where the payoff is greater than the loss, but not vice versa. However, there is obviously no other world where the trillionth binary digit of pi has a different value.

The latter is, if I understand you correctly, also wrong. I think that you are saying that there are ‘real’ values of position, momentum, spin, etc., but that quantum mechanics only describes our knowledge about them. This would be a hidden variable theory. There are very many constraints imposed by experiment on what hidden variable theories are possible, and all of the proposed ones are far more complex than MWI, making it very unlikely that any such theory will turn out to be true.

I am saying that the wave function (to be specific) describes one’s knowledge about position, momentum, spin, etc., but I make no claim that these have any ‘real’ values.

In the absence of a real post, here are some links:

John Baez (ed, 2003), Bayesian Probability Theory and Quantum Mechanics (a collection of Usenet posts, with an introduction);

Carlton Caves et al (2001), Quantum probabilities as Bayesian probabilities (a paper published in Physical Reviews A).

By the way, you seem to have got this, but I’ll say it anyway for the benefit of any other readers, since it’s short and sums up the idea: The wave function exists in the map, not in the territory.

Please explain how you know this?

ETA: Also, whatever does exist in the territory, it has to generate subjective experiences, right? It seems possible that a wave function could do that, so saying that “the wave function exists in the territory” is potentially a step towards explaining our subjective experiences, which seems like should be the ultimate goal of any “interpretation”. If, under the all-Bayesian interpretation, it’s hard to say what exists in the territory besides that the wave function doesn’t exist in the territory, then I’m having trouble seeing how it constitutes progress towards that ultimate goal.

I wouldn’t want to pretend that I know this, just that this is the Bayesian interpretation of quantum mechanics. One might as well ask how we Bayesians know that probability is in the map and not the territory. (We are all Bayesians when it comes to classical probability, right?) Ultimately, I don’t think that it makes sense to know such things, since we make the same physical predictions regardless of our interpretation, and only these can be tested.

Nevertheless, we take a Bayesian attitude toward probability because it is fruitful; it allows us to make sense of natural questions that other philosophies can’t and to keep things mathematically precise without extra complications. And we can extend this into the quantum realm as well (which is good since the universe is really quantum). In both realms, I’m a Bayesian for the same reasons.

A half-Bayesian approach adds extra complications, like the two very different maps that lead to same predictions. (See this comment’s cousin in reply to endoself.)

ETA: As for knowing what exists in the territory as an aid to explaining subjective experience, we can still say that the territory appears to consist ultimately of quark fields, lepton fields, etc, interacting according to certain laws, and that (built out of these) we appear to have rocks, people, computers, etc, acting in certain ways. We can even say that each particular rock appears to have a specific value of position and momentum, up to a certain level of precision (which fails to be infinitely precise first because the definition of any particular rock isn’t infinitely precise, long before the level of quantum indeterminacy). We just can’t say that each particular quark has a specific value of position and momentum beyond a certain level of precision, despite being (as far as we know) fundamental, and this is true regardless of whether we’re all-Bayesian or many-worlder. (Bohmians believe that such values do exist in the territory, but these are unobservable even in principle, so this is a pointless belief).

Edit: I used ‘world’ consistently in a technical sense.

Where “extending” seems to mean “assuming”. I find it more fruitful to come up with tests of (in)determinsm, such as Bell’s Inequalitites.

I’m not sure what you mean by ‘assuming’. Perhaps you mean that we see what happens if we assume that the Bayesian interpretation continues to be meaningful? Then we find that it works, in the sense that we have mutually consistent degrees of belief about physically observable quantities. So the interpretation has been extended.

If the universe contains no objective probabilities, it will still contain subjective, ignorance based probabilities.

If the universe contains objective probabilities, it will also still contain subjective, ignorance based probabilities.

So the fact subjective probabilities “work” doesn’t tell you anything about the universe. It isn’t a test.

Aspect’s experiment to test Bells theorem is a test. It tells you there isn’t (local, single-universe) objective probability.

OK, I think that I understand you now.

Yes, Bell’s inequalities, along with Aspect’s experiment to test them, really tell us something. Even before the experiment, the inequalities told us something theoretical: that there can be no local, single-world objective interpretation of the standard predictions of quantum mechanics (for a certain sense of ‘objective’); then the experiment told us something empirical: that (to a high degree of tolerance) those predictions were correct where they mattered.

Like Bell’s inequalities, the Bayesian interpretation of quantum mechanics tells us something theoretical: that there can be a local, single-world interpretation of the standard predictions of quantum mechanics (although it can’t be objective in the sense ruled out by Bell’s inequalities). So now we want the analogue of Aspect’s experiment, to confirm these predictions where it matters and tell us something empirical.

Bell’s inequalities are basically a no-go theorem: an interpretation with desired features (local, single-world, objective true value of all potentially observable quantities) does not exist. There’s a specific reason why it cannot exist, and Aspect’s experiment tests that this reason applies in the real world. But Fuchs et al’s development of the Bayesian interpretation is a go theorem: an interpretation with some desired features (local, single-world) does exist. So there’s no point of failure to probe with an experiment.

We still learn something about the universe, specifically about the possible forms of maps of it. But it’s a purely theoretical result. I agree that Bell’s inequalities and Aspect’s experiment are a more interesting result, since we get something empirical. But it wasn’t a surprising result (which might be hindsight bias on my part). There seem to be a lot of people here (although that might be my bad impression) who think that there is no local, single-world interpretation of the standard predictions of quantum mechanics (or even no single-world interpretation at all, but I’m not here to push Bohmianism), so the existence of the Bayesian interpretation may be the more surprising result; it may actually tell us more. (At any rate, it was surprising once upon a time for me.)

I have not read the latter link yet, though I intend to.

What do you have knowledge of then? Or is there some concept that could be described as having knowledge of something without that thing having an actual value?

From Baez:

This is horribly misleading. Bayesian probability can be applied perfectly well in a universe that obeys MWI while being kept completely separate mathematically from the quantum mechanical uncertainty.

As a mathematical statement, what Baez says is certainly correct (at least for some reasonable mathematical formalisations of ‘probability theory’ and ‘quantum mechanics’). Note that Baez is specifically discussing quantum statistical mechanics (which I don’t think he makes clear); non-statistical quantum mechanics is a different special case which (barring trivialities) is completely disjoint from probability theory.

Of course, the statement can still be misleading; as you note, it’s perfectly possible to interpret quantum statistical physics by tacking Bayesian probability on top of a many-worlds interpretation of non-statistical quantum mechanics. That is, it’s possible but (I argue) unwise; because if you do this, then your beliefs do not pay rent!

The classic example is a spin-1/2 particle that you believe to be spin-up with 50% probability and spin-down with 50% probability. (I mean probability here, not a superposition.) An alternative map is that you believe that the particle is spin-right with 50% probability and spin-left with 50% probability. (Now superposition does play a part, as spin-right and spin-left are both equally weighted superpositions of spin-up and spin-down, but with opposite relative phases.) From the Bayesian-probability-tacked-onto-MWI point of view, these are two very different maps that describe incompatible territories. Yet no possible observation can ever distinguish these! Specifically, if you measure the spin of the particle along any axis, both maps predict that you will measure the spin to be in one direction with 50% probability and in the other direction with 50% probability. (The wavefunctions give Born probabilities for the observations, which are then weighted according to your Bayesian probabilities for the wavefunctions, giving the result of 50% every time.)

In statistical mechanics as it is practised, no distinction is made between these two maps. (And since the distinction pays no rent in terms of predictions, I argue that no distinction should be made.) They are both described by the same ‘density matrix’; this is a generalisation of the notion of quantum state as a wave vector. (Specifically, the unit vectors up to phase in the Hilbert space describe the pure states of the system, which are only a degenerate case of the mixed states described by the density matrices.) A lot of the language of statistical mechanics is frequentist-influenced talk about ‘ensembles’, but if you just reinterpret all of this consistently in a Bayesian way, then the practice of statistical mechanics gives you the Bayesian interpretation.

This is the weak point in the Bayesian interpretation of quantum mechanics. I find it very analogous to the problem of interpreting the Born probabilities in MWI. Eliezer cannot yet clearly answer these questions that he poses:

And neither can I (at least, not in a way that would satisfy him). In the all-Bayesian interpretation, the Born probabilities are simply Bayesian probabilities, so there’s no special problems about them; but as you point out, it’s still hard to say what the territory is like.

My best answer is simply what you suggest, that our maps of the universe assign probabilities to various possible values of things that do not (necessarily) have any actual values. This may seem like a counterintuitive thing to do, but it works, and we have no other way of making a map.

By the way, I’ve thought of a couple more references:

John Baez (1993), This Week’s Finds #27;

Toby Bartels (1998), Quantum measurement problem.

Baez (1993) is where I really learnt quantum statistical mechanics (despite having earlier taken a course in it), and my first (subtle) introduction to the Bayesian interpretation (not made explicit here). Note the talk about the ‘post-Everett school’, and recall that Everett is credited with founding the many-worlds interpretation (although he avoided the term ‘MWI’). The Bayesian interpretation could have been understood in the 1930s (and I have heard it argued, albeit unconvincingly, that it is what Bohr really meant all along), but it’s really best understood in light of the modern understanding of decoherence that Everett started. We all-Bayesians are united with the many-worlders (and the Bohmians) in decrying the mystical separation of the universe into ‘quantum’ and ‘classical’ worlds and the reality of the ‘collapse of the wavefunction’. (That is, we do believe in the collapse of the wavefunction, but not in the territory; for us, it is simply the process of updating the map on the basis of new information, that is the application of a suitably generalised Bayes’s Theorem.) We just think that the many-worlders have some unnecessary ontological baggage (like the Bohmians, but to a lesser degree).

Bartels (1998) is my first attempt to explain the Bayesian interpretation (on Usenet), albeit not a very good one. It’s overly mathematical (and poorly so, since W*-algebras make a better mathematical foundation than C*-algebras). But it does include things that I haven’t said here, (including mathematical details that you might happen to want). Still (even for the mathematics), if you read only one, read Baez.

Edit: I edited to use the word ‘world’ only in the technical sense of an interpretation.

I wrote:

I’ve begun to think that this is probably not a good example.

It’s mathematically simple, so it is good for working out an example explicitly to see how the formalism works. (You may also want to consider a system with two spin-1/2 particles; but that’s about as complicated as you need to get.) However, it’s not good philosophically, essentially since the universe consists of more than just one particle!

Mathematically, it is a fact that, if a spin-1/2 particle is entangled with anything else in the universe, then the state of the particle is mixed, even if the state of the entire universe is pure. So a mixed state for a single particle suggests nothing philosphically, since we can still believe that the universe is in a pure state, which causes no problems for MWI. Indeed, endoself immediately looks at situations where the particle is so entangled! I should have taken this as a sign that my example was not doing its job.

I still stand by my responses to endoself, as far as they go. One of the minor attractions of the Bayesian interpretation for me is that it treats the entire universe and single particles in the same way; you don’t have to constantly remind yourself that the system of interest is entangled with other systems that you’d prefer to ignore, in order to correctly interpret statements about the system. But it doesn’t get at the real point.

The real point is that the entire universe is in a mixed state; I need to establish this. In the Bayesian interpretation, this is certainly true (since I don’t have maximal information about the universe). According to MWI, the universe is in a pure state, but we don’t know which. (I assume that you, the reader, don’t know which; if you do, then please tell me!) So let’s suppose that |psi> and |phi> are two states that the universe might conceivably be in (and assume that they’re orthogonal to keep the math simple). Then if you believe that the real state of the universe is |psi> with 50% chance and |phi> with 50% chance, then this is a very different belief than the belief that it’s (|psi> + |phi>)/sqrt(2) with 50% chance and (|psi> - |phi>)/sqrt(2) with 50% chance. Yet these two different beliefs lead to identical predictions, so you’re drawing a map with extra irrelevant detail. In contrast, in the fully Bayesian interpretation, these are just two different ways of describing the same map, which is completely specified upon giving the density matrix (|psi><phi|)/2.

Edit: I changed uses of ‘world’ to ‘universe’; the former should be reserved for its technical sense in the MWI.

I definitely don’t disagree with that.

They can give different predictions. Maybe I can ask my friend who prepared they quantum state and ey can tell me which it really is. I might even be able to use that knowledge to predict the current state of the apparatus ey used to prepare the particle. Of course, it’s also possible that my friend would refuse to tell me or that I got the particle already in this state without knowing how it got there. That would just be belief in the implied invisible. “On August 1st 2008 at midnight Greenwich time, a one-foot sphere of chocolate cake spontaneously formed in the center of the Sun; and then, in the natural course of events, this Boltzmann Cake almost instantly dissolved.” I would say that this hypothesis is meaningful and almost certainly false. Not that it is “meaningless”. Even though I cannot think of any possible experimental test that would discriminate between its being true, and its being false.

A final possibility is that there never was a pure state; the universe started off in a mixed state. In this example, whether this should be regarded as an ontologically fundamental mixed state or just a lack of knowledge on my part depends on which hypothesis is simpler. This would be too hard to judge definitively given our current understanding.

In MWI, the Born probabilities aren’t probabilities, at least not is the Bayesian sense. There is no subjective uncertainty; I know with very high probability that the cat is both alive and dead. Of course, that doesn’t tell us what they are, just what they are not.

I think a large majority of physicists would agree that the collapse of the wavefunction isn’t an actual process.

How would you analyze the Wigner’s friend thought experiment? In order for Wigner’s observations to follow the laws of QM, both versions of his friend must be calculated, since they have a chance to interfere with each other. Wouldn’t both streams of conscious experience occur?

I don’t understand what you’re saying in these paragraphs. You’re not describing how the two situations lead to different predictions; you’re describing the opposite: how different set-ups might lead to the two states.