Believing In

“In America, we believe in driving on the right hand side of the road.”

Tl;dr: Beliefs are like bets (on outcomes the belief doesn’t affect). “Believing in”s are more like kickstarters (for outcomes the believing-in does affect).

Epistemic status: New model; could use critique.

In one early CFAR test session, we asked volunteers to each write down something they believed. My plan was that we would then think together about what we would see in a world where each belief was true, compared to a world where it was false.

I was a bit flummoxed when, instead of the beliefs-aka-predictions I had been expecting, they wrote down such “beliefs” as “the environment,” “kindness,” or “respecting people.” At the time, I thought this meant that the state of ambient rationality was so low that people didn’t know “beliefs” were supposed to be predictions, as opposed to group affiliations.

I’ve since changed my mind. My new view is that there is not one but two useful kinds of vaguely belief-like thingies – one to do with predictions and Bayes-math, and a different one I’ll call “believing in.” I believe both are lawlike, and neither is a flawed attempt to imitate/parasitize the other. I further believe both can be practiced at once – that they are distinct but compatible.

I’ll be aiming, in this post, to give a clear concept of “believing in,” and to get readers’ models of “how to ‘believe in’ well” disentangled from their models of “how to predict well.”

Examples of “believing in”

Let’s collect some examples, before we get to theory. Places where people talk of “believing in” include:

An individual stating their personal ethical code. E.g., “I believe in being honest,” “I believe in hard work,” “I believe in treating people with respect,” etc.

A group stating the local social norms that group tries to practice as a group. E.g., “Around here, we believe in being on time.”

“I believe in you,” said by one friend or family member to another, sometimes in a specific context (“I believe in your ability to win this race,”) sometimes in a more general context (“I believe in you [your abilities, character, and future undertakings in general]”).

A difficult one-person undertaking, of the sort that’ll require cooperation across many different time-slices of a self. (“I believe in this novel I’m writing.”)

A difficult many-person undertaking. (“I believe in this village”; “I believe in America”; “I believe in CFAR”; “I believe in turning this party into a dance party, it’s gonna be awesome.”)

A political party or platform (“I believe in the Democratic Party”). A scientific paradigm.

A person stating which entities they admit into their hypotheses, that others may not (“I believe in atoms”; “I believe in God”).

It is my contention that all of the above examples, and indeed more or less all places where people naturally use the phrase “believing in,” are attempts to invoke a common concept, and that this concept is part of how a well-designed organism might work.[1]

Inconveniently, the converse linguistic statement does not hold – that is:

People who say “believing in” almost always mean the thing I’ll call “believing in”

But people who say “beliefs” or “believing” (without the “in”) sometimes mean the Bayes/predictions thingy, and sometimes mean the thing I’ll call “believing in.” (For example, “I believe it takes a village to raise a child” is often used to indicate “believing in” a particular political project, even though it does not use the word “in”; also, here’s an example from Avatar.)

A model of “believing in”

My model is that “I believe in X” means “I believe X will yield good returns if resources are invested in it.” Or, in some contexts, “I am investing (some or ~all of) my resources in keeping with X.”

(Background context for this model: people build projects that resources can be invested in – projects such as particular businesses or villages or countries, the project of living according to a particular ethical code, the project of writing a particular novel, the project of developing a particular scientific paradigm, etc.

Projects aren’t true or false, exactly – they are projects. Projects that may yield good or bad returns when particular resources are invested in them.)

For example:

“I believe in being honest” → “I am investing resources in acting honestly” or “I am investing ~all of my resources in a manner premised on honesty” or “I am only up for investing in projects that are themselves premised on honesty” or “I predict you’ll get good returns if you invest resources in acting honestly.” (Which of these it is, or which related sentence it is, depends on context.)

“I believe in this bakery [that I’ve been running with some teammates]” → “I am investing resources in this bakery. I predict that marginal resource investments from me and others will have good returns.”

“I believe in you” → “I predict the world will be a better place for your presence in it. I predict that good things will result from you being in contact with the world, from you allowing the hypotheses that you implicitly are to be tested. I will help you in cases where I easily can. I want others to also help you in cases where they easily can, and will bet some of my reputation that they will later be glad they did.”

“I believe in this party’s potential as a dance party” → “I’m invested in co-creating a good dance party here.”

“I believe in atoms” or “I believe in God” → “I’m invested in taking a bunch of actions / doing a bunch of reasoning in a manner premised on ‘atoms are real and worth talking about’ or ‘God is real.’”[2]

“Good,” in the above predictions about a given investment having “good” results, means some combination of:

Good for my own goals directly;

Good for others’ goals, in such a way that they’ll notice and pay me for the result (perhaps informally and not in money), so that it’s good for my goals after the payment. (E.g., the dance party we start will be awesome, and others’ appreciation will repay me for the awkwardness of starting it.) (Or again: Martin Luther King invested in civil rights, “believing in”-style; eventually social consensus moved; he now gets huge appreciation for this.)

I admit there is leeway here in what exactly I am taking “I believe in X” to mean, depending on context, as is common in natural language. However, I claim there is a common, ~lawlike shape to how to do “believing in”s well, and this shape would be at least partly shared by at least some alien species.

Some pieces of the lawlike shape of “believing in”s:

I am far from having a catalog of the most important features of how to do “believing in”s well. So I’ll list some basics kinda at random, dividing things into two categories:

Features that differ between “believing in”s, and predictions; and

Features that are in common between “believing in”s and predictions.

Some differences between how to “believe in” well, and how to predict well:

A. One should prefer to “believe in” good things rather than bad things, all else equal. (If you’re trying to predict whether it’ll snow tomorrow, or some other thing X that is not causally downstream of your beliefs, then whether X would be good for your goals has no bearing on the probability you should assign to X. But if you’re considering investing in X, you should totally be more likely to do this if X would be good for your goals.)

B. It often makes sense to describe “believing in”s based on strength-of-belief (e.g., “I believe very strongly in honesty”), whereas it makes more sense to describe predictions based on probabilities (e.g. “I assign a ~70% chance that ‘be honest’ policies such as mine have good results on average”).

One can in principle describe both at once – e.g. “Bob is investing deeply in the bakery he’s launching, and is likely to maintain this in the face of considerable hardship, even though he won’t be too surprised if it fails.” Still, the degree of commitment/resource investment (or the extent to which one’s life is in practice premised on X project) makes sense as part of the thing to describe, and if one is going to describe only one variable, it often makes sense as the one main thing to describe – we often care more about how committed Bob is to continuing to launch the bakery than about his odds that the bakery won’t be a loss.

C. “Believing in”s should often be public, and/or be part of a person’s visible identity. Often the returns to committing resources to a project flow partly from other people seeing this commitment – it is helpful to be known to be standing for the ethics one is standing for, it is helpful for a village to track the commitments its members have to it, it is helpful for those who may wish to turn a given party into a dance party to track who else would be in (so as to see if they have critical mass), etc.

D. “Believing in”s should often be in something that fits easily in your and/or others’ minds and hearts. For example, Marie Kondo claims people have an easier time tidying their houses when the whole thing fits within a unified vision that they care about and that sparks joy. This matches my own experience – the resultant tidy house is easier to “believe in,” and is thereby easier to summon low-level processes to care for. IME, it is also easier to galvanize an organization with only one focus – e.g. MIRI seemed to me to work a lot better once the Singularity Summits and CFAR were spun out, and it had only alignment research to focus on – I think because simpler organizations have an easier time remembering what they’re doing and “believing in” themselves.

Some commonalities between how to “believe in” well, and how to predict well:

Despite the above differences, it seems to me there is a fair bit of structure in common between “believing in”s and “belief as in prediction”s, such that English decided to use almost the same word for the two.

Some commonalities:

A. Your predictions, and your “believing in”s, are both premises for your actions. Thus, you can predict much about a person’s actions if you know what they are predicting and believing in. Likewise, if a person picks either their predictions or their “believing in”s sufficiently foolishly, their actions will do them no good.

B. One can use logic[3] to combine predictions, and to combine “believing in”s. That is: if I predict X, and I predict “if X then Y,” I ought also predict Y. Similarly, if I am investing in X – e.g., in a bakery – and my investment is premised on the idea that we’ll get at least 200 visitors/day on average, I ought to have a picture of how the insides of the bakery can work (how long a line people will tolerate, when they’ll arrive, how long it takes to serve each one) that is consistent with this, or at least not have one that is inconsistent.

C. One can update “believing in”s from experience/evidence/others’ experience. There’s some sort of learning from experience (e.g., many used to believe in Marxism, and updated away from this based partly on Stalin and similar horrors).

D. Something like prediction markets is probably viable. I suspect there is often a prediction markets-like thing running within an individual, and also across individuals, that aggregates smaller thingies into larger currents of “believing in.”[4]
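To make commonality B’s bakery example concrete, here is a minimal Python sketch of the kind of consistency check it describes: does a “believed in” premise of ~200 visitors/day at least not contradict one’s picture of how the bakery’s insides work? Every parameter (hours open, minutes per customer, number of servers, the 0.8 utilization cap) is a hypothetical value chosen for illustration, not something from the example.

```python
# Hypothetical consistency check: does the "believed in" premise of ~200
# visitors/day fit inside the bakery's assumed serving capacity?
# Every parameter below is an illustrative assumption, not data.

def daily_capacity(hours_open: float, minutes_per_customer: float, servers: int) -> float:
    """Maximum customers served per day if the counter is never idle."""
    return hours_open * 60 / minutes_per_customer * servers


def premise_is_consistent(visitors_per_day: float, hours_open: float,
                          minutes_per_customer: float, servers: int,
                          max_utilization: float = 0.8) -> bool:
    """True if the premise fits within capacity, leaving slack for uneven arrivals.

    max_utilization < 1 is a rough stand-in for the fact that visitors arrive
    in bursts, so lines form well before the counter is 100% busy.
    """
    capacity = daily_capacity(hours_open, minutes_per_customer, servers)
    return visitors_per_day <= max_utilization * capacity


# 8 hours open, 3 minutes per customer, 1 server -> 160 customers/day at most.
print(daily_capacity(8, 3, 1))               # 160.0
print(premise_is_consistent(200, 8, 3, 1))   # False: premise and picture clash
print(premise_is_consistent(200, 8, 3, 2))   # True: 0.8 * 320 = 256 >= 200
```

The check doesn’t say whether to invest; like “if X then Y” for predictions, it only flags when the premise and the internal picture can’t both hold.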

Example: “believed in” task completion times

Let’s practice disentangling predictions from “believing in”s by considering the common problem of predicting project completion times.

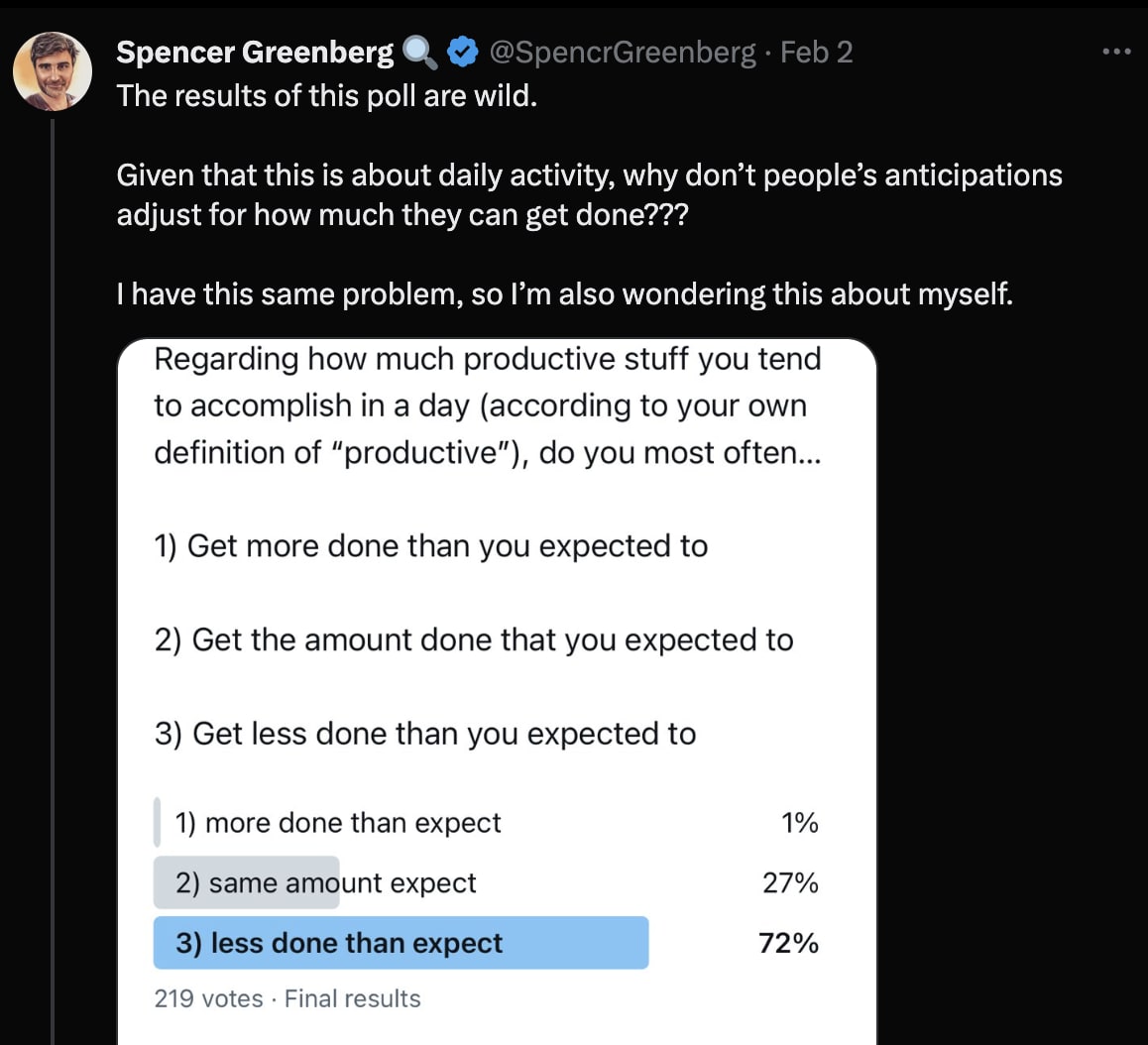

Spencer Greenberg puzzles on Twitter:

It seems to me Spencer’s confusion is due to the fact that people often do a lot of “believing in” around what they’ll get done in what time period (wisely; they are trying to coordinate many bits of themselves into a coherent set of actions), and most don’t have good concepts for distinguishing this “believing in” from prediction.

To see why it can be useful to “believe in” a target completion time: let’s say for concreteness that I’m composing a difficult email. Composing this email involves many subtasks, many of which ought to be done differently depending on my chosen (quality vs time) tradeoff: I must figure out what word to use in a given spot; I must get clearer on the main idea I’m wanting to communicate; I must decide if a given paragraph is good enough or needs a rewrite; and so on. If I “believe in” a target completion time (“I’ll get this done in about three hours’ work” or “I’ll get this done before dinner”), this allows many, parallel, system one processes to make a coherent set of choices about these (quality vs time) tradeoffs, rather than e.g. squandering several minutes on one arbitrary sentence only to hurry through other, more important sentences. A “believing in” is here a decision to invest resources in keeping with a coherently visualized scenario.

Should my “believed in” time match my predicted time? Not necessarily. I admit I’m cheating here, because I’ve read research on planning fallacy and so am doing retrodiction rather than prediction. But it seems to me not-crazy to “believe in” a modal scenario, in which e.g. the printer doesn’t break and I receive no urgent phone calls while working and I don’t notice any major new confusions in the course of writing out my thoughts. This modal scenario is not a good time estimate (in a “predictions” sense), since there are many ways my writing process can be slower than the modal scenario, and almost no ways it can be faster. But, of the different “easy to hold in your head and heart” visualizations you have available, it may be the one that gives the most useful guidance to your low-level processes – you may want to tell your low-level processes “please contribute to the letter as though the 3-hours-in-a-modal-run letter-writing process were underway.” (That is: “as though in a 3-hours-in-a-modal-run letter-writing process” may offer more useful guidance to my low-level processes than does “as though in a 10-hours-in-expectation letter-writing process,” because the former may give a more useful distribution as to how long I should expect to spend looking for any given word/etc.)
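The gap between “3 hours in a modal run” and “10 hours in expectation” is what a right-skewed duration distribution produces. The sketch below assumes task duration is lognormal (the post names no distribution; lognormal is just one common right-skewed choice matching “many ways to be slower, almost no ways to be faster”), fits it to the two numbers from the example, and checks by simulation how often a run actually beats the modal time.

```python
import math
import random

# Assumption: task duration is lognormal. The post names no distribution;
# lognormal is simply a common right-skewed choice.

def lognormal_params_from_mode_and_mean(mode: float, mean: float) -> tuple[float, float]:
    """Solve mode = exp(mu - s^2) and mean = exp(mu + s^2/2) for (mu, s).

    Subtracting the logs of the two equations gives ln(mean/mode) = 1.5 * s^2.
    """
    s2 = math.log(mean / mode) / 1.5
    mu = math.log(mode) + s2
    return mu, math.sqrt(s2)


mu, sigma = lognormal_params_from_mode_and_mean(mode=3.0, mean=10.0)
print(f"mode   = {math.exp(mu - sigma**2):.2f} h")
print(f"median = {math.exp(mu):.2f} h")
print(f"mean   = {math.exp(mu + sigma**2 / 2):.2f} h")

# Monte Carlo check: how many simulated runs take longer than the modal 3 hours?
rng = random.Random(0)
runs = [rng.lognormvariate(mu, sigma) for _ in range(100_000)]
share_over_mode = sum(t > 3.0 for t in runs) / len(runs)
print(f"share of runs longer than 3 h: {share_over_mode:.0%}")
```

Under this assumed shape, the median lands in between (about 6.7 hours), and roughly four runs in five exceed the modal 3 hours. That is the sense in which the modal scenario is a poor prediction while still being a coherent picture to steer by.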

“Believing in X” is not predicting “X will work and be good.”

In the above example about “believed in” task completion times, it was helpful to me to believe in completing a difficult email in three hours, even though the effort governed by this “believing in” (predictably) resulted in email completion times longer than three hours.

I’d like to belabor this point, and to generalize it a bit.

Life is full of things I am trying to conjure that I do not initially know how to imagine accurately – blog posts I’m trying to write but whose ideas I have not fully worked out; friendships I would like to cultivate, but where I don’t know yet what a flourishing friendship between me and the other party would look like; collaborations I would like to try; and so on.

In these and similar cases, the policy choice before me cannot be “shall I write a blog post with these exact words?” (or “with this exact time course of noticing confusions and rewriting them in these specific new ways?” or whatever). It is instead more like “shall I invest resources in [some combination of attempting to create and updating from my attempts to create, near initial visualization X]?”

The X that I might try “believing in,” here, should be a useful stepping-stone, a useful platform for receiving temporary investment, a pointer that can get me to the next pointer.

“Believing in X” is thus not, in general, “predicting that project X will work and be good.” It is more like “predicting that [investing-for-a-while as though building project X] will have good results.” (Though, also, a believing-in is a choice and not simply a prediction.)

Compatibility with prediction

Once you have a clear concept of “believing in” (and of why and how it is useful, and is distinct from prediction), is it possible to do good “believing in” and prediction at once, without cross-contamination?

I think so, but I could use info from more people and contexts. Here’s a twitter thread where I, Adam Scholl, and Ben Weinstein-Raun all comment on learning something like this separation of predictions and “believing in”s, though each with different conceptualizations.

In my case, I learned the skill of separating my predictions from my “believing in”s (or, as I called it at the time, my “targets”) partly the hard way – by about 20 hours of practicing difficult games in which I could try to keep acting from the target of winning despite knowing winning was fairly unlikely, until my “ability to take X as a target, and fully try toward X” decoupled from my prediction that I would hit that target.

I think part of why it was initially hard for me to try fully on “believing in”s I didn’t expect to achieve[5], is that I was experiencing “failure to achieve the targeted time” as a blow to my ego (i.e., as an event I flinched from, lest it cause me shame and internal disarray), in a way I wouldn’t have if I’d understood better how “believing in X” is more like “predicting it’ll be useful to invest effort premised on X” and less like “predicting X is true.”

In any case, I’d love to hear your experiences trying to combine these (either with this vocab, or with whatever other conceptualizations you’ve been using).

I am particularly curious how well this works with teams.

I don’t see an in-principle obstacle to it working.

Distinguishing “believing in” from deception / self-deception

I used to have “believing in” confused with deception/self-deception. I think this is common. I’d like to spend this section distinguishing the two.

If a CEO is running a small business that is soliciting investment, or that is trying to get staff to pour effort into it in return for a share in its future performance, there’s an incentive for the CEO to deceive others about that business’s prospects. Others will be more likely to invest if they have falsely positive predictions about the business’s future, which will harm them (in expectation) and help the CEO (in expectation).[6]

I believe it is positive sum to discourage this sort of deception.

At the same time, there is also an incentive for the CEO to lead via locating, and investing effort into, a “believing in” worthy of others’ efforts – by holding a particular set of premises for how the business can work, what initiatives may help it, etc, and holding this clearly enough that staff can join the CEO in investing effort there.

I believe it is positive sum to allow/encourage this sort of “believing in” (in cases where the visualized premise is in fact worth investing in).

There is in principle a crisp conceptual distinction between these two: we can ask whether the CEO’s statements would still have their intended effect if others could see the algorithm that generated those statements. Deception should not persuade if listeners can see the algorithm that generated it. “Believing in”s still should.

My guess is that for lack of good concepts for distinguishing “believing in” from deception, LessWrongers, EAs, and “nerds” in general are often both too harsh on folks doing positive-sum “believing in,” and too lax on folks doing deception. (The “too lax” happens because many can tell there’s a “believing in”-shaped gap in their notions of e.g. “don’t say better things about your start-up than a reasonable outside observer would,” but they can’t tell its exact shape, so they loosen their “don’t deceive” in general.)

Getting Bayes-habits out of the way of “believing in”s

I’d like to close with some further evidence (as I see it) that “believing in” is a real thing worth building separate concepts for. This further evidence is a set of good things that I believe some LessWrongers (and some nerds more generally) miss out on, that we don’t have to miss out on, due to mistakenly importing patterns for “predict things accurately” into domains that are not prediction.

Basically: there’s a posture of passive suffering / [not seeking to change the conclusion] that is an asset when making predictions about outside events. “Let the winds of evidence blow you about as though you are a leaf, with no direction of your own…. Surrender to the truth as quickly as you can. Do this the instant you realize what you are resisting….”

However, if one misgeneralizes this posture into other domains, one gets cowardice/passivity of an unfortunate sort. When I first met my friend Kate at high school math camp, she told me how sometimes, when nobody was around, she would scoot around the room like a train making “choo choo” noises, or do other funny games to cheer herself up or otherwise mess with her state. I was both appalled and inspired – appalled because I had thought I should passively observe my mood so as to be ~truth-seeking; inspired because, once I saw how she was doing it, I had a sense she was doing something right.

If you want to build a friendship, or to set a newcomer to a group at ease, it helps to think about what patterns might be good, and to choose a best-guess pattern to believe in (e.g., the pattern “this newcomer is welcome; I believe in this group giving them a chance; I vouch for their attendance tonight, in a manner they and others can see”). I believe I have seen some LessWrongers stand by passively to see if friendships or [enjoying the walk they are on] or [having a conversation become a good conversation] would just happen to them, because they did not want to pretend something was so that was not so. I believe this passivity leads to missing out on much potential good – we can often use reasoning to locate a pattern worth trying, and then “believe in” this pattern to give low-level heuristics a vision to try to live up to, in a way that has higher yields than simply leaving our low-level heuristics to their own devices, and that does not have to be contra epistemic rationality.

- ^

Caveat: I’m not sure if this is “could be part of a well-designed organism” the way e.g. Bayes or Newton’s laws could (where this would be useful sometimes even for Jupiter-brains), or only the way e.g. “leave three seconds’ pause before the car in front of me” could (where it’s pretty local and geared to my limitations). I have a weak guess that it’s the first; but I’m not sure.

- ^

I’m least sure of this example; it’s possible that e.g. “I believe in atoms” is not well understood as part of my template.

- ^

In the case of predictions, probability is an improvement over classical logic. I don’t know if there’s an analog for believing-ins.

- ^

Specifically, I like to imagine that:

* People (and subagents within the psyche) “believe in” projects (publicly commit some of their resources to projects).

* When a given project has enough “believing in,” it is enacted in the world. (E.g., when there’s a critical mass of “believing in” making this party a dance party, someone turns on music, folks head to the dance stage, etc.)

* When others observe someone to have “believed in” a thing in a way that yielded results they like, they give that person reputation. (Or, if it yielded results they did not like, or simply wasted their energies on a “believing in” that went nowhere, they dock them reputation.)

* People’s future “believing in” has more weight (in determining which projects have above-threshold believing-in, such that they are acted on) when they have more reputation. For example, Martin Luther King gained massive reputation by “believing in” civil rights, since he did this at a time when “believe in civil rights” was trading at a low price, since his “believing in” had a huge causal effect in bringing about more equality, and since many were afterwards very glad he had done that.

Or, again, if Fred previously successfully led a party to transform in a way people thought was cool, others will be more likely to go along with Fred’s next attempt to rejigger a party.

I am not saying this is literally how the dynamics operate – but I suspect the above captures some of it, “toy model” style.

Some readers may feel this toy model is made of unprincipled duct tape, and may pine for e.g. Bayesianism plus policy markets. They may be right about “unprincipled”; I’m not sure. But it has a virtue of computational tractability—you cannot do policy markets over e.g. which novel to write, out of the combinatorially vast space of possible novels. In this toy model, “believing in X” is a choice to invest one’s reputation in the claim that X has enough chance of acquiring a critical mass of buy-in, and enough chance of being good thereafter, that it is worth others’ trouble to consider it—the “‘believing in’ market” allocates group computational resources, as well as group actions.
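For readers who think better in code, here is one hypothetical way to write the toy model above down. It is a sketch, not how the dynamics literally operate: the threshold, the gain/loss/fizzle factors, and the multiplicative reputation update are all assumptions made for illustration, and the names Ann and Bo are invented (Fred is from the example above).

```python
from dataclasses import dataclass, field

# Hypothetical toy model of the "believing in" market sketched above.
# The threshold, the gain/loss/fizzle factors, and the multiplicative
# reputation update are illustrative assumptions, not claims from the post.


@dataclass
class Agent:
    name: str
    reputation: float = 1.0


@dataclass
class Project:
    name: str
    supporters: list = field(default_factory=list)  # agents "believing in" it

    def weighted_support(self) -> float:
        # More reputation means one's "believing in" carries more weight.
        return sum(a.reputation for a in self.supporters)


def run_round(projects, threshold, outcome_good, gain=1.5, loss=0.7, fizzle=0.9):
    """Enact every project whose weighted support reaches threshold, then settle.

    outcome_good maps an enacted project's name to whether people liked the result.
    Supporters of liked projects gain reputation, supporters of disliked ones lose
    it, and supporters of projects that never reached critical mass lose a little.
    """
    # Measure all support before any reputation changes, so order doesn't matter.
    supports = {p.name: p.weighted_support() for p in projects}
    enacted = [p.name for p in projects if supports[p.name] >= threshold]
    for p in projects:
        if p.name in enacted:
            factor = gain if outcome_good[p.name] else loss
        else:
            factor = fizzle
        for a in p.supporters:
            a.reputation *= factor
    return enacted


# Round 1: Fred and Ann reach critical mass for a dance party; Bo's karaoke doesn't.
fred, ann, bo = Agent("Fred"), Agent("Ann"), Agent("Bo")
first = run_round(
    [Project("dance party", [fred, ann]), Project("karaoke", [bo])],
    threshold=2.0,
    outcome_good={"dance party": True},
)
print(first)  # ['dance party']

# Round 2: Fred's earned reputation lets a two-person push clear the same bar.
second = run_round(
    [Project("rejigger the party", [fred, bo])],
    threshold=2.0,
    outcome_good={"rejigger the party": True},
)
print(second, round(fred.reputation, 2), round(bo.reputation, 2))
```

In round 2, Fred (1.5) and Bo (0.9) total 2.4 and clear the bar; had Fred still been at his starting reputation of 1.0, the same pair would have totaled 1.9 and fallen short. Note that no one here enumerates the space of possible parties: agents only weigh in on the few projects someone proposes, which is the tractability point the toy model leans on.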

- ^

This is a type error, as explained in the previous section, but I didn’t realize this at the time, as I was calling them “targets.”

- ^

This deception causes other harms, too—it decreases grounds for trust between various other sets of people, which reduces opportunity for collaboration. And the CEO may find self-deception their easiest route to deceiving others, and may then end up themselves harmed by their own false beliefs, by the damage self-deception does to their enduring epistemic habits, etc.


I really hope this post of mine makes it in. I think about “believing in” most days, and often reference it in conversation, and often hear references to it in conversation. I still agree with basically everything I wrote here. (I suspect there are clearer ways to conceptualize it, but I don’t yet have those ways.)

The main point, from my perspective: we humans have to locate good hypotheses, and we have to muster our energy around cool creative projects if we want to do neat stuff, and both of these situations require picking out ideas worthy of our attention from within a combinatorially large space (letting recombinable pieces somehow “bubble up” when they’re worth our attention). Somehow, this works better if we care what happens, hope for things, allow ourselves to have visions, etc, vs being “objective” in the sense that we can take everything as objects without the quality of our [attention/caring/etc] being affected by what we’re looking at. This somehow calls for a different mental stance from the stance most of us inhabit when attempting [expected value estimates, Bayesian updates, working to “remember that you are not a hypothesis; you are the judge”]: a stance that is more active / less passive / less “objective”, and involves more personally-rooting-for, or more “here I stand.” If we want to be good rationality geeks who can see the whole of how the being human thing works, we’ll need models of how the “organizing our energies around a vision, and locating/updating a vision worth organizing our energies around” thing can work. I think my “believing in” model (roughly: “believing-ins are like kickstarters”) is helpful for modeling this.

Tsvi and I talked about some things I think are related in a recent comment thread.

Now that I’m writing this self-review, I’m strongly reminded of the discussions of the usefulness of caring for locating good hypotheses in Zen and the Art of Motorcycle Maintenance (if you have an e-copy and want a relevant passage, try find-word on “value rigidity”), but I missed this connection while writing the OP despite it being among my favorite books.

When this post first came out, I felt that it was quite dangerous. I explained to a friend: I expected this model would take hold in my sphere, and somehow disrupt, on this issue, the sensemaking I relied on, the one where each person thought for themselves and shared what they saw.

This is a sort of funny complaint to have. It sounds a little like “I’m worried that this is a useful idea, and then everyone will use it, and they won’t be sharing lots of disorganised observations any more”. I suppose the simple way to express the bite of the worry is that I worried this concept was more memetically fit than it was useful.

Almost two years later, I find I use this concept frequently. I don’t find it upsetting; I find it helpful, especially for talking to my friends. Wuckles.

I see things in the world that look like believing in. For example, a friend of mine, who I respect a fair amount and has the energy and vision to pull off large projects, likes to share this photo:

Interestingly, I think that those who work with him generally know it won’t be easy. But it’s more achievable than his comrades think, because he has delusion on his side. He has a lot of non-epistemic believing in.

Another example: I think when interacting with people, it’s often appropriate to extend a certain amount of believing in to their self-models. Say my friend says he’s going to take up exercise. If I thought that were true, perhaps I’d get him a small exercise-related gift, like a water bottle. Or maybe I’d invite him on a run with me. If I thought it were false, a simple calculation might suggest not doing these things: it’s a small cost, and there’ll be no benefit. But I think it’s cool to invite him on the run or maybe buy the water bottle. I think this is a form of believing in, and I think it makes my actions look similar to those I’d take if I simply believed him. But I don’t have to epistemically believe him to have my believing in lead to the action.

So, I do find this a helpful handle now. I do feel a little sad, like: yeah, there's a subtle landscape that encompasses beliefs and plans and motivation, and now when I look at it I see it criss-crossed by the balks of this frame. And I'm not sure it's the best frame I could find, had I the time. For example, I'm interested in thinking more about lines of advance. Nonetheless, it helps me now, and that's worth a lot. +4

re: “the bite of the worry is that I worried this concept was more memetically fit than it was useful.”

Hmm. There are two choices that IMO made it memetically fit; I’m curious whether those choices of mine were bad manners. The two choices:

1) I linked my concept to a common English phrase (“believing in”), which made it more referenceable.

2) The specific phrase "believing in" that I picked naturally gets into a bit of a fight with "belief", and "belief" is one of LW's most foundational concepts; this also made it more referenceable, and more natural (for me at least) to geek out about. (Whereas if I'd given roughly the same model but called it "targets" or "aims", my post would've been less naturally in a fight with "beliefs", and so less salient/referenceable, and less natural to compare and contrast with the many claims/questions/etc. I have stored up around "beliefs".)

I think a crux for me about whether this was bad manners (or, alternately phrased, whether discussions will go better or worse if more posts follow similar "manners") is whether the model I share in the post basically predicts the ordinary English meaning of "believing in". (In my book, ordinary English words and phrases that've survived many generations often map onto robustly useful concepts, at least compared to just-made-up jargon words; and so it's often good to let normal English words/concepts have a lot of effect on how we parse things; they've come by their memetic fitness honestly.) A related crux for me is whether the LW technical term "belief" was/is overshadowing many LWers' ability to understand some of the useful things that normal people are up to with the word "belief".

Nominating this for the 2024 Review. +9. This post has influenced me possibly the most of any LessWrong post from 2024, and I think about it many times per month. It basically seems like there was a whole part of human psychology that I was not modeling before this, when people talked about what they believed in, except as people failing to have beliefs as maps of the territory (as opposed to things-to-invest-in). It helped me notice that there were things in the world that I believed in, in this sense, but had not been allowing myself to notice, and it has been a major boost to my motivation to do things that I care about and find meaningful.

Feels like this points at correct things, and I'm amenable to it being one of the top posts for 2024. It didn't change much for me (as opposed to @Ben Pace, who thinks about it many times per month according to his review), nor did it feel so spot on that I'd want to give a high vote. I'll probably give something between 1 and 4.

Areas where it strikes me (admittedly with not that much thought or careful reading) as not perfectly right:

Notwithstanding the heading contra this, my instinct is to reduce "believing in" statements to a combination of "I believe (Bayesian-style) that good things happen if I invest in X" + "I am publicly declaring myself for X (kickstarter / commitment mechanism)". Which is a little bit interesting, but also a known phenomenon. Added to that, you get boring old motivated cognition telling yourself "I'll get this done in three hours". This might be an effective, semi-self-aware self-deception that gets you to do things you wouldn't otherwise do, but it is also manipulation of the Bayesian belief slots in your head in order to get some result.

So believing-in's are Bayesian beliefs with some indirection + an expression of commitment and/or group affiliation. If so, that is useful to point out.

An extension here that’d be neat is to analyze how often expressed “values” are believing-in’s, e.g. “I believe in family”, “I believe in democracy”. If those are actually just Bayesian beliefs + commitment, then they’re a lot more defeasible than the intrinsic inherent base “values” LessWrong normally talks about.