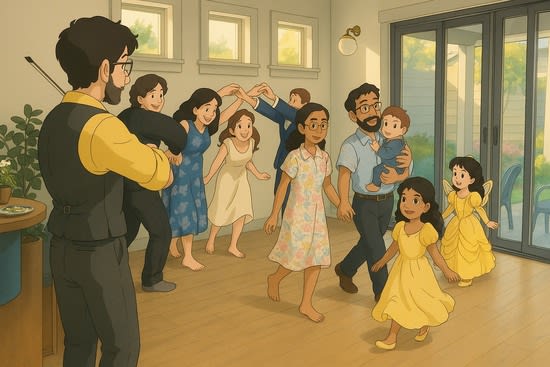

I often want to include an image in my posts to give a sense of a situation. A photo communicates the most, but sometimes that’s too much: some participants would rather remain anonymous. A friend suggested running pictures through an AI model to convert them into a Studio Ghibli-style cartoon, as was briefly a fad a few months ago:

House Party Dances | Letting Kids Be Outside

The model is making quite large changes, aside from just converting to a cartoon, including:

- Moving people around
- Changing posture
- Substituting clothing
- Combining multiple people into one
- Changing races
- Giving people extra hands

For my purposes, however, this is helpful, since I’m trying to illustrate the general feeling of the situation and an overly faithful cartoon could communicate identity too well.

I know that many of my friends are strongly opposed to AI-generated art, primarily for its effect on human artists. While I have mixed thoughts that I may try to write up at some point, I think this sort of usage isn’t much of a grey area: I would previously have just left off the image. There isn’t really a situation where I would have commissioned art for one of these posts.

You don’t know whether I can find photos of the people who wanted to remain anonymous, given those pictures and the techniques available in a year.

It’s a possibility, but this seems to remove a ton of information to me. The Ghibli faces all look quite similar to me. I’d be very surprised if they could be de-anonymized in cases like these (people who aren’t famous) in the next 3 years, if ever.

If you’re particularly paranoid, I presume we could have a system do a few passes.

That would be ordinarily paranoid.

Even quantum cryptography couldn’t restore cleartext that had half of it redacted and replaced with “----” or something.

You can use whichever information you got to update your priors about what message was sent.

Yeah, but then you really lose the capacity to deanonymize effectively. On priors, I can guess you’re likely to be American or Western European, and that you probably like staying up late if you’re the former or live in a Western time zone. I could read a lot more of your comments and probably deduce a lot, but going off your two comments alone doesn’t make it any more likely that I could find where you live, for instance.

Identifying the locations of the pictures seems quite plausible to me, but the model has done such a “bad” job with the people that I really doubt the information is still there. Though you’re right that I don’t know for sure.

E.g., gender tends to make it into the picture, which is one bit. Are there 33 bits (enough to single out one person among roughly 8 billion)? We don’t know the model’s idiosyncrasies, but I wouldn’t be surprised to learn of correlations like “scars on input faces translate into stoic expressions”. Separately, I can get a bunch of bits by assuming that the person has appeared in an earlier photo that includes one of the people in the picture or that was taken in a nearby location.
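For context on the “33 bits” figure: picking out one person among N people takes about log2(N) bits, and log2(8×10⁹) ≈ 32.9. Below is a minimal sketch of that arithmetic. It assumes a world population of about 8 billion (a figure not stated in the thread) and treats each recovered attribute, such as gender at roughly one bit, as independent. Real attributes usually aren’t independent, so this overstates how much each clue helps.

```python
import math

# Assumption: world population of roughly 8 billion (not stated in the thread).
WORLD_POPULATION = 8_000_000_000


def bits_to_identify(population: int) -> float:
    """Bits of information needed to single out one person among `population`."""
    return math.log2(population)


def remaining_bits(population: int, known_bits: float) -> float:
    """Bits still missing after learning `known_bits`, assuming independent clues."""
    return max(0.0, bits_to_identify(population) - known_bits)


total = bits_to_identify(WORLD_POPULATION)
print(f"{total:.1f} bits needed")  # about 32.9, i.e. 33 when rounded up
# Gender contributes roughly one bit, as the comment above notes.
print(f"{remaining_bits(WORLD_POPULATION, 1.0):.1f} bits still missing")
```

Each extra bit halves the candidate pool. That is why the comment also looks for side channels such as co-occurring people or a known location: those can supply many bits that the cartoonized faces no longer carry.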

I’m not sure gender made it through for background people in the puddle image. In the other direction, though, there are lots of confounders. Some of the “people” are actually multiple people merged together. Race is partially randomized. Some faces are fully invented, since they’re not visible in the original pictures.

(To make sure we’re on the same page, I’m not claiming the party image anonymized me.)

Why not generate them with a more generic art style?

Any art style becomes generic if repeated enough. Having now seen a bunch of Ghiblified images, I find them as emptily cloying as a Thomas Kinkade. When you can just push the button to get another, and another, like Kinkade did without a computer, it renders the thing meaningless. What are such images for, when all the specificity of the scene they are supposed to be illustrating has been scrubbed off?

Have some Bouguereau! Long dead and safely out of copyright.

“A traditional American barn dance, in the style of William-Adolphe Bouguereau.”

Works for dating classifieds, too

I actually feel like this is a particularly bad use of the tool, because it is random enough in the number and scope of its errors that I can’t be confident in my mental picture of this person at all. And on top of that, this specific one renders people in pretty predictable fashion styles, so I don’t really know what she looks like in any sense.

I can’t correct for the errors in the way I could for human-created art. If this were drawn by an actual Ghibli artist, I’d feel pretty confident that it was broadly like her, and then I might be able to extrapolate her facial features by comparing this character to actual Ghibli characters. The AI isn’t performing the same transformation of real-person features into Ghibli-character features that a human artist would, so I can’t expect it to map features to drawn features in the same way. It might just pick a “cute” face that looks nothing like her.

I agree it’s not much of a grey area: why wouldn’t you just leave out the image? I don’t see a case made for what value they bring to posts (but I also don’t know what kind of posts you’re referring to).

If I were one of your friends, I would (edit: be one of those who) ask you not to post a picture of me online. One of my major motivations would be to avoid it becoming training data. If you instead fed a picture of me directly to an LLM explicitly designed to offensively (to him) imitate one of the great artists of our time, I would probably reconsider our friendship altogether.

EDIT: This is clearly an unwelcome comment but nobody is saying why, so I’m asking. I think the tone is more hostile than necessary. I think the substance is pretty light. But to me, both are also clearly in the ballpark of the original post. I can restate it? But again, it feels to me like the original post is being shown undue reverence when I actually think it’s not a very high quality post.

I read this post as, “Here is a neat toy for hackers that I found,” which is fine, but not a discussion of rationality. It’s not what I come here for, but I’m the newcomer, so I could be wrong about the appropriateness of this post. But also the toy is very controversial, even to the author’s friends, and the author is pretty dismissive of that. And the toy is being used, seemingly without consent, on friends who have privacy concerns in a way that seemingly still violates their privacy (it is not said whether or not the subjects agreed to be ghiblified). So I felt these were worth criticizing. Did I do that wrong, or are these types of criticisms unwelcome here?

EDIT 2: “unwelcome” is certainly the wrong word as this has now been upvoted, it’s merely a disagreeable comment. Would like to know the ways in which people disagree but oh well.

The posts in question (linked from this post) are:

https://www.jefftk.com/p/house-party-dances

https://www.jefftk.com/p/letting-kids-be-outside

I see, I didn’t notice those were links to the posts.

I don’t think that changes anything, though. I also think the style choice doesn’t contribute to the vibe of the post, but arguably that’s only a personal preference.

In the case of the house party, I asked the party hosts if I could post a photograph, and one of them suggested this method of anonymization. In the puddle picture I didn’t ask, but the kids are so generic that I really don’t see how it would violate their privacy.

Alright, that is fine, but it also doesn’t really respond to the points I was making. I wasn’t concerned with whether there was consent from people in a particular instance, but with how consent wasn’t described as part of your process.

Also, as I mentioned, for the kids: just uploading the photos to the ghibli LLM site seems like a likely privacy violation.

Do you think it’s wrong to take pictures in public places and put them online, even if there are people in the background who didn’t consent? I think of this as a very normal thing to do, though it does make them available for LLM-training-scraping among other things.

My answer to that question would not quite be a categorical yes or no. For example, there’s a difference between a manually taken selfie and a complete raw security camera feed.

But I do agree this is straying from the original topic a bit. Since the top-post use case is explicitly one where you’ve already decided you’re not comfortable posting the original photo publicly, I feel like the general acceptability of posting photos is mostly irrelevant here? I think a more on-point justification would be talking about why it’s more acceptable for an AI to see the original photo than for your general audience to see that same photo.

(To be clear I don’t personally have a major problem with this practice, at least as you’ve applied it so far, although I also don’t think it’s really added or subtracted much from my enjoyment or understanding of your posts so far. Mostly I just don’t find this particular justification to be convincing.)

Personally, yeah I do think it’s mildly wrong. Normal and ethical aren’t always correlated.

Again though, isn’t this getting a little off topic? It seems to be staying that way so it’s fine with me if we just let this conversation die off. Almost none of my questions or points are really being addressed (by you or the many others who disagree with me), so continuing doesn’t seem worthwhile. I’ve been hanging onto the thread hoping to get some answers, but from my perspective it’s just continued misdirection. I don’t think that’s your intent, and I may have put you on the defensive, but overall this is a negative-value conversation for me as I’m leaving more confused.

I admit, I’ve been drive-by downvoting posts with ghiblified illustrations, which isn’t how downvotes are supposed to be used. Something about them is so incredibly upsetting to me.

I don’t know why everyone is making this so complicated when there’s a clear disqualifying factor for me: Miyazaki himself has said that they did not consent to be trained on, would not have consented to being trained on, and do not want anyone making Ghibli art, and all of this was known before Sam Altman started pushing Ghiblification. There are other factors too, but this one by itself is already sufficient for me.

EDIT: I see a lot of upvotes and disagreement on this comment, which I think I agree with. I should have clarified: this is personally disqualifying to me, because I personally care a little about respecting Miyazaki’s wishes. Even though he’s a grumpy old man I disagree with on a lot of things, he’s also someone I care about in a small way, so I try to be respectful of what I understand he’s tried to teach me about the world, if that makes sense? I was definitely not advocating for this to become government policy or something, though I do separately agree with that recent memo from the Copyright Office.

One obvious reason to get upset is how low the standards of people posting them are. Let’s take jefftk’s post. It takes less than 5 seconds to spot how lazy, sloppy, and bad the hands and arms are, and how the picture is incoherent and uninformative. (Look at the fiddler’s arms, or the woman going under 2 arms that make zero sense, or the weird doors, or the table which seems to be somehow floating, or the dubious overall composition (where are the yellow fairy and non-fairy going, exactly?), or the fact that the image is the stereotypical cat-urine yellow of all 4o images.) Why should you not feel disrespected and insulted that he was so careless and lazy as to put in such a lousy, generic image?

I was in this case assuming it was a ghiblified version of a photo, illustrating the very core point of this post. Via this mechanism it communicated a lot! Like how many people were in the room, how old they were, a lot about their emotional affect, how big the room was, and lots of other small details.

First, I didn’t say it wasn’t communicating anything. But since you bring it up: it communicated exactly what jefftk said in the post already describing the scene. And what it did communicate that he didn’t say cannot be trusted at all. As jefftk notes, 4o in doing style transfer makes many large, heavily biased changes to the scene, going beyond even mere artifacts like fingers. If you don’t believe that people in that room had 3 arms or that the room looked totally different (I will safely assume that the room was not, in fact, lit up in tastefully cat-urine yellow in the 4o house style), why believe anything else it conveys? If it doesn’t matter what those small details were, then why ‘communicate’ a fake version of them all? And if it does matter what those small details were, surely it’s bad to communicate a fake, wrong version? (It is odd to take this blasé attitude of ‘it is important to communicate, and what is communicated is of no importance’.)

Second, this doesn’t rebut my point at all. Whatever true or false things it does or does not communicate, the image is ugly and unaesthetic: the longer you look at it, the worse it gets, as the more bland, stereotypical, and strewn with errors and laziness you understand it to be. It is AI slop. (I would personally be ashamed to post an image even to IRC, never mind my posts, which embodies such low standards and disrespects my viewers that much, and says, “I value your time and attention so little that I will not lift a finger to do a decent job when I add a big attention-grabbing image that you will spend time looking at.”) Even 5 seconds to try to inpaint the most blatant artifacts, or to tell ChatGPT, “please try again, but without the yellow palette that you overuse in every image”*, would have made it better.

* incidentally, I’ve been asking people here if they notice how every ChatGPT 4o-generated image is by default yellow. Invariably, they have not. One or two of them have contacted me later to express the sentiment that ‘what has been seen cannot be unseen’. This is a major obstacle to image editing in 4o, because every time you inpaint, the image will mutate a decent bit, and will tend to turn a bit more yellow. (If you iterate to a fixed point, a 4o image turns into all yellow with sickly blobs, often faces, in the top left. It is certainly an odd generative model.)

Gwern, look, my drawing skills are pretty terrible. We’ve had Sequences posts up here for years with literal pictures of napkins on which Eliezer drew bad and ugly diagrams. Yes, not everything in the image can be trusted, but surely I have learned many real and relevant things about the atmosphere and vibe from the image that I would not from a literal description (and at the very least it is much faster for me to parse than a literal description).

I know the kinds of errors that image models make, and so I can adjust for them. They overall make many fewer errors than jefftk would make if he were to draw some stick figures himself, which would still be useful.

The image is clearly working at achieving its intended effect, and I think the handwringing about it being unaesthetic is overblown compared to all realistic alternatives. Yes, it would be cool if jeff prompted more times, but why bother, it’s getting the job done fine, and that’s what the whole post is about.

But what are they? You’ve received some true information, but it’s in a sealed box with a bunch of lies. And you know that, so it can’t give you any useful information. You might arbitrarily decide to correct in one direction, but end up correcting in the exact opposite direction from reality.

For example: we know the AI tends to yellow images. Therefore, seeing a yellowed AI-generated image, that tells us that the color of the original image was either not yellow or… yellow. Because it doesn’t de-yellow images that are already yellow. We have no idea what color it originally was.

If enough details are wrong, it might as well just be a picture of a different party, because you don’t know which ones they are.

As for using a different image: drawing by hand and using AI aren’t the only options. Besides AI, there are actual free images you can use. As far as I know, this could be a literal photo of the party in question, and it’s free: https://unsplash.com/photos/a-man-and-woman-dancing-in-a-room-with-tables-and-chairs-KpzGmDvzhS4

You could spend <1hr making an obviously shitty ’shop from free images with free image editing software. If you’ve ever shared a handmade crappy meme with friends, you know this can be a significantly entertaining and bonding act of creativity. The effort is roughly comparable to stick figures and the outcome looks better, or at least richer.

With all that said, and reiterating gwern’s point above, I can’t agree it achieved its intended effect. It is possible that jefftk put in a lot of effort to make sure the generated vibe is as accurate as could reasonably be, but the assumption is that someone generating an AI image isn’t spending very much effort, because that’s the point of using AI to generate images. There are better tools for someone making a craft of creating an image (regardless of their drawing skill). In order for that effort to be meaningful, (because unlike with artistic skill it doesn’t translate to improved image quality,) he’d have to just tell us, “I spent a lot of time making sure the vibe was right, even though the image is still full of extra limbs.” And this might actually be a different discussion, but I’d be immediately skeptical of that statement—am I really going to trust the artistic eye and the taste of someone who sat down for 2 hours to repeatedly generate a ghiblified AI image instead of using a tool that doesn’t have a quality cap? So ultimately I find it more distracting, confusing, and disrespectful to read a post with an AI image, which, if carelessly used (which I have to assume it is), cannot possibly give me useful information. At least a bad stick figure drawing could give me a small amount of information.

I didn’t. I picked out a photo that I was going to use to illustrate the piece, one host asked me not to use it because of privacy, another suggested Ghiblifying it and made one quickly on their phone. We looked at it and thought it gave the right impression despite the many errors.

The vibe of the generated image is far closer to the real party than the image you linked.

I didn’t think you did, and wasn’t trying to imply you did. I was only illustrating how it wouldn’t even matter if you had.

Ok...? That’s fine, I guess, but irrelevant—my point is that until you stated this, deep in the comments, I could not have known it.

I am surprised and disappointed that you responded in that way, since I’ve tried to be clear that I am not talking about whether or not the image you posted for that party is well representative of the party you attended. It makes no difference to anything I’m arguing whether it is or isn’t.

I am saying that no reader (who wasn’t at the event) can ever trust that any AI-generated image attached to a blog post is meaningfully depicting a real event.

I am not sure if you’re seeing from outside your own perspective. From your view, comparing it to the original, it’s good enough. But you’re not the audience of your blog, right? A reader has none of that information. They just have an AI slop image (and I’m not trying to use that to be rude, but it fits the bill for the widely accepted term), and so they either accept it credulously as they accept most AI slop to be “true to the vibe”, whether it is or isn’t (which should be an obviously bad habit); or they throw it away as they do with most AI slop, to prevent it from polluting their mental model of reality. In this model, all readers are worse off for it being there. Where would a third category fit in of readers (who don’t know you,) who see this particular AI image and trust it to be vibe-accurate even though they know most AI images are worthless? Why would they make that judgement?

EDIT: I have no idea why this comment is received so negatively either. I think everything in it is consistent with all my other comments, and I’m also trying to wrangle the conversation back on topic repeatedly. I think I’ve been much more consistent and clear about my arguments than people responding to me, so this is all very confusing. It’s definitely feeling like I’m being downvoted ideologically for having a negative opinion of AI image generation.

The fact that the author decided to include it in the blog post is telling enough that the image is representative of the real vibes. There isn’t just an “AI slop image”, but also the author’s intent to use it as a quick glance into the real vibes, in a faster and more accurate way than just words would have done.

Sorry, I wrote my own reply (saying roughly the same thing) without having seen this. I’ve upvoted and strong agree voted, but the agreement score was in the negative before I did that. If the disagree vote came from curvise, then I’m curious as to why.[1]

It seems to me that moonlight’s comment gets to a key point here: you’re not being asked to trust the AI; you’re being asked to trust the author’s judgment. The author’s judgment might be poor, and the image might be misleading! But that applies just as well to the author’s verbal descriptions. If you trust the author enough that you would take his verbal description of the vibe seriously, why doesn’t his endorsement of the image as vibe-accurate also carry some weight?

[1] No passive aggression intended here; I respect the use of a disagree vote instead of a karma downvote.

Yes, I did cast a disagree vote: I don’t agree that “The fact that the author decided to include it in the blog post is telling enough that the image is representative of the real vibes” is true when it comes to an AI-generated image. My reasoning for that position is elaborated in a different reply in this thread.

I think a crucial point here is that we’re not just getting an arbitrary AI-generated image; we’re getting an AI-generated image that the author of the blog post has chosen to include and is claiming to be a vibes-accurate reproduction of a real photo. If you think the author might be trying to trick you, then you should mistrust the image just as you would mistrust his verbal description. But I don’t think the image is meant to be proof of anything; it’s just another way for the author to communicate with a receptive reader. “The vibe was roughly like this [embedded image]” is an alternative to (or augmentation of) a detailed verbal description of the vibe, and you should trust it roughly as much as you would trust the verbal description.

I largely agree with your point here. I’m arguing more that in the case of a ghiblified image (even more so than a regular AI image), the signals a reader gets are this:

1. the author says “here is an image to demonstrate vibe”
2. the image is AI-generated with obvious errors

For many people, #2 largely negates #1, because #2 also implies these additional signals to them:

- the author made the least possible effort to show the vibe in an image, and
- the author has a poor eye for art and/or bad taste.

Therefore, the author probably doesn’t know how to even tell if an image captures the vibe or not.

Hell, I forgot about the easiest and most common (not by coincidence!) strategy: put emoji over all the faces and then post the actual photo.

EDIT: Who is disagreeing with this comment? You may find it not worthwhile, in which case downvote, but what about it is actually arguing for something incorrect?

If I did that, people in photos would often be recognizable. It retains completely accurate posture, body shape, skin color, clothing, and height. I’ve often recognized people in this kind of image.

(I haven’t voted on your comment, but I suspect this is why it’s disagree voted)

That does make sense WRT disagreement. I wasn’t intending to fully hide identities even from people who know the subjects, but if that’s also a goal, it wouldn’t do that.

+1 for “what has been seen cannot be unseen”, wow I’m seeing a lot of cat-urine yellow around now

The left arm is holding the fiddle and is not visible behind my body, while the right arm has the sleeve rolled up above the elbow and you can see a tiny piece of the back of my right hand poking out above my forearm. The angle of the bow is slightly wrong for the hand position, but only by a little since there is significant space between the back of the hand and the fingertips holding the bow.

(Of course, as I write in my post, it certainly gets a lot of other things wrong. Which is useful to me from a privacy perspective, though probably not the most efficient way to anonymize.)

Also, in general, I don’t like the practice of using people’s work without giving them any credit. Especially when used to make money. And even more so when it makes the people who made the original work much less likely to be able to make money.

Do you dislike open source software? For most of it, the only credit is the license or the project name. That’s quite similar to Ghibli, where a person drops the name of the art style.

In open source, backend libraries are less likely to get paid than frontend products, and building a product on top of a library can make the situation worse for the original author. It can be seen as predatory, but that’s the intent of open source collaboration, fwiw.

If the artist says they’re ok with a model being trained on their work, then it’s relatively fine with me. Most artists explicitly are not and were never asked; in fact, most licensed their work in a way that they should be paid for its use.

In art, the art is usually the product itself, and if it’s used for something, that use is usually agreed upon between the artist and the user, unless the artist has explicitly said they’re ok with it being used. For example, some YouTubers have said it’s ok to use their music in any video (although this isn’t the same as it being used to train a model).

The main point here being respecting the work and consent of the creator.

There is an image diffusion model named ‘Mitsua’ (easy to load up in Stable Diffusion) which is trained only on public domain and donated training data, which I use for a similar purpose at work.

I appreciate the ability to create quick “vibe sketches” of ideas I want to express in a post, in cases where I don’t want a more precise method like a Mermaid chart, a table, or a true drawing/diagram.

I’m on the lookout for more models like this, because I don’t like supporting any company that has historically been coy about its training data, which includes OpenAI and Anthropic.

Adobe seems to have gone through a little effort to do ethical sourcing of training data, so it’s better than OpenAI and Anthropic in that regard even when it also isn’t perfect.

For sure! Much like the AI safety scorecard, no one is out of the red, but it seems like some of the older publishing house type companies are trying to respect existing content licensing institutions. However, I’ve seen many creators and artists complain that it doesn’t matter; it’s already too overshadowed by the actions of OpenAI et al.

Here’s a recent one where the quality is pretty good: f-lite. They say, “The models were trained on Freepik’s internal dataset comprising approximately 80 million copyright-safe images.”