In a word, yes. Very unappealing.

Hastings

Cart-pole balancing seems like a good toy case.

Is it relevant whether you knew about the apples before the apple man told you about them? If you didn’t know, then the least exploitable response to a message that looks adversarial is to pretend you didn’t hear it, which would mean not eating the apples.

Also, Pascal's mugging is worth coordinating against: if everyone hands over the 5 dollars, the stranger rapidly accumulates wealth through dishonesty. If no one eats the apples, the stranger's tree of apples simply gets eaten less and less, which is less caustic.

One way I could write a computer program that, e.g., lands a rocket ship is to simulate many landings that could happen after possible control inputs, pick the simulated landing that has properties I like (such as not exploding and staying far from actuator limits), and then run a low-latency loop that locally makes reality track that simulation, counting on the simulation to reach a globally pleasing end.
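
A minimal sketch of that plan-then-track idea, under loudly stated assumptions: a toy 1D vertical landing (height and velocity only), "random shooting" over piecewise-constant thrust plans as the "simulate many landings" step, and a PD feedback loop as the low-latency tracking step. The constants, the cost weights, and the `efficiency` factor (a stand-in for reality not matching the simulation) are all illustrative choices, not a real flight controller.

```python
import random

# Toy 1D vertical landing; every constant here is an illustrative assumption.
DT = 0.1           # seconds per simulation step
G = 9.81           # gravity, m/s^2
MAX_THRUST = 20.0  # actuator limit on thrust acceleration, m/s^2
STEPS = 100        # 10-second planning horizon
SEGMENTS = 5       # candidate plans are piecewise constant over 5 segments


def step(h, v, u, efficiency=1.0):
    """Advance height h and velocity v one step under commanded thrust u."""
    u = min(max(u, 0.0), MAX_THRUST)  # the actuator saturates
    v += (efficiency * u - G) * DT
    h += v * DT
    return h, v


def rollout(h, v, plan, efficiency=1.0):
    """Open-loop simulation; returns every (h, v) state visited."""
    states = [(h, v)]
    for u in plan:
        h, v = step(h, v, u, efficiency)
        states.append((h, v))
    return states


def cost(plan, states):
    """Lower is better: end at rest on the ground, never go below it, keep margin."""
    h_end, v_end = states[-1]
    c = 10.0 * h_end ** 2 + 10.0 * v_end ** 2
    c += 1000.0 * sum(1 for h, _ in states if h < 0)  # "exploding"
    c += sum((u / MAX_THRUST) ** 4 for u in plan)     # penalty grows near saturation
    return c


def plan_landing(h0, v0, n_candidates=2000, seed=0):
    """Random shooting: simulate many candidate plans and keep the cheapest."""
    rng = random.Random(seed)
    best_plan, best_cost = None, float("inf")
    for _ in range(n_candidates):
        levels = [rng.uniform(0.0, MAX_THRUST) for _ in range(SEGMENTS)]
        plan = [levels[k * SEGMENTS // STEPS] for k in range(STEPS)]
        c = cost(plan, rollout(h0, v0, plan))
        if c < best_cost:
            best_plan, best_cost = plan, c
    return best_plan, best_cost


def track(h0, v0, plan, efficiency, kp=4.0, kd=4.0):
    """Make 'reality' follow the simulated trajectory with a PD correction.

    `efficiency` (hypothetical) models the real engine differing from the model.
    Returns the worst height deviation from the planned trajectory.
    """
    reference = rollout(h0, v0, plan)  # what the simulation promised
    h, v = h0, v0
    worst = 0.0
    for k, u_plan in enumerate(plan):
        h_ref, v_ref = reference[k]
        u = u_plan + kp * (h_ref - h) + kd * (v_ref - v)  # feedforward + feedback
        h, v = step(h, v, u, efficiency)
        worst = max(worst, abs(h - reference[k + 1][0]))
    return worst


if __name__ == "__main__":
    plan, c = plan_landing(100.0, -10.0)
    tracked = track(100.0, -10.0, plan, efficiency=0.9)
    open_loop = track(100.0, -10.0, plan, efficiency=0.9, kp=0.0, kd=0.0)
    print(f"plan cost {c:.1f}")
    print(f"worst height error: open loop {open_loop:.1f} m, tracked {tracked:.2f} m")
```

Running the same `track` with `kp=kd=0` gives the open-loop comparison: with a 10% thrust shortfall the open-loop rocket drifts far from the plan, while the feedback loop keeps it close, which is the "locally make reality track the simulation" part.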

Is this what you mean by loading something into your pseudo prediction?

No law of physics stops the first AI in a recursive self-improvement (RSI) cascade from having its values completely destroyed by RSI. I think this is the default outcome?

It's a shame language model decoding isn't deterministic, or I could make a snarky but unhelpful comment that the information content is provably identical, by some sort of pigeonhole argument.

Epistemic status: 11 pages into "The Lathe of Heaven" and dismayed by Orr.

Are alignment methods that rely on the core intelligence being pretrained on webtext sufficient to prevent ASI catastrophe?

What are the odds that, 40 years after the first AGI, the smartest intelligence is pretrained on webtext?

What are the odds that the best possible way to build an intelligent reasoning core is to pretrain on webtext?

What are the odds that we can stay in a local maximum for 40 years of everyone striving to create the smartest thing they can?

My mental model of the sequelae of AGI in ~10 years without an intentional global slowdown is that within my natural lifespan, there will be 4-40 transitions in the architecture of the current smartest intelligence, where the architecture undergoes changes in overall approach at least as large as the difference from evolution → human brain or human brain → RL'd language model. Alignment means building programs that are themselves benevolent, but are also both wise and mentally tough enough to only build benevolent and wise successors, even when put under crazy pressure to build carelessly. When I say crazy pressure, I mean "the entity trying to get you to build carelessly is dumber than you, but it gets to RL you into agreeing to help" levels of pressure. This is hard.

A successfully trained one-hidden-layer perceptron with 500 hidden units has, at an absolute minimum, 500! equally successful parameter settings, since relabeling the hidden units in any order leaves the function it computes unchanged.
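
The 500! comes from permutation symmetry: moving each hidden unit's incoming weights, bias, and outgoing weight together to a new slot gives a different parameter vector that computes the same function. A minimal check on a tiny randomly initialized network, assuming tanh activations, 4 hidden units instead of 500, and plain Python lists (the function names are hypothetical, for illustration only):

```python
import itertools
import math
import random


def mlp(x, w1, b1, w2, b2):
    """One-hidden-layer perceptron with tanh hidden units and a scalar output."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    # fsum is correctly rounded, so reordering the hidden units cannot change the result.
    return math.fsum([v * h for v, h in zip(w2, hidden)] + [b2])


def permute_hidden(perm, w1, b1, w2):
    """Reorder hidden units: incoming rows, biases, and outgoing weights move together."""
    return [w1[p] for p in perm], [b1[p] for p in perm], [w2[p] for p in perm]


def count_equivalent_settings(n_in=3, n_hidden=4, seed=0):
    """Count distinct parameter settings that compute the same function."""
    rng = random.Random(seed)
    w1 = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [rng.gauss(0, 1) for _ in range(n_hidden)]
    w2 = [rng.gauss(0, 1) for _ in range(n_hidden)]
    b2 = rng.gauss(0, 1)
    probes = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(10)]
    reference = [mlp(x, w1, b1, w2, b2) for x in probes]

    settings = set()
    for perm in itertools.permutations(range(n_hidden)):
        pw1, pb1, pw2 = permute_hidden(perm, w1, b1, w2)
        # Same outputs on every probe input...
        assert [mlp(x, pw1, pb1, pw2, b2) for x in probes] == reference
        # ...but a different point in parameter space.
        settings.add((tuple(map(tuple, pw1)), tuple(pb1), tuple(pw2)))
    return len(settings)


if __name__ == "__main__":
    print(count_equivalent_settings(), math.factorial(4))  # expect 24 and 24
    print(len(str(math.factorial(500))), "digits in 500!")
```

With tanh specifically, flipping the sign of a unit's incoming weights, bias, and outgoing weight is a further symmetry, which is one reason 500! is only a lower bound.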

Thanks for sharing this. I think I need to be more appreciative that my university experience may have been good because of perhaps exceptional efforts on the part of the university, and not by default. In particular, this can be true even though the best parts centered on the university getting out of the students' way.

I'm not entirely sure, actually. My main serious encounter with postmodernism was trying to engage with Toni Morrison's "Beloved" well enough to not fail a college class, which jumps out as a possible explanation. I'm willing to buy that it's not as central as I thought.

I wonder if we need someone to distill and ossify postmodernism into a form that rationalists can process if we are going to tackle the problems postmodernism is meant to solve. A blueprint would be the way that FDT plus the prisoner's dilemma ossifies Sartre's "Existentialism Is a Humanism": at some terrible cost to nuance and beauty, but the core is there.

My suspicion of what happened, at a really high level, is that one of the fundamental driving challenges of postmodernism is to actually understand rape, in the sense that rationalism is supposed to respect: being able to predict outcomes, making the map fit the territory, etc. EY is sufficiently naive about postmodernism that the depictions of rape and rape threats in Three Worlds Collide and HPMOR basically filtered anyone with a basic grasp of postmodernism out of the community. There's an analogous phenomenon where postmodernist writers depict quantum physics badly enough that it puts people with a basic grasp of physics off participating in postmodernism. It's epistemically nasty too: this comment is frankly low quality, but if I understood postmodernism well enough to be confident in it, I suspect I would have been sufficiently put off by the Draco-threatens-to-rape-Luna subplot in HPMOR to have never actually engaged with rationalism.

Yeah, if you are doing, e.g., a lab-heavy premed chemistry degree, my advice may not apply to an aspiring alignment researcher. This is absolutely me moving the goalposts, but it may also be true: on the other hand, if you are picking courses with purpose, in philosophy, physics, math, probability, or comp sci, there are decent odds IMHO that they are good uses of time in proportion to how much of your time they actually demand.

For undergrad students in particular, the current university system coddles. The upshot is that if someone is paying for your school and would not otherwise be paying tens of thousands of dollars a year to fund an AI safety researcher, successfully graduating is sufficiently easy that it's something you should probably do while you tackle the real problems, in the same vein as continuing to brush your teeth and file taxes. Plus you get access to university compute and maybe even advice from professors.

This is fascinating: I've been a fan of Joseph's YouTube channel for years, but I've never seen him comment on LessWrong. A while ago in that setting, we got into a back-and-forth about eigenvalues of anti-linear operators, which was fascinating at the object level, but also ended up requiring both Joseph and me to notice that we were wrong, which we did with little difficulty. What I'm trying to say is that Joseph is actually smart and open-minded on technical questions, but is also definitely not respecting community norms here. If we can avoid scaring him off while discouraging quite this level of vitriol, there is definitely potential for him to contribute.

The evidence I am about to ask for may exist! However, I am still comfortable asking for it, as without it the whole thing falls apart, and I think this class of argument really always needs to show this explicitly: can you show that literally anyone reads the "modern Centennial Edition of Etiquette released in 2022"?

Also, I agree that Israel made great use of drones to take out anti-air defenses. However, this use of drones in no way requires manufacturing millions of quadcopters.

A couple of notes: Israel and Russia are extremely comparable in military spending, and likewise Ukraine and Iran. Ukraine and Iran both went hard into drones to counter the disparity, and it's very noticeable that drones basically work against Russia and basically don't against Israel. Neither conflict, though, provides dramatically more evidence than the other about a war where both sides are well-resourced and nuclear. In particular, the lack of drone-based attrition of the Israeli air force is glaring.

The lesson I drew from Israel vs. Iran is that stealth just hard-counters drones in a peer conflict. The essential insight is that guided bombs aren't just similar to small suicide drones; they are drones, with all the advantages, as long as you can get a platform in place to drop them.

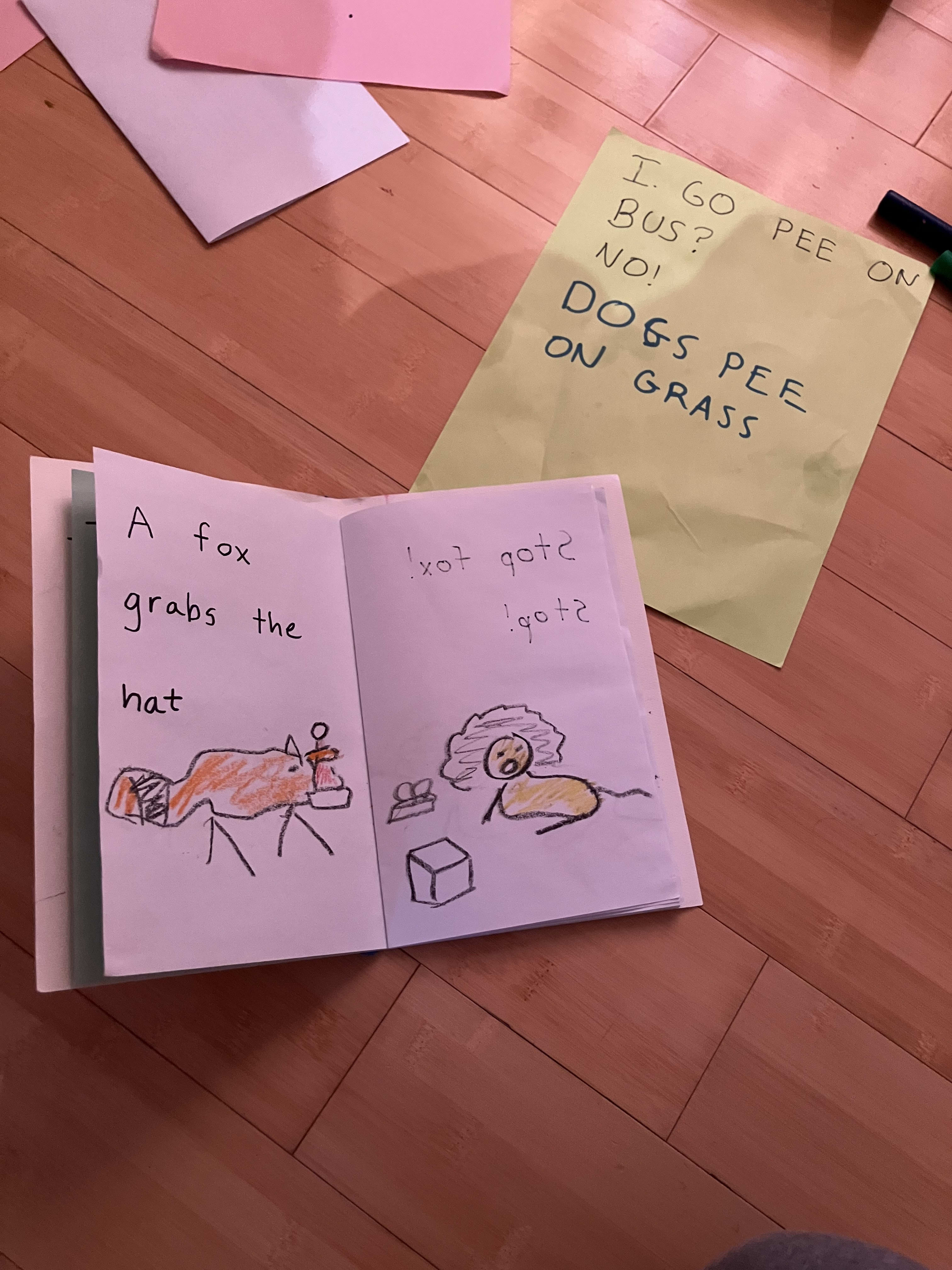

Second on Tux Paint

Tux Racer (penguin sledding) and SuperTux (a platformer) are games with level editors. My three-year-old loves SuperTux and its level editor, but it is a well-put-together enough game that it is starting to be addictive for him.

Whenever he sees me working I'm in a terminal, so he wanted to learn how to use one. I taught him how to type:

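```
sl            # a steam locomotive chugs across the screen
sl -a         # "accident" mode: passengers cry for help
sl; sl        # two trains, one after the other
sl | lolcat   # the train, rainbow-colored by lolcat
cowsay hi     # an ASCII cow says "hi"
```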

etc., and he found this very amusing. He will often demand that I "make a train" if I get the laptop out where he can see me.