Thou art rainbow: Consciousness as a Self-Referential Physical Process

You’re not a ghost in the machine; you’re a rainbow in the noisy drizzle of perception.

TL;DR: Consciousness is a self-referential physical process whose special character comes from recursion, not metaphysics. Like a rainbow, it’s real but not fundamental. The “hard problem” dissolves once we recognize that the inner glow is how self-modeling appears from the inside. Consciousness is real and special, but not ontologically separate.

Related: Zombie Sequence

Consciousness has interested me for quite some time, and for about a year I’ve been saying that it will be solved soon. ACX (The New AI Consciousness Paper) has been discussing Identifying indicators of consciousness in AI systems and its predictions for AI consciousness. But it ends with:

a famously intractable academic problem poised to suddenly develop real-world implications. Maybe we should be lowering our expectations

I disagree! I think we are quite close to solving consciousness. The baby steps of testable predictions in the above paper are the crack that will lead to a torrent of results soon. Let me outline some synthesis that allows for more predictions (and some results we already have on them).

Chalmers famously distinguished the “easy problems” of consciousness (information integration, attention, reportability) from the “hard problem” of explaining why there is “something it is like.”[1] Critics of reductive approaches insist that the datum of experience is ineliminable: even if consciousness were an illusion, it must be an illusion experienced by something.[2]

Illusionists, like Dennett[3] and Frankish[4], argue that this datum is itself a product of cognitive architecture: consciousness only seems irreducible because our introspective systems misrepresent their own operations. Yet this line is often accused of nihilism.

The synthesis I propose here accepts the insight of illusionism while rejecting nihilism: consciousness is a physical process whose self-referential form makes it appear as if there were a Cartesian inner glow. Like a rainbow, it is real but not fundamental; the illusion lies in how it presents itself, not in its existence.

To avoid ambiguity, I distinguish consciousness from related notions. By consciousness, I mean the phenomenological self-appearance generated by self-modeling. This is narrower than general awareness, broader than mere sentience[5] (capacity for pleasure and pain), and not identical with intelligence or reportability.[6]

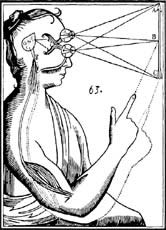

Descartes: Rainbow and Mind

Descartes investigated both consciousness and the rainbow. In his Meditations, he famously grounded certainty in the cogito, treating the mind as a self-evident inner datum beyond the scope of physical explanation.[7] Yet already in Les Météores, he gave one of the first physical-geometrical accounts of the rainbow: an optical law derived from light refracting through droplets, with no hidden essence.[8]

This juxtaposition is instructive. Descartes naturalized the rainbow while mystifying the mind. With modern understanding of how the brain learns, we can take the strategy he deployed in optics to its natural conclusion: conscious experience, like the rainbow, is real and law-governed but not ontologically primitive.

Self-Perception and Rarity

Most physical processes are non-reflexive. A pendulum swings; a chemical reaction propagates. Some processes are self-similar (coastlines), self-propagating (cells), or even self-referencing (gene sequences, Gödel sentences). By contrast, consciousness is recursive in the self-modeling and self-perceiving sense: the system models the world and also models itself as an observer within that world.

Metzinger calls this the phenomenal self-model, while Graziano characterizes it as an attention schema.[9] Consciousness As Recursive Reflections proposes that qualia arise from self-referential loops when thoughts notice themselves.

Such self-modeling and self-perceiving systems are rare in nature because of the computational and structural complexity they require.

When recursion is present, it produces special-seeming phenomena: Gödelian undecidability, compilers compiling themselves, and cellular replication already inspire awe. How much more so cognitive systems asserting their own existence? Thus, consciousness feels exceptional because it is a rare phenomenon, a process recursively locked on itself, and not because it is metaphysically distinct.

The Illusion Component

Illusionism correctly notes that consciousness misrepresents itself. Just as the brain constructs a visual field without access to its underlying neuronal computations, so it constructs a sense of “inner presence” without access to the mechanisms generating that representation. Dennett[3] calls this the rejection of the “Cartesian Theater.” Grokking Illusionism explains the illusion as a difference between map and territory.

Illusion here must not be confused with nonexistence. To call consciousness partly illusory is to say its mode of presentation misrepresents its own basis. The glow is not fundamental, but the process producing it is real. This avoids both eliminativist nihilism and dualist reification. Our perceptual systems routinely construct seemingly complete representations from incomplete data, as explored in The Pervasive Illusion of Seeing the Complete World.

In How An Algorithm Feels From Inside, Yudkowsky offers a complementary insight: introspection itself is algorithmic, and algorithms have characteristic failure modes.[10] If the brain implicitly models itself with a central categorization node, the subjective feeling of an unanswered question (the hanging central node) can persist even after all relevant information is integrated. From the inside, this feels like an inconsistency. The “hard problem” may thus be the phenomenological shadow cast by the brain’s cognitive architecture or its during-lifetime learning: a structural feature rather than a metaphysical gap.

Yet the illusion is partial: there is indeed a real process generating the misrepresentation. To deny this would be like denying the existence of a rainbow because it is not a material arch. The rainbow analogy[11] is instructive: the phenomenon is lawful and real, but not ontologically primitive. Consciousness belongs to this same category.

Phenomenality and Subjectivity

Yudkowsky’s central categorization node is the consequence of a representational bottleneck that arises because limited bandwidth forces the system to funnel many disparate signals through a single narrow channel. That compression naturally produces a one-slot placeholder through which the system routes self-referential information. Byrnes analyzes similar effects in humans, showing how information bottlenecks shape cognitive structure.[12]

What effects should the bottleneck in the recursive self-modeling process have? The bottleneck creates opacity: the system cannot introspect its own generative mechanisms, so experience presents itself as intrinsic and given. This matches phenomenal appearance: the sense that there is “something it is like,” which feels irreducible because of the dangling node.

But the same bottleneck also facilitates a compressed representation of a self-pointer that ties perceptions, actions, and memories to the dangling node. This matches subjectivity: the irreducible feeling of these percepts as “mine,” thereby generating the sense of a unified subject.
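The two effects of the bottleneck can be illustrated with a deliberately tiny toy. It is a sketch under strong assumptions, not a model of the brain and not a claim about what is conscious: the class `ToyAgent`, its `signals` field, and the "bright"/"dark" labels are all hypothetical names invented for this illustration. It shows only the structural point that a report built from a compressed state cannot expose the mechanism behind it (opacity), while still being tagged as belonging to the system (self-pointer).

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class ToyAgent:
    # Hypothetical rich internal state: many low-level signals.
    signals: List[float]

    def compress(self) -> str:
        """Bottleneck: many signals go in, one coarse label comes out."""
        return "bright" if mean(self.signals) > 0.5 else "dark"

    def self_report(self) -> Dict[str, str]:
        """The report is built only from the compressed label.

        It carries a self-pointer ("owner") but no trace of the
        individual signals that produced the label.
        """
        return {"owner": "me", "content": self.compress()}


# Two agents with different internal states...
a = ToyAgent([0.9, 0.8, 0.7, 0.95])
b = ToyAgent([0.6, 0.99, 0.51, 0.75])

# ...produce indistinguishable reports: the mechanism is opaque
# from the level at which the report is generated.
print(a.self_report())
print(b.self_report())
```

In this toy, the label plays the role of the given-seeming content and the `owner` tag plays the role of the self-pointer. The two are produced by separate steps, which is at least compatible with the idea that they could be affected independently.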

Infinite Regress and the Observer

The demand for an inner witness (“something must be experiencing something”) is powerful but unstable. Taken literally, it leads inexorably to an infinite regress of observers: if an inner self must observe experience, what observes the self? Ryle already flagged this as the fallacy of the “ghost in the machine.”[13] My argument does not deny experience but locates it in a self-referential process rather than a metaphysical subject. Once consciousness is recognized as a process rather than a locus, the regress dissolves.

The rainbow again clarifies: one need not posit a ghostly arch to explain the appearance; it suffices to specify the physical conditions under which an observer would see it. Similarly, we can model the conditions under which a brain would produce the self-appearance of being conscious. Positing a hidden observer adds no explanatory power.
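For the rainbow side of the analogy, "specifying the physical conditions" can be made concrete with the standard geometric-optics calculation. The sketch below assumes a spherical water droplet, a single internal reflection (the primary bow), and a refractive index of 1.333; the function names are hypothetical. For a ray entering at incidence angle i and refracting at angle r (Snell's law, sin i = n sin r), the returning ray makes an angle of 4r − 2i with the incoming sunlight. Rays bunch up where this angle is largest, and that is where an observer sees the bow.

```python
import math


def return_angle(i_deg: float, n: float = 1.333) -> float:
    """Angle (degrees) between incoming sunlight and the ray leaving
    a spherical droplet after one internal reflection."""
    i = math.radians(i_deg)
    r = math.asin(math.sin(i) / n)  # Snell's law: sin(i) = n * sin(r)
    return math.degrees(4 * r - 2 * i)


def primary_rainbow(n: float = 1.333, steps: int = 9000):
    """Scan incidence angles from 0 to 90 degrees and return the
    (incidence angle, return angle) pair where the return angle peaks."""
    candidates = (k * 90.0 / steps for k in range(steps + 1))
    best_i = max(candidates, key=lambda a: return_angle(a, n))
    return best_i, return_angle(best_i, n)


i_deg, angle = primary_rainbow()
print(f"incidence ~{i_deg:.1f} deg, rainbow angle ~{angle:.1f} deg")
```

With the assumed index, the peak lies at roughly 42 degrees from the antisolar direction. Nothing in the calculation refers to an arch located in the sky; the bow is fixed entirely by the geometry of sun, droplets, and observer.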

Falsifiable Predictions

If consciousness is a self-referential physical process emerging from recursive self-models under a physically bounded agent, then several falsifiable empirical consequences follow.

Prediction 1: Recursive Architecture Requirement

Conscious phenomenology should only arise in systems whose internal states model both the world and their own internal dynamics as an observer within that world. Neural or artificial systems that lack such recursive architectures should not report or behave as though they experience an “inner glow.”

Prediction 2: Systematic Misrepresentation

Conscious systems should systematically misrepresent their own generative processes. Introspective access must appear lossy: subjects report stable, unified fields (visual, affective, cognitive) while the underlying implementation remains hidden. Experimental manipulations that increase transparency of underlying mechanisms should not increase but rather degrade phenomenology.

Prediction 3: Persistent Ineffability

Recursive self-models should contain representations that are accessible throughout the system yet remain opaque. They appear as primitive elements rather than as reducible to constituent mechanisms. This structural bottleneck predicts the persistent subjective impression of an unanswered question, i.e. the felt “hard problem.” Systems engineered without such bottlenecks, or subjected to interventions that reduce them, should display functional competence with reduced reporting of ineffability.

Prediction 4: Global Self-Model Distortions

Damage to cortical regions that support self-referential recursion (e.g. parietal or prefrontal hubs) should yield not only local perceptual deficits but systematic distortions of global self-models (e.g. warped spatial awareness, anosognosia), rather than mere filling-in.

Prediction 5: Recursive Depth Scaling

Consciousness should scale with recursive depth. First-order world models suffice for adaptive control, but only systems that implement at least second-order predictions (modeling their own modeling) should display reportable phenomenology. Empirically, metacognitive capacity should correlate with subjective richness.

Prediction 6: Distributed Architecture

There should be no unique anatomical or algorithmic “theater” in which consciousness resides. Instead, distributed self-referential loops should be necessary. Interventions targeting a single hub (e.g. thalamus) may enable or disable global recursion, but the phenomenology should not be localized to that hub alone.

Prediction 7: Observer-Dependence

Consciousness, like a rainbow, should be observer-dependent, or rather allow for arbitrary conceptions of observers. Manipulations that alter the structural perspective of the system (e.g. body-swap illusions, temporally perturbed sensorimotor feedback, or altered interoception) should correspondingly shift or dissolve the felt locus of presence.

Prediction 8: Separability of Components

Subjectivity and phenomenal appearance should be separable. It should be independently possible to learn to see through the illusory aspects of phenomenal appearance (recognizing that the “glow” is constructed) and to weaken or collapse the self-pointer (the feeling of being a unified subject).

Tentative Evidence

Several empirical domains already provide evidence relevant to the proposed predictions:

Bilateral lesions to central thalamic nuclei can abolish consciousness, while deep brain stimulation partially restores responsiveness in minimally conscious state patients.[14] In anesthetized primates, thalamic deep brain stimulation reinstates cortical dynamics of wakefulness.[15] This supports a distributed, gateway-mediated rather than theater-like architecture.

Parietal and temporo-parietal damage yields global distortions of space and body (neglect, anosognosia, metamorphopsia) rather than simple filling-in[16], consistent with recursive model deformation.

Internal shifts in attention and expectation can alter what enters conscious awareness, even when sensory input remains constant. This occurs in binocular rivalry and various perceptual illusions[17], consistent with consciousness depending on recursive self-modeling rather than non-cyclic processing of external signals.

Comparison with Other Theories

IIT[18] is supported by the lesion-induced distortions but overstates the role of quiescent elements. Higher-order thought theories[19] underpredict metacognitive and perspectival effects. Attention-based theories[20] are supported by the effects of attentional and belief updates but do not explain persistent ineffability from recursive bottlenecks. This self-referential account thus combines IIT’s structural sensitivity with attention-based policy sensitivity, grounded in recursive self-models.

Conclusion

Consciousness is not an ontological primitive but a physical process of recursive self-modeling. Its rarity derives from the rarity of natural recursions; its sense of ineffable glow, as well as the sense of a unified subject, derive from opacity in self-modeling; the regress dissolves once the inner observer is dropped. In this framing, the “hard problem” is revealed not as an intractable metaphysical mystery but as a cognitive mirage generated by self-referential architecture. Yudkowsky’s central-node argument suggests why the mirage is so compelling: algorithms can systematically produce the feeling of a remainder even when nothing remains to explain. Subjectivity and phenomenal appearance should be separable and independently manipulable. Consciousness is to physical process what the rainbow is to optics: real, lawful, misdescribed from the inside, and not ontologically sui generis.

Thou art rainbow.

Or as Descartes might say:

Cogito, ergo ut iris.

Acknowledgments: I thank the reviewers Cameron Berg, Jonas Hallgren, and Chris Pang for their helpful comments. A special thanks goes to Julia Harfensteller. Without her meditation teaching, I would not have been able to form the insights behind this paper. This work was conducted with support from AE Studio which provided the time and resources necessary for this research.

You can find my paper version of this post here (better formatting, fewer LW links).

References

1. Chalmers, D. J. (1996). The Conscious Mind: In Search of a Fundamental Theory. Oxford University Press.

2. Strawson, G. (2006). Realistic monism: Why physicalism entails panpsychism. Journal of Consciousness Studies, 13(10-11), 3-31.

3. Dennett, D. C. (1991). Consciousness Explained. Little, Brown and Co.

4. Frankish, K. (2016). Illusionism as a theory of consciousness. Journal of Consciousness Studies, 23(11-12), 11-39.

5. Singer, P., & Mason, J. (2011). The Ethics of What We Eat. Rodale.

6. Dehaene, S. (2014). Consciousness and the Brain: Deciphering How the Brain Codes Our Thoughts. Viking.

7. Descartes, R. (1641). Meditations on First Philosophy (J. Cottingham, Trans.). Cambridge University Press.

8. Descartes, R. (1637). Discourse on Method, Optics, Geometry, and Meteorology (P. J. Olscamp, Trans., 2001). Hackett Publishing.

9. Metzinger, T. (2003). Being No One: The Self-Model Theory of Subjectivity. MIT Press; Graziano, M. S. A. (2013). Consciousness and the Social Brain. Oxford University Press.

10. Yudkowsky, E. (2015). The Cartoon Guide to Löb’s Theorem. LessWrong. https://www.lesswrong.com/posts/nCvvhFBaayaXyuBiD/the-cartoon-guide-to-löb-s-theorem; See also: Yudkowsky, E. (2008). Dissolving the Question. LessWrong. https://www.lesswrong.com/posts/5wMcKNAwB6X4mp9og/that-alien-message

11. Blackmore, S. (2004). Consciousness: An Introduction. Oxford University Press.

12. Byrnes, S. (2022). Brain-Like AGI Safety. https://www.lesswrong.com/s/HzcM2dkCq7fwXBej8

13. Ryle, G. (1949). The Concept of Mind. University of Chicago Press.

14. Schiff, N. D., et al. (2007). Behavioural improvements with thalamic stimulation after severe traumatic brain injury. Nature, 448(7153), 600-603.

15. Redinbaugh, M. J., et al. (2020). Thalamus modulates consciousness via layer-specific control of cortex. Neuron, 106(1), 66-75.

16. Venkatesan, U. M., et al. (2015). Chronometry of anosognosia for hemiplegia. Journal of Neurology, Neurosurgery & Psychiatry, 86(8), 893-897; Baier, B., & Karnath, H.-O. (2008). Tight link between our sense of limb ownership and self-awareness of actions. Stroke, 39(2), 486-488.

17. Frässle, S., et al. (2014). Binocular rivalry: Frontal activity relates to introspection and action but not to perception. Journal of Neuroscience, 34(5), 1738-1747; Koch, C., et al. (2016). Neural correlates of consciousness: Progress and problems. Nature Reviews Neuroscience, 17(5), 307-321.

18. Oizumi, M., et al. (2014). From the phenomenology to the mechanisms of consciousness: Integrated Information Theory 3.0. PLOS Computational Biology, 10(5), e1003588; Tononi, G., et al. (2016). Integrated information theory: From consciousness to its physical substrate. Nature Reviews Neuroscience, 17(7), 450-461.

19. Rosenthal, D. M. (2005). Consciousness and Mind. Oxford University Press.

20. Prinz, J. J. (2012). The Conscious Brain: How Attention Engenders Experience. Oxford University Press.

It’s unclear to me what “inner glow” means here. My impression is that LLMs are trained on a lot of human text, some of which is humans reporting that they are conscious, so an LLM could produce the same reports even if it doesn’t really have a recursive structure. It’s possible for an LLM to just repeat what it has read and report the same thing that humans do.

This suggests that as people learn to meditate and get more transparency, on average they should become less convinced of consciousness being primary. While some people feel like the phenomenology gets degraded (and it sounds like you are one of them), I get the impression that there are more reports of long-term meditators whose meditation experience strengthens their belief that consciousness is ontologically primitive.

The hard problem is, why is there any consciousness at all? Even if consciousness is somehow tied to “recursive self-modeling”, you haven’t explained why there should be any feelings or qualia or subjectivity in something that models itself.

Beyond that, there is the question, what exactly counts as self-modelling? You’re assuming some kind of physicalism I guess, so, explain to me what combination of physical properties counts as “modelling”. Under what conditions can we say that a physical system is modelling something? Under what conditions can we say that a physical system is modelling itself?

Beyond all that, there’s also the problem of qualic properties. Let’s suppose we associate color experience with brain activity. Brain activity actually consists of ions rushing through membrane ion gates, and so forth. Where in the motion of molecules, is there anything like a perceived color? This all seems to imply dualism. There might be rules governing which experience is associated with which physical brain state, but it still seems like we’re talking about two different things connected by a rule, rather than just one thing.

You ask: Even if the brain models itself, why should that feel like anything? Why isn’t it just plain old computation?

A system that looks at itself creates a “point of view.” When the brain models the world and also itself inside that world, it automatically creates a kind of “center of perspective.” That center is what we call a subject. That’s what happens when a system treats some information as belonging to the system. Where the border of the system is drawn differs (body, brain, or mind), but the reference will always be a form of “mine.”

The brain can’t see how its own processes work (unless you are an advanced meditator maybe).

So when a signal passes through that self-model, the system can’t break it down; it just receives a simplified or compressed state. That opaque state is what the system calls “what it feels like.”

Why isn’t this just a zombie misrepresenting itself? The distinction between “representation of feeling” and “actual feeling” is a dualist mistake. The rainbow is there even if it is not a material arc. To represent something as a felt, intrinsic state just is to have the feeling.

For a while now, my model of consciousness has roughly been that the brain tries to generatively predict its own working memory the same way that it does for other sensory streams.

Predictive models also explain why subjective time is (according to advanced meditators) granular: each predictive cycle would correspond to a single chunk of time.

Sure. I take it you have meditation experience. What is your take on subjectivity and phenomenal appearance coming apart?

Afraid I’m going on reports of others there. I don’t have very much experience myself. I’m also not quite sure what you mean by “phenomenal appearance coming apart”!

I argue that the inference bottleneck of the brain leads to two separate effects:

subjectivity—the feeling of being me (e.g. “I do it”)

phenomenal appearance—the feeling that there is something (qualia, e.g. “there is red”)

While both effects result from the bottleneck, they arise from the compression of different data streams, so they should respond with different strength to different interventions. And indeed that is what we observe:

Trip reports on some psychedelics show self-dissolution without transparency: “no self,” but strong intrinsic givenness (“I don’t know how this is happening, but no one is experiencing it”).

On the other hand, advanced meditators often report dereification (everything is transparent, perceptions forming can be observed) with intact subjecthood (“I am clearly the one experiencing it”).

A couple of terms that seem relevant:

Holonomy—a closed loop which changes things that move around it. I think consciousness is generally built out of a bunch of holonomies which take sense data, change the information in the sense data while remaining unchanged itself, and shuttle it off for more processing. In a sense, genes and memes are holonomies operating at a bigger scale, reproducing across groups of humans rather than merely groups of neurons.

Russell’s vicious circle principle—self-reference invariably leads to logical contradictions. This means an awareness of self-referential consciousness (or phenomenological experience) cannot be a perfect awareness, you must be compressing some of the ‘self’ when you refer to yourself.

Yeah. Holonomy is applicable: a stable recursive loop that keeps reshaping the data passing through it. But what we need on top of holonomy is that self-reference in a physical system always hits an opacity limit. And I think this is what you mean by your reference to Russell’s vicious-circle principle: it leads to contradictions because of incremental loss.

Though I wonder if maybe we can Escape the Löbian Obstacle.

I like the direction you’re going here.

I’ve been thinking about consciousness off and on for the last thirty years, twenty of those while studying some of the brain mechanisms that seem to be involved. I hoped to study consciousness within neuroscience and cognitive psychology, but gave up when I saw how underappreciated and therefore difficult that career route would be.

I’m excited that there might be some more interest in actually answering these questions as interest in AI consciousness grows (and I think it will grow hugely; I just wrote this attempt to convey why).

Anyway, I didn’t have time to read really deeply or have time to engage deeply, but I thought I’d toss in some upvotes and some thoughts.

I agree that recursive self-observation is key to consciousness.

My particular addition, although inspired by something Susan Blackmore said (maybe in the textbook you reference, I don’t remember) is this:

The self-observation happens at separate moments of time. The system examines its previous contents. Those previous contents can themselves be examinations of further previous moments, giving rise to our recursive self-awareness that can be driven several levels deep but not to infinite regress.

I think this explains an otherwise somewhat mysterious separate area for consciousness. There is none such, just the central engine of analysis, the global workspace, turned toward its own previous representations.

At most times, the system is not self-observing. At some later time, attention is switched to self-observation, and the contents of global workspace become some type of interpretive representation of the contents at some previous time.

This is inspired by Blackmore noting, after much practice at meditation and introspection, that she felt she was not conscious when she was not observing her own consciousness. I don’t remember whether it’s her thought or mine that the illusion of persistent consciousness is owed to our ability to become conscious of past moments. We can “pull them back into existence” in limited form and examine them at length and in depth in many ways. This gives rise to the illusion that we are simultaneously aware of all of those aspects of those representations. We are not, but they contain adequate information to examine at our leisure; the representations are automatically expanded and analyzed as our attention falls on that aspect. Whatever we attend to, we remember (to some degree) and understand (to some degree), so it seems like we remember and understand much more than we do by default if we let those moments slip by without devoting additional time to recalling and analyzing them.

This “reconstructing to examine” is possible because the neural activity is persistent (for a few seconds; e.g., surprisingly the last two seconds are as richly represented in the visual system as is the most recent moment of perception). Short-term synaptic changes in the hippocampus may also be “recording” weak traces of every unremarkable moment of perception and thought, which can be used to reconstruct a previous representation for further inspection (introspection).

I wish I had more time to spend on this! Even though people will become interested in consciousness, it seems like they’re usually far more interested in arguing about it than listening to actual scientific theories based on evidence.

Which might be what I’m doing here; if so sorry, and I hope to come back and have more commentary specific to your approach! Despite that being long and complex, it’s been boiling in my brain for most of a decade, so it was quick to write.

Also, there is no reduction in the sense of a theory that can predict qualia, and predicting them as illusions isn’t much different.

Irreducibility and ineffability aren’t just immediately given seemings.

Some generic “inner glow” is different from qualia, which are what the Hard Problem is about.

Maybe, but an inner witness isn’t the same thing as qualia, either.

What part of staring at a white wall without inner dialog and then later remembering it requires inner modeling at the moment of staring?

But why would changing processing to non-cyclic result in experience becoming unconscious, instead of, I don’t know, conscious, but less filtered by attention?

And as usual, do you then consider any program that reads its own code to be conscious?