Was a philosophy PhD student, left to work at AI Impacts, then Center on Long-Term Risk, then OpenAI. Quit OpenAI due to losing confidence that it would behave responsibly around the time of AGI. Now executive director of the AI Futures Project. I subscribe to Crocker’s Rules and am especially interested to hear unsolicited constructive criticism. http://sl4.org/crocker.html

Some of my favorite memes:

(by Rob Wiblin)

(xkcd)

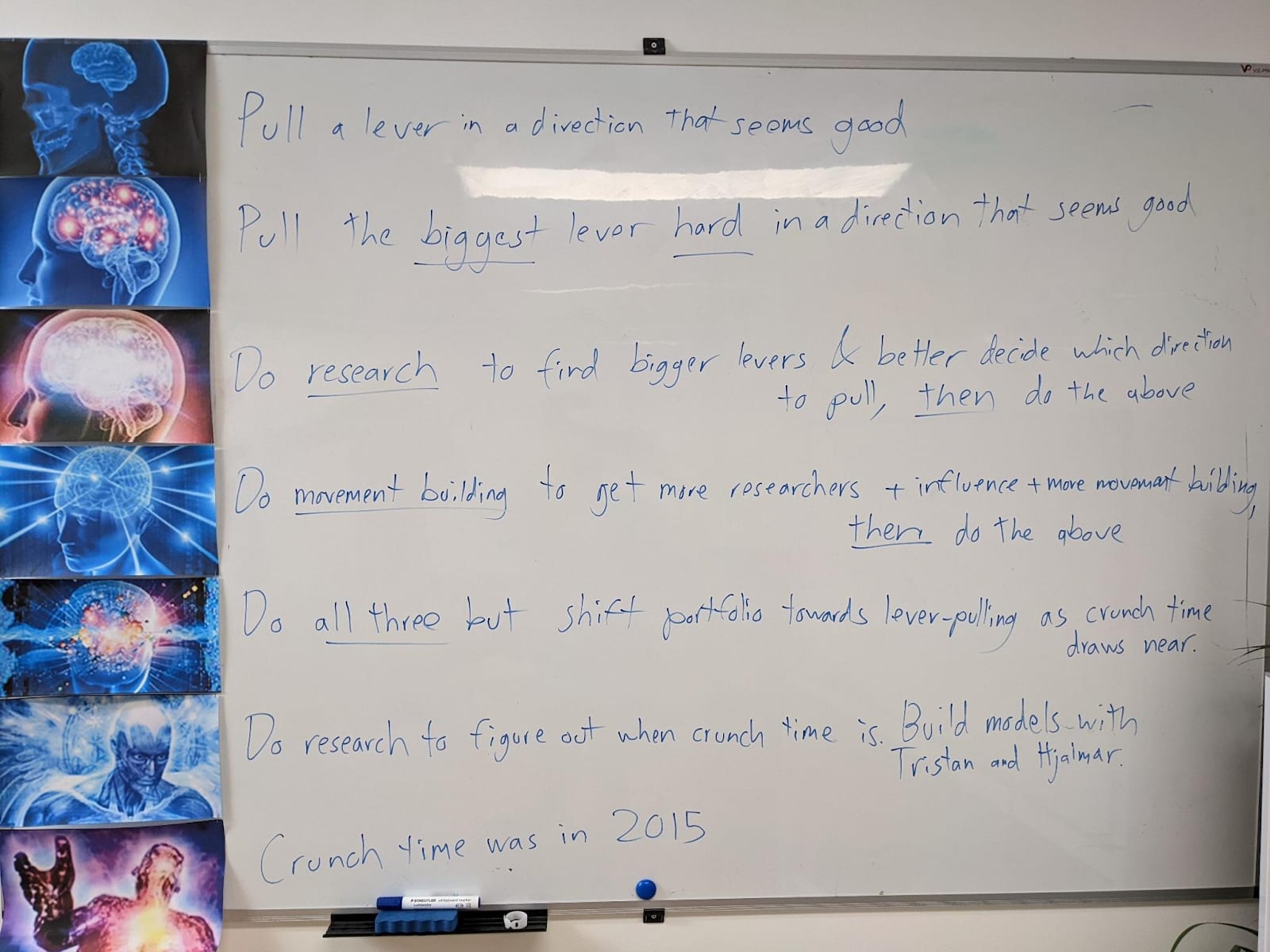

My EA Journey, depicted on the whiteboard at CLR:

(h/t Scott Alexander)

I think I agree with this? “Most algo progress is data progress” “Yep. Still counts though.”

I think this is a reasonable take. Here’s the opposite hypothesis:

“What are you talking about? These companies are giant juggernauts that are building a huge pipeline that does the following: (1) Identify economically valuable skills that AIs are missing (2) Collect/construct training environments / data to train those skills (3) Include those environments in the next big training runs, so that future AIs are no longer missing those skills. Already this seems like the sort of economic engine that could just keep churning until basically the whole world has been transformed. Is it AGI? No, it’s still massively less efficient than the human brain. But it might nevertheless automate most jobs within a decade or so, and then continue churning along, automating new jobs as they come up. AND that’s not taking into account three additional important factors:

(4) The AIs are already generalizing to unseen skills/tasks to some extent, e.g. Claude is getting better at Pokemon despite not having been trained on Pokemon. Thus there might be a sort of ‘escape velocity’ effect where, after the models get big enough and have been trained on enough diverse important tasks, they become able to do additional new tasks with less and less additional training, and eventually can just few-shot-learn them like humans. If this happens then they really are AGI in the relevant sense, while still being less data-efficient than humans in some sense.

(5) The AIs are already accelerating coding to some extent. The aforementioned pipeline that does steps 1-3 repeatedly to gradually automate the economy? That pipeline itself is in the process of getting automated as we speak. If you like you can think of the resulting giant automated corporation as itself an AGI that learns pretty fast, perhaps even faster than humans (albeit still less efficiently than humans in some sense). (Faster than humans? Well yeah; consider how fast AIs have improved at math over the last two years as companies turned their efforts towards training math skills; then consider what’s happening to agentic coding; compare to individual human mathematicians and programmers, who take several times as long to cross the same skill range during school.)

(6) Even if those previous two claims are wrong and the current paradigm just won’t count as AGI, period, if AI R&D gets accelerated significantly then the new paradigms that are necessary should be a few years away rather than decades away. And it seems that the current paradigm might suffice to accelerate R&D significantly, even if it can’t automate it completely.”

Which of these two competing hypotheses is less wrong? I don’t know, but I still have substantial weight on the second.

I wish there were some quantitative analysis attempting to distinguish the two. Questions I’d love to see quantitative answers to: How much would it cost to give every major job in the economy the treatment math and coding are currently getting? How much will that cost go down as AIs partially automate the pipeline? How much are AIs generalizing already? (This one is hard to answer because the companies are quiet about their training data.) Is generalization radius increasing as models get smarter and are trained on more diverse stuff, or does it seem to be plateauing, or to be entirely a function of e.g. pretraining loss?

...

Huh, I wonder if this helps explain some of the failures of the agents in the AI Village. Maybe a bunch of these custom RL environments are buggy, or at least more buggy than the actual environments they are replicating, and so maybe the agents have learned to have a high prior that if you try to click on something and it doesn’t work, it’s a bug rather than user error. (Probably not though. Just an idea.)