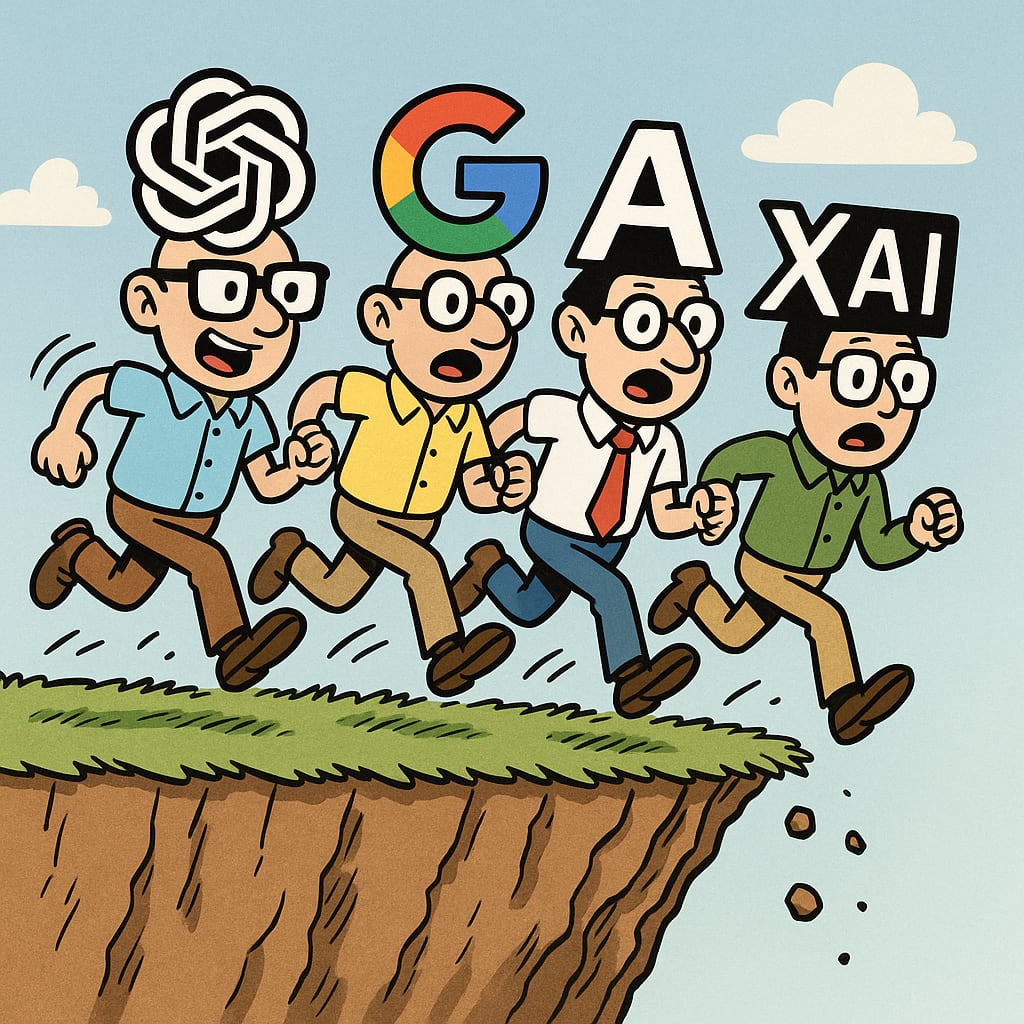

Which side of the AI safety community are you in?

In recent years, I’ve found that people who self-identify as members of the AI safety community have increasingly split into two camps:

Camp A) “Race to superintelligence safely”: People in this group typically argue that “superintelligence is inevitable because of X”, and it’s therefore better that their in-group (their company or country) build it first. X is typically some combination of “Capitalism”, “Moloch”, “lack of regulation” and “China”.

Camp B) “Don’t race to superintelligence”: People in this group typically argue that “racing to superintelligence is bad because of Y”. Here Y is typically some combination of “uncontrollable”, “1984”, “disempowerment” and “extinction”.

Whereas the 2023 extinction statement was widely signed by both Camp B and Camp A (including Dario Amodei, Demis Hassabis and Sam Altman), the 2025 superintelligence statement conveniently separates the two groups – for example, I personally invited all US frontier AI CEOs to sign, and none chose to do so. However, it would be an oversimplification to claim that frontier AI corporate funding predicts camp membership – for example, someone from one of the top companies recently told me that he’d sign the 2025 statement were it not for fear of how it would impact him professionally.

The distinction between Camps A and B is also interesting because it correlates with policy recommendations: Camp A tends to support corporate self-regulation and voluntary commitments without strong and legally binding safety standards akin to those in force for pharmaceuticals, aircraft, restaurants and most other industries. In contrast, Camp B tends to support such binding standards, akin to those of the FDA (which can be viewed as a strict ban on releasing medicines that haven’t yet undergone clinical trials and been safety-approved by independent experts). Combined with market forces, this would naturally lead to new powerful yet controllable AI tools, to do science, cure diseases, increase productivity and even aspire to dominance (economic and military) if that’s desired – but not full superintelligence until it can be devised to meet the agreed-upon safety standards – and it remains controversial whether this is even possible.

In my experience, most people (including top decision-makers) are currently unaware of the distinction between A and B and have an oversimplified view: You’re either for AI or against it. I’m often asked: “Do you want to accelerate or decelerate? Are you a boomer or a doomer?” To facilitate a meaningful and constructive societal conversation about AI policy, I believe that it will be hugely helpful to increase public awareness of the differing visions of Camps A and B. Creating such awareness was a key goal of the 2025 superintelligence statement. So if you’ve read this far, I’d strongly encourage you to read it and, if you agree with it, sign it and share it. If you work for a company and worry about blowback from signing, please email me at mtegmark@gmail.com and say “I’ll sign this if N others from my company do”, where N=5, 10 or whatever number you’re comfortable with.

Finally, please let me provide an important clarification about the 2025 statement. Many have asked me why it doesn’t define its terms as carefully as a law would require. Our idea is that detailed questions about how to word laws and safety standards should be tackled later, once the political will has formed to ban unsafe/unwanted superintelligence. This is analogous to how detailed wording of laws against child pornography (who counts as a child, what counts as pornography, etc.) got worked out by experts and legislators only after there was broad agreement that we needed some sort of ban on child pornography.

I think there is some way that the conversation needs to advance, and I think this is roughly carving at some real joints and it’s important that people are tracking the distinction.

But

a) I’m generally worried about reifying the groups more into existence (as opposed to trying to steer towards a world where people can have more nuanced views). This is tricky, there are tradeoffs and I’m not sure how to handle this. But...

b) this post’s title and framing in particular lean super hard into the polarization, and I wish it did something different.

I don’t like polarization as such, but I also don’t like all of my loved ones being killed. I see this post and the open statement as dissolving a conflationary alliance that groups people who want to (at least temporarily) prevent the creation of superintelligence with people who don’t want to do that. Those two groups of people are trying to do very different things that I expect will have very different outcomes.

I don’t think the people in Camp A are immoral people just for holding that position[1], but I do think it is necessary to communicate: “If we do thing A, we will die. You must stop trying to do thing A, because that will kill everyone. Thing B will not kill everyone. These are not the same thing.”

In general, to actually get the things that you want in the world, sometimes you have to fight very hard for them, even against other people. Sometimes you have to optimize for convincing people. Sometimes you have to shame people. The norms of discourse that are comfortable for me and elevate truth-seeking and that make LessWrong a wonderful place are not always the same patterns as those that are most likely to cause us and our families to still be alive in the near future.

Though I have encountered some people in the AI Safety community who are happy to unnecessarily subject others to extreme risks without their consent after a naive utilitarian calculus on their behalf, which I do consider grossly immoral.

Sure—but are they in this case different? Is the polarization framing worth it here? I don’t think so, because polarization has large downsides.

You can argue against the “race to AGI” position without making the division into camps worse. Calling people kind of stupid for holding the position they do (which Tegmark’s framing definitely does) is a way to get people to dig in, double down, and fight you instead of quietly shifting their views based on logic.

I agree with him that position A is illogical; I think any reasonable estimate of risks demands more caution than the position he lays out. And I think that many people actually hold that position. But many have more nuanced views, which could firm up into a group identity, leading otherwise fairly risk-aware people to put effort into arguing against strong risk arguments out of defensive emotional responses.

Fine, and also I’m not saying what to do about it (shame or polarize or whatever), but PRIOR to that, we have to STOP PRETENDING IT’S JUST A VIEW. It’s a conflictual stance that they are taking. It’s like saying that the statisticians arguing against “smoking causes cancer” “have a nuanced view”.

I’m not pretending it’s just a view. The immense importance of this issue is another reason to avoid polarization. Look at how the climate change issue worked out with polarization involved.

The arguments for caution are very strong. Proponents of caution are at an advantage in a discussion. We’re also a minority, so we’re at a disadvantage in a fight. So it seems important to not help it move from being a discussion to a fight.

The climate change issue has pretty widespread international agreement and in most countries is considered a bipartisan issue. The capture of climate change by polarising forces has not really affected intervention outcomes (other problems of implementation are, imo, far greater).

I don’t want to derail the AI safety organising conversation, but I see this climate change comparison come up a lot. It strikes me as a pretty low-quality argument and it’s not clear a) whether the central claim is even true and b) whether it is transferable to organising in AI safety.

The flipside of the polarisation issue is the “false balance” issue, and that reference to smoking by TsviBT seems to be what this discussion is pointing at.

Admittedly, most of the reason we are able to solve climate change easily despite the polarization is that the problem turned out to be far easier to solve than feared (if we don’t care much about animal welfare, which is the case for ~all humans) without much government intervention.

I actually think this has a reasonable likelihood of happening, but conditional on there being no alignment solution cheap enough to be adopted without large government support (if one is doable at all), polarization matters far more here, so climate change is actually a useful case study for worlds where alignment is hard.

I don’t get it?

The climate change issue didn’t become polarized in other countries, and that’s good. It did get polarized here, and that’s bad. It has roadblocked even having discussions about solutions behind discussing the increasingly ridiculous, but also increasingly prevalent, “question” of whether human-caused climate change is even real. People in the US questioned the reality of anthropogenic climate change MORE even as the evidence for it mounted, because it had become polarized, so it was more about identity than facts and logic. See my AI scares and changing public beliefs for one graph of this maddening degradation of clarity.

So why create polarization on this issue?

The false balance issue is separate. One might suppose that creating polarization leads to false balance arguments, because then there are two sides, so to be fair we should balance both of them. If there is just a range of opinions, false balance is harder to argue for.

I don’t know what you mean by “the central claim” here.

I also don’t want to derail to actually discussing climate change; I just used it as one example in which polarization was pretty clearly really bad for solving a problem.

Sorry, it was perhaps unfair of me to pick on you for making the same sort of freehand argument that many have made; maybe I should write a top-level post about it.

To clarify: the idea that “climate change is not being solved because of polarisation” and “AI safety would suffer from being like climate action [due to the previous]” are twin claims that are not obvious. These arguments seem reasonable at surface level, but they hinge on a lot of internal American politics and, I think, don’t engage with the breadth of drivers of climate action. To some extent these arguments reveal that calling AI safety an international movement is lip service, because they seek to explain the solution of an international problem solely within the framework of US politics. I also feel the polarisation of climate change is itself sensationalised.

But I think what you’ve said here is more interesting:

It seems like you believe that the opposite of polarisation is plurality (all arguments seen as equally valid), whereas I would see the opposite of polarisation as consensus (one argument is seen as valid). This is in contrast to polarisation (different groups see different arguments as valid). Valid here being more like “respectable” rather than “100% accurate”. But indeed, it’s not obvious to me that the chain of causality is polarisation → desire for false balance, rather than desire for false balance → polarisation. (Also a handwavey nod to the idea that this desire for false balance comes from conflicting goals, à la conflict theory.)

It seems like part of the practical implication of whatever you mean by this is to say:

Like, Tegmark’s post is pretty neutral, unless I’m missing something. So it sounds like you’re saying to not describe there being two camps at all. Is that roughly what you’re saying? I’m saying that in your abstract analysis of the situation, you should stop preventing yourself from understanding that there are two camps.

I’m just repeating Raemon’s sentiment and elaborating on some reasons to be so concerned with this. I agree with him that just not framing with the title “which side are you on” seems to have much the same upside and much less polarization downside.

The fact that there are people advocating for two incompatible strategies does not mean that there are two groups in other important senses. One could look at it, and I do, as a bunch of confused humans in a complex domain, none of whom have a very good grip on the real situation, and who fall on different sides of this policy issue, but could be persuaded to change their minds on it.

The title “Which side of the AI safety community are you in?” reifies the existence of two groups at odds, with some sort of group identity, and there doesn’t seem to be much benefit to making the call for signatures that way.

So yes, I’m objecting to using the term two groups at all, let alone in the title and as the central theme. Motivating people by stirring up resentment against an outgroup is a strategy as old as time. It works in the short term. But it has big long-term costs: now you have a conflict between groups instead of a bunch of people with a variety of opinions.

Suppose someone works for Anthropic, accords with the value placed on empiricism by their Core Views on AI Safety (March 2023) and gives any weight to the idea we are in the pessimistic scenario from that document.

I think they can reasonably sign the statement yet not want to assign themselves exclusively to either camp.

I pitched my tent as a Pause AI member and I guess camp B has formed nearby. But I also have empathy for the alternate version of me who judges the trade-offs differently and has ended up as above, with a camp A zipcode.

The A/B framing has value, but I strongly want to cooperate with that person and not sit in separate camps.

I felt confused at first when you said that this framing is leaning into polarization. I thought “I don’t see any clear red-tribe / blue-tribe affiliations here.”

Then I remembered that polarization doesn’t mean tying this issue to existing big coalitions (a la Hanson’s Policy Tug-O-War), but simply that it is causing people to factionalize and create a conflict and divide between them.

It seems to me like Max has correctly pointed out a significant crux about policy preferences between people who care about AI existential risk, and it also seems to me worth polling people and finding out who thinks what.

It does seem to me that the post is attempting to cause some factionalization here. I am interested in hearing about whether this is a good or bad faction to exist (relative to other divides) rather than simply saying that division is costly (which it is). I am interested in some argument about whether this is worth it / this faction is a real one.

Or perhaps you/others think it should ~never be actively pushed for in the way Max does in this post (or perhaps not in this way on a place with high standards for discourse like LW).

I think it is sometimes correct to specifically encourage factionalization, but I consider it bad form to do it on LessWrong, especially without being explicitly self-aware about it (i.e. it should come with an acknowledgment that you are spending down the epistemic commons, and why you think it is worth it).

(Where, to be clear, it’s fine/good to say “hey guys I think there is a major, common disagreement here that is important to think about, and take actions based on.” The thing I’m objecting to is the title being “which side are you on?” and encouraging you to think in terms of sides, rather than a specific belief that you try to keep your identity small about.)

(like, even specifically resolving the lack-of-nuance this post complains about, requires distinguishing between “never build ASI” and “don’t build ASI until it can be done safely”, which isn’t covered in the Two Sides)

The main split is about whether racing in the current regime is desirable, so both “never build ASI” and “don’t build ASI until it can be done safely” fall within the scope of camp B. Call these two subcamps B1 and B2. I think B1 and B2 give the same prescriptions within the actionable timeframe.

Some people likely think

Those people might give different prescriptions to the “never build ASI” people, like not endorsing actions that would tank the probability of ASI ever getting built. (Although in practice I think they probably mostly make the same prescriptions at the moment.)

In practice, bans can be lifted, so “never” is never going to become an unassailable law of the universe. And right now, it seems misguided to quibble over “Pause for 5, 10, 20 years”, and “Stop for good”, given the urgency of the extinction threat we are currently facing. If we’re going to survive the next decade with any degree of certainty, we need an alliance between B1 and B2, and I’m happy for one to exist.

I agree that some people have this preference ordering, but I don’t know of any difference in specific actionable recommendations that would be given by “don’t until safely” and “don’t ever” camps.

On this point specifically, those two groups are currently allied, though they don’t always recognize it. If sufficiently-safe alignment is found to be impossible or humanity decides to never build ASI, there would stop being any difference between the two groups.

This is well-encapsulated by the differences between Stop AI and PauseAI. At least from PauseAI’s perspective, both orgs are currently on exactly the same team.

Pause AI is clearly a central member of Camp B? And Holly signed the superintelligence petition.

Yes, my comment was meant to address the “never build ASI” and “don’t build ASI until it can be done safely” distinction, which Raemon was pointing out does not map onto Camp A and Camp B. All of ControlAI, PauseAI, and Stop AI are firmly in Camp B, but have different opinions about what to do once a moratorium is achieved.

One thing I meant to point toward was that unless we first coordinate to get that moratorium, the rest is a moot point.

Parenthetically, I do not yet know of anyone in the “never build ASI” camp and would be interested in reading or listening to such a person.

Shared without comment: https://www.stopai.info/

Émile Torres would be the most well-known person in that camp.

I just posted my attempt at combatting polarization a bit.

I’m annoyed that Tegmark and others don’t seem to understand my position: you should try for great global coordination but also invest in safety in more rushed worlds, and a relatively responsible developer shouldn’t unilaterally stop.

(I’m also annoyed by this post’s framing for reasons similar to Ray.)

I personally would not sign this statement because I disagree with it, but I encourage any OpenAI employee that wants to sign to do so. I do not believe they will suffer any harmful professional consequences. If you are at OpenAI and want to talk about this, feel free to slack me. You can also ask colleagues who signed the petition supporting SB1047 if they felt any pushback. As far as I know, no one did.

I agree that there is a need for thoughtful regulations for AI. The reason I personally would not sign this statement is because it is vague, hard to operationalize, and attempts to make it as a basis for laws will (in my opinion) lead to bad results.

There is no agreed upon definition of “superintelligence” let alone a definition of what it means to work on developing it as separate from developing AI in general. A “prohibition” is likely to lead to a number of bad outcomes. I believe that for AI to go well, transparency will be key. Companies or nations developing AI in secret is terrible for safety, and I believe this will be the likely outcome of any such prohibition.

My own opinions notwithstanding, other people are entitled to their own, and no one at OpenAI should feel intimidated from signing this statement.

As I said below, I think people are ignoring many different approaches compatible with the statement, and so they are confusing the statement with a call for international laws or enforcement (as you said, “attempts to make it as a basis for laws”), which is not mentioned. I suggested some alternatives in that comment:

“We didn’t need laws to get the 1975 Asilomar moratorium on recombinant DNA research, or the email anti-abuse (SPF/DKIM/DMARC) voluntary technical standards, or the COSPAR guidelines that were embraced globally for planetary protection in space exploration, or press norms like not naming sexual assault victims—just strong consensus and moral suasion. Perhaps that’s not enough here, but it’s a discussion that should take place which first requires clear statement about what the overall goals should be.”

This is good!

My guess is that their hesitance is also linked to potential future climates, though, and not just the current climate, so I don’t expect additional signees to come forward in response to your assurances.

FWIW I’d probably be down to talk with Boaz about it, if I still worked at OpenAI and were hesitant about signing.

I doubt Boaz would be able to provide assurances against facing retaliation from others though, which is probably the crux for signing.

(To be fair, that is a quite high bar.)

Yup! I just think there’s an unbounded way that a reader could view his comment: “oh! There are no current or future consequences at OAI for those who sign this statement!”

…and I wanted to make the bound explicit: real protections, into the future, can’t plausibly be offered, by anyone. Surely most OAI researchers are thinking ahead enough to feel the pressure of this bound (whether or not it keeps them from signing).

I’m still glad he made this comment, but the Strong Version is obviously beyond his reach to assure.

I agree no one can make absolute guarantees about the future. Also some people may worry about impact in the future if they will work in another place.

This is why I suggest people talk to me if they have concerns.

I like the first clause in the 2025 statement. If that were the whole thing, I would happily sign it. However, having lived in California for decades, I’m pretty skeptical that direct democracy is a good way of making decisions, and would not endorse making a critical decision based on polls or a vote. (See also: Brexit.)

I did sign the 2023 statement.

The two necessary pieces are that it won’t doom us and that people want it to happen. Obviously we shouldn’t build it if it might doom us and I additionally think that we shouldn’t build it if people say that that would be bad according to their values. There are all kinds of valid reasons why people might want ASI to exist or not exist once existential risk is removed from the equation.

I don’t think this implies direct democracy, just some mechanism by which humanity at large has some collective say in whether ASI happens or not. “But does humanity even want this” has to at least be a consideration in some form.

I agree that the statement doesn’t require direct democracy but that seems like the most likely way to answer the question “do people want this”.

Here’s a brief list of things that were unpopular and broadly opposed that I nonetheless think were clearly good:

smallpox vaccine

seatbelts, and then seatbelt laws

cars

unleaded gasoline

microwaves (the oven, not the radiation)

Generally I feel like people sometimes oppose things that seem disruptive and can be swayed by demagogues. There’s a reason that representative democracy works better than direct democracy. (Though it has obvious issues as well.)

As another whole class of examples, I think people instinctively dislike free speech, immigration, and free markets. We have those things because elites took a strong stance based on better understanding of the world.

I support democratic input, and especially understanding people’s fears and being responsive to them. But I don’t support only doing things that people want to happen. If we had followed that rule for the past few centuries I think the world would be massively worse off.

I’d split things this way:

Group A) “Given that stopping the AI race seems nearly impossible, I focus on ensuring humanity builds safe superintelligence”

Group B) “Given that building superintelligence safely under current race dynamics seems nearly impossible, I focus on stopping the AI race”

Group C) “Given deep uncertainty about whether we can align superintelligence under race conditions or stop the race itself, I work to ensure both strategies receive enough resources.”

C is fake, it’s part of A, and A is fake, it’s washing something which we don’t yet understand and should not pretend to understand.

...but it’s not fake, it’s just confused according to your expectations about the future—and yes, some people may say it dishonestly, but we should still be careful not to deny that people can think things you disagree with, just because they conflict with your map of the territory.

That said, I don’t see as much value in dichotomizing the groups as others seem to.

What? Surely “it’s fake” is a fine way to say “most people who would say they are in C are not actually working that way and are deceptively presenting as C”? It’s fake.

If you said “mostly bullshit” or “almost always disingenuous” I wouldn’t argue, but I would still question whether it’s actually a majority of people in group C, which I’m doubtful of, but very unsure about. Still, saying it is fake would usually mean it is not a real thing anyone believes, rather than meaning that the view is unusual or confused or wrong.

Closely related to: You Don’t Exist, Duncan.

I guess we could say “mostly fake”, but also there are important senses in which “mostly fake” implies “fake simpliciter”. E.g. a twinkie made of “mostly poison” is just “a poisonous twinkie”. Often people do, and should, summarize things and then make decisions based on the summaries, e.g. “is it poison, or no” --> “can I eat it, or no”. My guess is that the conditions under which it would make sense for you to treat someone as genuinely holding position C, e.g. for purposes of allocating funding to them, are currently met by approximately no one. I could plausibly be wrong about that; I’m not so confident. But that is the assertion I’m trying to make, which is summarized imprecisely as “C is fake”, and I stand by my making that assertion in this context. (Analogy: It’s possible for me to be wrong that 2+2=4, but when I say 2+2=4, what I’m asserting / guessing is that 2+2=4 always, everywhere, exactly. https://www.lesswrong.com/s/FrqfoG3LJeCZs96Ym/p/ooypcn7qFzsMcy53R )

Just to clarify:

I am personally uncertain how hard stopping the race is. I have spent some time and money myself trying to promote IABIED, and I have also been trying to do direct alignment research, and when doing so I more often than not think explicitly in scenarios where the AI race does not stop.

Am I in group C? Am I a fake member of C?

I’d personally say I’d probably endorse C for someone who funds research/activism, and have personally basically acted on it.

I.e. I’d say it’s reasonable to say “stop the race ASAP”, and in another context say “the race might not be stopped, what projects would still maybe increase odds of survival/success conditional on a race?”

(IDK and I wouldn’t be the one to judge, and there doesn’t necessarily have to be one to judge.) I guess I’d be a bit more inclined to believe it of you? But it would take more evidence. For example, it would depend how your stances express themselves specifically in “political” contexts, i.e. in contexts where power is at stake (company governance, internal decision-making by an academic lab about allocating attentional resources, public opinion / discussion, funding decisions, hiring advice). And if you don’t have a voice in such contexts then you don’t count as much of a member of C. (Reminder that I’m talking about “camps”, not sets of individual people with propositional beliefs.)

It seems like you’re narrowing the claim, and I’m no longer sure I disagree with the point, if I’m interpreting it correctly now.

If you’re saying that the group doesn’t act differently in ways that are visible to you, sure, but the definition of the group is one that believes that two things are viable, and will sometimes support one side, and sometimes support the other. You could say it doesn’t matter for making individual decisions, because the people functionally are supporting one side or the other at a given time, but that’s different than saying they “are not actually working that way.”

There’s a huge difference between the types of cases, though. A 90% poisonous twinkie is certainly fine to call poisonous[1], but a 90% male group isn’t reasonable to call male. You said “if most people who would say they are in C are not actually working that way and are deceptively presenting as C,” which seems far more like the latter than the former, because “fake” implies the entire thing is fake[2].

Though so is a 1% poisonous twinkie; perhaps a better example is a meal that is 90% protein, which would be a “protein meal” without implying there is no non-protein substance present.

There is a sense where this isn’t true; if 5% of an image of a person is modified, I’d agree that the image is fake—but this is because the claim of fakeness is about the entirety of the image, as a unit. In contrast, if there were 20 people in a composite image, and 12 of them were AI-fakes and 8 were actual people, I wouldn’t say the picture is “of fake people,” I’d need to say it’s a mixture of fake and real people. Which seems like the relevant comparison if, as you said in another comment, you are describing “empirical clusters of people”!

The OP is about two “camps” of people. Do you understand what camps are? Hopefully you can see that this indeed does induce the analog of “because the claim of fakeness is about the entirety of the image”. They gain and direct funding, consensus, hiring, propaganda, vibes, parties, organizations, etc., approximately as a unit. Camp A is a 90% poison twinkie. The fact that you are trying to not process this is a problem.

I’m pointing out that the third camp, which you deny really exists, does exist, and as an aside, is materially different in important ways from the other two camps.

You say you don’t think this matters for allocating funding, and you don’t care about what others actually believe. I’m just not sure why either point is relevant here.

Could you name a couple (2 or 3, say) of some of the biggest representatives of that camp? Biggest in the camp sense, so e.g. high reputation researchers or high net worth funders.

You started by saying that most people who would say they are in C are fake, because they are not actually working that way and are deceptively presenting as C, and that A is also “fake” because it won’t work. So anyone I name in group C, under your view, is just being dishonest. I think that there are many people who have good faith beliefs in both groups, but I don’t understand how naming them helps address the claim you made. (You also said that whether the view exists only matters if it’s held by funders, since I guess you claim that only people spending money can have views about what resource allocation should occur.)

That said, other than myself, who probably doesn’t count because I’m only in charge of minor amounts of money, it seems that a number of people at Open Philanthropy clearly implicitly embrace view C, based on their funding decisions which include geopolitical efforts to manage risk from AI and potentially lead to agreements, public awareness and education, and also funding technical work on AI safety.

And see this newer post, which also lays out a similar view: https://www.lesswrong.com/posts/7xCxz36Jx3KxqYrd9/plan-1-and-plan-2

Washing? Like safetywashing?

Yeah, safetywashing, or I guess mistake-theory-washing.

(I would like to note that a single person went through and strong downvoted my comments here.)

You make a valid point. Here’s another framing that makes the tradeoff explicit:

Group A) “Alignment research is worth doing even though it might provide cover for racing”

Group B) “The cover problem is too severe. We should focus on race-stopping work instead”

I think we’re just trying to do different things here… I’m trying to describe empirical clusters of people / orgs, you’re trying to describe positions, maybe? And I’m taking your descriptions as pointers to clusters of people, of the form “the cluster of people who say XYZ”. I think my interpretation is appropriate here because there is so much importance-weighted abject insincerity in publicly stated positions regarding AGI X-risk that it just doesn’t make much sense to focus on the stated positions as positions.

Like, the actual people at The Curve or whatever are less “I will do alignment, and will be against racing, and alas, this may provide some cover” and more “I will do fake alignment with no sense that I should be able to present any plausible connection between my work and making safe AGI, and I will directly support racing”. All the people who actually do the stated thing are generally understood to be irrelevant weirdos. The people who say that are being insincere, and in fact support racing.

I was trying to map out disagreements between people who are concerned enough about AI risk. Agreed that this represents only a fraction of the people who talk about AI risk, and that there are a lot of people who will use some of these arguments as false justifications for their support of racing.

EDIT: as TsviBT pointed out in his comment, OP is actually about people who self-identify as members of the AI Safety community. Given that, I think that the two splits I mentioned above are still useful models, since most people I end up meeting who self-identify as members of the community seem to be sincere, with stated positions that match their actual reasons for doing what they do. I have met people who I believe to be insincere, but I don’t think they self-identify as part of the AI Safety community. I think that TsviBT’s general point about insincerity in the AI Safety discourse is valid.

Um, no, you responded to the OP with what sure seems like a proposed alternative split. The OP’s split is about

I think you are making an actual mistake in your thinking, due to a significant gap in your thinking and not just a random thing, and with bad consequences, and I’m trying to draw your attention to it.

only adding one bit of sidedness seems insufficient to describe the space of opinions; further description is still needed. however, adding even a single bit of incorrect sidedness, or where one of the sides is one that we’d hope people don’t take, seems like it could have predictable bad consequences. hopefully the split is worth it. I do see this direction in the opinion space, but I’m not sure this split is enough a part of the original process that generates opinions to be worth it.

I was “let’s build it before someone evil does”; I’ve left that particular viewpoint behind since realizing how hard aligning it is. my thoughts on how to align it still tend to be aimed at trying to make camp A not kill us all, because I suspect camp B will fail, and our last line of defense will be that maybe we get to figure out safety in time for camp A to not be delusional; I do see some maybe-not-hopeless paths, but I’m pretty pessimistic that we get enough of our ducks in a row in time to hit the home run below par before the death star fires. but to the degree camp B has any shot at success, I participate in it too.

It was empirically infeasible (for the general AGI x-risk technical milieu) to explain this to you faster than you trying it for yourself, and one might have reasonably expected you to have been generally culturally predisposed to be open to having this explained to you. If this information takes so much energy and time to be gained, that doesn’t bode well for the epistemic soundness of whatever stance is currently being taken by the funder-attended vibe-consensus. How would you explain this to your past self much faster?

Inhabiting the views I had at the time, rather than my current ones (where I cringe at several alignment-is-straightforward claims I’m about to make):

Well yeah. So many people around me clearly were refusing to look at the evidence that made it obvious AGI was near (and since then have deluded themselves into believing current AI is not basically-AGI-with-some-fixable-limitations), and yet still insist they know what’s possible in the future. Obviously alignment is hard, but it doesn’t seem harder than problems the field chews through regularly anyway. it’s like they don’t even realize or think about how to turn capabilities into an ingredient for alignment—if we could train a model on all human writing we could use it to predict likely alignment successes. I explained to a few MIRI folks in early 2017, before transformers (I can show you an email thread from after our in-person conversation), why neural networks are obviously going to be AGI by 2027; they didn’t find it blindingly obvious like someone who grokked neural networks should have, and that was a huge hit to their credibility with me. They’re working on AGI but don’t see it happening in front of their faces? Come on, how stupid are you?

(Turns out getting alignment out of capabilities still requires you to know what to ask for, and to be able to reliably recognize if you got it. If you’d told me that then, it would have gotten me thinking about how to solve it, but I’d still have assumed the field would figure it out in time like any other capability barrier.)

(Turns out Jake and I were somewhat, though not massively, unique in finding this blindingly obvious. Some people both found this obvious and were positioned to do something about it, e.g. Dario. I’ve talked before about why the ML field as a whole tends to doubt its 3-year trajectory—when you’re one of the people doing hits-based research you have a lot of failures, which makes success seem further away than it is for the field as a whole—and now it’s turned into alignment researchers not believing me when I tell them they’re doing work that seems likely to be relevant to capabilities more than to alignment.)

So, like, #1: make arguments based on actual empirical evidence rather than being mysteriously immune to evidence as though your intuition still can’t retrodict capability successes. (This is now being done, but not by you.) #2 might be, like, Socratic questioning of my plan—if I’m so sure I can align it, how exactly do I plan to do that? And then, make arguments based on accepting that neural networks do in fact work like other distributed-representation minds (e.g. animals), and use that to make your point. Accept that you can’t prove that we’ll fail, because it’s legitimately not true that we’re guaranteed to. Engage with the actual problems someone making a capable AI system encounters rather than wrongly assuming the problem is unrecognizably different.

And then you get where we’ve already gotten. I mean, you haven’t, I’m still annoyed at you for having had the job of seeing this coming and being immune to evidence, but mostly the field is now making arguments that actually depend on the structures inside neural networks, so the advice that was relevant then mostly doesn’t apply anymore. But if you’d wanted to do it then, that’s what it would have looked like.

Basically, if you can turn it into a Socratic prompt for how to make an aligned AI that you can give a capability researcher, where the prompt credibly sounds like you actually have thought about the actual steps of making capable systems, and if it’s hard to do it will become apparent to them as they think about the prompt, then you’re getting somewhere. But if your response to a limitation of current AI is still “I don’t see how this can change” rather than “let me now figure out how to make this change”, you’re going to be doomed to think AI is going slower than it is, and your arguments are likely to sound insane to people who understand capabilities. Their intuitions have detailed bets about what’s going on inside. When you say “we don’t understand”, their response is “maybe you don’t, but I’ve tried enough stuff to have very good hypotheses consistently”. The challenge of communication is then how you make the challenge of alignment into an actual technical question where their intuitions engage and can see why it’s hard for themselves.

I was culturally disposed against it, btw. I was an LW hater in 2015 and always thought the alignment concern was stupid.

I think both of these camps are seeing real things. I think:

We should try to stop the race, but be clearsighted about the forces we’re up against and devise plans that have a chance of working despite them.

I think you’re overstating how difficult it is for the government to regulate AI. With the exception of SB 53 in California, the reason not much has happened yet is that there have been barely any attempts by governments to regulate AI. I think all it would take is for some informed government to start taking this issue seriously (in a way that LessWrong people already do).

I think this may be because the US government tends to take a hands off approach and assume the market knows best which is usually true.

I think it will be informative to see how China handles this, because they have a track record of heavy-handed government interventions like banning Google, the 2021 tech industry crackdown, extremely strict COVID lockdowns and so on.

From some quick research online, the number of private tutoring institutions and the revenue of the private tutoring sector fell by ~80% when the Chinese government banned for-profit tutoring in 2021 despite education having pretty severe arms race dynamics similar to AI.

I lean more towards the Camp A side, but I do understand and think there’s a lot of benefit to the Camp B side. Hopefully I can, as a more Camp A person, help explain to Camp B dwellers why we don’t reflexively sign onto these kinds of statements.

I think that Camp B has a bad habit of failing to model the Camp A rationale, based on the conversations I see in Twitter discussions between pause AI advocates and more “Camp A” people. Yudkowsky is a paradigmatic example of the Camp B mindset, and I think it’s worth noting that a lot of people in the public readership of his book found the pragmatic recommendations therein to be extremely unhelpful. Basically, they (and I) see Yudkowsky’s plan as calling for a mass-mobilization popular effort against AI. But his plans, both in IABIED and his other writings, fail to grapple at all with the existing political situation in the United States, or with the geopolitical difficulties involved in political enforcement of an AI ban.

Remaining in this frame of “we make our case for [X course of action] so persuasively that the world just follows our advice” does not make for a compelling political theory on any level of analysis. Looking at the nuclear analogy used in IABIED, if Yudkowsky had advocated for a pragmatic containment protocol like the New START nuclear weapons deal or the Iran-US JCPOA deal, then we (the readers) could see that the Yudkowsky/Camp B side had thought deeply about the complexity of using political power to achieve actions in the full messiness of the real world. But Yudkowsky leaves the details of how to work out a multinational treaty far more widely scoped than any existing multinational agreement as an exercise for the reader! When Russia is actively involved in a major war with a European country and China is preparing for a semi-imminent invasion of an American ally, the (intentionally?) vague calls for a multinational AI ban ring hollow. Why is there so little Rat brainpower devoted to the pragmatics of how AI safety could be advanced within the global and national political contexts?*

There are a few other gripes that I (speaking in my capacity as a Camp A denizen) have with the Camp B doctrine. Beyond inefficacy/unenforceability, the idea that the development of a superintelligence is a “one-shot” action without the ability to fruitfully learn from near-ASI non-ASI models seems deeply implausible. Also, various staples of the Camp B platform — orthogonality and goal divergence out of distribution, notably — seem pretty questionable, or at least undersupported by existing empirical and theoretical work by the MIRI/PauseAI/Camp B faction.

*I was actually approached by an SBF representative in early 2022, who told me that SBF was planning on buying enough American congressional votes via candidate PAC donations that EA/AI safetyists could dictate US federal policy. This was by far the most serious AI safety effort I’ve personally witnessed come out of the EA community, and one of only a few that connected the AI safety agenda to the “facts on the ground” of the American political system.

If we look at this issue not from a positivist or Bayesian point of view, but from an existentialist one, I think it makes sense to say that a writer should always write as if everyone were going to read their words. That’s actually something Sartre talks about in What Is Literature?

I realize this might sound a bit out of tune with the LessWrong mindset, but if we stick only to Bayesian empiricism or Popper’s falsifiability as our way of modeling the world, we eventually hit a fundamental problem with data itself: data always describe the past. We can’t turn all of reality into data and predict the future like Laplace’s demon.

Maybe that’s part of why a space like LessWrong—something halfway between poetry (emotion) and prose (reason)—came into being in the first place.

And yes, I agree it might have been better if Yudkowsky had engaged more concretely with political realities, or if MIRI had pursued both the “Camp A” and “Camp B” approaches more forcefully. But from an existentialist point of view, I think it’s understandable that Eliezer wrote from the stance of “I believe I’m being logically consistent, so the world will eventually understand me.”

That said, I’d genuinely welcome any disagreement or critique—you might see something I’m missing.

But there are times when it does work!

As someone who was there, I think the portrayal of the 2020-2022 era efforts to influence policy is strawmanned, but I agree that it was the first serious attempt to engage politically by the community—and was an effort which preceded SBF in lots of different ways—so it’s tragic (and infuriating) that SBF poisoned the well by backing it and having it collapse. And most of the reason there was relatively little done by the existential risk community on pragmatic political action in 2022-2024 was directly because of that collapse!

I agree this distinction is very important, thank you for highlighting it. I’m in camp B and just signed the statement.

Camp A for all the reasons you listed. I think the only safe path forward is one of earnest intent for mutual cooperation rather than control. Not holding my breath though.

I’m mostly “camp D[oomer]: we can’t stop, and we can’t control it, and this is bad, and so the future will be bad”; but the small portion of my hope that things go well relies on cooperation being solved better than it ever has been before. otherwise, I think faster and faster brain-sized-meme evolution is not going to go well for anything we care about. achieving successful, stable, defensible inter-meme cooperation seems … like a tall order, to put it mildly. the poem on my user page is about this, as are my pinned comments. to the degree camp A[ccelerate safety] can possibly make camp D wrong, I’m on board with helping figure out how to solve alignment in time, and I think camp B[an it]/B[uy time] are useful and important.

but like, let’s get into the weeds and have a long discussion in the comments here, if you’re open to it: how do we achieve a defensibly-cooperative future that doesn’t end up wiping out most humans and AIs? how do we prevent an AI that is willing to play defect-against-all, that doesn’t want anything like what current humans and AI want, from beating all of us combined? which research paths are you excited about to make cooperation durable?

I’ll point to a similarly pessimistic but divergent view on how to manage the likely bad transition to an AI future that I co-authored recently:

agreed that among all paths to good things that I see, a common thread is somehow uplifting human cognition to keep pace with advanced AI. however, I doubt that that’s even close to good enough—human cooperation is shaky and unreliable. most humans who think they’d do good things if made superintelligent are probably wrong, due to the various ways values drift when the structure of one’s cognition changes, and many humans who say they think they’d do good things are simply lying, rather than deluding themselves or overestimating their own durable goodness. it seems to me that in order to make this happen, we need to make AIs that strongly want all humans and humanity and other emergent groups to stick around, the way a language model wants to output text.

Yes, wanting humans to stick around is probably a minimal necessity, but it’s still probably not enough—as the post explains.

And I simply don’t think you can “make humans smarter” in ways that don’t require, or at least clearly risk, erasing things that make us fundamentally human as we understand it today.

I would have labelled Camp A as the control camp (control of the winner over everyone else) and Camp B as the mutual cooperation camp (since ending the race is the fruit of cooperation between nations). If we decide to keep racing for superintelligence, what does the finish line look like, in your view?

I think @rife is talking either about mutual cooperation between safety advocates and capabilities researchers, or mutual cooperation between humans and AIs.

Cooperation between humans and AIs rather than an attempt to control AIs. I think the race is going to happen regardless of who drops out of it. If those who are in the lead eventually land on mutual alignment, then we stand a chance. We’re not going to outsmart the AIs, nor will we stay in control of them, nor should we.

I am not in any company or influential group, I’m just a forum commentator. But I focus on what would solve alignment, because of short timelines.

The AI that we have right now can perform a task like a literature review much faster than a human. It can brainstorm on any technical topic, just without rigor. Meanwhile there are large numbers of top human researchers experimenting with AI, trying to maximize its contribution to research. To me, that’s a recipe for reaching the fabled “von Neumann” level of intelligence—the ability to brainstorm with rigor, let’s say—the idea being that once you have AI that’s as smart as von Neumann, it really is over. And who’s to say you can’t get that level of performance out of existing models, with the right finetuning? I think all the little experiments by programmers, academic users, and so on, aiming to obtain maximum performance from existing AI, are a distributed form of capabilities research, which collectively are pushing towards that outcome. Zvi just said his median time-to-crazy is 2031; I have trouble seeing how it could take that long.

To stop this (or pause it), you would need political interventions far more dramatic than anyone is currently envisaging, which also manage to be actually effective. So instead I focus on voicing my thoughts about alignment here, because this is a place with readers and contributors from most of the frontier AI companies, so a worthwhile thought has a chance of reaching people who matter to the process.

I strongly support the idea that we need consensus building before looking at specific paths forward—especially since the goal is clearly far more widely shared than the agreement about what strategy should be pursued.

For example, contra Dean Bell’s unfair strawman, this isn’t a back door to insist on centralized AI development, or even necessarily a position that requires binding international law! We didn’t need laws to get the 1975 Asilomar moratorium on recombinant DNA research, or the voluntary email anti-abuse technical standards (SPF/DKIM/DMARC), or the COSPAR guidelines that were embraced globally for planetary protection in space exploration, or press norms like not naming sexual assault victims—just strong consensus and moral suasion. Perhaps that’s not enough here, but it’s a discussion that should take place, and it first requires a clear statement of what the overall goals should be.

This is also why I think the point about lab employees, and making safe pathways for them to speak out, is especially critical; current discussions about whistleblower protections don’t go far enough, and while group commitments (“if N others from my company”) are valuable, private speech on such topics should be even more clearly protected. One reason consensus among lab employees is so hard to get is that there isn’t currently common knowledge within labs about how many people there think the goal is the wrong one, and the labs’ incentives to attract investment are opposed to giving employees options for voice or loyalty instead of exit—which explains why, in general, only former employees have spoken out.

The race only ends with the winner (or the AI they develop) gaining total power over all of humanity. No thanks. B is the only option, difficult as it may be to achieve.

For me the linked site with the statement doesn’t load. And this was also the case when I first tried to access it yesterday. Seems less than ideal.

Some of the implementation choices might be alienating. The day after I signed, I saw “Let’s take our future back from Big Tech.” in the announcement email, and maybe a lot of people who work at large tech companies and are on the fence don’t like that brand of populism.

I am in a part of the A camp which wants us to keep pushing for superintelligence but with more of an overall percentage of funds/resources invested into safety.

I am glad someone said this. This is a no-brainer suggestion and something fundamental and important that “the camps” can agree on.

I’d say I’m closer to Camp B. I get, at least conceptually, how we might arrive at ASI from Eliezer’s earlier writings—but I don’t really know how it would actually be developed in practice. Especially when it comes to the idea that scalability could somehow lead to emergence or self-reference, I just don’t see any solid technical or scientific basis for that kind of qualitative leap yet.

As Douglas Hofstadter suggested in Gödel, Escher, Bach, the essence of human cognition lies in its self-referential nature, the ability to move between levels of thought, and the capacity to choose what counts as figure and what counts as ground in one’s own perception. I’m not even sure AGI—let alone ASI—could ever truly have that.

To put it in Merleau-Ponty’s terms, intelligence requires embodiment; the world is always a world of something; and consciousness is inherently perspectival. I know it sounds a bit old-fashioned to bring phenomenology into such a cutting-edge discussion, but I still think there are hints worth exploring in both Gödelian semiotics and Merleau-Ponty’s phenomenology.

Ultimately, my point is this: just as a body isn’t designed top-down but grown bottom-up, intelligence—at least artificial intelligence as we’re building it—seems more like something engineered than cultivated. That’s why I feel current discussions around ASI still lack concreteness. Maybe that’s something LessWrong could focus on more—going beyond math and economics, and bringing in perspectives from structuralism and biology as well.

And of course, if you’d like to talk more about this, I’d really welcome that.

Shared on the EA Forum, with some commentary on the state of the EA Community (I guess the LessWrong rationality community is somewhat similar?)

Here’s the equivalent poll for LessWrong. And here’s my summary:

“Big picture: the strongest support is for pausing AI now if done globally, but there’s also strong support for making AI progress slow, pausing if disaster, pausing if greatly accelerated progress. There is only moderate support for shutting AI down for decades, and near zero support for pausing if high unemployment, pausing unilaterally, and banning AI agents. There is strong opposition to never building AGI. Of course there could be large selection bias (with only ~30 people voting), but it does appear that the extreme critics saying rationalists want to accelerate AI in order to live forever are incorrect, and also the other extreme critics saying rationalists don’t want any AGI are incorrect. Overall, rationalists seem to prefer a global pause either now or soon.”

Don’t compare to FDA, compare to IAEA.

Seems wrong. The FDA has the task of regulating (bio)chemicals, which can be extremely deadly on mass scales (even with relatively little effort, just by getting an existing pathogen and letting biology do its thing), but which span a wide range of small increments of badness/goodness, and for which it is often fairly hard to tell whether they will be good or bad, because our preferences about chemical outcomes are complex and chemicals have complex interactions. The IAEA, by contrast, has the task of regulating nuclear energy, which can be extremely deadly on mass scales but has a much higher minimum bar to entry, is actually pretty hard to turn into a weapon of mass destruction, and makes a lot of very detectable noise on certain kinds of instruments when someone tries.