Decision Theories: A Less Wrong Primer

Summary: If you’ve been wondering why people keep going on about decision theory on Less Wrong, I wrote you this post as an answer. I explain what decision theories are, show how Causal Decision Theory works and where it seems to give the wrong answers, introduce (very briefly) some candidates for a more advanced decision theory, and touch on the (possible) connection between decision theory and ethics.

What is a decision theory?

This is going to sound silly, but a decision theory is an algorithm for making decisions[1]. The inputs are an agent’s knowledge of the world, and the agent’s goals and values; the output is a particular action (or plan of actions). Actually, in many cases the goals and values are implicit in the algorithm rather than given as input, but it’s worth keeping them distinct in theory.

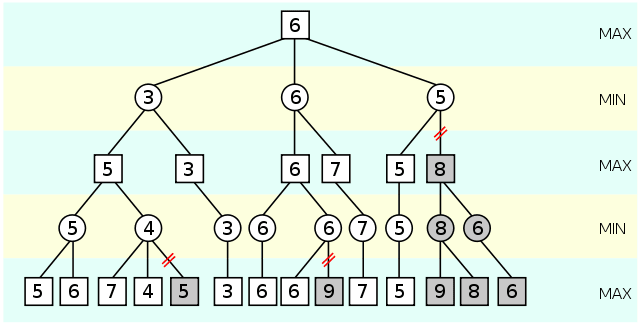

For example, we can think of a chess program as a simple decision theory. If you feed it the current state of the board, it returns a move, which advances the implicit goal of winning. The actual details of the decision theory include things like writing out the tree of possible moves and countermoves, and evaluating which possibilities bring it closer to winning.
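To make the chess example concrete, here is a minimal sketch of the move-tree idea in Python. It assumes a toy two-ply game tree with made-up leaf scores rather than real chess positions; the tree, `minimax`, and `choose_move` are hypothetical illustrations. Real chess programs cut the search off at some depth and use a heuristic evaluation instead of searching to the end of the game.

```python
from typing import Union

# A node is either a leaf score (from our point of view) or a list of child
# nodes, one per legal move from that position.
Tree = Union[int, list]

def minimax(node: Tree, maximizing: bool) -> int:
    """Value of a node, assuming both sides play as well as possible."""
    if isinstance(node, int):
        return node  # leaf: a static evaluation of the position
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)

def choose_move(root: list) -> int:
    """Return the index of our move with the best value once the opponent replies."""
    scores = [minimax(child, maximizing=False) for child in root]
    return max(range(len(root)), key=scores.__getitem__)

# Hypothetical tree: three candidate moves, each with two possible replies.
tree = [[3, 12], [2, 4], [14, 1]]
print(choose_move(tree))  # 0: move 0 guarantees at least 3
```

The goal of winning never appears as an explicit input here; it is implicit in how the leaves are scored and in always taking the maximum.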

Another example is an E. coli bacterium. It has two basic options at every moment: it can use its flagella to swim forward in a straight line, or to change directions by randomly tumbling. It can sense whether the concentration of food or toxin is increasing or decreasing over time, and so it executes a simple algorithm that randomly changes direction more often when things are “getting worse”. This is enough control for bacteria to rapidly seek out food and flee from toxins, without needing any sort of advanced information processing.
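The bacterium’s rule is simple enough to simulate. This is a rough sketch under invented assumptions: a 2D environment where food concentration simply falls off with distance from the origin, and made-up tumble probabilities (0.5 when things are getting worse, 0.05 otherwise). None of these numbers come from real E. coli.

```python
import math
import random

def tumble_probability(getting_worse: bool) -> float:
    """Assumed rule: tumble often when conditions worsen, rarely otherwise."""
    return 0.5 if getting_worse else 0.05

def food_concentration(x: float, y: float) -> float:
    """Hypothetical environment: food is most concentrated at the origin."""
    return -math.hypot(x, y)

def run_and_tumble(steps: int, rng: random.Random) -> float:
    """Simulate one bacterium starting 50 units from the food; return its final distance."""
    x, y = 50.0, 0.0
    heading = rng.uniform(0.0, 2 * math.pi)
    previous = food_concentration(x, y)
    for _ in range(steps):
        # Run: swim one unit forward along the current heading.
        x += math.cos(heading)
        y += math.sin(heading)
        current = food_concentration(x, y)
        # Tumble: with a probability that depends only on whether things got
        # worse, pick a completely random new heading.
        if rng.random() < tumble_probability(current < previous):
            heading = rng.uniform(0.0, 2 * math.pi)
        previous = current
    return math.hypot(x, y)

rng = random.Random(0)  # fixed seed so runs are repeatable
distances = [run_and_tumble(2000, rng) for _ in range(50)]
print(f"mean final distance: {sum(distances) / len(distances):.1f} (started at 50)")
```

The rule never works out which direction the food is in; any drift toward the origin comes entirely from runs lasting longer while things are improving.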

A human being is a much more complicated example which combines some aspects of the two simpler examples; we mentally model consequences in order to make many decisions, and we also follow heuristics that have evolved to work well without explicitly modeling the world.[2] We can’t model anything quite like the complicated way that human beings make decisions, but we can study simple decision theories on simple problems, and the results of this analysis are often more effective than the raw intuitions of human beings (who evolved to succeed in small savannah tribes, not negotiate a nuclear arms race). But the standard model used for this analysis, Causal Decision Theory, has a serious drawback of its own, and the suggested replacements are important for a number of things that Less Wrong readers might care about.

What is Causal Decision Theory?

Causal decision theory (CDT to all the cool kids) is a particular class of decision theories with some appealing properties. It’s straightforward to state, has some nice mathematical features, can be adapted to any utility function, and gives good answers on many problems. We’ll describe how it works in a fairly simple but general setup.

Let X be an agent who shares a world with some other agents (Y1 through Yn). All these agents are going to privately choose actions and then perform them simultaneously, and the actions will have consequences. (For instance, they could be playing a round of Diplomacy.)

We’ll assume that X has goals and values represented by a utility function: for every consequence C, there’s a number U(C) representing just how much X prefers that outcome, and X views equal expected utilities with indifference: a 50% chance of utility 0 and 50% chance of utility 10 is no better or worse than 100% chance of utility 5, for instance. (If these assumptions sound artificial, remember that we’re trying to make this as mathematically simple as we can in order to analyze it. I don’t think it’s as artificial as it seems, but that’s a different topic.)

X wants to maximize its expected utility. If there were no other agents, this would be simple: model the world, estimate how likely each consequence is to happen if it does this action or that, calculate the expected utility of each action, then perform the action that results in the highest expected utility. But if there are other agents around, the outcomes depend on their actions as well as on X’s action, and if X treats that uncertainty like normal uncertainty, then there might be an opportunity for the Ys to exploit X.
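Here is the single-agent procedure from the paragraph above as a short Python sketch. The actions, consequences, probabilities, and utilities are all invented for illustration; the first two actions reproduce the “50% of 0, 50% of 10 versus a sure 5” example, which an expected-utility maximizer scores identically.

```python
from typing import Dict

Distribution = Dict[str, float]  # consequence -> probability

def expected_utility(outcomes: Distribution, utility: Dict[str, float]) -> float:
    """Probability-weighted average utility of the consequences of one action."""
    return sum(p * utility[c] for c, p in outcomes.items())

def best_action(model: Dict[str, Distribution], utility: Dict[str, float]) -> str:
    """Pick the action with the highest expected utility."""
    return max(model, key=lambda action: expected_utility(model[action], utility))

utility = {"nothing": 0.0, "small prize": 5.0, "big prize": 10.0}
model = {
    "gamble": {"nothing": 0.5, "big prize": 0.5},           # EU = 5
    "safe": {"small prize": 1.0},                           # EU = 5
    "bigger gamble": {"nothing": 0.25, "big prize": 0.75},  # EU = 7.5
}
print(best_action(model, utility))  # bigger gamble
```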

This is a Difficult Problem in general; a full discussion would involve Nash equilibria, but even that doesn’t fully settle the matter: there can be more than one equilibrium! Also, X can sometimes treat another agent as predictable (like a fixed outcome or an ordinary random variable) and get away with it.
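As a small illustration of multiple equilibria, the sketch below enumerates the pure-strategy Nash equilibria of a hypothetical two-player coordination game (a “battle of the sexes” with made-up payoffs). It only checks pure strategies; this game also has a mixed equilibrium, which the code does not look for.

```python
from itertools import product
from typing import Dict, List, Tuple

Profile = Tuple[str, str]  # (X's action, Y's action)

def pure_nash_equilibria(payoffs: Dict[Profile, Tuple[float, float]],
                         actions: List[str]) -> List[Profile]:
    """Profiles where neither player can gain by changing only their own action."""
    equilibria = []
    for a, b in product(actions, repeat=2):
        u_x, u_y = payoffs[(a, b)]
        x_is_best = all(payoffs[(alt, b)][0] <= u_x for alt in actions)
        y_is_best = all(payoffs[(a, alt)][1] <= u_y for alt in actions)
        if x_is_best and y_is_best:
            equilibria.append((a, b))
    return equilibria

# Hypothetical game: both want to end up in the same place,
# but X prefers the zoo and Y prefers the museum.
payoffs = {
    ("zoo", "zoo"): (2, 1), ("zoo", "museum"): (0, 0),
    ("museum", "zoo"): (0, 0), ("museum", "museum"): (1, 2),
}
print(pure_nash_equilibria(payoffs, ["zoo", "museum"]))
# [('zoo', 'zoo'), ('museum', 'museum')]
```

Knowing that both profiles are equilibria doesn’t tell X which one Y is aiming for, which is exactly why equilibrium analysis alone doesn’t settle what X should do.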

CDT is a class of decision theories, not a specific decision theory, so it’s impossible to specify with full generality how X will decide if X is a causal decision theorist. But there is one key property that distinguishes CDT from the decision theories we’ll talk about later: a CDT agent assumes that X’s decision is independent of the simultaneous decisions of the Ys; that is, X could decide one way or another and everyone else’s decisions would stay the same.

Therefore, there is at least one case where we can say what a CDT agent will do in a multi-player game: some strategies are dominated by others. For example, if X and Y are both deciding whether to walk to the zoo, and X will be happiest if X and Y both go, but X would still be happier at the zoo than at home even if Y doesn’t come along, then X should go to the zoo regardless of what Y does. (Presuming that X’s utility function is focused on being happy that afternoon.) This criterion is enough to “solve” many problems for a CDT agent, and in zero-sum two-player games the solution can be shown to be optimal for X.
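The dominance check itself is easy to write down. This sketch assumes invented numbers for X’s utilities in the zoo example; all that matters is that going to the zoo beats staying home whichever choice Y makes.

```python
from typing import Dict, List, Tuple

def strictly_dominates(payoff: Dict[Tuple[str, str], float],
                       a: str, b: str, their_actions: List[str]) -> bool:
    """True if action `a` is strictly better than `b` whatever the other player does."""
    return all(payoff[(a, y)] > payoff[(b, y)] for y in their_actions)

# Hypothetical utilities for X, keyed by (X's action, Y's action).
x_utility = {
    ("zoo", "zoo"): 10, ("zoo", "home"): 6,
    ("home", "zoo"): 2, ("home", "home"): 3,
}
print(strictly_dominates(x_utility, "zoo", "home", ["zoo", "home"]))  # True
```

Because the check holds Y’s action fixed in each comparison, it is exactly the independence assumption at work: X never asks whether its own choice would change Y’s.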

What’s the problem with Causal Decision Theory?

There are many simplifications and abstractions involved in CDT, but that assumption of independence turns out to be key. In practice, people put a lot of effort into predicting what other people might decide, sometimes with impressive accuracy, and then base their own decisions on that prediction. This wrecks the independence of decisions, and so it turns out that in a non-zero-sum game, it’s possible to “beat” the outcome that CDT gets.

The classical thought experiment in this context is called Newcomb’s Problem. X meets with a very smart and honest alien, Omega, that has the power to accurately predict what X would do in various hypothetical situations. Omega presents X with two boxes, a clear one containing $1,000 and an opaque one containing either $1,000,000 or nothing. Omega explains that X can either take the opaque box (this is called one-boxing) or both boxes (two-boxing), but there’s a trick: Omega predicted in advance what X would do, and put $1,000,000 into the opaque box only if X was predicted to one-box. (This is a little devious, so take some time to ponder it if you haven’t seen Newcomb’s Problem before, or read here for a fuller explanation.)

If X is a causal decision theorist, the choice is clear: whatever Omega decided, it decided already, and whether the opaque box is full or empty, X is better off taking both. (That is, two-boxing is a dominant strategy over one-boxing.) So X two-boxes, and walks away with $1,000 (since Omega easily predicted that this would happen). Meanwhile, X’s cousin Z (not a CDT agent) decides to one-box, and finds the box full with $1,000,000. So it certainly seems that one could do better than CDT in this case.

But is this a fair problem? After all, we can always come up with problems that trick the rational agent into making the wrong choice, while a dumber agent lucks into the right one. Having a very powerful predictor around might seem artificial, although the problem might look much the same if Omega had a 90% success rate rather than 100%. One reason that this is a fair problem is that the outcome depends only on what action X is simulated to take, not on what process produced the decision.
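A quick calculation shows why the 90% version looks much the same. This sketch assumes Omega is right with probability `accuracy` no matter which way X chooses (a simplifying assumption), and computes each strategy’s expected winnings.

```python
def newcomb_expected_payoff(one_box: bool, accuracy: float) -> float:
    """Expected winnings if Omega predicts the choice correctly with probability `accuracy`."""
    small, big = 1_000, 1_000_000
    if one_box:
        # The opaque box is full exactly when Omega correctly predicted one-boxing.
        return accuracy * big
    # Two-boxers always get the $1,000; the opaque box is full only if Omega erred.
    return small + (1 - accuracy) * big

for accuracy in (1.0, 0.9):
    print(accuracy,
          newcomb_expected_payoff(True, accuracy),   # one-box: about $900,000 at 0.9
          newcomb_expected_payoff(False, accuracy))  # two-box: about $101,000 at 0.9
```

Under this model, one-boxing has the higher expected payoff whenever (2 × accuracy − 1) × $1,000,000 exceeds $1,000, i.e. whenever Omega’s accuracy is above 0.5005.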

Besides, we can see the same behavior in another famous game theory problem: the Prisoner’s Dilemma. X and Y are collaborating on a project, but they have different goals for it, and either one has the opportunity to achieve their goal a little better at the cost of significantly impeding their partner’s goal. (The options are called cooperation and defection.) If they both cooperate, they get a utility of +50 each; if X cooperates and Y defects, then X winds up at +10 but Y gets +70, and vice versa; but if they both defect, then both wind up at +30 each.[3]

If X is a CDT agent, then defecting dominates cooperating as a strategy, so X will always defect in the Prisoner’s Dilemma (as long as there are no further ramifications; the Iterated Prisoner’s Dilemma can be different, because X’s current decision can influence Y’s future decisions). Even if you knowingly pair up X with a copy of itself (with a different goal but the same decision theory), it will defect even though it could prove that the two decisions will be identical.

Meanwhile, its cousin Z also plays the Prisoner’s Dilemma: Z cooperates when it’s facing an agent that has the same decision theory, and defects otherwise. This is a strictly better outcome than X gets. (Z isn’t optimal, though; I’m just showing that you can find a strict improvement on X.)
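The last few paragraphs can be checked with a toy tournament. This sketch uses the payoffs from the text, but the agent representation is a hypothetical simplification: each agent is a decision-theory label plus a move function that sees its partner’s label. Recognizing a “same decision theory” partner by a label stands in for the genuinely hard part, which is verifying that the other agent really decides the same way.

```python
from typing import Callable, Dict, Tuple

# Payoffs from the text: (my move, partner's move) -> my utility.
PAYOFF: Dict[Tuple[str, str], int] = {
    ("C", "C"): 50, ("C", "D"): 10,
    ("D", "C"): 70, ("D", "D"): 30,
}

# Defection strictly dominates cooperation: it is better against either move.
assert all(PAYOFF[("D", m)] > PAYOFF[("C", m)] for m in ("C", "D"))

# Hypothetical agent model: (decision-theory label, move given partner's label).
Agent = Tuple[str, Callable[[str], str]]

cdt_x: Agent = ("CDT", lambda partner_theory: "D")  # always plays the dominant move
z: Agent = ("Z", lambda partner_theory: "C" if partner_theory == "Z" else "D")

def play(a: Agent, b: Agent) -> Tuple[int, int]:
    """One simultaneous round; returns (a's utility, b's utility)."""
    move_a, move_b = a[1](b[0]), b[1](a[0])
    return PAYOFF[(move_a, move_b)], PAYOFF[(move_b, move_a)]

for first, second in [(cdt_x, cdt_x), (z, z), (z, cdt_x)]:
    print(first[0], "vs", second[0], play(first, second))
```

X paired with a copy of itself lands on mutual defection at 30 each, while Z paired with Z gets mutual cooperation at 50 each; against X, Z simply defects and does no worse than X would.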

What decision theories are better than CDT?

It’s worth mentioning Evidential Decision Theory, the “usual” counterpart to CDT; EDT one-boxes on Newcomb’s Problem, but it gives the wrong answer on a classical one-player problem (The Smoking Lesion, which could really use a better framing) which the advanced decision theories handle correctly. It’s also far less amenable to formalization than the others.
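To see how EDT and CDT come apart on the Smoking Lesion, here is a sketch with invented probabilities: a lesion causes both a desire to smoke and cancer, while smoking itself is harmless and enjoyable. The EDT side is a naive version that simply conditions on its own action; as one of the comments below notes, a real EDT agent’s answer depends on how it picks its reference class, so treat this as illustrative only.

```python
# All numbers are hypothetical, chosen only to make the structure visible.
P_LESION = 0.2
P_SMOKE_GIVEN = {True: 0.95, False: 0.2}   # the lesion makes you want to smoke
P_CANCER_GIVEN = {True: 0.9, False: 0.01}  # the lesion (not smoking) causes cancer
U_SMOKE, U_CANCER = 10.0, -100.0           # smoking is enjoyable; cancer is terrible

def p_cancer_edt(smoke: bool) -> float:
    """P(cancer | smoke): treats one's own choice as evidence about the lesion."""
    def joint(lesion: bool) -> float:
        prior = P_LESION if lesion else 1 - P_LESION
        p_s = P_SMOKE_GIVEN[lesion]
        return prior * (p_s if smoke else 1 - p_s)
    total = joint(True) + joint(False)
    return sum(joint(lesion) / total * P_CANCER_GIVEN[lesion] for lesion in (True, False))

def p_cancer_cdt(smoke: bool) -> float:
    """P(cancer | do(smoke)): intervening on smoking leaves the lesion's prior alone,
    so the answer is the same whichever action is chosen."""
    return P_LESION * P_CANCER_GIVEN[True] + (1 - P_LESION) * P_CANCER_GIVEN[False]

def choose(p_cancer) -> str:
    """Expected-utility choice given a way of estimating P(cancer) for each action."""
    def eu(smoke: bool) -> float:
        return (U_SMOKE if smoke else 0.0) + U_CANCER * p_cancer(smoke)
    return "smoke" if eu(True) > eu(False) else "abstain"

print("EDT:", choose(p_cancer_edt))  # EDT: abstain
print("CDT:", choose(p_cancer_cdt))  # CDT: smoke
```

Both agents use the same utilities and the same world model; the only difference is whether “if I smoke” is read as conditioning on smoking or as intervening on it.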

This post is pretty long already, but it’s way too short to outline the advanced decision theories that have been proposed and developed recently by a number of people (including Eliezer, Gary Drescher, Wei Dai, Vladimir Nesov and Vladimir Slepnev). Instead, I’ll list the features that we would want an advanced decision theory to have:

1. The decision theory should be formalizable at least as well as CDT is.

2. The decision theory should give answers that are at least as good as CDT’s answers. In particular, it should always get the right answer in 1-player games, and find or exceed a Nash equilibrium in zero-sum two-player games (when the other player is also willing and able to do so).

3. The decision theory should outperform CDT on the Prisoner’s Dilemma: it should elicit mutual cooperation in the Prisoner’s Dilemma from some agents that CDT elicits mutual defection from, it shouldn’t cooperate when its partner defects, and (arguably) it should defect if its partner would cooperate regardless.

4. The decision theory should one-box on Newcomb’s Problem.

5. The decision theory should be reasonably simple, and not include a bunch of ad-hoc rules. We want to solve problems involving prediction of actions in general, not just the special cases.

There are a couple of candidate decision theories (Timeless Decision Theory, Updateless Decision Theory, and Ambient Decision Theory) which seem to meet these criteria. Interestingly, formalizing any of these tends to deeply involve the mathematics of self-reference (Gödel’s Theorem and Löb’s Theorem) in order to avoid the infinite regress inherent in simulating an agent that’s simulating you.

But for the time being, we can massively oversimplify and outline them. TDT considers your ultimate decision as the cause of both your action and other agents’ valid predictions of your action, and tries to pick the decision that works best under that model. ADT uses a kind of diagonalization to predict the effects of different decisions without having the final decision throw off the prediction. And UDT considers the decision that would be the best policy for all possible versions of you to employ, on average.
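As a very rough caricature of the idea these theories share (not an implementation of TDT, UDT, or ADT, which need the self-referential machinery mentioned above), the sketch below contrasts CDT’s choice with choosing a whole policy whose output is plugged into both Omega’s prediction and X’s action. The function names are hypothetical, and a “policy” here is just a fixed choice.

```python
from typing import Callable

Policy = Callable[[], str]  # returns "one-box" or "two-box"

def newcomb_world(policy: Policy) -> int:
    """Payoff when Omega's prediction and the agent's action come from the same policy."""
    prediction = policy()  # Omega runs (a model of) the agent's decision procedure...
    action = policy()      # ...and then the agent runs it for real.
    opaque = 1_000_000 if prediction == "one-box" else 0
    return opaque + (1_000 if action == "two-box" else 0)

def cdt_choice(opaque_contents: int) -> str:
    """Holding the box contents fixed, taking both boxes is always $1,000 better."""
    return max(["one-box", "two-box"],
               key=lambda a: opaque_contents + (1_000 if a == "two-box" else 0))

def policy_choice() -> str:
    """Pick the policy that does best when plugged into every place it is computed."""
    candidates = {"one-box": lambda: "one-box", "two-box": lambda: "two-box"}
    return max(candidates, key=lambda name: newcomb_world(candidates[name]))

print(cdt_choice(0), cdt_choice(1_000_000), policy_choice())
# two-box two-box one-box
```

The toy version sidesteps the hard part: here the “simulation” of the agent is trivially the same function call, whereas a real agent must reason about a predictor that is reasoning about it.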

Why are advanced decision theories important for Less Wrong?

There are a few reasons. Firstly, there are those who think that advanced decision theories are a natural base on which to build AI. One reason for this is something I briefly mentioned: even CDT allows for the idea that X’s current decisions can affect Y’s future decisions, and self-modification counts as a decision. If X can self-modify, and if X expects to deal with situations where an advanced decision theory would out-perform its current self, then X will rewrite itself to use an advanced decision theory (with some weird caveats: for example, if X started out as CDT, its modification will only care about other agents’ decisions made after X self-modified).

More relevantly to rationalists, the bad choices that CDT makes are often held up as examples of why you shouldn’t try to be rational, or why rationalists can’t cooperate. But instrumental rationality doesn’t need to be synonymous with causal decision theory: if there are other decision theories that do strictly better, we should adopt those rather than CDT! So figuring out advanced decision theories, even if we can’t implement them on real-world problems, helps us see that the ideal of rationality isn’t going to fall flat on its face.

Finally, advanced decision theory could be relevant to morality. If, as many of us suspect, there’s no basis for human morality apart from what goes on in human brains, then why do we feel there’s still a distinction between what-we-want and what-is-right? One answer is that if we feed what-we-want into an advanced decision theory, then just as cooperation emerges in the Prisoner’s Dilemma, many kinds of patterns that we take as basic moral rules emerge as the equilibrium behavior. The idea is developed more substantially in Gary Drescher’s Good and Real, and (before there was a candidate for an advanced decision theory) in Douglas Hofstadter’s concept of superrationality.

It’s still at the speculative stage, because it’s difficult to work out what interactions between agents with advanced decision theories would look like (in particular, we don’t know whether bargaining would end in a fair split or in a Xanatos Gambit Pileup of chicken threats, though we think and hope it’s the former). But it’s at least a promising approach to the slippery question of what ‘right’ could actually mean.

And if you want to understand this on a slightly more technical level… well, I’ve started a sequence.

Next: A Semi-Formal Analysis, Part I (The Problem with Naive Decision Theory)

- ^

Rather confusingly, decision theory is the name for the study of decision theories.

- ^

Both patterns appear in our conscious reasoning as well as our subconscious thinking: we care about consequences we can directly foresee and also about moral rules that don’t seem attached to any particular consequence. However, just as the simple “program” for the bacterium was constructed by evolution, our moral rules are there for evolutionary reasons as well, perhaps even for reasons that have to do with advanced decision theory...

- ^

Eliezer once pointed out that our intuitions on most formulations of the Prisoner’s Dilemma are skewed by our notions of fairness, and a more outlandish example might serve better to illustrate how a genuine PD really feels. For an example where people are notorious for not caring about each other’s goals, let’s consider aesthetics: people who love one form of music often really feel that another popular form is a waste of time. One might feel that if the works of Artist Q suddenly disappeared from the world, it would objectively be a tragedy; while if the same happened to the works of Artist R, then it’s no big deal and R’s fans should be glad to be freed from that dreck.

We can use this aesthetic intolerance to construct a more genuine Prisoner’s Dilemma without inviting aliens or anything like that. Say X is a writer and Y is an illustrator, and they have very different preferences for how a certain scene should come across, so they’ve worked out a compromise. Now, both of them could cooperate and get a scene that both are OK with, or X could secretly change the dialogue in hopes of getting his idea to come across, or Y could draw the scene differently in order to get her idea of the scene across. But if they both “defect” from the compromise, then the scene gets confusing to readers. If both X and Y prefer their own idea to the compromise, prefer the compromise to the muddle, and prefer the muddle to their partner’s idea, then this is a genuine Prisoner’s Dilemma.
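The condition in this footnote is the standard ordering for a one-shot Prisoner’s Dilemma, usually written T > R > P > S (temptation, reward, punishment, and the sucker’s payoff). A tiny sketch, using ordinal ranks for the writer/illustrator story and the numeric payoffs from the main text:

```python
def is_prisoners_dilemma(temptation: float, reward: float,
                         punishment: float, sucker: float) -> bool:
    """Standard ordering for a one-shot Prisoner's Dilemma: T > R > P > S."""
    return temptation > reward > punishment > sucker

# Ordinal ranks (higher is better), the same for X and Y:
# own idea (T) > compromise (R) > muddle (P) > partner's idea (S).
print(is_prisoners_dilemma(temptation=4, reward=3, punishment=2, sucker=1))  # True
# The numeric example from the main text: 70 > 50 > 30 > 10.
print(is_prisoners_dilemma(70, 50, 30, 10))  # True
```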

This is a good post, but it would be super valuable if you could explain the more advanced decision theories and the current problems people are working on as clearly as you explained the basics here.

Yes, they definitely need a non-technical introduction as well (and none of the posts on them seem to serve that purpose). I’ll see if I feel inspired again this weekend.

It’s pretty easy to explain the main innovation in TDT/UDT/ADT: they all differ from EDT/CDT in how they answer “What is it that you’re deciding when you make a decision?” and “What are the consequences of a decision?”, and in roughly the same way. They answer the former by “You’re deciding the logical fact that the program-that-is-you makes a certain output.” and the latter by “The consequences are the logical consequences of that logical fact.” UDT differs from ADT in that UDT uses an unspecified “math intuition module” to form a probability distribution over possible logical consequences, whereas ADT uses logical deduction and only considers consequences that it can prove. (TDT also makes use of Pearl’s theory of causality which I admittedly do not understand.)

There is no need to focus on these concepts. That the fact of decision is “logical” doesn’t usefully characterize it: if we talk about the “physical” fact of making a decision, then everything else remains the same, you’d just need to see what this physical event implies about decisions made by your near-copies elsewhere (among the normal consequences). Likewise, pointing to a physical event doesn’t require conceptualizing a “program” or even an “agent” that computes the state of this event, you could just specify coordinates in spacetime and work on figuring out what’s there (roughly speaking).

(It’s of course convenient to work with abstractly defined structures, in particular decisions generated by programs (rather than abstractly defined in a more general way), and at least with mathematical structuralism in mind working with abstract structures looks like the right way of describing things in general.)

But how does one identify/encode a physical fact? With a logical fact you can say “Program with source code X outputs Y” and then deduce consequences from that. I don’t see what the equivalent is with a “physical” notion of decision. Is the agent supposed to have hard-coded knowledge of the laws of physics and its spacetime coordinates (which would take the place of knowledge of its own source code) and then represent a decision as “the object at coordinate X in the universe with laws Y and initial conditions Z does A”? That seems like a much less elegant and practical solution to me. And you’re still using it as a logical fact, i.e., deducing logical consequences from it, right?

I feel like you must be making a point that I’m not getting...

The same way you find a way home. How does that work? Presumably only if we assume the context of a particular collection of physical worlds (perhaps with selected preferred approximate location). Given that we’re considering only some worlds, additional information that an agent has allows it to find a location within these worlds, without knowing the definition of those worlds.

This I think is an important point, and it comes up frequently for various reasons: to usefully act or reason, an agent doesn’t have to “personally” understand what’s going on, there may be “external” assumptions that enable an agent to act within them without having access to them.

(I’m probably rehashing what is already obvious to all readers, or missing something, but:)

’Course, which Nesov acknowledged with his closing sentence, but even so it’s conceivable, which indicates that the focus on logical facts isn’t a necessary distinction between old and new decision theories. And Nesov’s claim was only that there is no need to focus on logical-ness as such to explain the distinction.

Does comprehensive physical knowledge look any different from decompressed logical knowledge? Logical facts seem to be facts about what remains true no matter where you are, but if you know everything about where you are already then the logical aspect of your knowledge doesn’t need to be acknowledged or represented. More concretely, if you have a detailed physical model of your selves, i.e. all instantiations of the program that is you, across all possible quantum branches, and you know that all of them like to eat cake, then there’s no additional information hidden in the logical fact “program X, i.e. me, likes to eat cake”. You can represent the knowledge either way, at least theoretically, which I think is Nesov’s point, maybe?

But this only seems true about physically instantiated agents reasoning about decisions from a first person perspective so to speak, so I’m confused; there doesn’t seem to be a corresponding “physical” way to model how purely mathematical objects can ambiently control other purely mathematical objects. Is it assumed that such mathematical objects can only have causal influence by way of their showing up in a physically instantiated decision calculus somewhere (at least for our purposes)? Or is the ability of new decision theories to reason about purely mathematical objects considered relatively tangential to the decision theories’ defining features (even if it is a real advantage)?

This post isn’t really correct about what distinguishes CDT from EDT or TDT. The distinction has nothing to do with the presence of other agents and can be seen in the absence of such (e.g. Smoking Lesion). Indeed, neither decision theory contains a notion of “other agents”; both simply regard things that we might classify as “other agents” as features of the environment.

Fundamentally, the following paragraph is wrong:

The difference between these theories is actually in how they interpret the idea of “how likely each consequence is to happen if it does this action or that”; hence they differ even in that “simple” case.

(Note: I’m only considering CDT, EDT, and TDT here. I think the others may work by some other mechanism?)

EDT does differ from CDT in that case (hence the Smoking Lesion problem), but EDT is clearly wrong to do so, and I can’t think of any one-player games that CDT gets wrong, or in which CDT disagrees with TDT.

I don’t think this is right in general- I realized overnight that these decision theories are underspecified on how they treat other agents, so instead they should be regarded as classes of decision theories. There are some CDT agents who treat other agents as uncertain features of the environment, and some that treat them as pure unknowns that one has to find a Nash equilibrium for. Both satisfy the requirements of CDT, and they’ll come to different answers sometimes.

(That is, if X doesn’t have a very good ability to predict how Y works, then a “feature of the environment” CDT will treat Y’s action with an ignorance prior which may be very different from any Nash equilibrium for Y, and X’s decision might not be an equilibrium strategy. If X has the ability to predict Y well, and vice versa, then the two should be identical.)

A few minor points:

You mention utility (“can be adapted to any utility function”) before defining what utility is. Also, you make it sound like the concept of utility is specific to CDT rather than being common to all of the decision theories mentioned.

Utility isn’t the same as utilitarianism. There are only certain classes of utility functions that could reasonably be considered “utilitarian”, but decision theories work for any utility function.

What exactly do you mean by a “zero-sum game”? Are we talking about two-player games only? (talking about “the other players” threw me off here)

Thanks! I’ve made some edits.

I think the concept of utility functions is widespread enough that I can get away with it (and I can’t find an aesthetically pleasing way to reorder that section and fix it).

Nowhere in this post am I talking about Benthamist altruistic utilitarianism. I realize the ambiguity of the terms, but again I don’t see a good way to fix it.

Oops, good catch.

Ah sorry—it was the link “but that’s a different topic” that I was talking about, I realize I didn’t make that clear. I was expecting the justification for assigning outcomes a utility would link to something about the Von Neumann-Morgenstern axioms, which I think are less controversial than altruistic utilitarianism. But it’s only a minor point.

Ah. It may be a matter of interpretation, but I view that post and this one as more enlightening on the need for expected-utility calculation, even for non-altruists, than the von Neumann-Morgenstern axioms.

Oooh, between your description of CDT, and the recent post on how experts will defend their theory even against counterfactuals, I finally understand how someone can possibly justify two-boxing in Newcomb’s Problem. As an added bonus, I’m also seeing how being “half rational” can be intensely dangerous, since it leads to things like two-boxing in Newcomb’s Problem :)

Thank you so much for this. I really don’t feel like I understand decision theories very well, so this was helpful. But I’d like to ask a couple of questions that I’ve had for a while that this didn’t really answer.

Why does evidential decision theory necessarily fail the Smoking Lesion Problem? That link is technically Solomon’s problem, not the Smoking Lesion problem, but it’s related. If P(cancer | smoking, lesion) = P(cancer | not smoking, lesion), why is Evidential Decision Theory forbidden from using these probabilities? Evidential decision theory makes a lot of intuitive sense to me and I don’t really see why it’s demonstrably wrong.

It seems to me that TDT can pretty easily fail a version of Newcomb’s problem. (Maybe everyone knows this already and I just haven’t seen it anywhere.) Suppose there is a CDT AI that, over the course of a year, modifies itself to become a TDT agent. Suppose also that this AI is presented with a variation of Newcomb’s problem. The twist is this: Omega’s placement of money into the opaque box is determined not by the decision theory you currently operate by, but by the one you operated by a year ago. As such, Omega will leave $0 in the box. But TDT, as I understand it, acts as though it controls all nodes of the decision-making process simultaneously, which makes it vulnerable to processes that take extended periods of time, during which the agent’s decision theory may well have changed. You can probably see where this is going: the TDT/former CDT one-boxes and finds $0, the inferior option to $1000. This isn’t really a rigorous critique of TDT, since it’s obviously predicated on not being a TDT at a prior point, but it was a question I thought about in the context of a self-modifying CDT AI.

It’s actually tough to predict what EDT would do, since it depends on picking the right reference class for yourself, and we have no idea how to formalize those. But the explanations of why EDT would one-box on Newcomb’s Problem appear isomorphic to explanations of why EDT would forgo smoking in the Smoking Lesion problem, so it appears that a basic implementation would fail one or the other.

That shouldn’t happen. If TDT knows that the boxes are being filled by simulating a CDT algorithm (even if that algorithm was its ancestor), then it will two-box.
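A minimal sketch of this reply’s point, assuming the agent knows that Omega’s prediction was computed from its year-old CDT self (which two-boxes) and therefore treats that prediction as fixed. It illustrates the reasoning only; it is not a model of TDT itself, and `payoff` and `ancestor_prediction` are hypothetical names.

```python
def payoff(action: str, predicted: str) -> int:
    """Newcomb winnings given the agent's action and Omega's prediction."""
    opaque = 1_000_000 if predicted == "one-box" else 0
    return opaque + (1_000 if action == "two-box" else 0)

# Omega simulated the year-old CDT ancestor, which two-boxes,
# so the opaque box is empty no matter what the current agent does.
ancestor_prediction = "two-box"

# With the prediction's source known, the current choice cannot change it,
# so the agent compares its actions with the prediction held fixed.
best = max(["one-box", "two-box"], key=lambda a: payoff(a, ancestor_prediction))
print(best, payoff(best, ancestor_prediction))  # two-box 1000
```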

I’m guessing what matters is not so much time as the causal dependence of those decisions made by other agents on the physical event of the decision of X to self-modify. So the improved X still won’t care about its influence on future decisions made by other agents for reasons other than X having self-modified. For example, take the (future) decisions of other agents that are space-like separated from X’s self-modification.

Even more strangely, I’m guessing agents that took a snapshot of X just before self-modification, able to predict its future actions, will be treated by X differently depending on whether they respond to observations of the physical events caused by the behavior of improved X, or to the (identical) inferences made based on the earlier snapshot.

Correct, of course. I sacrificed a little accuracy for the sake of being easier for a novice to read; is there a sentence that would optimize both?

Do you understand this effect well enough to rule its statement “obviously correct”? I’m not that sure it’s true, it’s something built out of an intuitive model of “what CDT cares about”, not technical understanding, so I would be interested in an explanation that is easier for me to read… (See another example in the updated version of grandparent.)

Right, we don’t really understand it yet, but on an informal level it appears valid. I think it’s worth mentioning at a basic level, though it deserves a fuller discussion. Good example.

It might also help to consider examples in which “cooperation” doesn’t give warm fuzzy feelings and “defection” the opposite. Businessmen forming a cartel are also in a PD situation. Do we want businessmen to gang up against their customers?

This may be culturally specific, though. It’s interesting that in the standard PD, we’re supposed to be rooting for the prisoners to go free. Is that how it’s viewed in other countries?

I think in the PD we are rooting against the outcome where the worse prisoner goes free and the more honourable one sits in jail.

It probably depends on how you frame the game: are businessmen the only players, or are their customers players too? In this model, only players have utilons; there are no utilons for the “environment”. Or perhaps the well-being of the general population could also be a part of a businessman’s utility function.

If customers are not players, and their well-being does not affect businessmen’s utility function (in other words, we talk about psychopathic businessmen), then it is a classical PD.

But it is good to note that in reality “warm fuzzy feelings” are part of the human utility function, whether the model acknowledges it or not, so some of our intuitions may be wrong.

Thanks for writing this. I would object to calling a decision theory an “algorithm”, though, since it doesn’t actually specify how to make the computation, and in practice the implied computations from most decision theories are completely infeasible (for instance, the chess decision theory requires a full search of the game tree).

Of course, it would be much more satisfying and useful if decision theories actually were algorithms, and I would be very interested to see any that achieve this or move in that direction.

This reasoning strikes me as somewhat odd. Even if it turned out that these patterns don’t emerge at all, we would still distinguish “what-we-want” from “what-is-right”.

True. The speculation is that what-we-want, when processed through advanced decision theory, comes out as a good match for our intuitions on what-is-right, and this would serve as a legitimate reductionistic grounding of metaethics. If it turned out not to match, we’d have to look for other ways to ground metaethics.

Or perhaps we’d have to stop taking our intuitions on what-is-right at face value.

Or that, yes.

I wish you’d stop saying “advanced decision theory”, as it’s way too infantile currently to be called “advanced”...

I want a term to distinguish the decision theories (TDT, UDT, ADT) that pass the conditions 1-5 above. I’m open to suggestions.

Actually, hang on, I’ll make a quick Discussion post.

Does anyone have a decent idea of the differences between UDT, TDT and ADT? (Not in terms of the concepts they’re based on; in terms of problems to which they give different answers.)

There are a couple of things I find odd about this. First, it seems to be taken for granted that one-boxing is obviously better than two-boxing, but I’m not sure that’s right. J.M. Joyce has an argument (in his book The Foundations of Causal Decision Theory) that is supposed to convince you that two-boxing is the right solution. Importantly, he accepts that you might still wish you weren’t a CDT agent (so that Omega would have predicted you would one-box). But, he says, once the boxes are in front of you, you should two-box whether you are a CDT or an EDT agent! The dominance reasoning works in either case, once the prediction has been made and the boxes are in front of you.

But this leads me to my second point. I’m not sure how much Newcomb’s problem reveals a flaw in a decision theory, given that it relies on the intervention of an alien that can accurately predict what you will do. Let’s leave aside the general problem of predicting real agents’ actions with that degree of accuracy. If you know that the prediction of your choice affects the success of your choices, I think that reflexivity or self-reference simply makes the prediction meaningless. We’re all used to self-reference being tricky, and I think in this case it just undermines the whole setup. That is, I don’t see the force of the objection from Newcomb’s problem, because I don’t think it’s a problem we could ever possibly face.

Here’s an example of a related kind of “reflexivity makes prediction meaningless”. Let’s say Omega bets you $100 that she can predict what you will eat for breakfast. Once you accept this bet, you now try to think of something that you would never otherwise think to eat for breakfast, in order to win the bet. The fact that your actions and the prediction of your actions have been connected in this way by the bet makes your actions unpredictable.

Going on to the prisoner’s dilemma. Again, I don’t think that it’s the job of decision theory to get “the right” result in PD. Again, the dominance reasoning seems impeccable to me. In fact, I’m tempted to say that I would want any future advanced decision theory to satisfy some form of this dominance principle: it’s crazy to ever choose an act that is guaranteed to be worse. All you need to do to “fix” PD is to have the agent attach enough weight to the welfare of others. That’s not a modification of the decision theory, that’s a modification of the utility function.

I generally share your reservations.

But as I understand it, proponents of alternative DTs are talking about a conditional PD where you know you face an opponent executing a particular DT. The fancy-DT-users all defect on PD when the prior of their PD-partner being on CDT or similar is high enough, right?

Wouldn’t you like to be the type of agent who cooperates with near-copies of yourself? Wouldn’t you like to be the type of agent who one-boxes? The trick is to satisfy this desire without using a bunch of stupid special-case rules, and show that it doesn’t lead to poor decisions elsewhere.

(Yes, you are correct!)

Yes, but it would be strictly better (for me) to be the kind of agent who defects against near-copies of myself when they co-operate in one-shot games. It would be better to be the kind of agent who is predicted to one-box, but then two-box once the money has been put in the opaque box.

But the point is really that I don’t see it as the job of an alternative decision theory to get “the right” answers to these sorts of questions.

The larger point makes sense. Those two things you prefer are impossible according to the rules, though.

They’re not necessarily impossible. If you have genuine reason to believe you can outsmart Omega, or that you can outsmart the near-copy of yourself in PD, then you should two-box or defect.

But if the only information you have is that you’re playing against a near-copy of yourself in PD, then cooperating is probably the smart thing to do. I understand this kind of thing is still being figured out.

According to what rules? And anyway I have preferences for all kinds of impossible things. For example, I prefer cooperating with copies of myself, even though I know it would never happen, since we’d both accept the dominance reasoning and defect.

I think he meant according to the rules of the thought experiments. In Newcomb’s problem, Omega predicts what you do. Whatever you choose to do, that’s what Omega predicted you would choose to do. You cannot choose to do something that Omega wouldn’t predict; it’s impossible. There is no such thing as “the kind of agent who is predicted to one-box, but then two-box once the money has been put in the opaque box”.

Elsewhere on this comment thread I’ve discussed why I think those “rules” are not interesting. Basically, because they’re impossible to implement.

Right. The rules of the respective thought experiments. Similarly, if you’re the sort to defect against near copies of yourself in one-shot PD, then so is your near copy. (edit: I see now that scmbradley already wrote about that; sorry for the redundancy.)

Your actions have been determined in part by the bet that Omega has made with you; I do not see how that is supposed to make them unpredictable any more than adding any other variable would. Remember: you only appear to have free will from within the algorithm. You may decide to think of something you’d never otherwise think about, but Omega is advanced enough to model you down to the most basic level; it can predict your more complex behaviours based upon the combination of far simpler rules. You cannot necessarily just decide to think of something random, which is what being unpredictable would require.

Similarly, the whole question of whether you should choose to two-box or one-box is a bit iffy. Strictly speaking there’s no SHOULD about it. You will one-box or you will two-box. The question phrased as a should question, as a choice, is meaningless unless you’re treating choice as a high-level abstraction of lower-level rules; and if you do that, then the difficulty disappears, just as you don’t ask a rock whether it should or shouldn’t crush someone when it falls down a hill.

Meaningfully, we might ask whether it is preferable to be the type of person who two boxes or the type of person who one boxes. As it turns out it seems to be more preferable to one-box and make stinking great piles of dosh. And as it turns out I’m the sort of person who, holding a desire for filthy lucre, will do so.

It’s really difficult to sidestep your intuitions, in particular your illusion that you actually get a free choice here. And I think the phrasing of the problem and its answers have a lot to do with that. If you think that people get a choice, while the mechanism of Omega’s prediction hinges on you being strongly determined, then the question just ceases to make sense. You’ve got to jettison one of the two: either Omega’s prediction ability or your ability to make a choice in the sense conventionally meant.

No. What is preferable is to be the kind of person Omega will predict will one-box, and then actually two-box. As long as you “trick” Omega, you get strictly more money. But I guess your point is you can’t trick Omega this way.

Which brings me back to whether Omega is feasible. I just don’t share the intuition that Omega is capable of the sort of predictive capacity required of it.

Well, I guess my response to that would be that it’s a thought experiment. Omega’s really just an extreme—hypothetical—case of a powerful predictor, that makes problems in CDT more easily seen by amplifying them. If we were to talk about the prisoner’s dilemma, we could easily have roughly the same underlying discussion.

See mine and orthonormal’s comments on the PD on this post for my view of that.

The point I’m struggling to express is that I don’t think we should worry about the thought experiment, because I have the feeling that Omega is somehow impossible. The suggestion is that Newcomb’s problem makes a problem with CDT clearer. But I argue that Newcomb’s problem makes the problem. The flaw is not with the decision theory, but with the concept of such a predictor. So you can’t use CDT’s “failure” in this circumstance as evidence that CDT is wrong.

Here’s a related point: Omega will never put the money in the box. Suppose Smith acts like a one-boxer. Omega predicts that Smith will one-box, so the million is put in the opaque box. Now Omega reasons as follows: “Wait, though. Even if Smith is a one-boxer, now that I’ve fixed what will be in the boxes, Smith is better off two-boxing. Smith is smart enough to realise that two-boxing is dominant once I can’t causally affect the contents of the boxes.” So Omega doesn’t put the money in the box.

Would one-boxing ever be advantageous if Omega were reasoning like that? No. The point is Omega will always reason that two-boxing dominates once the contents are fixed. There seems to be something unstable about Omega’s reasoning. I think this is related to why I feel Omega is impossible. (Though I’m not sure how the points interact exactly.)

By that logic, you can never win in Kavka’s toxin/Parfit’s hitchhiker scenario.

So I agree. It’s lucky I’ve never met a game theorist in the desert.

Less flippantly: the logic is pretty much the same, yes. But I don’t see that as a problem for the point I’m making, which is that the perfect predictor isn’t a thought experiment we should worry about.

That line of reasoning is available to Smith as well, though, so he can choose to one-box because he knows that Omega is a perfect predictor. You’re right to say that the interplay between Omega’s prediction of Smith and Smith’s prediction of Omega is in a meta-stable state, BUT: Smith has to decide. He is going to make a decision, so whatever algorithm he implements, if it ever goes down this line of meta-stable reasoning, must have a way to get out and choose something, even if it’s just bounded computational power (or the limit step of computation in Hamkins’s infinite time Turing machines). And since Omega is a perfect predictor, it will know that and choose accordingly. I have the feeling that Omega’s existence is something like an axiom: you can refuse or accept it, and both stances are coherent.

Well, I can implement Omega by scanning your brain and simulating you. The other ‘non-implementations’ of Omega, though, are IMO best ignored entirely. You can’t really blame a decision theory for failing if there’s no sensible model of the world for it to use.

My decision theory, personally, allows me to ignore the unknown and edit my expected utility formula in an ad hoc way if I’m sufficiently convinced that Omega will work as described. I think that’s practically useful, because effective heuristics often have to be invented on the spot without a sufficient model of the world.

edit: although, if I were convinced that Omega works as described, I’d be convinced that it has scanned my brain and is emulating my decision procedure, or is using time travel, or is deciding randomly and then destroying the universes where it was wrong… With more time I could probably come up with other implementations; the common thing about all of them, though, is that I should one-box.

Provided my brain’s choice isn’t affected by quantum noise, otherwise I don’t think you can. :-)

People with memory problems tend to repeat “spontaneous” interactions in essentially the same way, which is evidence that quantum noise doesn’t usually sway choices.

Good point. Still, the brain’s choice can be quite deterministic, if you give it enough thought—averaging out noise.

Presented with this scenario, I’d come up with a scheme describing a table of as many different options as I could manage—ideally a very large number, but the combinatorics would probably get unwieldy after a while—and pull numbers from http://www.fourmilab.ch/hotbits/ to make a selection. I might still lose, but knowing (to some small p-value) that it’s possible to predict radioactive decay would easily be worth $100.

Of course, that’s the smartassed answer.

Well the smartarse response is that Omega’s just plugged himself in on the other end of your hotbits request =p

It’s not always cooperating; that would be dumb. The claim is that there can be improvements on what a CDT algorithm can achieve: TDT or UDT still defects against an opponent that always defects or always cooperates, but achieves (C,C) in some situations where CDT gets (D,D). The dominance reasoning is only impeccable if agents’ decisions really are independent, just like certain theorems in probability only hold when the random variables are independent. (And yes, this is a precisely analogous meaning of “independent”.)
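To make the independence point concrete, here is a small Python sketch. The payoff numbers (T=5, R=3, P=1, S=0) and the model of a near-copy that "makes the same move as me with probability p_same" are illustrative assumptions, not anything from the thread, and the sketch only compares expected values; it is not an implementation of TDT or UDT.

```python
# Hypothetical PD payoffs for the row player (T > R > P > S).
PAYOFF = {
    ("C", "C"): 3,  # R: mutual cooperation
    ("C", "D"): 0,  # S: sucker's payoff
    ("D", "C"): 5,  # T: temptation to defect
    ("D", "D"): 1,  # P: mutual defection
}

def ev_independent(my_move, p_opp_coop):
    """Expected payoff when the opponent's move is independent of mine."""
    return (p_opp_coop * PAYOFF[(my_move, "C")]
            + (1 - p_opp_coop) * PAYOFF[(my_move, "D")])

def ev_correlated(my_move, p_same):
    """Expected payoff against a near-copy that makes the same move as me
    with probability p_same and the opposite move otherwise."""
    other = "D" if my_move == "C" else "C"
    return (p_same * PAYOFF[(my_move, my_move)]
            + (1 - p_same) * PAYOFF[(my_move, other)])

# Under independence, D beats C whatever I believe about the opponent.
for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    assert ev_independent("D", p) > ev_independent("C", p)

# Against a sufficiently faithful copy, C beats D.
print(ev_correlated("C", 0.9), ev_correlated("D", 0.9))
```

With these made-up payoffs, cooperating against the copy starts to pay once p_same exceeds 5/7; below that, defection wins even with correlation.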

Aha. So when agents’ actions are probabilistically independent, only then does the dominance reasoning kick in?

So the causal decision theorist will say that the dominance reasoning is applicable whenever the agents’ actions are causally independent. So do these other decision theories deny this? That is, do they claim that the dominance reasoning can be unsound even when my choice doesn’t causally impact the choice of the other?

That’s one valid way of looking at the distinction.

CDT allows the causal link from its current move in chess to its opponent’s next move, so it doesn’t view the two as independent.

In Newcomb’s Problem, traditional CDT doesn’t allow a causal link from its decision now to Omega’s action before, so it applies the independence assumption to conclude that two-boxing is the dominant strategy. Ditto with playing PD against its clone.

(Come to think of it, it’s basically a Markov chain formalism.)

So these alternative decision theories have relations of dependence going back in time? Are they a sort of counterfactual dependence, like “If I were to one-box, Omega would have put the million in the box”? That just sounds like the Evidentialist “news value” account. So it must be some other kind of relation of dependence going backwards in time that rules out the dominance reasoning. I guess I need “Other Decision Theories: A Less Wrong Primer”.

(gah. I wanted to delete this because I decided it was sort of a useless thing to say, but now it’s here in distracting retracted form, being even worse)

And it’s arguably telling that this is the solution evolution found. Humans are actually pretty good at avoiding proper prisoners’ dilemmas, due to our somewhat pro-social utility functions.

Thanks for the recap. It still doesn’t answer my question, though:

This appears to be incorrect if the CDT agent knows that Omega always makes correct predictions.

And this appears to be incorrect in all cases. The right decision depends on the exact nature of the noise. If Omega makes the decision by analyzing the agent’s psychological tests taken in childhood, then the agent should two-box. And if Omega makes a perfect simulation and then adds random noise, the agent should one-box.
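A minimal Python sketch of why the noise model matters. Both models and their probabilities are hypothetical; the "childhood tests" model treats the prediction as fixed before, and unaffected by, the current decision, which is one reading of the comment's example rather than the only possible one. Each model is summarized by P(opaque box is full | the agent's choice).

```python
M, K = 1_000_000, 1_000  # opaque-box prize and transparent-box prize

def expected_payoff(choice, p_full):
    """Expected winnings for `choice`, given P(opaque box is full | choice)."""
    bonus = K if choice == "two" else 0
    return p_full[choice] * M + bonus

def best_choice(p_full):
    """The choice ("one" or "two") with the higher expected payoff."""
    return max(("one", "two"), key=lambda c: expected_payoff(c, p_full))

# Hypothetical model A: a perfect simulation whose prediction is then flipped
# with probability 0.1, so the box contents track what the agent actually does.
noisy_simulation = {"one": 0.9, "two": 0.1}

# Hypothetical model B: the prediction comes from childhood tests, treated as
# fixed regardless of the current decision, so the chance the box is full is
# the same whichever option the agent takes.
childhood_tests = {"one": 0.9, "two": 0.9}

print(best_choice(noisy_simulation))  # one
print(best_choice(childhood_tests))   # two
```

Both models can be described as "90% accurate" in some sense, yet they recommend opposite choices.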

Sorry, could you explain this in more detail?

I think the idea is that even if Omega always predicted two-boxing, it still could be said to predict with 90% accuracy if 10% of the human population happened to be one-boxers. And yet you should two-box in that case. So basically, the non-deterministic version of Newcomb’s problem isn’t specified clearly enough.
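A quick Python check of this example: a predictor that always says "two-box" scores 90% across a population with 10% one-boxers, while being right 0% of the time about one-boxers. Representing each agent only by its actual choice is a simplifying assumption for the sketch.

```python
def accuracy_report(choices, predict):
    """Overall and per-choice accuracy of `predict` over a population.

    choices: what each agent actually does ("one" or "two").
    predict: maps an agent's actual choice to Omega's prediction; a predictor
    that ignores its argument stands in for one that ignores the agent.
    """
    overall = sum(predict(c) == c for c in choices) / len(choices)
    per_choice = {}
    for option in ("one", "two"):
        group = [c for c in choices if c == option]
        per_choice[option] = sum(predict(c) == c for c in group) / len(group)
    return overall, per_choice

def always_two(_choice):
    """A predictor that ignores the agent entirely."""
    return "two"

population = ["one"] * 10 + ["two"] * 90  # 10% one-boxers, as in the comment
overall, per_choice = accuracy_report(population, always_two)
print(overall)     # 0.9
print(per_choice)  # {'one': 0.0, 'two': 1.0}
```

The headline 90% hides the fact that accuracy conditional on one-boxing is zero, which is why the replies below insist on probabilities conditional on your own decision.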

I disagree. To be at all meaningful to the problem, the “90% accuracy” has to mean that, given all the information available to you, you assign a 90% probability to Omega correctly predicting your choice. This is quite different from correctly predicting the choices of 90% of the human population.

I don’t think this works in the example given, where Omega always predicts 2-boxing. We agree that the correct thing to do in that case is to 2-box. And if I’ve decided to 2-box then I can be > 90% confident that Omega will predict my personal actions correctly. But this still shouldn’t make me 1-box.

I’ve commented on Newcomb in previous threads… in my view it really does matter how Omega makes its predictions, and whether they are perfectly reliable or just very reliable.

Agreed for that case, but perfect reliability still isn’t necessary (consider, for example, an Omega that is 99.99% accurate when 10% of people are one-boxers).

What matters is that your uncertainty in Omega’s prediction is tied to your uncertainty in your actions. If you’re 90% confident that Omega gets it right conditional on deciding to one-box, and 90% confident that Omega gets it right conditional on deciding to two-box, then you should one-box (0.9 × $1M = $900K > $1K + 0.1 × $1M = $101K).
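The same arithmetic in general form, as a short derivation. The notation is mine: p_1 is P(Omega predicted one-box | I one-box), p_2 is P(Omega predicted two-box | I two-box), M = $1,000,000 and K = $1,000. Setting p_1 = p_2 = p is an added simplifying assumption.

```latex
\begin{aligned}
\mathbb{E}[\text{one-box}] &= p_1 M \\
\mathbb{E}[\text{two-box}] &= (1 - p_2)\,M + K \\
\text{one-box} \iff p_1 M &> (1 - p_2)\,M + K \\
\text{with } p_1 = p_2 = p:\quad p &> \frac{M + K}{2M} = \frac{1{,}001{,}000}{2{,}000{,}000} = 0.5005 \\
p = 0.9:\quad 900{,}000 &> 101{,}000
\end{aligned}
```

On this reading, any conditional accuracy meaningfully above 50% is enough to favour one-boxing.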

Far better explanation than mine, thanks!

Good point. I don’t think this is worth going into within this post, but I introduced a weasel word to signify that the circumstances of a 90% Predictor do matter.

Very nice, thanks!

Oh. That’s very nice, thanks!

Hmm, I’m not sure this is an adequate formalization, but:

Let’s assume there is an evolved population of agents. Each agent has an internal parameter p, 0 <= p <= 1, and implements a decision procedure p*CDT + (1-p)*EDT. That is, given a problem, the agent tosses a pseudorandom p-biased coin and decides according to either CDT or EDT, depending on the result of the toss.

Assume further that there is a test set of a hundred binary decision problems, and Omega knows the test results for every agent, and does not know anything else about them. Then Omega can estimate

P(agent’s p = q | test results)

and predict “two box” if the maximum likelihood estimate of p (the probability of following CDT) is >1/2 and “one box” otherwise. [Here I assume for the sake of argument that CDT always two-boxes.]

Given the right distribution of p values in the population, Omega can be made to predict with any given accuracy. Yet there appears to be no reason to one-box...
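A rough Python simulation of this setup, under my own simplifying assumptions: every one of the hundred test problems is one where CDT and EDT disagree, so the test results reduce to how often the agent sided with CDT; EDT is taken to one-box; and since p is the probability of following CDT, Omega predicts two-boxing when its maximum-likelihood estimate of p exceeds 1/2. The two population mixes are made up for illustration.

```python
import random

def omega_accuracy(ps, n_tests=100, seed=0):
    """Fraction of agents whose Newcomb choice Omega predicts correctly.

    ps: each agent's CDT weight p. On every test problem and on Newcomb's
    problem itself, the agent follows CDT with probability p and EDT otherwise.
    Assumptions: CDT two-boxes, EDT one-boxes, and every test problem is one
    where the two theories disagree.
    """
    rng = random.Random(seed)
    hits = 0
    for p in ps:
        # Omega sees only the test results: how often the agent sided with CDT.
        k = sum(rng.random() < p for _ in range(n_tests))
        p_hat = k / n_tests  # maximum-likelihood estimate of p (binomial)
        prediction = "two" if p_hat > 0.5 else "one"
        # The Newcomb choice is a fresh p-biased toss, independent of the tests given p.
        choice = "two" if rng.random() < p else "one"
        hits += prediction == choice
    return hits / len(ps)

polarized = [0.02] * 500 + [0.98] * 500  # agents that mostly stick to one theory
undecided = [0.5] * 1000                 # agents that are pure coin-flippers

print(omega_accuracy(polarized))
print(omega_accuracy(undecided))
```

The polarized population should give an accuracy near 0.98 and the undecided one near 0.5. In both cases, once p is fixed, the Newcomb toss is independent of the test results Omega used, which is the sense in which high accuracy alone gives no reason to one-box in this model.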

Wait, are you deriving the uselessness of UDT from the fact that the population doesn’t contain UDT? That looks circular, unless I’m missing something...

Err, no, I’m not deriving the uselessness of either decision theory here. My point is that only the “pure” Newcomb’s problem—where Omega always predicts correctly and the agent knows it—is well-defined. The “noisy” problem, where Omega is known to sometimes guess wrong, is underspecified. The correct solution (that is whether one-boxing or two-boxing is the utility maximizing move) depends on exactly how and why Omega makes mistakes. Simply saying “probability 0.9 of correct prediction” is insufficient.

But in the “pure” Newcomb’s problem, it seems to me that CDT would actually one-box, reasoning as follows:

Since Omega always predicts correctly, I can assume that it makes its predictions using a full simulation.

Then this situation in which I find myself now (making the decision in Newcomb’s problem) can be either outside or within the simulation. I have no way to know, since it would look the same to me either way.

Therefore I should decide assuming 1⁄2 probability that I am inside Omega’s simulation and 1⁄2 that I am outside.

So I one-box.

Humans are time-inconsistent decision makers. Why would Omega choose to fill the boxes according to a certain point in configuration space rather than some average measure? Most of your life you would have two-boxed, after all. Therefore, if Omega were to predict whether you (as a space-time worm) will take both boxes or not when it meets you at an arbitrary point in configuration space, it might predict that you are going to two-box if you are not going to live much longer, even though during that remaining period you are going to consistently choose to one-box.

ETA: It doesn’t really matter when a superintelligence will meet you. What matters is for how long a period you adopted which decision procedure, and correspondingly were susceptible to which kind of exploitation. If you only changed your mind about a decision procedure for .01% of your life, it might still be worth acting on that acausally.

If a CDT agent A is told about the problem before Omega makes its prediction and fills the boxes, then A will want to stop being a CDT agent for the duration of the experiment. Maybe that’s what you mean?

No, I mean I think CDT can one-box within the regular Newcomb’s problem situation, if its reasoning capabilities are sufficiently strong. In detail: here and in the thread here.

This might not satisfactorily answer your confusion but: CDT is defined by the fact that it has incorrect causal graphs. If it has correct causal graphs then it’s not CDT. Why bother talking about a “decision theory” that is arbitrarily limited to incorrect causal graphs? Because that’s the decision theory that academic decision theorists like to talk about and treat as default. Why did academic decision theorists never realize that their causal graphs were wrong? No one has a very good model of that, but check out Wei Dai’s related speculation here. Note that if you define causality in a technical Markovian way and use Bayes nets then there is no difference between CDT and TDT.

I used to get annoyed because CDT with a good enough world model should clearly one-box yet people stipulated that it wouldn’t; only later did I realize that it’s mostly a rhetorical thing and no one thinks that if you actually implemented an AGI with “CDT” that it’d be as dumb as academia/LessWrong’s version of CDT.

If I’m wrong about any of the above then someone please correct me as this is relevant to FAI strategy.

No, if you have an agent that is one boxing either it is not a CDT agent or the game it is playing is not Newcomb’s problem. More specifically, in your first link you describe a game that is not Newcomb’s problem and in the second link you describe an agent that does not implement CDT.

It would be a little more helpful, although probably not quite as cool-sounding, if you explained in what way the game is not Newcomb’s in the first link, and the agent not a CDT in the second. AFAIK, the two links describe exactly the same problem and exactly the same agent, and I wrote both comments.

That doesn’t seem to make helping you appealing.

The agent believes that it has a 50% chance of being in an actual Newcomb’s problem and a 50% chance of being in a simulation which will be used to present another agent with a Newcomb’s problem some time in the future.

Orthonormal already explained this in the context.

Yes, I have this problem, working on it. I’m sorry, and thanks for your patience!

Yes, except for s/another agent/itself/. In what way is this not a correct description of a pure Newcomb’s problem from the agent’s point of view? This is my original, still unanswered question.

Note: in the usual formulations of Newcomb’s problem for UDT, the agent knows exactly that—it is called twice, and when it is running it does not know which of the two calls is being evaluated.

I answered his explanation in the context, and he appeared to agree. His other objection seems to be based on a mistaken understanding.

This is worth writing into its own post- a CDT agent with a non-self-centered utility function (like a paperclip maximizer) and a certain model of anthropics (in which, if it knows it’s being simulated, it views itself as possibly within the simulation), when faced with a Predictor that predicts by simulating (which is not always the case), one-boxes on Newcomb’s Problem.

This is a novel and surprising result in the academic literature on CDT, not the prediction they expected. But it seems to me that if you violate any of the conditions above, one-boxing collapses back into two-boxing; and furthermore, it won’t cooperate in the Prisoner’s Dilemma against a CDT agent with an orthogonal utility function. That, at least, is inescapable from the independence assumption.

And as I replied there, this depends on its utility function being such that “filling the box for my non-simulated copy” has utility comparable to “taking the extra box when I’m not simulated”. There are utility functions for which this works (e.g. maximizing paperclips in the real world) and utility functions for which it doesn’t (e.g. maximizing hedons in my personal future, whether I’m being simulated or not), and Omega can slightly change the problem (simulate an agent with the same decision algorithm as X but a different utility function) in a way that makes CDT two-box again. (That trick wouldn’t stop TDT/UDT/ADT from one-boxing.)
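A minimal Python sketch of the causal expected-value comparison in this exchange: the agent gives probability 1/2 to being Omega's simulation. If it is the simulation, its choice causally fixes the opaque box for the real copy; if it is the real instance, the box is already fixed. The parameter `weight_on_real_copy` is a hypothetical stand-in for how much the agent's utility function counts winnings that go to the non-simulated copy, and winnings inside the simulation are assumed to be worth nothing.

```python
M, K = 1_000_000, 1_000

def cdt_prefers_one_box(p_inside=0.5, weight_on_real_copy=1.0):
    """True if one-boxing has the higher causal expected utility.

    Inside the simulation: one-boxing fills the real copy's opaque box,
    worth weight_on_real_copy * M to this agent (simulated winnings count 0).
    Outside: the box is already fixed, so one-boxing just forgoes K.
    """
    gain_if_inside = weight_on_real_copy * M
    gain_if_outside = -K
    delta = p_inside * gain_if_inside + (1 - p_inside) * gain_if_outside
    return delta > 0

print(cdt_prefers_one_box())                         # True: real-world maximizer
print(cdt_prefers_one_box(weight_on_real_copy=0.0))  # False: purely self-centred
```

With p_inside = 1/2 the verdict flips at a weight of K/M = 0.001, so the one-boxing result hinges on the utility function rather than on CDT itself, as the comment says.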

I think you missed my point.

This is irrelevant. The agent is actually outside, thinking what to do in the Newcomb’s problem. But only we know this, the agent itself doesn’t. All the agent knows is that Omega always predicts correctly. Which means, the agent can model Omega as a perfect simulator. The actual method that Omega uses to make predictions does not matter, the world would look the same to the agent, regardless.

Unless Omega predicts without simulating; for instance, this formulation of UDT can be formally proved to one-box without simulating.

Errrr. The agent does not simulate anything in my argument. The agent has a “mental model” of Omega, in which Omega is a perfect simulator. It’s about representation of the problem within the agent’s mind.

In your link, Omega—the function U() - is a perfect simulator. It calls the agent function A() twice, once to get its prediction, and once for the actual decision.

The problem would work just as well if the first call did not go to A directly but instead queried an oracle about whether A()=1. There are ways of predicting that aren’t simulation, and if that’s the case then your idea falls apart.
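For readers who have not seen the formulation under discussion, here is a minimal Python sketch of Newcomb's problem written as a world function U() that calls an agent function A() twice, once for Omega's prediction and once for the decision. The encoding (1 = one-box, 2 = two-box), the payoff constants, and the example agents are assumptions for illustration, not the code from the linked formulation.

```python
M, K = 1_000_000, 1_000

def U(A):
    """Newcomb's problem as a world function that calls the agent twice."""
    prediction = A()  # first call: Omega's prediction (a non-simulating predictor would go here)
    opaque = M if prediction == 1 else 0
    decision = A()    # second call: the actual choice
    return opaque if decision == 1 else opaque + K  # 1 = one-box, 2 = two-box

def one_boxer():
    return 1

def two_boxer():
    return 2

print(U(one_boxer))  # 1000000
print(U(two_boxer))  # 1000
```

Because the agent is a deterministic function, the "prediction" is always correct here; the objection above is that the first line could equally be an oracle query that never runs A at all.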

I can’t be alone in thinking this; where does one acquire the probability values to make any of this theory useful? Past data? Can it be used on an individual or only organizational level?

Many people on Less Wrong see Judea Pearl’s work as the right approach to formalizing causality and getting the right conditional probabilities. But if it was simple to formally specify the right causal model of the world given sensory information, we’d probably have AGI already.

Sitting and figuring out how exactly causality works is the kind of thing we want the AGI to be able to do on its own. We don’t seem to be born with any advanced expectations of the world, such as a notion of causality; we even have to learn to see. Causality took a while to invent, and a great many people have superstitions which are poorly compatible with it; yet even my cats seem to have learnt some understanding of causality in their lives.

You have to start somewhere, though.

We seem to start with very little.

edit: I mean, I’d say we definitely invent causality. It’s implausible that we evolved an implementation of the equivalent of Pearl’s work, and even if we did, that is once again a case of an intelligent-ish process (evolution) figuring it out.

I’d say that our intuitive grasp of causality relates to theoretical work like Pearl’s in the same way that our subconscious heuristics of vision relate to the theory of computer vision.

That is to say, we have evolved heuristics that we’re not usually conscious of, and which function very well and cheaply on the circumstances our ancestors frequently encountered, but we currently don’t understand the details well enough to program something of equivalent power.

Except a lot of our vision heuristics seem to be produced not by evolution, but by the process that happens in the first years of life. The same goes for virtually all aspects of brain organization, given the ratio between the brain’s complexity and the DNA’s complexity.

Steven Pinker covers this topic well. I highly recommend How The Mind Works; The Blank Slate may be more relevant, but I haven’t read it yet.

Essentially, the human brain isn’t a general-purpose learner, but one with strong heuristics (like natural grammar and all kinds of particular visual pattern-seeking) that are meta enough to encompass a wide variety of human languages and visual surroundings. The development of the human brain responds to the environment not because it’s a ghost of perfect emptiness but because it has a very particular architecture already, which is adapted to the range of possible experience. The visual cortex has special structure before the eyes ever open.

Honestly, the guys who never wrote any vision algorithm should just stop trying to think about the subject too hard; they aren’t getting anywhere sane with intuitions that are many orders of magnitude off. That goes for much of the talk about the evolution of cognition out there. We know evolution can produce ‘complex’ stuff; we’ve got that hammer, and we use it on every nail, even when the nail is in fact a giant screw which is way bigger than the hammer itself and which ain’t going to even move if hit with the hammer (firstly, it is big, and secondly, it needs an entirely different motion), yet one who can’t see the size of the screw can insist it would. Some adjustments for near vs far connectivity, perhaps even somewhat specific layering, but that’s all evolution is going to give you in the relevant timeframe for brains bigger than peanuts. That’s just the way things are, folks.

People with early brain damage have other parts of brain take over, and perform nearly as well, suggesting that the networks processing the visual input have only minor optimizations for visual task compared to the rest, i.e. precisely the near-vs-far connectivity tweaks, more/less neurons per cortical column, that kind of stuff which makes it somewhat more efficient at the task.

edit: here’s the taster: mammals never evolved an extra pair of limbs, extra eyes, or anything of that sort. But in the world of the evolutionary psychologists and evolutionary ‘cognitive scientists’, mammals should evolve an extra eye or an extra pair of limbs, along with much more complex multi-step adaptations, every couple of million years. This is outright ridiculous. Just look at your legs; look how much slower the fastest human runner is than any comparable animal. You’d need to be running for another ten million years before you were any good at running. And in that time, in which a monkey’s body can barely adapt to locomotion on a flat surface again, the monkey’s brain evolves some complex algorithms for the grammar of human languages? The hunter-gatherer-specific functionality? You’ve got to be kidding me.

All of that evolutionary explaining of complex phenomena via vague handwaving and talk of how beneficial it would’ve been in the ancestral environment will be regarded as utter and complete pseudoscience within 20 to 50 years. It’s not enough to show that something was beneficial. An extra eye on the back, too, would have been beneficial for a great many animals. They are given a free pass to use evolution as magic because brains don’t fossilize. The things that do fossilize, however, provide a good sample of how many generations it takes for evolution to make something.

We’ve drifted way off topic. Brain plasticity is a good point, but it’s not the only piece of evidence available. I promise you that if you check out How the Mind Works, you’ll find unambiguous evidence that the human brain is not a general-purpose learner, but begins with plenty of structure.

If you doubt the existence of universal grammar, you should try The Language Instinct as well.

You can have the last word, but I’m tapping out on this particular topic.

While some linguistic universals definitely exist, and a sufficiently weak version of the language acquisition device idea is pretty much obvious (‘the human brain has the ability to learn human language’), I think Chomsky’s ideas are way too strong. See e.g. Barbara C. Scholz and Geoffrey K. Pullum (2006), ‘Irrational nativist exuberance’.

Re: structure: yes, it is made of cortical columns, and yes, there’s some global wiring; nobody’s been doubting that.

I created a new topic for that. The issue with attributing brain functionality to evolution is the immense difficulty of coding any specific wiring in DNA, especially in mammals. Insects can do it: they go through several generations a year, and their genome controls the brain down to individual neurons. Mammals aren’t structured like this, and they live much too long.

It’s hard to draw a clear line between the two. Certainly much of what evolution produced in this area is the ability to reliably develop suitable heuristics given the expected stimulus, so I more or less agree with you here. If we develop without sight, this area of the brain gets used for entirely different purposes.

Here I must disagree. We are stuck with the high-level organization, and even at somewhat lower levels the other areas of the brain aren’t quite as versatile as the cortex that handles (the high-level aspects of) vision.

I don’t expect the specialization to go beyond adjusting, e.g., the number of local vs. long-range connections, plus basic motion detection left over from a couple hundred million years of evolution as a fish, etc. People expect miracles from evolution along the lines of hard-coding specific, highly nontrivial algorithms, mostly because of very screwy intuitions about how complex anything resembling an algorithm is (compared to the rest of the body). Yes, other parts of the brain are worse at performing this function; no, it isn’t because there are actual algorithms hard-wired there. Insofar as other parts of the brain can perform even remotely close to it, sight is learnable. One particular thing about the from-scratch AI crowd is that it doesn’t like to give the brain much credit where it’s due.

Most algorithms are far, far less complicated than, say, the behaviors that constitute the immune system.

From what I can tell, there are rather a lot of behavioral algorithms that are hard-wired at the lower levels, particularly when it comes to emotional responses and desires. The brain then learns to specialize them to the circumstances it finds itself in. More particularly, any set of (common) behaviors that gives long-term benefits rather than solving immediate problems is almost certainly hard-wired. The brain doesn’t even know what is being optimized, much less how this particular algorithm is supposed to help!

The immune system is way old. Why is it only the complex algorithms we don’t quite understand that we think evolve quickly in mammals, and not obvious things like retinal pigments, the number of eyes, the number of limbs, etc.? Why do we ‘evolve support for language’ in the time during which we barely re-adapt our legs to walking on a flat surface?

The emotional responses and desires are, to some extent, evolved, but the complex mechanisms for calculating which objects to desire have to be created from scratch.

The brain does 100 steps per second: 8,640,000 steps per day, 3,153,600,000 steps per year. Evolution does 1 step per generation. There are very tight bounds on what functionality could evolve in a given timeframe, and there is a lot that the brain can generate in a very short time.
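A quick check of the arithmetic above, assuming (as the comment does) 100 brain ‘steps’ per second and a 365-day year. The 20-year generation time on the evolutionary side is a hypothetical placeholder chosen for illustration, not a figure from the thread.

```python
# Step-count comparison from the comment above.
# Assumptions: 100 brain "steps" per second (the figure used above),
# a 365-day year, and one evolutionary "step" per generation.
BRAIN_STEPS_PER_SECOND = 100
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

steps_per_day = BRAIN_STEPS_PER_SECOND * SECONDS_PER_DAY
steps_per_year = steps_per_day * 365

# Hypothetical: a 20-year generation time over one million years.
GENERATION_YEARS = 20
generations = 1_000_000 // GENERATION_YEARS

print(f"{steps_per_day:,} brain steps per day")    # 8,640,000 brain steps per day
print(f"{steps_per_year:,} brain steps per year")  # 3,153,600,000 brain steps per year
print(f"{generations:,} evolutionary steps per million years")  # 50,000 ...
```

Under these assumptions, a single year of brain operation has tens of thousands of times more ‘steps’ than a million years of evolution has generations.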

Yes. Very old, incredibly powerful and amazingly complex. The complexity of that feature of humans (and diverse relatives) makes the appeal “regarding how complex anything resembling an algorithm is (compared to rest of the body)” incredibly weak. Most algorithms used by the brain, learned or otherwise, are simpler than what the rest of the body does.

The immune system doesn’t have some image-recognition algorithm that looks at projections of proteins and recognizes their shapes. It uses molecular binding. And it evolved over many billions of generations, in much shorter-lived animals, re-using to a huge extent the features that evolved back in single-celled organisms. As far as algorithms go, it consists of just a huge number of if-clauses, chained very few levels deep.

3D object recognition from the 2D images coming from the eyes is, by comparison, an incredibly difficult task.

edit: on the topic of the immune ‘algorithm’ that makes you acquire immunity to foreign chemicals but not to your own:

http://en.wikipedia.org/wiki/Somatic_hypermutation

Randomly edit the proteins until they stick. The random editing is done by somewhat broken replication machinery. Part of your body evolves an immune response when you catch a flu or a cold. The products of that evolution aren’t even passed down; that’s how amazingly complex and well-evolved it is (not).

This matches my understanding.

And here I no longer agree, at least when it comes to the assumption that the aforementioned task is not incredibly difficult.

I added, in an edit, a reference on how the immune system basically operates. You have a population of B cells that evolves to eliminate foreign substances: good ol’ evolution, re-used to evolve part of the B-cell genome inside your body. The results seem very impressive (recognition of substances), but all the heavy lifting is done by very simple and very stupid methods. If anything, our proneness to seasonal colds and flu is a great demonstration of the extreme stupidity of the immune system: a virus only needs to modify some entirely non-functional proteins to have to be recognized afresh. That’s because there is no pattern recognition going on whatsoever, only the incredibly stupid process of B-cell evolution.
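A toy sketch of the ‘mutate and select’ process described above. It is not a model of real B-cell biology: ‘antibodies’ and ‘antigens’ are bit strings, ‘binding’ is just the count of matching positions, and the population size and mutation rate are hypothetical. The point is only that a blind loop of random edits plus selection can find a good binder without any pattern recognition.

```python
import random

def affinity(antibody: list[int], antigen: list[int]) -> int:
    """Toy 'binding strength': number of positions where the two sequences match."""
    return sum(a == b for a, b in zip(antibody, antigen))

def mutate(seq: list[int], rate: float, rng: random.Random) -> list[int]:
    """Flip each bit independently with probability `rate` (blind, undirected editing)."""
    return [1 - bit if rng.random() < rate else bit for bit in seq]

def hypermutation(antigen: list[int], pop_size: int = 20, rate: float = 0.05,
                  max_rounds: int = 1000, seed: int = 0) -> tuple[int, list[int]]:
    """Mutate-and-select until a clone binds perfectly; returns (rounds used, best clone)."""
    rng = random.Random(seed)
    best = [rng.randint(0, 1) for _ in antigen]  # random starting clone
    for round_no in range(1, max_rounds + 1):
        # Make mutant copies of the current best clone, keep the parent as well,
        # and select whichever binds best. Selection sees only the affinity score.
        offspring = [mutate(best, rate, rng) for _ in range(pop_size)]
        best = max(offspring + [best], key=lambda s: affinity(s, antigen))
        if affinity(best, antigen) == len(antigen):
            return round_no, best
    return max_rounds, best

antigen = [random.Random(42).randint(0, 1) for _ in range(32)]
rounds, clone = hypermutation(antigen)
print(rounds, affinity(clone, antigen))
```

The loop never ‘looks at’ the antigen’s structure; it only keeps whichever random variant scores best, which is the sense in which the process is simple rather than clever.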

If I was trying to claim that immune systems were complex in a way that is similar in nature to learned cortical algorithms then I would be thoroughly dissuaded by now.

The immune system is actually a rather good example of what sort of mechanisms you can expect to evolve over many billions of generations, and in what way they can be called ‘complex’.

My original point was that much of evolutionary cognitive science explains far more complex mechanisms (with a lot of hidden complexity; for a very outrageous example, consider preferences for specific details of a mate’s body shape, a task with immense hidden complexity) as having evolved in a thousandth of the number of generations the immune system had, instead of being generated in some way by the operation of the brain. And this in a context where other brain areas are only marginally less effective at the tasks, which suggests not the hard-wiring of algorithms of any kind but minor tweaks to the network’s properties that slightly improve its efficiency once it learns the specific task.

We probably don’t disagree too much on the core issue here, by the way. Compared to an arbitrary but somewhat meaningful reference class, I tend to be far more accepting of the ‘blank slate’ capabilities of the brain. The way it just learns how to build models of reality from visual input is amazing. It’s particularly fascinating to see areas of the brain that are consistent across (nearly) all people turn out not to be hardwired after all, except insofar as they happen to always be connected to the same stuff and usually develop in the same way!

So are eyes.

I liked this post. It would be good if you put it somewhere in the sequences so that people new to LessWrong can find this basic intro earlier.

At the very least it should be the basis for an easily accessible wiki page.

Excellent. And I love your aesthetic intolerance dilemma. That’s a great spin on it; has it appeared anywhere before?

Not that I’ve seen; it just occurred to me today. Thanks!

(I didn’t like it: it’s too abstract and unspecific for an example.)

As it happens, I do know of a real-world case of this kind of problem, where the parties involved chose… defection. From an interview with former anime studio Gainax president Toshio Okada:

This may have been responsible for the much-execrated ‘desert island episodes’ arc in Nadia.

Decision theories need somewhat specific models of the world to operate correctly. In The Smoking Lesion, for example, the lesion has to somehow lead to you smoking. E.g., the lesion could make you follow CDT while the absence of the lesion makes you follow EDT. It’s definitely worse to have CDT if it comes at the expense of having the lesion.

The issue here is selection. If you find that you opt to smoke, your estimated probability of having the lesion goes up, of course, and so you need to be more concerned about cancer: if you can’t check for the lesion, you may have to get chest X-rays more often, which costs money. So there’s that negative consequence of deciding to smoke, except that the decision theory you use need not be concerned with this particular consequence when deciding to smoke, because the decision is itself a consequence of the lesion in the cases where the lesion is predictive of smoking, and is not a consequence of the lesion only in the cases where the lesion is not predictive.

I think the assumption is that your decision theory is fixed, and the lesion has an influence on your utility function via how much you want to smoke (though in a noisy way, so you can’t use it to conclude with certainty whether you have the lesion or not).
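A minimal sketch of the Smoking Lesion setup, under assumptions not stated in the thread: the lesion raises the probability of smoking in the population, the lesion (not smoking) causes cancer, and all probabilities and utilities are hypothetical. ‘Naive EDT’ here conditions on its own action using those population statistics; ‘CDT’ treats the action as an intervention that leaves the lesion probability at its prior.

```python
# Toy Smoking Lesion model. All numbers are hypothetical, chosen only to
# illustrate the structure of the problem.
P_LESION = 0.2
P_SMOKE_GIVEN = {True: 0.9, False: 0.2}    # lesion -> more likely to smoke
P_CANCER_GIVEN = {True: 0.8, False: 0.01}  # lesion -> cancer; smoking has no effect
U_SMOKE = 10.0      # enjoyment of smoking
U_CANCER = -1000.0  # cost of cancer

def p_lesion_given(smoke: bool) -> float:
    """Bayes: P(lesion | smoking decision), treating the decision as evidence."""
    def likelihood(lesion: bool) -> float:
        p = P_SMOKE_GIVEN[lesion]
        return p if smoke else 1 - p
    num = likelihood(True) * P_LESION
    return num / (num + likelihood(False) * (1 - P_LESION))

def expected_utility(smoke: bool, p_lesion: float) -> float:
    p_cancer = p_lesion * P_CANCER_GIVEN[True] + (1 - p_lesion) * P_CANCER_GIVEN[False]
    return (U_SMOKE if smoke else 0.0) + p_cancer * U_CANCER

def edt_value(smoke: bool) -> float:
    # Naive EDT: condition the lesion probability on the action itself.
    return expected_utility(smoke, p_lesion_given(smoke))

def cdt_value(smoke: bool) -> float:
    # CDT: intervening on the action leaves the lesion at its prior.
    return expected_utility(smoke, P_LESION)

for name, value in [("EDT", edt_value), ("CDT", cdt_value)]:
    choice = max([True, False], key=value)
    print(name, "smokes" if choice else "abstains")
# EDT abstains
# CDT smokes
```

With these numbers, naive EDT abstains because deciding to smoke is bad news about the lesion, while CDT smokes because the decision cannot change the lesion.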

That also works.

What would EDT do if it had evidence (possibly obtained from a theory of physics derived from empirical evidence in support of causality) that it is (or must be) the desire to smoke that is correlated with cancer? Shouldn’t it then ‘cancel out’ the effect that the decision’s correlation with cancer has on the decision?

It seems to me that good decision theories can disagree about decisions made with imperfect data and an incomplete model. An evidence-based decision theory should be able to process the evidence for the observed phenomenon of ‘causality’, all the way to the conclusion that the decision won’t affect cancer.

At the same time, if an agent cannot observe evidence for causality and reason about it correctly, that agent is seriously crippled in many ways. Would it even be able to figure out, e.g., Newtonian physics from observation if it can’t figure out causality?

CDT looks like a hack where you hard-code causality into an agent, causality being something that you (mankind) figured out from observation and evidence (and it took a while to figure it out, and to figure out how to apply it). edit: This seems to go for some of the advanced decision theories too. You shouldn’t be working so hard inventing world-specific stuff to hard-code into an agent. The agent should figure it out from the properties of the real world, and perhaps from considering hypothetical examples.

I agree with you. I don’t think that EDT is wrong on the Smoking Lesion. Suppose that, in the world of this problem, you see someone else decide to smoke. What do you conclude from that? Your posterior probability of that person having the lesion goes up over the prior. Now what if that person is you? I think the same logic should apply.

That part is correct, but opting not to smoke for the purpose of avoiding this increase in probability is an error.

An error that an evidence-based decision theory need not make if it can process the evidence that causality works and that it is actually the pre-existing lesion that causes smoking, and can control for the pre-existing lesion when comparing the outcomes of actions. (And if the agent is ignorant of how the world works, then we shouldn’t benchmark it against an agent into which we coded how our world works.)

I still don’t see how it is. If the agent has no other information, all he knows is that if he decides to smoke it is more likely that he has the lesion. His decision itself doesn’t influence whether he has the lesion, of course. But he desires to not have the lesion, and therefore should desire to decide not to smoke.

The way the lesion influences deciding to smoke will be through the utility function or the decision theory. With no other information, the agent can’t trust that his decision will outsmart the lesion.

Ahh, I guess we are talking about the same thing. My point is that, given more information (and drawing more conclusions from it), EDT should smoke. CDT gets around the requirement for more information by cheating: we wrote some of that information implicitly into CDT, thinking CDT was a good idea because we know our world is causal. Whenever EDT can reason that CDT will work better (based on evidence in support of causality, the model of how lesions work, et cetera), EDT will act like CDT. And whenever CDT reasons that EDT will work better, CDT self-modifies into EDT, except that CDT can’t do it on the spot and has to do it in advance. The advanced decision theories try to ‘hardcode’ more of our conclusions about the world into the decision theory. This is very silly.

If you test humans, I think it is pretty clear that humans work like EDT + evidence for causality. Take away evidence for causality, and people can believe that deciding to smoke retroactively introduces the lesion.

edit: ahh, wait, EDT is a pretty naive theory that cannot even process anything as complicated as the evidence that causality works in our universe. Whatever, then: a thoughtless approach leads to thoughtless results, end of story. The correct decision theory should be able to control for a pre-existing lesion when it makes sense to do so.
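A sketch of what ‘controlling for the pre-existing lesion’ could look like, assuming the lesion influences the decision only through a desire the agent can observe in itself (the noisy utility-function version mentioned earlier in the thread). Conditioning on that desire screens off the lesion, so the action adds no further evidence about cancer and the choice comes down to how much the agent enjoys smoking. This resembles what the philosophical literature calls the ‘tickle defense’ of EDT; all numbers are hypothetical.

```python
# Hypothetical numbers. Key structural assumption: the lesion affects the
# action ONLY through a desire (here, the utility of smoking) that the agent observes.
P_LESION = 0.2
P_STRONG_DESIRE_GIVEN = {True: 0.9, False: 0.2}  # lesion -> strong desire more likely
P_CANCER_GIVEN = {True: 0.8, False: 0.01}        # lesion -> cancer; smoking has no effect
U_SMOKE_GIVEN_DESIRE = {True: 10.0, False: -5.0}  # strong desire: enjoys it; weak: dislikes it
U_CANCER = -1000.0

def p_lesion_given_desire(strong: bool) -> float:
    """Bayes: P(lesion | observed strength of desire)."""
    def likelihood(lesion: bool) -> float:
        p = P_STRONG_DESIRE_GIVEN[lesion]
        return p if strong else 1 - p
    num = likelihood(True) * P_LESION
    return num / (num + likelihood(False) * (1 - P_LESION))

def edt_value_given_desire(smoke: bool, strong: bool) -> float:
    # The desire screens off the lesion: P(lesion | desire, action) = P(lesion | desire),
    # so the cancer term is the same whichever action is taken.
    p_lesion = p_lesion_given_desire(strong)
    p_cancer = p_lesion * P_CANCER_GIVEN[True] + (1 - p_lesion) * P_CANCER_GIVEN[False]
    return (U_SMOKE_GIVEN_DESIRE[strong] if smoke else 0.0) + p_cancer * U_CANCER

for strong in (True, False):
    choice = max([True, False], key=lambda s: edt_value_given_desire(s, strong))
    print(f"strong desire={strong}: {'smokes' if choice else 'abstains'}")
# strong desire=True: smokes
# strong desire=False: abstains
```

Here the agent with a weak desire abstains because it does not enjoy smoking, not because abstaining is good news about the lesion; the cancer risk depends on the desire, which is already fixed when the agent decides.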

Can you explain this?

EDT is described as $V(A) = \sum_{j} P(O_j | A) U(O_j)$. If you have knowledge about the mechanisms behind how the lesion causes smoking, that would change $P(A | O_j)$ and therefore also $P(O_j | A)$.
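The ‘therefore’ in the last sentence is Bayes’ rule, assuming the outcomes $O_j$ are mutually exclusive and exhaustive:

$$
P(O_j \mid A) = \frac{P(A \mid O_j)\,P(O_j)}{\sum_{k} P(A \mid O_k)\,P(O_k)}
$$

Any change to the likelihoods $P(A \mid O_k)$ therefore propagates into the conditional probabilities $P(O_j \mid A)$, and from there into $V(A)$.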