An Untrollable Mathematician Illustrated

The following was a presentation I made for Sören Elverlin’s AI Safety Reading Group. I decided to draw everything by hand because PowerPoint is boring. Thanks to Ben Pace for formatting it for LW! See also the IAF post detailing the research on which this presentation is based.

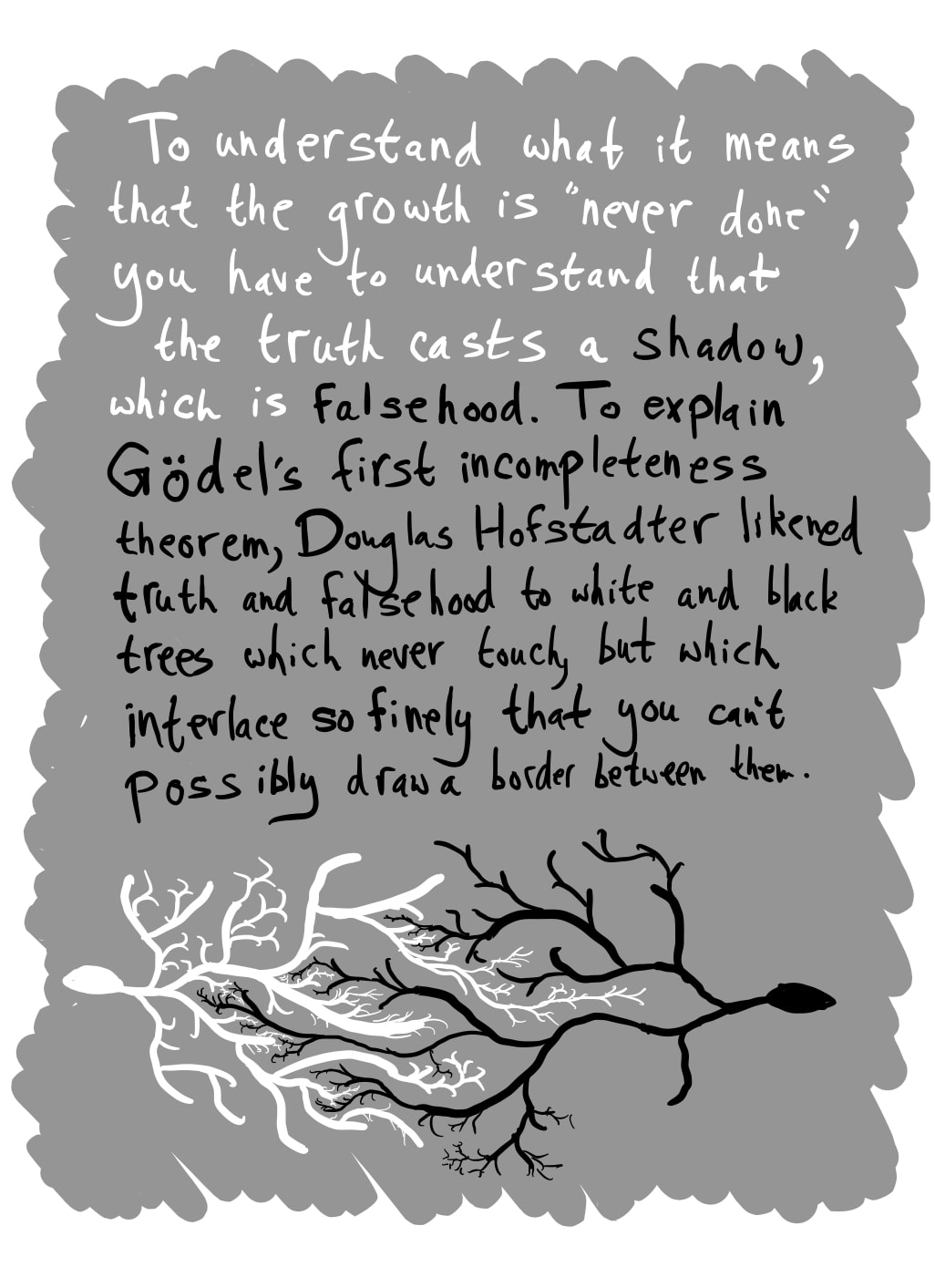

I think this post, together with Abram’s other post “Towards a new technical explanation”, actually convinced me that a Bayesian approach to epistemology can’t work in an embedded context, which was a really big shift for me.

Abram’s writing and illustrations often distill technical insights into accessible, fun adventures. I’ve come to appreciate the importance and value of this expository style more and more over the last year, and this post is what first put me on this track. While more rigorous communication certainly has its place, clearly communicating the key conceptual insights behind a piece of work makes those insights available to the entire community.