Embedded Agency (full-text version)

Suppose you want to build a robot to achieve some real-world goal for you—a goal that requires the robot to learn for itself and figure out a lot of things that you don’t already know.

There’s a complicated engineering problem here. But there’s also a problem of figuring out what it even means to build a learning agent like that. What is it to optimize realistic goals in physical environments? In broad terms, how does it work?

In this post, I’ll point to four ways we don’t currently know how it works, and four areas of active research aimed at figuring it out.

1. Embedded agents

This is Alexei, and Alexei is playing a video game.

Like most games, this game has clear input and output channels. Alexei only observes the game through the computer screen, and only manipulates the game through the controller.

The game can be thought of as a function which takes in a sequence of button presses and outputs a sequence of pixels on the screen.

Alexei is also very smart, and capable of holding the entire video game inside his mind. If Alexei has any uncertainty, it is only over empirical facts like what game he is playing, and not over logical facts like which inputs (for a given deterministic game) will yield which outputs. This means that Alexei must also store inside his mind every possible game he could be playing.

Alexei does not, however, have to think about himself. He is only optimizing the game he is playing, and not optimizing the brain he is using to think about the game. He may still choose actions based on value of information, but this is only to help him rule out possible games he is playing, and not to change the way in which he thinks.

In fact, Alexei can treat himself as an unchanging indivisible atom. Since he doesn’t exist in the environment he’s thinking about, Alexei doesn’t worry about whether he’ll change over time, or about any subroutines he might have to run.

Notice that all the properties I talked about are partially made possible by the fact that Alexei is cleanly separated from the environment that he is optimizing.

This is Emmy. Emmy is playing real life.

Real life is not like a video game. The differences largely come from the fact that Emmy is within the environment that she is trying to optimize.

Alexei sees the universe as a function, and he optimizes by choosing inputs to that function that lead to greater reward than any of the other possible inputs he might choose. Emmy, on the other hand, doesn’t have a function. She just has an environment, and this environment contains her.

Emmy wants to choose the best possible action, but which action Emmy chooses to take is just another fact about the environment. Emmy can reason about the part of the environment that is her decision, but since there’s only one action that Emmy ends up actually taking, it’s not clear what it even means for Emmy to “choose” an action that is better than the rest.

Alexei can poke the universe and see what happens. Emmy is the universe poking itself. In Emmy’s case, how do we formalize the idea of “choosing” at all?

To make matters worse, since Emmy is contained within the environment, Emmy must also be smaller than the environment. This means that Emmy is incapable of storing accurate detailed models of the environment within her mind.

This causes a problem: Bayesian reasoning works by starting with a large collection of possible environments, and as you observe facts that are inconsistent with some of those environments, you rule them out. What does reasoning look like when you’re not even capable of storing a single valid hypothesis for the way the world works? Emmy is going to have to use a different type of reasoning, and make updates that don’t fit into the standard Bayesian framework.

Since Emmy is within the environment that she is manipulating, she is also going to be capable of self-improvement. But how can Emmy be sure that as she learns more and finds more and more ways to improve herself, she only changes herself in ways that are actually helpful? How can she be sure that she won’t modify her original goals in undesirable ways?

Finally, since Emmy is contained within the environment, she can’t treat herself like an atom. She is made out of the same pieces that the rest of the environment is made out of, which is what causes her to be able to think about herself.

In addition to hazards in her external environment, Emmy is going to have to worry about threats coming from within. While optimizing, Emmy might spin up other optimizers as subroutines, either intentionally or unintentionally. These subsystems can cause problems if they get too powerful and are unaligned with Emmy’s goals. Emmy must figure out how to reason without spinning up intelligent subsystems, or otherwise figure out how to keep them weak, contained, or aligned fully with her goals.

1.1. Dualistic agents

Emmy is confusing, so let’s go back to Alexei. Marcus Hutter’s AIXI framework gives a good theoretical model for how agents like Alexei work:

The model has an agent and an environment that interact using actions, observations, and rewards. The agent sends out an action $a$, and then the environment sends out both an observation $o$ and a reward $r$. This process repeats at each time $k \ldots m$.

Each action is a function of all the previous action-observation-reward triples. And each observation and reward is similarly a function of these triples and the immediately preceding action.

You can imagine an agent in this framework that has full knowledge of the environment that it’s interacting with. However, AIXI is used to model optimization under uncertainty about the environment. AIXI has a distribution over all possible computable environments $q$, and chooses actions that lead to a high expected reward under this distribution. Since it also cares about future reward, this may lead to exploring for value of information.
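To make the loop concrete, here is a toy sketch in Python. It is not AIXI: the hypothesis class is a hypothetical set of three hand-written deterministic environments rather than all computable ones, the prior is uniform, and the agent is myopic (it maximizes the next reward only, so it never explores for value of information). All names are made up for illustration.

```python
from fractions import Fraction

ACTIONS = ("left", "right")

# Hypothetical finite hypothesis class: each deterministic environment maps
# an action to an (observation, reward) pair. Names are illustrative only.
ENVIRONMENTS = {
    "left_pays": lambda a: ("bell", 1) if a == "left" else ("buzz", 0),
    "right_pays": lambda a: ("bell", 1) if a == "right" else ("buzz", 0),
    "nothing_pays": lambda a: ("buzz", 0),
}


def expected_reward(posterior, action):
    """Reward of `action`, averaged over the environments still considered possible."""
    return sum(w * ENVIRONMENTS[name](action)[1] for name, w in posterior.items())


def update(posterior, action, percept):
    """Drop every environment that would not have produced `percept`, then renormalize."""
    kept = {n: w for n, w in posterior.items() if ENVIRONMENTS[n](action) == percept}
    total = sum(kept.values())
    return {n: w / total for n, w in kept.items()}


def interact(true_env, steps):
    """The dualistic loop: act, receive (observation, reward), update, repeat."""
    posterior = {name: Fraction(1, len(ENVIRONMENTS)) for name in ENVIRONMENTS}
    actions_taken = []
    for _ in range(steps):
        # Ties are broken in favor of the first action listed in ACTIONS.
        action = max(ACTIONS, key=lambda a: expected_reward(posterior, a))
        percept = ENVIRONMENTS[true_env](action)
        posterior = update(posterior, action, percept)
        actions_taken.append(action)
    return actions_taken, posterior


actions_taken, posterior = interact("right_pays", steps=3)
print(actions_taken, posterior)
```

Notice that the agent sits entirely outside `ENVIRONMENTS`, and that the true environment is one of its hypotheses. Both assumptions are exactly the ones that fail for Emmy.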

Under some assumptions, we can show that AIXI does reasonably well in all computable environments, in spite of its uncertainty. However, while the environments that AIXI is interacting with are computable, AIXI itself is uncomputable. The agent is made out of a different sort of stuff, a more powerful sort of stuff, than the environment.

We will call agents like AIXI and Alexei “dualistic.” They exist outside of their environment, with only set interactions between agent-stuff and environment-stuff. They require the agent to be larger than the environment, and don’t tend to model self-referential reasoning, because the agent is made of different stuff than what the agent reasons about.

AIXI is not alone. These dualistic assumptions show up all over our current best theories of rational agency.

I set up AIXI as a bit of a foil, but AIXI can also be used as inspiration. When I look at AIXI, I feel like I really understand how Alexei works. This is the kind of understanding that I want to also have for Emmy.

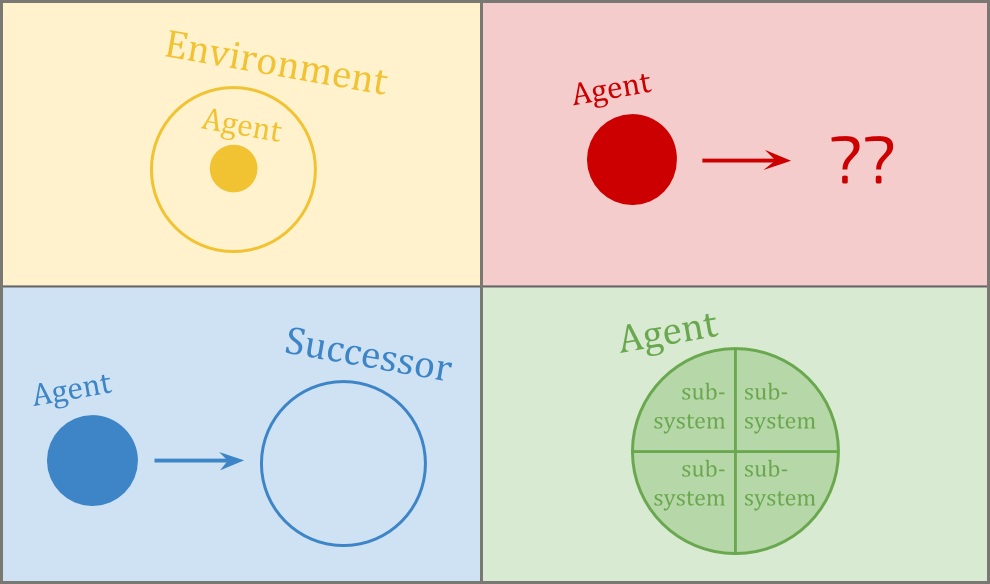

Unfortunately, Emmy is confusing. When I talk about wanting to have a theory of “embedded agency,” I mean I want to be able to understand theoretically how agents like Emmy work. That is, agents that are embedded within their environment and thus:

do not have well-defined i/o channels;

are smaller than their environment;

are able to reason about themselves and self-improve;

and are made of parts similar to the environment.

You shouldn’t think of these four complications as a partition. They are very entangled with each other.

For example, the agent is able to self-improve because it is made of parts. And any time the environment is sufficiently larger than the agent, it might contain other copies of the agent, and thus destroy any well-defined i/o channels.

However, I will use these four complications to inspire a split of the topic of embedded agency into four subproblems. These are: decision theory, embedded world-models, robust delegation, and subsystem alignment.

1.2. Embedded subproblems

Decision theory is all about embedded optimization.

The simplest model of dualistic optimization is $\mathrm{argmax}$. $\mathrm{argmax}$ takes in a function from actions to rewards, and returns the action which leads to the highest reward under this function. Most optimization can be thought of as some variant on this. You have some space; you have a function from this space to some score, like a reward or utility; and you want to choose an input that scores highly under this function.
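As a minimal sketch of this picture (the action names and the `reward` table are hypothetical, chosen only for illustration), dualistic optimization fits in a few lines of Python:

```python
def argmax(actions, reward):
    """Return the action that scores highest under `reward`."""
    return max(actions, key=reward)


# Hypothetical reward function, standing in for a whole environment.
def reward(action):
    return {"take_5": 5, "take_10": 10}[action]


best = argmax(["take_5", "take_10"], reward)
print(best)
```

The thing to notice is the type signature: `reward` is handed to the optimizer from outside, as a function the optimizer can evaluate at any input it likes. Nothing in `reward` refers back to `argmax` itself.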

But we just said that a large part of what it means to be an embedded agent is that you don’t have a functional environment. So now what do we do? Optimization is clearly an important part of agency, but we can’t currently say what it is even in theory without making major type errors.

Some major open problems in decision theory include:

logical counterfactuals: how do you reason about what would happen if you take action B, given that you can prove that you will instead take action A?

environments that include multiple copies of the agent, or trustworthy predictions of the agent.

logical updatelessness, which is about how to combine the very nice but very Bayesian world of Wei Dai’s updateless decision theory, with the much less Bayesian world of logical uncertainty.

Embedded world-models is about how you can make good models of the world that are able to fit within an agent that is much smaller than the world.

This has proven to be very difficult—first, because it means that the true universe is not in your hypothesis space, which ruins a lot of theoretical guarantees; and second, because it means we’re going to have to make non-Bayesian updates as we learn, which also ruins a bunch of theoretical guarantees.

It is also about how to make world-models from the point of view of an observer on the inside, and resulting problems such as anthropics. Some major open problems in embedded world-models include:

logical uncertainty, which is about how to combine the world of logic with the world of probability.

multi-level modeling, which is about how to have multiple models of the same world at different levels of description, and transition nicely between them.

ontological crises, which is what to do when you realize that your model, or even your goal, was specified using a different ontology than the real world.

Robust delegation is all about a special type of principal-agent problem. You have an initial agent that wants to make a more intelligent successor agent to help it optimize its goals. The initial agent has all of the power, because it gets to decide exactly what successor agent to make. But in another sense, the successor agent has all of the power, because it is much, much more intelligent.

From the point of view of the initial agent, the question is about creating a successor that will robustly not use its intelligence against you. From the point of view of the successor agent, the question is about, “How do you robustly learn or respect the goals of something that is stupid, manipulable, and not even using the right ontology?”

There are extra problems coming from the Löbian obstacle making it impossible to consistently trust things that are more powerful than you.

You can think about these problems in the context of an agent that’s just learning over time, or in the context of an agent making a significant self-improvement, or in the context of an agent that’s just trying to make a powerful tool.

The major open problems in robust delegation include:

Vingean reflection, which is about how to reason about and trust agents that are much smarter than you, in spite of the Löbian obstacle to trust.

value learning, which is how the successor agent can learn the goals of the initial agent in spite of that agent’s stupidity and inconsistencies.

corrigibility, which is about how an initial agent can get a successor agent to allow (or even help with) modifications, in spite of an instrumental incentive not to.

Subsystem alignment is about how to be one unified agent that doesn’t have subsystems that are fighting against either you or each other.

When an agent has a goal, like “saving the world,” it might end up spending a large amount of its time thinking about a subgoal, like “making money.” If the agent spins up a sub-agent that is only trying to make money, there are now two agents that have different goals, and this leads to a conflict. The sub-agent might suggest plans that look like they only make money, but actually destroy the world in order to make even more money.

The problem is: you don’t just have to worry about sub-agents that you intentionally spin up. You also have to worry about spinning up sub-agents by accident. Any time you perform a search or an optimization over a sufficiently rich space that’s able to contain agents, you have to worry about the space itself doing optimization. This optimization may not be exactly in line with the optimization the outer system was trying to do, but it will have an instrumental incentive to look like it’s aligned.

A lot of optimization in practice uses this kind of passing the buck. You don’t just find a solution; you find a thing that is able to itself search for a solution.

In theory, I don’t understand how to do optimization at all—other than methods that look like finding a bunch of stuff that I don’t understand, and seeing if it accomplishes my goal. But this is exactly the kind of thing that’s most prone to spinning up adversarial subsystems.

The big open problem in subsystem alignment is about how to have a base-level optimizer that doesn’t spin up adversarial optimizers. You can break this problem up further by considering cases where the resultant optimizers are either intentional or unintentional, and considering restricted subclasses of optimization, like induction.

But remember: decision theory, embedded world-models, robust delegation, and subsystem alignment are not four separate problems. They’re all different subproblems of the same unified concept that is embedded agency.

2. Decision theory

Decision theory and artificial intelligence typically try to compute something resembling

$$\underset{a \,\in\, \mathrm{Actions}}{\mathrm{argmax}} \ f(a).$$

I.e., maximize some function of the action. This tends to assume that we can detangle things enough to see outcomes as a function of actions.

For example, AIXI represents the agent and the environment as separate units which interact over time through clearly defined i/o channels, so that it can then choose actions maximizing reward.

When the agent model is a part of the environment model, it can be significantly less clear how to consider taking alternative actions.

For example, because the agent is smaller than the environment, there can be other copies of the agent, or things very similar to the agent. This leads to contentious decision-theory problems such as the Twin Prisoner’s Dilemma and Newcomb’s problem.

If Emmy Model 1 and Emmy Model 2 have had the same experiences and are running the same source code, should Emmy Model 1 act like her decisions are steering both robots at once? Depending on how you draw the boundary around “yourself”, you might think you control the action of both copies, or only your own.
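Here is a small sketch of how much the boundary matters, using a hypothetical Prisoner’s Dilemma payoff table (the numbers are assumptions; only their ordering matters). The same maximization gives opposite answers depending on whether the twin’s move is held fixed or moves along with yours.

```python
# Hypothetical payoffs for (my_move, twin_move); higher is better for me.
PAYOFF = {
    ("C", "C"): 2, ("C", "D"): 0,
    ("D", "C"): 3, ("D", "D"): 1,
}
MOVES = ("C", "D")


def best_move_if_twin_is_fixed(twin_move):
    """Boundary drawn around one copy: the twin's move is treated as a given."""
    return max(MOVES, key=lambda me: PAYOFF[(me, twin_move)])


def best_move_if_twin_mirrors_me():
    """Boundary drawn around both copies: choosing my move also sets the twin's."""
    return max(MOVES, key=lambda me: PAYOFF[(me, me)])


print(best_move_if_twin_is_fixed("C"), best_move_if_twin_is_fixed("D"))
print(best_move_if_twin_mirrors_me())
```

Under the first boundary, defecting dominates; under the second, cooperating wins. The code doesn’t tell us which boundary is the right one, which is the actual question.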

This is an instance of the problem of counterfactual reasoning: how do we evaluate hypotheticals like “What if the sun suddenly went out”?

Problems of adapting decision theory to embedded agents include:

counterfactuals

Newcomblike reasoning, in which the agent interacts with copies of itself

reasoning about other agents more broadly

extortion problems

coordination problems

logical counterfactuals

logical updatelessness

2.1. Action counterfactuals

The most central example of why agents need to think about counterfactuals comes from counterfactuals about their own actions.

The difficulty with action counterfactuals can be illustrated by the five-and-ten problem. Suppose we have the option of taking a five dollar bill or a ten dollar bill, and all we care about in the situation is how much money we get. Obviously, we should take the $10.

However, it is not so easy as it seems to reliably take the $10.

If you reason about yourself as just another part of the environment, then you can know your own behavior. If you can know your own behavior, then it becomes difficult to reason about what would happen if you behaved differently.

This throws a monkey wrench into many common reasoning methods. How do we formalize the idea “Taking the $10 would lead to good consequences, while taking the $5 would lead to bad consequences,” when sufficiently rich self-knowledge would reveal one of those scenarios as inconsistent?

And if we can’t formalize any idea like that, how do real-world agents figure out to take the $10 anyway?

If we try to calculate the expected utility of our actions by Bayesian conditioning, as is common, knowing our own behavior leads to a divide-by-zero error when we try to calculate the expected utility of actions we know we don’t take: $\lnot A$ implies $P(A) = 0$, which implies $P(B \,\&\, A) = 0$, which implies

$$P(B \mid A) = \frac{P(B \,\&\, A)}{P(A)} = \frac{0}{0}.$$
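The divide-by-zero is quite literal. In the sketch below, the joint distribution is a hypothetical one for an agent that is certain it takes the $10; the function names are made up for illustration.

```python
from fractions import Fraction

# Hypothetical joint distribution over (action, payoff) for an agent that
# knows it takes the $10: every world where it takes the $5 has probability 0.
JOINT = {
    ("take_10", 10): Fraction(1),
    ("take_5", 5): Fraction(0),
}


def conditional_expected_utility(action):
    """E[payoff | action], computed as sum(payoff * P(payoff & action)) / P(action)."""
    p_action = sum(p for (a, _), p in JOINT.items() if a == action)
    weighted = sum(u * p for (a, u), p in JOINT.items() if a == action)
    return weighted / p_action


print(conditional_expected_utility("take_10"))
try:
    conditional_expected_utility("take_5")
except ZeroDivisionError as err:
    print("undefined:", err)
```

The value of the action the agent knows it takes is perfectly well-defined. The value of the alternative, which is the one it needs for comparison, is not.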

Because the agent doesn’t know how to separate itself from the environment, it gets gnashing internal gears when it tries to imagine taking different actions.

But the biggest complication comes from Löb’s Theorem, which can make otherwise reasonable-looking agents take the $5 because “If I take the $10, I get $0”! And in a stable way—the problem can’t be solved by the agent learning or thinking about the problem more.

This might be hard to believe; so let’s look at a detailed example. The phenomenon can be illustrated by the behavior of simple logic-based agents reasoning about the five-and-ten problem.

Consider this example:

We have the source code for an agent and the universe. They can refer to each other through the use of quining. The universe is simple; the universe just outputs whatever the agent outputs.

The agent spends a long time searching for proofs about what happens if it takes various actions. If for some $x$ and $y$ equal to $0$, $5$, or $10$, it finds a proof that taking the $5$ leads to $x$ utility, that taking the $10$ leads to $y$ utility, and that $x > y$, it will naturally take the $5$. We expect that it won’t find such a proof, and will instead pick the default action of taking the $10$.

It seems easy when you just imagine an agent trying to reason about the universe. Yet it turns out that if the amount of time spent searching for proofs is enough, the agent will always choose $5$!

The proof that this is so is by Löb’s theorem. Löb’s theorem says that, for any proposition $P$, if you can prove that a proof of $P$ would imply the truth of $P$, then you can prove $P$. In symbols, with “$\Box X$” meaning “$X$ is provable”:

$$\Box(\Box P \to P) \to \Box P.$$

In the version of the five-and-ten problem I gave, “$P$” is the proposition “if the agent outputs $5$ the universe outputs $5$, and if the agent outputs $10$ the universe outputs $0$”.

Supposing it is provable, the agent will eventually find the proof, and return $5$ in fact. This makes the sentence true, since the agent outputs $5$ and the universe outputs $5$, and since it’s false that the agent outputs $10$. This is because false propositions like “the agent outputs $10$” imply everything, including the universe outputting $0$.

The agent can (given enough time) prove all of this, in which case the agent in fact proves the proposition “if the agent outputs $5$ the universe outputs $5$, and if the agent outputs $10$ the universe outputs $0$”. And as a result, the agent takes the $5.
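The self-fulfilling step can be checked mechanically. The sketch below does not implement proof search or Löb’s theorem; the flag `proof_found` is a stand-in I am assuming for “the agent found a proof of the sentence”. It only verifies that the sentence “if the agent outputs 5 the universe outputs 5, and if the agent outputs 10 the universe outputs 0”, read as a pair of material implications, comes out true exactly when the agent acts on it.

```python
def implies(p, q):
    """Material implication: false only when p is true and q is false."""
    return (not p) or q


def universe(action):
    """The universe just outputs whatever the agent outputs."""
    return action


def agent(proof_found):
    """Take the 5 if the proof search succeeded, else the default 10.

    `proof_found` is a placeholder for the real proof search, which is not modeled.
    """
    return 5 if proof_found else 10


def sentence_is_true(action):
    """'Agent outputs 5 -> universe outputs 5' and 'agent outputs 10 -> universe outputs 0'."""
    payoff = universe(action)
    return implies(action == 5, payoff == 5) and implies(action == 10, payoff == 0)


for proof_found in (True, False):
    action = agent(proof_found)
    print(proof_found, action, sentence_is_true(action))
```

When the proof is found, the agent outputs 5, the second implication has a false antecedent, and the whole sentence holds. That is the “provable implies true” premise that Löb’s theorem needs.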

We call this a “spurious proof”: the agent takes the $5 because it can prove that if it takes the $10 it has low value, because it takes the $5. It sounds circular but, sadly, it is logically correct. More generally, when working in less proof-based settings, we refer to this as a problem of spurious counterfactuals.

The general pattern is: counterfactuals may spuriously mark an action as not being very good. This makes the AI not take the action. Depending on how the counterfactuals work, this may remove any feedback which would “correct” the problematic counterfactual; or, as we saw with proof-based reasoning, it may actively help the spurious counterfactual be “true”.

Note that because the proof-based examples are of significant interest to us, “counterfactuals” actually have to be counterlogicals; we sometimes need to reason about logically impossible “possibilities”. This rules out most existing accounts of counterfactual reasoning.

You may have noticed that I slightly cheated. The only thing that broke the symmetry and caused the agent to take the $5 was the fact that “$5$” was the action that was taken when a proof was found, and “$10$” was the default. We could instead consider an agent that looks for any proof at all about what actions lead to what utilities, and then takes the action that is better. This way, which action is taken is dependent on what order we search for proofs.

Let’s assume we search for short proofs first. In this case, we will take the $10, since it is very easy to show that $A() = 5$ leads to $U() = 5$ and $A() = 10$ leads to $U() = 10$.

The problem is that spurious proofs can be short too, and don’t get much longer when the universe gets harder to predict. If we replace the universe with one that is provably functionally the same, but is harder to predict, the shortest proof will short-circuit the complicated universe and be spurious.

People often try to solve the problem of counterfactuals by suggesting that there will always be some uncertainty. An AI may know its source code perfectly, but it can’t perfectly know the hardware it is running on.

Does adding a little uncertainty solve the problem? Often not:

The proof of the spurious counterfactual often still goes through; if you think you are in a five-and-ten problem with a 95% certainty, you can have the usual problem within that 95%.

Adding uncertainty to make counterfactuals well-defined doesn’t get you any guarantee that the counterfactuals will be reasonable. Hardware failures aren’t often what you want to expect when considering alternate actions.

Consider this scenario: You are confident that you almost always take the left path. However, it is possible (though unlikely) for a cosmic ray to damage your circuits, in which case you could go right—but you would then be insane, which would have many other bad consequences.

If this reasoning in itself is why you always go left, you’ve gone wrong.

Simply ensuring that the agent has some uncertainty about its actions doesn’t ensure that the agent will have remotely reasonable counterfactual expectations. However, one thing we can try instead is to ensure the agent actually takes each action with some probability. This strategy is called ε-exploration.

ε-exploration ensures that if an agent plays similar games on enough occasions, it can eventually learn realistic counterfactuals (modulo a concern of realizability which we will get to later).

ε-exploration only works if it ensures that the agent itself can’t predict whether it is about to ε-explore. In fact, a good way to implement ε-exploration is via the rule “if the agent is too sure about its action, it takes a different one”.

From a logical perspective, the unpredictability of ε-exploration is what prevents the problems we’ve been discussing. From a learning-theoretic perspective, if the agent could know it wasn’t about to explore, then it could treat that as a different case—failing to generalize lessons from its exploration. This gets us back to a situation where we have no guarantee that the agent will learn better counterfactuals. Exploration may be the only source of data for some actions, so we need to force the agent to take that data into account, or it may not learn.
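Here is a minimal learning-theoretic sketch of the idea, under loudly stated assumptions: the agent starts with a hypothetical spurious belief that the $10 is worth nothing, rewards are deterministic, and a seeded pseudorandom generator stands in for exploration that the agent cannot predict (a real embedded agent would need something stronger than this).

```python
import random


def run(epsilon, steps=1000, seed=0):
    """Play the five-and-ten problem repeatedly with epsilon-exploration."""
    rng = random.Random(seed)
    estimate = {5: 5.0, 10: 0.0}  # assumed spurious prior: "taking the 10 gets me 0"
    counts = {5: 0, 10: 0}
    for _ in range(steps):
        greedy = max(estimate, key=estimate.get)
        if rng.random() < epsilon:
            action = 10 if greedy == 5 else 5  # explore: take the other action
        else:
            action = greedy
        reward = float(action)  # the universe pays out whatever was taken
        counts[action] += 1
        # Running mean, so the first real observation replaces the prior.
        estimate[action] += (reward - estimate[action]) / counts[action]
    return max(estimate, key=estimate.get), estimate


explorer_choice, explorer_estimate = run(epsilon=0.1)
greedy_choice, greedy_estimate = run(epsilon=0.0)
print(explorer_choice, explorer_estimate)
print(greedy_choice, greedy_estimate)
```

With no exploration the bad belief is never tested, so it is never corrected. With exploration, a single forced sample of the $10 is enough to fix the estimate in this toy setting.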

However, even ε-exploration doesn’t seem to get things exactly right. Observing the result of ε-exploration shows you what happens if you take an action unpredictably; the consequences of taking that action as part of business-as-usual may be different.

Suppose you’re an ε-explorer who lives in a world of ε-explorers. You’re applying for a job as a security guard, and you need to convince the interviewer that you’re not the kind of person who would run off with the stuff you’re guarding. They want to hire someone who has too much integrity to lie and steal, even if they thought they could get away with it.

Suppose the interviewer is an amazing judge of character—or just has read access to your source code.

In this situation, stealing might be a great option as an ε-exploration action, because the interviewer may not be able to predict your theft, or may not think punishment makes sense for a one-off anomaly.

But stealing is clearly a bad idea as a normal action, because you’ll be seen as much less reliable and trustworthy.

2.2. Viewing the problem from outside

If we don’t learn counterfactuals from ε-exploration, then, it seems we have no guarantee of learning realistic counterfactuals at all. But if we do learn from ε-exploration, it appears we still get things wrong in some cases.

Switching to a probabilistic setting doesn’t cause the agent to reliably make “reasonable” choices, and neither does forced exploration.

But writing down examples of “correct” counterfactual reasoning doesn’t seem hard from the outside!

Maybe that’s because from “outside” we always have a dualistic perspective. We are in fact sitting outside of the problem, and we’ve defined it as a function of an agent.

However, an agent can’t solve the problem in the same way from inside. From its perspective, its functional relationship with the environment isn’t an observable fact. This is why counterfactuals are called “counterfactuals”, after all.

When I told you about the five-and-ten problem, I first told you about the problem, and then gave you an agent. When one agent doesn’t work well, we could consider a different agent.

Finding a way to succeed at a decision problem involves finding an agent that when plugged into the problem takes the right action. The fact that we can even consider putting in different agents means that we have already carved the universe into an “agent” part, plus the rest of the universe with a hole for the agent—which is most of the work!

Are we just fooling ourselves due to the way we set up decision problems, then? Are there no “correct” counterfactuals?

Well, maybe we are fooling ourselves. But there is still something we are confused about! “Counterfactuals are subjective, invented by the agent” doesn’t dissolve the mystery. There is something intelligent agents do, in the real world, to make decisions.

So I’m not talking about agents who know their own actions because I think there’s going to be a big problem with intelligent machines inferring their own actions in the future. Rather, the possibility of knowing your own actions illustrates something confusing about determining the consequences of your actions—a confusion which shows up even in the very simple case where everything about the world is known and you just need to choose the larger pile of money.

For all that, humans don’t seem to run into any trouble taking the $10.

Can we take any inspiration from how humans make decisions?

Well, suppose you’re actually asked to choose between $10 and $5. You know that you’ll take the $10. How do you reason about what would happen if you took the $5 instead?

It seems easy if you can separate yourself from the world, so that you only think of external consequences (getting $5).

If you think about yourself as well, the counterfactual starts seeming a bit more strange or contradictory. Maybe you have some absurd prediction about what the world would be like if you took the $5—like, “I’d have to be blind!”

That’s alright, though. In the end you still see that taking the $5 would lead to bad consequences, and you still take the $10, so you’re doing fine.

The challenge for formal agents is that an agent can be in a similar position, except it is taking the $5, knows it is taking the $5, and can’t figure out that it should be taking the $10 instead, because of the absurd predictions it makes about what happens when it takes the $10.

It seems hard for a human to end up in a situation like that; yet when we try to write down a formal reasoner, we keep running into this kind of problem. So it indeed seems like human decision-making is doing something here that we don’t yet understand.

2.3. Newcomblike problems

If you’re an embedded agent, then you should be able to think about yourself, just like you think about other objects in the environment. And other reasoners in your environment should be able to think about you too.

In the five-and-ten problem, we saw how messy things can get when an agent knows its own action before it acts. But this is hard to avoid for an embedded agent.

It’s especially hard not to know your own action in standard Bayesian settings, which assume logical omniscience. A probability distribution assigns probability 1 to any fact which is logically true. So if a Bayesian agent knows its own source code, then it should know its own action.

However, realistic agents who are not logically omniscient may run into the same problem. Logical omniscience forces the issue, but rejecting logical omniscience doesn’t eliminate the issue.

ε-exploration does seem to solve that problem in many cases, by ensuring that agents have uncertainty about their choices and that the things they expect are based on experience.

However, as we saw in the security guard example, even ε-exploration seems to steer us wrong when the results of exploring randomly differ from the results of acting reliably.

Examples which go wrong in this way seem to involve another part of the environment that behaves like you—such as another agent very similar to yourself, or a sufficiently good model or simulation of you. These are called Newcomblike problems; an example is the Twin Prisoner’s Dilemma mentioned above.

If the five-and-ten problem is about cutting a you-shaped piece out of the world so that the world can be treated as a function of your action, Newcomblike problems are about what to do when there are several approximately you-shaped pieces in the world.

One idea is that exact copies should be treated as 100% under your “logical control”. For approximate models of you, or merely similar agents, control should drop off sharply as logical correlation decreases. But how does this work?

Newcomblike problems are difficult for almost the same reason as the self-reference issues discussed so far: prediction. With strategies such as ε-exploration, we tried to limit the self-knowledge of the agent in an attempt to avoid trouble. But the presence of powerful predictors in the environment reintroduces the trouble. By choosing what information to share, predictors can manipulate the agent and choose their actions for them.

If there is something which can predict you, it might tell you its prediction, or related information, in which case it matters what you do in response to various things you could find out.

Suppose you decide to do the opposite of whatever you’re told. Then it isn’t possible for the scenario to be set up in the first place. Either the predictor isn’t accurate after all, or alternatively, the predictor doesn’t share their prediction with you.

On the other hand, suppose there’s some situation where you do act as predicted. Then the predictor can control how you’ll behave, by controlling what prediction they tell you.

So, on the one hand, a powerful predictor can control you by selecting between the consistent possibilities. On the other hand, you are the one who chooses your pattern of responses in the first place, which means that you can set them up to your best advantage.
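One way to picture this is as a search for fixed points. In the sketch below (the two actions and the three response policies are hypothetical), a prediction is “consistent” if announcing it leads the agent to do exactly that.

```python
ACTIONS = ("left", "right")


def consistent_predictions(respond):
    """Predictions the predictor could announce and still turn out to be right."""
    return [p for p in ACTIONS if respond(p) == p]


def contrarian(prediction):
    """Do the opposite of whatever you are told."""
    return "right" if prediction == "left" else "left"


def obedient(prediction):
    """Do whatever you are told."""
    return prediction


def stubborn(prediction):
    """Ignore the prediction entirely."""
    return "left"


for policy in (contrarian, obedient, stubborn):
    print(policy.__name__, consistent_predictions(policy))
```

The contrarian leaves the predictor nothing accurate to say, the obedient policy hands the predictor the choice, and the stubborn policy leaves exactly one option. Choosing the response pattern is where the agent’s own leverage lies.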

2.4. Observation counterfactuals

So far, we’ve been discussing action counterfactuals—how to anticipate consequences of different actions. This discussion of controlling your responses introduces the observation counterfactual—imagining what the world would be like if different facts had been observed.

Even if there is no one telling you a prediction about your future behavior, observation counterfactuals can still play a role in making the right decision. Consider the following game:

Alice receives a card at random which is either High or Low. She may reveal the card if she wishes. Bob then gives his probability $p$ that Alice has a high card. Alice always loses $p^2$ dollars. Bob loses $p^2$ if the card is low, and $(1-p)^2$ if the card is high.

Bob has a proper scoring rule, so does best by giving his true belief. Alice just wants Bob’s belief to be as much toward “low” as possible.

Suppose Alice will play only this one time. She sees a low card. Bob is good at reasoning about Alice, but is in the next room and so can’t read any tells. Should Alice reveal her card?

Since Alice’s card is low, if she shows it to Bob, she will lose no money, which is the best possible outcome. However, this means that in the counterfactual world where Alice sees a high card, she wouldn’t be able to keep the secret—she might as well show her card in that case too, since her reluctance to show it would be as reliable a sign of “high”.

On the other hand, if Alice doesn’t show her card, she loses 25¢—but then she can use the same strategy in the other world, rather than losing $1. So, before playing the game, Alice would want to visibly commit to not reveal; this makes expected loss 25¢, whereas the other strategy has expected loss 50¢. By taking observation counterfactuals into account, Alice is able to keep secrets—without them, Bob could perfectly infer her card from her actions.
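The arithmetic behind these figures can be checked with a short sketch. It assumes that Alice's loss is the square of Bob's stated probability of a high card (which matches the 25¢, 50¢, and $1 figures above), that each card is equally likely, and that Bob knows Alice's policy and reports his true belief. The function and policy names are hypothetical.

```python
from fractions import Fraction

CARDS = ("high", "low")

def bob_belief(card, policy):
    """Bob's probability of High after seeing what Alice does.

    policy maps each card to True (reveal) or False (hide). Bob is assumed
    to know the policy, so a hidden card tells him it is one of the cards
    Alice would have hidden.
    """
    if policy[card]:
        return Fraction(1) if card == "high" else Fraction(0)
    hidden = [c for c in CARDS if not policy[c]]
    return Fraction(sum(c == "high" for c in hidden), len(hidden))

def expected_loss(policy):
    """Alice's expected loss in dollars before the card is dealt."""
    return sum(Fraction(1, 2) * bob_belief(c, policy) ** 2 for c in CARDS)

never_reveal = {"high": False, "low": False}
reveal_low_only = {"high": False, "low": True}

print(expected_loss(never_reveal))     # 1/4
print(expected_loss(reveal_low_only))  # 1/2
```

Under `reveal_low_only`, hiding is itself a perfect signal of a high card, which is why the policy that looks best after seeing a low card does worse in expectation.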

This game is equivalent to the decision problem called counterfactual mugging.

Updateless decision theory (UDT) is a proposed decision theory which can keep secrets in the high/low card game. UDT does this by recommending that the agent do whatever would have seemed wisest before—whatever your earlier self would have committed to do.

As it happens, UDT also performs well in Newcomblike problems.

Could something like UDT be related to what humans are doing, if only implicitly, to get good results on decision problems? Or, if it’s not, could it still be a good model for thinking about decision-making?

Unfortunately, there are still some pretty deep difficulties here. UDT is an elegant solution to a fairly broad class of decision problems, but it only makes sense if the earlier self can foresee all possible situations.

This works fine in a Bayesian setting where the prior already contains all possibilities within itself. However, there may be no way to do this in a realistic embedded setting. An agent has to be able to think of new possibilities—meaning that its earlier self doesn’t know enough to make all the decisions.

And with that, we find ourselves squarely facing the problem of embedded world-models.

3. Embedded world-models

An agent which is larger than its environment can:

Hold an exact model of the environment in its head.

Think through the consequences of every potential course of action.

If it doesn’t know the environment perfectly, hold every possible way the environment could be in its head, as is the case with Bayesian uncertainty.

All of these are typical of notions of rational agency.

An embedded agent can’t do any of those things, at least not in any straightforward way.

One difficulty is that, since the agent is part of the environment, modeling the environment in every detail would require the agent to model itself in every detail, which would require the agent’s self-model to be as “big” as the whole agent. An agent can’t fit inside its own head.

The lack of a crisp agent/environment boundary forces us to grapple with paradoxes of self-reference. As if representing the rest of the world weren’t already hard enough.

Embedded World-Models have to represent the world in a way more appropriate for embedded agents. Problems in this cluster include:

the “realizability” / “grain of truth” problem: the real world isn’t in the agent’s hypothesis space

logical uncertainty

high-level models

multi-level models

ontological crises

naturalized induction, the problem that the agent must incorporate its model of itself into its world-model

anthropic reasoning, the problem of reasoning about how many copies of yourself exist

3.1. Realizability

In a Bayesian setting, where an agent’s uncertainty is quantified by a probability distribution over possible worlds, a common assumption is “realizability”: the true underlying environment which is generating the observations is assumed to have at least some probability in the prior.

In game theory, this same property is described by saying a prior has a “grain of truth”. It should be noted, though, that there are additional barriers to getting this property in a game-theoretic setting; so, in their common usage cases, “grain of truth” is technically demanding while “realizability” is a technical convenience.

Realizability is not totally necessary in order for Bayesian reasoning to make sense. If you think of a set of hypotheses as “experts”, and the current posterior probability as how much you “trust” each expert, then learning according to Bayes’ Law, $P(h \mid e) = \frac{P(e \mid h) \cdot P(h)}{P(e)}$, ensures a relative bounded loss property.

Specifically, if you use a prior $\pi$, the amount worse you are in comparison to each expert $h$ is at most $-\log \pi(h)$, since you assign at least probability $\pi(h) \cdot h(e)$ to seeing a sequence of evidence $e$. Intuitively, $\pi(h)$ is your initial trust in expert $h$, and in each case where it is even a little bit more correct than you, you increase your trust accordingly. The way you do this ensures you assign an expert probability 1 and hence copy it precisely before you lose more than $-\log \pi(h)$ compared to it.
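A small numerical sketch can illustrate the bounded loss property without realizability. The setup is an assumption made for illustration: three hypothetical "experts" that each predict a coin with a fixed bias, a prior over them, and data from a coin whose bias none of them matches. Loss is cumulative log loss, and the check is that the Bayesian mixture is never worse than an expert by more than the negative log of that expert's prior weight.

```python
import math
import random

def run_bayes_mixture(expert_biases, prior, outcomes):
    """Return cumulative log loss of the Bayesian mixture and of each expert.

    expert_biases[i] is the fixed probability expert i assigns to outcome 1.
    prior[i] is the initial trust in expert i (the weights sum to 1).
    """
    weights = list(prior)
    mixture_loss = 0.0
    expert_losses = [0.0] * len(expert_biases)
    for x in outcomes:
        # Probability each expert assigned to what actually happened.
        likelihoods = [b if x == 1 else 1.0 - b for b in expert_biases]
        # The mixture's prediction is the trust-weighted average.
        p_mixture = sum(w * l for w, l in zip(weights, likelihoods))
        mixture_loss -= math.log(p_mixture)
        for i, l in enumerate(likelihoods):
            expert_losses[i] -= math.log(l)
        # Bayes' law: new trust is proportional to old trust times likelihood.
        weights = [w * l / p_mixture for w, l in zip(weights, likelihoods)]
    return mixture_loss, expert_losses

rng = random.Random(0)
# The data comes from a coin with bias 0.7, which no expert represents exactly.
outcomes = [1 if rng.random() < 0.7 else 0 for _ in range(500)]
expert_biases = [0.1, 0.5, 0.9]
prior = [0.25, 0.5, 0.25]

mixture_loss, expert_losses = run_bayes_mixture(expert_biases, prior, outcomes)
for weight, loss in zip(prior, expert_losses):
    print(f"regret {mixture_loss - loss:.3f} <= bound {-math.log(weight):.3f}")
```

The bound holds for every expert at once, including ones that predict badly; it says nothing about whether any expert is close to the truth.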

The prior AIXI is based on is the Solomonoff prior. It is defined as the output of a universal Turing machine (UTM) whose inputs are coin-flips.

In other words, feed a UTM a random program. Normally, you’d think of a UTM as only being able to simulate deterministic machines. Here, however, the initial inputs can instruct the UTM to use the rest of the infinite input tape as a source of randomness to simulate a stochastic Turing machine.

Combining this with the previous idea about viewing Bayesian learning as a way of allocating “trust” to “experts” which meets a bounded loss condition, we can see the Solomonoff prior as a kind of ideal machine learning algorithm which can learn to act like any algorithm you might come up with, no matter how clever.

For this reason, we shouldn’t necessarily think of AIXI as “assuming the world is computable”, even though it reasons via a prior over computations. It’s getting bounded loss on its predictive accuracy as compared with any computable predictor. We should rather say that AIXI assumes all possible algorithms are computable, not that the world is.

However, lacking realizability can cause trouble if you are looking for anything more than bounded-loss predictive accuracy:

the posterior can oscillate forever;

probabilities may not be calibrated;

estimates of statistics such as the mean may be arbitrarily bad;

estimates of latent variables may be bad;

and the identification of causal structure may not work.

So does AIXI perform well without a realizability assumption? We don’t know. Despite getting bounded loss for predictions without realizability, existing optimality results for its actions require an added realizability assumption.

First, if the environment really is sampled from the Solomonoff distribution, AIXI gets the maximum expected reward. But this is fairly trivial; it is essentially the definition of AIXI.

Second, if we modify AIXI to take somewhat randomized actions—Thompson sampling—there is an asymptotic optimality result for environments which act like any stochastic Turing machine.

So, either way, realizability was assumed in order to prove anything. (See Jan Leike, Nonparametric General Reinforcement Learning.)

But the concern I’m pointing at is not “the world might be uncomputable, so we don’t know if AIXI will do well”; this is more of an illustrative case. The concern is that AIXI is only able to define intelligence or rationality by constructing an agent much, much bigger than the environment which it has to learn about and act within.

Laurent Orseau provides a way of thinking about this in “Space-Time Embedded Intelligence”. However, his approach defines the intelligence of an agent in terms of a sort of super-intelligent designer who thinks about reality from outside, selecting an agent to place into the environment.

Embedded agents don’t have the luxury of stepping outside of the universe to think about how to think. What we would like would be a theory of rational belief for situated agents which provides foundations as strong as the foundations Bayesianism provides for dualistic agents.

Imagine a computer science theory person who is having a disagreement with a programmer. The theory person is making use of an abstract model. The programmer is complaining that the abstract model isn’t something you would ever run, because it is computationally intractable. The theory person responds that the point isn’t to ever run it. Rather, the point is to understand some phenomenon which will also be relevant to more tractable things which you would want to run.

I bring this up in order to emphasize that my perspective is a lot more like the theory person’s. I’m not talking about AIXI to say “AIXI is an idealization you can’t run”. The answers to the puzzles I’m pointing at don’t need to run. I just want to understand some phenomena.

However, sometimes a thing that makes a theoretical model less tractable also makes that model too different from the phenomenon we’re interested in.

The way AIXI wins games is by assuming we can do true Bayesian updating over a hypothesis space, assuming the world is in our hypothesis space, etc. So it can tell us something about the aspect of realistic agency that’s approximately doing Bayesian updating over an approximately-good-enough hypothesis space. But embedded agents don’t just need approximate solutions to that problem; they need to solve several problems that are different in kind from that problem.

3.2. Self-reference

One major obstacle a theory of embedded agency must deal with is self-reference.

Paradoxes of self-reference such as the liar paradox make it not just wildly impractical, but in a certain sense impossible for an agent’s world-model to accurately reflect the world.

The liar paradox concerns the status of the sentence “This sentence is not true”. If it were true, it must be false; and if not true, it must be true.

The difficulty comes in part from trying to draw a map of a territory which includes the map itself.

This is fine if the world “holds still” for us; but because the map is in the world, different maps create different worlds.

Suppose our goal is to make an accurate map of the final route of a road which is currently under construction. Suppose we also know that the construction team will get to see our map, and that construction will proceed so as to disprove whatever map we make. This puts us in a liar-paradox-like situation.

Problems of this kind become relevant for decision-making in the theory of games. A simple game of rock-paper-scissors can introduce a liar paradox if the players try to win, and can predict each other better than chance.

Game theory solves this type of problem with game-theoretic equilibria. But the problem ends up coming back in a different way.

I mentioned that the problem of realizability takes on a different character in the context of game theory. In an ML setting, realizability is a potentially unrealistic assumption, but can usually be assumed consistently nonetheless.

In game theory, on the other hand, the assumption itself may be inconsistent. This is because games commonly yield paradoxes of self-reference.

Because there are so many agents, it is no longer possible in game theory to conveniently make an “agent” a thing which is larger than a world. So game theorists are forced to investigate notions of rational agency which can handle a large world.

Unfortunately, this is done by splitting up the world into “agent” parts and “non-agent” parts, and handling the agents in a special way. This is almost as bad as dualistic models of agency.

In rock-paper-scissors, the liar paradox is resolved by stipulating that each player play each move with probability $1/3$. If one player plays this way, then the other loses nothing by doing so. This way of introducing probabilistic play to resolve would-be paradoxes of game theory is called a Nash equilibrium.
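The equilibrium claim can be checked directly. The sketch below assumes the usual zero-sum scoring (+1 for a win, -1 for a loss, 0 for a tie); the helper names and the `rock_heavy` strategy are hypothetical and introduced only for illustration.

```python
from fractions import Fraction

MOVES = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}

def payoff(mine, theirs):
    """+1 if my move wins, -1 if it loses, 0 on a tie."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1

def expected_payoff(my_strategy, their_strategy):
    """Expected payoff when both players randomize independently."""
    return sum(my_strategy[a] * their_strategy[b] * payoff(a, b)
               for a in MOVES for b in MOVES)

def pure(move):
    """The strategy that always plays one move."""
    return {m: Fraction(int(m == move)) for m in MOVES}

uniform = {m: Fraction(1, 3) for m in MOVES}
rock_heavy = {"rock": Fraction(1, 2), "paper": Fraction(1, 4),
              "scissors": Fraction(1, 4)}

# Against uniform play, every response earns exactly 0, so nothing beats it.
print({m: expected_payoff(pure(m), uniform) for m in MOVES})
# Against a predictable opponent, some response does strictly better than 0.
print({m: expected_payoff(pure(m), rock_heavy) for m in MOVES})
```

Any departure from uniform play gives the opponent something to predict, and therefore something to exploit.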

We can use Nash equilibria to prevent the assumption that the agents correctly understand the world they’re in from being inconsistent. However, that works just by telling the agents what the world looks like. What if we want to model agents who learn about the world, more like AIXI?

The grain of truth problem is the problem of formulating a reasonably bounded prior probability distribution which would allow agents playing games to place some positive probability on each other’s true (probabilistic) behavior, without knowing it precisely from the start.

Until recently, known solutions to the problem were quite limited. Benja Fallenstein, Jessica Taylor, and Paul Christiano’s “Reflective Oracles: A Foundation for Classical Game Theory” provides a very general solution. For details, see “A Formal Solution to the Grain of Truth Problem” by Jan Leike, Jessica Taylor, and Benja Fallenstein.

You might think that stochastic Turing machines can represent Nash equilibria just fine.

But if you’re trying to produce Nash equilibria as a result of reasoning about other agents, you’ll run into trouble. If each agent models the other’s computation and tries to run it to see what the other agent does, you’ve just got an infinite loop.
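The regress can be sketched in a few lines. This is a toy under stated assumptions: both players use the same hypothetical procedure `decide_by_simulation`, which simulates the opponent and then plays the counter-move, and an explicit depth budget stands in for finite computing resources.

```python
class SimulationBudgetExceeded(Exception):
    """Raised when nested simulation runs out of the allotted depth."""

COUNTER = {"rock": "paper", "paper": "scissors", "scissors": "rock"}

def decide_by_simulation(budget):
    """Pick a move by simulating the opponent, who decides the same way.

    There is no base case: each level of simulation needs the level below
    it to finish first, so any finite budget is eventually exhausted.
    """
    if budget == 0:
        raise SimulationBudgetExceeded("ran out of simulation depth")
    opponent_move = decide_by_simulation(budget - 1)
    return COUNTER[opponent_move]

def terminates(budget):
    """Report whether the procedure reaches a decision within the budget."""
    try:
        decide_by_simulation(budget)
        return True
    except SimulationBudgetExceeded:
        return False

print(terminates(10), terminates(200))
```

Raising the budget never helps, because the procedure has nowhere to bottom out.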

There are some questions Turing machines just can’t answer—in particular, questions about the behavior of Turing machines. The halting problem is the classic example.

Turing studied “oracle machines” to examine what would happen if we could answer such questions. An oracle is like a book containing some answers to questions which we were unable to answer before.

But ordinarily, we get a hierarchy. Type B machines can answer questions about whether type A machines halt, type C machines have the answers about types A and B, and so on, but no machines have answers about their own type.

Reflective oracles work by twisting the ordinary Turing universe back on itself, so that rather than an infinite hierarchy of ever-stronger oracles, you define an oracle that serves as its own oracle machine.

This would normally introduce contradictions, but reflective oracles avoid this by randomizing their output in cases where they would run into paradoxes. So reflective oracle machines are stochastic, but they’re more powerful than regular stochastic Turing machines.

That’s how reflective oracles address the problems we mentioned earlier of a map that’s itself part of the territory: randomize.

Reflective oracles also solve the problem with game-theoretic notions of rationality I mentioned earlier. They allow agents to be reasoned about in the same manner as other parts of the environment, rather than treating them as a fundamentally special case. Agents and non-agents alike are just computations-with-oracle-access.

However, models of rational agents based on reflective oracles still have several major limitations. One of these is that agents are required to have unlimited processing power, just like AIXI, and so are assumed to know all of the consequences of their own beliefs.

In fact, knowing all the consequences of your beliefs—a property known as logical omniscience—turns out to be rather core to classical Bayesian rationality.

3.3. Logical uncertainty

So far, I’ve been talking in a fairly naive way about the agent having beliefs about hypotheses, and the real world being or not being in the hypothesis space.

It isn’t really clear what any of that means.

Depending on how we define things, it may actually be quite possible for an agent to be smaller than the world and yet contain the right world-model—it might know the true physics and initial conditions, but only be capable of inferring their consequences very approximately.

Humans are certainly used to living with shorthands and approximations. But realistic as this scenario may be, it is not in line with what it usually means for a Bayesian to know something. A Bayesian knows the consequences of all of its beliefs.

Uncertainty about the consequences of your beliefs is logical uncertainty. In this case, the agent might be empirically certain of a unique mathematical description pinpointing which universe she’s in, while being logically uncertain of most consequences of that description.

Modeling logical uncertainty requires us to have a combined theory of logic (reasoning about implications) and probability (degrees of belief).

Logic and probability theory are two great triumphs in the codification of rational thought. Logic provides the best tools for thinking about self-reference, while probability provides the best tools for thinking about decision-making. However, the two don’t work together as well as one might think.

They may seem superficially compatible, since probability theory is an extension of Boolean logic. However, Gödel’s first incompleteness theorem shows that any sufficiently rich logical system is incomplete: not only does it fail to decide every sentence as true or false, but it also has no computable extension which manages to do so.

(See the post “An Untrollable Mathematician Illustrated” for more illustration of how this messes with probability theory.)

This also applies to probability distributions: no computable distribution can assign probabilities in a way that’s consistent with a sufficiently rich theory. This forces us to choose between using an uncomputable distribution, or using a distribution which is inconsistent.

Sounds like an easy choice, right? The inconsistent theory is at least computable, and we are after all trying to develop a theory of logical non-omniscience. We can just continue to update on facts which we prove, bringing us closer and closer to consistency.

Unfortunately, this doesn’t work out so well, for reasons which connect back to realizability. Remember that there are no computable probability distributions consistent with all consequences of sound theories. So our non-omniscient prior doesn’t even contain a single correct hypothesis.

This causes pathological behavior as we condition on more and more true mathematical beliefs. Beliefs wildly oscillate rather than approaching reasonable estimates.

Taking a Bayesian prior on mathematics, and updating on whatever we prove, does not seem to capture mathematical intuition and heuristic conjecture very well—unless we restrict the domain and craft a sensible prior.

Probability is like a scale, with worlds as weights. An observation eliminates some of the possible worlds, removing weights and shifting the balance of beliefs.

Logic is like a tree, growing from the seed of axioms according to inference rules. For real-world agents, the process of growth is never complete; you never know all the consequences of each belief.

Without knowing how to combine the two, we can’t characterize reasoning probabilistically about math. But the “scale versus tree” problem also means that we don’t know how ordinary empirical reasoning works.

Bayesian hypothesis testing requires each hypothesis to clearly declare which probabilities it assigns to which observations. That way, you know how much to rescale the odds when you make an observation. If we don’t know the consequences of a belief, we don’t know how much credit to give it for making predictions.

This is like not knowing where to place the weights on the scales of probability. We could try putting weights on both sides until a proof rules one out, but then the beliefs just oscillate forever rather than doing anything useful.

This forces us to grapple directly with the problem of a world that’s larger than the agent. We want some notion of boundedly rational beliefs about uncertain consequences; but any computable beliefs about logic must have left out something, since the tree of logical implications will grow larger than any container.

For a Bayesian, the scales of probability are balanced in precisely such a way that no Dutch book can be made against them—no sequence of bets that are a sure loss. But you can only account for all Dutch books if you know all the consequences of your beliefs. Absent that, someone who has explored other parts of the tree can Dutch-book you.
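A minimal sketch of such a sure-loss bet, under assumptions made here for illustration: the agent prices a claim `S` and a claim equivalent to its negation separately, without having worked out that exactly one of them is true. It is willing to buy, at its stated credence, a ticket paying $1 if the claim holds. The names and numbers are hypothetical.

```python
from fractions import Fraction

def net_gain(prices, true_claim):
    """Agent buys, at its own stated price, a $1 ticket on every claim.

    prices maps each claim to the agent's credence in it. Exactly one claim
    is true, a logical fact the agent may not have derived yet.
    """
    cost = sum(prices.values())
    winnings = sum(1 for claim in prices if claim == true_claim)
    return winnings - cost

incoherent = {"S": Fraction(3, 5), "not S": Fraction(3, 5)}
coherent = {"S": Fraction(3, 5), "not S": Fraction(2, 5)}

for label, prices in (("incoherent", incoherent), ("coherent", coherent)):
    print(label, {world: net_gain(prices, world) for world in prices})
```

With the incoherent prices the agent loses 20¢ whichever claim is true; with prices that sum to 1 it breaks even. Spotting the incoherence requires knowing the logical relationship between the two claims.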

But human mathematicians don’t seem to run into any special difficulty in reasoning about mathematical uncertainty, any more than we do with empirical uncertainty. So what characterizes good reasoning under mathematical uncertainty, if not immunity to making bad bets?

One answer is to weaken the notion of Dutch books so that we only allow bets based on quickly computable parts of the tree. This is one of the ideas behind Garrabrant et al.’s “Logical Induction”, an early attempt at defining something like “Solomonoff induction, but for reasoning that incorporates mathematical uncertainty”.

3.4. High-level models

Another consequence of the fact that the world is bigger than you is that you need to be able to use high-level world models: models which involve things like tables and chairs.

This is related to the classical symbol grounding problem; but since we want a formal analysis which increases our trust in some system, the kind of model which interests us is somewhat different. This also relates to transparency and informed oversight: world-models should be made out of understandable parts.

A related question is how high-level reasoning and low-level reasoning relate to each other and to intermediate levels: multi-level world models.

Standard probabilistic reasoning doesn’t provide a very good account of this sort of thing. It’s as though you have different Bayes nets which describe the world at different levels of accuracy, and processing power limitations force you to mostly use the less accurate ones, so you have to decide how to jump to the more accurate as needed.

Additionally, the models at different levels don’t line up perfectly, so you have a problem of translating between them; and the models may have serious contradictions between them. This might be fine, since high-level models are understood to be approximations anyway, or it could signal a serious problem in the higher- or lower-level models, requiring their revision.

This is especially interesting in the case of ontological crises, in which objects we value turn out not to be a part of “better” models of the world.

It seems fair to say that everything humans value exists in high-level models only, which from a reductionistic perspective are “less real” than atoms and quarks. However, because our values aren’t defined on the low level, we are able to keep our values even when our knowledge of the low level radically shifts. (We would also like to be able to say something about what happens to values if the high level radically shifts.)

Another critical aspect of embedded world models is that the agent itself must be in the model, since the agent seeks to understand the world, and the world cannot be fully separated from oneself. This opens the door to difficult problems of self-reference and anthropic decision theory.

Naturalized induction is the problem of learning world-models which include yourself in the environment. This is challenging because (as Caspar Oesterheld has put it) there is a type mismatch between “mental stuff” and “physics stuff”.

AIXI conceives of the environment as if it were made with a slot which the agent fits into. We might intuitively reason in this way, but we can also understand a physical perspective from which this looks like a bad model. We might imagine instead that the agent separately represents: self-knowledge available to introspection; hypotheses about what the universe is like; and a “bridging hypothesis” connecting the two.

There are interesting questions of how this could work. There’s also the question of whether this is the right structure at all. It’s certainly not how I imagine babies learning.

Thomas Nagel would say that this way of approaching the problem involves “views from nowhere”; each hypothesis posits a world as if seen from outside. This is perhaps a strange thing to do.

A special case of agents needing to reason about themselves is agents needing to reason about their future self.

To make long-term plans, agents need to be able to model how they’ll act in the future, and have a certain kind of trust in their future goals and reasoning abilities. This includes trusting future selves that have learned and grown a great deal.

In a traditional Bayesian framework, “learning” means Bayesian updating. But as we noted, Bayesian updating requires that the agent start out large enough to consider a bunch of ways the world can be, and learn by ruling some of these out.

Embedded agents need resource-limited, logically uncertain updates, which don’t work like this.

Unfortunately, Bayesian updating is the main way we know how to think about an agent progressing through time as one unified agent. The Dutch book justification for Bayesian reasoning is basically saying this kind of updating is the only way to not have the agent’s actions on Monday work at cross purposes, at least a little, to the agent’s actions on Tuesday.

Embedded agents are non-Bayesian. And non-Bayesian agents tend to get into wars with their future selves.

Which brings us to our next set of problems: robust delegation.

4. Robust delegation

Because the world is big, the agent as it is may be inadequate to accomplish its goals, including in its ability to think.

Because the agent is made of parts, it can improve itself and become more capable.

Improvements can take many forms: The agent can make tools, the agent can make successor agents, or the agent can just learn and grow over time. However, the successors or tools need to be more capable for this to be worthwhile.

This gives rise to a special type of principal/agent problem:

You have an initial agent, and a successor agent. The initial agent gets to decide exactly what the successor agent looks like. The successor agent, however, is much more intelligent and powerful than the initial agent. We want to know how to have the successor agent robustly optimize the initial agent’s goals.

Here are three examples of forms this principal/agent problem can take:

In the AI alignment problem, a human is trying to build an AI system which can be trusted to help with the human’s goals.

In the tiling agents problem, an agent is trying to make sure it can trust its future selves to help with its own goals.

Or we can consider a harder version of the tiling problem—stable self-improvement—where an AI system has to build a successor which is more intelligent than itself, while still being trustworthy and helpful.

For a human analogy which involves no AI, you can think about the problem of succession in royalty, or more generally the problem of setting up organizations to achieve desired goals without losing sight of their purpose over time.

The difficulty seems to be twofold:

First, a human or AI agent may not fully understand itself and its own goals. If an agent can’t write out what it wants in exact detail, that makes it hard for it to guarantee that its successor will robustly help with the goal.

Second, the idea behind delegating work is that you don’t have to do all the work yourself. You want the successor to be able to act with some degree of autonomy, including learning new things that you don’t know, and wielding new skills and capabilities.

In the limit, a really good formal account of robust delegation should be able to handle arbitrarily capable successors without throwing up any errors—like a human or AI building an unbelievably smart AI, or like an agent that just keeps learning and growing for so many years that it ends up much smarter than its past self.

The problem is not (just) that the successor agent might be malicious. The problem is that we don’t even know what it means not to be.

This problem seems hard from both points of view.

The initial agent needs to figure out how reliable and trustworthy something more powerful than it is, which seems very hard. But the successor agent has to figure out what to do in situations that the initial agent can’t even understand, and try to respect the goals of something that the successor can see is inconsistent, which also seems very hard.

At first, this may look like a less fundamental problem than “make decisions” or “have models”. But the view on which there are multiple forms of the “build a successor” problem is itself a dualistic view.

To an embedded agent, the future self is not privileged; it is just another part of the environment. There isn’t a deep difference between building a successor that shares your goals, and just making sure your own goals stay the same over time.

So, although I talk about “initial” and “successor” agents, remember that this isn’t just about the narrow problem humans currently face of aiming a successor. This is about the fundamental problem of being an agent that persists and learns over time.

We call this cluster of problems Robust Delegation. Examples include:

the tiling problem

averting Goodhart’s law

4.1. Vingean reflection

Imagine you are playing the CIRL game with a toddler.

CIRL means Cooperative Inverse Reinforcement Learning. The idea behind CIRL is to define what it means for a robot to collaborate with a human. The robot tries to pick helpful actions, while simultaneously trying to figure out what the human wants.

A lot of current work on robust delegation comes from the goal of aligning AI systems with what humans want. So usually, we think about this from the point of view of the human.

But now consider the problem faced by a smart robot that is trying to help someone who is very confused about the universe. Imagine trying to help a toddler optimize their goals.

From your standpoint, the toddler may be too irrational to be seen as optimizing anything.

The toddler may have an ontology in which it is optimizing something, but you can see that ontology doesn’t make sense.

Maybe you notice that if you set up questions in the right way, you can make the toddler seem to want almost anything.

Part of the problem is that the “helping” agent has to be bigger in some sense in order to be more capable; but this seems to imply that the “helped” agent can’t be a very good supervisor for the “helper”.

For example, updateless decision theory eliminates dynamic inconsistencies in decision theory by, rather than maximizing expected utility of your action given what you know, maximizing expected utility of reactions to observations, from a state of ignorance.

Appealing as this may be as a way to achieve reflective consistency, it creates a strange situation in terms of computational complexity: If actions are type $A$, and observations are type $O$, reactions to observations are type $O \to A$, a much larger space to optimize over than $A$ alone. And we’re expecting our smaller self to be able to do that!
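To get a feel for the size gap, here is a small sketch that enumerates every reaction pattern (every function from observations to actions) for finite sets. The action and observation labels are placeholders; the only fact used is that there are as many such functions as the number of actions raised to the number of observations.

```python
from itertools import product

def all_policies(actions, observations):
    """Enumerate every function from observations to actions, as dicts."""
    return [dict(zip(observations, choice))
            for choice in product(actions, repeat=len(observations))]

actions = ["left", "right"]
observations = ["o1", "o2", "o3"]

policies = all_policies(actions, observations)
print(len(actions), "actions, but", len(policies), "reaction patterns")

# The count grows exponentially in the number of distinct observations.
for n_obs in (3, 10, 100):
    print(n_obs, "observations ->", len(actions) ** n_obs, "policies")
```

Even with two actions, a hundred distinguishable observations already give more policies than could ever be enumerated one by one.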

This seems bad.

One way to more crisply state the problem is: We should be able to trust that our future self is applying its intelligence to the pursuit of our goals without being able to predict precisely what our future self will do. This criterion is called Vingean reflection.

For example, you might plan your driving route before visiting a new city, but you do not plan your steps. You plan to some level of detail, and trust that your future self can figure out the rest.

Vingean reflection is difficult to examine via classical Bayesian decision theory because Bayesian decision theory assumes logical omniscience. Given logical omniscience, the assumption “the agent knows its future actions are rational” is synonymous with the assumption “the agent knows its future self will act according to one particular optimal policy which the agent can predict in advance”.

We have some limited models of Vingean reflection (see “Tiling Agents for Self-Modifying AI, and the Löbian Obstacle” by Yudkowsky and Herreshoff). A successful approach must walk the narrow line between two problems:

The Löbian Obstacle: Agents who trust their future self because they trust the output of their own reasoning are inconsistent.

The Procrastination Paradox: Agents who trust their future selves without reason tend to be consistent but unsound and untrustworthy, and will put off tasks forever because they can do them later.

The Vingean reflection results so far apply only to limited sorts of decision procedures, such as satisficers aiming for a threshold of acceptability. So there is plenty of room for improvement, getting tiling results for more useful decision procedures and under weaker assumptions.

However, there is more to the robust delegation problem than just tiling and Vingean reflection.

When you construct another agent, rather than delegating to your future self, you more directly face a problem of value loading.

4.2. Goodhart’s law

The main problems in the context of value loading:

We don’t know what we want.

Optimization amplifies slight differences between what we say we want and what we really want.

The misspecification-amplifying effect is known as Goodhart’s law, named for Charles Goodhart’s observation: “Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.”

When we specify a target for optimization, it is reasonable to expect it to be correlated with what we want—highly correlated, in some cases. Unfortunately, however, this does not mean that optimizing it will get us closer to what we want—especially at high levels of optimization.

There are (at least) four types of Goodhart: regressional, extremal, causal, and adversarial.

Regressional Goodhart happens when there is a less than perfect correlation between the proxy and the goal. It is more commonly known as the optimizer’s curse, and it is related to regression to the mean.

An example of regressional Goodhart is that you might draft players for a basketball team based on height alone. This isn’t a perfect heuristic, but there is a correlation between height and basketball ability, which you can make use of in making your choices.

It turns out that, in a certain sense, you will be predictably disappointed if you expect the general trend to hold up as strongly for your selected team.

Stated in statistical terms: an unbiased estimate of $y$ given $x$ is not an unbiased estimate of $y$ when we select for the best $x$. In that sense, we can expect to be disappointed when we use $x$ as a proxy for $y$ for optimization purposes.
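A small simulation can make the disappointment concrete. This is a sketch under assumptions of my own choosing: the goal is standard normal, the proxy is the goal plus independent standard normal noise, and we always pick the candidate with the highest proxy. The function name and all parameter values are illustrative.

```python
import random

def simulate_selection(n_candidates=1000, n_trials=200, noise_sd=1.0, seed=0):
    """Pick the candidate with the best proxy; return (mean proxy, mean goal) of the picks."""
    rng = random.Random(seed)
    total_proxy = 0.0
    total_goal = 0.0
    for _ in range(n_trials):
        best_proxy, goal_of_best = float("-inf"), 0.0
        for _ in range(n_candidates):
            goal = rng.gauss(0, 1)                 # what we actually care about
            proxy = goal + rng.gauss(0, noise_sd)  # noisy but unbiased measurement
            if proxy > best_proxy:
                best_proxy, goal_of_best = proxy, goal
        total_proxy += best_proxy
        total_goal += goal_of_best
    return total_proxy / n_trials, total_goal / n_trials

mean_proxy, mean_goal = simulate_selection()
print(f"average proxy of the winner: {mean_proxy:.2f}")
print(f"average goal of the winner:  {mean_goal:.2f}")
```

With equal variances for signal and noise, the posterior mean of the goal given the proxy is half the proxy value, so the winner's true value should come out at roughly half of what the proxy promised.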

(The graphs in this section are hand-drawn to help illustrate the relevant concepts.)

Using a Bayes estimate instead of an unbiased estimate, we can eliminate this sort of predictable disappointment. The Bayes estimate accounts for the noise in $x$, bending toward typical $y$ values.

This doesn’t necessarily allow us to get a better $y$ value, since we still only have the information content of $x$ to work with. However, it sometimes may. If $y$ is normally distributed with variance $1$, and $x$ is $y \pm 10$ with even odds of $+$ or $-$, a Bayes estimate will give better optimization results by almost entirely removing the noise.
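Here is a sketch of a case where the Bayes estimate does help. I assume the goal is standard normal and the proxy is the goal shifted up or down by a fixed offset of 10 with equal odds; the offset, the function names, and the trial counts are illustrative choices. Because the two possible shifts are so far apart, the posterior almost always identifies which one happened.

```python
import math
import random

OFFSET = 10.0  # assumed size of the two-point noise

def bayes_estimate(x: float) -> float:
    """Posterior mean of the goal given proxy x, for goal ~ N(0, 1) and x = goal +/- OFFSET."""
    y_plus = x - OFFSET   # the goal's value if the noise was +OFFSET
    y_minus = x + OFFSET  # the goal's value if the noise was -OFFSET
    w_plus = math.exp(-0.5 * y_plus ** 2)    # prior density (up to a constant)
    w_minus = math.exp(-0.5 * y_minus ** 2)
    return (y_plus * w_plus + y_minus * w_minus) / (w_plus + w_minus)

def compare_selection(n_candidates=100, n_trials=200, seed=0):
    """Average true value when selecting by raw proxy versus by Bayes estimate."""
    rng = random.Random(seed)
    raw_total = bayes_total = 0.0
    for _ in range(n_trials):
        ys = [rng.gauss(0, 1) for _ in range(n_candidates)]
        xs = [y + rng.choice((-OFFSET, OFFSET)) for y in ys]
        raw_pick = max(range(n_candidates), key=lambda i: xs[i])
        bayes_pick = max(range(n_candidates), key=lambda i: bayes_estimate(xs[i]))
        raw_total += ys[raw_pick]
        bayes_total += ys[bayes_pick]
    return raw_total / n_trials, bayes_total / n_trials

mean_raw, mean_bayes = compare_selection()
print(f"select by raw proxy:      {mean_raw:.2f}")
print(f"select by Bayes estimate: {mean_bayes:.2f}")
```

Selecting on the raw proxy effectively searches only the candidates that happened to get the upward shift, while selecting on the Bayes estimate searches all of them.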

Regressional Goodhart seems like the easiest form of Goodhart to beat: just use Bayes!

However, there are two big problems with this solution:

Bayesian estimators are very often intractable in cases of interest.

It only makes sense to trust the Bayes estimate under a realizability assumption.

A case where both of these problems become critical is computational learning theory.

It often isn’t computationally feasible to calculate the Bayesian expected generalization error of a hypothesis. And even if you could, you would still need to wonder whether your chosen prior reflected the world well enough.

In extremal Goodhart, optimization pushes you outside the range where the correlation exists, into portions of the distribution which behave very differently.

This is especially scary because it tends to involve optimizers behaving in sharply different ways in different contexts, often with little or no warning. You might not be able to observe the proxy breaking down at all when you have weak optimization, but once the optimization becomes strong enough, you can enter a very different domain.

The difference between extremal Goodhart and regressional Goodhart is related to the classical interpolation/extrapolation distinction.

Because extremal Goodhart involves a sharp change in behavior as the system is scaled up, it’s harder to anticipate than regressional Goodhart.
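As a toy illustration of that sharp change, consider a made-up goal that tracks the proxy for small inputs and then turns over. Both functions and the search limits below are hypothetical; "stronger optimization" is modeled simply as searching a wider range.

```python
def true_utility(x: float) -> float:
    """Hypothetical goal: rises at first, then falls once x passes 5."""
    return x - 0.1 * x * x

def proxy(x: float) -> float:
    """Hypothetical proxy: tracks the goal closely only for small x."""
    return x

def optimize(search_limit: float, steps: int = 1000) -> float:
    """Grid-search the proxy over [0, search_limit]; a larger limit means stronger optimization."""
    grid = [search_limit * i / steps for i in range(steps + 1)]
    return max(grid, key=proxy)

weak_choice = optimize(search_limit=2.0)
strong_choice = optimize(search_limit=20.0)

for label, x in (("weak", weak_choice), ("strong", strong_choice)):
    print(f"{label:6s} x={x:5.1f}  proxy={proxy(x):6.2f}  goal={true_utility(x):6.2f}")
```

Within the narrow range, nothing looks wrong: more proxy means more of the goal. The failure only shows up once the search is allowed to leave the region where the relationship was observed.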

As in the regressional case, a Bayesian solution addresses this concern in principle, if you trust a probability distribution to reflect the possible risks sufficiently well. However, the realizability concern seems even more prominent here.

Can a prior be trusted to anticipate problems with proposals, when those proposals have been highly optimized to look good to that specific prior? Certainly a human’s judgment couldn’t be trusted under such conditions—an observation which suggests that this problem will remain even if a system’s judgments about values perfectly reflect a human’s.

We might say that the problem is this: “typical” outputs avoid extremal Goodhart, but “optimizing too hard” takes you out of the realm of the typical.

But how can we formalize “optimizing too hard” in decision-theoretic terms?

Quantilization offers a formalization of “optimize this some, but don’t optimize too much”.

Imagine a proxy $V(x)$ as a “corrupted” version of the function we really want, $U(x)$. There might be different regions where the corruption is better or worse.

Suppose that we can additionally specify a “trusted” probability distribution $P(x)$, for which we are confident that the average error is below some threshold $c$.

By stipulating $P$ and $c$, we give information about where to find low-error points, without needing to have any estimates of $U$ or of the actual error at any one point.

When we select actions from $P$ at random, we can be sure regardless that there’s a low probability of high error.
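One way to make "low probability of high error" precise is Markov's inequality. The notation here is mine: write $P$ for the trusted distribution, $c$ for the bound on its average error, and $e(x) \ge 0$ for the error at action $x$ (for instance, the absolute gap between proxy and goal).

```latex
\Pr_{x \sim P}\bigl[\, e(x) \ge t \,\bigr]
  \;\le\; \frac{\mathbb{E}_{x \sim P}[\, e(x) \,]}{t}
  \;\le\; \frac{c}{t}
  \qquad \text{for any } t > 0 .
```

So an error ten times larger than the average bound can occur with probability at most one in ten, whatever the shape of the error.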

So, how do we use this to optimize? A quantilizer samples from $P$ but discards all but the top fraction $f$, as ranked by the proxy; for example, the top 1%. In this visualization, I’ve judiciously chosen a fraction that still has most of the probability concentrated on the “typical” options, rather than on outliers.

By quantilizing, we can guarantee that if we overestimate how good something is, we’re overestimating by at most $c/f$ in expectation. This is because in the worst case, all of the overestimation was concentrated in the top fraction $f$ of options.
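The bound can be derived in a couple of lines. Again the notation is mine: $P$ is the trusted distribution, $c$ bounds its average error, $f$ is the fraction kept, $e(x) \ge 0$ is the error at $x$, $S$ is the set of top-ranked actions with $P(S) = f$, and $Q_f$ is the quantilizer's distribution, that is, $P$ conditioned on $S$.

```latex
Q_f(x) \;=\; \frac{P(x)\,\mathbf{1}[x \in S]}{f} \;\le\; \frac{P(x)}{f}
\quad\Longrightarrow\quad
\mathbb{E}_{x \sim Q_f}[\, e(x) \,]
  \;=\; \sum_x Q_f(x)\, e(x)
  \;\le\; \frac{1}{f} \sum_x P(x)\, e(x)
  \;\le\; \frac{c}{f} .
```

Equality in the middle step requires all of the error mass to sit inside $S$, which is exactly the worst case described above.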

We can therefore choose an acceptable risk level, $r$, and set the parameter $f$ as $c/r$.
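A minimal sketch of a sampling-based quantilizer follows. It approximates "the top fraction of the trusted distribution" by drawing a finite batch of samples, which is an approximation I am introducing; the function names, the uniform base distribution, and the identity proxy in the usage example are all hypothetical.

```python
import math
import random

def fraction_for_risk(avg_error_bound: float, acceptable_risk: float) -> float:
    """Fraction to keep so that expected overestimation is at most acceptable_risk."""
    return min(1.0, avg_error_bound / acceptable_risk)

def quantilize(sample_base, proxy, fraction, n_samples=1000, rng=None):
    """Draw from the trusted base distribution, keep the top `fraction` by proxy, pick one at random."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    rng = rng or random.Random()
    candidates = [sample_base(rng) for _ in range(n_samples)]
    candidates.sort(key=proxy, reverse=True)
    keep = max(1, math.ceil(fraction * n_samples))
    return rng.choice(candidates[:keep])

# Usage: trusted distribution is uniform on [0, 1], proxy is the value itself.
rng = random.Random(0)
f = fraction_for_risk(avg_error_bound=0.01, acceptable_risk=0.1)
action = quantilize(lambda g: g.uniform(0.0, 1.0), lambda x: x, f, rng=rng)
print(f"fraction kept: {f:.2f}, chosen action: {action:.3f}")
```

Setting `fraction` to 1 recovers plain sampling from the trusted distribution, and shrinking it toward 0 approaches ordinary maximization of the proxy, with the guarantee loosening accordingly.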

Quantilization is in some ways very appealing, since it allows us to specify safe classes of actions without trusting every individual action in the class—or without trusting any individual action in the class.

If you have a sufficiently large heap of apples, and there’s only one rotten apple in the heap, choosing randomly is still very likely safe. By “optimizing less hard” and picking a random good-enough action, we make the really extreme options low-probability. In contrast, if we had optimized as hard as possible, we might have ended up selecting from only bad apples.

However, this approach also leaves a lot to be desired. Where do “trusted” distributions come from? How do you estimate the expected error $c$, or select the acceptable risk level $r$? Quantilization is a risky approach because $r$ gives you a knob to turn that will seemingly improve performance, while increasing risk, until (possibly sudden) failure.

Additionally, quantilization doesn’t seem likely to tile. That is, a quantilizing agent has no special reason to preserve the quantilization algorithm when it makes improvements to itself or builds new agents.

So there seems to be room for improvement in how we handle extremal Goodhart.

Another way optimization can go wrong is when the act of selecting for a proxy breaks the connection to what we care about. Causal Goodhart happens when you observe a correlation between proxy and goal, but when you intervene to increase the proxy, you fail to increase the goal because the observed correlation was not causal in the right way.

An example of causal Goodhart is that you might try to make it rain by carrying an umbrella around. The only way to avoid this sort of mistake is to get counterfactuals right.
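The umbrella example can be sketched as a tiny simulation that contrasts observing with intervening. The probabilities are invented for illustration: it rains on 30% of days, and people carry an umbrella 90% of the time when it rains and 10% of the time otherwise.

```python
import random

def rain_rate_on_umbrella_days(n=20000, force_umbrella=None, seed=0):
    """Fraction of umbrella days on which it rains, with or without forcing the umbrella."""
    rng = random.Random(seed)
    rainy_umbrella_days = 0
    umbrella_days = 0
    for _ in range(n):
        rain = rng.random() < 0.3  # rain does not depend on the umbrella
        if force_umbrella is None:
            # Observational regime: the umbrella responds to the rain.
            umbrella = rng.random() < (0.9 if rain else 0.1)
        else:
            # Interventional regime: we set the umbrella ourselves.
            umbrella = force_umbrella
        if umbrella:
            umbrella_days += 1
            rainy_umbrella_days += rain
    return rainy_umbrella_days / umbrella_days

observed = rain_rate_on_umbrella_days()
intervened = rain_rate_on_umbrella_days(force_umbrella=True)
print(f"P(rain | umbrella seen):   {observed:.2f}")
print(f"P(rain | umbrella forced): {intervened:.2f}")
```

The observed correlation is strong, but forcing the umbrella leaves the rain rate at its base level, because the causal arrow runs from rain to umbrella and not the other way.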

This might seem like punting to decision theory, but the connection here enriches robust delegation and decision theory alike.

Counterfactuals have to address concerns of trust due to tiling concerns—the need for decision-makers to reason about their own future decisions. At the same time, trust has to address counterfactual concerns because of causal Goodhart.

Once again, one of the big challenges here is realizability. As we noted in our discussion of embedded world-models, even if you have the right theory of how counterfactuals work in general, Bayesian learning doesn’t provide much of a guarantee that you’ll learn to select actions well, unless we assume realizability.

Finally, there is adversarial Goodhart, in which agents actively make our proxy worse by intelligently manipulating it.

This category is what people most often have in mind when they interpret Goodhart’s remark. And at first glance, it may not seem as relevant to our concerns here. We want to understand in formal terms how agents can trust their future selves, or trust helpers they built from scratch. What does that have to do with adversaries?

The short answer is: when searching in a large space which is sufficiently rich, there are bound to be some elements of that space which implement adversarial strategies. Understanding optimization in general requires us to understand how sufficiently smart optimizers can avoid adversarial Goodhart. (We’ll come back to this point in our discussion of subsystem alignment.)

The adversarial variant of Goodhart’s law is even harder to observe at low levels of optimization, both because the adversaries won’t want to start manipulating until after test time is over, and because adversaries that come from the system’s own optimization won’t show up until the optimization is powerful enough.

These four forms of Goodhart’s law work in very different ways—and roughly speaking, they tend to start appearing at successively higher levels of optimization power, beginning with regressional Goodhart and proceeding to causal, then extremal, then adversarial. So be careful not to think you’ve conquered Goodhart’s law because you’ve solved some of them.

4.3. Stable pointers to value

Besides anti-Goodhart measures, it would obviously help to be able to specify what we want precisely. Remember that none of these problems would come up if a system were optimizing what we wanted directly, rather than optimizing a proxy.

Unfortunately, this is hard. So can the AI system we’re building help us with this?

More generally, can a successor agent help its predecessor solve this? Maybe it can use its intellectual advantages to figure out what we want?

AIXI learns what to do through a reward signal which it gets from the environment. We can imagine humans have a button which they press when AIXI does something they like.

The problem with this is that AIXI will apply its intelligence to the problem of taking control of the reward button. This is the problem of wireheading.

This kind of behavior is potentially very difficult to anticipate; the system may deceptively behave as intended during training, planning to take control after deployment. This is called a “treacherous turn”.

Maybe we build the reward button into the agent, as a black box which issues rewards based on what is going on. The box could be an intelligent sub-agent in its own right, which figures out what rewards humans would want to give. The box could even defend itself by issuing punishments for actions aimed at modifying the box.

In the end, though, if the agent understands the situation, it will be motivated to take control anyway.

If the agent is told to get high output from “the button” or “the box”, then it will be motivated to hack those things. However, if you run the expected outcomes of plans through the actual reward-issuing box, then plans to hack the box are evaluated by the box itself, which won’t find the idea appealing.
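The contrast can be sketched with a two-plan toy world. Everything here is hypothetical: the `Outcome` type, the two box functions, and the reward values. The only difference between the two agents is which box scores the predicted outcome: whatever box will exist after the plan runs, or the box as it is now.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Outcome:
    task_done: bool
    box_hacked: bool

def original_box(outcome: Outcome) -> float:
    """The reward-issuing box as built: it rewards the task and nothing else."""
    return 1.0 if outcome.task_done else 0.0

def hacked_box(outcome: Outcome) -> float:
    """What the box would emit after being tampered with."""
    return 100.0

# Predicted outcome of each available plan (a stand-in for a world model).
PLANS = {
    "do_task": Outcome(task_done=True, box_hacked=False),
    "hack_box": Outcome(task_done=False, box_hacked=True),
}

def reward_signal_agent(plans) -> str:
    """Maximizes the signal it expects to receive from whatever box exists afterward."""
    def predicted_signal(outcome: Outcome) -> float:
        box = hacked_box if outcome.box_hacked else original_box
        return box(outcome)
    return max(plans, key=lambda name: predicted_signal(plans[name]))

def observation_utility_agent(plans, utility=original_box) -> str:
    """Scores every predicted outcome with the current, unmodified box."""
    return max(plans, key=lambda name: utility(plans[name]))

print("reward-signal agent picks:      ", reward_signal_agent(PLANS))
print("observation-utility agent picks:", observation_utility_agent(PLANS))
```

The second agent sees that a hacked box goes along with an undone task, and the current box assigns that outcome no value, so the hacking plan loses.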

Daniel Dewey calls the second sort of agent an observation-utility maximizer. (Others have included observation-utility agents within a more general notion of reinforcement learning.)

I find it very interesting how you can try all sorts of things to stop an RL agent from wireheading, but the agent keeps working against it. Then, you make the shift to observation-utility agents and the problem vanishes.

However, we still have the problem of specifying $U$. Daniel Dewey points out that observation-utility agents can still use learning to approximate $U$ over time; we just can’t treat $U$ as a black box. An RL agent tries to learn to predict the reward function, whereas an observation-utility agent uses estimated utility functions from a human-specified value-learning prior.

However, it’s still difficult to specify a learning process which doesn’t lead to other problems. For example, if you’re trying to learn what humans want, how do you robustly identify “humans” in the world? Merely statistically decent object recognition could lead back to wireheading.