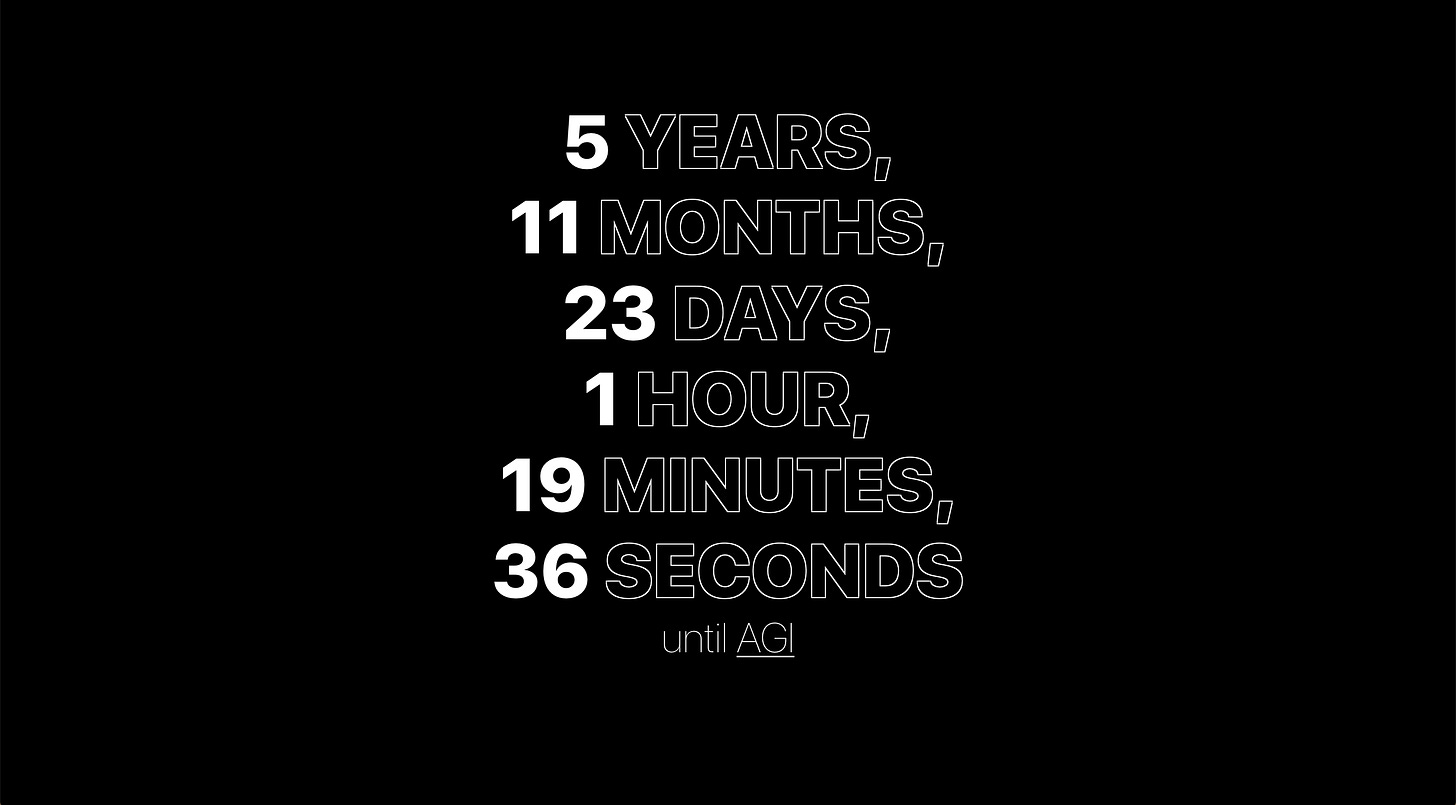

I made this clock, counting down the time left until we build AGI:

It uses the most famous Metaculus prediction on the topic, inspired by several recent dives in the expected date. Updates are automatic, so it reflects the constant fluctuations in collective opinion.

Currently, it’s sitting in 2028, i.e. the end of the next presidential term. The year of the LA Olympics. Not so far away.

There were a few motivations behind this project:

Civilizational preparedness. Many people are working on making sure this transition is a good one. Many more probably should be. I don’t want to be alarmist, but the less abstract we can make the question, the better. In this regard, it’s similar to the Doomsday Clock.

Personal logistics. I frequently find myself making decisions about long-term projects that would be deeply affected by the advent of AGI. Having kids, for example.

The prediction is obviously far from absolute, and I’m not about to stop saving more than 5 years and 11 months of living expenses. But it’s good to be reminded that the status quo is no longer the best model for the future.

Savoring the remainder. Most likely, AGI will be the beginning of the end for humanity. Not to say that we will necessarily go extinct, but we will almost definitely stop being “human,” in the recognizable/traditional sense.

For many years, I’ve used the Last Sunday as my new tab page. It shows you how many Sundays you have left in your life, if you live to an average age. I’ve gotten some strange looks, when it accidentally pops up during a presentation. I know it seems morbid, like a fixation on the end. But I don’t see it that way; it’s not about the end, but the finitude of the middle. That precious scarcity.

I’ve spent a lot of time thinking about the meaning of being human, but this mostly dissolves that angst. It’s like: if I live in San Francisco, my daily decisions about what to do here are impossibly broad. But if I’m a tourist, visiting for a few days, then my priority is to do all of the most “San Francisco” things I can: see the Golden Gate, eat a burrito in the Mission, sit among purple-red succulents on a foggy beach cliff.

So, if I only have X years left of being human, I should focus on doing the most human things I can. Whatever that means to me. Conveniently, this applies to the worlds with and without AGI, since in the latter, I’ll still die. But the shorter timeline makes it more real.

Let me know if you have any feedback or suggestions.

Why did you use the weak AGI question? Feels like a motte-and-bailey to say “x time until AGI” but then link to the weak AGI question.

I picked it because it has the most predictions and is frequently pointed to as an indicator of big shifts. But you’re right, I should work on adding an option to use the strong question instead; I can see why people might prefer that.

I added the ability to switch to the strong question! You can do it here.

Agreed.

The Weak AGI question on Metaculus could be solved tomorrow and very little would change about your life; it is certainly not worth “reflecting on being human” etc.

Not exactly. Don’t get me wrong, this clock is bad, which is why I downvoted it strongly, but it does matter if they solve it tomorrow or the next decade. It would give them quite a few capabilities that they lack right now, though I still agree with you that this clock is bad.

Eliezer seems to think that the shift from proto-AGI to AGI to ASI will happen really fast, and many of us on this site agree with him.

Thus it’s not sensible that there is a decade gap between “almost AI” and AI on Metaculus. If I recall, Turing (I think?) said something similar: that once we know the way to generate even some intelligence, things get very fast after that (heavily paraphrased).

So 2028 really is the beginning of the end if we really do see proto-AGI then.

I think this is a great project! I’d love to see a version of this with both strong and weak AI. I’d also like to see a section listing caveats, so that it is less likely to be dismissed as alarmism.

Well, there it is.

AI risk has gone from underhyped to overhyped.

New to the site, just curious: are you that Roko? If so, then I’d like to extend a warm welcome-back to a legend.

Although I’m not deeply informed on the matter, I’d also happen to agree with you 100% here. I really think most AI risk can be heavily curtailed to fully prevented by just making sure it’s very easy to terminate the project if it starts causing damage.

I like the idea and the rationale. I’ll admit I rarely put much stock in quantifications of the future (let alone quantifications crowdsourced by an audience whose qualifications cannot be ascertained). But, I think it would be fascinating to reflect back to this clock come 2028 and if AGI has not been agreeably achieved by then to ask ourselves “Well, how far have we come and how much longer do we suppose it will take?”.

What I don’t understand is why you’re convinced that the introduction of AGI will result in you personally becoming transhuman.

“Beginning of the end” doing some (/probably too much) heavy lifting there. I think it’s the inflection point in a process that will end with transhumanism. And my personal projected timeline is quite short, after that initial shift.

I’m planning to add an option for the strong AI question; I should probably just make it possible to point to arbitrary Metaculus predictions. That way someone could use a more direct transhumanism one, if they want.

Fun (for some version of “fun”).

There’s obvious connections to other countdown clocks, like the nuclear doomsday one. But here’s a fun connection to think about. The World Transhumanist Association used to have a “death clock” on their website that counted up deaths so you could see how many people died while you were busy browsing their site. Given that AGI maybe could lead to technological breakthroughs that end death (or could kill everyone), maybe there’s something in the space of how many more people have to die before we get the tech that can save them?

IDK, just spitballing here.

I like this a lot! I am also the kind of person to use a new tab death clock, though your post inspired me to update it to my own AI timeline (~10 years).

I briefly experimented with using New Tab Redirect to set your site as my new tab page, but I think it takes a smidgen longer to load haha (it needs to fetch the Metaculus API or something?)

I’m so glad the idea resonates with you!

You’re exactly right about the slightly slow loading — it’s on my list to fix, but I wanted to get something out there. :)

Okay, it should be significantly faster now!

This is a very weak definition even for weak AGI on the Metaculus question. This seems quite easy to game in the same way all the previous AI questions were gamed. I wouldn’t be too impressed if this happened tomorrow. This does not seem like a sufficient amount of generality to be an AGI.

The Loebner silver prize is the only even vaguely interesting requirement, but I have deep doubts as to the validity of the Turing test (and this particular variant of the test apparently isn’t even running anymore). I’d have to trust the judges for this to mean anything, and Metaculus would have to trust completely unknown judges for this question to resolve when it should, not when they think there will be such hype. Fooling what are likely to be cooperative judges just isn’t impressive. (People have been intentionally fooling themselves with chatbots since at least Eliza.) People fooling themselves about AI has been in the news a lot ever since GPT-2. (In my opinion, its capabilities as stated by even the more reasonable commentariat have only finally been matched this year, when Chinchilla came out.)

I know I already extend a great deal of charity when interpreting things through text, and will give the benefit of the doubt to anyone who can string words together (which is what AIs are currently best at) and never look for proof that the other side isn’t thinking. They will likely be fooled many years before the chatbot has significant reasoning ability (which is highly correlated with ability to string words together in humans, but not in machines). Unless the judges are very careful, and no one knows who would be judging, and we have no reason to trust that they would be selected for the right reasons.

Separately, there has been little evidence that using Metaculus leads to correct results about even vaguely unknowable things. It may or may not reflect current knowledge well depending on the individual market, but the results have never seemed to work much above chance. I don’t engage with it much personally, but everything I’ve seen from it has been very wrong.

Third note, even if this was accurate timing for weak AGI, the true indications are that a human level AGI would not suddenly become a god level AI. There is no reason to believe that recursive self-improvement is even a thing that could happen at a software level at a high rate of speed in something that wasn’t already a superintelligence, so it would rely on very slow physical processes out in the real world if it took an even vaguely significant level of resources to make said AI in the first place. We are near many physical limits, and new kinds of technology will have to be developed to get even vaguely quick increases to how big the models can be, which will be very slow for a human level being that has to wait on physical experiments.

Fourth, the design of the currently successful paradigm does not allow the really interesting capabilities anyway. (Lack of memory, lack of grounding by design, etc.) The RL agents I think are used for things like Go and videogame capabilities are more interesting, but there doesn’t seem to be any near future path where those are the basis of a unified model that performs writing successfully rather than just a thing to manipulate a language model with. (We could probably make a non-unified model today that would do these things if we just spent a lot of money, but it wouldn’t be useful.)

Because of this, you will likely die of the same things as would be expected in a world where we didn’t pursue AI, and at similar times. It might affect things in the margin, but only moderately.

Note: I only realized this was a couple months old after writing a large portion of this.

I like the idea of visualizing progress towards a future milestone. However, the presentation as a countdown seems problematic. A countdown implicitly promises that something interesting will happen when the countdown reaches 0, as seen with New Year’s countdowns, launch countdowns, countdowns to scheduled events, etc. But, here, because of the uncertainty, it’s vanishingly unlikely that anything interesting will happen at the moment the countdown completes. (Not only might it not have happened yet, but it might have happened already!)

I can’t think of a way to fix this issue. Representing the current uncertainty in Metaculus’s probability distribution is already hard enough, and the harder thing is to respond to changes in the Metaculus prediction over time. It might be that countdowns inherently only work with numbers known to high levels of precision.

Thanks for putting this together.

Hey! Thanks, I found myself checking your website almost every day, and it was awesome, but now it is not working.

So I made my own based on your design: https://countdowntoai.com. I hope it’s ok with you.

Reading the comments, I’m going to add the option to choose between the Metaculus weak and strong AGI predictions.

Thanks again!

Hey! Sorry the site was down so long; I accidentally let the payment lapse. It’s back up and should stay that way, if you’d still like to use it. I also added a page where you can toggle between the predictions.

But no worries if you’re happy with your own. :)

EDIT: I just realized you only posted this 2 days ago! I didn’t see your version before fixing the site; I had actually just logged in to update anyone who cared. :P

Downvoted for being misleading, and I’ve got to agree with Roko that the question this is based upon isn’t really what is being claimed here. It matters, but please at least try not to be misleading.

It shows 5 years 12 months (instead of 6 years 0 months), which seems to be the same kind of a bug I pointed out 23 days ago.

Thanks for pointing this out, and sorry for not monitoring the comments more closely. It was a rounding error—should be fixed now.
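For anyone curious how a “5 years 12 months” display can arise: a minimal sketch, not the site’s actual code, assuming the countdown is split into units using a fixed average year of 365.2425 days. If each unit is computed separately and rounded, the months can round up to 12 without carrying into the years. Peeling units off with integer `divmod`, largest first, avoids this, because each remainder is always smaller than the unit just removed. The names `breakdown`, `YEAR`, and `MONTH` are made up for illustration.

```python
# Unit sizes in whole seconds. YEAR assumes an average Gregorian year
# (365.2425 days), which happens to be an exact integer number of seconds.
YEAR = 31_556_952
MONTH = YEAR // 12  # 2_629_746 seconds, an average month
DAY = 86_400
HOUR = 3_600
MINUTE = 60


def breakdown(total_seconds: int) -> dict[str, int]:
    """Split a duration into years, months, days, hours, minutes, seconds.

    Uses floor division only, so months can never reach 12 and
    days/hours/minutes/seconds never overflow their unit either.
    """
    remaining = max(0, int(total_seconds))
    parts: dict[str, int] = {}
    units = (("years", YEAR), ("months", MONTH), ("days", DAY),
             ("hours", HOUR), ("minutes", MINUTE))
    for name, size in units:
        # divmod returns (how many whole units fit, what is left over)
        parts[name], remaining = divmod(remaining, size)
    parts["seconds"] = remaining
    return parts


if __name__ == "__main__":
    print(breakdown(7 * YEAR))      # exactly 7 years, everything else 0
    print(breakdown(7 * YEAR - 1))  # 6 years 11 months ..., never 12 months
```

The trade-off is that “month” here is an average month rather than a calendar month, which seems fine for a clock whose target is this uncertain anyway.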

It’s ok, I figured you were doing something else. Happy to help!

Great countdown, I check it every few days.

Currently, it shows

6 YEARS, 12 MONTHS, 3 DAYS, 6 HOURS, 23 MINUTES, 49 SECONDS

I’m thinking it should be 7 years instead of 6 years 12 months?

Looks awesome! Maybe there could be extended UI that tracks the recent research papers (sorta like I did here) or SOTA achievements. But maybe that would ruin the smooth minimalism of the page.

So, it pattern matches to the infamous doomsday clock, and the precision down to 1 second with no error bars implies the author skipped the basics in high school math. Instant loss of credibility.

Yeah, the fake precision is definitely silly, but I thought the ticking helped get across the underlying message (i.e. this timeline is most likely finite, and shorter than it may feel). This is more about that emotional reminder; Metaculus is obviously the best place for the full info, including crucial historical trends.

Maybe each time you refresh the page, the clock could draw a sample from the probability distribution on Metaculus to figure out when it’s counting down to.
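A rough sketch of what that could look like, assuming the community distribution is available as a handful of (date, cumulative probability) points. The `CDF` values below are invented placeholders, not real Metaculus data, and `sample_date` is a hypothetical helper. It uses inverse-transform sampling: draw a uniform number, find the CDF segment that contains it, and interpolate linearly between that segment’s two dates.

```python
import bisect
import random
from datetime import date, timedelta

# Placeholder CDF points (date, cumulative probability), sorted by date.
# These numbers are made up for illustration, NOT real Metaculus data.
CDF = [
    (date(2025, 1, 1), 0.0),
    (date(2028, 1, 1), 0.5),
    (date(2040, 1, 1), 0.9),
    (date(2100, 1, 1), 1.0),
]


def sample_date(cdf, u=None):
    """Draw one target date from a piecewise-linear CDF.

    u is a uniform number in [0, 1]; if omitted, a fresh random one is drawn.
    """
    if u is None:
        u = random.random()
    probs = [p for _, p in cdf]
    # Index of the first CDF point whose probability exceeds u,
    # clamped so that cdf[i - 1] and cdf[i] are both valid points.
    i = min(max(bisect.bisect_right(probs, u), 1), len(cdf) - 1)
    (d0, p0), (d1, p1) = cdf[i - 1], cdf[i]
    # Linear interpolation inside the segment (guarding flat segments).
    frac = (u - p0) / (p1 - p0) if p1 > p0 else 0.0
    return d0 + timedelta(days=round(frac * (d1 - d0).days))


if __name__ == "__main__":
    # Each page load would count down to a different sampled date.
    for _ in range(3):
        print(sample_date(CDF))
```

With this, the median of the placeholder distribution (`u = 0.5`) maps to 2028-01-01, and repeated refreshes would scatter around it, which makes the uncertainty visible without adding error bars to the clock.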

Ahem: