Emergence Spirals—what Yudkowsky gets wrong

Before proposing the following ideas and critiquing those of Yudkowsky, I want to acknowledge that I’m no expert in this area—simply a committed and (somewhat) rigorous speculator. This is not meant as a “smack down” (of a post written 18 years ago) but rather a broadening of the discussion, offering new, more quantifiable measures of emergence for your consideration.

In his 2007 post The Futility of Emergence, Eliezer Yudkowsky argues that the term ‘emergence’ is used vaguely and misleadingly, as a mysterious explanation rather than a real one.

“… after the answer of “Why? Emergence!” is given, the phenomenon is still a mystery and possesses the same sacred impenetrability it had at the start.”

He critiques the tendency to describe complex macroscopic patterns (like consciousness or whirlpools) as if they have independent causal power, when in reality, they are just the predictable consequences of underlying mechanisms. Where large-scale patterns can be reduced to their component interactions, Yudkowsky suggests we should focus on these underlying mechanisms.

On this blog, we care about explaining complexity without resorting to either mysticism or reductionist dismissal. Curious people want to see the deeper patterns that shape real systems, so we can make sense of the world and reason more effectively. But in our series on Emergence, I’ve leaned heavily on the idea of emergent complexity to make sense of phenomena that are reducible to underlying mechanisms. I disagree with Yudkowsky that this need be misleading or vague. This post, as per usual, will take a cross-disciplinary view, drawing from systems theory, science, history and philosophy. So, let’s get specific.

Not Everything is Emergent

I agree with Yudkowsky that it is possible to overuse the term and apply it to anything that emerges from underlying mechanisms. So, it’s important to distinguish what does and doesn’t qualify as ‘emergent’.

I’d like to make the case that emergent complexity is where…

a whole system is more complex than the sum of its parts

a system is more closely aligned with a macroscopic phenomenon than with its component parts.

So, when we look at an eye, we can see that it can be understood as something that fits the purpose of producing a picture of the physical world. Microscopically it is a configuration of cells, just like a big toe or a testicle, but macroscopically it is more like a camera.

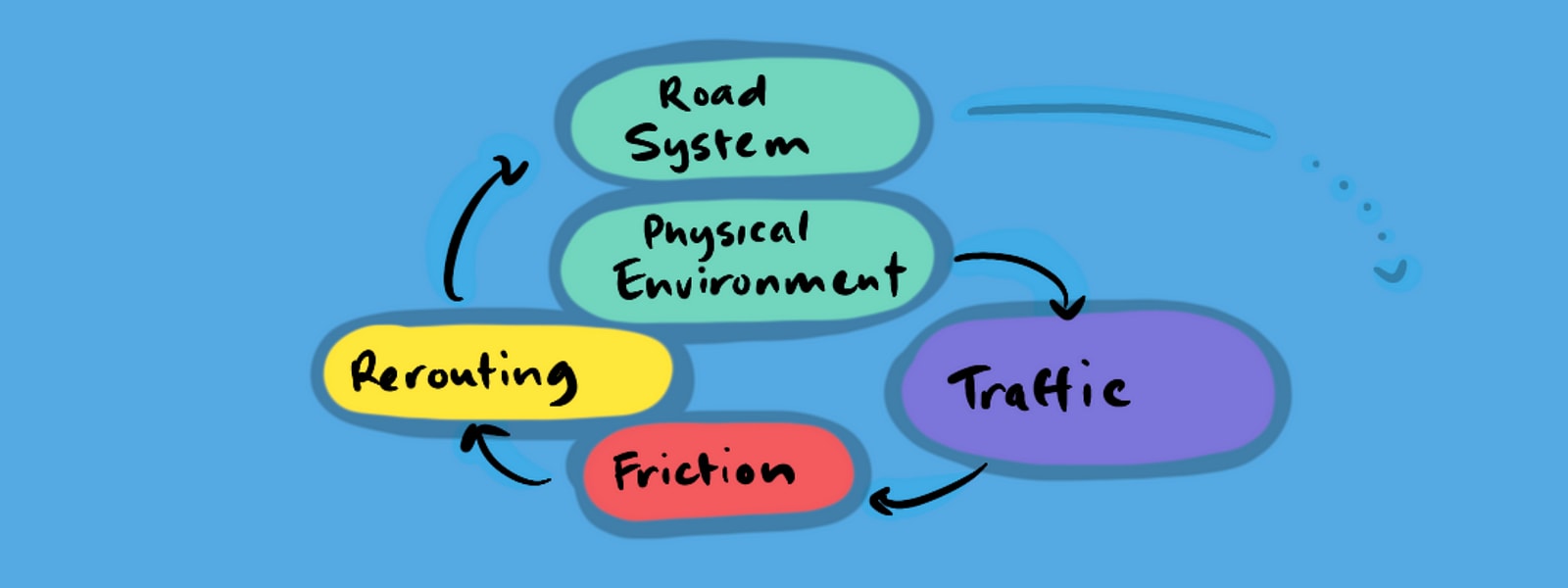

On the other hand, the majority of phenomena that emerge from a system aren’t “emergent”, in that they do not add complexity, but rather decrease it, consistent with the second law of thermodynamics. Take the traffic system, for instance: it creates heat and noise, increasing entropy. Heat and noise are properties of the system, but they are not emergent properties of the system.

Emergent systems can be generally distinguished by their (apparent) inverse relationship to entropy.

Convergence

The traffic system creates noise and heat, but it also creates a level of friction and inefficiency, which leads to roading measures that eventually make the roading system more efficient. This will predictably happen, because inefficiency is a constant pressure on the system to change in a particular direction.

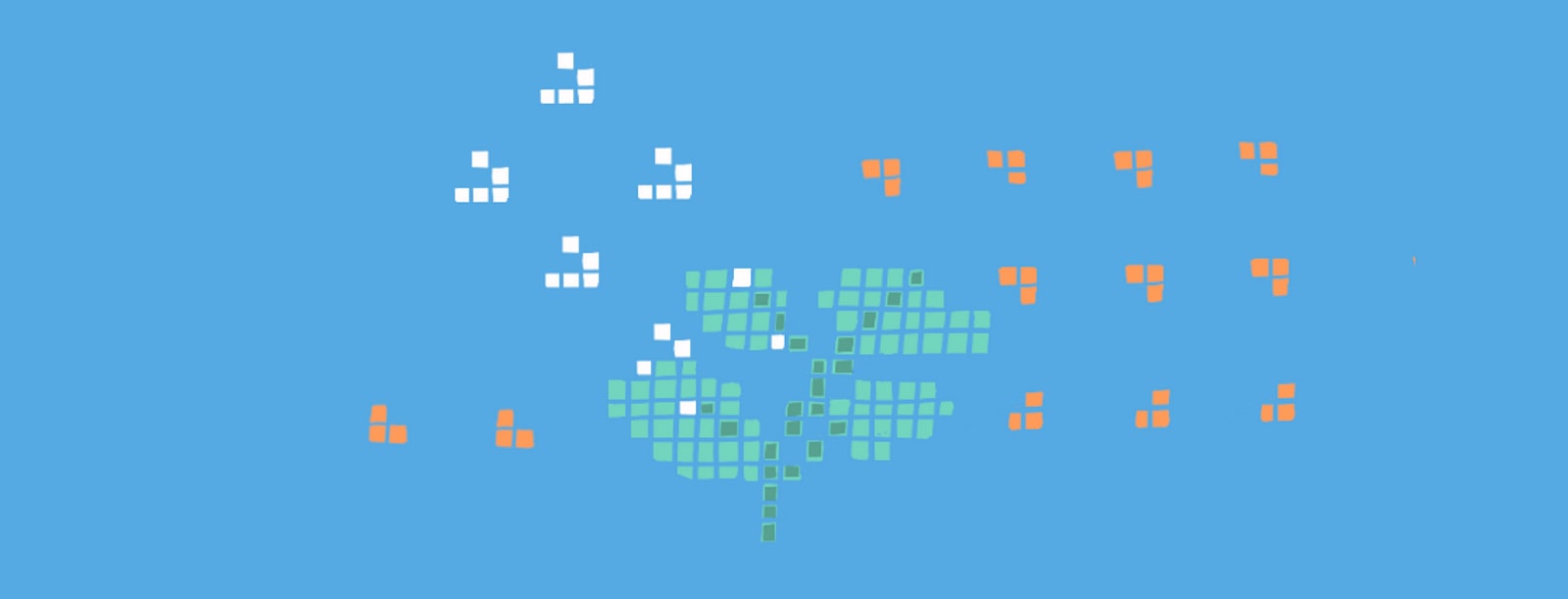

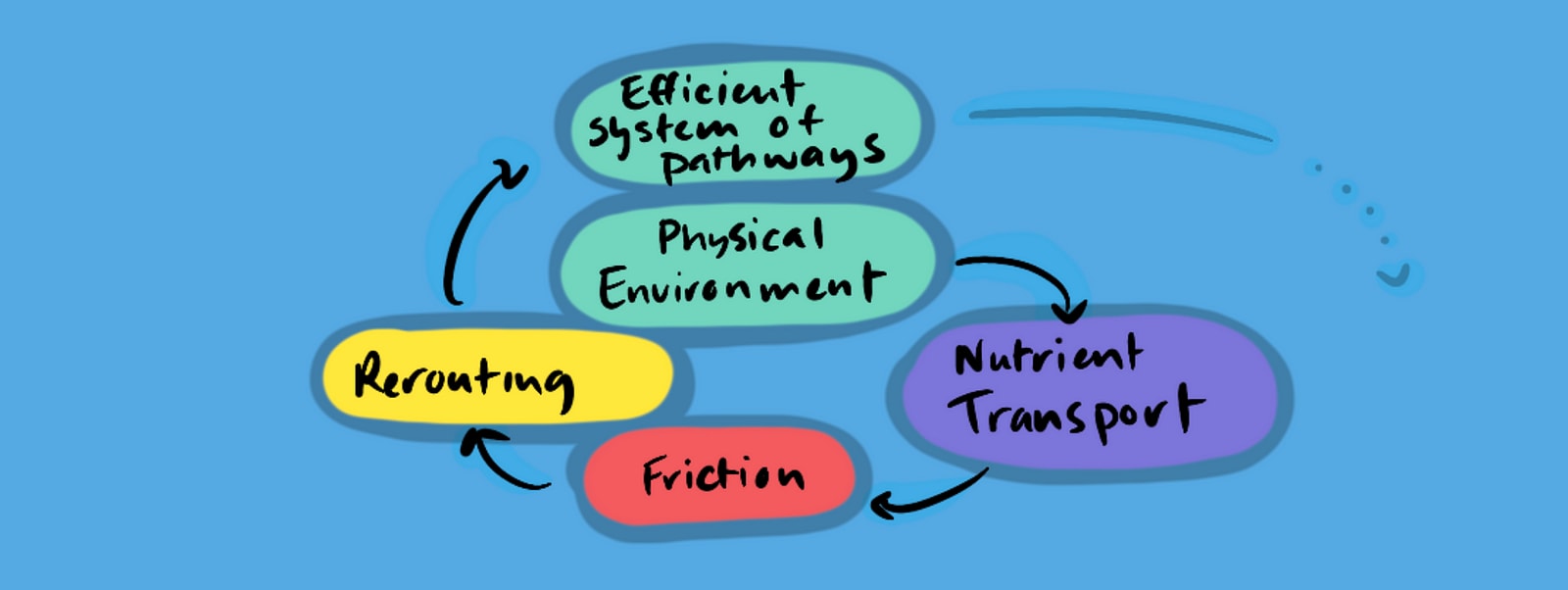

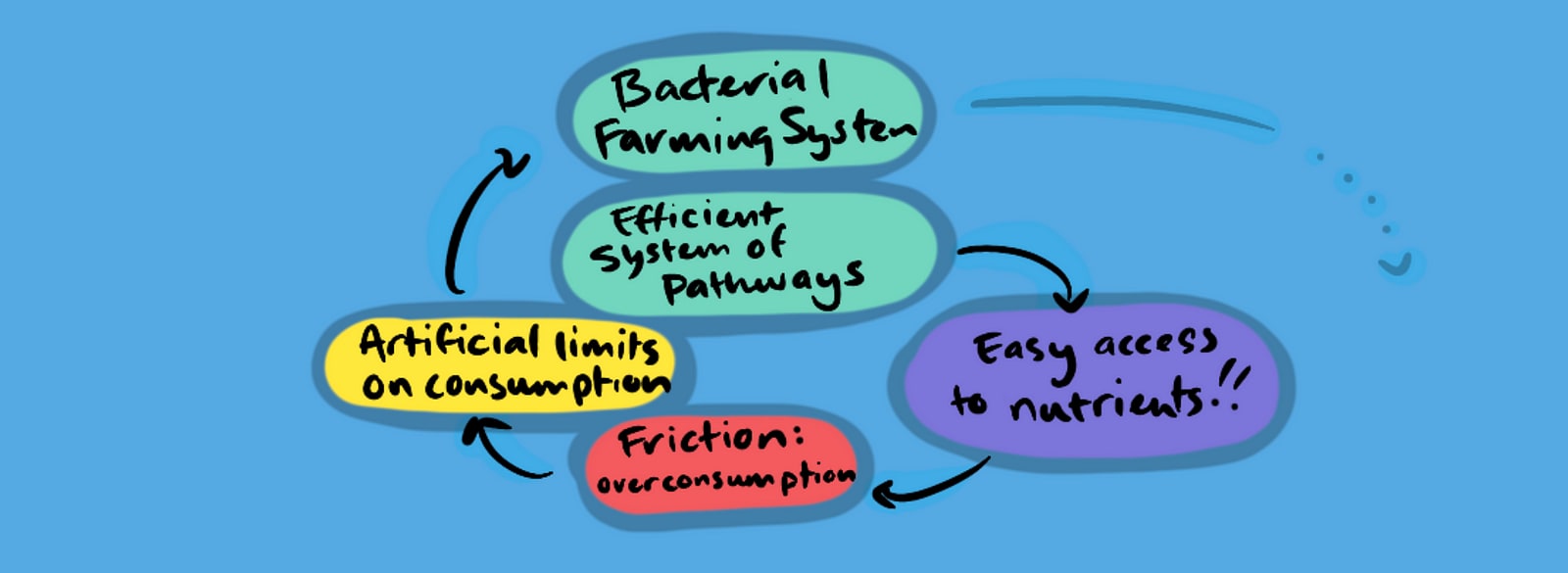

Now, one could say these changes are the result of the collective decisions of intelligent individuals, and are therefore not genuinely emergent. That is, until we see the same patterns of efficiency echo throughout nature independent of intelligence, specifically in the nutrient pathways of slime molds.

When searching for food in a sparse environment, the slime mold Physarum polycephalum naturally forms networks of protoplasmic tubes that connect nutrient sources with astonishing efficiency. Studies reveal that the slime mold’s network design can closely resemble the layout of the Tokyo rail system.

Convergent Evolution

It is said that the eye has developed independently in more than 20 different species. This is due to the supreme benefit of vision for the survival and reproduction of a species and the transmission of those genes. But if we take Yudkowsky’s perspective (emergent phenomena are merely reducible to their component parts and are therefore not real in any meaningful sense), then aren’t we wrong to call these 20+ varieties “eyes” at all, or to call what they do “seeing”, given that they are made of entirely different components?

It is because we recognise them converging on a common function at the macroscopic scale that it makes sense to call them “eyes”. The same is true of other convergent phenomena, like the slime mold and traffic systems.

Nature Answers Questions

This convergence is a phenomenon that is best understood from a macroscopic perspective—as an answer to the question “What is the most efficient system of pathways?” (for slime mold and traffic) or “what physical objects are in my vicinity?” (for vision). These questions can be answered by various different systems.
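To make the pathway "question" a little more concrete, here is a minimal sketch in Python. It rests on a loud assumption: that "most efficient" means "shortest total length of connections", which is only one possible reading, and real slime mold and rail networks also trade length against things like robustness that this ignores. The function name `efficient_network` and the four sample points are hypothetical, chosen purely for illustration.

```python
import math


def efficient_network(points):
    """Connect all points with the least total length (Prim's algorithm).

    Assumption for illustration only: efficiency == total edge length.
    Returns (edges, total_length), where each edge is a pair of indices.
    """
    if len(points) < 2:
        return [], 0.0
    connected = {0}          # start growing the network from the first point
    edges = []
    total = 0.0
    while len(connected) < len(points):
        # Pick the shortest link from the existing network to a new point.
        length, i, j = min(
            (math.dist(points[i], points[j]), i, j)
            for i in connected
            for j in range(len(points))
            if j not in connected
        )
        connected.add(j)
        edges.append((i, j))
        total += length
    return edges, total


# Hypothetical food sources (or stations) at the corners of a unit square.
sources = [(0, 0), (1, 0), (1, 1), (0, 1)]
edges, total = efficient_network(sources)
print(edges, total)
```

The point of the sketch is only that the same question can be posed to, and answered by, very different substrates: a few lines of code, a city's planners, or a slime mold's protoplasmic tubes.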

Design?

At this point it’s important to note that a system answering a question, or fitting a macroscopic scaffold or a specified “function”, is a teleological perspective that might seem to suggest design: a top-down force determining the shape of a system. And this might be an interpretation Yudkowsky wants to avoid altogether, giving it a wide berth by denying the macroscopic view of a system. But this is unnecessary caution. We can acknowledge macroscopic systems (a top-down perspective) without denying their microscopic (bottom-up) origins. We understand that the emergent system of evolution gives an “illusion of design”; it doesn’t require the existence of a designer.

One might say that the “design” process itself is actually an emergent outcome of neural activity, analogous to evolution anyway… (so even design isn’t really design)

In the cases mentioned so far, the function of the system means that it is “more closely aligned with a macroscopic phenomenon than with its component parts” (the slime mold is aligned with the same macroscopic structure as the traffic system, the eye is aligned with the physical environment) rather than with the complicated configuration of the cells, neurones and electrical signals that comprise the system itself.

This recognition of convergence is a confirmation of emergence.

Substrates

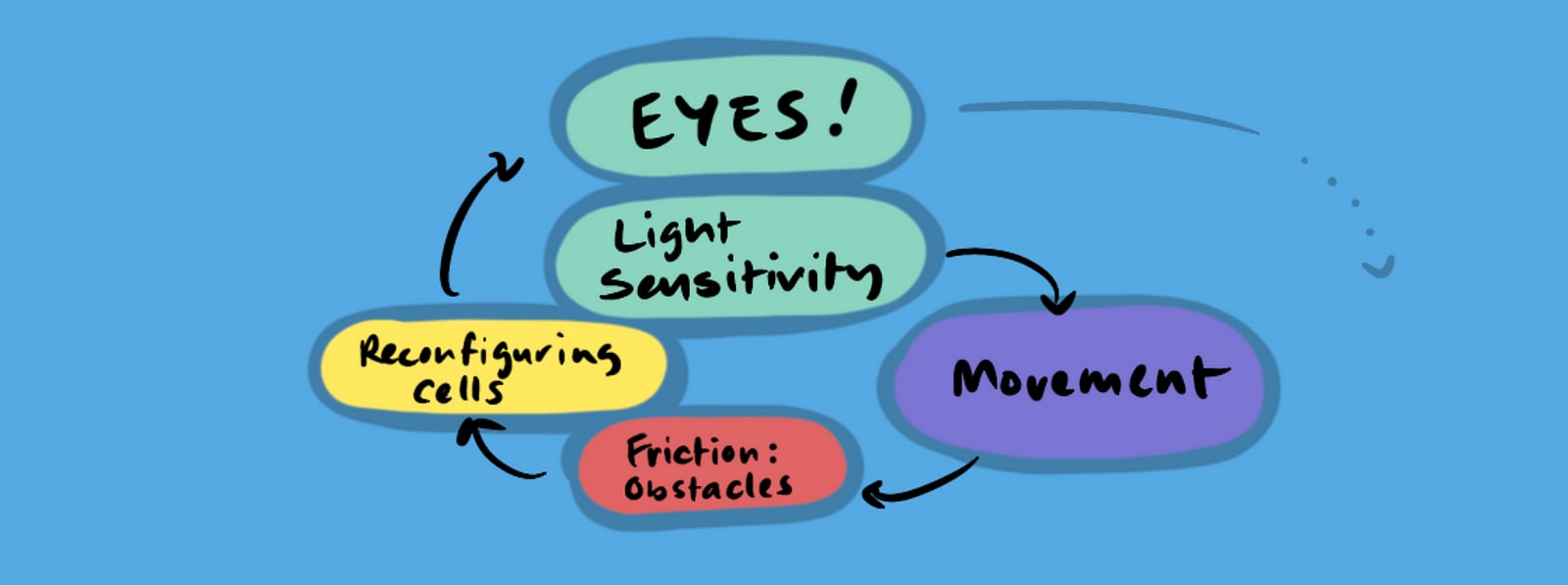

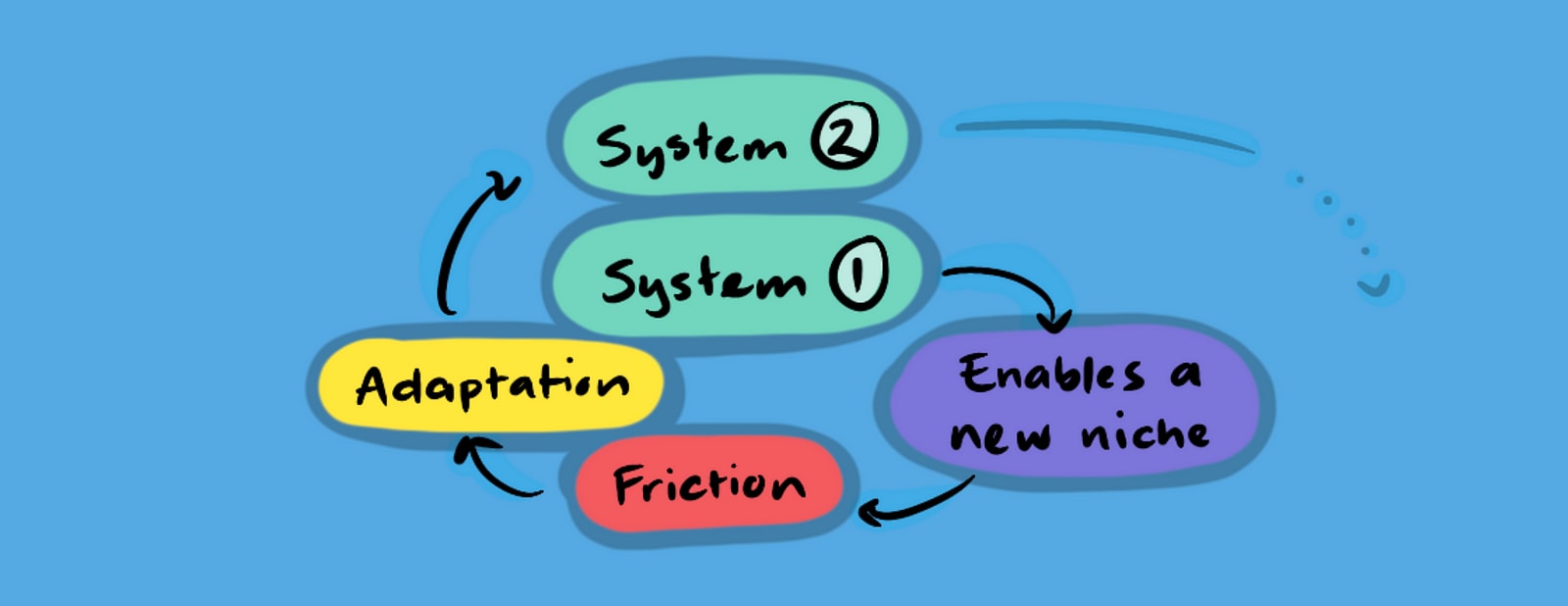

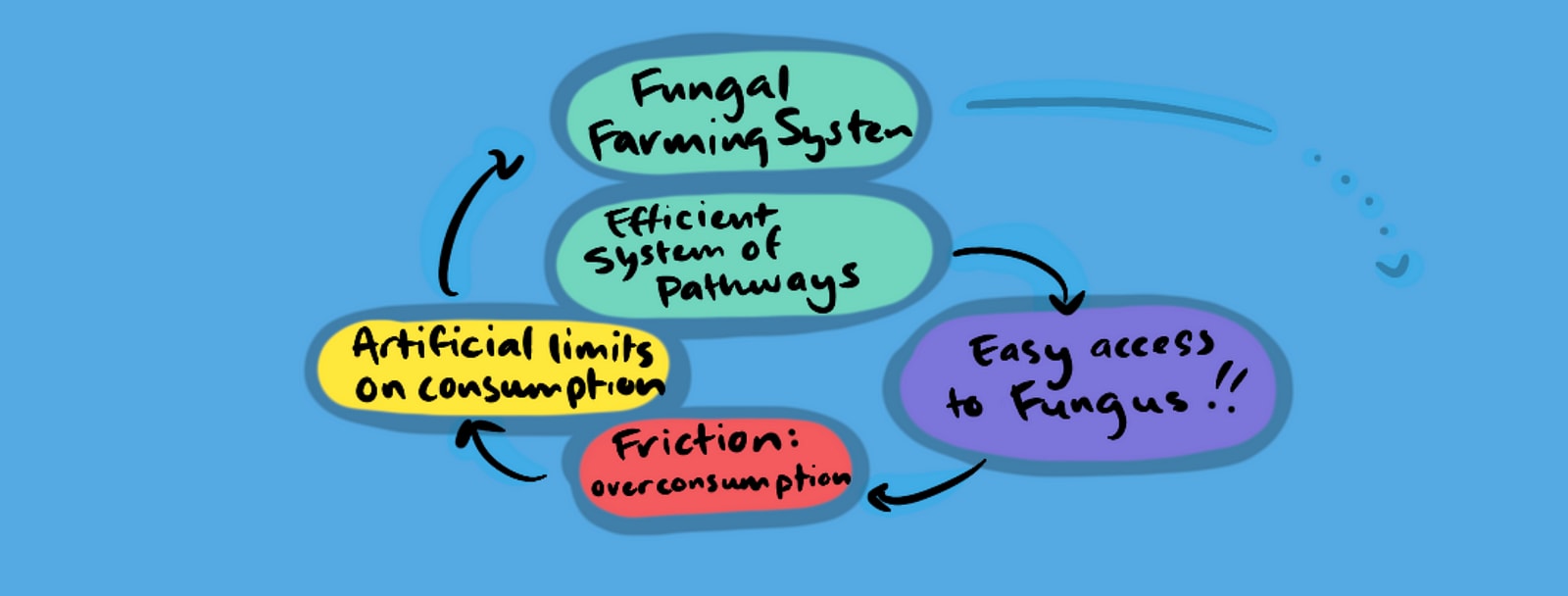

I’d like to make the case that a good measure of whether a system is emergent is not whether it is irreducible (no system is provably irreducible). Rather, something can be described as emergent when it has completed an emergent cycle: it has taken a system (the substrate) and, through exposure to some friction in the system, reliably generated a new phenomenon that reaches an equilibrium, which in turn creates a substrate or niche for something new to emerge.

To continue with the traffic and slime mold examples, once a system has reached a reliable state of efficiency, it becomes a substrate or niche for a new emergent cycle on top. A traffic system enables systems like mass food transportation, commerce and social networks not previously possible. Take the internet, another system of transportation (for data), and think of all the entirely new industries it spawned: e-commerce, web design, citizen journalism, influencers, even AI.

Similarly, the slime mold Dictyostelium discoideum has been found to have developed ‘primitive farming symbiosis’.

“Instead of consuming all bacteria in their patch, they stop feeding early and incorporate bacteria into their fruiting bodies. They then carry bacteria during spore dispersal and can seed a new food crop, which is a major advantage if edible bacteria are lacking at the new site.”—Nature

And this is not unique to slime molds. According to Nature, there is a…

“… striking convergent evolution between bacterial husbandry in social amoebas and fungus farming in social insects”

Again, we see convergence as a confirmation of emergence, specifically that an emergent cycle has taken place.

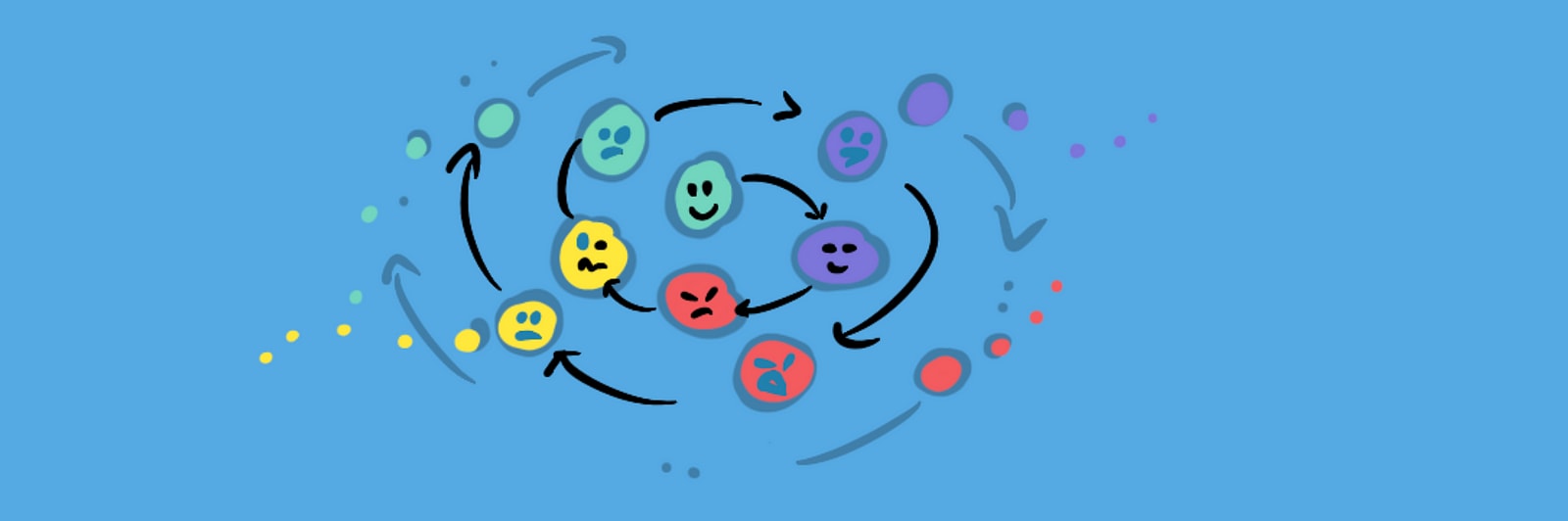

The Emergent Cycle

The way I see it, the emergent cycle has a specific structure that we see all around us in nature. We begin with a stable system, but stable systems exist in a world with friction, so at some point they are pathologically disturbed by some constant friction, driving the system out of balance, and it either dies or finds a new equilibrium which incorporates the disturbance, becoming the substrate for a new phenomenon.

There are a few ways we can look at how a friction is incorporated.

Adaptation: the whole system changes, to create a novel system

Symbiosis: the original system and pathology develop harmony

Hierarchy: the pathology becomes a new phenomenon dependent on the original system (equilibrium).

I see emergence as the third category, not mere adaptation or symbiosis, but layers of increasing complexity, each dependent on the one below.

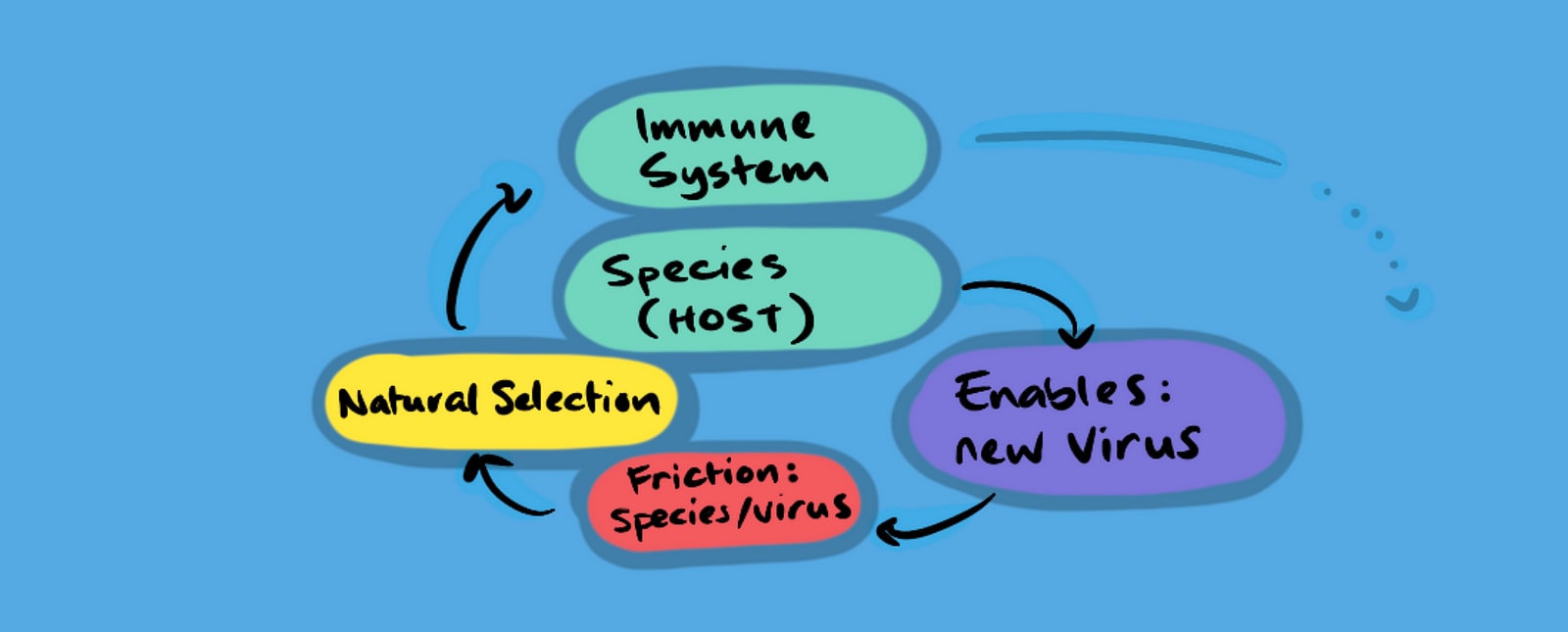

Immunity

A realm that develops in cycles that build on top of one another is immunity. In The Red Queen, Matt Ridley explains that not only can our immune system develop a memory of antibodies against diseases we’ve faced in our lifetime, but sexual reproduction also allows for a genetic memory, where resistance to certain diseases can be held dormant in our genes and rediscovered more efficiently when faced with the same threat generations later. Our genetic memory of history can help us better deal with the echoes of history. This cumulative cycle takes the form of a spiral, allowing for a new layer to be built atop the established substrate.

The Emergent Spiral

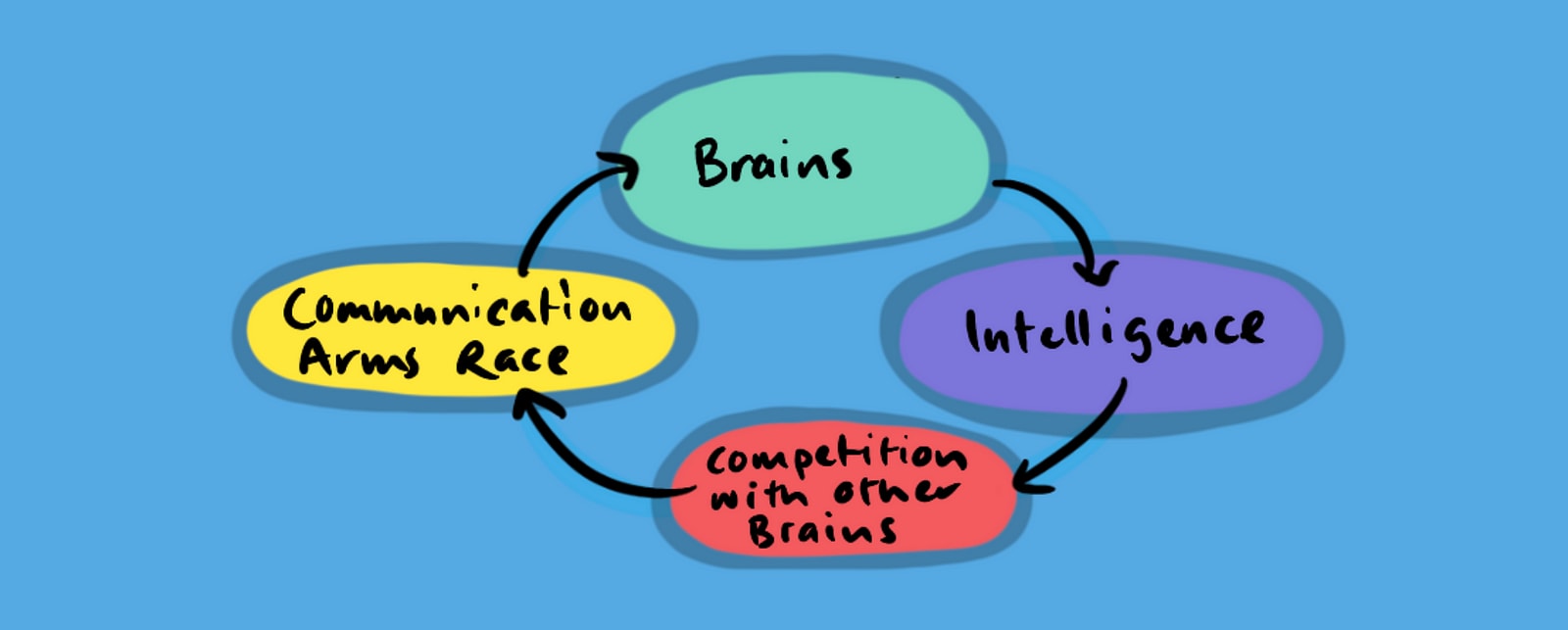

If we look at the canonical example of emergence—evolution by natural selection—we have something that can be generally adaptive rather than hierarchical, in that it creates more of the same rather than a new layer of complexity. We can see this as a feedback loop that increases capacity.

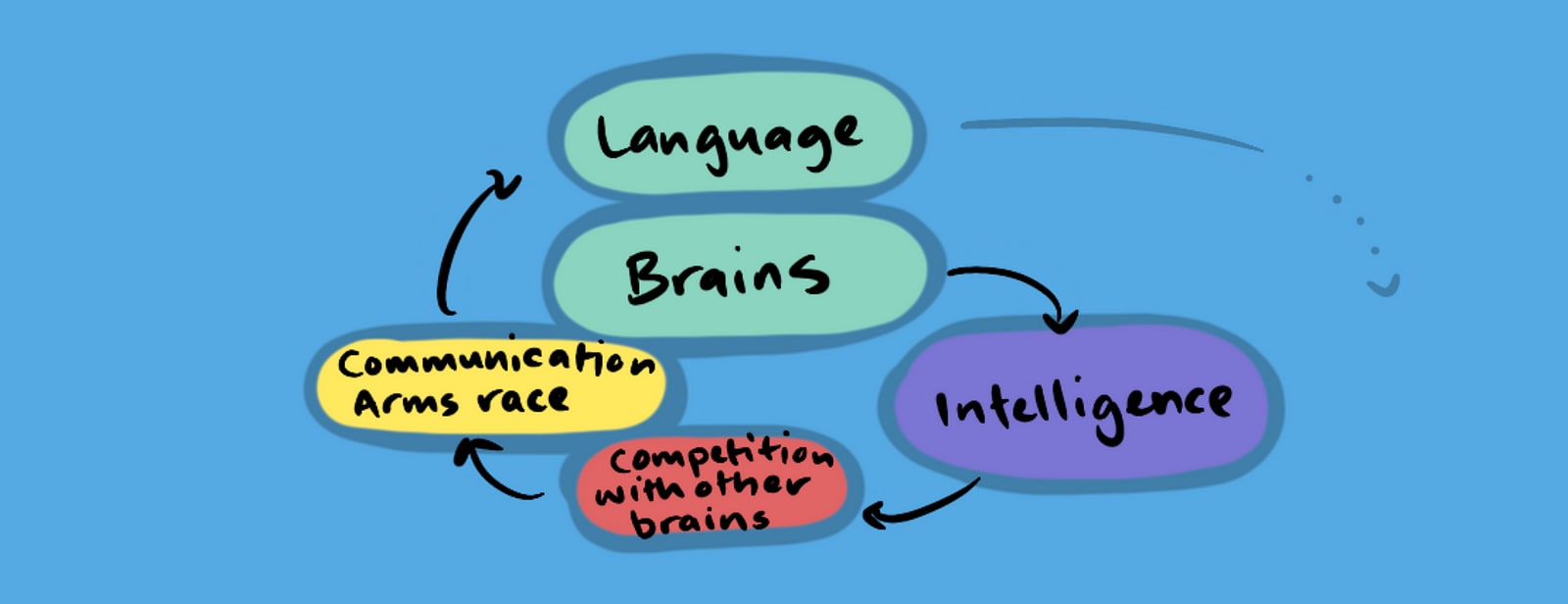

This is more an adaptive situation because one species does not become the substrate for a new phenomenon. But, often evolution yields hierarchical results—generating an emergent spiral.

Here we see an entirely different paradigm, ‘language’, emerge on top of an intelligent species. Language is categorically different from the biological species it is derived from, but it is dependent on it. This is an emergent phenomenon in the strongest sense.

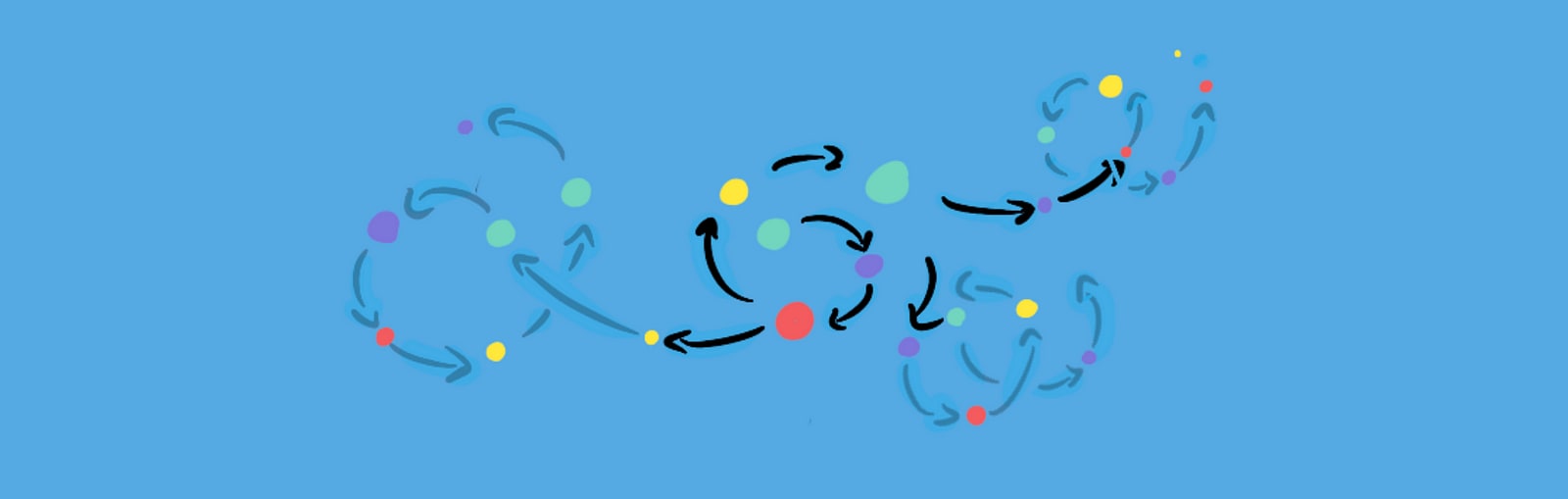

But this emergent spiral doesn’t only manifest in nature; these cycles are ubiquitous across different realms. As I’ve had this in the back of my head for a while, I’ve experienced the Baader-Meinhof phenomenon on many occasions, noticing parallels in philosophy, history, science and other fields.

History Repeats

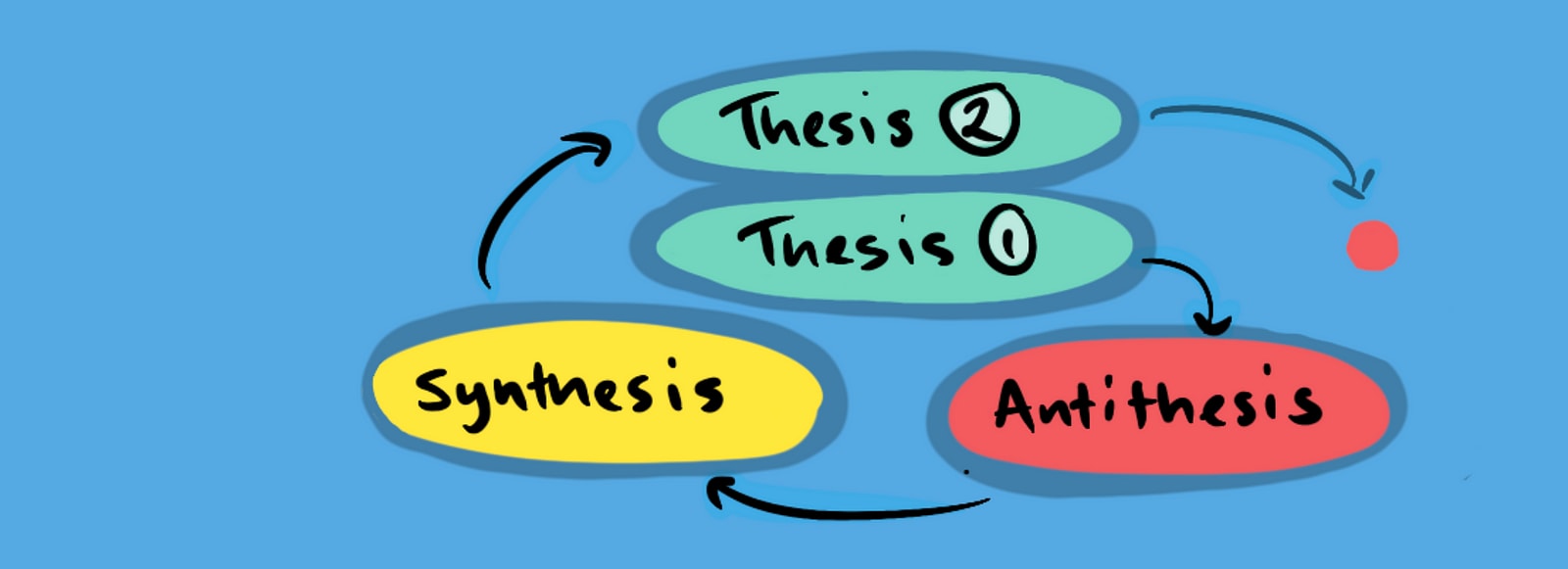

I think the clearest comes from Hegel (ironically, because Hegel is famously inscrutable). Hegel’s dialectic concerned how a concept (particularly one that exists in the messy real world of history) holds internal contradictions which will ultimately lead to internal conflict, and the transformation of the concept into something richer. It is commonly simplified in the form: Thesis > Antithesis > Synthesis.

Hegel’s classic model maps the evolution of political ideology and philosophical schools throughout history. It goes like this: simplistic ideas are vulnerable to argument (friction), and so they are forced to adapt, sometimes landing on entirely new paradigms. When a new paradigm gets ingrained and inflexible, it then falls victim to a type of Goodhart’s Law, becoming vulnerable, once again, to radical new ideas.

Although Hegel’s model looks like a cycle, it is actually a spiral, as it refers to History which moves chronologically—history repeats, but if we record and remember the lessons of history, cycles need not fold back exactly on themselves.

Scientific Discovery

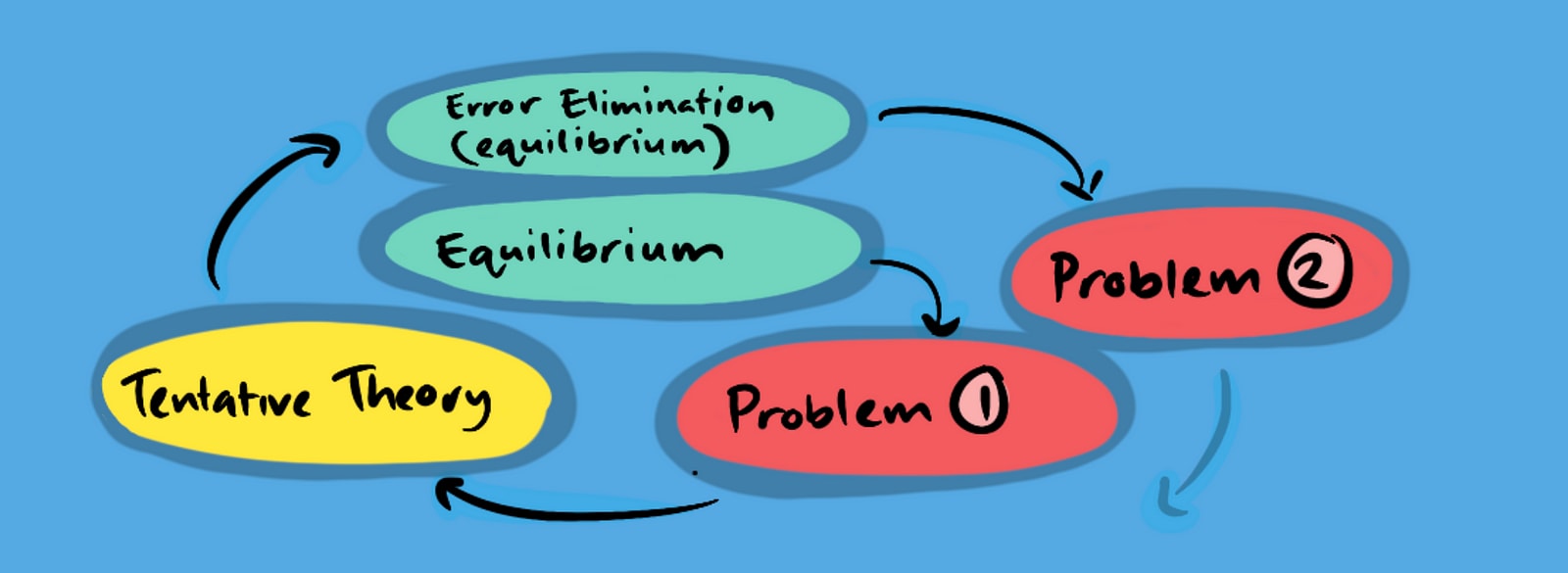

In the realm of science, Karl Popper has a related cycle regarding intellectual discovery, which he formulates as: P1 > TT > EE > P2.

In Popper’s intellectual autobiography Unended Quest, he holds that the accumulation of knowledge follows a similar loop to Hegel’s. You begin with a problem (P1), develop a tentative theory (TT), then eliminate the errors in that theory (EE, through the scientific method, peer review, etc.), creating a new equilibrium. And until we come up with a genuine theory of everything, the loose ends of that theory will inevitably lead to friction and a new problem (P2).

Unlike our cycle, Popper starts at the problem, but shift everything forward and you have the same cycle.

Popper also draws parallels between inorganic physics, biology and intellectual discovery and understands (as we have) organisms as problem-solving structures. My sense is that creative processes whether by nature or design follow this pattern.

Returning to Yudkowsky

Now that we have a sense of emergent cycles—what they are and what they are not—looking in detail at Yudkowsky’s criticisms, we see that his target is not actually emergence (as I understand it at least). Yudkowsky asserts:

Gravity arises from the curvature of spacetime, according to the specific mathematical model of General Relativity.

Here, Yudkowsky lampoons the case that gravity is an emergent property of the curvature of spacetime, and he is right to do so, but only because of the way he has formed the argument. Gravity does not emerge from the curvature of spacetime; gravity is the curvature of spacetime. General relativity requires the existence of mass but does not explain how mass exists. Theories of emergent gravity, like the one I’ve explored, target how mass itself arises and the resulting gravitational effects, rather than how gravity can be modelled.

Yudkowsky also takes aim at intelligence:

I have lost track of how many times I have heard people say, “Intelligence is an emergent phenomenon!” as if that explained intelligence.

I would argue the description of something as emergent doesn’t explain what something is, rather it fits it to a recognisable structure that can help us understand it better. By recognising the natural selection of neurones, through the environment of stimulus, we can understand it by analogy to evolution, another emergent cycle (that intelligence itself rests upon) and it then no longer…

… possesses the same sacred impenetrability it had at the start.

Is Emergence Magic?

Yudkowsky extends this (somewhat uncharitable) reading of emergence into a full straw man when he equates emergence with magic. And I think this helps to pinpoint the key concern he has when looking at the idea of emergent phenomena. I share this concern, that emergence…

… gives you a sacred mystery to worship. Emergence is popular because it is the junk food of curiosity. You can explain anything using emergence, and so people do just that; for it feels so wonderful to explain things.

As mentioned, I think this caution against attributing the properties of systems to design (or magic in this case) is unnecessary. We can understand phenomena in macroscopic terms like function, purpose, as a solution to a problem, or an answer to a question and we can recognise alignment with other macroscopic phenomena by analogy without denying the microscopic nature of causality. Emergence is a way of understanding complex systems that acknowledges that complexity arises out of particular sets of simple rules, in a way that seems wondrous… even magical, but is, by definition, not magical.
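As a small illustration of complexity arising from simple rules, here is a sketch using Conway's Game of Life. This is my own choice of example, not something the argument above depends on. Every cell follows the same two local rules, and nothing in those rules mentions movement, yet the five-cell "glider" pattern travels diagonally across the grid. The names `step` and `glider` are just labels for this sketch.

```python
from collections import Counter


def step(live):
    """Advance one generation. `live` is a set of (x, y) cells that are alive."""
    # Count, for every cell on the grid, how many live neighbours it has.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Rule 1: a cell with exactly 3 live neighbours is alive next generation.
    # Rule 2: a live cell with exactly 2 live neighbours stays alive.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


# The standard glider pattern (x grows rightwards, y grows downwards).
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)

# After four generations the same shape reappears, shifted one cell diagonally.
shifted = {(x + 1, y + 1) for (x, y) in glider}
print(state == shifted)
```

"The glider moves" is a macroscopic description that is fully reducible to the two rules, and still the most useful way to say what is happening.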

So…

I don’t doubt some people do use “emergence” as a way to curtail their own curiosity, and hand-wave over more complex and nuanced (and effortful) engagement with the world around us. But I don’t see this as the purpose of ‘emergence’ as a term. I see the term as a way of aligning our understanding of something novel that we might not yet fully comprehend with other macroscopic phenomena we do comprehend. By understanding emergence as a series of cycles that emerge from friction, adapt, reach equilibrium and become a substrate for the next cycle, we can better respond or cater to the needs of that system, seeing the forest despite the trees.

Originally published at https://nonzerosum.games.

Being more interested in discovering the sort of sources Eliezer was writing against but did not give examples of, than inventing a way of using the word “emergence” that would avoid that scorn, I googled “the power of emergence”. That seemed the sort of woo phrase that would likely come up in that sort of thing. Rich pickings, among which I found this:

So, no straw man at all. Am I unfairly chopping that off? The context is an interview with Steven Johnson, the author of “Emergence: The Connected Lives of Ants, Brains, Cities and Software” (2002). Here’s a longer extract:

There’s more, but that’s representative. Here’s the headline paragraph of the article:

And he gives us “magical”.

Here’s another, more serious review of the same book.

Here are a few more hits I found notable on “the power of emergence”:

First.

Second.

Third.

All of these are attributing powers to “emergence” itself, rather than the word simply being, as some commenters would have it, a name for the very ordinary phenomenon whereby some properties of a thing are not properties of any of its parts. If that were the way that the word was being understood, we would not find a computer being described as non-emergent but a termite cathedral as emergent. The mystery is being worshipped.

I don’t believe this is a useful endeavor. To put it simply, there is a ton of stupid stuff out there (most stuff that gets written is stupid), so picking a particular batch of bad statements to analyze gives very little evidence overall because they are unlikely to be representative of the entirety of writing or thinking on a matter. Particularly when your selection process is biased (through correlations between rhetoric and substance) towards finding precisely the stupidest of stuff among it.

This is entirely unsurprising in light of the above. A lot of stuff is written, most of it is bad, so no wonder many of the leading hits are bad. It would be truly surprising if this didn’t happen.

Yes, you absolutely would! In a sufficiently large dataset of words written by humans, you are almost always able to stumble upon some examples of any other trait you’re interested in… regardless of the overall distribution or the value of the highest-quality examples.

When Eliezer was railing against the mysterianism of the past, he was using the example of the world’s leading authorities, thinkers, and scientists engaging in (supposedly) bad epistemology. As an illustrative example, he wasn’t picking out a random article entry or book, but rather the words of Lord Kelvin as he argued for “elan vital.”

But then, when commenters rightly accused him of hindsight bias and making light of the genuine epistemological conundrums humanity was facing in the past, Eliezer-2007 engaged with a weakman. He argued the futility of emergence as a supposed microcosm for how the irrationality of today’s world results in grossly inadequate philosophy. But he didn’t engage with the philosophy itself, as presented by its leading proponents, but instead took shots at much shakier versions argued by confused and (frankly) stupider people.[1]

As I said above, the following exchange remains illustrative of this:

As an aside, it’s reflective of what Eliezer does these days as well: almost exclusively arguing with know-nothing e/acc’s on Twitter where he can comfortably defeat their half-baked arguments, instead of engaging with more serious anti-doom arguments by more accomplished and reasonable proponents.

I did not have to scrape any barrels to find those examples. They were all from the first page of Google hits. Steven Johnson’s book is not new-age woo. Of my First/Second/Third examples, the first is a respectable academic text, and only the third is outright woo. So I do not think that “the power of emergence” is optimised for finding nonsense (it was the only phrase I tried), nor am I depending on the vastness of the internet to find these. Certainly, my search was biased — or less tendentiously, intended — to find the sort of thing that might have been Eliezer’s target: not new-age woo, but the things respectable enough to be worth his noticing at all. Lacking the actual sources he had in mind, what I found does look like the right sort of thing. I notice that the Steven Johnson book predates his posting.

It’s worth quoting a little further:

In your footnote you say:

Selection effect again? I don’t look at Twitter, but I did notice that Eliezer recently gave a three-hour interview and wrote a book on the subject.

Consider the following two possible interpretations of the dialogue between Eliezer and Perplexed. First:

And second:

I rejected the first interpretation because I trust Eliezer’s intelligence enough that I don’t think he would go for a random non sequitur that reflects nothing about the topic at hand. anonymous’s interpretation doesn’t seem to be likely to be what Eliezer intended (and even if it is the correct interpretation, it just means Eliezer was engaging in a different, yet simpler error).

They still do not represent an “example of the world’s leading authorities, thinkers, and scientists engaging in (supposedly) bad epistemology,” as in the example Eliezer picked out of Lord Kelvin committing a supposedly basic epistemological error. And as a result of this, the argument Eliezer was implicitly making also fails.

Yeah, it’s probably a selection effect; the vast majority of Eliezer’s public communication comes on Twitter (unfortunate, but better than Facebook, I suppose...). Eliezer’s interview and podcast appearances, as well as (AFAIK) the book, also seem entirely geared towards a smart-but-not-technically-proficient-in-alignment audience as its primary target, in line with MIRI’s focus on public outreach to more mainstream audiences and institutions.

Nevertheless, having not read the book itself, I should suspend judgement on it for now.

A lot of trouble could have been saved by distinguishing strong and weak emergence.

Thanks for your comment, but I think it misses the mark somewhat.

While googling to find someone who expresses a straw-man position in the real world is a form of straw-manning itself, this comment goes further, misreading a colloquial use of the word “magical” as a claim of literal (supernatural) “magic”.

While I haven’t read the book referenced, the quotes provided do not give enough context to claim that the author doesn’t mean what he obviously means (to me at least): that the development of an emergent phenomenon seems magical… does it not seem magical? Seeming magical is not a claim that something is not reducible to its component parts, it just means it’s not immediately reducible without some thorough investigation into the mechanisms at work. Part and parcel of the definition of emergence is that it is a non-magical (bottom-up) way of understanding phenomena that seem remarkable (magical), which is why he uses a clearly non-supernatural system like an anthill to illustrate it.

Despite all this, the purpose of the post was to give a clear definition of emergence that doesn’t fall into Yudkowsky’s strawman—not a claim that no one has ever used the word loosely in the past. As conceded in the preamble (paraphrasing) I don’t expect something written 18 years ago to perfectly reflect the conceptual landscape of today.

I am taking Eliezer’s word for it that he has encountered people seriously using the word “emergence” in the way that he criticises, that it is not a straw man. The sources I found bolster that view. (In the Steven Johnson interview I took him to be using “magical” figuratively, but as various people have pointed out, if someone thinks the world is weird, they’re weird for thinking that the world should carry on behaving just like the tiny fragment of it they know about so far.) To respond by inventing a different concept and calling it by the same name does not bear on the matter.

This is an error I see people making over and over. They disagree with some criticism of an idea, but instead of arguing against that criticism, they come up with a different idea and use the same name for it. This leaves the criticism still standing. Witness the contortions people go through to defend a named theory of causal reasoning (CDT, EDT, etc.) by changing the theory and keeping the same name. That is not a defence of the theory, but a different theory. That different theory may be a useful new development! But that is what it is, not a defence of the original theory.

I think this is the crux of our disagreement. Yudkowsky was denying the usefulness of a term entirely because some people use it vaguely. I am trying to provide a less vague and more useful definition of the term—not to say Yudkowsky is unjustified in criticising the use of the term, but that he is unjustified in writing it off completely because of some superficial flaws in presentation, or some unrefined aspects of the concept.

An error that I see happening often is throwing out the baby with the bathwater, and I’ve read people on Less Wrong (even Yudkowsky I think, though I can’t remember where, sorry) write in support of ideas like “Error Correction” as a virtue and Bayesian updating whereby we take criticisms as an opportunity to refine a concept rather than writing it off completely.

I am trying to take part in that process, and I think Yudkowsky would have been better served had he done the same—suggested a better definition that is useful.

“Emergence” is a particularly strong example of a word that labels a range of concepts of varying levels of plausibility. This needs to be pointed out, since there are many denunciations of emergence as though it is a single concept that is one hundred percent wrong (often featuring the word “magic”).

Some of the meanings:-

Weak (synchronic) emergence is just the claim that systems have properties that their parts don’t have. That’s obviously true in many cases: a watch can tell the time, a single cog in the machine cannot. It’s also compatible with determinism.

Strong (synchronic) emergence is a claim along the lines that some higher properties of a system cannot be understood in terms of its parts and interactions—the negation of the typical reductionist claim that it can be so understood.

Reductionism isn’t necessarily true, so strong emergence isn’t necessarily false.

Diachronic Emergence is usually defined as the appearance of “genuine novelty” over time. How magical this is depends on how “genuine novelty” is defined. Often, it isn’t … it’s just left to subjective judgement. A claim that life, consciousness, or whatever was previously impossible would be a kind of magic, but such a claim is not usually made.

Weak Diachronic Emergence, by contrast, would be the claim that the novel properties were latent in the laws of physics before conditions became right for them to be actualized, so that a Laplace’s demon would have been able to predict them. It’s quite compatible with determinism, since determinism doesn’t require what is possible, in the sense of not forbidden, to happen all at once... conditions have to be right.

Anyway, Yudkowsky’s ire is mostly directed at strong synchronic emergentism, whereas you are mostly discussing diachronic emergentism.

Solid and nicely written-up post! As commenters recognized when responding to Eliezer’s post back in the day, calling a phenomenon “emergent” has a real and valuable semantic meaning:

At the time, Eliezer was both on an anti-“semantic stopsign” crusade and was facing many comments from respected users who were somewhat chiding him for being too hindsight-pilled and dismissive of the epistemological conundrums previous generations had faced. So he wanted to select an example relevant to our modern day which fulfilled the former purpose and responded to the latter criticisms. However, he simply overreached in his choice. See also the following exchange:

Implying that all uses of a term are equally and horribly wrong is a bit of a stop sign itself.

Yudkowsky’s take on emergence: he argues that merely saying something is emergent isn’t explaining it... but the same would be true if you merely said something is reductionistic. Nonetheless, he believes that there are problems with emergentism that reductionism doesn’t have.

Thanks, and yes, I did scan over the comments when I first read the article, and noted many good points, but when I decided to write I wanted to focus on this particular angle and not get lost in an encyclopaedia of defences. I’m very much in the same camp as the first comment you quote.

I appreciate your take on Yudkowsky’s overreach, and the historical context. That helps me understand his position better.

The semantic stop-sign is interesting, I do appreciate Yudkowsky coming up with these handy handles for ideas that often crop up in discussion. Your two examples make me think of the fallacy of composition, in that emergence seems to be a key feature of reality that, at least in part, makes the fallacy of composition a fallacy.

I love your stuff, I can see the effort you’re putting into it and it’s very nice.

If you would put some of the things into larger sequences then I think a lot of what you have could work as wonderful introductions to areas. I often find that I’ve come across the ideas that you’re writing about before, but you have a nice, fresh and clear way (at least for me) of putting them, so I can definitely see myself sending these on to my friends to explain stuff. That would be a lot easier if you put together some sequences, so consider that a reader’s request! :D

Thanks Jonas, that’s really nice of you to say, and a great suggestion. I’ve had a look at doing sequences here. Now that I have more content, I’ll take your request and run with it.

For now, over on the site I have the posts broken up into curated categories that work as rudimentary sequences, if you’d like to check them out. Appreciate your feedback!

Mostly agree on main points, I think. Some points are vague and hard to know if i agree, which i suspect is because:

Looks like this was originally written by a human but then rewritten by an AI. Lots of things seem vagueified by that, and a major issue that i’ve repeatedly seen such rewriting introduce is abuse of the word “aligned” to mean something like “related to”. I’d rather see the prompts.

I can assure you that the words and images are all original, I’m quite capable of vagueifying something myself—I don’t have a content quota to make, just trying to present ideas I’ve had, so it would be quite antithetical to the project to get chat to write it.

By “aligned” I’m not meaning “related to”, I mean “maps to the same conceptual shape”, “correlated with” or “analogous to”. So the nutrient pathways of slime molds are aligned with the Tokyo rail system, but they are not related (other than by sharing an alignment with a pattern). Whereas peanut butter is related to toast, but it’s not aligned with it.

But I appreciate the feedback, if you’re able to point to something specifically that’s vague, I’ll definitely get in there and tighten it up.

I think Eliezer would agree with what you’re saying here. In the same post mentioned:

So he would agree, as long as you’re not using the word “emergence” as an explanation (the sequence is about words in common language that don’t predict anything by themselves) and are actually acknowledging the various mechanisms beneath, which you’re understanding using higher-level, non-fundamental abstractions.

To reiterate, in a reductionism post he mentions:

So it’s quite clear that he’s actually fine with higher-level abstractions like the ones you’re using here, as long as they predict things. The phrase “intelligence is emergent”, offered as an account of what intelligence is, doesn’t predict anything and is a blank phrase; this is what he was opposed to.

I wish he had been a lot clearer about these things back then; it took me quite a bit of time to understand his position (it’s fairly neat imo).

Thanks for your comment. I appreciate your points, and see that Yudkowsky appreciates some use of higher-level abstractions as a pragmatic tool that is not erased by reductionism. But I still feel like you’re being a bit too charitable. I re-read the “it’s okay to use ‘emerge’” parts several times, and as I understand it, he’s not meaning to refer to a higher-level abstraction; he’s using it in the general sense of “whatever byproduct comes from this”, in which case it would be just as meaningful to say “heat emerges from the body”, which does not reflect any definition of emergence as a higher-level abstraction. I think the issue comes into focus with your final point:

But it is not correct to say that acknowledging intelligence as emergent doesn’t help us predict anything. If emergence can be described as a pattern that happens across different realms then it can help to predict things, through the use of analogy. If for instance we can see that neurones are selected and strengthened based on use, we can transfer some of our knowledge about natural selection in biological evolution to provide fruitful questions to ask, and research to do, on neural evolution. If we understand that an emergent system has reached equilibrium, it can help us to ask useful questions about what new systems might emerge on top of that system, questions we might not otherwise ask if we were not to recognise the shared pattern.

A question I often ask myself is: “If the world itself is to become increasingly organised, at some point do we cease to be autonomous entities on a floating rock, and become instead like automatic cells within a new vector of autonomy (the planet as super-organism)?” This question only comes about if we acknowledge that the world itself is subject to the same sorts of emergent processes that humans and other animals are (although not exactly; a planet doesn’t have much of a social life, and that could be essential to autonomy). I find these predictions based on principles of emergence interesting and potentially consequential.

I don’t think Eliezer uses emergence that way. He is using it in the sense where, if a person is asked “why do hands have X muscular movement?”, they may reply “it’s an emergent phenomenon”. That is the kind of explanation he’s criticizing: it doesn’t predict anything unless the person clarifies what they mean by emergent phenomenon.

A proper explanation could be: (Depending on what is meant by the word “Why”) [1]

Evolutionary: The reason why X muscular movement got selected for.

Biological/Shape/Adaptation: How it works or got implemented.

The common use of the word emergent is such that when a person is perplexed by the idea of free will, and finds a lack of contra-causal free will troubling to their preliminary intuitions, their encounter with the idea “free will is emergent” resolves the cognitive dissonance. They mistake it for an explanation[2] when it holds no predictive power and doesn’t actually work to resolve the initial confusion alongside physics.

What examples do I have in the back of my mind that make me think he’s criticizing this particular usage?

Eg-1: He uses the example of “Intelligence is emergent”.

In online spaces, when asked “Where is the ‘you’ in the brain if it’s all just soft flesh?”, people often say “I am emergent”. This doesn’t quite predict anything: I learn nothing about when I cease to be “I”, or why I feel like “I”, etc.

Eg-2: He uses the example of “Free will is emergent”, where he mentions the phrasing “one level emerges from the other”.

Eg-3: He uses “behavior of ant colony is emergent” in the original post.

Eg-4: He also emphasizes that he’s fine with saying that chemistry “arises from” interaction between atoms as per QED, since chemistry, or parts of it, can be predicted in terms of QED. [2]

Which he clarifies is fairly equivalent to “Chemistry emerges from interaction between atoms as per QED”.

None of these examples seem to argue against “emergence as a pattern that happens across different realms”. That seems like a different thing altogether and can be assigned a different word.

This particular usage is the one he is criticizing, and it is the trap that the majority of people, including my past self and a lot of people I know in real life, fall into. This is why I think the disagreement here is mostly semantic, as highlighted by TAG. It can also be categorized as the trap of strong emergentism, or of the intuitions behind it, in that it satisfies the human interrogation without adding anything to understanding. Moreover, the sequence in question is named “Mysterious answers”, where he goes over certain concepts in the common zeitgeist that are used as explanations even when they’re not.[2]

From what I understand of the way you’re using it, taking the emergent cycle and importing it into other domains, Eliezer would agree with your use case. He uses this as an argument for why maths is useful:

It seems like your emergent cycle is closer to this. Similarly to your emergent cycle for systems, he also asserts probability theory as the laws underlying rational belief, and decision theory as the laws underlying rational action for all agents:

Decision theory works because it is sufficiently similar to the goal-oriented systems in the universe. He also thinks intelligence is lawful, in the sense that it is orderly, for example in following decision theory. This seems similar to your defense in terms of multi-level maps.

To further flesh out the point, he would agree with you on the eyes part:

He probably would assign the intensional word “eye” to an extensional similarity cluster, but I think you and he might still disagree on nuances:

Which is to say he would disagree with the blanket categorization of “purpose”, which can be a problematic term and leaves space for misunderstanding regarding normativity, and he would likely advocate for clearer thinking and wording along the lines highlighted above.

Although I think he would be fine with concepts like ‘agency’ in decision theories to the degree that their axioms happen to coincide with reality (just like the apples behaving like numbers mentioned above), since agency can be bounded to physical systems, amongst other considerations such as a biological agent sustaining itself.

Which brings us to a second potential source of disagreement, regarding analogies:[3]

Which basically goes over the necessity of having a reason why one expects an analogy to work, beyond the two things being merely similar in certain aspects, because most analogies don’t. Take the example of a more sophisticated analogy, biological evolution extended to corporations:

This analogy can mislead one into thinking that corporations evolve on their own, and someone may then conclude that anyone can become CEO of a corporation. But the majority of changes are in fact due to human intelligence, so that would be an example of failure by analogy.[4]

As per my amateur analysis, you seem to have taken caution with your emergent cycle analogy, applying it only when entropy has an inverse relationship. That is still quite a broad application, but on the surface it seems to restrict the generalization to systems that have enough internal structure for the analogy to carry over under those constraints.

A thing to note here is that Eliezer is a predictivist; both of these types of explanation would narrow down experience to the hand’s muscles moving a certain way.

For Eliezer, a predictivist, an explanation narrows down anticipated experience.

In this post he criticized neural networks, and as we know, that particular prediction of his aged poorly. But that was for different reasons, and the general point regarding analogy still stands.

Although I can see someone making the case of memetic evolution.

Could you explain what exactly you mean by “complex” here? Surely you don’t mean “how many lines of code are required to make a thing”. But then what?

I’m not sure I understand this either. What does “aligned” mean in this context?

I’m looking at your example:

And it doesn’t make sense to me. The cells that a toe consists of are different from the cells that a testicle or an eye consists of. We can go deeper and say that everything is just a “configuration of quarks”, but then we are back to “emergent” meaning “not an individual quark”, which isn’t what you seem to be talking about.

Thanks for your well considered comment.

So, here I’m just stating the requirement that the system adds complexity, and that it is not merely categorically different. Heat, for instance, could be seen as categorically different to the process that it “emerged” from, but it would not qualify as “emergent” because it is clearly entropic, reducing complexity. An immune system, by contrast, is built on top of an organism’s complexity; it is a more complex system because it includes all the complexity of the system it emerged from plus its own complexity (or, to use your code example, all the base code plus the new branch).

The second part is more important to my particular way of understanding emergence.

I think I could potentially make this clearer, as it seems “alignment” comes with a lot of baggage, and has potentially been worn out in general (vague) usage, making its correct usage seem obscure and difficult to place. By “aligned with” I mean not merely “related to” but “following the same pattern as”; that pattern might be a function it plays, or a physical or conceptual shape that is similar. So, the slime mold and the Tokyo rail system share a similar shape: they have converged on a similar outcome because they are aligned with a similar pattern (efficiency of transport given a particular map).

I think we’re in agreement here. My point is that the eye or testicle performs a (macroscopic) function, and the cells they are made of are less important than the function. Of the 20+ different varieties of eyes, none are made of the same cells, but it still makes sense to call them eyes because they align with the function. Eyes are essentially cell-agnostic, as long as they converge on a function.

Again, thanks for the response, I’ll try to think of some edits that help make these aspects clearer in the text.

I’m afraid you didn’t make it clearer what you mean by “complexity” with your explanation. Could you taboo the word?

Are you using “complex” and “emergent” simply as synonyms for “having low entropy”? Or is there some more nuanced relation between them?

Okay, then putting it into the sentence in question we get:

a system more closely follows the pattern of a macroscopic phenomenon than its components.

I’m afraid this is also not particularly comprehensible. What you seem to be saying is that the macroscopic phenomenon the system produces is more important than which components the system has. But this sounds like a map-territory confusion. Indeed, we can talk about a system with a high-level map and get some utility from it for our purposes. But that’s not solely a property of the system; it also depends on our minds and values. And in the end, all the properties of the system are reducible to the properties of its individual parts.

Sorry about my lack of clarity. By “complex” I mean “intricately ordered” rather than the simple disorder generally expected of an entropic process. To taboo both this and alignment as “following the same pattern as”:

By a macroscopic phenomenon, I mean any (or all) of the following:

1. Another physical feature of the world which it fits to, like roads aligning with a map and its terrain (and obstacles).

2. Another instance of what appears to fulfil a similar purpose despite entirely different paths to get there or materials (like with convergence).

3. A conceptual feature of the world, like a purpose or function.

So, we can more readily understand an emergent phenomenon in relation to some other macroscopic phenomenon than we could by merely inspecting the cells in isolation. In other words, it is useful to identify the 20+ varieties of eyes as “eyes” (2) even though they are not the same at all on a cellular level. It is also meaningful to understand that they perform a function or purpose (3), and that they fit the physical world (by reflecting it relatively accurately) (1).

This feels like a post that has used a significant amount of AI to be made...

Thanks? (Does that mean it’s well structured?) You’re the second person to have said this. The illustrations are original, as is all the writing.

As I mentioned to the other person who raised this concern, the blog I write (the source) is an outlet for my own ideas, so using chat would sort of defeat the purpose.