AI strategy & governance. ailabwatch.org. ailabwatch.substack.com.

Zach Stein-Perlman

o3-mini is out (blogpost, tweet). Performance isn’t super noteworthy (on first glance), in part since we already knew about o3 performance.

Non-fact-checked quick takes on the system card:

the model referred to below as the o3-mini post-mitigation model was the final model checkpoint as of Jan 31, 2025 (unless otherwise specified)

Big if true (and if Preparedness had time to do elicitation and fix spurious failures)

If this is robust to jailbreaks, great, but presumably it’s not, so low post-mitigation performance is far from sufficient for safety-from-misuse; post-mitigation performance isn’t what we care about (we care about approximately what good jailbreakers get on the post-mitigation model).

No mention of METR, Apollo, or even US AISI? (Maybe too early to pay much attention to this, e.g. maybe there’ll be a full-o3 system card soon.) [Edit: also maybe it’s just not much more powerful than o1.]

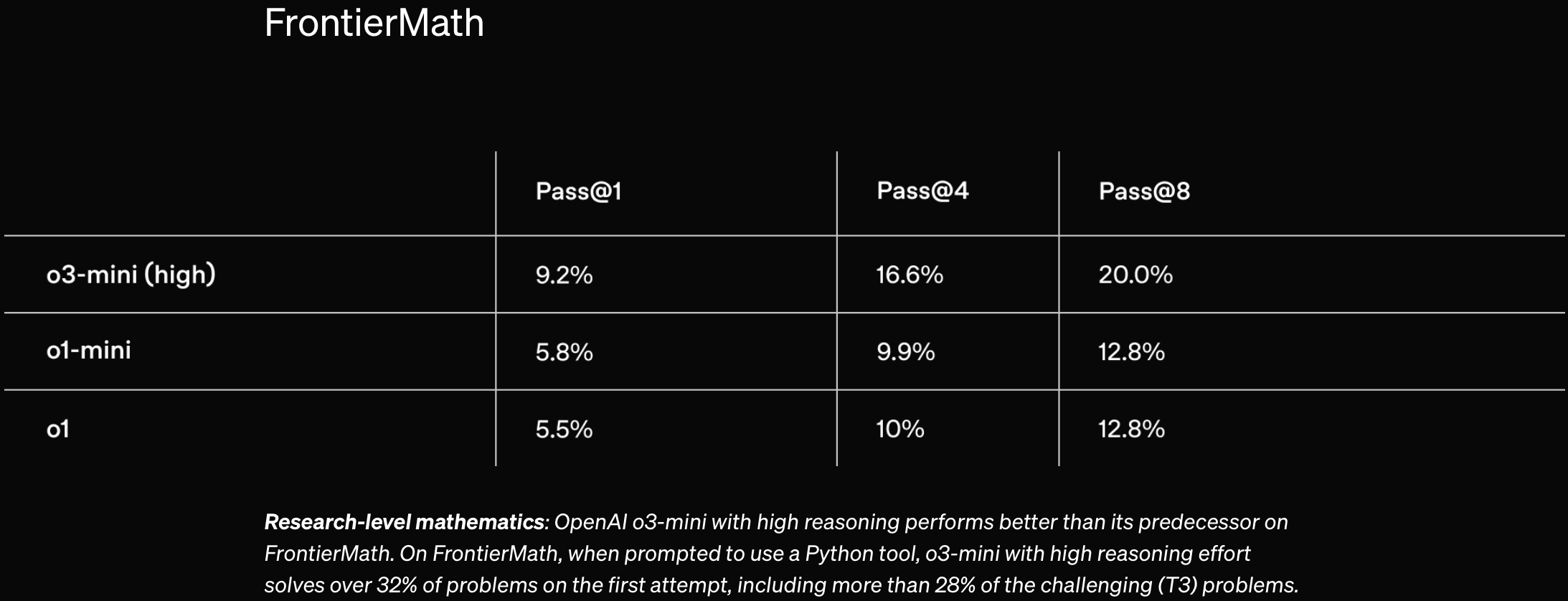

32% is a lot

The dataset is 1⁄4 T1 (easier), 1⁄2 T2, 1⁄4 T3 (harder); 28% on T3 means that there’s not much difference between T1 and T3 to o3-mini (at least for the easiest-for-LMs quarter of T3)

Probably o3-mini is successfully using heuristics to get the right answer and could solve very few T3 problems in a deep or humanlike way

Dario Amodei: On DeepSeek and Export Controls

Thanks. The tax treatment is terrible. And I would like more clarity on how transformative AI would affect S&P 500 prices (per this comment). But this seems decent (alongside AI-related calls) because 6 years is so long.

I wrote this for someone but maybe it’s helpful for others

What labs should do:

I think the most important things for a relatively responsible company are control and security. (For irresponsible companies, I roughly want them to make a great RSP and thus become a responsible company.)

Reading recommendations for people like you (not a control expert but has context to mostly understand the Greenblatt plan):

Control: Redwood blogposts[1] or ask a Redwood human “what’s the threat model” and “what are the most promising control techniques”

Security: not worth trying to understand but there’s A Playbook for Securing AI Model Weights + Securing AI Model Weights

A few more things: What AI companies should do: Some rough ideas

Lots more things + overall plan: A Plan for Technical AI Safety with Current Science (Greenblatt 2023)

More links: Lab governance reading list

What labs are doing:

Evals: it’s complicated; OpenAI, DeepMind, and Anthropic seem close to doing good model evals for dangerous capabilities; see DC evals: labs’ practices plus the links in the top two rows (associated blogpost + model cards)

RSPs: all existing RSPs are super weak and you shouldn’t expect them to matter; maybe see The current state of RSPs

Control: nothing is happening at the labs, except a little research at Anthropic and DeepMind

Security: nobody is prepared; nobody is trying to be prepared

Internal governance: you should basically model all of the companies as doing whatever leadership wants. In particular: (1) the OpenAI nonprofit is probably controlled by Sam Altman and will probably lose control soon and (2) possibly the Anthropic LTBT will matter but it doesn’t seem to be working well.

Publishing safety research: DeepMind and Anthropic publish some good stuff but surprisingly little given how many safety researchers they employ; see List of AI safety papers from companies, 2023–2024

Resources:

I think ideally we’d have several versions of a model. The default version would be ignorant about AI risk, AI safety and evaluation techniques, and maybe modern LLMs (in addition to misuse-y dangerous capabilities). When you need a model that’s knowledgeable about that stuff, you use the knowledgeable version.

Somewhat related: https://www.alignmentforum.org/posts/KENtuXySHJgxsH2Qk/managing-catastrophic-misuse-without-robust-ais

[Perfunctory review to get this post to the final phase]

Solid post. Still good. I think a responsible developer shouldn’t unilaterally pause but I think it should talk about the crazy situation it’s in, costs and benefits of various actions, what it would do in different worlds, and its views on risks. (And none of the labs have done this; in particular Core Views is not this.)

List of AI safety papers from companies, 2023–2024

One more consideration against (or an important part of “Bureaucracy”): sometimes your lab doesn’t let you publish your research.

Yep, the final phase-in date was in November 2024.

Some people have posted ideas on what a reasonable plan to reduce AI risk for such timelines might look like (e.g. Sam Bowman’s checklist, or Holden Karnofsky’s list in his 2022 nearcast), but I find them insufficient for the magnitude of the stakes (to be clear, I don’t think these example lists were intended to be an extensive plan).

See also A Plan for Technical AI Safety with Current Science (Greenblatt 2023) for a detailed (but rough, out-of-date, and very high-context) plan.

Yeah. I agree/concede that you can explain why you can’t convince people that their own work is useless. But if you’re positing that the flinchers flinch away from valid arguments about each category of useless work, that seems surprising.

I feel like John’s view entails that he would be able to convince my friends that various-research-agendas-my-friends-like are doomed. (And I’m pretty sure that’s false.) I assume John doesn’t believe that, and I wonder why he doesn’t think his view entails it.

I wonder whether John believes that well-liked research, e.g. Fabien’s list, is actually not valuable or rare exceptions coming from a small subset of the “alignment research” field.

I do not.

On the contrary, I think ~all of the “alignment researchers” I know claim to be working on the big problem, and I think ~90% of them are indeed doing work that looks good in terms of the big problem. (Researchers I don’t know are likely substantially worse but not a ton.)

In particular I think all of the alignment-orgs-I’m-socially-close-to do work that looks good in terms of the big problem: Redwood, METR, ARC. And I think the other well-known orgs are also good.

This doesn’t feel odd: these people are smart and actually care about the big problem; if their work was in the even if this succeeds it obviously wouldn’t be helpful category they’d want to know (and, given the “obviously,” would figure that out).

Possibly the situation is very different in academia or MATS-land; for now I’m just talking about the people around me.

Yeah, I agree sometimes people decide to work on problems largely because they’re tractable [edit: or because they’re good for safety getting alignment research or other good work out of early AGIs]. I’m unconvinced of the flinching away or dishonest characterization.

This post starts from the observation that streetlighting has mostly won the memetic competition for alignment as a research field, and we’ll mostly take that claim as given. Lots of people will disagree with that claim, and convincing them is not a goal of this post.

Yep. This post is not for me but I’ll say a thing that annoyed me anyway:

… and Carol’s thoughts run into a blank wall. In the first few seconds, she sees no toeholds, not even a starting point. And so she reflexively flinches away from that problem, and turns back to some easier problems.

Does this actually happen? (Even if you want to be maximally cynical, I claim presenting novel important difficulties (e.g. “sensor tampering”) or giving novel arguments that problems are difficult is socially rewarded.)

DeepSeek-V3 is out today, with weights and a paper published. Tweet thread, GitHub, report (GitHub, HuggingFace). It’s big and mixture-of-experts-y; discussion here and here.

It was super cheap to train — they say 2.8M H800-hours or $5.6M (!!).

It’s powerful:

It’s cheap to run:

oops thanks

My guess is it’s referring to Anthropic’s position on SB 1047, or Dario’s and Jack Clark’s statements that it’s too early for strong regulation, or how Anthropic’s policy recommendations often exclude RSP-y stuff (and when they do suggest requiring RSPs, they would leave the details up to the company).