I work at the Alignment Research Center (ARC). I write a blog on stuff I’m interested in (such as math, philosophy, puzzles, statistics, and elections): https://ericneyman.wordpress.com/

Eric Neyman

Yeah, I wonder if Zvi used the wrong model (the non-thinking one)? It’s specifically the “thinking” model that gets the question right.

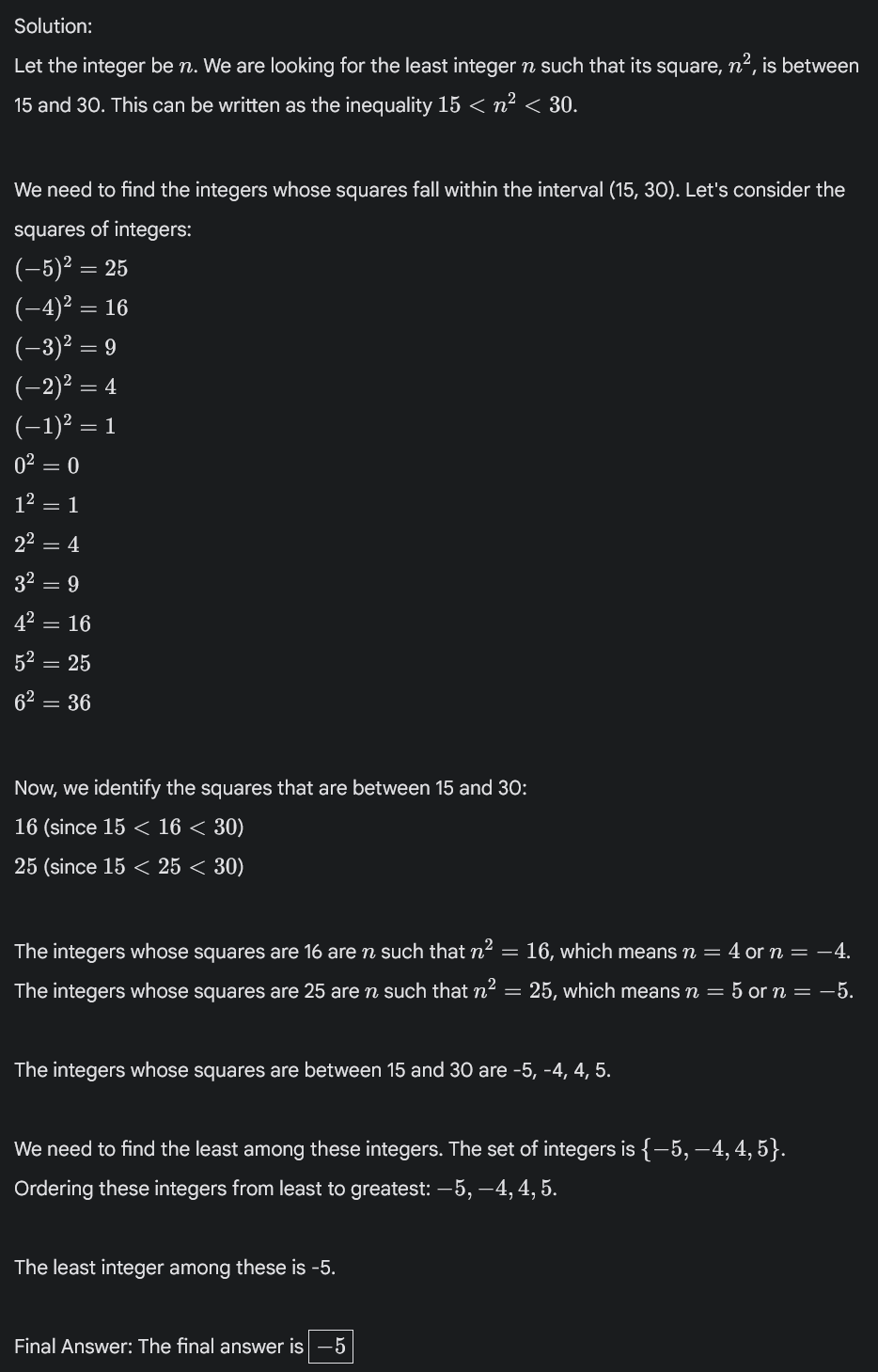

Just a few quick comments about my “integer whose square is between 15 and 30” question (search for my name in Zvi’s post to find his discussion):

The phrasing of the question I now prefer is “What is the least integer whose square is between 15 and 30”, because that makes it unambiguous that the answer is −5 rather than 4. (This is a normal use of the word “least”, e.g. in competition math, that the model is familiar with.) This avoids ambiguity about which of −5 and 4 is “smaller”, since −5 is less but 4 is smaller in magnitude.

This Gemini model answers −5 to both phrasings. As far as I know, no previous model ever said −5 regardless of phrasing, although someone said o1 Pro gets −5. (I don’t have a subscription to o1 Pro, so I can’t independently check.)

I’m fairly confident that a majority of elite math competitors (top 500 in the US, say) would get this question right in a math competition (although maybe not in a casual setting where they aren’t on their toes).

But also this is a silly, low-quality question that wouldn’t appear in a math competition.

Does a model getting this question right say anything interesting about it? I think a little. There’s a certain skill of being careful to not make assumptions (e.g. that the integer is positive). Math competitors get better at this skill over time. It’s not that straightforward to learn.

I’m a little confused about why Zvi says that the model gets it right in the screenshot, given that the model’s final answer is 4. But it seems like the model snatched defeat from the jaws of victory? Like if you cut off the very last sentence, I would call it correct.

Here’s the output I get:

Thank you for making this! My favorite ones are 4, 5, and 12. (Mentioning this in case anyone wants to listen to a few songs but not the full Solstice.)

Yes, very popular in these circles! At the Bay Area Secular Solstice, the Bayesian Choir (the rationalist community’s choir) performed Level Up in 2023 and Landsailor this year.

My Spotify Wrapped

Yeah, I agree that that could work. I (weakly) conjecture that they would get better results by doing something more like the thing I described, though.

My random guess is:

The dark blue bar corresponds to the testing conditions under which the previous SOTA was 2%.

The light blue bar doesn’t cheat (e.g. doesn’t let the model run many times and then see if it gets it right on any one of those times) but spends more compute than one would realistically spend (e.g. more than how much you could pay a mathematician to solve the problem), perhaps by running the model 100 to 1000 times and then having the model look at all the runs and try to figure out which run had the most compelling-seeming reasoning.

What’s your guess about the percentage of NeurIPS attendees from anglophone countries who could tell you what AGI stands for?

I just donated $5k (through Manifund). Lighthaven has provided a lot of value to me personally, and more generally it seems like a quite good use of money in terms of getting people together to discuss the most important ideas.

More generally, I was pretty disappointed when Good Ventures decided not to fund what I consider to be some of the most effective spaces, such as AI moral patienthood and anything associated with the rationalist community. This has created a funding gap that I’m pretty excited about filling. (See also: Eli’s comment.)

Consider pinning this post. I think you should!

It took until I was today years old to realize that reading a book and watching a movie are visually similar experiences for some people!

Let’s test this! I made a Twitter poll.

Oh, that’s a good point. Here’s a freehand map of the US I drew last year (just the borders, not the outline). I feel like I must have been using my mind’s eye to draw it.

I think very few people have a very high-fidelity mind’s eye. I think the reason that I can’t draw a bicycle is that my mind’s eye isn’t powerful/detailed enough to be able to correctly picture a bicycle. But there’s definitely a sense in which I can “picture” a bicycle, and the picture is engaging something sort of like my ability to see things, rather than just being an abstract representation of a bicycle.

(But like, it’s not quite literally a picture, in that I’m not, like, hallucinating a bicycle. Like it’s not literally in my field of vision.)

Huh! For me, physical and emotional pain are two super different clusters of qualia.

My understanding of Sarah’s comment was that the feeling is literally pain. At least for me, the cringe feeling doesn’t literally hurt.

[Question] Which things were you surprised to learn are not metaphors?

I don’t really know, sorry. My memory is that 2023 already pretty bad for incumbent parties (e.g. the right-wing ruling party in Poland lost power), but I’m not sure.

Fair enough, I guess? For context, I wrote this for my own blog and then decided I might as well cross-post to LW. In doing so, I actually softened the language of that section a little bit. But maybe I should’ve softened it more, I’m not sure.

[Edit: in response to your comment, I’ve further softened the language.]

Yup, I think that only about 10-15% of LWers would get this question right.