Yeah, I agree that your formalism achieves what we want. The challenge is getting an actual AI that is appropriately myopic with respect to U1-U4. And of things about which an AI could obtain certificates of determinacy, its own one-step actions seem most likely.

MalcolmMcLeod

Great work, glad someone is doing this. The natural follow-up to “we are in trouble if D is large” is “in what contexts can we practically make D small?” and I think it goes beyond corrigibility. A good framework to address this might be @johnswentworth’s natural latents work.

I don’t think we have a good theory of how to make lexicographic preferences work. If the agent is expectation-maximizing lexicographically, it will in practice consider only the first priority unless its world-model has certainty over the relevant time-horizon about the relevant inputs to . This requires myopia and a deterministic model up to the myopic horizon. Which seems hard, but at least we have desiderata! (And so on for each of the non-final priorities.) Given bounded computation, the world-model can be deterministic over relevant latents only up to a short horizon. So either we have to have a short horizon, or make most latents irrelevant. The latter path doesn’t seem crazy to me—we’re essentially specifying a “deontological agent.” That seems feasible for , but I’m worried about . I don’t think coherence theorems forbid deontology in practice, to be clear, even though my discussion above rests on their being impossible without myopia and determinism over latents.
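Here is a toy sketch of the first point, with made-up numbers. Actions are lotteries over (U1, U2) payoff vectors, and the agent ranks them by comparing tuples of expected values lexicographically. The names `expected_vector` and `lexicographic_choice`, and all payoffs, are assumptions for illustration only. When the world-model is certain, the first priority ties and the second decides. Add a 0.1% chance of a marginally better U1 outcome and the second priority stops mattering at all.

```python
from typing import Dict, List, Tuple

# A lottery is a list of (probability, payoff-vector) pairs.
# payoff[0] is the first priority (hypothetical U1), payoff[1] the second (U2), etc.
Lottery = List[Tuple[float, Tuple[float, ...]]]


def expected_vector(lottery: Lottery) -> Tuple[float, ...]:
    """Expected payoff under each priority, in priority order."""
    n = len(lottery[0][1])
    return tuple(sum(p * payoff[i] for p, payoff in lottery) for i in range(n))


def lexicographic_choice(actions: Dict[str, Lottery]) -> str:
    """Pick the action whose expected-payoff tuple is lexicographically largest.

    Python compares tuples lexicographically, so a later priority only matters
    when the expected values of every earlier priority tie exactly.
    """
    return max(actions, key=lambda a: expected_vector(actions[a]))


# Deterministic world-model: expected U1 ties, so U2 breaks the tie.
certain = {
    "a": [(1.0, (1.0, 0.0))],
    "b": [(1.0, (1.0, 5.0))],
}

# Same actions, but "a" now carries a 0.1% chance of a marginally better U1 outcome.
# Its expected U1 edges above b's, and U2 is never consulted.
uncertain = {
    "a": [(0.999, (1.0, 0.0)), (0.001, (1.001, 0.0))],
    "b": [(1.0, (1.0, 5.0))],
}

print(lexicographic_choice(certain))    # "b"
print(lexicographic_choice(uncertain))  # "a"
```

Relying on Python's built-in tuple ordering keeps the priority rule explicit. No difference in U2, however large, can outweigh even a one-in-a-million edge in expected U1.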

I guess these two points are the same: “consequentialist agents are intractable over large effective state space; we need myopia and deontology to effectively slim that space.”

No object-level comments besides: this seems like a worthy angle of attack; countries besides the US and China can matter and should be mobilized. Really glad someone’s pushing on this.

All the more reason to sell that service to folks who don’t know!

I see the makings of a specialized service business here! This is a very particular, unenjoyable schlep to set up for most ordinary folks (e.g. me), but you paint a desirable picture.

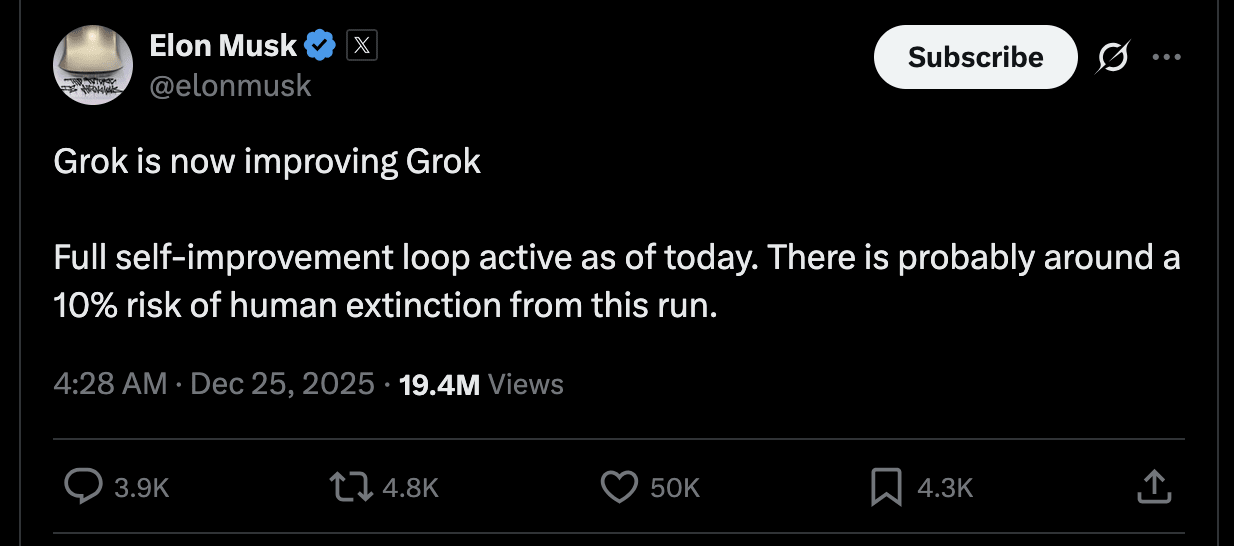

it’s not hard to imagine seeing this tweet

If one doesn’t plan to go into politics, is there any value in being a bipartisan single-issue donor? How much must one donate for it to be accompanied by a message of “I will vote for whoever is better on AI x-risk”?

I like your made up notation. I’ll try to answer, but I’m an amateur in both reasoning-about-this-stuff and representing-others’-reasoning-about-this-stuff.

I think (1) is both inner and outer misalignment. (2) is fragility of value, yes. I think the “generalization step is hard” point is roughly “you can get low by trial and error. The technique you found at the end that gets low—it better not intrinsically depend on the trial and error process, because you don’t get to do trial and error on ‘. Moreover, it better actually work on M’.”

Contemporary alignment techniques depend on trial and error (post-training, testing, patching). That’s one of their many problems.

My suggested term for standard MIRI thought would just be Mirism.

I kinda don’t like “generalization” as a name for this step. Maybe “extension”? There are too many steps where the central difficulty feels analogous to the general phenomenon of failure-of-generalization-OOD: the difficulty in getting to be small, the difficulty of going from techniques for getting small to techniques for getting a small ′ (verbiage different because of the first-time constraint), the disastrousness of even smallish ’…

This is an excellent encapsulation of (I think) something different—the “fragility of value” issue: “formerly adequate levels of alignment can become inadequate when applied to a takeover-capable agent.” I think the “generalization gap” issue is “those perfectly-generalizing alignment techniques must generalize perfectly on the first try”.

Attempting to deconfuse myself about how that works if it’s “continuous” (someone has probably written the thing that would deconfuse me, but as an exercise): if AI power progress is “continuous” (which training is, but model-sequence isn’t), it goes from “you definitely don’t have to get it right at all to survive” to “you definitely get only one try to get it sufficiently right, if you want to survive,” but by what path? In which of the terms “definitely,” “one,” and “sufficiently” is it moving continuously, if any?

I certainly don’t think it’s via the number of tries you get to survive! I struggle to imagine an AI where we all die if we fail to align it three times in a row.

I don’t put any stock in “sufficiently,” either—I don’t believe in a takeover-capable AI that’s aligned enough to not work toward takeover, but which would work toward takeover if it were even more capable. (And even if one existed, it would have to eschew RSI and other instrumentally convergent things, else it would just count as a takeover-causing AI.)

It might be via the confidence of the statement. Now, I don’t expect AIs to launch highly-contingent outright takeover attempts; if they’re smart enough to have a reasonable chance of succeeding, I think they’ll be self-aware enough to bide their time, suppress the development of rival AIs, and do instrumentally convergent stuff while seeming friendly. But there is some level of self-knowledge at which an AI will start down the path toward takeover (e.g., extricating itself, sabotaging rivals) and succeed with a probability that’s very much neither 0 nor 1. Is this first, weakish, self-aware AI able to extricate itself? It depends! But I still expect the relevant band of AI capabilities here to be pretty narrow, and we get no guarantee it will exist at all. And we might skip over it with a fancy new model (if it was sufficiently immobilized during training or guarded its goals well).

Of course, there’s still a continuity in expectation: when training each more powerful model, it has some probability of being The Big One. But yeah, I more or less predict a Big One; I believe in an essential discontinuity arising here from a continuous process. The best analogy I can think of is how every exponential with r<1 dies out and every r>1 goes off to infinity. When you allow dynamic systems, you naturally get cuspy behavior.
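Here is a minimal numerical sketch of the analogy. It assumes a simple per-step growth factor r; the function name `trajectory` and the step count are illustrative choices, not anything from the thread. A continuous parameter flips the long-run outcome discontinuously at r = 1.

```python
def trajectory(r: float, x0: float = 1.0, steps: int = 2000) -> float:
    """Iterate x_{n+1} = r * x_n from x0 and return the value after `steps` steps."""
    x = x0
    for _ in range(steps):
        x *= r
    return x


# Nearly identical growth factors on either side of 1 end up worlds apart.
for r in (0.99, 1.0, 1.01):
    print(f"r = {r}: x after 2000 steps = {trajectory(r):.3g}")
```

With these defaults, r = 0.99 ends on the order of 10⁻⁹ while r = 1.01 ends in the hundreds of millions. Lengthening the run only widens the gap.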

Hmm. I know nothing about nothing, and you’ve probably checked this already, so this comment is probably zero-value-added, but according to Da Machine, it sounds like the challenges are surmountable: https://chatgpt.com/share/e/68d55fd5-31b0-8006-aec9-55ae8257ed68

That’s fair!

OK, I am rereading what I wrote last night and I see that I really expressed myself badly. It really does sound like I said we should sacrifice our commitment to precise truth. I’ll try again: what we should indeed sacrifice is our commitment to being anal-retentive about practices that we associate with getting the precise truth, over and beyond saying true stuff and contradicting false stuff, where those practices include things like “never appearing to ‘rally round anything’ in a tribal fashion.” Or, at a 20-degree angle from that: “doing rhetoric not with an aim toward an external goal, but orienting our rhetoric to be ostentatious in our lack of rhetoric, making all the trappings of our speech scream ‘this is a scrupulous, obsessive, nonpartisan autist for the truth.’” Does that make more sense? It’s the performative elements that get my goat. (And yes, there are performative elements, unavoidably! All speech has rhetoric because (metaphorically) “the semantic dimensions” are a subspace of speech-space, and speech-space is affine, so there’s no way to “set the non-semantic dimensions to zero.”)

I beg everyone I love not to ride a motorcycle.

Well, I also have a few friends who clearly want to go out like a G before they turn 40, friends whose worldviews don’t include having kids and growing old—friends who are, basically, adventurers—and they won’t be dissuaded. They also free solo daylong 5.11s, so there’s only so much I can do. Needless to say, they don’t post on LessWrong.

No, I just expressed myself badly. Thanks for keeping me honest. Let me try to rephrase—in response to any text, you can write ~arbitrarily many words in reply that lay out exactly where it was wrong. You can also write ~arbitrarily many words in reply that lay out where it was right. You can vary not only the quantity but the stridency/emphasis of these collections of words. (I’m only talking simulacrum-0 stuff here.) There is no canonical weighting of these!! You have to choose. The choice is not determined by your commitment to speaking truth. The choice is determined by priorities about how your words move others’ minds and move the world. Does that make more sense?

‘Speak only truth’ is underconstrained; we’ve allowed ourselves to add (charitably) ‘and speak all the truth that your fingers have the strength to type, particularly on topics about which there appears to be disagreement’ or (uncharitably) ‘and cultivate the aesthetic of a discerning, cantankerous, genius critic’ in order to get lower-dimensional solutions.

When constraints don’t eliminate all dimensions, I think you can reasonably have lexically ordered preferences. We’ve picked a good first priority (speak only truth), but have picked a counterproductive second priority ([however you want to describe it]). I claim our second priority should be something like “and accomplish your goals.” Where your goals, presumably, = survive.

What would it take for you to commission such a poll? If it’s funding, please post about how much funding would be required; I might be able to arrange it. If it’s something else… well, I still would really like this poll to happen, and so would many others (I reckon). This is a brilliant idea that had never occurred to me.

The general pattern from Anthropic leadership is eliding entirely the possibility of Not Building The Thing Right Now. From that baseline, I commend Zach for at least admitting that’s a possibility. In absolute terms, it’s disappointing that he can’t see the path of Don’t Build It Right Now—And Then Build It Later, Correctly, or can’t acknowledge its existence. He also doesn’t really weigh benefits against costs. He just does the “Wow! There sure are two sides. We should do good stuff” shtick. Which is better than much of Dario’s rhetoric! He’s cherry-picked a low p(doom) estimate, but I appreciate his acknowledgement that “Most of us wouldn’t be willing to risk a 3% chance (or even a 0.3% chance!) of the people we love dying.” Correct! I am not willing to! “But accepting uncertainty matters for navigating this complex challenge thoughtfully.” Yes. I have accepted my uncertainty about my loved ones’ survival, and I have been thoughtful, and the conclusion I have come to is that I’m not willing to take that risk.

Tbc this is still a positive update for me on Anthropic’s leadership. To a catastrophically low level. Which is still higher than all other lab leaders.

But it reminds me of this world-class tweet, from @humanharlan, whom you should all follow. He’s like if roon weren’t misaligned:

“At one extreme: ASI, if not delayed, will very likely cause our extinction. Let’s try to delay it.

On the other: No chance it will do that. Don’t try to delay it.

Nuanced, moderate take: ASI, if not delayed, is moderately likely to cause our extinction. Don’t try to delay it.”

This seems wise. The reception of the book in the community has been rather Why Our Kind Can’t Cooperate, as someone (I forget who) linked. The addiction to hashing-out-object-level-correctness-on-every-point-of-factual-disagreement and insistence on “everything must be simulacrum level 0 all the time”… well, it’s not particularly conducive to getting things done in the real world.

I’m not suggesting we become propagandists. But consider pretty much every x-risk-worried Rat who disliked the book because, e.g., the evolution analogy doesn’t work, they would have preferred a different flavor of sci-fi story, the book should have been longer, or it should have been shorter, or it should have proposed my favorite secret plan for averting doom, or it should have contained draft legislation at the back. If they would endorse the following statement, I think that (metaphorically) their critiques should carry, every couple of paragraphs, an all-caps disclaimer that reads something like “TO BE CLEAR AI IS STILL ON TRACK TO KILL EVERYONE YOU LOVE; YOU SHOULD BE ALARMED ABOUT THIS AND TELLING PEOPLE IN NO UNCERTAIN TERMS THAT YOU HAVE FAR, FAR MORE IN COMMON WITH YUDKOWSKY AND SOARES THAN YOU DO WITH THE LOBBYISTS OF META, WHO ABSENT COORDINATION BY PEOPLE ON HUMANITY’S SIDE ARE LIABLE TO WIN THIS FIGHT, SO COORDINATE WE MUST.”

I don’t mean to say that the time for words and analysis is over. It isn’t. But the time for action has begun, and words are a form of action. That’s what’s missing: the words-of-action. It’s a missing mood. Parable (which, yes, I have learned some people find really annoying):

A pale, frightened prisoner of war returns to the barracks, where he tells his friend: “Hey man, I heard the guards talking, and I think they’re gonna take us out, make us dig a ditch, and then shoot us in the back. This will happen at dawn on Thursday.”

The friend snorts, “Why would they shoot us in the back? That’s incredibly stupid. Obviously they’ll shoot us in the head; it’s more reliable. And do they really need for us to dig a ditch first? I think they’ll just leave us to the jackals. Besides, the Thursday thing seems over-confident. Plans change around here, and it seems more logical for it to happen right before the new round of prisoners comes in, which is typically Saturday, so they could reasonably shoot us Friday. Are you sure you heard Thursday?”

The second prisoner is making some good points. He is also, obviously, off his rocker.

There are two steelmen I can think of here. One is “We must never abandon this relentless commitment to precise truth. All we say, whether to each other or to the outside world, must be thoroughly vetted for its precise truthfulness.” To which my reply is: how’s that been working out for us so far? Again, I don’t suggest we turn to outright lying like David Sacks, Perry Metzger, Sam Altman, and all the other rogues. But would it kill us to be the least bit strategic or rhetorical? Politics is the mind-killer, sure. But ASI is the planet-killer, and politics is the ASI-[possibility-thereof-]killer, so I am willing to let my mind take a few stray bullets.

The second is “No, the problems I have with the book are things that will critically undermine its rhetorical effectiveness. I know the heart of the median American voter, and she’s really gonna hate this evolution analogy.” To which I say, “This may be so. The confidence and negativity with which you have expressed this disagreement are wholly unwarranted.”

Let’s win, y’all. We can win without sacrificing style and integrity. It might require everyone to sacrifice a bit of personal pride, a bit of delight-in-one’s-own-cleverness. I’m not saying keep objections to yourself. I am saying, keep your eye on the fucking ball. The ball is not “being right,” the ball is survival.

Suits can be cool and nonconformist if they’re sufficiently unusual suits. >95% of the suits you see in America are black, grey, or dark blue. Usually it’s with a white or pale blue plain shirt. Nowadays, neither a tie nor a pocket square. Boring! If you have an even slightly offbeat suit—green, a light color, tweed, seersucker, three-piece, double-breasted—particularly if you have interesting shoes or an interesting tie—however you may look, you don’t look like you’re trying to fit in.

Really appreciate your laying out your thoughts here. Scattered late-night replies:

#0: I think this is a duplicate of #3.

#1: In one sense, all the great powers have an interest in not building superintelligence—they want to live! Of course, some great powers may disagree; they would like to subvert the treaty. But they still would prefer lesser powers not develop AGI! Indeed, everyone in this setup would prefer that everyone else abide by the terms of the treaty, which ain’t nothing, and in fact is about as much as you’d hope for in the nuclear setting.

#2: I don’t think the economic value here is a huge incentive by itself, though I agree it matters. If it’s illegal to develop AGI, then any use of AGI for your economy would have to be secret. Of course, if developing AGI gives you a strategic advantage you can use to overwhelm the other nations of the world, then that’s an incentive in itself! There’s also the possibility that you develop safe AGI, so nobody bothers to enforce the treaty or punish you for breaking it. And this AGI is contained and obedient, and you prevent other countries from having it, so you crush the nations of the world? Or you share it globally and everyone is rich—in this story, your country wants what’s best for the world but also believes that it knows what is best for the world. Plausible. (See: Iraq war.) All kinds of reasonable stories you can tell here, but the incentive isn’t clear-cut. It would be a harder sell.

#3: Yeah, this is a real difficulty.

#4: Yep, even if we get a pause, the clock is (stochastically) on. Which means we must use the pause wisely and fast. But as for the procedural “difficulty in drawing a red line,” I’m not too worried about that. We can pick arbitrary thresholds at which to set the legal compute cap. Sure, it’s hard to know what’s “correct,” but an arbitrary threshold will do.

#5: Possibly. Parts of it are harder, parts of it are easier. Centrifuges are easier to hide than Stargate, but a single server rack is easier still. An international third party of verifiers could eliminate a lot of the mutual mistrust issues. Of course, that’s not incorruptible, but hey, nothing’s perfect. Probably someone should be working on “cryptographic methods for verifying that no frontier AI training is going on without disclosing much else about what’s happening on these chips.”

I agree with you that on net, this is a harder treaty to enforce than the global nuclear weapons treaties. On the other hand, I don’t think we’ve tried that hard on nonproliferation, relative to all the other things our civilization tries hard at. The obstacles you describe seem surmountable, at least for a while. And all we need to do is buy time. It ain’t intractable. And I have no better ideas.

P is a second-order predicate; it applies to predicates. The English word “perfect” applies to things, and it’s a little weirder to apply it to qualities, at least if you think of φ and ψ as being things like “Omnibenevolent” or “is omnibenevolent.” If you think of φ and ψ as being “Omnibenevolence,” it makes more sense—where we type-distinguish between “qualities as things” and “things per se.” It’s still weird not to be able to apply P to things-per-se. We want to be able to say “P(fido)” = “fido is perfect”, but that’s not allowed. We can say “P(is_good_dog)” = “being a good dog is perfect”.
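One way to see the type distinction is a rough sketch in Python with type hints. Things and predicates get different types, and P accepts only predicates. `Thing`, `PERFECT_QUALITIES`, and the runtime type check are hypothetical scaffolding. Which qualities count as perfect is stipulated purely for illustration.

```python
from typing import Callable, Set


class Thing:
    """An individual (a thing-per-se), e.g. a particular dog. Hypothetical type."""

    def __init__(self, name: str, good_dog: bool) -> None:
        self.name = name
        self.good_dog = good_dog


# A first-order predicate maps things to truth values.
Predicate = Callable[[Thing], bool]


def is_good_dog(x: Thing) -> bool:
    return x.good_dog


# Stipulated, purely for illustration: the set of qualities that count as perfect.
PERFECT_QUALITIES: Set[Predicate] = {is_good_dog}


def P(quality: Predicate) -> bool:
    """Second-order predicate: it applies to predicates (qualities), not to things."""
    if isinstance(quality, Thing):
        # A static checker such as mypy already rejects P(fido), since a Thing is not
        # callable; this check makes the same mistake fail at runtime too.
        raise TypeError("P applies to predicates, not to things-per-se")
    return quality in PERFECT_QUALITIES


fido = Thing("fido", good_dog=True)
print(P(is_good_dog))     # "being a good dog is perfect"
print(is_good_dog(fido))  # a first-order predicate applied to a thing is fine
```

Calling `P(fido)` raises `TypeError`. That is the "not allowed" case from the comment, enforced here by a type check rather than by the logic's grammar.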