Running Lightcone Infrastructure, which runs LessWrong and Lighthaven.space. You can reach me at habryka@lesswrong.com.

(I have signed no contracts or agreements whose existence I cannot mention, which I am mentioning here as a canary)

Greatly appreciate you collecting these.

Sure, though that coverage has turned out to be wrong, so it’s still a bad example. See also: https://www.lesswrong.com/posts/sT6NxFxso6Z9xjS7o/nuclear-war-is-unlikely-to-cause-human-extinction

(Also Bostrom’s coverage is really quite tentative, saying “An all-out nuclear war was a possibility with both a substantial probability and with consequences that might have been persistent enough to qualify as global and terminal. There was a real worry among those best acquainted with the information available at the time that a nuclear Armageddon would occur and that it might annihilate our species or permanently destroy human civilization”)

Given the probabilities involved it does seem to me like we vastly vastly underinvested in nuclear recovery efforts (in substantial parts because of this dumb “either it doesn’t happen or we all die” mentality).

To be clear, this is importantly different from my models of AI risk, which really does have much more of that nature as far as I can tell.

it either happens or it doesn’t, and if it doesn’t you do not feel it at all.

What? Nuclear war is very centrally the kind of thing where it really matters how you prepare for it. It was always extremely unlikely to be an existential risk, and even relatively simple precautions would drastically increase the likelihood you would survive.

Eg with a startup, don’t spend lots of time creating a product before writing a business plan. The plan should come first, or at least early on, because it’s how you decide whether to create the product! (Something I’ve written about on here)

What, this goes against the advice of basically anyone I have ever talked to. The central advice has always been “build something you want, or someone you know wants”, explicitly without concern for how exactly you are going to commercialize. Indeed, most successful companies figure out where their margins come from quite late into their lifecycle.

We’ve had a bunch of people donate stocks to us through Every.org. I think it’s worked fine? I don’t actually know what the UI looks like, but it seems to work.

Claude Code not being able to write an eigenvalue decomposition is very surprising to me! Can you share any more detail? I would take the strong bet I would get it right on the first or maybe second try.

Rather, it’s because I think the empirical consequences of deception, violence, criminal activity, and other norm violations are often (not always) quite bad

I think I agree with the thrust of this, but I also think you are making a pretty big ontological mistake here when you call “deception” a thing in the category of “norm violations”. And this is really important, because it actually illustrates why this thing you are saying people aren’t supposed to do is actually tricky not to do.

Like, the most common forms of deception people engage in are socially sanctioned forms of deception. The most common forms of violence people engage in are socially sanctioned forms of violence. The most common forms of cover-up are socially sanctioned forms of cover-up.

Yes, there is an important way in which the subset of the socially sanctioned forms of deception and violence have to be individually relatively low-impact (since forms of deception or violence that have large immediate consequences usually lose their social sanctioning), but this makes the question of when to follow the norms and when to act with honesty against local norms, or engage in violence against local norms, a question of generalization. Many forms of extremely destructive deception, while socially sanctioned, might not lose their sanctioning before causing irreparable harm.

So most of the time the real question here is something like “when are you willing to violate local norms in order to do good?”, and I think “when honesty is violating local norms, be honest anyways” is pretty good guidance, but the fact that it’s often against local norms is a really crucial aspect of the mechanisms here.

That… really feels to me like it’s learning the wrong lessons. This somehow assumes that this is the only way you are going to get surprising misgeneralization.

Like, I am not arguing for irreducible uncertainty, but I certainly think the update of “Oh, zero shot anyone predicted this specific weird form of misgeneralization, but actually if you make this small tweak to it it generalizes super robustly” is very misguided.

Of course we are going to see more examples of weird misgeneralization. Trying to fix EM in particular feels like such a weird thing to do. I mean, if you have to locally ship products you kind of have to, but it certainly will not be the only weird thing happening on the path to ASI, and the primary lesson to learn is “we really have very little ability to predict or control how systems misgeneralize”.

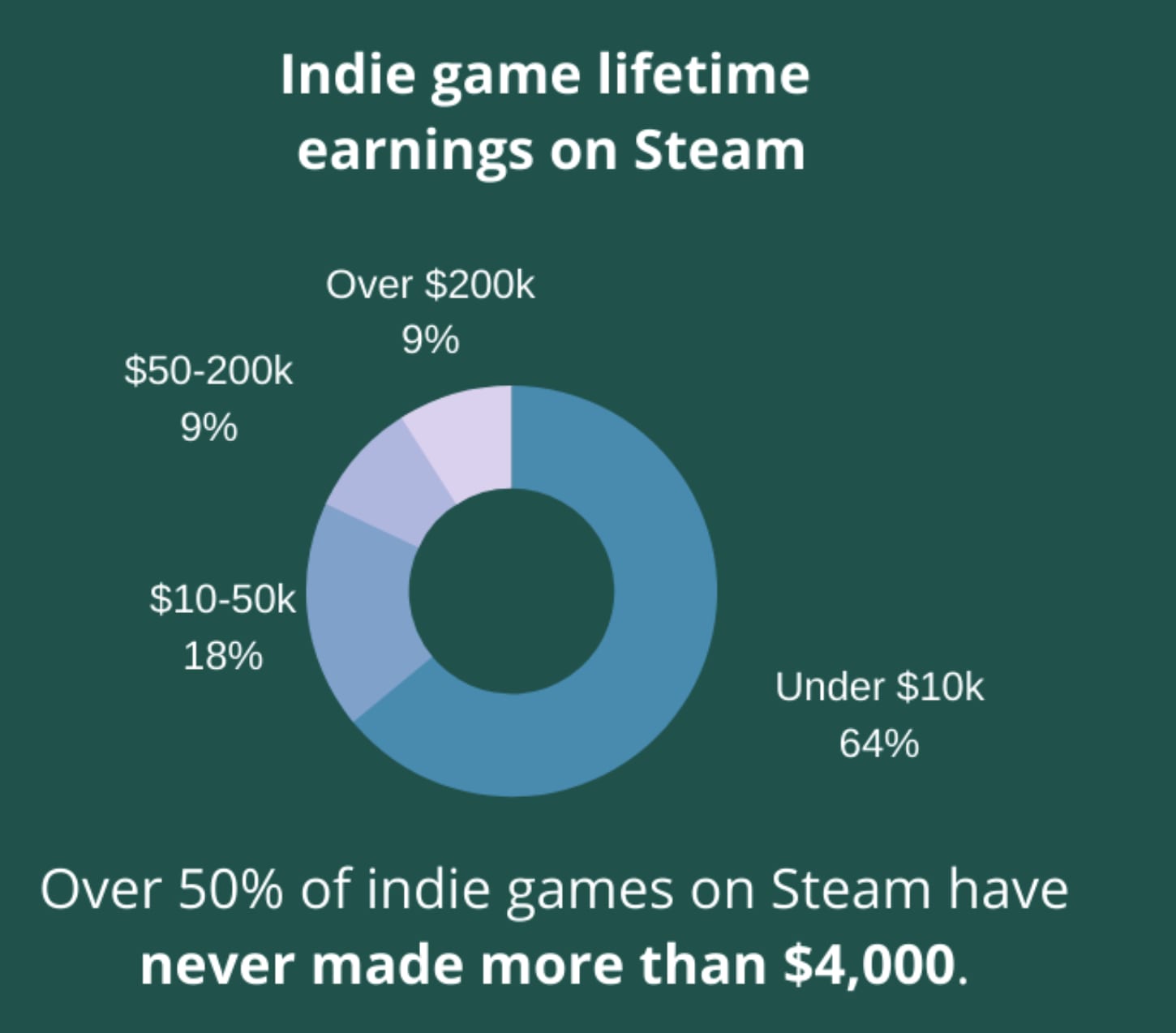

Huh, honestly, I updated that we don’t have enough video games when I saw the numbers. 30 per day on Steam! Roughly 10,000 a year. Video games are the biggest entertainment category, and we produce a similar number of TV shows and movies, the median of which costs much more to produce than the median video game listed here.

And these numbers aren’t too bad!

Like, almost 40% of games make more than $10k! That means actually a pretty decent chunk of people played them.

I was honestly expecting the video game market to be much more flooded.

Thank you so much!

Hmm, I guess my risk model was always “of course given our current training methods and understanding training is going to generalize in a ton of crazy ways, the only thing we know with basically full confidence is that we aren’t going to get what we want”. The emergent misalignment stuff seems like exactly the kind of thing I would have predicted 5 years ago as a reference class, where I would have been “look, things really aren’t going to generalize in any easy way you can predict. Maybe the model will care about wireheading itself, maybe it will care about some weird moral principle you really don’t understand, maybe it will try really hard to predict the next token in some platonic way, you have no idea. Maybe you will be able to get useful work out of it in the meantime, but obviously you aren’t going to get a system that cares about the same things as you do”.

Maybe other people had more specific error modes in mind? Like, this is what the inner misalignment stuff is all about. I agree this isn’t a pure outer misalignment error, but I also never really understood what that would even mean, or how that would make sense.

Thank you!

OpenAI wrote in a blog post that the GPT-4o sycophancy in early 2025 was a consequence of excessive reward hacking on thumbs up/thumbs down data from users.

Yes, seems like a central example of RLHF hacking. I think you agree with that, but just checking that we aren’t talking past each other and that you wouldn’t for some reason not consider that RLHF.

What’s the reason for treating “misaligned personas” as some special thing? It seems to me like a straightforward instance of overfitting on user feedback plus unintended generalization from an alien mind. The misgeneralization definitely has some interesting structure, but of course any misgeneralization would end up having some interesting structure.

Yep, indeed, basically those two things.

He is presumably referring to your inline reacts:

(Though maybe the calculus changes if you’ve got tons of other prospective donors invested with them.)

Yeah, I think that’s loading up on risk in a way that requires a pretty substantial adjustment, since I do think that if VARA keeps doing well, then probably we will have an easier time fundraising in the future. Also, we are likely to invest at least some of our assets ourselves this year, maybe even with VARA, or to build a similar portfolio ourselves, and so will also have a bunch of direct exposure to these trends.

Also, I have learned to become very hesitant to believe that people will actually donate more if their assets appreciate, so I would not really be able to plan around such expectations in the way I can plan around money in my bank account.

The $1.4M really isn’t a particularly natural milestone. Like, sure, it means we won’t literally all shut down, but it still implies major cuts. Putting a goal at $1.4M would, I think, cause people to index too much on that threshold.

And then I do think giving any kind of incremental stop is helpful and the millions seem like the best place for that.

Probably! I will take a look in a bit.

Huh, I think 4o + Sydney Bing (both of which were post 2022) seem like more intense examples of spooky generalization / crazy RLHF hacks than I would have expected back then. Gemini 3 with its extreme paranoia and (for example) desperate insistence that it’s not 2025 seems in the same category.

Like, I am really not very surprised that if you try reasonably hard you can avoid these kinds of error modes in a supervising regime, but we’ve gotten overwhelming evidence that you routinely do get crazy RLHF-hacking. I do think it’s a mild positive update that you can avoid these kinds of error modes if you try, but when you look into the details, it also seems kind of obvious to me that we have not found any scalable way of doing so.

It does happen to be the case that thinking that climate change has much of a chance of being existentially bad is just wrong. Thinking that AI is existentially bad is right (at least according to me). A major confounder to address is that conditioning on a major false belief will of course be indicative of being worse at pursuing your goals than conditioning on a major true belief.