Book Summary: Consciousness and the Brain

One of the fundamental building blocks of much of consciousness research is Global Workspace Theory (GWT). One elaboration of GWT, which focuses on how it might be implemented in the brain, is the Global Neuronal Workspace (GNW) model in neuroscience. Consciousness and the Brain is a 2014 book that summarizes some of the research and basic ideas behind GNW. It was written by Stanislas Dehaene, a French cognitive neuroscientist with a long background in consciousness research and related topics.

The book and its replicability

Given that this is a book on psychology and neuroscience that was written before the replication crisis, an obligatory question before we get to the meat of it is: how reliable are any of the claims in this book? After all, if we think that this is based on research which is probably not going to replicate, then we shouldn’t even bother reading the book.

I think that the book’s conclusions are at least reasonably reliable in their broad strokes, if not necessarily all the particular details. That is, some of the details in the cited experiments may be off, but I expect most of them to at least be pointing in the right direction. Here are my reasons:

First, scientists in a field usually have an informal sense of how reliable different results are. Even before the replication crisis hit, I had heard private comments from friends working in social psychology, who said that everything in the field was built on shaky foundations and that they didn’t trust even their own findings much. In contrast, when I asked a friend who works with some people doing consciousness research, he reported back that they generally felt that GWT/GNW-style theories have a reasonably firm basis. This isn’t terribly conclusive, but it’s at least a bit of evidence.

Second, for some experiments the book explicitly mentions that they have been replicated. That said, some of the reported experiments seemed to be one-offs, and I have not yet investigated the details of the claimed replications.

Third, this is a work of cognitive neuroscience. Cognitive neuroscience is generally considered a subfield of cognitive psychology, and cognitive psychology is the part of psychology whose results have so far replicated best. One recent study tested nine key findings from cognitive psychology and found that they all replicated. The 2015 “Estimating the reproducibility of Psychological Science” study managed to replicate 50% of recent results in cognitive psychology, as opposed to 25% of results in social psychology. (If 50% sounds low, remember that we should expect some true results to also fail a single replication, so a 50% replication rate doesn’t imply that 50% of the results are false. Also, a field with a 90% replication rate would probably be too conservative in choosing which experiments to try.) Cognitive psychology probably replicates relatively well because it deals with phenomena that are much easier to define and test rigorously than those of social psychology; in that regard, it’s closer to physics than to social psychology.
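A back-of-the-envelope calculation makes the parenthetical point concrete. This is a sketch under simplifying assumptions of my own, not something taken from the book or the 2015 study: every true result replicates with probability equal to the replication’s statistical power (assumed to be 0.8 here), and every false result “replicates” only through a false positive at rate alpha (assumed to be 0.05). Under those assumptions the observed replication rate is a weighted average of the two, which can be inverted to estimate the share of true results.

```python
def expected_replication_rate(true_fraction, power=0.8, alpha=0.05):
    """Share of results expected to replicate, given the share that are true.

    Simplifying assumptions (illustrative, not from the source): true results
    replicate with probability `power`, false results only via a false
    positive with probability `alpha`.
    """
    return true_fraction * power + (1 - true_fraction) * alpha


def implied_true_fraction(replication_rate, power=0.8, alpha=0.05):
    """Invert expected_replication_rate() to estimate the share of true results."""
    return (replication_rate - alpha) / (power - alpha)


# Under these assumptions, a 50% replication rate implies that about 60% of
# the original results were true, not 50%.
print(round(implied_true_fraction(0.5), 2))  # → 0.6
```

Assuming lower power pushes the implied share of true results higher (with power 0.6 it is about 82%), which illustrates why a single failed replication is weak evidence that a finding is false.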

On several occasions, the book reports something like “people did an experiment X, but then someone questioned whether the results of that experiment really supported the hypothesis in question or not, so an experiment X+Y was done that repeated X but also tested Y, to help distinguish between two possible interpretations of X”. The general vibe that I get from the book is that different people have different intuitions about how consciousness works, and when someone reports a result that contradicts the intuitions of other researchers, those other researchers are going to propose an alternative interpretation that saves their original intuition. Then people keep doing more experiments until at least one of the intuitions is conclusively disproven—replicating the original experiments in the process.

The analysis of the general reliability of cognitive psychology is somewhat complicated by the fact that these findings are not pure cognitive psychology, but rather cognitive neuroscience. Neuroscience is somewhat more removed from just reporting objective findings, since the statistical models used for analyzing the findings can be flawed. I’ve seen various claims about the problems with statistical tools in neuroscience, but I haven’t really dug enough into the field to say to what extent those are a genuine problem.

As suggestive evidence, a lecturer who teaches a “How reliable is cognitive neuroscience?” course reports asking students: “If you read about a finding that has been demonstrated across multiple papers in multiple journals by multiple authors, how likely do you think that finding is to be reliable?” Before a recent iteration of the course, the majority of students answered “Extremely likely” and some “Moderately likely”. After the course, “Moderately likely” became the most common answer at a little under half of the responses, followed by “Slightly likely” at around a quarter and “Extremely likely” at a little over 10%. Based on this, we might conclude that cognitive neuroscience is moderately reliable, at least as judged by MSc students who’ve just spent time reading and discussing lots of papers critical of cognitive neuroscience.

One thing worth noting is that many experiments, including many of the ones this book reports on, have two components: a behavioral component and a neuroimaging component. If the statistical models used for interpreting the brain imaging results were flawed, you might get an incorrect impression of what was happening in the brain, but the behavioral results would still be valid. If you’re maximally skeptical of neuroscience, you could choose to throw away all of the “inside the brain” results from the book and only look at the behavioral results. That seems too conservative to me, but it’s an option. Several of the experiments in the book also use EEG or single-unit recordings rather than brain imaging; these are much older and simpler techniques, so they are easier to analyze reliably.

So overall, I would expect that the broad strokes of what’s claimed in the book are reasonably correct, even if some of the details might be off.

Defining consciousness

Given that consciousness is a term loaded with many different interpretations, Dehaene reasonably starts out by explaining what he means by consciousness. He distinguishes between three different terms:

Vigilance: whether we “are conscious” in the sense of being awake vs. asleep.

Attention: having focused our mental resources on a specific piece of information.

Conscious access: some of the information we were focusing on entering our awareness and becoming reportable to others.

For instance, we might be awake (that is, vigilant) and staring hard at a computer screen, waiting for some image to be displayed. When that image does get displayed, our attention will be on it. But it might flash too quickly for us to report what it looked like, or even for us to realize that it was on the screen in the first place. If so, we don’t have conscious access to the thing that we just saw. Whereas if it had been shown for a longer time, we would have conscious access to it.

Dehaene says that when he’s talking about consciousness, he’s talking about conscious access, and also that he doesn’t particularly care to debate philosophy and whether this is really the consciousness. Rather, since we have a clearly-defined thing which we can investigate using scientific methods, we should just do that, and then think about philosophy once we better understand the empirical side of things.

It seems correct to say that studying conscious access is going to tell us many interesting things, even if it doesn’t solve literally all the philosophical questions about consciousness. In the rest of this article, I’ll just follow his conventions and use “consciousness” as a synonym for “conscious access”.

Unconscious processing of meaning

A key type of experiment in Dehaene’s work is subliminal masking. Test subjects are told to stare at a screen and report what they see. A computer program shows various geometric shapes (masks) on the screen. Then at some point, the masks are replaced for a very brief duration with something more meaningful, such as the word “radio”. If the word “radio” is sandwiched between masks, showing it for a sufficiently brief time makes it invisible. The subjects don’t even register a brief flicker, as they might if the screen had been totally blank before the word appeared.

By varying the duration for which the word is shown, researchers can control whether or not the subjects see it. At around 40 milliseconds, it is invisible to everyone. Once the duration reaches a certain threshold, which varies somewhat by person but is around 50 milliseconds, the word will be seen around half of the time. When people report not seeing a word, they also fail to name it when asked some time after the trial.

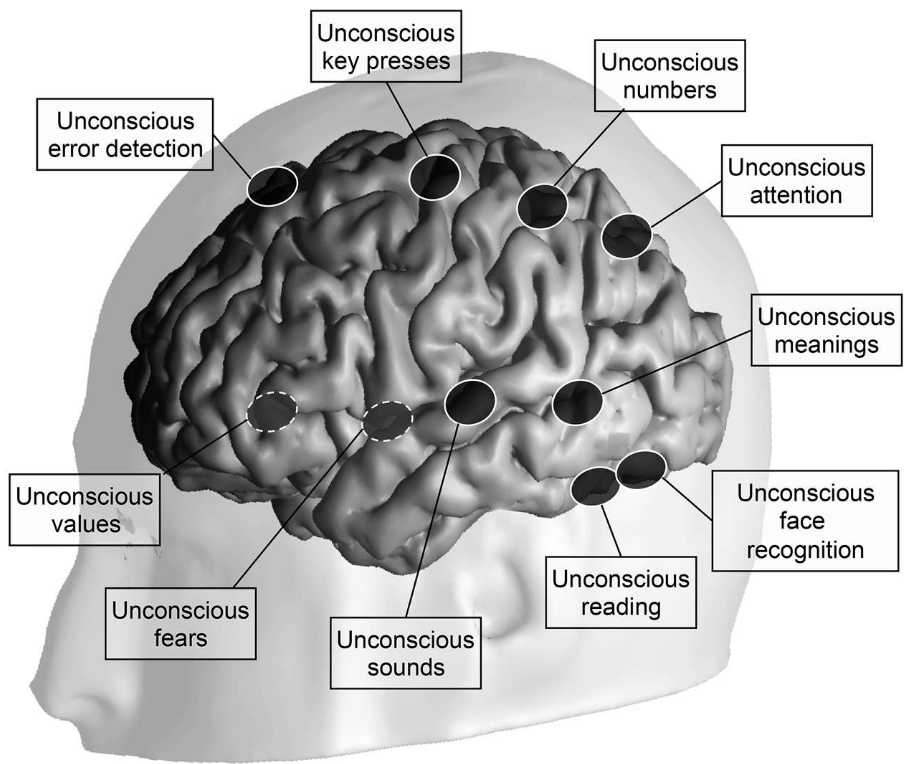

However, even when a masked target doesn’t make it into consciousness, some part of the brain still sees it. It seems as if the visual subsystem started processing the visual stimulus and parsing it in terms of its meaning, but the results of those computations then never made it all the way to consciousness.

One line of evidence for this comes from subliminal priming experiments, not to be confused with the controversial “social priming” effects in social psychology; unlike those effects, these kinds of priming experiments are well-defined and have been replicated many times. An example of a subliminal priming experiment involves first flashing a hidden word (a prime) so quickly that the participants don’t see it, then following it with a visible word (the target). For instance, people may be primed using the word “radio”, then shown the target word “house”. They are then asked to classify the target word, e.g. by pressing one button if the target word refers to a living thing and another button if it refers to an object.

Subliminal repetition priming refers to the finding that, if the prime and target are the same word and separated by less than a second, then the person will be quicker to classify the target and less likely to make a mistake.

There are indications that when this happens, the brain has parsed some of the prime’s semantic meaning and matched it against the target’s meaning. For example, priming works even when the prime is in lower case (radio) and the target is in upper case (RADIO). This might not seem surprising, but look at the difference between e.g. “a” and “A”. These are rather distinct shapes, which we’ve only learned to associate with each other due to cultural convention. Furthermore, while the prime of “range” speeds up the processing of “RANGE”, using “anger” as a prime for “RANGE” has no effect, despite “range” and “anger” having the same letters in a different order. The priming effect comes from the meaning of the prime, rather than just its visual appearance.

The parsing of meaning is not limited to words. If a chess master is shown a simplified chess position for 20 milliseconds, masked so as to make it invisible, they are faster to classify a visible chess position as a check if the hidden position was also a check, and vice versa.

I have reported the above results as saying that the brain does unconscious processing of the meaning of what it sees, but that interpretation has been controversial. After all, something like word processing, or identifying a position in check when you have extensive chess experience, is extremely overlearned and could represent an isolated special case rather than showing that the brain processes meaning more generally. The book goes into more detail about the history of this debate and the differing interpretations that were proposed; I won’t summarize that history in detail, but will just discuss a selection of experiments which also showed unconscious processing of meaning.

In arithmetic priming experiments, people are first shown a masked single-digit number and then a visible one. They are asked to say whether the target number is larger or smaller than 5. When the number used as a prime is congruent with the target (e.g. smaller than 5 when the target number is also smaller than 5), people respond more quickly than if the two are incongruent. Follow-up work has shown that the effect replicates even if the numbers used as primes are shown in writing (“four”) and the target ones as digits (“4”). The priming even works when the prime is an invisible visual number and the target a conscious spoken number.

Further experiments have shown that the priming effect is strongest if the prime is the same number as the target number (4 preceding 4). The effect then decreases the more distant the prime is from the target number: 3 preceding 4 shows less of a priming effect, but still more of one than 2 preceding 4 does, and so on. Thus, the brain has done something like extracting an abstract representation of the magnitude of the prime, and used that to influence the processing of the target’s magnitude.

Numbers could also be argued to be a special case for which we have specialized processing, but later experiments have also shown congruity effects for words in general. For example, when people are shown the word “piano” and asked to indicate whether it is an object or an animal, priming them with a word from a congruent category (“chair”) facilitates the correct response, while an incongruent prime (“cat”) hinders it.

Some epilepsy patients have had electrodes implanted in their brains as part of their treatment, and some of them have agreed to have those electrodes used for this kind of research. When they are shown invisible “scary” words such as danger, rape, or poison, electrodes implanted near the amygdala, the part of the brain involved in fear processing, register an increased level of activation, which is absent for neutral words such as fridge or sonata.

In one study, subjects were shown a “signal”, and then had to guess whether or not to press a button. As soon as they responded, they were told whether they had guessed correctly (earning money) or incorrectly (losing money). Unknown to them, each signal was preceded by a masked shape which indicated the correct response: one kind of shape indicated that pressing the button would earn them money, another indicated that not pressing the button would earn them money, and a third meant that either choice had an equal chance of being correct. Even though the subjects were never aware of seeing the shapes, once enough trials had passed, they started getting many more responses correct than chance alone would predict. An unconscious value system had associated the shapes with different actions, and was using the subliminal primes to choose the right action.

Unconscious processing can also weigh the average value of a number of variables. In one type of experiment, subjects choose cards from four different decks. Each deck has cards that cause the subject to either earn or lose reward money, with each deck having a different distribution of cards. Two of the decks are “bad”, causing the subjects to lose money on net, and two of them are “good”, causing them to gain money on net. By the end of the experiment, subjects have consciously figured out which is which, and can easily report this. However, measurements of skin conductance indicate that even before they have consciously identified the good and bad decks, there comes a point when they’ve drawn enough cards that being about to draw from a bad deck causes their hands to sweat. A subconscious process has already started predicting which decks are bad, and is producing a subliminal gut feeling.

A similar unconscious averaging of several variables can also be shown using the subliminal priming paradigm. Subjects are shown five arrows one at a time, some of which point left and some of which point right. They are then asked for the direction that the majority of the arrows were pointing to. When the arrows are made invisible by subliminal masking, subjects who are forced to guess feel like they are just making random guesses, but are in fact responding much more accurately than by chance alone.

There are more examples in the book, but these should be enough to convey the general idea: many different sensory inputs are automatically registered and processed in the brain, even if they are never shown for long enough to make it all the way to consciousness. Unconsciously processed stimuli can even cause movement commands to be generated in the motor cortex and sent to the muscles, though not necessarily at an intensity that would be sufficient to cause actual action.

What about consciousness, then?

So given everything that our brain does automatically and without conscious awareness, what’s up with consciousness? What is it, and what does it do?

Some clues can be found by investigating the neural difference between conscious and unconscious stimuli. Remember that masking experiments show a threshold effect in whether a stimulus is seen or not: if a stimulus which is preceded by a mask is shown for 40 milliseconds, then it’s invisible, but at around 50 milliseconds it starts to become visible. In experiments where the duration of the stimulus is carefully varied, there is an all-or-nothing effect: subjects do not report seeing more and more of the stimulus as the duration is gradually increased. Rather, they either see it in its entirety, or they see nothing at all.

A key finding, replicated across different sensory modalities and different methods for measuring brain activation (fMRI, EEG, and MEG), is that once the strength of a stimulus exceeds a certain threshold, the neural signal associated with it is massively boosted and spreads to brain regions it wouldn’t have reached otherwise; this is what a stimulus becoming conscious involves. Exceeding the key threshold amplifies the neural signal generated by the sensory regions, so that it can spread widely rather than fading away before it reaches all of those regions.

Dehaene writes, when discussing an experiment where this was measured using visually flashed words as the stimulus:

By measuring the amplitude of this activity, we discovered that the amplification factor, which distinguishes conscious from unconscious processing, varies across the successive regions of the visual input pathway. At the first cortical stage, the primary visual cortex, the activation evoked by an unseen flashed word is strong enough to be easily detectable. However, as it progresses forward into the cortex, masking makes it lose strength. Subliminal perception can thus be compared to a surf wave that looms large on the horizon but merely licks your feet when it reaches the shore. By comparison, conscious perception is a tsunami—or perhaps an avalanche is a better metaphor, because conscious activation seems to pick up strength as it progresses, much as a minuscule snowball gathers snow and ultimately triggers a landslide.

To bring this point home, in my experiments I flashed words for only 43 milliseconds, thereby injecting minimal evidence into the retina. Nevertheless, activation progressed forward and, on conscious trials, ceaselessly amplified itself until it caused a major activation in many regions. Distant brain regions also became tightly correlated: the incoming wave peaked and receded simultaneously in all areas, suggesting that they exchanged messages that reinforced one another until they turned into an unstoppable avalanche. Synchrony was much stronger for conscious than for unconscious targets, suggesting that correlated activity is an important factor in conscious perception.

These simple experiments thus yielded a first signature of consciousness: an amplification of sensory brain activity, progressively gathering strength and invading multiple regions of the parietal and prefrontal lobes. This signature pattern has often been replicated, even in modalities outside vision. For instance, imagine that you are sitting in a noisy fMRI machine. From time to time, through earphones, you hear a brief pulse of additional sound. Unknown to you, the sound level of these pulses is carefully set so that you detect only half of them. This is an ideal way to compare conscious and unconscious perception, this time in the auditory modality. And the result is equally clear: unconscious sounds activate only the cortex surrounding the primary auditory area, and again, on conscious trials, an avalanche of brain activity amplifies this early sensory activation and breaks into the inferior parietal and prefrontal areas.

Dehaene goes into a considerable amount of detail about the different neuronal signatures which have been found to correlate with consciousness, and the experimental paradigms which have been used to test whether or not those signatures are mere correlates rather than parts of the causal mechanism. I won’t review all of that discussion here, but will summarize some of his conclusions.

Consciousness involves a neural signal activating self-reinforcing loops of activity, which causes wide brain regions to synchronize to process that signal.

Consider what happens when someone in the audience of a performance starts clapping their hands, soon causing the whole audience to burst into applause. As one person starts clapping, other people hear it and start clapping in turn; this becomes a self-reinforcing effect where your clapping causes other people to clap, and you are more likely to continue clapping if other people are also still clapping. In a similar way, the threshold effect of conscious activation seems to involve some neurons sending a signal, causing other neurons to activate and join in on broadcasting that signal. The activation threshold is a point where enough neurons have sufficient mutual excitation to create a self-sustaining avalanche of excitation, spreading throughout the brain.
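The threshold behavior described above can be illustrated with a toy model. This is my own sketch, not Dehaene’s GNW model: a single population whose activity feeds back onto itself through a steep sigmoid. The parameter values (gain, threshold, recurrent weight, pulse strength) are arbitrary assumptions chosen to make the model bistable. A brief input pulse either fades away or tips the population into a self-sustaining high state, and which of the two happens depends on whether the input lasts long enough, loosely mirroring the all-or-nothing effect of stimulus duration.

```python
import math


def sigmoid(x, gain=10.0, threshold=0.5):
    """Steep nonlinearity: weak input gives little output, strong input saturates."""
    return 1.0 / (1.0 + math.exp(-gain * (x - threshold)))


def simulate_ignition(pulse_steps, pulse_strength=0.3, recurrent_weight=1.0, total_steps=30):
    """Activity trace of a toy self-exciting population (illustrative parameters).

    The external input is on for the first `pulse_steps` time steps only;
    afterwards the population is driven purely by its own previous activity.
    """
    activity = 0.0
    trace = []
    for t in range(total_steps):
        external = pulse_strength if t < pulse_steps else 0.0
        activity = sigmoid(recurrent_weight * activity + external)
        trace.append(activity)
    return trace


# Short pulses (1 or 2 steps) fade away; pulses of 3 or more steps ignite and
# keep themselves active long after the input has stopped.
for steps in range(1, 6):
    final = simulate_ignition(steps)[-1]
    print(f"{steps} step pulse: {'ignited' if final > 0.5 else 'faded'}")
```

There is no intermediate outcome: the final activity ends up near one of two stable levels, which is the point of the clapping analogy.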

The spread of activation is further facilitated by a “brain web” of long-distance neurons, which link multiple areas of the brain together into a densely interconnected network. Not all areas of the brain are organized in this way: for instance, sensory regions are mostly connected to their immediate neighbors, with visual area V1 being connected primarily to visual area V2, V2 mostly to V1 and V3, and so on. But higher areas of the cortex are much more tightly joined together, in a network where area A projecting activity to area B usually means that area B also projects activity back to area A. These areas also form triangular connections, where region A might project into regions B and C, which then both also project to each other and back to A. This long-distance network joins not only areas of the cortex, but is also connected to regions such as the thalamus (associated with e.g. attention and vigilance), the basal ganglia (involved in decision-making and action), and the hippocampus (involved in episodic memory).

A stimulus becoming conscious involves the signal associated with it achieving enough strength to activate some of the associative areas that are connected with this network, which Dehaene calls the “global neuronal workspace” (GNW). As those areas start broadcasting the signal associated with the stimulus, other areas in the network receive it and start broadcasting it in turn, creating the self-sustaining loop. As this happens, many different regions end up processing the signal at the same time, synchronizing their processing around the contents of that signal. Dehaene suggests that the things we are conscious of at any given moment are exactly the things being processed in our GNW at that moment.

Dehaene describes this as “a decentralized organization without a single physical meeting site” where “an elitist board of executives, distributed in distant territories, stays in sync by exchanging a plethora of messages”. While he mostly reviews evidence gathered from investigating sensory inputs, his model holds that besides sensory areas, many other regions, such as those associated with memory and attention, also feed into and manipulate the contents of the network. Once a stimulus enters the GNW, networks regulating top-down attention can amplify and “help keep alive” stimuli that seem especially important to focus on, and memory networks can commit the stimulus to memory, insert earlier memories triggered by the sight of the stimulus into the network, or both.

In the experiments on subliminal processing, an unconscious prime may affect the processing of a conscious stimulus that comes very soon afterwards, but since its activation soon fades out, it can’t be committed to memory or verbally reported afterwards. A stimulus that becomes conscious and is maintained in the GNW both has its signal kept alive for longer and gains better access to memory networks, which may store it so that it can be re-broadcast into the GNW later.

The global workspace can only be processing a single item at a time.

Various experiments show the existence of an “attentional blink”: if your attention is strongly focused on one thing, it takes some time to disengage from it and reorient your attention to something else. For instance, in one experiment people are shown a stream of symbols. Most of the symbols are digits, but some are letters. People are told to remember the letters. While the first letter is easy to remember, if two letters are shown in rapid succession, the subjects might not even realize that two of them were present, and they might be surprised to learn that this was the case. The act of attending to the first letter enough to memorize it creates a “blink of the mind” which prevents the second letter from ever being noticed.

Dehaene’s explanation for this is that the GNW can only be processing a single item at once. The first letter is seen, processed by the early visual centers, then reaches sufficient strength to make it into the workspace. This causes the workspace neurons to synchronize their processing around the first letter and try to keep the signal active for long enough for it to be memorized—and while they are still doing so, the second letter shows up. It is also processed by the visual regions and makes it to the associative region, but the attention networks are still reinforcing the signal associated with the original letter and keeping it active in the workspace. The new letter can’t muster enough activation in time to get its signal broadcast into the workspace, so by the time the activation generated by the first letter starts to fade, the signal from the second letter has also faded out. As a result, the second signal never makes it to the workspace where it could leave a conscious memory trace of having been observed.

When two events happen simultaneously or in quick succession, awareness of one of them is not always suppressed. If there isn’t too much distraction (due to “internal noise, distracting thoughts, or other incoming stimuli”), the signal of the second event may survive for long enough in an unconscious buffer to make it into the GNW after the first event has been processed. The post-stimulus masking shape in the subliminal masking experiments helps erase the contents of this buffer, by providing a new stimulus that overwrites the old one. In these cases, people’s judgment of the timing of the events is systematically wrong: rather than perceiving the events as simultaneous, they believe the second event happened at the time when it entered their consciousness.
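The serial-bottleneck account of the attentional blink, together with the unconscious buffer, can be sketched as a small simulation. Every number in it (how long the workspace stays occupied, how quickly a buffered signal decays, the minimum strength needed to enter) is an illustrative assumption rather than a measured value, and masking is not modeled. Each stimulus waits in the buffer until the workspace is free; if its decaying signal is still strong enough at that point, it becomes conscious, and the moment it enters is the moment the subject would report perceiving it.

```python
import math


def workspace_access(onsets_ms, hold_ms=300.0, decay_ms=100.0, entry_threshold=0.3):
    """Toy serial workspace (all parameters are illustrative assumptions).

    onsets_ms: stimulus onset times, in chronological order.
    Returns, for each stimulus, the time it entered the workspace (the time a
    subject would report perceiving it), or None if it never became conscious.
    """
    free_at = 0.0  # time at which the workspace next becomes available
    entries = []
    for onset in onsets_ms:
        entry = max(onset, free_at)
        # While waiting in the unconscious buffer, the signal decays exponentially.
        strength = math.exp(-(entry - onset) / decay_ms)
        if strength >= entry_threshold:
            entries.append(entry)
            free_at = entry + hold_ms  # the workspace is occupied by this item
        else:
            entries.append(None)  # faded before the workspace became free
    return entries


print(workspace_access([0, 100]))  # second letter missed: the "blink"
print(workspace_access([0, 200]))  # second letter seen, but perceived late
print(workspace_access([0, 400]))  # second letter arrives after the blink is over
```

In the middle case, the sketch reproduces the timing error described above: the second letter is perceived at 300 ms rather than at its true onset of 200 ms.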

As an interesting aside, as a result of these effects, the content of our consciousness is always slightly delayed relative to when an event actually happened: a stimulus getting into the GNW takes at least one-third of a second, and may take substantially longer if we are distracted. The brain contains a number of mechanisms for compensating for the delay in GNW access, such as prediction mechanisms which anticipate how familiar events should unfold before they’ve actually happened.

Disrupting or stimulating the GNW has the effects that this theory would predict.

One of the lines of argument by which Dehaene defends the claim that GNW activity is genuinely the same thing as conscious activity, and not a mere correlate, is that artificially interfering with GNW activity has the kinds of effects that we might expect.

To do this, we can use Transcranial Magnetic Stimulation (TMS) to create magnetic fields which stimulate electrical activity in the brain, or, if electrodes have been placed in a person’s brain, those can be used to stimulate the brain directly.

In one experiment, TMS was used to stimulate the visual cortex of test subjects in a way that created a hallucination of light. By varying the intensity of the stimulation, the researchers could control whether or not the subjects noticed anything. On trials when the subjects reported becoming conscious of a hallucination, the avalanche wave associated with consciousness appeared, and it arose faster than normal. In Dehaene’s interpretation, the magnetic pulse bypassed the normal initial processing stages for vision and instead created a neuronal activation directly in a higher cortical area, speeding up conscious access by about 0.1 seconds.

Experiments have also used TMS to successfully erase awareness of a stimulus. One experiment described in the book uses a dual TMS setup. First, a subject is zapped with a magnetic pulse that causes them to see a bit of (non-existent) movement. After it has been confirmed that subjects report becoming conscious of movement when they are zapped with the first pulse, they are then subjected to a trial where they are first zapped with the same pulse, then immediately thereafter with another pulse that’s aimed to disrupt the signal from getting access to the GNW. When this is done, subjects report no longer being aware of having seen any movement.

The functions of consciousness

So what exactly is the function of consciousness? Dehaene offers four different functions.

Conscious sampling of unconscious statistics and integration of complicated information

Suppose that you are a Bayesian decision theorist trying to choose between two options, A and B. For each of the two options, you’ve computed a probability distribution over the possible outcomes that may result if you choose it. In order to actually make your choice, you need to collapse your probability distributions into a point estimate of the expected value of choosing A versus B, to know which one is actually better.
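The collapse from distributions to a decision can be written out in a few lines. The outcomes and probabilities below are made up for illustration; each option is represented, as an assumption of this sketch, as a list of (value, probability) pairs, and the choice goes to the option with the higher expected value.

```python
def expected_value(distribution):
    """Collapse a list of (value, probability) pairs into a single expected value."""
    return sum(value * probability for value, probability in distribution)


# Hypothetical outcome distributions for the two options.
options = {
    "A": [(10.0, 0.5), (-5.0, 0.5)],  # risky: expected value 2.5
    "B": [(3.0, 0.9), (0.0, 0.1)],    # safe: expected value 2.7
}

best = max(options, key=lambda name: expected_value(options[name]))
print(best)  # → B
```

Whatever the shape of the underlying distributions, the decision only needs the two numbers produced by `expected_value`.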

In Dehaene’s account, consciousness does something like this. We have a number of unconscious systems which are constantly doing Bayesian statistics and constructing probability distributions about how to e.g. interpret visually ambiguous stimuli, weighing multiple hypotheses at the same time. In order for decision-making to actually be carried out, the system has to choose one of the interpretations and act on the assumption that it is correct. The hypothesis that the unconscious process selects as correct is then what gets fed into consciousness. For example, when I look at the cup of tea in front of me, I don’t see a vast jumble of shifting hypotheses about what this visual information might represent: rather, I just see what I think is a cup of tea, which is what a subconscious process has chosen as the most likely interpretation.

Dehaene offers the analogy of the US President being briefed by the FBI. The FBI is a vast organization with thousands of employees: they are constantly sifting through enormous amounts of data and forming hypotheses about topics of national security relevance. But it would be useless for the FBI to present the President with every single report collected by every single field agent, as well as every analysis compiled by every single analyst in response. Rather, the FBI needs to internally settle on some overall summary of what it believes is going on, and then present that to the President, who can then act on the information. Similarly, Dehaene suggests that consciousness is a place where different brain systems can exchange summaries of their models and integrate conflicting evidence in order to arrive at an overall conclusion.

Dehaene discusses a few experiments which lend support to this interpretation, though here the discussion seems somewhat more speculative than in other parts of the book. One of his pieces of evidence comes from recordings of neuronal circuits which integrate many parts of a visual scene into an overall image, resolving local ambiguities by using information from other parts of the image. Under anesthesia, neuronal recordings show that this integration process is disrupted; consciousness “is needed for neurons to exchange signals in both bottom-up and top-down directions until they agree with each other”. Another experiment shows that if people are shown an artificial stimulus which has been deliberately crafted to be ambiguous, their conscious impression of the correct interpretation keeps shifting: first it’s one interpretation, then the other. By varying the parameters of the stimulus, researchers can control roughly how often people see each interpretation. If Bayesian statistics suggests that interpretation A is 30% likely and interpretation B 70% likely, say, then people’s impression of the image will keep shifting so that they see interpretation A roughly 30% of the time and interpretation B roughly 70% of the time.

What we see, at any time, tends to be the most likely interpretation, but other possibilities occasionally pop up and stay in our conscious vision for a time duration that is proportional to their statistical likelihood. Our unconscious perception works out the probabilities—and then our consciousness samples from them at random.
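To make the contrast concrete, here is a toy Python sketch of two ways of turning the same posterior into a percept: always reporting the most probable interpretation, versus drawing an interpretation at random in proportion to its probability. The 30/70 posterior is the hypothetical example from above. Treating each time step as an independent draw is a simplifying assumption (real perceptual switching has dominance durations and temporal structure that this ignores), and the function names are hypothetical.

```python
import random
from collections import Counter


def perceive_most_likely(posterior: dict[str, float]) -> str:
    """Report only the single most probable interpretation (argmax)."""
    return max(posterior, key=lambda name: posterior[name])


def perceive_by_sampling(posterior: dict[str, float], steps: int, rng: random.Random) -> Counter:
    """Draw one interpretation per time step, with probability equal to its posterior,
    and count how many steps each interpretation spends in awareness."""
    names = list(posterior)
    weights = [posterior[name] for name in names]
    return Counter(rng.choices(names, weights=weights, k=steps))


posterior = {"A": 0.3, "B": 0.7}  # hypothetical 30% / 70% split
rng = random.Random(0)            # fixed seed so the run is reproducible
counts = perceive_by_sampling(posterior, steps=10_000, rng=rng)

print(perceive_most_likely(posterior))                        # → B
print({name: counts[name] / 10_000 for name in posterior})    # roughly 0.3 for A, 0.7 for B
```

The argmax version never shows interpretation A at all, while the sampling version shows it about 30% of the time, which is the pattern the quoted passage describes.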

In Dehaene’s account, consciousness is involved in higher-level integration of the meaning of concepts. For instance, our understanding of a painting such as the Mona Lisa is composed of many different things. Personally, if I think about the Mona Lisa, I see a mental image of the painting itself, I get an association with the country of Italy, I remember having first learned about the painting in a Donald Duck story, and I also remember my friend telling me about the time she saw the original painting itself. These are different pieces of information, stored in different formats in different regions of the brain, and the kind of global neuronal integration carried out by the GNW allows all of these different interpretations to come together, with every system participating in constructing an overall coherent, synchronous interpretation.

All of this sounds sensible enough. At the same time, after all the previous discussion about unconscious decision-making and unconscious integration of information, this leaves me feeling somewhat unsatisfied. If it has been shown that, e.g., unconsciously processed cues are enough to guide our decision-making, then how do we square that with the claim that consciousness is necessary for settling on a single interpretation that would allow us to take actions?

First, my interpretation is that even though unconscious processing and decision-making do happen, their effect is relatively weak. If you prime people with a masked stimulus, that influences their decision-making so as to give them better performance, but it doesn’t give them perfect performance. In the experiment where masked cues predicted the right action and unconscious learning associated each cue with the relevant action, the subjects only ended up with an average of 63% correct actions.

Looking at the cited paper, the authors note that if the cues had been visible, it would only have taken a couple of trials for the subjects to learn the optimal behaviors. In the actual experiment, performance improved slowly, reaching a plateau after around 60 trials. Thus, even though unconscious learning and decision-making happen, conscious learning and decision-making can be significantly more effective.

Second, while I don’t see Dehaene mentioning it, I’ve always liked the PRISM theory of consciousness, which suggests that one of the functions of consciousness is to be a place for resolving conflicting plans for controlling the skeletal muscles. In the unconscious decision-making experiments, the tasks have mostly been pretty simple and have only involved the kinds of goals that could all be encapsulated within a single motivational system. In real life, however, we often run into situations where different brain systems output conflicting instructions. For instance, if we are carrying a hot cup of tea, our desire to drop the cup may be competing against our desire to carry it to the table, and these may have their origin in very different sorts of motivations. Information from both systems would need to be taken into account and integrated in order to make an overall decision.

To stretch Dehaene’s FBI metaphor: as long as the FBI is doing things that fall within its jurisdiction and that it is equipped to handle, it can just do them without getting in contact with the President. But if the head of the FBI and the head of the CIA have conflicting ideas about what should be done, on a topic where the two agencies have overlapping jurisdiction, then it might be necessary to bring the disagreement out into the open so that a higher-up can make the call. Of course, there isn’t any single “President” in the brain who would make the final decision: rather, it’s more as if the chiefs of all the other alphabet-soup agencies were also called in, and they then hashed out the details of their understanding until they came to a shared agreement about what to do.

Lasting thoughts and working memory

As already touched upon, consciousness is associated with memory. Unconsciously registered information tends to fade very quickly and then disappear. In all the masking experiments, the interval between the prime and the target is very brief; if it were any longer, there would be no learning or effect on decision-making. For associating cues and outcomes with each other over an extended period of time, for example, the cue has to be consciously perceived.

Dehaene describes an experiment which demonstrates exactly this:

The cognitive scientists Robert Clark and Larry Squire conducted a wonderfully simple test of temporal synthesis: time-lapse conditioning of the eyelid reflex. At a precisely timed moment, a pneumatic machine puffs air toward the eye. The reaction is instantaneous: in rabbits and humans alike, the protective membrane of the eyelid immediately closes. Now precede the delivery of air with a brief warning tone. The outcome is called Pavlovian conditioning (in memory of the Russian physiologist Ivan Petrovich Pavlov, who first conditioned dogs to salivate at the sound of a bell, in anticipation of food). After a short training, the eye blinks to the sound itself, in anticipation of the air puff. After a while, an occasional presentation of the isolated tone suffices to induce the “eyes wide shut” response.

The eye-closure reflex is fast, but is it conscious or unconscious? The answer, surprisingly, depends on the presence of a temporal gap. In one version of the test, usually termed “delayed conditioning,” the tone lasts until the puff arrives. Thus the two stimuli briefly coincide in the animal’s brain, making the learning a simple matter of coincidence detection. In the other, called “trace conditioning,” the tone is brief, separated from the subsequent air puff by an empty gap. This version, although minimally different, is clearly more challenging. The organism must keep an active memory trace of the past tone in order to discover its systematic relation to the subsequent air puff. To avoid any confusion, I will call the first version “coincidence-based conditioning” (the first stimulus lasts long enough to coincide with the second, thus removing any need for memory) and the second “memory-trace conditioning” (the subject must keep in mind a memory trace of the sound in order to bridge the temporal gap between it and the obnoxious air puff).

The experimental results are clear: coincidence-based conditioning occurs unconsciously, while for memory-trace conditioning, a conscious mind is required. In fact, coincidence-based conditioning does not require any cortex at all. A decerebrate rabbit, without any cerebral cortex, basal ganglia, limbic system, thalamus, and hypothalamus, still shows eyelid conditioning when the sound and the puff overlap in time. In memory-trace conditioning, however, no learning occurs unless the hippocampus and its connected structures (which include the prefrontal cortex) are intact. In human subjects, memory-trace learning seems to occur if and only if the person reports being aware of the systematic predictive link between the tone and the air puff. Elderly people, amnesiacs, and people who were simply too distracted to notice the temporal relationship show no conditioning at all (whereas these manipulations have no effect whatsoever on coincidence-based conditioning). Brain imaging shows that the subjects who gain awareness are precisely those who activate their prefrontal cortex and hippocampus during the learning.
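The logical difference between the two conditioning paradigms can be illustrated with a toy Python sketch. This is not a model of the cerebellum, hippocampus, or prefrontal cortex: the timings, the learning rate, and the hypothetical `trace_seconds` parameter (how long a memory trace of the tone is maintained after it ends) are all made up. A learner that can only detect coincidences learns the delay version but never the trace version, while a learner that can also hold the tone in memory across the gap learns both.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Trial:
    tone_start: float  # seconds
    tone_end: float    # seconds
    puff_time: float   # seconds


def tone_overlaps_puff(trial: Trial) -> bool:
    """Coincidence detection: the tone is still sounding when the puff arrives."""
    return trial.tone_start <= trial.puff_time < trial.tone_end


def make_trace_detector(trace_seconds: float) -> Callable[[Trial], bool]:
    """Coincidence detection plus a maintained memory trace of the tone that
    lasts `trace_seconds` after the tone ends (hypothetical parameter)."""
    def detect(trial: Trial) -> bool:
        gap = trial.puff_time - trial.tone_end
        return tone_overlaps_puff(trial) or 0 <= gap <= trace_seconds
    return detect


def train(trials: list[Trial], detects_pairing: Callable[[Trial], bool], rate: float = 0.2) -> float:
    """Association strength grows toward 1 only on trials where the learner
    registers tone and puff together; on other trials nothing is learned."""
    strength = 0.0
    for trial in trials:
        if detects_pairing(trial):
            strength += rate * (1.0 - strength)
    return strength


delay_trials = [Trial(0.0, 0.6, 0.5)] * 10    # tone lasts until the puff
trace_trials = [Trial(0.0, 0.25, 0.75)] * 10  # 0.5 s silent gap before the puff

coincidence_only = tone_overlaps_puff
with_memory_trace = make_trace_detector(trace_seconds=1.0)

print(train(delay_trials, coincidence_only))   # about 0.89: learned
print(train(trace_trials, coincidence_only))   # 0.0: never learned
print(train(trace_trials, with_memory_trace))  # about 0.89: learned
```

Setting `trace_seconds` below the gap (for example `make_trace_detector(0.0)`) makes trace learning fail again; any resemblance to the distracted or amnesic subjects in the quote is only a loose analogy.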

Carrying out artificial serial operations

Consider what happens when you calculate 12 * 13 in your head.

When you do so, you have some conscious awareness of the steps involved: maybe you first remember that 12 * 12 = 144 and then add 144 + 12, or maybe you first multiply 12 * 10 = 120 and then keep that result in memory as you multiply 12 * 3 = 36 and then add 120 + 36. Regardless of the strategy, the calculation happens consciously.
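The two strategies can be written out explicitly in Python to show where an intermediate result has to be held while the next step runs. The function names are hypothetical, and this only illustrates the arithmetic, not how the brain carries it out.

```python
def via_known_square(a: int) -> int:
    """Strategy 1 for a * (a + 1): recall a * a, then add one more a."""
    square = a * a     # 12 * 12 = 144, recalled as a known fact
    return square + a  # 144 + 12 = 156


def via_tens_and_units(a: int, b: int) -> int:
    """Strategy 2 for a * b: multiply by the tens, hold that, then add a * units."""
    tens, units = divmod(b, 10)  # 13 -> (1, 3)
    held = a * tens * 10         # 12 * 10 = 120, kept in working memory
    return held + a * units      # 120 + 12 * 3 = 120 + 36 = 156


print(via_known_square(12), via_tens_and_units(12, 13))  # → 156 156
```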

Dehaene holds that this kind of multi-step arithmetic can’t happen unconsciously. We can do single-step arithmetic unconsciously: for example, people can be shown a single masked digit n and then be asked to carry out one of three operations. People might be asked to name the digit (the “n” task), to add 2 to n and report the resulting number (the “n + 2” task), or to report whether or not it’s smaller than 5 (the “n < 5” task). On all of these tasks, even if people haven’t consciously seen the digit, when they are forced to guess they typically get the right answer half of the time.

However, unconscious two-step arithmetic fails. If people are flashed an invisible digit and told to first add 2 to it and then report whether the result is more or less than 5 (the “(n + 2) > 5” task), their performance is at chance level. The unconscious mind can carry out a single arithmetic operation, but it can’t then store the result of that operation and use it as the input of a second operation, even though it could carry out either of the two operations alone.
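A small Python sketch of the four tasks, assuming for illustration that the digits are 1 to 9 (the actual stimulus set is not given here, and the function names are hypothetical). It shows why the chained task is genuinely new: its answer cannot be read off any single one-step operation, because the result of n + 2 has to be stored and then fed into the comparison.

```python
def name_task(n: int) -> int:
    return n  # "n": report the digit itself


def plus_two_task(n: int) -> int:
    return n + 2  # "n + 2": one arithmetic operation


def less_than_five_task(n: int) -> bool:
    return n < 5  # "n < 5": one comparison


def chained_task(n: int) -> bool:
    """Task "(n + 2) > 5": the output of step 1 must be held and used as the input of step 2."""
    intermediate = plus_two_task(n)  # step 1
    return intermediate > 5          # step 2 reads the stored intermediate


digits = range(1, 10)  # hypothetical digit set
# Reusing the single comparison (answering "not n < 5") gets the chained task wrong:
mismatches = [n for n in digits if chained_task(n) != (not less_than_five_task(n))]
print(mismatches)  # → [4]
```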

Dehaene notes that this might seem to contradict a previous finding, namely that the unconscious brain can accumulate multiple pieces of information over time. For instance, in the arrow experiment, people were shown several masked arrows one at a time; at the end, they could tell whether most of them had been pointing to the left or to the right. Dehaene says that the difference is that running a neural circuit which accumulates multiple observations counts as a single operation for the brain: while the accumulator stores information about how many arrows have been observed so far, that information can’t be taken out of it and used as the input for a second calculation.

The accumulator also can’t reach a decision by itself. For instance, if people saw the arrows consciously, they could reach a decision after having seen three arrows that pointed one way, knowing that the remaining arrows couldn’t change the overall decision anymore. In unconscious trials, they can’t use this kind of strategic reasoning: the unconscious circuit can only keep adding up the arrows, rather than adding up the arrows and also checking whether a rule of the type “if arrows_seen_pointing_one_way >= 3” has been satisfied yet.
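A toy Python contrast between the two modes described above, assuming an odd number of arrows (five in the usage example) so that a majority always exists. The function names are hypothetical; the point is only that the second version performs an extra operation, a rule check on the running counts, that the pure accumulator does not.

```python
def accumulate_only(arrows: list[str]) -> str:
    """Pure accumulator: sums evidence over every arrow, then reports the sign."""
    total = sum(1 if arrow == "L" else -1 for arrow in arrows)
    return "L" if total > 0 else "R"


def accumulate_with_rule(arrows: list[str]) -> tuple[str, int]:
    """Accumulator plus an IF-THEN check after each arrow: stop as soon as one
    direction has a majority that the remaining arrows can no longer overturn."""
    majority = len(arrows) // 2 + 1  # 3 when there are 5 arrows
    left = right = 0
    for seen, arrow in enumerate(arrows, start=1):
        if arrow == "L":
            left += 1
        else:
            right += 1
        if left >= majority:
            return "L", seen
        if right >= majority:
            return "R", seen
    raise ValueError("no majority: use an odd number of arrows")


arrows = ["L", "L", "L", "R", "R"]
print(accumulate_only(arrows))       # → L (after all 5 arrows)
print(accumulate_with_rule(arrows))  # → ('L', 3)
```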

According to Dehaene, implementing such rules is one of the functions of consciousness. In fact, he explicitly compares consciousness to a production system: an AI design which holds a number of objects in a working memory and also contains a number of IF-THEN rules, such as “if there is an A in working memory, change it to the sequence BC”. If multiple rules match, one of them is chosen for execution according to some criteria. After a rule has fired, the contents of working memory get updated, and the cycle repeats. The conscious mind, Dehaene says, works on a similar principle, creating a biological Turing machine that can combine operations from a number of neuronal modules, flexibly chaining them together for serial execution.
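A toy production system in Python, using the rule from the text (replace an A in working memory with the sequence B C) plus one extra hypothetical rule. Choosing the first matching rule in list order is just one simple conflict-resolution criterion, assumed here for brevity; real production-system architectures use a variety of criteria. None of this is meant as Dehaene’s own formalism.

```python
from dataclasses import dataclass

WorkingMemory = tuple[str, ...]


@dataclass(frozen=True)
class Rule:
    """IF `lhs` is in working memory THEN replace its first occurrence with `rhs`."""
    lhs: str
    rhs: tuple[str, ...]

    def matches(self, wm: WorkingMemory) -> bool:
        return self.lhs in wm

    def fire(self, wm: WorkingMemory) -> WorkingMemory:
        i = wm.index(self.lhs)
        return wm[:i] + self.rhs + wm[i + 1:]


def run(wm: WorkingMemory, rules: list[Rule], max_cycles: int = 100) -> tuple[WorkingMemory, list[str]]:
    """Match, select, fire, repeat; halts when no rule matches or after max_cycles."""
    fired = []
    for _ in range(max_cycles):
        matching = [rule for rule in rules if rule.matches(wm)]  # match
        if not matching:
            break                                               # nothing applies: halt
        chosen = matching[0]                                    # conflict resolution by list order
        wm = chosen.fire(wm)                                    # act, then start the next cycle
        fired.append(f"{chosen.lhs} -> {''.join(chosen.rhs)}")
    return wm, fired


rules = [Rule("A", ("B", "C")), Rule("B", ("D",))]
final, fired = run(("A",), rules)
print(final, fired)  # → ('D', 'C') ['A -> BC', 'B -> D']
```

The `max_cycles` cap is there because rules such as A -> A A would otherwise keep firing forever.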

A social sharing device

If a thought is conscious, we can describe it and report it to other people using language. I won’t elaborate on this, given that the advantages of being able to use language to communicate with others are presumably obvious. I’ll just note that Dehaene highlights one interesting perspective: one where other people are viewed as additional modules that can carry out transformations on the objects in the workspace.

Whether it’s a subsystem in the brain that’s applying production rules to the workspace contents, or whether you are communicating the contents to another person who then comments on them (as guided by some subsystem in their brain), the same principle of “production rules transforming the workspace contents” still applies. The only difference is that in one case the rules and transformations come from subsystems located within a single brain, while in the other, subsystems from multiple brains are engaged in joint manipulation of the contents. Of course, linguistic transmission is lossy, since subsystems in different brains can’t communicate with the same bandwidth as subsystems within a single brain. (Yet.)

Other stuff

Dehaene also discusses a bunch of other things in his book: for instance, he talks about comatose patients and how his research has been applied to study their brains, in order to predict which patients will eventually recover and which ones will remain permanently unresponsive. This is pretty cool, and feels like a confirmation of the theories being on the right track, but since it’s no longer elaborating on the mechanisms and functions of consciousness, I won’t cover that here.

Takeaways for the rest of the sequence

This has been a pretty long post. Now that we’re at the end, I’m just going to highlight a few of the points which will be most important when we go forward in the multiagent minds sequence:

Consciousness can only contain a single mental object at a time.

The brain has multiple different systems doing different things; many of the systems do unconscious processing of information. When a mental object becomes conscious, many systems will synchronize their processing around analyzing and manipulating that mental object.

The brain can be compared to a production system, with a large number of specialized rules which fire in response to specific kinds of mental objects. E.g. when doing mental arithmetic, applying the right sequence of arithmetic operations for achieving the main goal.

If we take the view that the various neural systems are, in a literal and technical sense, subagents, then we can reframe the above points as follows:

The brain has multiple subagents doing different things; many of the subagents do unconscious processing of information. When a mental object becomes conscious, many subagents will synchronize their processing around analyzing and manipulating that mental object.

The collective of subagents can only have their joint attention focused on one mental object at a time.

The brain can be compared to a production system, with a large number of subagents carrying out various tasks when they see the kinds of mental objects that they care about. E.g. when doing mental arithmetic, applying the right sequence of mental operations for achieving the main goal.

Next up: constructing a mechanistic sketch of how a mind might work, combining the above points as well as the kinds of mechanisms that have already been demonstrated in contemporary machine learning, to finally end up with a model that pretty closely resembles the Internal Family Systems one.

The GNW theory has been kicking around for at least two decades, and this book was published in 2014. Given this, it is almost shocking that the idea wasn’t written up on LW before, considering its centrality to any understanding of rationality. Shocking but perhaps fortunate, since Kaj has given it a thorough and careful treatment that enables the reader both to understand the idea and evaluate its merits (and almost certainly to save the purchase price of the book).

First, on GNW itself. A lot of the early writing on rationality used the simplified system 1 / system 2 abstraction as the central concept. GNW puts actual meat on this skeleton, describing exactly what unconscious (formerly known as system 1) processes can and can’t do, how they learn, and under what conditions consciousness comes into play. Kaj elaborates more on system 2 in another post, but this review offers enough to reframe the old model in GNW-terms — a reframing that I’ve been convinced is more accurate and meaningful.

As for the post itself, its main strength and weakness is that it’s very long. The length is not due to fluff: I’ve compiled my own summary of this post in Roam that runs more than 1,000 words, with almost every paragraph worthy of inclusion. But perhaps, in particular for the purposes of a book, the post could more fruitfully be broken up into two parts: one describing the GNW model and its implications, and one covering the experimental evidence for the model and its reliability. The latter takes up almost half of the post by volume, and while it is valuable, the former could perhaps stand alone as a worthwhile article (with a reference to a discussion of the experiments so people can assess whether they buy it).

My impression is that GNW is widely accepted to be a leading contender for explaining consciousness, an important problem. This is a nice intro, and having read both this post and the book in question I can confirm that it covers the important ground fairly. I wound up coming around to a different take on consciousness, see my Book Review: Rethinking Consciousness, but while that book didn’t talk much about GNW, I found that familiarity with GNW helped me reframe those ideas and understand them better, and indeed my explanation of that theory puts GNW (which I first heard about through this post) front and center. I should add that I find GNW helpful for thinking about thinking in general, not just consciousness per se.

Also, having read both the book and the post, I probably could have just read the post and skipped the book, and wouldn’t have missed much.