Bayesian Networks Aren’t Necessarily Causal

As a casual formal epistemology fan, you’ve probably heard that the philosophical notion of causality can be formalized in terms of Bayesian networks—but also as a casual formal epistemology fan, you also probably don’t know the details all that well.

One day, while going through the family archives, you come across a meticulously maintained dataset describing a joint probability distribution over four variables: whether it rained that day, whether the sprinkler was on, whether the sidewalk was wet, and whether the sidewalk was slippery. The distribution is specified in this table (using the abbreviated labels “rain”, “slippery”, “sprinkler”, and “wet”):

(You wonder what happened that one day out of 140,000 when it rained, and the sprinkler was on, and the sidewalk was slippery but not wet. Did—did someone put a tarp up to keep the sidewalk dry, but also spill slippery oil, which didn’t count as being relevantly “wet”? Also, 140,000 days is more than 383 years—were “sprinklers” even a thing in the year 1640 C.E.? You quickly put these questions out of your mind: it is not your place to question the correctness of the family archives.)

You’re slightly uncomfortable with this unwieldy sixteen-row table. You think that there must be some other way to represent the same information, while making it clearer that it’s not a coincidence that rain and wet sidewalks tend to co-occur.

You’ve read that Bayesian networks “factorize” an unwieldly joint probability distribution into a number of more compact conditional probability distributions, related by a directed acyclic graph, where the arrows point from “cause” to “effect”. (Even a casual formal epistemology fan knows that much.) The graph represents knowledge that each variable is conditionally independent of its non-descendants given its parents, which enables “local” computations: given the values of just a variable’s parents in the graph, we can compute a conditional distribution for that variable, without needing to consider what is known about other variables elsewhere in the graph …

You’ve read that, but you’ve never actually done it before! You decide that constructing a Bayesian network to represent this distribution will be a useful exercise.

To start, you re-label the variables for brevity. (On a whim, you assign indices in reverse-alphabetical order: = wet, = sprinkler, = slippery, = rain.)

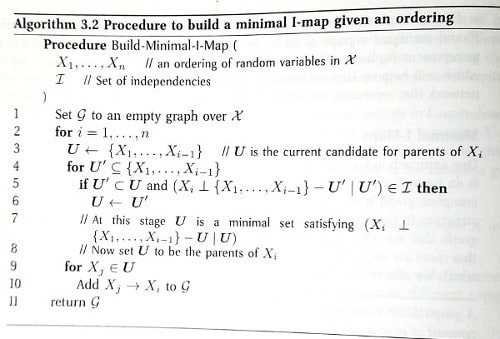

Now, how do you go about building a Bayesian network? As a casual formal epistemology fan, you are proud to own a copy of the book by Daphne Koller and the other guy, which explains how to do this in—you leaf through the pages—probably §3.4, “From Distributions to Graphs”?—looks like … here, in Algorithm 3.2. It says to start with an empty graph, and it talks about random variables, and setting directed edges in the graph, and you know from chapter 2 that the ⟂ and | characters are used to indicate conditional independence. That has to be it.

(As a casual formal epistemology fan, you haven’t actually read chapter 3 up through §3.4, but you don’t see why that would be necessary, since this Algorithm 3.2 pseudocode is telling you what you need to do.)

It looks like the algorithm says to pick a variable, allocate a graph node to represent it, find the smallest subset of the previously-allocated variables such that the variable represented by the new node is conditionally independent of the other previously-allocated variables given that subset, and then draw directed edges from each of the nodes in the subset to the new node?—and keep doing that for each variable—and then compute conditional probability tables for each variable given its parents in the resulting graph?

That seems complicated when you say it abstractly, but you have faith that it will make more sense as you carry out the computations.

First, you allocate a graph node for . It doesn’t have any parents, so the associated conditional (“conditional”) probability distribution, is really just the marginal distribution for .

Then you allocate a node for . is not independent of . (Because = 169/1400, which isn’t the same as = 8⁄25 · 1⁄7 = 8⁄175.) So you make a parent of , and your conditional probability table for separately specifies the probabilities of being true or false, depending on whether is true or false.

Next is . Now that you have two possible parents, you need to check whether conditioning on either of and would render conditionally independent of the other. If not, then both and will be parents of ; if so, then the variable you conditioned on will be the sole parent. (You assume that the case where is just independent from both and does not pertain; if that were true, wouldn’t be connected to the rest of the graph at all.)

It turns out that and are conditionally independent given . That is, . (Because the left-hand side is , and the right-hand side is .) So is a parent of , and isn’t; you draw an arrow from (and only ) to , and compile the corresponding conditional probability table.

Finally, you have . The chore of finding the parents is starting to feel more intuitive now. Out of the possible subsets of the preceding variables, you need to find the smallest subset, such that conditioning on that subset renders (conditionally) independent of the variables not in that subset. After some calculations that the authors of expository blog posts have sometimes been known to callously leave as an exercise to the reader, you determine that and are the parents of .

And with one more conditional probability table, your Bayesian network is complete!

Eager to interpret the meaning of this structure regarding the philosophy of causality, you translate the variable labels back to English:

...

This can’t be right. The arrow from “wet” to “slippery” seems fine. But all the others are clearly absurd. Wet sidewalks cause rain? Sprinklers cause rain? Wet sidewalks cause the sprinkler to be on?

You despair. You thought you had understood the algorithm. You can’t find any errors in your calculations—but surely there must be some? What did you do wrong?

After some thought, it becomes clear that it wasn’t just a calculation error: the procedure you were trying to carry out couldn’t have given you the result you expected, because it never draws arrows from later-considered to earlier-considered variables. You considered “wet” first. You considered “rain” last, and then did independence tests to decide whether or not to draw arrows from “wet” (or “sprinkler” or “slippery”) to “rain”. An arrow from “rain” to “wet” was never a possibility. The output of the algorithm is sensitive to the ordering of the variables.

(In retrospect, that probably explains the “given an ordering” part of Algorithm 3.2′s title, “Procedure to build a minimal I-map given an ordering.” You hadn’t read up through the part of chapter 3 that presumably explains what an “I-map” is, and had disregarded the title as probably unimportant.)

You try carrying out the algorithm with the ordering “rain”, “sprinkler”, “wet”, “slippery” (or , , , using your labels from before), and get this network:

—for which giving the arrows a causal interpretation seems much more reasonable.

You notice that you are very confused. The “crazy” network you originally derived, and this “true” network derived from a more intuitively causal variable ordering, are different: they don’t have the same structure, and (except for the wet → slippery link) they don’t have the same conditional probability tables. You would assume that they can’t “both be right”. If the network output by the algorithm depends on what variable ordering you use, how are you supposed to know which ordering is correct? In this example, you know from reasons outside the math, that “wet” shouldn’t cause “rain”, but you couldn’t count on that were you to apply these methods to problems further removed from intuition.

Playing with both networks, you discover that despite their different appearances, they both seem to give the same results when you use them to calculate marginal or conditional probabilities. For example, in the “true” network, is 1⁄4 (read directly from the “conditional” probability table, as “rain” has no parents in the graph). In the “crazy” network, the probability of rain can be computed as

… which also equals 1⁄4.

That actually makes sense. You were wrong to suppose that the two networks couldn’t “both be right”. They are both right; they both represent the same joint distribution. The result of the algorithm for constructing a Bayesian network—or a “minimal I-map”, whatever that is—depends on the given variable ordering, but since the algorithm is valid, each of the different possible results is also valid.

But if the “crazy” network and the “true” network are both right, what happened to the promise of understanding causality using Bayesian networks?! (You may only be a casual formal epistemology fan, but you remember reading a variety of secondary sources unanimously agreeing that this was a thing; you’re definitely not misremembering or making it up.) If both networks give the same answers to marginal and conditional probability queries, that amounts to them making the same predictions about the world. So if beliefs are supposed to correspond to predictions, in what sense could the “true” network be better? What does your conviction that rain causes wetness even mean, if someone who believed the opposite could make all the same predictions?

You remember the secondary sources talking about interventions on causal graphs: severing a node from its parents and forcing it to take a particular value. And the “crazy” network and the “true” network do differ with respect to that operation: in the “true” network, setting “wet” to be false—you again imagine putting a tarp up over the sidewalk—wouldn’t change the probability of “rain”. But in the “crazy” network, forcing “wet” to be false would change the probability of rain—to , which is (greatly reduced from the 1⁄4 you calculated a moment ago). Notably, this intervention—, if you’re remembering correctly what some of the secondary sources said about a do operator—isn’t the same thing as the conditional probability .

This would seem to satisfy your need for a sense in which the “true” network is “better” than the “crazy” network, even if Algorithm 3.2 indifferently produces either depending on the ordering it was given. (You’re sure that Daphne Koller and the other guy have more to say about other algorithms that can make finer distinctions, but this feels like enough studying for one day—and enough for one expository blog post, if someone was writing one about your inquiries. You’re a casual formal epistemology fan.) The two networks represent the same predictions about the world recorded in your family archives, but starkly different predictions about nearby possible worlds—about what would happen if some of the factors underlying the world were to change.

You feel a slight philosophical discomfort about this. You don’t like the idea of forced change, of intervention, being so integral to such a seemingly basic notion as causality. It feels almost anthropomorphic: you want the notion of cause and effect within a system to make sense without reference to the intervention of some outside agent—for there’s nothing outside of the universe. But whether this intuition is a clue towards deeper insights, or just a place where your brain has tripped on itself and gotten confused, it’s more than you understand now.

- Voting Results for the 2023 Review by (6 Feb 2025 8:00 UTC; 87 points)

- Blanchard’s Dangerous Idea and the Plight of the Lucid Crossdreamer by (8 Jul 2023 18:03 UTC; 37 points)

- 's comment on Should I Finish My Bachelor’s Degree? by (9 Jun 2024 21:27 UTC; 4 points)

- 's comment on Lack of Social Grace Is an Epistemic Virtue by (1 Aug 2023 23:09 UTC; 4 points)

Zack complicates the story in Causal Diagrams and Causal Models, in an indirect way. There’s a bit of narrative thrown in for fun. I enjoyed this in 2023 but less on re-reading.

I don’t know if the fictional statistics have been chosen carefully to allow multiple interpretations, or if any data generated by a network similar to the “true” network would necessarily also allow the “crazy” network. Maybe it’s the second, based on Wentworth’s comment that there are “equivalent graph structures” (e.g. A → B → C vs A ← B ← C vs A ← B → C). But the equivalent structures have the same number of parameters and in this one the “crazy” network has an extra parameter, so I don’t know. The aside about “the correctness of the family archives” adds doubt. A footnote would help.

The post is prodding me to think for myself and perhaps buy a textbook or two. I could play with some numbers to answer my above doubt. Those are all worthy things, but it’s less clear that they would be worthwhile. Unlike Learn Bayes Nets! there’s no promise of cosmic power on offer.

The alternate takeaway from this post is a general awareness that causal inference is complicated and has assumptions and limitations. Perhaps just that Bayesian Networks Aren’t Necessarily Causal. Drilling into the comments on both posts adds more color to that takeaway.

Overall I’m left feeling slightly let down in a way I can’t quite put my finger on. Like there’s something of value here that I’m not getting, or the author didn’t express in quite the right way for me to pick up on it. Sorry, this is frustrating feedback to get, but it’s the best I can do today.