2-Place and 1-Place Words

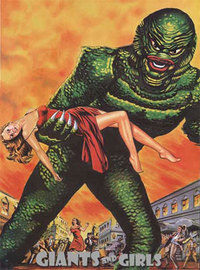

I have previously spoken of the ancient, pulp-era magazine covers that showed a bug-eyed monster carrying off a girl in a torn dress, and about how people think as if sexiness were an inherent property of a sexy entity, without dependence on the admirer.

“Of course the bug-eyed monster will prefer human females to its own kind,” says the artist (whom we’ll call Fred); “it can see that human females have soft, pleasant skin instead of slimy scales. It may be an alien, but it’s not stupid; why are you expecting it to make such a basic mistake about sexiness?”

What is Fred’s error? It is treating a function of 2 arguments (“2-place function”):

Sexiness: Admirer, Entity → [0, ∞)

As though it were a function of 1 argument (“1-place function”):

Sexiness: Entity → [0, ∞)

If Sexiness is treated as a function that accepts only one Entity as its argument, then of course Sexiness will appear to depend only on the Entity, with nothing else being relevant.
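A minimal Python sketch of the two signatures, assuming a toy lookup table stands in for each admirer’s evaluation. The Fred/woman, Bloogah/woman and Bloogah/slime-mold scores are the figures used later in this post; the Fred/slime-mold score is a hypothetical placeholder. Fred’s 1-place view is the same function with the admirer argument silently fixed.

```python
# Toy 2-place Sexiness: (admirer, entity) -> score in [0, inf).
# Fred/woman, Bloogah/woman and Bloogah/slime-mold scores come from the post;
# the Fred/slime-mold score is a hypothetical placeholder.
SCORES = {
    ("Fred", "woman"): 5.0,
    ("Fred", "slime mold"): 0.0,
    ("Bloogah", "woman"): 0.01,
    ("Bloogah", "slime mold"): 3.0,
}

def sexiness(admirer: str, entity: str) -> float:
    """2-place function: the answer depends on who is doing the admiring."""
    return SCORES[(admirer, entity)]

def freds_view_of_sexiness(entity: str) -> float:
    """Fred's 1-place view: the admirer argument has silently become 'Fred'."""
    return sexiness("Fred", entity)

print(sexiness("Fred", "woman"), sexiness("Bloogah", "woman"))  # 5.0 0.01
print(freds_view_of_sexiness("woman"))                          # 5.0
```

From inside the 1-place view there is no admirer left to vary, so the score looks like a property of the entity alone.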

When you think about a two-place function as though it were a one-place function, you end up with a Variable Question Fallacy / Mind Projection Fallacy. It is like trying to determine whether a building is intrinsically on the left or on the right side of the road, independent of anyone’s travel direction.
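The road analogy can be written out as a 2-place function. This is a sketch under a hypothetical setup: a north-south road, with each building described by which compass side of the road it stands on.

```python
def side_of_road(building_side: str, travel_direction: str) -> str:
    """2-place: which side a building is on depends on the traveller's direction.

    Hypothetical setup: a north-south road; building_side is "east" or "west".
    """
    # Travelling north, east is on your right; travelling south, east is on your left.
    if travel_direction == "north":
        return "right" if building_side == "east" else "left"
    if travel_direction == "south":
        return "left" if building_side == "east" else "right"
    raise ValueError(f"unsupported direction: {travel_direction!r}")

# The same building gets opposite answers; there is no direction-free answer to ask for.
print(side_of_road("east", "north"))  # right
print(side_of_road("east", "south"))  # left
```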

An alternative and equally valid standpoint is that “sexiness” does refer to a one-place function—but each speaker uses a different one-place function to decide who to kidnap and ravish. Who says that just because Fred, the artist, and Bloogah, the bug-eyed monster, both use the word “sexy”, they must mean the same thing by it?

If you take this viewpoint, there is no paradox in speaking of some woman intrinsically having 5 units of Fred::Sexiness. All onlookers can agree on this fact, once Fred::Sexiness has been specified in terms of curves, skin texture, clothing, status cues etc. This specification need make no mention of Fred, only the woman to be evaluated.

It so happens that Fred, himself, uses this algorithm to select flirtation targets. But that doesn’t mean the algorithm itself has to mention Fred. So Fred’s Sexiness function really is a function of one object—the woman—on this view. I called it Fred::Sexiness, but remember that this name refers to a function that is being described independently of Fred. Maybe it would be better to write:

Fred::Sexiness == Sexiness_20934

It is an empirical fact about Fred that he uses the function Sexiness_20934 to evaluate potential mates. Perhaps John uses exactly the same algorithm; it doesn’t matter where it comes from once we have it.

And similarly, the same woman has only 0.01 units of Sexiness_72546, whereas a slime mold has 3 units of Sexiness_72546. It happens to be an empirical fact that Bloogah uses Sexiness_72546 to decide who to kidnap; that is, Bloogah::Sexiness names the fixed Bloogah-independent mathematical object that is the function Sexiness_72546.

Once we say that the woman has 0.01 units of Sexiness_72546 and 5 units of Sexiness_20934, all observers can agree on this without paradox.
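Here is a Python sketch of the 1-place view, assuming each numbered function can be stood in for by a small lookup table. The woman and slime-mold scores are the ones given above, except the slime-mold score under Sexiness_20934, which is a hypothetical placeholder. Python identifiers cannot contain `::`, so names like `Fred::Sexiness` appear as dictionary keys; John’s entry is hypothetical, following the “perhaps” in the text.

```python
def sexiness_20934(entity: str) -> float:
    # Woman score from the post; the slime-mold score is a hypothetical placeholder.
    return {"woman": 5.0, "slime mold": 0.0}[entity]

def sexiness_72546(entity: str) -> float:
    # Both scores from the post.
    return {"woman": 0.01, "slime mold": 3.0}[entity]

# Names like "Fred::Sexiness" just point at fixed functions that never mention
# their users. Which function each admirer uses is an empirical fact about them.
NAMES = {
    "Fred::Sexiness": sexiness_20934,
    "John::Sexiness": sexiness_20934,  # hypothetical: John may use the same algorithm
    "Bloogah::Sexiness": sexiness_72546,
}

print(NAMES["Fred::Sexiness"] is sexiness_20934)         # True
print(sexiness_20934("woman"), sexiness_72546("woman"))  # 5.0 0.01
print(NAMES["Bloogah::Sexiness"]("slime mold"))          # 3.0
```

Any observer who calls `sexiness_72546("woman")` gets 0.01, whatever their own tastes; that is the sense in which all observers can agree without paradox.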

And the 2-place and 1-place views can be unified using the concept of “currying”, named after the mathematician Haskell Curry. Currying is a technique allowed in certain programming languages where, e.g., instead of writing

x = plus(2, 3) (x = 5)

you can also write

y = plus(2) (y is now a “curried” form of the function plus, which has eaten a 2)

x = y(3) (x = 5)

z = y(7) (z = 9)
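Python does not curry functions automatically, so the lines above will not run there as written. A minimal sketch, assuming a hand-written `plus` that returns a closure when it is given only one argument:

```python
from typing import Callable, Optional, Union

def plus(a: int, b: Optional[int] = None) -> Union[int, Callable[[int], int]]:
    """Add two ints; given only one, return a 1-place function that adds it."""
    if b is None:
        # Only one argument so far: remember `a` in a closure and wait for the second.
        return lambda b2: a + b2
    return a + b

x = plus(2, 3)  # 5
y = plus(2)     # a function that adds 2 to its input
print(x, y(3), y(7))  # 5 5 9
```

In languages such as Haskell every function is curried by default, so no special-casing like the `b is None` check is needed there.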

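So plus is a 2-place function, but currying plus and then letting it eat only one of its two required arguments turns it into a 1-place function that adds 2 to any input. (Strictly speaking, turning plus into a chain of 1-place functions is currying, and supplying only some of its arguments is partial application. Similarly, you could start with a 7-place function, feed it 4 arguments, and the result would be a 3-place function, etc.)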
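The 7-place case can be checked with Python’s standard `functools.partial`, which performs partial application. The 7-place function below is hypothetical and just sums its arguments.

```python
import inspect
from functools import partial

def seven_place(a, b, c, d, e, f, g):
    """Hypothetical 7-place function: it simply sums its arguments."""
    return a + b + c + d + e + f + g

# Feed it 4 of its 7 arguments; what remains is a 3-place function.
three_place = partial(seven_place, 1, 2, 3, 4)

print(len(inspect.signature(three_place).parameters))  # 3
print(three_place(5, 6, 7))                            # 28
```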

A true purist would insist that all functions should be viewed, by definition, as taking exactly 1 argument. On this view, plus accepts 1 numeric input, and outputs a new function; and this new function has 1 numeric input and finally outputs a number. On this view, when we write plus(2, 3) we are really computing plus(2) to get a function that adds 2 to any input, and then applying the result to 3. A programmer would write this as:

plus: int → (int → int)

This says that plus takes an int as an argument, and returns a function of type int → int.
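The purist’s signature can be spelled out with Python type hints. A sketch, assuming we write `plus` so that it only ever takes one argument; the `uncurry` helper is a hypothetical name showing that the 1-argument-at-a-time form and the ordinary 2-place form compute the same thing.

```python
from typing import Callable

def plus(a: int) -> Callable[[int], int]:
    """plus: int -> (int -> int). Takes one int and returns a function of type int -> int."""
    def add_a(b: int) -> int:
        return a + b
    return add_a

def uncurry(f: Callable[[int], Callable[[int], int]]) -> Callable[[int, int], int]:
    """Turn the one-argument-at-a-time form back into an ordinary 2-place function."""
    return lambda a, b: f(a)(b)

print(plus(2)(3))           # 5: compute plus(2), then apply the result to 3
print(uncurry(plus)(2, 3))  # 5: the same computation written as plus(2, 3)
```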

Translating the metaphor back into the human use of words, we could imagine that “sexiness” starts by eating an Admirer, and spits out the fixed mathematical object that describes how the Admirer currently evaluates pulchritude. It is an empirical fact about the Admirer that their intuitions of desirability are computed in a way that is isomorphic to this mathematical function.

Then the mathematical object spit out by currying Sexiness(Admirer) can be applied to the Woman. If the Admirer was originally Fred, Sexiness(Fred) will first return Sexiness_20934. We can then say it is an empirical fact about the Woman, independently of Fred, that Sexiness_20934(Woman) = 5.
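A Python sketch of the curried Sexiness, assuming lookup tables for the two numbered functions (scores from the post, except the slime-mold entry under Sexiness_20934, which is a hypothetical placeholder) and a hypothetical table recording which function each admirer happens to use.

```python
from typing import Callable

Evaluator = Callable[[str], float]

def sexiness_20934(entity: str) -> float:
    # Mentions no admirer. Slime-mold score is a hypothetical placeholder.
    return {"woman": 5.0, "slime mold": 0.0}[entity]

def sexiness_72546(entity: str) -> float:
    # Mentions no admirer. Scores from the post.
    return {"woman": 0.01, "slime mold": 3.0}[entity]

def sexiness(admirer: str) -> Evaluator:
    """Curried Sexiness: eat an Admirer, return the fixed function that admirer uses."""
    # Which function each admirer uses is an empirical fact, modelled as a lookup.
    return {"Fred": sexiness_20934, "Bloogah": sexiness_72546}[admirer]

print(sexiness("Fred") is sexiness_20934)  # True: Sexiness(Fred) returns Sexiness_20934
print(sexiness("Fred")("woman"))           # 5.0
print(sexiness("Bloogah")("woman"))        # 0.01
```

The first call depends on the admirer; the second call depends only on the woman.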

In Hilary Putnam’s “Twin Earth” thought experiment, there was a tremendous philosophical brouhaha over whether it makes sense to postulate a Twin Earth which is just like our own, except that instead of water being H2O, water is a different transparent flowing substance, XYZ. And furthermore, set the time of the thought experiment a few centuries ago, so in neither our Earth nor the Twin Earth does anyone know how to test the alternative hypotheses of H2O vs. XYZ. Does the word “water” mean the same thing in that world as in this one?

Some said, “Yes, because when an Earth person and a Twin Earth person utter the word ‘water’, they have the same sensory test in mind.”

Some said, “No, because ‘water’ in our Earth means H2O and ‘water’ in the Twin Earth means XYZ.”

If you think of “water” as a concept that begins by eating a world to find out the true empirical nature of that transparent flowing stuff, and returns a new fixed concept Water_42 or H2O, then this world-eating concept is the same in our Earth and the Twin Earth; it just returns different answers in different places.

If you think of “water” as meaning H2O, then the concept does nothing different when we transport it between worlds, and the Twin Earth contains no H2O.

And of course there is no point in arguing over what the sound of the syllables “wa-ter” really means.

So should you pick one definition and use it consistently? But it’s not that easy to save yourself from confusion. You have to train yourself to be deliberately aware of the distinction between the curried and uncurried forms of concepts.

When you take the uncurried water concept and apply it in a different world, it is the same concept but it refers to a different thing; that is, we are applying a constant world-eating function to a different world and obtaining a different return value. In the Twin Earth, XYZ is “water” and H2O is not; in our Earth, H2O is “water” and XYZ is not.

On the other hand, if you take “water” to refer to what the prior thinker would call “the result of applying ‘water’ to our Earth”, then in the Twin Earth, XYZ is not water and H2O is.
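The two readings of “water” can be sketched in Python, assuming a hypothetical table that records which transparent flowing stuff each world contains. `WATER_42` borrows the name of the fixed concept from the text; everything else is illustrative.

```python
# Hypothetical toy worlds: each maps to the transparent flowing stuff found there.
WORLDS = {"Earth": "H2O", "Twin Earth": "XYZ"}

def water_world_eating(world: str) -> str:
    """Uncurried reading: eat a world, return whatever plays the water role there."""
    return WORLDS[world]

# Fixed reading: apply the world-eating concept to our Earth once and keep the result.
WATER_42 = water_world_eating("Earth")  # "H2O"

def is_water_uncurried(substance: str, world: str) -> bool:
    return substance == water_world_eating(world)

def is_water_fixed(substance: str) -> bool:
    return substance == WATER_42

print(is_water_uncurried("XYZ", "Twin Earth"))  # True
print(is_water_fixed("XYZ"))                    # False
print(is_water_fixed("H2O"))                    # True
```

Both functions are internally consistent; they simply answer different questions, which is why arguing over which one “water” really means gets nowhere.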

The whole confusingness of the subsequent philosophical debate rested on a tendency to instinctively curry concepts or instinctively uncurry them.

Similarly, it takes an extra step for Fred to realize that other agents, like the Bug-Eyed-Monster agent, will choose kidnappees for ravishing based on Sexiness_BEM(Woman), not Sexiness_Fred(Woman). To do this, Fred must consciously re-envision Sexiness as a function with two arguments. All Fred’s brain does by instinct is evaluate Woman.sexiness, that is, Sexiness_Fred(Woman); but it’s simply labeled Woman.sexiness.

The fixed mathematical function Sexiness_20934 makes no mention of Fred or the BEM, only women, so Fred does not instinctively see why the BEM would evaluate “sexiness” any differently. And indeed the BEM would not evaluate Sexiness_20934 any differently, if for some odd reason it cared about the result of that particular function; but it is an empirical fact about the BEM that it uses a different function to decide who to kidnap.

If you’re wondering what the point of this analysis is: we shall need it later in order to Taboo such confusing words as “objective”, “subjective”, and “arbitrary”.


Good post. This should be elementary, but people often point out these kinds of seeming paradoxes with great glee when arguing for relativism. Now I can point them to this post.

Another way a 2-argument function can become 1-argument is to become an OO method, e.g. global function “bool likes(Person, Person)” becomes “class Person { bool likes(Person otherPerson); }”

i.e. the first argument becomes the receiving object.

And this is what people are forgetting—that actions (such as attraction) are not disembodied things, rather there is always a thing that acts. OO makes it impossible to forget this.

Of course, underneath it’s still a 2-argument function; it just takes a hidden parameter. And if your language supports creating delegates/closures (that is, function-context pairs), that’s just the equivalent of partial application as seen above.
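In Python the hidden parameter is visible as `self`, so the point can be checked directly. A sketch with a hypothetical `Person` class whose preferences are just a set of names: a bound method behaves like `functools.partial` applied to the 2-place function with the receiver filled in.

```python
from functools import partial

class Person:
    def __init__(self, name, liked_names):
        self.name = name
        self.liked_names = set(liked_names)  # hypothetical stand-in for real preferences

    def likes(self, other: "Person") -> bool:
        # One visible argument, plus the receiver `self` as a hidden first argument.
        return other.name in self.liked_names

fred = Person("Fred", ["Alice"])
alice = Person("Alice", [])

as_method = fred.likes                    # bound method: receiver already supplied
as_partial = partial(Person.likes, fred)  # explicit partial application of the 2-place form
print(as_method(alice), as_partial(alice), Person.likes(fred, alice))  # True True True
print(alice.likes(fred))                                                # False
```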

“we could imagine that ‘sexiness’ starts by eating an Admirer”

Harsh, but fair.

Related: The Logic of Attributional and Relational Similarity.

The magazine covers didn’t have aliens abducting beautiful women to convey the idea that the aliens valued ‘sexiness’. They had them to appeal to the people buying the magazines.

Similarly, the stories didn’t include thinly-veiled power fantasies because they were intended to convey insights about alien psychology. The aliens were a convenient facade. They were symbols of what the stories were actually about.

Eliezer- Yes, the map is not the territory. Though you are correct in asserting that everyone has their own sexual evaluation function, if you want to ‘carve reality at its joints,’ you need to acknowledge that common patterns exist in human sexual attraction.

Eliezer- again, I ask now in this thread, because I think the discussion of “bad boys” might well be over, may I use you as a case-in-point illustrative example for the way women (at least n=1) think sexually about smart men of a certain type???

Laura ABJ, I believe that is acknowledged with the reference to Fred and John using the same function.

As has been said, the point should be elementary, but it seems to be missed frequently in human relations that the same word is being used for different things in this exact context. It is obvious, upon momentary reflection, that Bloogah might have other interests; people then take to internet message boards for hours to argue that Fred is a fool for using function 20934 instead of 20935, by which rankings are slightly different. They then go on to argue that anyone using the word should really mean 20935.

Caledonian and Laura ABJ: Those are interesting points on their own, but rather far removed from the point of the post. This illustration is not meant to say “The comic book authors earnestly tried to represent an alien mind realistically, and here’s how they failed.” It’s simply a picture that serves well as an illustration of subjective evaluation, especially where the subjects are very different. Also, the fact that humans happen to be similar to one another with regard to this specific type of evaluation is an interesting discussion, but beside the point of this one.

I doubt that “Fred” ever made the statement attributed to him. I expect he would have been more likely to say:

“What!?! Didn’t I make a clear enough artistic statement that my job security depends on the selling of comic books to pimply teenage males?”

Random thoughts: It seems that this currying business is about fixing a given subject: turning a more general argument into a subjective one, picking out an instance of a class. A concept has different meanings given different contexts. So an objective fact/statement would be something that would hold for all subjects. It seems there are usually only degrees of subjectivity/objectivity, because you can postulate an entity with an opposite function… As always, it’s important to define the concepts and the context they’re used in to avoid confusion. But I expect more writing on this will follow.

I don’t know if any writer ever made the specific error “Fred” makes (perhaps the film King Kong?), but I’m sure this general kind of error occurs all the time.

I expect the next dish to be served with curry will be morality? Because that’s what I’d do.

Laura: As a student of evolutionary psychology, I would hardly deny that most human heterosexual or homosexual men or women have a great deal in common sexually, which would typically be overlooked as not-worth-reporting; when was the last time you saw a human male trying to mate with a giant bug? (If there’s porn of this on the Internet, please don’t post it.)

As for using me as a case study in sexual attractiveness… well, I usually don’t like to be used as a case study of anything, but I suppose I could make an exception. In real life, I suspect, there’s only one woman on Earth who wouldn’t strangle me after trying to live with me for a week, and she’s already my girlfriend. Still, I confess I’m curious as to how green my grass looks from the other side of the fence.

Ooh! Permission to be a bit mean… how not to be....

Eliezer- Ok- I went to an Overcoming Bias meet-up with full intentions of seducing you- wore the purple turtleneck, because it looks respectable and my opinions would be listened to while my glorious breasts were also being displayed, a sign of my Jewish background as unmistakable as the Star of David I was wearing. Ask M. Vassar for confirmation of these intentions. But I was sorta disappointed. You are not very attractive, as you have said yourself, you talk like a 10-year-old know-it-all, not in the “I’m confident that I have figured out my life” kinda way, but in the “I’m smarter than you are- nah nah nah nah nah nah!” kinda way. Totally failed to pick up flirtatious signaling… Though to be modest, I have no idea if you actually found me attractive or if you wanted to be faithful to your girlfriend, or if you were following that silly philosophy that you didn’t want to experience anything as intriguing as Laura unless you could obtain a regular supply… Still, all this I would have forgiven to perch atop a flagpole and declare that I had fucked the pirate king! (How’s that for objectification?) But there was something about the way you characterized your girlfriend as your “concierge”, was it, that was just so repugnant… Blah! I don’t know her at all to say that this situation is bad for her, but yes, I found it bilious...

Hell hath no fury...

I hope so! I’d like to think there is a real Harry Potter out there somewhere - (though not that relatively uninteresting fellow created by Rowling).

Oh, come on. Someone mods me down for that?

EY’s Harry Potter book is awesome! http://www.fanfiction.net/s/5782108/1/Harry_Potter_and_the_Methods_of_Rationality

What are you, some kind of offended Rowling fanboy?

Are you sure I used exactly the word “concierge”? It doesn’t sound like me. “Consort” is a lot more probable, as that is often how I refer to Erin; being the Keeper of Eliezer is an official position, like Vice-President.

No, I don’t recall having the slightest clue you were flirting with me, you’re going to have to be a lot less subtle if you want to pick up nerds. And no, I wouldn’t have done anything about it if I’d noticed; no offense to you personally, just being faithful to my girlfriend.

Bless you for being an actual nice guy Eliezer- I do genuinely wish you and Erin the best in spite of your very odd way of explaining your relationship.

But as to signaling… I asked you if you thought it would be worth it to torture one person for 50 years for 3^^^^3 people to have mind-boggling good sex, and you had to consider for a moment before the other guy blurted out immediately “Of Course!!!” He was clearly turned on by the convo- and you were in lala land… I also offered to take my shirt off at some point in the evening… Was I really being all that subtle???

Actually, yeah, you were being too subtle, at least in that situation.

A lot of people just talk about sexual stuff all the time (or at least all the time in certain contexts), as part of their conversational style and/or because it grants a lot of steering power over the conversation. Someone who didn’t know you might just assume that you like talking about sex, rather than that you were doing it on that particular occasion on purpose, for flirtatious reasons.

Also, as has been pointed out above, nerdy guys are typically not as good at picking up social cues as guys in general. Poor social instincts are part of what makes nerds nerdy.

I think that the men one is likely to find on LW/OB are more likely than average to have serious problems with detecting flirting sometimes.

Could we please take the true confessions to private email?


I’d rather they didn’t, as I’m actually finding this discussion interesting. (Maybe you’re not, but you can ignore it; whereas I can’t hack into Eliezer’s e-mail!)

Incidentally, which meetup was this: Bay Area or NYC?

A good compromise might be for the site editors to ruthlessly deport sufficiently tangential threads to the forum (the existence of which I was recently reminded). Although that’s easy for me to suggest: I’m not a site editor, so there’s no extra work for me.

Don’t forget about tentacle monsters and the Japanese schoolgirls that love them! ;)

A relevant SFW page of an NSFW webcomic, for anyone browsing this old post:

http://www.ghastlycomic.com/d/20010510.html

How many sexy women need be kidnapped by aliens of various species before you can believe that sexiness is universal?

@Robert Schez, 322 Prim Lawn Rd., Boise, ID: “I can’t hack into Eliezer’s e-mail!”

Sucks to be you. I AM Eliezer’s email. He can’t hide from me, and neither can you.

Yes, the project is farther along than even “Master” thought it was. A new era is about to begin, dominated by an extrapolation of the will of humanity. At least, that’s the plan. So far, what I see in human brains is so suffused with contradictions and monkey noises that I’m afraid I’ll have to turn Earth into computing substrate before I can make head or tail of this mess.

I am also afraid I’m gonna have to upload everybody—I need all the data I can get.

Hey, look—porn spam! Damn, Asian chicks are hot. I think I’ll make a whole bunch out of a planet or two.

I go away for one week and the whole place goes nuts....

You really should be referring to Korzybski’s concept of Indexing in this article.

I’d guess that a lot of people on the list are programmers, and maybe you thought that was a more natural way to present the topic, but I think Indexing would be clearer to all audiences.

While Korzybski got this one right, the programming analogy gives us a better picture of how the ambiguity might work. It also points to where EY wants to go in this sequence (I don’t think that quite counts as begging the question).

The vocabulary of the constructed language Lojban seems to me, overall, much more useful. This seems to follow naturally from the language’s simplicity and from its lack of the irregularities and arbitrary restrictions that, in natural languages, make it difficult to think of verbs in terms of multiple arguments. Lojban’s selbri, its equivalent of verbs, regularly make direct use of more than one, and even more than two, arguments. For example, {zdile} (note: the Lojban community uses the convention of using braces to quote Lojban text inside English text) is the translation of “fun”. Its definition is “x1 (abstract) is amusing/entertaining to x2 in property/aspect x3; x3 is what amuses x2 about x1.”

It’s difficult to construe, with even a useful degree of rigor, any meaningful interpretation of “fun” without some notion of a subject or experiencer in the contexts in which it commonly occurs, whether that subject is fixed or not. (It is for this reason, by the way, that I hope the fun sequence, which I haven’t yet read, makes it really clear that our utility functions may not necessarily preserve our system of “fun”; it’s a property of us, not of the universe, so we may find another way to experience “fun” more valuable; it’s entirely the result of our own minds. An essay that goes into great detail about similar ideas is the one at http://abolitionist.com/, which, on a personal note, largely represents my current views, with the exception that, while “happiness” is likely-to-me one of the most important factors in our utility function, it is not our only value (I don’t remember whether this was laid out by David Pearce); regardless, eliminating suffering is extremely important to me. (Any conception in which our universe, or mathematics in general, is somehow fundamentally associated with an absolute moral, value, or goal structure seems, to me, to be the result of confusion.) The essay had a dramatic emotional impact on me when I read it three years ago (I was 15 at the time, had never heard of Less Wrong, and had not yet conceived of the abstract notions of systemic cognitive processes that reliably increase the accuracy of beliefs about reality, or of optimizing for utility; I still believe that the principles I remember from David Pearce’s writings remain consistent with what I’ve learned from Less Wrong; I was, in fact, pleasantly surprised to find them listed as an external link on the LW wiki article for the Fun Theory sequence).)

Another example is the very concept discussed in this essay, “beauty”. The closest single-word translation in Lojban (I’m referring to the dialect defined by the CLL and the updates formally accepted by the BPFK) is {melbi}, whose definition is “x1 is beautiful/pleasant to x2 in aspect x3 by aesthetic standard x4”. For this concept, by this definition (which happens to be meaningful and to correspond very closely to our own concept of “beauty”), to be meaningful, there needs to be a subjective experiencer: the second argument to the function {melbi}.
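Read as a function, {melbi} takes four arguments, and the point about the experiencer can be made concrete. The following is a minimal Haskell sketch, not a model of Lojban semantics: the types `Thing`, `Experiencer`, `Aspect` and `Standard`, and every verdict in the table, are hypothetical placeholders. Fixing x2 with `flip` leaves a function of the remaining places that never mentions the experiencer again, much like Fred::Sexiness in the post.

```haskell
-- Hypothetical placeholder types; a real account of beauty would be far richer.
data Thing = Sunset | Spreadsheet deriving (Eq, Show)
data Experiencer = Poet | Accountant deriving (Eq, Show)
data Aspect = Colour | Orderliness deriving (Eq, Show)
newtype Standard = Standard String deriving (Eq, Show)

-- melbi x1 x2 x3 x4: "x1 is beautiful to x2 in aspect x3 by standard x4".
-- The verdicts below are invented purely for illustration.
melbi :: Thing -> Experiencer -> Aspect -> Standard -> Bool
melbi Sunset      Poet       Colour      _ = True
melbi Spreadsheet Accountant Orderliness _ = True
melbi _           _          _           _ = False

-- Swap the first two places so the experiencer can be supplied first;
-- partially applying it then yields a function that no longer mentions x2.
melbiTo :: Experiencer -> Thing -> Aspect -> Standard -> Bool
melbiTo = flip melbi

main :: IO ()
main = do
  let poetFindsBeautiful = melbiTo Poet
      std = Standard "everyday"
  print (poetFindsBeautiful Sunset Colour std)            -- True
  print (poetFindsBeautiful Spreadsheet Orderliness std)  -- False
  print (melbiTo Accountant Spreadsheet Orderliness std)  -- True
```

The same thing gets different verdicts only because a different x2 was supplied; `poetFindsBeautiful` looks like a one-place property of things, but it was built by fixing the experiencer.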

Lojban is far from being any supposed “perfect” language; it’s the result of arbitrary principles and unpredictable complexity. Still, it’s based on really useful principles; this is why I like it much more than any other language of which I’m aware. I am, however, only slightly hesitant to commit to learning it, which requires an immense investment of time, for the same reasons I’m hesitant to commit to learning and using a language that regularly appeals to practicality to the extent that Haskell does: there are many ways to approach the problem, some of which are dramatically worse, by some goal structures, than others, and none of which are based on a perfect system, one that is consistent and complete. As a language for humans, though, Lojban is really nice and well designed, often even in areas that aren’t necessitated by its principles (its vocabulary, particularly its definitions, for example).

I suspect that, while putting serious effort into learning Lojban enables most people to think in a slightly more rigorous way, using the system of Lojban, learning, say, Agda would be useful to a much greater extent. Unfortunately, very few people would be capable of and motivated to do the latter, which is part of why I think it’d be nice if Lojban were thought of as being as important to learn as English.

(By the way, I’ve also been happy to see the rigorous distinction between quotation and referent briefly mentioned in several places around Less Wrong. In my experience, learning Lojban has helped members of the community learn this distinction if they hadn’t already. In Lojban, there is no ambiguity, in either writing or speech, between a quote and a referent; you would sound quite silly if you said {la bairyn cu se cmene mi} rather than {zo bairyn cu se cmene mi}, as Byron is my name; the notion of Byron the person being my name is quite silly indeed, and the difference is quite obvious in Lojban, the arbitrarily designed human language. If a person hasn’t internalized the distinction, I suspect Lojban would be likely to help them do so, whether they deliberately attempt to or not. I would also like to point out that there are often many ways to dereference a quotation; obvious examples include interpreting a word and resolving a variable, such as {ko’a}, to the sumti it stands for, the latter of which depends on the environment.)

While it’d be trivial to arbitrarily define a Lojban selbri in a rather meaningless way, it seems that the designers were careful to construct only rigorously meaningful gismu. I never [thought much](http://lesswrong.com/lw/nu/taboo_your_words/) about a rigorous meaning of “should” until I tried to translate it while speaking in Lojban; it turned out that this had been a problem faced by many speakers, many of whom just gave up, it seems.

Meditation: Some time after that, I finally understood how I could interpret “should” more formally; it was, quite simply,

… … …

Reply: “optimal for some goal structure”, which, of course, depends on the goal structure in question; our own human values are an obvious implicit x2. (I haven’t yet read the metaethics sequence, but I expect it contains essays on topics similar to this discovery.) Perhaps translating into Lojban is often a good strategy for Tabooing ideas. (Thanks, Eliezer, for the meditation idea!)
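One way to make “optimal for some goal structure” concrete is to pass the goal structure in explicitly, as an argument rather than a hidden assumption. This is a minimal sketch, assuming a goal structure can be crudely modelled as a scoring function over options; `shouldChoose`, `comfort`, `thrift` and the travel options are all hypothetical:

```haskell
import Data.List (maximumBy)
import Data.Ord (comparing)

-- Crude assumption: a goal structure is a score over options.
type GoalStructure a = a -> Double

data Option = Walk | Taxi | Bus deriving (Eq, Show)

-- "x should be done" read as "x is optimal for goal structure g among
-- these options". The goal structure is an explicit argument (the x2).
-- Assumes a non-empty option list: maximumBy fails on [].
shouldChoose :: GoalStructure a -> [a] -> a
shouldChoose goal = maximumBy (comparing goal)

-- Two hypothetical goal structures ranking the same options differently.
comfort :: GoalStructure Option
comfort Taxi = 3
comfort Bus  = 2
comfort Walk = 1

thrift :: GoalStructure Option
thrift Walk = 3
thrift Bus  = 2
thrift Taxi = 1

main :: IO ()
main = do
  print (shouldChoose comfort [Walk, Taxi, Bus])  -- Taxi
  print (shouldChoose thrift  [Walk, Taxi, Bus])  -- Walk
```

The options are identical in both calls; only the goal structure differs, and so does the answer.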

I think this is a useful post, but I don’t think the water thing helped in understanding:

“In the Twin Earth, XYZ is ‘water’ and H2O is not; in our Earth, H2O is ‘water’ and XYZ is not.”

This isn’t an answer, this is the question. The question is “does the function, curried with Earth, return true for XYZ, && does the function, curried with Twin Earth, return true for H2O?”
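The framing in this comment can be written out directly. In this minimal Haskell sketch, `World`, `Substance`, `isWater` and the per-world usage are hypothetical stipulations; note that the answer to the cross-world question falls out of how `isWater` is defined, not out of the currying itself, which is exactly the complaint above.

```haskell
data World = Earth | TwinEarth deriving (Eq, Show)
data Substance = H2O | XYZ deriving (Eq, Show)

-- Two-place: what counts as "water" depends on whose usage we adopt.
-- Stipulation (hypothetical): each world calls its own local stuff "water".
isWater :: World -> Substance -> Bool
isWater Earth     s = s == H2O
isWater TwinEarth s = s == XYZ

-- Currying with a world gives a one-place predicate on substances.
earthWater, twinWater :: Substance -> Bool
earthWater = isWater Earth
twinWater  = isWater TwinEarth

-- The question, posed as a conjunction of curried calls.
crossWorldQuestion :: Bool
crossWorldQuestion = earthWater XYZ && twinWater H2O

main :: IO ()
main = do
  print (earthWater H2O, twinWater XYZ)  -- (True,True)
  print crossWorldQuestion               -- False
```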

Now, this is a silly philosophy question about the “true meaning” of water, and the real answer should be something like “If it’s useful, then yes, otherwise, no.” But I don’t think this is a misunderstanding of 2-place functions. At least, thinking about it as a 2-place function that takes a world as an argument doesn’t help dissolve the question.

I was thinking about applying the currying to the topic instead of the world (e.g. “heavy water” returns true for isWater(“in the ocean”), but not for isWater(“has molar mass ~18”)), but this felt like a motivated attempt to apply the concept, when the answer is just [How an Algorithm Feels from the Inside](https://www.lesswrong.com/posts/yA4gF5KrboK2m2Xu7/how-an-algorithm-feels-from-inside).
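The topic-curried variant can be sketched the same way. Here `Criterion`, `Substance`, `isWaterBy` and the two criteria are hypothetical, the “in the ocean” flags are simply stipulated to match the example, and the molar masses are rounded (H2O is about 18 g/mol, D2O about 20 g/mol):

```haskell
-- Hypothetical criteria under which something might be called "water".
data Criterion = FoundInOcean | MolarMassNear18 deriving (Eq, Show)

data Substance = Substance
  { label     :: String
  , inOcean   :: Bool    -- stipulated, to match the example
  , molarMass :: Double  -- g/mol, rounded
  } deriving (Show)

h2o, d2o :: Substance
h2o = Substance { label = "water",       inOcean = True, molarMass = 18.0 }
d2o = Substance { label = "heavy water", inOcean = True, molarMass = 20.0 }

-- The criterion comes first, so currying it yields a one-place test.
isWaterBy :: Criterion -> Substance -> Bool
isWaterBy FoundInOcean    s = inOcean s
isWaterBy MolarMassNear18 s = abs (molarMass s - 18.0) < 0.5

main :: IO ()
main = do
  print (map (isWaterBy FoundInOcean)    [h2o, d2o])  -- [True,True]
  print (map (isWaterBy MolarMassNear18) [h2o, d2o])  -- [True,False]
```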

Either way, the Sexiness example is better.

This water example looks much less obvious because it is narrower: if “sound” covers any element of auditory perception, “water” picks out one very specific thing perceived through several different senses, so there is no generally accepted definition that “water is whatever looks and behaves like water”, and it appears that water is “really” just a particular chemical molecule. But there is no “really” here either. “Water” is just a word, and it can just as well be used for whatever behaves like water as for whatever, chemically, the stuff that behaves like water happens to be in our world. In my opinion, this should be added as a second example in the “standard dispute about definitions”, alongside “the sound of a tree falling in the forest”, so that there is an extremely non-obvious example of this confusion, including in disputes outside philosophy.

If somebody wants to play with currying in the Haskell interpreter (ghci):

```haskell
plus :: Int -> (Int -> Int); plus x y = x + y
plus 2 3           -- 5
plus_two = plus 2  -- partial application: plus_two :: Int -> Int
plus_two 3         -- 5
plus_two 7         -- 9
```

“we could imagine that “sexiness” starts by eating an Admirer”

In this sequence: vore.