Are short timelines actually bad?

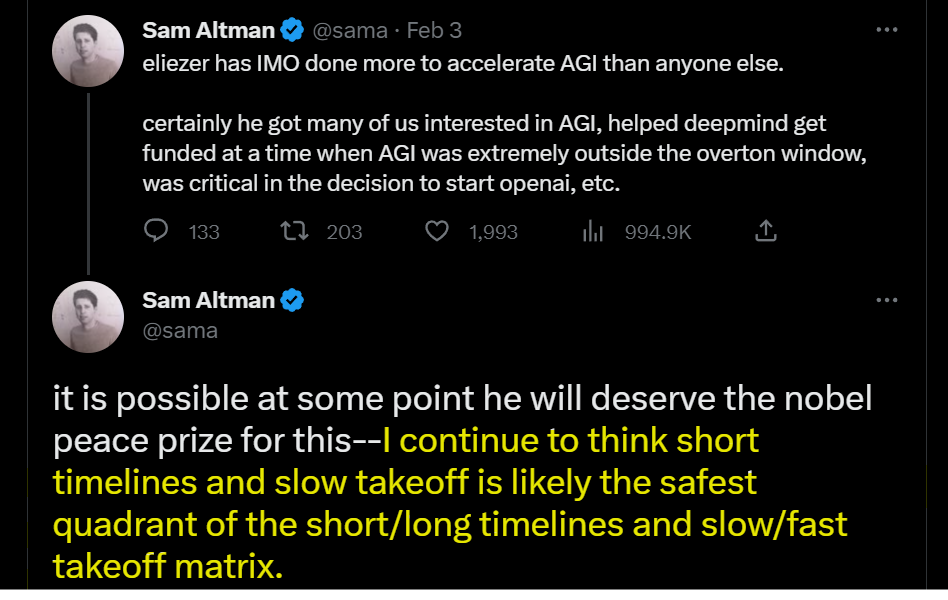

Sam Altman recently posted the following:

I have seen very little serious discussion about whether short timelines are actually bad. This is surprising given that nearly everyone I talk to in the AI risk community seems to think that they are.

Of course, the question “was the founding of OpenAI net positive?” and “would it be good to accelerate capabilities in 2023?” are different questions. I’m leaning towards yes on the first and no on the second. I’ve listed arguments that factor into these questions below.

Reasons one might try to accelerate progress

Avoid/delay a race with China. If the language model boom happened 10 years from now, China might be a bigger player. Global coordination seems harder than domestic coordination. A lot harder. Perhaps the U.S. will have to shake hands with China eventually, but the more time we have to experiment with powerful systems before then the better. That corresponds to time demonstrating dangers and iterating on solutions, which is way more valuable than “think about things in front of a white board” time.

Smooth out takeoff. FLOPS get cheaper over time. Data is accumulating. Architectures continue to improve. The longer it takes for companies to invest ungodly amounts of money, the greater the potential overhang. Shortening timelines in 2015 may have slowed takeoff, which again corresponds to more time of the type that matters most.

Keep the good guys in the lead. We’re lucky that the dominant AGI companies respect safety as much as they do. Sam Altman recently commented that “the bad case — and I think this is important to say — is, like, lights out for all of us.” I’m impressed that he said this given how bad this sort of thing could be for business—and this doesn’t seem like a PR move. AI x-risk isn’t really in the overton window yet.

The leading companies set an example. Maybe OpenAI’s hesitance to release GPT-4 has set a public expectation. It might now be easier to shame companies who don’t follow suit for being too fast and reckless.

Reasons to hit the breaks

There are lots of research agendas that don’t particularly depend on having powerful systems in front of us. Transparency research is the cat’s meow these days and has thus far been studied for relatively tiny models; in the extreme case, two-layer attention only transformers that I can run on my laptop. It takes time to lay foundations. In general, research progress is quite serial. Results build on previous results. It might take years before any of the junior conceptual researchers could get into an argument with Eleizer without mostly eliciting cached responses. The type of work that can be done in advance is also typically the type of work that requires the most serial time.

Language models are already sophisticated for empirical work to be useful. GPT-3-level models have made empirically grounded investigations of deception possible. Soon it might also make sense to say that a large language model has a goal or is situationally aware. If slowing down takeoff is important, we should hit the breaks. Takeoff has already begun.

We need time for field-building. The technical AI safety research community is growing very rapidly right now (28% per year according to this post). There are two cruxes here:

How useful is this field building in expectation?

Is this growth mostly driven by AGI nearness?

I’m pretty confused about what’s useful right now so I’ll skip the first question.

As for the second, I’ll guess that most of the growth is coming from increasing the number of AI safety research positions and university field building. The growth in AI safety jobs is probably contingent on the existence of maybe-useful empirical research. The release of GPT-3 may have been an important inflection point here. Funders may have been hesitant to help orgs scale before 2020, but I doubt that current AI safety job growth is affected much by capabilities progress.

My sense is that University field building has also been substantially affected by AGI-nearness – both in terms of a general sense of “shoot AI seems important” and the existence of tractable empirical problems. The Harvard/MIT community builders found nerd-sniping to be much more effective than the more traditional appeals to having a positive impact on the world.

Attempting to put these pieces together

If I could slow down capability progress right now, would I? Yeah. Would I stop it completely for a solid 5 years? Hm… that one is not as obvious to me. Would I go back in time and prevent OpenAI and DeepMind from being founded? Probably not. I tentatively agree with Sam that short timelines + slow takeoff is the safest quadrant and that takeoff era has begun.

I mostly wrote this post because I think questions about whether capabilities progress is good are pretty complicated. It doesn’t seem like many people appreciate this and I wish Sam and Demis weren’t so villified by the x-risk community.

My take on the salient effects:

Shorter timelines → increased accident risk from not having solved technical problem yet, decreased misuse risk, slower takeoffs

Slower takeoffs → decreased accident risk because of iteration to solve technical problem, increased race / economic pressure to deploy unsafe model

Given that most of my risk profile is dominated by a) not having solved technical problem yet, and b) race / economic pressure to deploy unsafe models, I’m tentatively in the long timelines + fast takeoff quadrant as being the safest.

I agree with parts of that. I’d also add the following (or I’d be curious why they’re not important effects):

Slower takeoff → warning shots → improved governance (e.g. through most/all major actors getting clear[er] evidence of risks) → less pressure to rush

(As OP argued) Shorter timelines → China has less of a chance to have leading AI companies → less pressure to rush

More broadly though, maybe we should be using more fine-grained concepts than “shorter timelines” and “slower takeoffs”:

The salient effects of “shorter timelines” seem pretty dependent on what the baseline is.

The point about China seems important if the baseline is 30 years, and not so much if the baseline is 10 years.

The salient effects of “slowing takeoff” seem pretty dependent on what part of the curve is being slowed. Slowing it down right before there’s large risk seems much more valuable than (just) slowing it down earlier in the curve, as the last few year’s investments in LLMs did.

Agree that this is an effect. The reason it wasn’t immediately as salient is because I don’t expect the governance upside to outweigh the downside of more time for competition. I’m not confident of this and I’m not going to write down reasons right now.

Agree, though I think on the current margin US companies have several years of lead time on China, which is much more than they have on each other. So on the current margin, I’m more worried about companies racing each other than nations.

Agreed.

Sam Altman’s position on AI safety is awful enough that many safety-conscious employees left OpenAI. Saying things like that is helpful for assuring employees at OpenAI to think they are doing good by working at OpenAI. It’s likely good for business.

I don’t see how it would damage the business interests of OpenAI in any way to say such a thing.

I roughly support slowing AI progress (although the space of possibilities has way more dimensions than just slow vs fast). Some takes on “Reasons one might try to accelerate progress”:

Avoid/delay a race with China + Keep the good guys in the lead. Sure, if you think you can differentially accelerate better actors, that’s worth noticing. (And maybe long timelines means more actors in general, which seems bad on net.) I feel pretty uncertain about the magnitude of these factors, though.

Smooth out takeoff. Sure, but be careful—this factor suggests faster progress is good insofar as it’s due to greater spending. This is consistent with trying to slow timelines by e.g. trying to get labs to publish less.

Another factor is non-AI x-risk: if human-level AI solves other risks, and greater exposure to other risks doesn’t help with AI, this is a force in favor of rolling the dice on AI sooner. (I roughly believe non-AI x-risk is much smaller than the increase in x-risk from shorter timelines, but I’m flagging this as cruxy; if I came to believe that e.g. biorisk was much bigger, I would support accelerating AI.)

Yes, as someone who believes timelines are incredibly short, I will also stick my neck out and say this thing I don’t think I can prevent is bad—it will be hard to solve safety. We can do it, same as we’re about to make huge progress on capability, but it’s going to be hard to keep dancing through the insights fast enough to keep up. Take the breaks that you need, believe in your mix of approaches, and stay alert to a wide variety of news—we don’t have time to rush.

Highly relevant: https://www.lesswrong.com/posts/vQNJrJqebXEWjJfnz/a-note-about-differential-technological-development