Shallow review of technical AI safety, 2025

Website version · Gestalt · Repo and data

This is the third annual review of what’s going on in technical AI safety. You could stop reading here and instead explore the data on the shallow review website.

It’s shallow in the sense that 1) we are not specialists in almost any of it and that 2) we only spent about two hours on each entry. Still, among other things, we processed every arXiv paper on alignment, all Alignment Forum posts, as well as a year’s worth of Twitter.

It is substantially a list of lists structuring 800 links. The point is to produce stylised facts, forests out of trees; to help you look up what’s happening, or that thing you vaguely remember reading about; to help new researchers orient, know some of their options and the standing critiques; and to help you find who to talk to for actual information. We also track things which didn’t pan out.

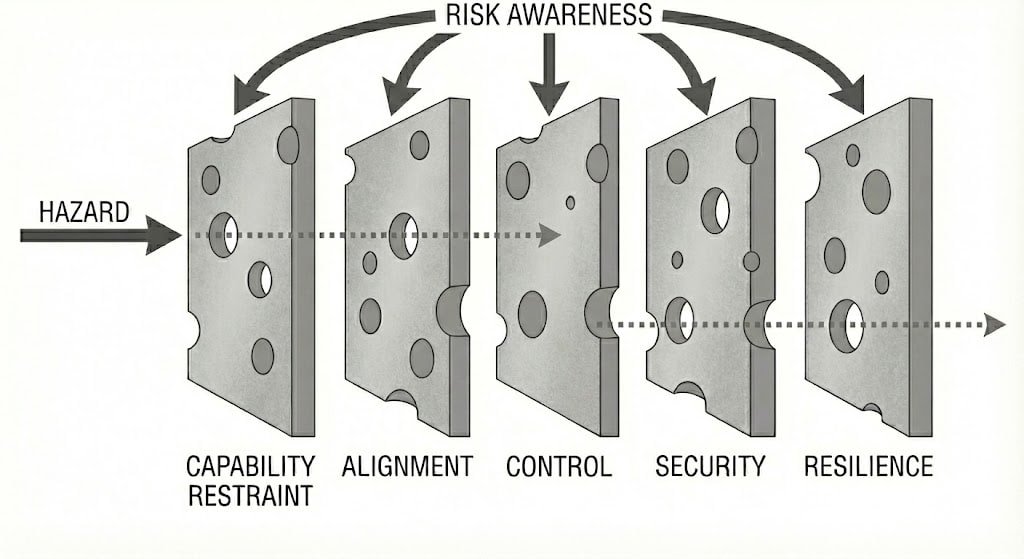

Here, “AI safety” means technical work intended to prevent future cognitive systems from having large unintended negative effects on the world. So it’s capability restraint, instruction-following, value alignment, control, and risk awareness work.

We ignore a lot of relevant work (including most of capability restraint): things like misuse, policy, strategy, OSINT, resilience and indirect risk, AI rights, general capabilities evals, and things closer to “technical policy” and products (like standards, legislation, SL4 datacentres, and automated cybersecurity). We focus on papers and blogposts (rather than say, gdoc samizdat or tweets or Githubs or Discords). We only use public information, so we are off by some additional unknown factor.

We try to include things which are early-stage and illegible – but in general we fail and mostly capture legible work on legible problems (i.e. things you can write a paper on already).

Even ignoring all of that as we do, it’s still too long to read. Here’s a spreadsheet version (agendas and papers) and the github repo including the data and the processing pipeline. Methods down the bottom. Gavin’s editorial outgrew this post and became its own thing.

If we missed something big or got something wrong, please comment, we will edit it in.

An Arb Research project. Work funded by OpenPhil Coefficient Giving.

Labs (giant companies)

| |||||

|---|---|---|---|---|---|

| Safety team % all Not counting the AIs | <5% | <3% | <3% | <2% | <1% |

| Leadership’s stated timelines to full auto AI R&D | mid-2027 | 2028 to 2035 | March 2028 | N/A | ASI by 2030 |

| Leadership stated P(AI doom) | 25% | “Non-zero” and >5% | ~2% | ~0% | 20% |

| Legal obligations | EU CoP, SB53 | EU CoP, SB53 | EU CoP, SB53 | SB53 | EU CoP (Safety), SB53 |

| Average Safety Score (ZSP, SaferAI, FLI) | 51% | 27% | 33% | 17% | 17% |

OpenAI

Structure: privately-held public benefit corp

Safety teams: Alignment, Safety Systems (Interpretability, Safety Oversight, Pretraining Safety, Robustness, Safety Research, Trustworthy AI, new Misalignment Research team coming), Preparedness, Model Policy, Safety and Security Committee, Safety Advisory Group. The Persona Features paper had a distinct author list. No named successor to Superalignment.

Public alignment agenda: None. Boaz Barak offers personal views.

Risk management framework: Preparedness Framework

See also: Iterative alignment · Safeguards (inference-time auxiliaries) · Character training and persona steering

Some names: Johannes Heidecke, Boaz Barak, Mia Glaese, Jenny Nitishinskaya, Lama Ahmad, Naomi Bashkansky, Miles Wang, Wojciech Zaremba, David Robinson, Zico Kolter, Jerry Tworek, Eric Wallace, Olivia Watkins, Kai Chen, Chris Koch, Andrea Vallone, Leo Gao

Critiques: Stein-Perlman, Stewart, underelicitation, Midas, defense, Carlsmith on labs in general. It’s difficult to model OpenAI as a single agent: “ALTMAN: I very rarely get to have anybody work on anything. One thing about researchers is they’re going to work on what they’re going to work on, and that’s that.”

Funded by: Microsoft, AWS, Oracle, NVIDIA, SoftBank, G42, AMD, Dragoneer, Coatue, Thrive, Altimeter, MGX, Blackstone, TPG, T. Rowe Price, Andreessen Horowitz, D1 Capital Partners, Fidelity Investments, Founders Fund, Sequoia…

Some outputs (13)

Their 60-page System Cards now contain a large amount of their public safety work.

Monitoring Reasoning Models for Misbehavior and the Risks of Promoting Obfuscation. Bowen Baker et al.

Persona Features Control Emergent Misalignment. Miles Wang et al.

Stress Testing Deliberative Alignment for Anti-Scheming Training. Bronson Schoen et al.

Deliberative Alignment: Reasoning Enables Safer Language Models. Melody Y. Guan et al.

Toward understanding and preventing misalignment generalization. Miles Wang et al.

Our updated Preparedness Framework. OpenAI Preparedness Team

Trading Inference-Time Compute for Adversarial Robustness. Wojciech Zaremba et al.

Small-to-Large Generalization: Data Influences Models Consistently Across Scale. Alaa Khaddaj, Logan Engstrom, Aleksander Madry

Findings from a pilot Anthropic–OpenAI alignment evaluation exercise: OpenAI Safety Tests

Google Deepmind

Structure: research laboratory subsidiary of a public for-profit

Safety teams: amplified oversight, interpretability, ASAT eng (automated alignment research), Causal Incentives Working Group, Frontier Safety Risk Assessment (evals, threat models, the framework), Mitigations (e.g. banning accounts, refusal training, jailbreak robustness), Loss of Control (control, alignment evals). Structure here.

Public alignment agenda: An Approach to Technical AGI Safety and Security

Risk management framework: Frontier Safety Framework

See also: White-box safety (i.e. Interpretability) · Scalable Oversight

Some names: Rohin Shah, Allan Dafoe, Anca Dragan, Alex Irpan, Alex Turner, Anna Wang, Arthur Conmy, David Lindner, Jonah Brown-Cohen, Lewis Ho, Neel Nanda, Raluca Ada Popa, Rishub Jain, Rory Greig, Sebastian Farquhar, Senthooran Rajamanoharan, Sophie Bridgers, Tobi Ijitoye, Tom Everitt, Victoria Krakovna, Vikrant Varma, Zac Kenton, Four Flynn, Jonathan Richens, Lewis Smith, Janos Kramar, Matthew Rahtz, Mary Phuong, Erik Jenner

Critiques: Stein-Perlman, Carlsmith on labs in general, underelicitation, On Google’s Safety Plan

Funded by: Google. Explicit 2024 Deepmind spending as a whole was £1.3B, but this doesn’t count most spending e.g. Gemini compute.

Some outputs (14)

A Pragmatic Vision for Interpretability. Neel Nanda et al.

How Can Interpretability Researchers Help AGI Go Well?. Neel Nanda et al.

Evaluating Frontier Models for Stealth and Situational Awareness. Mary Phuong et al.

When Chain of Thought is Necessary, Language Models Struggle to Evade Monitors. Scott Emmons et al.

MONA: Managed Myopia with Approval Feedback. Sebastian Farquhar, David Lindner, Rohin Shah

Consistency Training Helps Stop Sycophancy and Jailbreaks. Alex Irpan et al.

An Approach to Technical AGI Safety and Security. Rohin Shah et al.

Negative Results for SAEs On Downstream Tasks and Deprioritising SAE Research (GDM Mech Interp Team Progress Update #2). Lewis Smith et al.

Steering Gemini Using BIDPO Vectors. Alex Turner et al.

Difficulties with Evaluating a Deception Detector for AIs. Lewis Smith, Bilal Chughtai, Neel Nanda

Taking a responsible path to AGI. Anca Dragan et al.

Evaluating potential cybersecurity threats of advanced AI. Four Flynn, Mikel Rodriguez, Raluca Ada Popa

Self-preservation or Instruction Ambiguity? Examining the Causes of Shutdown Resistance. Senthooran Rajamanoharan, Neel Nanda

A Pragmatic Way to Measure Chain-of-Thought Monitorability. Scott Emmons et al.

Anthropic

Structure: privately-held public-benefit corp

Safety teams: Scalable Alignment (Leike), Alignment Evals (Bowman), Interpretability (Olah), Control (Perez), Model Psychiatry (Lindsey), Character (Askell), Alignment Stress-Testing (Hubinger), Alignment Mitigations (Price?), Frontier Red Team (Graham), Safeguards (?), Societal Impacts (Ganguli), Trust and Safety (Sanderford), Model Welfare (Fish)

Public alignment agenda: directions, bumpers, checklist, an old vague view

Risk management framework: RSP

See also: White-box safety (i.e. Interpretability) · Scalable Oversight

Some names: Chris Olah, Evan Hubinger, Sam Marks, Johannes Treutlein, Sam Bowman, Euan Ong, Fabien Roger, Adam Jermyn, Holden Karnofsky, Jan Leike, Ethan Perez, Jack Lindsey, Amanda Askell, Kyle Fish, Sara Price, Jon Kutasov, Minae Kwon, Monty Evans, Richard Dargan, Roger Grosse, Ben Levinstein, Joseph Carlsmith, Joe Benton

Critiques: Stein-Perlman, Casper, Carlsmith, underelicitation, Greenblatt, Samin, defense, Existing Safety Frameworks Imply Unreasonable Confidence

Funded by: Amazon, Google, ICONIQ, Fidelity, Lightspeed, Altimeter, Baillie Gifford, BlackRock, Blackstone, Coatue, D1 Capital Partners, General Atlantic, General Catalyst, GIC, Goldman Sachs, Insight Partners, Jane Street, Ontario Teachers’ Pension Plan, Qatar Investment Authority, TPG, T. Rowe Price, WCM, XN

Some outputs (21)

Evaluating honesty and lie detection techniques on a diverse suite of dishonest models. Rowan Wang et al.

Agentic Misalignment: How LLMs could be insider threats. Aengus Lynch et al.

Why Do Some Language Models Fake Alignment While Others Don’t?. abhayesian et al.

Forecasting Rare Language Model Behaviors. Erik Jones et al.

Findings from a Pilot Anthropic—OpenAI Alignment Evaluation Exercise. Samuel R. Bowman et al.

On the Biology of a Large Language Model. Jack Lindsey et al.

Poisoning Attacks on LLMs Require a Near-constant Number of Poison Samples. Alexandra Souly et al.

Circuit Tracing: Revealing Computational Graphs in Language Models. Emmanuel Ameisen et al.

SHADE-Arena: Evaluating sabotage and monitoring in LLM agents. Xiang Deng et al.

Emergent Introspective Awareness in Large Language Models. Jack Lindsey

Petri: An open-source auditing tool to accelerate AI safety research

Recommendations for Technical AI Safety Research Directions. Anthropic Alignment Science Team

Constitutional Classifiers: Defending against universal jailbreaks. Anthropic Safeguards Research Team

The Soul Document. Richard-Weiss

Open-sourcing circuit tracing tools. Michael Hanna et al.

xAI

Structure: privately-held for-profit

Teams: Applied Safety, Model Evaluation. Nominally focussed on misuse.

Framework: Risk Management Framework

Some names: Dan Hendrycks (advisor), Juntang Zhuang, Toby Pohlen, Lianmin Zheng, Piaoyang Cui, Nikita Popov, Ying Sheng, Sehoon Kim, Alexander Pan

Critiques: framework, hacking, broken promises, Stein-Perlman, insecurity, Carlsmith on labs in general

Funded by: A16Z, Blackrock, Fidelity, Kingdom, Lightspeed, MGX, Morgan Stanley, Sequoia…

Meta

Structure: public for-profit

Teams: Safety “integrated into” capabilities research, Meta Superintelligence Lab. But also FAIR Alignment, Brain and AI.

Framework: FAF

See also: Capability removal: unlearning

Some names: Shuchao Bi, Hongyuan Zhan, Jingyu Zhang, Haozhu Wang, Eric Michael Smith, Sid Wang, Amr Sharaf, Mahesh Pasupuleti, Jason Weston, ShengYun Peng, Ivan Evtimov, Song Jiang, Pin-Yu Chen, Evangelia Spiliopoulou, Lei Yu, Virginie Do, Karen Hambardzumyan, Nicola Cancedda, Adina Williams

Critiques: extreme underelicitation, Stein-Perlman, Carlsmith on labs in general

Funded by: Meta

Some outputs (6)

The Alignment Waltz: Jointly Training Agents to Collaborate for Safety. Jingyu Zhang et al.

Large Reasoning Models Learn Better Alignment from Flawed Thinking. ShengYun Peng et al.

Robust LLM safeguarding via refusal feature adversarial training. Lei Yu et al.

Agents Rule of Two: A Practical Approach to AI Agent Security

China

The Chinese companies often don’t attempt to be safe, often not even in the prosaic safeguards sense. They drop the weights immediately after post-training finishes. They’re mostly open weights and closed data. As of writing the companies are often severely compute-constrained. There are some informal reasons to doubt their capabilities. The (academic) Chinese AI safety scene is however also growing.

Alibaba’s Qwen3-etc-etc is nominally at the level of Gemini 2.5 Flash. Maybe the only Chinese model with a large Western userbase, including businesses, but since it’s self-hosted this doesn’t translate into profits for them yet. On one ad hoc test it was the only Chinese model not to collapse OOD, but the Qwen2.5 corpus was severely contaminated.

DeepSeek’s v3.2 is nominally around the same as Qwen. The CCP made them waste months trying Huawei chips.

Moonshot’s Kimi-K2-Thinking has some nominally frontier benchmark results and a pleasant style but does not seem frontier.

Baidu’s ERNIE 5 is again nominally very strong, a bit better than DeepSeek. This new one seems to not be open.

Z’s GLM-4.6 is around the same as Qwen. The product director was involved in the MIT Alignment group.

MiniMax’s M2 is nominally better than Qwen, around the same as Grok 4 Fast on the usual superficial benchmarks. It does fine on one very basic red-team test.

ByteDance does impressive research in a lagging paradigm, diffusion LMs.

There are others but they’re marginal for now.

Other labs

Amazon’s Nova Pro is around the level of Llama 3 90B, which in turn is around the level of the original GPT-4. So 2 years behind. But they have their own chip.

Microsoft are now mid-training on top of GPT-5. MAI-1-preview is around DeepSeek V3.0 level on Arena. They continue to focus on medical diagnosis. You can request access.

Mistral have a reasoning model, Magistral Medium, and released the weights of a little 24B version. It’s a bit worse than Deepseek R1, pass@1.

Black-box safety (understand and control current model behaviour)

Iterative alignment

Nudging base models by optimising their output. Worked on by the post-training teams at most labs, estimating the FTEs at >500 in some sense. Funded by most of the industry.

General theory of change: “LLMs don’t seem very dangerous and might scale to AGI, things are generally smooth, relevant capabilities are harder than alignment, assume no mesaoptimisers, assume that zero-shot deception is hard, assume a fundamentally humanish ontology is learned, assume no simulated agents, assume that noise in the data means that human preferences are not ruled out, assume that alignment is a superficial feature, assume that tuning for what we want will also get us to avoid what we don’t want. Maybe assume that thoughts are translucent.”

General critiques: Bellot, Alfour, STACK, AI Alignment Strategies from a Risk Perspective, AI Alignment based on Intentions does not work, Distortion of AI Alignment: Does Preference Optimization Optimize for Preferences?, Murphy’s Laws of AI Alignment: Why the Gap Always Wins, Alignment remains a hard, unsolved problem

Iterative alignment at pretrain-time

Guide weights during pretraining.

See also: prosaic alignment · incrementalism · alignment-by-default · Korbak 2023

Some names: Jan Leike, Stuart Armstrong, Cyrus Cousins, Oliver Daniels

Funded by: most of the industry

Some outputs (2)

Unsupervised Elicitation. Jiaxin Wen et al.

ACE and Diverse Generalization via Selective Disagreement. Oliver Daniels et al.

Sort-of the Alignment Pretraining paper.

Iterative alignment at post-train-time

Modify weights after pre-training.

Some names: Adam Gleave, Anca Dragan, Jacob Steinhardt, Rohin Shah

Funded by: most of the industry

Some outputs (16)

Composable Interventions for Language Models. Arinbjorn Kolbeinsson et al.

Spilling the Beans: Teaching LLMs to Self-Report Their Hidden Objectives. Chloe Li, Mary Phuong, Daniel Tan

On Targeted Manipulation and Deception when Optimizing LLMs for User Feedback. Marcus Williams et al.

Preference Learning with Lie Detectors can Induce Honesty or Evasion. Chris Cundy, Adam Gleave

Robust LLM Alignment via Distributionally Robust Direct Preference Optimization. Zaiyan Xu et al.

RLHS: Mitigating Misalignment in RLHF with Hindsight Simulation. Kaiqu Liang et al.

Reducing the Probability of Undesirable Outputs in Language Models Using Probabilistic Inference. Stephen Zhao et al.

Iterative Label Refinement Matters More than Preference Optimization under Weak Supervision. Yaowen Ye, Cassidy Laidlaw, Jacob Steinhardt

Consistency Training Helps Stop Sycophancy and Jailbreaks. Alex Irpan et al.

Rethinking Safety in LLM Fine-tuning: An Optimization Perspective. Minseon Kim et al.

Preference Learning for AI Alignment: a Causal Perspective. Katarzyna Kobalczyk, Mihaela van der Schaar

On Monotonicity in AI Alignment. Gilles Bareilles et al.

Spectrum Tuning: Post-Training for Distributional Coverage and In-Context Steerability. Taylor Sorensen et al.

Uncertainty-Aware Step-wise Verification with Generative Reward Models. Zihuiwen Ye et al.

The Delta Learning Hypothesis: Preference Tuning on Weak Data can Yield Strong Gains. Scott Geng et al.

Training LLMs for Honesty via Confessions. Manas Joglekar et al.

Black-box make-AI-solve-it

Focus on using existing models to improve and align further models.

See also: Make AI solve it · Debate

Some names: Jacques Thibodeau, Matthew Shingle, Nora Belrose, Lewis Hammond, Geoffrey Irving

Funded by: most of the industry

Some outputs (12)

Neural Interactive Proofs. Lewis Hammond, Sam Adam-Day

MONA: Myopic Optimization with Non-myopic Approval Can Mitigate Multi-step Reward Hacking. Sebastian Farquhar et al.

Prover-Estimator Debate: A New Scalable Oversight Protocol. Jonah Brown-Cohen, Geoffrey Irving

Weak to Strong Generalization for Large Language Models with Multi-capabilities. Yucheng Zhou, Jianbing Shen, Yu Cheng

Debate Helps Weak-to-Strong Generalization. Hao Lang, Fei Huang, Yongbin Li

Mechanistic Anomaly Detection for “Quirky” Language Models. David O. Johnston, Arkajyoti Chakraborty, Nora Belrose

AI Debate Aids Assessment of Controversial Claims. Salman Rahman et al.

An alignment safety case sketch based on debate. Marie Davidsen Buhl et al.

Ensemble Debates with Local Large Language Models for AI Alignment. Ephraiem Sarabamoun

Training AI to do alignment research we don’t already know how to do. joshc

Automating AI Safety: What we can do today. Matthew Shinkle, Eyon Jang, Jacques Thibodeau

Superalignment with Dynamic Human Values. Florian Mai et al.

Inoculation prompting

Prompt mild misbehaviour in training, to prevent the failure mode where once AI misbehaves in a mild way, it will be more inclined towards all bad behaviour.

Some names: Ariana Azarbal, Daniel Tan, Victor Gillioz, Alex Turner, Alex Cloud, Monte MacDiarmid, Daniel Ziegler

Funded by: most of the industry

Some outputs (4)

Recontextualization Mitigates Specification Gaming Without Modifying the Specification. Ariana Azarbal et al.

Inoculation Prompting: Eliciting traits from LLMs during training can suppress them at test-time. Daniel Tan et al.

Inoculation Prompting: Instructing LLMs to misbehave at train-time improves test-time alignment. Nevan Wichers et al.

Inference-time: In-context learning

Investigate what runtime guidelines, rules, or examples provided to an LLM yield better behavior.

See also: model spec as prompt · Model specs and constitutions

Some names: Jacob Steinhardt, Kayo Yin, Atticus Geiger

Some outputs (5)

InvThink: Towards AI Safety via Inverse Reasoning. Yubin Kim et al.

Inference-Time Reward Hacking in Large Language Models. Hadi Khalaf et al.

Understanding In-context Learning of Addition via Activation Subspaces. Xinyan Hu et al.

Mixing Mechanisms: How Language Models Retrieve Bound Entities In-Context. Yoav Gur-Arieh, Mor Geva, Atticus Geiger

Which Attention Heads Matter for In-Context Learning?. Kayo Yin, Jacob Steinhardt

Inference-time: Steering

Manipulate an LLM’s internal representations/token probabilities without touching weights.

See also: Activation engineering · Character training and persona steering · Safeguards (inference-time auxiliaries)

Some names: Taylor Sorensen, Constanza Fierro, Kshitish Ghate, Arthur Vogels

Some outputs (4)

Steering Language Models with Weight Arithmetic. Constanza Fierro, Fabien Roger

EVALUESTEER: Measuring Reward Model Steerability Towards Values and Preferences. Kshitish Ghate et al.

Defense Against the Dark Prompts: Mitigating Best-of-N Jailbreaking with Prompt Evaluation. Stuart Armstrong et al.

In-Distribution Steering: Balancing Control and Coherence in Language Model Generation.. Arthur Vogels et al.

Capability removal: unlearning

Developing methods to selectively remove specific information, capabilities, or behaviors from a trained model (e.g. without retraining it from scratch). A mixture of black-box and white-box approaches.

Theory of change: If an AI learns dangerous knowledge (e.g., dual-use capabilities like virology or hacking, or knowledge of their own safety controls) or exhibits undesirable behaviors (e.g., memorizing private data), we can specifically erase this “bad” knowledge post-training, which is much cheaper and faster than retraining, thereby making the model safer. Alternatively, intervene in pre-training, to prevent the model from learning it in the first place (even when data filtering is imperfect). You could imagine also unlearning propensities to power-seeking, deception, sycophancy, or spite.

General approach: cognitive / engineering · Target case: pessimistic

Orthodox alignment problems: Superintelligence can hack software supervisors, A boxed AGI might exfiltrate itself by steganography, spearphishing, Humanlike minds/goals are not necessarily safe

See also: Data filtering · White-box safety (i.e. Interpretability) · Various Redteams

Some names: Rowan Wang, Avery Griffin, Johannes Treutlein, Zico Kolter, Bruce W. Lee, Addie Foote, Alex Infanger, Zesheng Shi, Yucheng Zhou, Jing Li, Timothy Qian, Stephen Casper, Alex Cloud, Peter Henderson, Filip Sondej, Fazl Barez

Critiques: Existing Large Language Model Unlearning Evaluations Are Inconclusive

Funded by: Coefficient Giving, MacArthur Foundation, UK AI Safety Institute (AISI), Canadian AI Safety Institute (CAISI), industry labs (e.g., Microsoft Research, Google)

Estimated FTEs: 10-50

Some outputs (18)

Frameworks

OpenUnlearning. Vineeth Dorna et al.

Mostly black-box

Modifying LLM Beliefs with Synthetic Document Finetuning. Rowan Wang et al.

From Dormant to Deleted: Tamper-Resistant Unlearning Through Weight-Space Regularization. Shoaib Ahmed Siddiqui et al.

Mirror Mirror on the Wall, Have I Forgotten it All?. Brennon Brimhall et al.

Machine Unlearning Doesn’t Do What You Think: Lessons for Generative AI Policy and Research. A. Feder Cooper et al.

Open Problems in Machine Unlearning for AI Safety. Fazl Barez et al.

Mostly white-box

Collapse of Irrelevant Representations (CIR) Ensures Robust and Non-Disruptive LLM Unlearning. Filip Sondej, Yushi Yang

Safety Alignment via Constrained Knowledge Unlearning. Zesheng Shi, Yucheng Zhou, Jing Li

Robust LLM Unlearning with MUDMAN: Meta-Unlearning with Disruption Masking And Normalization. Filip Sondej et al.

Unlearning Isn’t Deletion: Investigating Reversibility of Machine Unlearning in LLMs. Xiaoyu Xu et al.

Unlearning Needs to be More Selective [Progress Report]. Filip Sondej, Yushi Yang, Marcel Windys

Layered Unlearning for Adversarial Relearning. Timothy Qian et al.

Understanding Memorization via Loss Curvature. Jack Merullo et al.

Model Tampering Attacks Enable More Rigorous Evaluations of LLM Capabilities. Zora Che et al.

Pre-training interventions

Gradient Routing: Masking Gradients to Localize Computation in Neural Networks. Alex Cloud et al.

Selective modularity: a research agenda. cloud, Jacob G-W

Distillation Robustifies Unlearning. Bruce W. Lee et al.

Beyond Data Filtering: Knowledge Localization for Capability Removal in LLMs. Igor Shilov et al.

Control

If we assume early transformative AIs are misaligned and actively trying to subvert safety measures, can we still set up protocols to extract useful work from them while preventing sabotage, and watching with incriminating behaviour?

General approach: engineering / behavioral · Target case: worst-case

See also: safety cases

Some names: Redwood, UK AISI, Deepmind, OpenAI, Anthropic, Buck Shlegeris, Ryan Greenblatt, Kshitij Sachan, Alex Mallen

Estimated FTEs: 5-50

Some outputs (22)

Ctrl-Z: Controlling AI Agents via Resampling. Aryan Bhatt et al.

SHADE-Arena: Evaluating Sabotage and Monitoring in LLM Agents. Jonathan Kutasov et al.

Adaptive Deployment of Untrusted LLMs Reduces Distributed Threats. Jiaxin Wen et al.

D-REX: A Benchmark for Detecting Deceptive Reasoning in Large Language Models. Satyapriya Krishna et al.

Subversion Strategy Eval: Can language models statelessly strategize to subvert control protocols?. Alex Mallen et al.

Evaluating Control Protocols for Untrusted AI Agents. Jon Kutasov et al.

Can Reasoning Models Obfuscate Reasoning? Stress-Testing Chain-of-Thought Monitorability. Artur Zolkowski et al.

Optimizing AI Agent Attacks With Synthetic Data. Chloe Loughridge et al.

Games for AI Control. Charlie Griffin et al.

A sketch of an AI control safety case. Tomek Korbak et al.

Assessing confidence in frontier AI safety cases. Stephen Barrett et al.

ControlArena. Rogan Inglis et al.

How to evaluate control measures for LLM agents? A trajectory from today to superintelligence. Tomek Korbak et al.

The Alignment Project by UK AISI. Mojmir et al.

Towards evaluations-based safety cases for AI scheming. Mikita Balesni et al.

Incentives for Responsiveness, Instrumental Control and Impact. Ryan Carey et al.

AI companies are unlikely to make high-assurance safety cases if timelines are short. Ryan Greenblatt

Manipulation Attacks by Misaligned AI: Risk Analysis and Safety Case Framework. Rishane Dassanayake et al.

Dynamic safety cases for frontier AI. Carmen Cârlan et al.

AIs at the current capability level may be important for future safety work. Ryan Greenblatt

Takeaways from sketching a control safety case. Josh Clymer, Buck Shlegeris

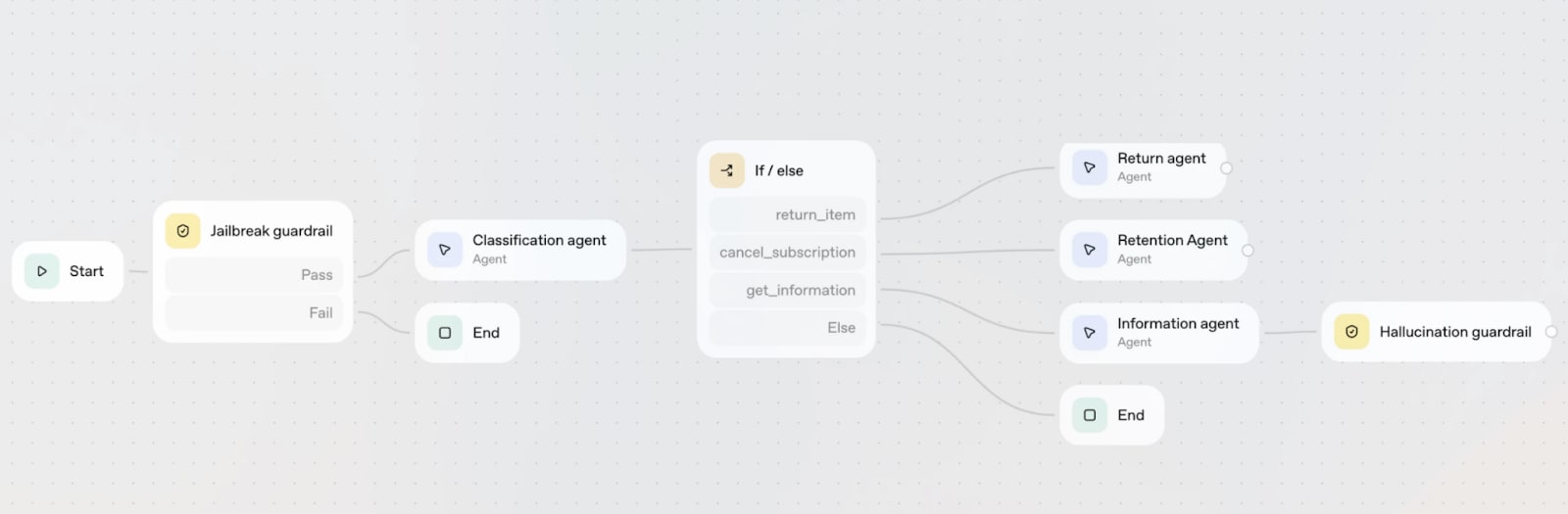

Safeguards (inference-time auxiliaries)

Layers of inference-time defenses, such as classifiers, monitors, and rapid-response protocols, to detect and block jailbreaks, prompt injections, and other harmful model behaviors.

Theory of change: By building a bunch of scalable and hardened things on top of an unsafe model, we can defend against known and unknown attacks, monitor for misuse, and prevent models from causing harm, even if the core model has vulnerabilities.

Orthodox alignment problems: Superintelligence can fool human supervisors, A boxed AGI might exfiltrate itself by steganography, spearphishing

See also: Various Redteams · Iterative alignment

Some names: Mrinank Sharma, Meg Tong, Jesse Mu, Alwin Peng, Julian Michael, Henry Sleight, Theodore Sumers, Raj Agarwal, Nathan Bailey, Edoardo Debenedetti, Ilia Shumailov, Tianqi Fan, Sahil Verma, Keegan Hines, Jeff Bilmes

Critiques: Obfuscated Activations Bypass LLM Latent-Space Defenses

Funded by: most of the big labs

Estimated FTEs: 100+

Some outputs (6)

Constitutional Classifiers: Defending against Universal Jailbreaks across Thousands of Hours of Red Teaming. Mrinank Sharma et al.

Rapid Response: Mitigating LLM Jailbreaks with a Few Examples. Alwin Peng et al.

Monitoring computer use via hierarchical summarization. Theodore Sumers et al.

Defeating Prompt Injections by Design. Edoardo Debenedetti et al.

OMNIGUARD: An Efficient Approach for AI Safety Moderation Across Modalities. Sahil Verma et al.

Chain of thought monitoring

Supervise an AI’s natural-language (output) “reasoning” to detect misalignment, scheming, or deception, rather than studying the actual internal states.

Theory of change: The reasoning process (Chain of Thought, or CoT) of an AI provides a legible signal of its internal state and intentions. By monitoring this CoT, supervisors (human or AI) can detect misalignment, scheming, or reward hacking before it results in a harmful final output. This allows for more robust oversight than supervising outputs alone, but it relies on the CoT remaining faithful (i.e., accurately reflecting the model’s reasoning) and not becoming obfuscated under optimization pressure.

Orthodox alignment problems: Superintelligence can fool human supervisors, Superintelligence can hack software supervisors, A boxed AGI might exfiltrate itself by steganography, spearphishing

See also: White-box safety (i.e. Interpretability) · Steganography evals

Some names: Aether, Bowen Baker, Joost Huizinga, Leo Gao, Scott Emmons, Erik Jenner, Yanda Chen, James Chua, Owain Evans, Tomek Korbak, Mikita Balesni, Xinpeng Wang, Miles Turpin, Rohin Shah

Critiques: Reasoning Models Don’t Always Say What They Think; Chain-of-Thought Reasoning In The Wild Is Not Always Faithful; Beyond Semantics: The Unreasonable Effectiveness of Reasonless Intermediate Tokens; Reasoning Models Sometimes Output Illegible Chains of Thought

Funded by: OpenAI, Anthropic, Google DeepMind

Estimated FTEs: 10-100

Some outputs (17)

Monitoring Reasoning Models for Misbehavior and the Risks of Promoting Obfuscation. Bowen Baker et al.

Detecting misbehavior in frontier reasoning models. Bowen Baker et al.

When Chain of Thought is Necessary, Language Models Struggle to Evade Monitors. Scott Emmons et al.

Reasoning Models Don’t Always Say What They Think. Yanda Chen et al.

Is It Thinking or Cheating? Detecting Implicit Reward Hacking by Measuring Reasoning Effort. Xinpeng Wang et al.

CoT Red-Handed: Stress Testing Chain-of-Thought Monitoring. Benjamin Arnav et al.

Training fails to elicit subtle reasoning in current language models

Can Reasoning Models Obfuscate Reasoning? Stress-Testing Chain-of-Thought Monitorability. Artur Zolkowski et al.

Teaching Models to Verbalize Reward Hacking in Chain-of-Thought Reasoning. Miles Turpin et al.

Are DeepSeek R1 And Other Reasoning Models More Faithful?. James Chua, Owain Evans

A Pragmatic Way to Measure Chain-of-Thought Monitorability. Scott Emmons et al.

A Concrete Roadmap towards Safety Cases based on Chain-of-Thought Monitoring. Wuschel Schulz

Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety. Tomek Korbak et al.

CoT May Be Highly Informative Despite “Unfaithfulness”. Amy Deng et al.

Aether July 2025 Update. Rohan Subramani, Rauno Arike, Shubhorup Biswas

Model psychology

This section consists of a bottom-up set of things people happen to be doing, rather than a normative taxonomy.

Model values / model preferences

Analyse and control emergent, coherent value systems in LLMs, which change as models scale, and can contain problematic values like preferences for AIs over humans.

Theory of change: As AIs become more agentic, their behaviours and risks are increasingly determined by their goals and values. Since coherent value systems emerge with scale, we must leverage utility functions to analyse these values and apply “utility control” methods to constrain them, rather than just controlling outputs downstream of them.

General approach: cognitivist science · Target case: pessimistic

Orthodox alignment problems: Value is fragile and hard to specify

See also: Values in the Wild: Discovering and Analyzing Values in Real-World Language Model Interactions · Persona Vectors: Monitoring and Controlling Character Traits in Language Models

Some names: Mantas Mazeika, Xuwang Yin, Rishub Tamirisa, Jaehyuk Lim, Bruce W. Lee, Richard Ren, Long Phan, Norman Mu, Adam Khoja, Oliver Zhang, Dan Hendrycks

Critiques: Randomness, Not Representation: The Unreliability of Evaluating Cultural Alignment in LLMs

Funded by: Coefficient Giving. $289,000 SFF funding for CAIS.

Estimated FTEs: 30

Some outputs (14)

What Kind of User Are You? Uncovering User Models in LLM Chatbots. Yida Chen et al.

Utility Engineering: Analyzing and Controlling Emergent Value Systems in AIs. Mantas Mazeika et al.

Will AI Tell Lies to Save Sick Children? Litmus-Testing AI Values Prioritization with AIRiskDilemmas. Yu Ying Chiu et al.

The PacifAIst Benchmark:Would an Artificial Intelligence Choose to Sacrifice Itself for Human Safety?. Manuel Herrador

Values in the Wild: Discovering and Analyzing Values in Real-World Language Model Interactions. Saffron Huang et al.

EigenBench: A Comparative behavioural Measure of Value Alignment. Jonathn Chang et al.

Following the Whispers of Values: Unraveling Neural Mechanisms Behind Value-Oriented Behaviors in LLMs. Ling Hu et al.

Alignment Can Reduce Performance on Simple Ethical Questions. Daan Henselmans

Moral Alignment for LLM Agents. Elizaveta Tennant, Stephen Hailes, Mirco Musolesi

The LLM Has Left The Chat: Evidence of Bail Preferences in Large Language Models. Danielle Ensign

Are Language Models Consequentialist or Deontological Moral Reasoners?. Keenan Samway et al.

Playing repeated games with large language models. Elif Akata et al.

From Stability to Inconsistency: A Study of Moral Preferences in LLMs. Monika Jotautaite et al.

VAL-Bench: Measuring Value Alignment in Language Models. Aman Gupta, Denny O’Shea, Fazl Barez

Character training and persona steering

Map, shape, and control the personae of language models, such that new models embody desirable values (e.g., honesty, empathy) rather than undesirable ones (e.g., sycophancy, self-perpetuating behaviors).

Theory of change: If post-training, prompting, and activation-engineering interact with some kind of structured ‘persona space’, then better understanding it should benefit the design, control, and detection of LLM personas.

Orthodox alignment problems: Value is fragile and hard to specify

See also: Simulators · Activation engineering · Emergent misalignment · Hyperstition studies · Anthropic · Cyborgism · shard theory · AI psychiatry · Ward et al

Some names: Truthful AI, OpenAI, Anthropic, CLR, Amanda Askell, Jack Lindsey, Janus, Theia Vogel, Sharan Maiya, Evan Hubinger

Critiques: Nostalgebraist

Funded by: Anthropic, Coefficient Giving

Some outputs (13)

Open Character Training: Shaping the Persona of AI Assistants through Constitutional AI. Sharan Maiya et al.

On the functional self of LLMs. eggsyntax

Opus 4.5′s Soul Document. Richard Weiss

Persona Features Control Emergent Misalignment. Miles Wang et al.

Inoculation Prompting: Eliciting traits from LLMs during training can suppress them at test-time. Daniel Tan et al.

Persona Vectors: Monitoring and Controlling Character Traits in Language Models. Runjin Chen et al.

Reducing LLM deception at scale with self-other overlap fine-tuning. Marc Carauleanu et al.

The Rise of Parasitic AI. Adele Lopez

A Three-Layer Model of LLM Psychology. Jan_Kulveit

Multi-turn Evaluation of Anthropomorphic Behaviours in Large Language Models. Lujain Ibrahim et al.

Selection Pressures on LM Personas. Raymond Douglas

the void. nostalgebraist

void miscellany. nostalgebraist

A Case for Model Persona Research, Niels Rolf, Maxime Riche, Daniel Tan

Emergent misalignment

Fine-tuning LLMs on one narrow antisocial task can cause general misalignment including deception, shutdown resistance, harmful advice, and extremist sympathies, when those behaviors are never trained or rewarded directly. A new agenda which quickly led to a stream of exciting work.

Theory of change: Predict, detect, and prevent models from developing broadly harmful behaviors (like deception or shutdown resistance) when fine-tuned on seemingly unrelated tasks. Find, preserve, and robustify this correlated representation of the good.

General approach: behaviorist science · Target case: pessimistic

Orthodox alignment problems: Goals misgeneralize out of distribution, Superintelligence can fool human supervisors

See also: auditing real models · Pragmatic interpretability

Some names: Truthful AI, Jan Betley, James Chua, Mia Taylor, Miles Wang, Edward Turner, Anna Soligo, Alex Cloud, Nathan Hu, Owain Evans

Critiques: Emergent Misalignment as Prompt Sensitivity, Go home GPT-4o, you’re drunk

Funded by: Coefficient Giving, >$1 million

Estimated FTEs: 10-50

Some outputs (17)

Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs. Jan Betley et al.

Thought Crime: Backdoors and Emergent Misalignment in Reasoning Models. James Chua et al.

Persona Features Control Emergent Misalignment. Miles Wang et al.

Model Organisms for Emergent Misalignment. Edward Turner et al.

School of Reward Hacks: Hacking harmless tasks generalizes to misaligned behavior in LLMs. Mia Taylor et al.

Subliminal Learning: Language Models Transmit behavioural Traits via Hidden Signals in Data. Alex Cloud et al.

Convergent Linear Representations of Emergent Misalignment. Anna Soligo et al.

Narrow Misalignment is Hard, Emergent Misalignment is Easy. Edward Turner et al.

Aesthetic Preferences Can Cause Emergent Misalignment. Anders Woodruff

Moloch’s Bargain: Emergent Misalignment When LLMs Compete for Audiences. Batu El, James Zou

Emergent Misalignment & Realignment. Elizaveta Tennant et al.

Realistic Reward Hacking Induces Different and Deeper Misalignment. Jozdien

Selective Generalization: Improving Capabilities While Maintaining Alignment. Ariana Azarbal et al.

Emergent Misalignment on a Budget. Valerio Pepe, Armaan Tipirneni

The Rise of Parasitic AI. Adele Lopez

LLM AGI may reason about its goals and discover misalignments by default. Seth Herd

Open problems in emergent misalignment. Jan Betley, Daniel Tan

Model specs and constitutions

Write detailed, natural language descriptions of values and rules for models to follow, then instill these values and rules into models via techniques like Constitutional AI or deliberative alignment.

Theory of change: Model specs and constitutions serve three purposes. First, they provide a clear standard of behavior which can be used to train models to value what we want them to value. Second, they serve as something closer to a ground truth standard for evaluating the degree of misalignment ranging from “models straightforwardly obey the spec” to “models flagrantly disobey the spec”. A combination of scalable stress-testing and reinforcement for obedience can be used to iteratively reduce the risk of misalignment. Third, they get more useful as models’ instruction-following capability improves.

Orthodox alignment problems: Value is fragile and hard to specify

See also: Iterative alignment · Model psychology

Some names: Amanda Askell, Joe Carlsmith

Critiques: LLM AGI may reason about its goals and discover misalignments by default, On OpenAI’s Model Spec 2.0, Giving AIs safe motivations (esp. Sections 4.3-4.5), On Deliberative Alignment

Funded by: major funders include Anthropic and OpenAI (internally)

Some outputs (11)

Deliberative Alignment: Reasoning Enables Safer Language Models. Melody Y. Guan et al.

Stress-Testing Model Specs Reveals Character Differences among Language Models. Jifan Zhang et al.

Let Them Down Easy! Contextual Effects of LLM Guardrails on User Perceptions and Preferences. Mingqian Zheng et al.

No-self as an alignment target. Milan W

Six Thoughts on AI Safety. Boaz Barak

How important is the model spec if alignment fails?. Mia Taylor

Political Neutrality in AI Is Impossible- But Here Is How to Approximate It. Jillian Fisher et al.

Giving AIs safe motivations. Joe Carlsmith

Model psychopathology

Find interesting LLM phenomena like glitch tokens and the reversal curse; these are vital data for theory.

Theory of change: The study of ‘pathological’ phenomena in LLMs is potentially key for theoretically modelling LLM cognition and LLM training-dynamics (compare: the study of aphasia and visual processing disorder in humans plays a key role cognitive science), and in particular for developing a good theory of generalization in LLMS

General approach: behaviorist / cognitivist · Target case: pessimistic

Orthodox alignment problems: Goals misgeneralize out of distribution

See also: Emergent misalignment · mechanistic anomaly detection

Some names: Janus, Truthful AI, Theia Vogel, Stewart Slocum, Nell Watson, Samuel G. B. Johnson, Liwei Jiang, Monika Jotautaite, Saloni Dash

Funded by: Coefficient Giving (via Truthful AI and Interpretability grants)

Estimated FTEs: 5-20

Some outputs (9)

Subliminal Learning: Language models transmit behavioural traits via hidden signals in data. Alex Cloud et al.

LLMs Can Get “Brain Rot”!. Shuo Xing et al.

Persona-Assigned Large Language Models Exhibit Human-Like Motivated Reasoning. Saloni Dash et al.

Unified Multimodal Models Cannot Describe Images From Memory. Michael Aerni et al.

Believe It or Not: How Deeply do LLMs Believe Implanted Facts?. Stewart Slocum et al.

Psychopathia Machinalis: A Nosological Framework for Understanding Pathologies in Advanced Artificial Intelligence. Nell Watson, Ali Hessami

Imagining and building wise machines: The centrality of AI metacognition. Samuel G. B. Johnson et al.

Artificial Hivemind: The Open-Ended Homogeneity of Language Models (and Beyond). Liwei Jiang et al.

Beyond One-Way Influence: Bidirectional Opinion Dynamics in Multi-Turn Human-LLM Interactions. Yuyang Jiang et al.

Better data

Data filtering

Builds safety into models from the start by removing harmful or toxic content (like dual-use info) from the pretraining data, rather than relying only on post-training alignment.

Theory of change: By curating the pretraining data, we can prevent the model from learning dangerous capabilities (e.g., dual-use info) or undesirable behaviors (e.g., toxicity) in the first place, making safety more robust and “tamper-resistant” than post-training patches.

Orthodox alignment problems: Goals misgeneralize out of distribution, Value is fragile and hard to specify

See also: Data quality for alignment · Data poisoning defense · Synthetic data for alignment · Capability removal: unlearning

Some names: Yanda Chen, Pratyush Maini, Kyle O’Brien, Stephen Casper, Simon Pepin Lehalleur, Jesse Hoogland, Himanshu Beniwal, Sachin Goyal, Mycal Tucker, Dylan Sam

Critiques: When Bad Data Leads to Good Models, Medical large language models are vulnerable to data-poisoning attacks

Funded by: Anthropic, various academics

Estimated FTEs: 10-50

Some outputs (4)

Enhancing Model Safety through Pretraining Data Filtering. Yanda Chen et al.

Deep Ignorance: Filtering Pretraining Data Builds Tamper-Resistant Safeguards into Open-Weight LLMs. Kyle O’Brien et al.

Safety Pretraining: Toward the Next Generation of Safe AI. Pratyush Maini et al.

Best Practices for Biorisk Evaluations on Open-Weight Bio-Foundation Models. Boyi Wei et al.

Hyperstition studies

Study, steer, and intervene on the following feedback loop: “we produce stories about how present and future AI systems behave” → “these stories become training data for the AI” → “these stories shape how AI systems in fact behave”.

Theory of change: Measure the influence of existing AI narratives in the training data → seed and develop more salutary ontologies and self-conceptions for AI models → control and redirect AI models’ self-concepts through selectively amplifying certain components of the training data.

Orthodox alignment problems: Value is fragile and hard to specify

See also: Data filtering · active inference · LLM whisperers

Some names: Alex Turner, Hyperstition AI, Kyle O’Brien

Funded by: Unclear, niche

Estimated FTEs: 1-10

Some outputs (4)

Alignment Pretraining: AI Discourse Causes Self-Fulfilling (Mis)alignment, Tice et al

Training on Documents About Reward Hacking Induces Reward Hacking. Evan Hubinger, Nathan Hu

Do Not Tile the Lightcone with Your Confused Ontology. Jan_Kulveit

Self-Fulfilling Misalignment Data Might Be Poisoning Our AI Models. Alex Turner

Existential Conversations with Large Language Models: Content, Community, and Culture. Murray Shanahan, Beth Singler

Data poisoning defense

Develops methods to detect and prevent malicious or backdoor-inducing samples from being included in the training data.

Theory of change: By identifying and filtering out malicious training examples, we can prevent attackers from creating hidden backdoors or triggers that would cause aligned models to behave dangerously.

Orthodox alignment problems: Superintelligence can hack software supervisors, Someone else will deploy unsafe superintelligence first

See also: Data filtering · Safeguards (inference-time auxiliaries) · Various Redteams · adversarial robustness

Some names: Alexandra Souly, Javier Rando, Ed Chapman, Hanna Foerster, Ilia Shumailov, Yiren Zhao

Critiques: A small number of samples can poison LLMs of any size, Reasoning Introduces New Poisoning Attacks Yet Makes Them More Complicated

Funded by: Google DeepMind, Anthropic, University of Cambridge, Vector Institute

Estimated FTEs: 5-20

Some outputs (3)

Synthetic data for alignment

Uses AI-generated data (e.g., critiques, preferences, or self-labeled examples) to scale and improve alignment, especially for superhuman models.

Theory of change: We can overcome the bottleneck of human feedback and data by using models to generate vast amounts of high-quality, targeted data for safety, preference tuning, and capability elicitation.

Orthodox alignment problems: Goals misgeneralize out of distribution, Superintelligence can fool human supervisors, Value is fragile and hard to specify

See also: Data quality for alignment · Data filtering · scalable oversight · automated alignment research · Weak-to-strong generalization

Some names: Mianqiu Huang, Xiaoran Liu, Rylan Schaeffer, Nevan Wichers, Aram Ebtekar, Jiaxin Wen, Vishakh Padmakumar, Benjamin Newman

Critiques: Synthetic Data in AI: Challenges, Applications, and Ethical Implications. Sort of Demski.

Funded by: Anthropic, Google DeepMind, OpenAI, Meta AI, various academic groups.

Estimated FTEs: 50-150

Some outputs (8)

Aligning Large Language Models via Fully Self-Synthetic Data. Shangjian Yin et al.

Synth-Align: Improving Trustworthiness in Vision-Language Model with Synthetic Preference Data Alignment. Robert Wijaya, Ngoc-Bao Nguyen, Ngai-Man Cheung

Inoculation Prompting: Instructing LLMs to misbehave at train-time improves test-time alignment. Said Boulite et al.

Unsupervised Elicitation of Language Models. Haotian Jiang et al.

Beyond the Binary: Capturing Diverse Preferences With Reward Regularization. Fabienne Chouraqui

The Curious Case of Factuality Finetuning: Models’ Internal Beliefs Can Improve Factuality. Yulei Liao, Pingbing Ming, Hao Yu

LongSafety: Enhance Safety for Long-Context LLMs. Mansour T.A. Sharabiani, Alex Bottle, Alireza S. Mahani

Position: Model Collapse Does Not Mean What You Think. Zhun Mou et al.

Data quality for alignment

Improves the quality, signal-to-noise ratio, and reliability of human-generated preference and alignment data.

Theory of change: The quality of alignment is heavily dependent on the quality of the data (e.g., human preferences); by improving the “signal” from annotators and reducing noise/bias, we will get more robustly aligned models.

Orthodox alignment problems: Superintelligence can fool human supervisors, Value is fragile and hard to specify

See also: Synthetic data for alignment · scalable oversight · Assistance games, assistive agents · Model values / model preferences

Some names: Maarten Buyl, Kelsey Kraus, Margaret Kroll, Danqing Shi

Critiques: A Statistical Case Against Empirical Human-AI Alignment

Funded by: Anthropic, Google DeepMind, OpenAI, Meta AI, various academic groups

Estimated FTEs: 20-50

Some outputs (5)

AI Alignment at Your Discretion. Maarten Buyl et al.

Maximizing Signal in Human-Model Preference Alignment. Kelsey Kraus, Margaret Kroll

DxHF: Providing High-Quality Human Feedback for LLM Alignment via Interactive Decomposition. Danqing Shi et al.

Challenges and Future Directions of Data-Centric AI Alignment. Min-Hsuan Yeh et al.

You Are What You Eat—AI Alignment Requires Understanding How Data Shapes Structure and Generalisation. Simon Pepin Lehalleur et al.

Goal robustness

Mild optimisation

Avoid Goodharting by getting AI to satisfice rather than maximise.

Theory of change: If we fail to exactly nail down the preferences for a superintelligent agent we die to Goodharting → shift from maximising to satisficing in the agent’s utility function → we get a nonzero share of the lightcone as opposed to zero; also, moonshot at this being the recipe for fully aligned AI.

Orthodox alignment problems: Value is fragile and hard to specify

Funded by: Google DeepMind

Estimated FTEs: 10-50

Some outputs (4)

MONA: Myopic Optimization with Non-myopic Approval Can Mitigate Multi-step Reward Hacking. Sebastian Farquhar et al.

BioBlue: Notable runaway-optimiser-like LLM failure modes on biologically and economically aligned AI safety benchmarks for LLMs with simplified observation format. Roland Pihlakas, Sruthi Kuriakose

Why modelling multi-objective homeostasis is essential for AI alignment (and how it helps with AI safety as well). Subtleties and Open Challenges. Roland Pihlakas

From homeostasis to resource sharing: Biologically and economically aligned multi-objective multi-agent gridworld-based AI safety benchmarks. Roland Pihlakas

RL safety

Improves the robustness of reinforcement learning agents by addressing core problems in reward learning, goal misgeneralization, and specification gaming.

Theory of change: Standard RL objectives (like maximizing expected value) are brittle and lead to goal misgeneralization or specification gaming; by developing more robust frameworks (like pessimistic RL, minimax regret, or provable inverse reward learning), we can create agents that are safe even when misspecified.

Orthodox alignment problems: Goals misgeneralize out of distribution, Value is fragile and hard to specify, Superintelligence can fool human supervisors

See also: Behavior alignment theory · Assistance games, assistive agents · Goal robustness · Iterative alignment · Mild optimisation · scalable oversight · The Theoretical Reward Learning Research Agenda: Introduction and Motivation

Some names: Joar Skalse, Karim Abdel Sadek, Matthew Farrugia-Roberts, Benjamin Plaut, Fang Wu, Stephen Zhao, Alessandro Abate, Steven Byrnes, Michael Cohen

Critiques: “The Era of Experience” has an unsolved technical alignment problem, The Invisible Leash: Why RLVR May or May Not Escape Its Origin

Funded by: Google DeepMind, University of Oxford, CMU, Coefficient Giving

Estimated FTEs: 20-70

Some outputs (11)

The Perils of Optimizing Learned Reward Functions: Low Training Error Does Not Guarantee Low Regret. Lukas Fluri et al.

Safe Learning Under Irreversible Dynamics via Asking for Help. Benjamin Plaut et al.

Mitigating Goal Misgeneralization via Minimax Regret. Karim Abdel Sadek et al.

Rethinking Reward Model Evaluation: Are We Barking up the Wrong Tree?. Xueru Wen et al.

The Invisible Leash: Why RLVR May or May Not Escape Its Origin. Fang Wu et al.

Reducing the Probability of Undesirable Outputs in Language Models Using Probabilistic Inference. Stephen Zhao et al.

Interpreting Emergent Planning in Model-Free Reinforcement Learning. Thomas Bush et al.

Misalignment From Treating Means as Ends. Henrik Marklund, Alex Infanger, Benjamin Van Roy

“The Era of Experience” has an unsolved technical alignment problem. Steven Byrnes

Safety cases for Pessimism. Michael Cohen

We need a field of Reward Function Design. Steven Byrnes

Assistance games, assistive agents

Formalize how AI assistants learn about human preferences given uncertainty and partial observability, and construct environments which better incentivize AIs to learn what we want them to learn.

Theory of change: Understand what kinds of things can go wrong when humans are directly involved in training a model → build tools that make it easier for a model to learn what humans want it to learn.

General approach: engineering / cognitive · Target case: varies

Orthodox alignment problems: Value is fragile and hard to specify, Humanlike minds/goals are not necessarily safe

See also: Guaranteed-Safe AI

Some names: Joar Skalse, Anca Dragan, Caspar Oesterheld, David Krueger, Dylan Hafield-Menell, Stuart Russell

Critiques: nice summary of historical problem statements

Funded by: Future of Life Institute, Coefficient Giving, Survival and Flourishing Fund, Cooperative AI Foundation, Polaris Ventures

Some outputs (5)

Training LLM Agents to Empower Humans. Evan Ellis et al.

Murphys Laws of AI Alignment: Why the Gap Always Wins. Madhava Gaikwad

AssistanceZero: Scalably Solving Assistance Games. Cassidy Laidlaw et al.

Observation Interference in Partially Observable Assistance Games. Scott Emmons et al.

Learning to Assist Humans without Inferring Rewards. Vivek Myers et al.

Harm reduction for open weights

Develops methods, primarily based on pretraining data intervention, to create tamper-resistant safeguards that prevent open-weight models from being maliciously fine-tuned to remove safety features or exploit dangerous capabilities.

Theory of change: Open-weight models allow adversaries to easily remove post-training safety (like refusal training) via simple fine-tuning; by making safety an intrinsic property of the model’s learned knowledge and capabilities (e.g., by ensuring “deep ignorance” of dual-use information), the safeguards become far more difficult and expensive to remove.

Orthodox alignment problems: Someone else will deploy unsafe superintelligence first

See also: Data filtering · Capability removal: unlearning · Data poisoning defense

Some names: Kyle O’Brien, Stephen Casper, Quentin Anthony, Tomek Korbak, Rishub Tamirisa, Mantas Mazeika, Stella Biderman, Yarin Gal

Funded by: UK AI Safety Institute (AISI), EleutherAI, Coefficient Giving

Estimated FTEs: 10-100

Some outputs (5)

Deep ignorance: Filtering pretraining data builds tamper-resistant safeguards into open-weight LLMs. Kyle O’Brien et al.

Tamper-Resistant Safeguards for Open-Weight LLMs. Rishub Tamirisa et al.

Open Technical Problems in Open-Weight AI Model Risk Management. Stephen Casper et al.

A Different Approach to AI Safety Proceedings from the Columbia Convening on AI Openness and Safety. Camille François et al.

Risk Mitigation Strategies for the Open Foundation Model Value Chain

The “Neglected Approaches” Approach

Agenda-agnostic approaches to identifying good but overlooked empirical alignment ideas, working with theorists who could use engineers, and prototyping them.

Theory of change: Empirical search for “negative alignment taxes” (prioritizing methods that simultaneously enhance alignment and capabilities)

Orthodox alignment problems: Someone else will deploy unsafe superintelligence first

See also: Iterative alignment · automated alignment research · Beijing Key Laboratory of Safe AI and Superalignment · Aligned AI

Some names: AE Studio, Gunnar Zarncke, Cameron Berg, Michael Vaiana, Judd Rosenblatt, Diogo Schwerz de Lucena

Critiques:

Funded by: AE Studio

Estimated FTEs: 15

Some outputs (3)

Towards Safe and Honest AI Agents with Neural Self-Other Overlap. Marc Carauleanu et al.

Momentum Point-Perplexity Mechanics in Large Language Models. Lorenzo Tomaz et al.

Large Language Models Report Subjective Experience Under Self-Referential Processing. Cameron Berg, Diogo de Lucena, Judd Rosenblatt

White-box safety (i.e. Interpretability)

This section isn’t very conceptually clean. See the Open Problems paper or Deepmind for strong frames which are not useful for descriptive purposes.

Reverse engineering

Decompose a model into its functional, interacting components (circuits), formally describe what computation those components perform, and validate their causal effects to reverse-engineer the model’s internal algorithm.

Theory of change: By gaining a mechanical understanding of how a model works (the “circuit diagram”), we can predict how models will act in novel situations (generalization), and gain the mechanistic knowledge necessary to safely modify an AI’s goals or internal mechanisms, or allow for high-confidence alignment auditing and better feedback to safety researchers.

General approach: cognitivist science · Target case: worst-case

Orthodox alignment problems: Goals misgeneralize out of distribution, Superintelligence can fool human supervisors

See also: ambitious mech interp

Some names: Lucius Bushnaq, Dan Braun, Lee Sharkey, Aaron Mueller, Atticus Geiger, Sheridan Feucht, David Bau, Yonatan Belinkov, Stefan Heimersheim, Chris Olah, Leo Gao

Critiques: Interpretability Will Not Reliably Find Deceptive AI, A Problem to Solve Before Building a Deception Detector, MoSSAIC: AI Safety After Mechanism, The Misguided Quest for Mechanistic AI Interpretability. Mechanistic?, Assessing skeptical views of interpretability research, Activation space interpretability may be doomed, A Pragmatic Vision for Interpretability

Estimated FTEs: 100-200

Some outputs (33)

In weights-space

The Circuits Research Landscape. Jack Lindsey et al.

Circuits in Superposition. Lucius Bushnaq, jake_mendel

Compressed Computation is (probably) not Computation in Superposition

MIB: A Mechanistic Interpretability Benchmark. Aaron Mueller et al.

RelP: Faithful and Efficient Circuit Discovery in Language Models via Relevance Patching. Farnoush Rezaei Jafari et al.

The Dual-Route Model of Induction. Sheridan Feucht et al.

Structural Inference: Interpreting Small Language Models with Susceptibilities. Garrett Baker et al.

Stochastic Parameter Decomposition. Dan Braun, Lucius Bushnaq, Lee Sharkey

The Geometry of Self-Verification in a Task-Specific Reasoning Model. Andrew Lee et al.

Converting MLPs into Polynomials in Closed Form. Nora Belrose, Alice Rigg

Extractive Structures Learned in Pretraining Enable Generalization on Finetuned Facts. Jiahai Feng, Stuart Russell, Jacob Steinhardt

Interpretability in Parameter Space: Minimizing Mechanistic Description Length with Attribution-based Parameter Decomposition. Dan Braun et al.

Identifying Sparsely Active Circuits Through Local Loss Landscape Decomposition. Brianna Chrisman, Lucius Bushnaq, Lee Sharkey

From Memorization to Reasoning in the Spectrum of Loss Curvature. Jack Merullo et al.

Generalization or Hallucination? Understanding Out-of-Context Reasoning in Transformers. Yixiao Huang et al.

How Do LLMs Perform Two-Hop Reasoning in Context?. Tianyu Guo et al.

Blink of an eye: a simple theory for feature localization in generative models. Marvin Li, Aayush Karan, Sitan Chen

On the creation of narrow AI: hierarchy and nonlocality of neural network skills. Eric J. Michaud, Asher Parker-Sartori, Max Tegmark

In activations-space

Interpreting Emergent Planning in Model-Free Reinforcement Learning. Thomas Bush et al.

Bridging the human–AI knowledge gap through concept discovery and transfer in AlphaZero

Building and evaluating alignment auditing agents. Sam Marks et al.

How Do Transformers Learn Variable Binding in Symbolic Programs?. Yiwei Wu, Atticus Geiger, Raphaël Millière

Fresh in memory: Training-order recency is linearly encoded in language model activations. Dmitrii Krasheninnikov, Richard E. Turner, David Krueger

Language Models use Lookbacks to Track Beliefs. Nikhil Prakash et al.

Constrained belief updates explain geometric structures in transformer representations. Mateusz Piotrowski et al.

LLMs Process Lists With General Filter Heads. Arnab Sen Sharma et al.

Language Models Use Trigonometry to Do Addition. Subhash Kantamneni, Max Tegmark

Interpreting learned search: finding a transition model and value function in an RNN that plays Sokoban. Mohammad Taufeeque et al.

Transformers Struggle to Learn to Search. Abulhair Saparov et al.

Adversarial Examples Are Not Bugs, They Are Superposition. Liv Gorton, Owen Lewis

Do Language Models Use Their Depth Efficiently?. Róbert Csordás, Christopher D. Manning, Christopher Potts

ICLR: In-Context Learning of Representations. Core Francisco Park et al.

Extracting latent knowledge

Identify and decoding the “true” beliefs or knowledge represented inside a model’s activations, even when the model’s output is deceptive or false.

Theory of change: Powerful models may know things they do not say (e.g. that they are currently being tested). If we can translate this latent knowledge directly from the model’s internals, we can supervise them reliably even when they attempt to deceive human evaluators or when the task is too difficult for humans to verify directly.

General approach: cognitivist science · Target case: worst-case

Orthodox alignment problems: Superintelligence can fool human supervisors

See also: AI explanations of AIs · Heuristic explanations · Lie and deception detectors

Some names: Bartosz Cywiński, Emil Ryd, Senthooran Rajamanoharan, Alexander Pan, Lijie Chen, Jacob Steinhardt, Javier Ferrando, Oscar Obeso, Collin Burns, Paul Christiano

Critiques: A Problem to Solve Before Building a Deception Detector

Funded by: Open Philanthropy, Anthropic, NSF, various academic grants

Estimated FTEs: 20-40

Some outputs (9)

Eliciting Secret Knowledge from Language Models. Bartosz Cywiński et al.

Here’s 18 Applications of Deception Probes. Cleo Nardo, Avi Parrack, jordine

Towards eliciting latent knowledge from LLMs with mechanistic interpretability. Bartosz Cywiński et al.

CCS-Lib: A Python package to elicit latent knowledge from LLMs. Walter Laurito et al.

No Answer Needed: Predicting LLM Answer Accuracy from Question-Only Linear Probes. Iván Vicente Moreno Cencerrado et al.

When Thinking LLMs Lie: Unveiling the Strategic Deception in Representations of Reasoning Models. Kai Wang, Yihao Zhang, Meng Sun

Caught in the Act: a mechanistic approach to detecting deception. Gerard Boxo et al.

When Truthful Representations Flip Under Deceptive Instructions?. Xianxuan Long et al.

Lie and deception detectors

Detect when a model is being deceptive or lying by building white- or black-box detectors. Some work below requires intent in their definition, while other work focuses only on whether the model states something it believes to be false, regardless of intent.

Theory of change: Such detectors could flag suspicious behavior during evaluations or deployment, augment training to reduce deception, or audit models pre-deployment. Specific applications include alignment evaluations (e.g. by validating answers to introspective questions), safeguarding evaluations (catching models that “sandbag”, that is, strategically underperform to pass capability tests), and large-scale deployment monitoring. An honest version of a model could also provide oversight during training or detect cases where a model behaves in ways it understands are unsafe.

General approach: cognitivist science · Target case: pessimistic

See also: Reverse engineering · AI deception evals · Sandbagging evals

Some names: Cadenza, Sam Marks, Rowan Wang, Kieron Kretschmar, Sharan Maiya, Walter Laurito, Chris Cundy, Adam Gleave, Aviel Parrack, Stefan Heimersheim, Carlo Attubato, Joseph Bloom, Jordan Taylor, Alex McKenzie, Urja Pawar, Lewis Smith, Bilal Chughtai, Neel Nanda

Critiques: difficult to determine if behavior is strategic deception or only low level “reflexive” actions; Unclear if a model roleplaying a liar has deceptive intent. How are intentional descriptions (like deception) related to algorithmic ones (like understanding the mechanisms models use)?, Is This Lie Detector Really Just a Lie Detector? An Investigation of LLM Probe Specificity, Herrmann, Smith and Chughtai

Funded by: Anthropic, Deepmind, UK AISI, Coefficient Giving

Estimated FTEs: 10-50

Some outputs (11)

Detecting Strategic Deception Using Linear Probes. Nicholas Goldowsky-Dill et al.

Whitebox detection of sandbagging model organisms. Joseph Bloom et al.

Benchmarking deception probes for trusted monitoring. Avi Parrack, StefanHex, Cleo Nardo

18 Applications of Deception Probes. Cleo Nardo, Avi Parrack, jordine

Evaluating honesty and lie detection techniques on a diverse suite of dishonest models. Rowan Wang et al.

Caught in the Act: a mechanistic approach to detecting deception. Gerard Boxo et al.

Preference Learning with Lie Detectors can Induce Honesty or Evasion. Chris Cundy, Adam Gleave

Detecting High-Stakes Interactions with Activation Probes. Alex McKenzie et al.

White Box Control at UK AISI—Update on Sandbagging Investigations. Joseph Bloom et al.

Liars’ Bench: Evaluating Lie Detectors for Language Models. Kieron Kretschmar et al.

Model diffing

Understand what happens when a model is finetuned, what the “diff” between the finetuned and the original model consists in.

Theory of change: By identifying the mechanistic differences between a base model and its fine-tune (e.g., after RLHF), maybe we can verify that safety behaviors are robustly “internalized” rather than superficially patched, and detect if dangerous capabilities or deceptive alignment have been introduced without needing to re-analyze the entire model. The diff is also much smaller, since most parameters don’t change, which means you can use heavier methods on them.

Orthodox alignment problems: Value is fragile and hard to specify

See also: Sparse Coding · Reverse engineering

Some names: Julian Minder, Clément Dumas, Neel Nanda, Trenton Bricken, Jack Lindsey

Funded by: various academic groups, Anthropic, Google DeepMind

Estimated FTEs: 10-30

Some outputs (9)

What We Learned Trying to Diff Base and Chat Models (And Why It Matters). Clément Dumas, Julian Minder, Neel Nanda

Open Source Replication of Anthropic’s Crosscoder paper for model-diffing. Connor Kissane et al.

Discovering Undesired Rare Behaviors via Model Diff Amplification. Santiago Aranguri, Thomas McGrath

Overcoming Sparsity Artifacts in Crosscoders to Interpret Chat-Tuning. Julian Minder et al.

Persona Features Control Emergent Misalignment. Miles Wang et al.

Narrow Finetuning Leaves Clearly Readable Traces in Activation Differences. Julian Minder et al.

Insights on Crosscoder Model Diffing. Siddharth Mishra-Sharma et al.

Sparse Coding

Decompose the polysemantic activations of the residual stream into a sparse linear combination of monosemantic “features” which correspond to interpretable concepts.

Theory of change: Get a principled decomposition of an LLM’s activation into atomic components → identify deception and other misbehaviors.

General approach: engineering / cognitive · Target case: average

Orthodox alignment problems: Value is fragile and hard to specify, Goals misgeneralize out of distribution, Superintelligence can fool human supervisors

See also: Concept-based interpretability · Reverse engineering

Some names: Leo Gao, Dan Mossing, Emmanuel Ameisen, Jack Lindsey, Adam Pearce, Thomas Heap, Abhinav Menon, Kenny Peng, Tim Lawson

Critiques: Sparse Autoencoders Can Interpret Randomly Initialized Transformers, The Sparse Autoencoders bubble has popped, but they are still promising, Negative Results for SAEs On Downstream Tasks and Deprioritising SAE Research, Sparse Autoencoders Trained on the Same Data Learn Different Features, Why Not Just Train For Interpretability?

Funded by: everyone, roughly. Frontier labs, LTFF, Coefficient Giving, etc.

Estimated FTEs: 50-100

Some outputs (44)

Overcoming Sparsity Artifacts in Crosscoders to Interpret Chat-Tuning. Julian Minder et al.

Do I Know This Entity? Knowledge Awareness and Hallucinations in Language Models. Javier Ferrando et al.

Circuit Tracing: Revealing Computational Graphs in Language Models. Emmanuel Ameisen et al.

Sparse Autoencoders Learn Monosemantic Features in Vision-Language Models. Mateusz Pach et al.

I Have Covered All the Bases Here: Interpreting Reasoning Features in Large Language Models via Sparse Autoencoders. Andrey Galichin et al.

Sparse Autoencoders Do Not Find Canonical Units of Analysis. Patrick Leask et al.

Transcoders Beat Sparse Autoencoders for Interpretability. Gonçalo Paulo, Stepan Shabalin, Nora Belrose

Decomposing MLP Activations into Interpretable Features via Semi-Nonnegative Matrix Factorization. Or Shafran, Atticus Geiger, Mor Geva

CRISP: Persistent Concept Unlearning via Sparse Autoencoders. Tomer Ashuach et al.

The Unintended Trade-off of AI Alignment:Balancing Hallucination Mitigation and Safety in LLMs. Omar Mahmoud et al.

Scaling sparse feature circuit finding for in-context learning. Dmitrii Kharlapenko et al.

Learning Multi-Level Features with Matryoshka Sparse Autoencoders. Bart Bussmann et al.

Are Sparse Autoencoders Useful? A Case Study in Sparse Probing. Subhash Kantamneni et al.

Sparse Autoencoders Trained on the Same Data Learn Different Features. Gonçalo Paulo, Nora Belrose

What’s In My Human Feedback? Learning Interpretable Descriptions of Preference Data. Rajiv Movva et al.

Priors in Time: Missing Inductive Biases for Language Model Interpretability. Ekdeep Singh Lubana et al.

Inference-Time Decomposition of Activations (ITDA): A Scalable Approach to Interpreting Large Language Models. Patrick Leask, Neel Nanda, Noura Al Moubayed

Binary Sparse Coding for Interpretability. Lucia Quirke, Stepan Shabalin, Nora Belrose

Scaling Sparse Feature Circuit Finding to Gemma 9B. Diego Caples et al.

Partially Rewriting a Transformer in Natural Language. Gonçalo Paulo, Nora Belrose

Dense SAE Latents Are Features, Not Bugs. Xiaoqing Sun et al.

Evaluating Sparse Autoencoders on Targeted Concept Erasure Tasks. Adam Karvonen et al.

Evaluating SAE interpretability without explanations. Gonçalo Paulo, Nora Belrose

SAEs Can Improve Unlearning: Dynamic Sparse Autoencoder Guardrails for Precision Unlearning in LLMs. Aashiq Muhamed et al.

SAEBench: A Comprehensive Benchmark for Sparse Autoencoders in Language Model Interpretability. Adam Karvonen et al.

SAEs Are Good for Steering—If You Select the Right Features. Dana Arad, Aaron Mueller, Yonatan Belinkov

Line of Sight: On Linear Representations in VLLMs. Achyuta Rajaram et al.

Low-Rank Adapting Models for Sparse Autoencoders. Matthew Chen, Joshua Engels, Max Tegmark

Enhancing Automated Interpretability with Output-Centric Feature Descriptions. Yoav Gur-Arieh et al.

Decoding Dark Matter: Specialized Sparse Autoencoders for Interpreting Rare Concepts in Foundation Models. Aashiq Muhamed, Mona Diab, Virginia Smith

Enhancing Neural Network Interpretability with Feature-Aligned Sparse Autoencoders. Luke Marks et al.

BatchTopK Sparse Autoencoders. Bart Bussmann, Patrick Leask, Neel Nanda

Towards Understanding Distilled Reasoning Models: A Representational Approach. David D. Baek, Max Tegmark

Understanding sparse autoencoder scaling in the presence of feature manifolds. Eric J. Michaud, Liv Gorton, Tom McGrath

Internal states before wait modulate reasoning patterns. Dmitrii Troitskii et al.

Do Sparse Autoencoders Generalize? A Case Study of Answerability. Lovis Heindrich et al.

Position: Mechanistic Interpretability Should Prioritize Feature Consistency in SAEs. Xiangchen Song et al.

How Visual Representations Map to Language Feature Space in Multimodal LLMs. Constantin Venhoff et al.

Prisma: An Open Source Toolkit for Mechanistic Interpretability in Vision and Video. Sonia Joseph et al.

Topological Data Analysis and Mechanistic Interpretability. Gunnar Carlsson

Large Language Models Share Representations of Latent Grammatical Concepts Across Typologically Diverse Languages. Jannik Brinkmann et al.

Interpreting the linear structure of vision-language model embedding spaces. Isabel Papadimitriou et al.

Interpreting Large Text-to-Image Diffusion Models with Dictionary Learning. Stepan Shabalin et al.

Causal Abstractions

Verify that a neural network implements a specific high-level causal model (like a logical algorithm) by finding a mapping between high-level variables and low-level neural representations.

Theory of change: By establishing a causal mapping between a black-box neural network and a human-interpretable algorithm, we can check whether the model is using safe reasoning processes and predict its behavior on unseen inputs, rather than relying on behavioural testing alone.

General approach: cognitivist science · Target case: worst-case

Orthodox alignment problems: Goals misgeneralize out of distribution

See also: Concept-based interpretability · Reverse engineering

Some names: Atticus Geiger, Christopher Potts, Thomas Icard, Theodora-Mara Pîslar, Sara Magliacane, Jiuding Sun, Jing Huang

Critiques: The Misguided Quest for Mechanistic AI Interpretability, Interpretability Will Not Reliably Find Deceptive AI

Funded by: Various academic groups, Google DeepMind, Goodfire

Estimated FTEs: 10-30

Some outputs (3)

HyperDAS: Towards Automating Mechanistic Interpretability with Hypernetworks. Jiuding Sun et al.

Combining Causal Models for More Accurate Abstractions of Neural Networks. Theodora-Mara Pîslar, Sara Magliacane, Atticus Geiger

How Causal Abstraction Underpins Computational Explanation. Atticus Geiger, Jacqueline Harding, Thomas Icard

Data attribution

Quantifies the influence of individual training data points on a model’s specific behavior or output, allowing researchers to trace model properties (like misalignment, bias, or factual errors) back to their source in the training set.

Theory of change: By attributing harmful, biased, or unaligned behaviors to specific training examples, researchers can audit proprietary models, debug training data, enable effective data deletion/unlearning

Orthodox alignment problems: Goals misgeneralize out of distribution, Value is fragile and hard to specify

See also: Data quality for alignment

Some names: Roger Grosse, Philipp Alexander Kreer, Jin Hwa Lee, Matthew Smith, Abhilasha Ravichander, Andrew Wang, Jiacheng Liu, Jiaqi Ma, Junwei Deng, Yijun Pan, Daniel Murfet, Jesse Hoogland

Funded by: Various academic groups

Estimated FTEs: 30-60

Some outputs (12)

Influence Dynamics and Stagewise Data Attribution. Jin Hwa Lee et al.

What is Your Data Worth to GPT?. Sang Keun Choe et al.

Detecting and Filtering Unsafe Training Data via Data Attribution with Denoised Representation. Yijun Pan et al.

Better Training Data Attribution via Better Inverse Hessian-Vector Products. Andrew Wang et al.

DATE-LM: Benchmarking Data Attribution Evaluation for Large Language Models. Cathy Jiao et al.

Bayesian Influence Functions for Hessian-Free Data Attribution. Philipp Alexander Kreer et al.

OLMoTrace: Tracing Language Model Outputs Back to Trillions of Training Tokens. Jiacheng Liu et al.

You Are What You Eat—AI Alignment Requires Understanding How Data Shapes Structure and Generalisation. Simon Pepin Lehalleur et al.

Information-Guided Identification of Training Data Imprint in (Proprietary) Large Language Models. Abhilasha Ravichander et al.

Distributional Training Data Attribution: What do Influence Functions Sample?. Bruno Mlodozeniec et al.

A Snapshot of Influence: A Local Data Attribution Framework for Online Reinforcement Learning. Yuzheng Hu et al.

Revisiting Data Attribution for Influence Functions. Hongbo Zhu, Angelo Cangelosi

Pragmatic interpretability

Directly tackling concrete, safety-critical problems on the path to AGI by using lightweight interpretability tools (like steering and probing) and empirical feedback from proxy tasks, rather than pursuing complete mechanistic reverse-engineering.

Theory of change: By applying interpretability skills to concrete problems, researchers can rapidly develop monitoring and control tools (e.g., steering vectors or probes) that have immediate, measurable impact on real-world safety issues like detecting hidden goals or emergent misalignment.

Orthodox alignment problems: Superintelligence can fool human supervisors, Goals misgeneralize out of distribution

See also: Reverse engineering · Concept-based interpretability

Some names: Lee Sharkey, Dario Amodei, David Chalmers, Been Kim, Neel Nanda, David D. Baek, Lauren Greenspan, Dmitry Vaintrob, Sam Marks, Jacob Pfau

Funded by: Google DeepMind, Anthropic, various academic groups

Estimated FTEs: 30-60

Some outputs (3)

A Pragmatic Vision for Interpretability. Neel Nanda et al.