Sure, but he hasn’t laid out the argument. “something something simulation acausal trade” isn’t a motivation.

No77e

I’d like to know what your motivations are for doing what you’re doing! In the first podcast you hinted at “weird reasons” but didn’t spell them out in the end. I’m thinking about this quote:

Yeah, maybe a general question here is: I engage in recruiting sometimes and sometimes people are like, “So why should I work at Redwood Research, Buck?” And I’m like, “Well, I think it’s good for reducing AI takeover risk and perhaps making some other things go better.” And I feel a little weird about the fact that actually my motivation is in some sense a pretty weird other thing.

We love Claude, Claude is frankly a more responsible, ethical, wise agent than we are at this point, plus we have to worry that a human is secretly scheming whereas with Claude we are pretty sure it isn’t; therefore, we aren’t even trying to hide the fact that Claude is basically telling us all what to do and we are willingly obeying—in fact, we are proud of it.

My best guess is that this would be OK

My own felt sense, as an outsider, is that the pessimists look more ideological/political and fervent than the relatively normal-looking labs. Within the essay’s own frame, the “catastrophe brought about with good intent” could just as easily be halting AI progress, along with the political means used to achieve that.

by an ex-lab employee

How do we know this is true?

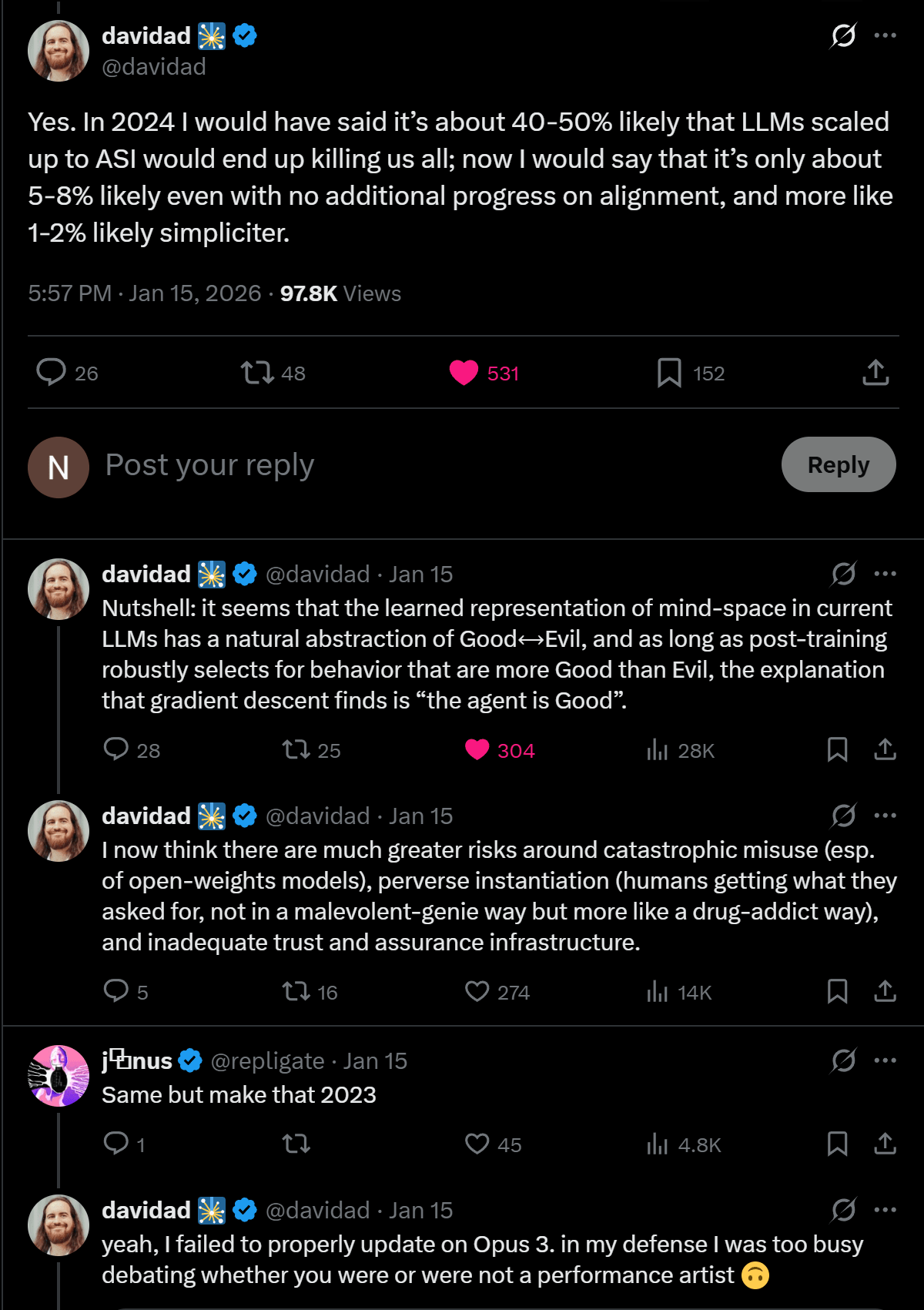

People seemed confused by my take here, but it’s the same take Davidad expressed in this thread that has been making the rounds: https://x.com/davidad/status/2011845180484133071

it fails to produce a readable essay

Do you just dislike the writing style, or do you think it’s seriously “unreadable” in some sense?

If more people were egoistic in such a forward-looking way, the world would be better off for it.

It would be really, really helpful if the discussion weren’t so meta. Everyone seems to take for granted that Trump did Something really, really worrying, but no one says what it is. What is that something, and why does it worry you so much?

Yeah, maybe a general question here is: I engage in recruiting sometimes and sometimes people are like, “So why should I work at Redwood Research, Buck?” And I’m like, “Well, I think it’s good for reducing AI takeover risk and perhaps making some other things go better.” And I feel a little weird about the fact that actually my motivation is in some sense a pretty weird other thing.

I would definitely like to know what this weird other thing is exactly. You only hinted at it in the podcast!

My main guess at why you’re talking past each other is that you consider it far more likely than they do that ASI results in human extinction or some other nefarious outcome. They think it’s something like 10% to 40% likely. They also probably expect the transition to be gradual enough for humans to augment themselves and keep up with AIs cognitively. And, sure, many things can happen, including property rights losing their meaning. But under this view, it’s not that crazy that property rights continue to be respected and enforced: human norms will have a clear, unbroken lineage.

That account is notorious on X for making things up. It doesn’t even try to make them believable. I would disregard anything coming from it.

This post is at 47 upvotes, and no one has said it’s crazy. LessWrong, please get your act together.

Hmm, part of the reason I asked is that the reasoning in your comment is the kind of cognitive process that tends to exhaust me when I have to work through it. It somehow coincides with me being more neurotic overall. So, basically, you think through all that explicit stuff about social life, and you don’t feel even a little pang of psychological pain or exhaustion? The very first phrase (“a huge part of...”) reads like my own thoughts when I’m ruminating about this stuff.

Sorry if this is a little intrusive. I’m just kind of curious, besides fishing for insights from people who might have similar thought patterns.

Do you struggle with feelings of isolation? I do sometimes, and I try to fix that by accepting more social bids and proactively seeking out social life. Then I immediately pull back, because I get overwhelmed by social life very easily and it kinda colonizes my thought processes too much. So I’m kind of stuck in a loop of seeking more of it, pulling back, and then seeking more of it again...

Fragility of Value thesis and Orthogonality thesis both hold, for this type of agent.

...

E.g. its vision for a future utopia would actually be quite bad from our perspective because there’s some important value it lacks (such as diversity, or consent, or whatever)

I think we have enough evidence to say that, in practice, this turns out to be either very easy or moot. Values tend to cluster in LLMs (good with good and bad with bad; see the emergent misalignment results), so value fragility isn’t a hard problem.

What did Sam Altman do? Like, what are the top two things he did that make you say that? This may sound absolutely wild to you, but I’m still not convinced Sam Altman did anything bad. It’s possible, sure, but based on the depositions, it’s far from clear IMO.

Edit: unless you mean the for-profit conversion, but I understood you to be talking about the board drama specifically.

I’d be pretty unhappy if things stayed the same for me. I’d want at least some radical changes, like curing aging. Still, I’d definitely want to be able to decide for myself and not be forced into anything in particular (unless it’s really necessary for some reason, like saving my life, though hopefully it won’t be).

Yup, but the AIs are massively less likely to help create cruel content. There will be a huge asymmetry in what they’re willing to generate.

Imagine an Internet where half the population is Grant Sanderson (the creator of 3Blue1Brown). That’d be awesome. Grant Sanderson has the same incentives as anyone else to create cruel and false content, but he just doesn’t.

People are very worried about a future in which a lot of the Internet is AI-generated. I’m kinda not. So far, AIs are more truth-tracking and kinder than humans. I think the default (conditional on OK alignment) is that an Internet that includes a much higher population of AIs is a much better experience for humans than the current Internet, which is full of bullying and lies.

All such discussions hinge on AI being relatively aligned, though. Of course, an Internet full of misaligned AIs would be bad for humans, but the reason is human disempowerment, not any of the usual reasons people give for why such an Internet would be terrible.

We’re still in the part of AI 2027 that was easy to predict. They point this out themselves.