Latent variables for prediction markets: motivation, technical guide, and design considerations

I’ve been pushing for latent variables to be added to prediction markets, including by making a demo for how it could work. Roughly speaking, reflective latent variables allow you to specify joint probability distributions over a bunch of observed variables. However, this is a very abstract description which people tend to find quite difficult, which probably helps explain why the proposal hasn’t gotten much traction.

If you are familiar with finance, another way to think of latent variable markets is that they are sort of like an index fund for prediction markets, allowing you to make overall bets across multiple markets. (Though the way I’ve set them up in this post differs quite a bit from financial index funds.)

Now I’ve just had a meeting with some people working at Metaculus, and it helped me understand what sorts of questions people might have about latent variables for prediction markets. Therefore, I thought it would be a good idea to write this post, both so the people at Metaculus have it as a reference if they want to implement it, and so that others who might be interested can learn more.

As a further note, I have updated my demo with more descriptions. It now attempts to explain the meaning of the market (e.g. “The latent variable market works by having a continuum of possible latent outcomes, …”), as well as how different kinds of evidence might map to different predictions (e.g. “If you think you have seen evidence that narrows down the distribution of outcomes relative to the market beliefs, …”). Furthermore, I have updated the demo to be somewhat nicer and somewhat more realistic, though it is still somewhat crude. I would be interested in hearing more feedback in the comments.

I should note that if you are interested in implementing latent variables in practice for prediction markets, I am very interested in helping with that. I have an “Offers” section at the end of the post, where I offer advice, code and money for the project.

Use cases

There are a number of situations where I think latent variables are extremely helpful:

Detecting and adjusting for random measurement error

Abstracting over conceptually related yet distinct variables, and ranking the importance of different pieces of information

Better conditional markets

These are still quite abstract, so I am going to give some concrete examples of how they could apply. For this section, I will be treating latent variables “like magic”; I will describe the interface people use to interact with them, but I will be ignoring the mathematics underlying them. If you would like to learn about the math, skip to the technical guide.

Detecting and adjusting for random measurement error

Example: AI learning to code

Suppose you want to know whether AI will soon learn to code better than a human can. Maybe you can find four prediction markets on it with different criteria:

AI beats the best humans in a specific programming benchmark

AI beats the best humans in a programming competition

Scott Aaronson writes a blog post declaring that AI can now code better than a human can

The number of human software engineers according to some tracker has been reduced by 50%

Without latent variables: These all seem like reasonable proxies for whether AI has learned to code. However, it is unclear how accurate they all are. If the prediction markets give each of them a decades-wide distribution, but with a 5 year gap between each of the means, does that mean that:

They will all happen in a row, with precisely 5 years of gap each?

They will happen in more or less random order, as the gaps only account for a small amount of the variance?

Both of these are compatible with the prediction market distributions. The first case corresponds to the assumption of a 100% correlation between the different outcomes, while the latter case corresponds to the assumption of a 0% correlation between the different outcomes.

The way we should think of the market in practice depends very much on the degree of correlation. If they are highly correlated, we can take successes in benchmarks to be a fire alarm for imminent practical changes due to AI, and we can think of AI capabilities in a straightforward unidimensional way, where AI becomes better across a wide variety of areas over time. However, if they are not very correlated, then it suggests that we have poor concepts, bad resolution criteria, or are paying attention to the wrong things.

Realistically I bet it is going to be in between those extremes. Obviously the correlation is not going to be 0%, since all the markets depend on certain shared unknowns, like how difficult programming is going to be for AI, or how much investment the area is going to receive. However, it is also not going to be 100%, because each outcome also has unique unknowns. Bloggers regularly end up making a wrong call, benchmarks may turn out to be easier or harder than expected, and so on. Even seemingly solid measures like engineer count may fail, if the meaning of the software engineer job changes a lot. But knowing where exactly the degree of correlation falls between the extremes seems like an important question.
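To make the two extremes concrete, here is a minimal simulation sketch. It assumes four events whose dates are Gaussian with means 5 years apart and a standard deviation of 10 years, all sharing one pairwise correlation; the function name and the specific numbers are illustrative assumptions, not taken from any real market.

```python
import random

def fraction_in_order(correlation, gap_years=5.0, sd_years=10.0,
                      n_events=4, n_samples=20000, seed=0):
    """Estimate how often the events happen in the order of their means."""
    rng = random.Random(seed)
    shared_weight = correlation ** 0.5          # weight on the shared unknown
    unique_weight = (1.0 - correlation) ** 0.5  # weight on each event's own noise
    in_order = 0
    for _ in range(n_samples):
        shared = rng.gauss(0.0, 1.0)
        times = [
            i * gap_years
            + sd_years * (shared_weight * shared + unique_weight * rng.gauss(0.0, 1.0))
            for i in range(n_events)
        ]
        if times == sorted(times):
            in_order += 1
    return in_order / n_samples

print(fraction_in_order(1.0))  # perfectly correlated: always in order
print(fraction_in_order(0.0))  # independent: usually out of order
```

Every event has the same marginal distribution in both runs; only the correlation differs, which is exactly the information that separate markets cannot express.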

With latent variables: We can think of this as being a signal/noise problem. There is some signal you want to capture (AI coding capabilities). However, every proxy you can come up with for the signal has some noise.

Latent variables separate the signal and the noise. That is, you find (or create) a latent variable market that includes all of these outcomes, or more. This market contains a probability distribution for the noiseless/“true” version of the question, “When will AI code as well as humans do?”. Furthermore, it also contains conditional distributions, such as P(When will AI beat the best humans at a specific programming benchmark? | When will AI code as well as humans do?).

How does the market know what the distributions will be? It spreads the task out to its participants:

Maybe you are very familiar with the technical intricacies of the programming ability benchmarks, so you know the plausibility that there will be shortcuts to them. However, you don’t know very much about when AI will learn to code better than humans can. But fear not—you can make conditional predictions, where you get to assume the time that AI will learn to code better than humans can, and then you can answer when you think the benchmark will be solved relative to that.

Maybe you are a capabilities researcher, and have thought up a new ingenious solution for how to make AI that is capable of programming. However you don’t know much about the benchmarks or about Scott Aaronson. But you can just make a prediction on the overall question of “When will AI code as well as humans do?”, and the market structure will automatically handle the rest.

Of course this is just a vague qualitative explanation. See the technical guide for more.

This sort of market also lets you learn something about the amount of measurement error there is. For instance, if the market says that all of your indicators are almost entirely independent, then that implies that there is too much measurement error for them to be useful. On the other hand, if the market says that they are closely correlated, then that seems to suggest that you don’t have much to worry about with regard to measurement error. If you care, then you can use this information to figure out how many indicators are needed to make the resolution of the overall question quite objective (though latent variable markets work even if there is so much noise that the answer to the overall question is subjective).

Summary on measurement error

In prediction markets, you have to specify fairly objective outcomes, so that people can use those outcomes for predictions. However, these objective outcomes might be imperfect representations of what you really intended to learn about.

If you are betting on the markets, this can lead to the actual resolution of a question being the opposite of what the resolution should have been in spirit. Furthermore, since you can know ahead of time that this might happen, this incentivizes you to make less confident predictions, as you cannot rely on the resolution being correct. If someone else wishes to use these predicted probabilities in practice, they can’t take the predictions at face value, but have to take distortions in the market predictions into account.

Latent variables reduce this problem by having multiple resolution criteria, and then separating the distribution of the true underlying reality from the relationship between the underlying reality and the resolution criteria.

Abstracting over conceptually related yet distinct variables, and ranking the importance of different pieces of information

Example: Ukraine defense performance

Prediction markets contain various predictions about outcomes that seem relevant for Ukraine’s performance in the defense against Russia. For instance, Manifold Markets has the following questions:

Will there be Leopard (1 or 2) tanks in service in Ukraine by June 2023?

Will Belarus begin a land invasion of Ukraine from the north by the end of 2023?

etc.

Clearly these sorts of markets say something about Ukraine’s performance. However, also clearly, they are too narrow to be taken as The Final Truth About The War. Instead, they are components and indicators of how well things are going, in terms of military strength/government capacity, ally support, etc.

There’s in a sense an abstract aggregate of “all the things which might help or hurt Ukraine performance”, and when I look up predictions about the Ukraine war, I am mainly interested in the distribution of this abstract aggregate, not so much in the specific questions, because it is the abstract aggregate that tells me what to expect with many other questions further out in the future. (Maybe if you were Ukrainian, this would be somewhat different, e.g. someone who has a lot of hryvnia might care a lot about hyperinflation.)

Without latent variables: We can look up the probabilities for these questions, and they give us some idea of how well it is going for Ukraine overall. However, these probabilities don’t characterize abstract things about Ukraine’s performance very well. For instance, they cannot distinguish between the following two possibilities:

The war is going to have an absolute winner, but we don’t know who. Either Russia is going to absolutely destroy Ukraine, or Ukraine’s defense is going to be overwhelmingly strong.

Everything about the war is going to be wishy-washy. Russia will win in some places, but Ukraine will win in other places.

Again, I bet it is going to be somewhere between the extremes, but I don’t know where on that continuum it will fall.

With latent variables: We can find a latent variable market that contains tons of questions about Ukraine’s defense performance. This allows you to get predictions for the entire range of possible outcomes, in a sense acting as an “index fund” for how well Ukraine will do. You can get predictions for what happens if Ukraine’s defense goes as well as expected, but also predictions for what happens if it goes realistically better than expected, or realistically worse than expected. You can even sample from the distribution, to get a list of scenarios with different outcomes.

The underlying principle is basically the same as for measurement error, but let’s take a couple of examples of how the betting works anyway.

At the beginning of the war, a lot of people thought Ukraine would fall rapidly. However, over time it became clearer that this wouldn’t be the case, e.g. when the Russian supply lines got stuck, or when Russia failed to get air superiority. When you observe such a thing, you might conclude that Russia is doing worse than expected, and therefore might want to make a bet that Ukraine will do better than expected, in an overall abstract sense, without worrying too much about what exactly “better” means. This is precisely the sort of thing latent variables assist with; using latent variables, you can make abstract predictions across tons of correlated variables.

On the other hand, you can also use your knowledge to add information about the link between the abstract and the concrete. For instance, maybe you know (or learn) something about Belarus that makes you conclude that they will only invade if they know Russia will win. In that case, you might bid up the chance of Belarus invading if Russia will win, but bid down the chance of Belarus invading if Ukraine will win.

The latent variable markets also tell you what to pay attention to. Realistically, some of the concrete variables are going to be very noisy. For instance, maybe we expect control of Crimea to flip multiple times, and that who exactly controls it depends on subtle issues of timing between strikes. In that case, maybe the market doesn’t think that control of Crimea will be all that correlated with other distant outcomes, and that would probably make me not pay very much attention to news about Crimea.

Summary of abstraction

Again, in prediction markets you have to specify fairly objective outcomes. In order to define an outcome objectively, it is helpful to make it about something concrete and narrow. However, you probably usually don’t care so much about concrete and narrow things—instead you care about things that have widespread, long-range implications.

This doesn’t mean prediction markets are useless, because concrete and narrow things will often be correlated with factors that have long-range implications. However, those correlations are often not perfect; there may be tons of contextual factors that matter for which things exactly happen, or there may be multiple ways of achieving the same result, or similar.

This can be addressed with abstraction; we can consider the concrete variables to be instances or indicators of some underlying trend. As long as these concrete variables are sufficiently correlated, this underlying trend becomes a useful way to think about and learn about the world. Latent variables provide a way to specify distributions involving this underlying trend.

Once you have this distribution, latent variables also provide a way of ranking different concrete variables by the degree to which they correlate with the latent variable. This might be useful, e.g. to figure out what things are worth paying attention to.

Better conditional markets

Example: Support of Taiwan in War

Without latent variables: There are strong tensions between China and Taiwan, to the point where China might go to war with Taiwan soon. However, it hasn’t happened yet. If China goes to war with Taiwan, we might want to know what will happen, e.g. whether the US will support Taiwan in the war, or whether the war will escalate to nukes, or various other questions.

To know what will happen under some condition, one can use conditional prediction markets. These markets only resolve when the condition is met, which should make their probability estimate match the actual conditional probability given the condition. So you could for instance have a resolution criterion of “If China declares war on Taiwan, will the US provide weapons to support Taiwan?”.

However, this sort of criterion could become a problem; for instance, Putin didn’t declare war on Ukraine when Russia invaded Ukraine, so maybe China also wouldn’t declare war on Taiwan. You could perhaps fix that by using a different criterion, but it is hard to do this correctly.

Also, even if you come up with a criterion that looks good now, what will you do if it starts to look worse later? For instance, maybe China at first looks like they would do conventional war, so you create a criterion based on this (say, based on the number of Chinese ships attacking Taiwan), but then later it looks like they will fire a nuke; what happens to your conditional market then?

With latent variables: You can use a latent variable to define war between China and Taiwan. This already solves part of the issue, as it deals with the measurement error and abstraction described in the previous sections.

But further, if the type of attack that China is likely to carry out on Taiwan changes, then the market for the latent variable will automatically update the criteria for the latent variable, and therefore you get automatic updates in the condition for the Taiwan market. People who make bets on the conditional market will also have more opportunity to notice that something fundamental has changed, because the parameters of the latent markets will have changed accordingly.

Summary of markets conditional on latents

So as usual, prediction markets require you to specify objective criteria, which may be excessively narrow. This problem is compounded when you do conditional markets: you have to specify objective criteria not just for the outcome, but also for the condition in which the market should apply. Further, if things change so the condition is no longer relevant, you often have no way of changing the condition.

Latent variables assist with this. First, a latent variable can be used to adjust for measurement error or abstract criteria to be more generally applicable, as described in the previous section. But second, if things change so the previous condition no longer applies, latent variables will automatically update to address new conditions.

Technical guide

Prerequisites: This guide assumes that you are comfortable with probability theory, prediction markets and calculus. It also does not go into very much detail in describing what latent variables are, so I hope you got an intuition for them in the previous text.

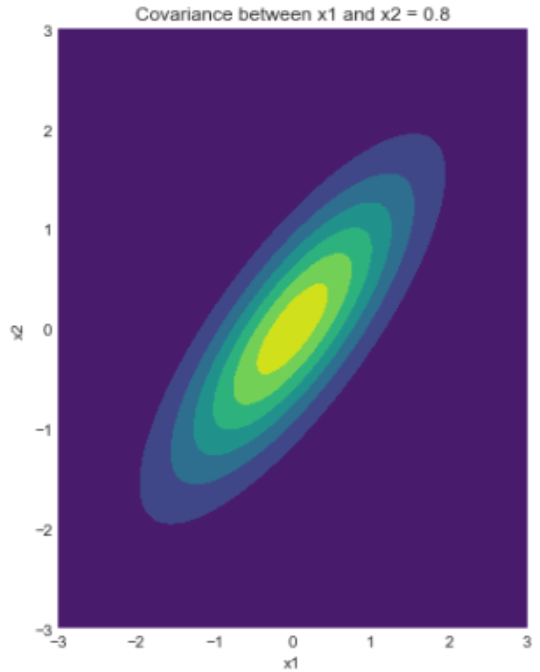

In their simplest incarnation, reflective latent variables are a way of factoring a joint probability distribution . Here, are known as the indicators of the latent variable, and in prediction markets they would be the concrete markets such as “Will Ukraine have control over Crimea by the end of 2023?” that have been mentioned above. If you are familiar with Bayesian networks, reflective latent variables correspond to the following Bayesian network:

Mathematically, the latent variable works by “pretending” that we have a new variable , and that the joint probability distribution can be factorized as

If is a discrete latent variable, the integral would be a sum.

A probability distribution like in principle has exponentially many degrees of freedom (one degree of freedom for each combination of the s), whereas a latent variable model factorizes it into having linearly many degrees of freedom (a constant amount of degrees of freedom for , plus a linear amount of degrees of freedom for ). I think this makes it feasible for users of a prediction market to get an overview of and make predictions on the latent variable.
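As a quick illustration of the counting argument, the sketch below compares the two parameter counts. It assumes binary indicators, a latent distribution with two parameters, and two parameters per indicator; those counts and the function names are assumptions chosen for illustration.

```python
def full_joint_dof(n_indicators):
    # One probability per combination of binary outcomes,
    # minus one because the probabilities must sum to 1.
    return 2 ** n_indicators - 1

def latent_model_dof(n_indicators, latent_params=2, params_per_indicator=2):
    # A constant number for the latent distribution,
    # plus a fixed number for each indicator's conditional distribution.
    return latent_params + params_per_indicator * n_indicators

for n in (4, 10, 20):
    print(n, full_joint_dof(n), latent_model_dof(n))
# 4 15 10
# 10 1023 22
# 20 1048575 42
```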

Types of latent variables

The main distinction between different kinds of latent variable models is whether the indicators $X_i$ and the latent variable $Z$ are continuous or discrete. This affects the models that can be used for $P(Z)$ and $P(X_i \mid Z)$, which has big implications for the use of the models. There are four commonly used types of latent variable models, based on this consideration:

| | $X_i$ is discrete | $X_i$ is continuous |
| --- | --- | --- |
| $Z$ is continuous | Item response theory (IRT) | Factor analysis (FA) |
| $Z$ is discrete | Latent class analysis (LCA) | Mixture model (MM) |

It seems like a prediction market should support both continuous and discrete indicators for latent variable models. Luckily, it is perfectly mathematically possible to mix and match, such that some indicators are treated as discrete (as implied by IRT and LCA), while other indicators are treated as continuous (as implied by FA and MM). This just requires a bit of a custom model, but I don’t think it seriously complicates the math, so that is not a big concern.

Rather, the important distinction is between models where the latent variable is continuous, versus models where the latent variable is discrete. Since there is only one latent variable in a given latent variable market, one cannot simply mix and match.[4] Basically, the differences between the two kinds of markets are:

In LCA and MM: People enumerate a set of scenarios they think could happen (e.g. “FOOM”, “slow takeoff”, “AGI is impossible” for AI markets, or “Ukraine will win”, “Russia will win”, “stalemate”, “nuclear war” for war markets), give conditional probabilities for what will happen in each of these scenarios, and give probabilities to each of the scenarios.

In IRT and FA: There is a continuum that people make predictions over (e.g. “now” until “in 100 years” for AI markets, or “strongly favoring Ukraine” to “strongly favoring Russia” for war markets), and people can give conditional probabilities for what will happen at any point along that continuum, as well as narrow down or expand the continuum to make predictions in a further range.

I think one benefit of LCA/MM is that it is likely much more intuitive to users. Sketching out different abstract scenarios seems like the standard way for people to make predictions. However, I think some benefits of IRT/FA are that many phenomena have an inherent continuity to them, as well as that they are more likely to update naturally with new evidence, and require fewer parameters. More on that in the Design section.

Implementation for item response theory

My implementation of latent variable markets used item response theory, so it is item response theory that I have most thoroughly thought through the math for. I think a lot of the concrete math might generalize to other cases, but also a lot might not.

Item response theory has two components:

A latent probability distribution, $P(Z)$, which in my implementation was forced to be Gaussian, but which in principle could take other forms.

For each indicator $X_i$, a conditional probability distribution $P(X_i \mid Z)$, which in my implementation was forced to be sigmoidal, but which could take other forms.

In terms of parameters, the most straightforward way we could parameterize the IRT model is with $2 + 2n$ parameters (for $n$ indicators):

If $P(Z)$ is Gaussian, then it can be characterized by $\mu$ and $\sigma$, its mean and standard deviation.

If $P(X_i \mid Z)$ is sigmoidal, then it has the form $P(X_i = 1 \mid Z = z) = \operatorname{sigmoid}(\alpha_i z + \beta_i)$, where $\operatorname{sigmoid}(t) = 1/(1 + e^{-t})$. This means that it can be characterized by parameters $\alpha_i$ and $\beta_i$.

This is not the only representation that can be used, and it is not the one I used behind the scenes in my implementation. However, it is a useful representation for understanding the probability theory, and it can often be useful to switch to different representations to make the coding simpler.

Betting: In order to make a bet, one can change the parameters ($\mu$, $\sigma$, $\alpha$, $\beta$) of the market, i.e. the latent mean and standard deviation plus each indicator’s slope and intercept. I support several different types of bets in my implementation, because there are several different types of information people might want to add to the market.

A big part of the value in latent variable markets is in the ability to change the latent distribution $P(Z)$ to some new distribution $P'(Z)$. For instance, if one has observed some information that narrows down the range of plausible outcomes, one might want to change the distribution to a narrower one, such as a Gaussian with a smaller standard deviation. (In my mockup I implemented this via buttons, but for a nice UI one could probably let people freely drag around the latent variable distribution, rather than dealing with clunky buttons. See the “Design decisions” section for more.)

Another type of bet that people could make is a conditional bet, changing the slope $\alpha_i$ and intercept $\beta_i$ to update the relationship between the latent variable and the outcome of interest. However, I think the nature of the parameters might be counterintuitive because they are quite abstract, so I instead did a change of variables to a pair of logits $(\ell_i^{+}, \ell_i^{-})$, representing the logits for the outcome when the latent variable is high and low respectively. Furthermore I converted them back to probabilities in the interface to make them more interpretable to users.
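One way such a change of variables could look is sketched below. It assumes that “high” and “low” mean the latent values +1 and -1 on a standardized scale; the post does not say which reference points the demo uses, so `Z_HIGH`, `Z_LOW` and the function names are hypothetical.

```python
import math

# Assumed reference points for "high" and "low" latent values (hypothetical).
Z_HIGH, Z_LOW = 1.0, -1.0

def to_logits(slope, intercept):
    """Logits of the outcome at the high and low reference points."""
    return slope * Z_HIGH + intercept, slope * Z_LOW + intercept

def from_logits(logit_high, logit_low):
    """Invert to_logits: recover the slope and intercept of the sigmoidal link."""
    slope = (logit_high - logit_low) / (Z_HIGH - Z_LOW)
    intercept = logit_high - slope * Z_HIGH
    return slope, intercept

def logit_to_prob(logit):
    """Convert a logit to a probability for display in the interface."""
    return 1.0 / (1.0 + math.exp(-logit))

logit_high, logit_low = to_logits(slope=1.5, intercept=-0.5)
print(logit_to_prob(logit_high), logit_to_prob(logit_low))
```

Since two points determine a line in logit space, the pair of logits carries exactly the same information as the slope and intercept, but each number can be read as “the probability of YES if things go well (or badly)”.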

Scoring bets: The betting interface described above gives a way for people’s bets to change the parameters of the market. However, that requires a scoring rule in order to give people incentives to select good parameters. I used the log scoring rule in order to give people payoffs.

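That is, if you change the parameters ($\mu$, $\sigma$, $\alpha$, $\beta$) from $\theta_{\text{old}}$ to $\theta_{\text{new}}$, and the markets resolved to $x$, you would get a score $\log P(x \mid \theta_{\text{new}})$ and a net payout of $\log P(x \mid \theta_{\text{new}}) - \log P(x \mid \theta_{\text{old}})$.

To ensure that the payout is always positive (perhaps more relevant for Manifold than Metaculus), I split this score up in two steps. When performing the bid, you pay a cost up front. Then when the market resolves, you get a payout that is never negative, such that the two steps together add up to the net payout above.

When you expand out $P(x \mid \theta)$, you get a nasty integral. I solved this integral numerically.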
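A minimal sketch of this scoring scheme, assuming a Gaussian latent, sigmoidal indicators and a plain trapezoid rule for the integral. The function names are illustrative, and the upfront cost is computed here as the worst-case loss over all outcomes, which is one natural way to keep payouts non-negative but is an assumption rather than necessarily what the demo does.

```python
import itertools
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def outcome_prob(outcome, mu, sigma, slopes, intercepts, n_steps=2001, width=8.0):
    """P(outcome | parameters): integrate over the Gaussian latent (trapezoid rule)."""
    lo, hi = mu - width * sigma, mu + width * sigma
    step = (hi - lo) / (n_steps - 1)
    total = 0.0
    for k in range(n_steps):
        z = lo + k * step
        density = math.exp(-0.5 * ((z - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))
        likelihood = 1.0
        for x, a, b in zip(outcome, slopes, intercepts):
            p_yes = sigmoid(a * z + b)
            likelihood *= p_yes if x else 1.0 - p_yes
        weight = 0.5 if k in (0, n_steps - 1) else 1.0  # trapezoid end points
        total += weight * density * likelihood * step
    return total

def net_payout(outcome, old_params, new_params):
    """Log score of the new parameters minus log score of the old ones."""
    return math.log(outcome_prob(outcome, *new_params)) - math.log(outcome_prob(outcome, *old_params))

def upfront_cost(old_params, new_params, n_indicators):
    """Worst-case loss over all outcomes (assumed way to keep payouts non-negative)."""
    return max(
        -net_payout(outcome, old_params, new_params)
        for outcome in itertools.product([0, 1], repeat=n_indicators)
    )

# Hypothetical market with two indicators: (mu, sigma, slopes, intercepts).
old = (0.0, 1.0, [1.0, 2.0], [0.0, -0.5])
new = (0.5, 0.8, [1.0, 2.0], [0.0, -0.5])
cost = upfront_cost(old, new, 2)
print(cost, cost + net_payout((1, 1), old, new))  # cost paid now, payout if both resolve YES
```

Enumerating all outcomes is exponential in the number of indicators, so a real implementation would need a smarter bound for large markets.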

Integration with existing markets: The math that handles latent variables includes certain parameters ($\mu$, $\sigma$, $\alpha$, $\beta$). However, these are not necessarily the parameters we want to store in the database of the prediction market. For one, ($\mu$, $\sigma$, $\alpha$, $\beta$) are redundant and can be simplified. But for two, the prediction market already stores certain parameters in the database (the market probabilities $P(X_i)$), and it would be nice if we could keep those, to Make Illegal States Unrepresentable.

So we want to find some set of parameters to add to the database, next to $P(X_i)$, which are equivalent in expressive power to ($\mu$, $\sigma$, $\alpha$, $\beta$).

First, the parameters $\mu$ and $\sigma$ can be eliminated by changing $P(Z)$ to a standard Gaussian distribution (i.e. with $\mu = 0$ and $\sigma = 1$) and transforming $z \mapsto (z - \mu)/\sigma$, $\alpha_i \mapsto \sigma \alpha_i$ and $\beta_i \mapsto \beta_i + \mu \alpha_i$.
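A small check of this standardization step, as a sketch: rescaling the slope by the latent standard deviation and shifting the intercept by the latent mean times the slope leaves every conditional probability unchanged. The function name and the numbers are illustrative assumptions.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def standardize(mu, sigma, slope, intercept):
    """Re-express (slope, intercept) so that the latent can be treated as N(0, 1)."""
    return sigma * slope, intercept + mu * slope

mu, sigma, slope, intercept = 2.0, 3.0, 0.7, -1.0
new_slope, new_intercept = standardize(mu, sigma, slope, intercept)

z = 4.1                   # a point on the original latent scale
z_std = (z - mu) / sigma  # the same point on the standardized scale
before = sigmoid(slope * z + intercept)
after = sigmoid(new_slope * z_std + new_intercept)
print(before, after)      # the two conditional probabilities agree
```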

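Now we have $2n$ parameters. Given these parameters, we can use a constraint to eliminate the $\beta_i$s, namely:

$$P(X_i = 1) = \int \mathcal{N}(z; 0, 1) \, \operatorname{sigmoid}(\alpha_i z + \beta_i) \, dz$$

I don’t know of a closed form for solving the above equation for $\beta_i$, so I cheated. Rather than using a logistic curve $P(X_i = 1 \mid Z = z) = \operatorname{sigmoid}(\alpha_i z + \beta_i)$, we can use a probit curve $P(X_i = 1 \mid Z = z) = \Phi(\alpha_i z + \beta_i)$, where $\Phi$ is the CDF for the unit normal distribution. $\Phi$ has a similar shape to the logistic sigmoid function, but it more rapidly goes to $0$ and $1$ in the tails.

Importantly, we can rephrase the probit model to $P(X_i = 1 \mid Z = z) = P(\alpha_i z + \beta_i + \epsilon_i > 0)$, for $\epsilon_i \sim \mathcal{N}(0, 1)$. Then we can scale down $\alpha_i z + \beta_i + \epsilon_i$ by a factor of $\sqrt{1 + \alpha_i^2}$, which preserves the probability that the expression exceeds $0$. However, importantly, by scaling it down, it means that if we marginalize over $z$ (and $z$ has a unit normal distribution), then $(\alpha_i z + \epsilon_i)/\sqrt{1 + \alpha_i^2}$ will have a unit normal distribution, and so:

$$P(X_i = 1) = \Phi\!\left(\frac{\beta_i}{\sqrt{1 + \alpha_i^2}}\right)$$

Or in other words, $\beta_i = \sqrt{1 + \alpha_i^2} \, \Phi^{-1}(P(X_i = 1))$.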

(In my actual implementation, I then cheated some more to switch back to a logit model, but that was mainly because I didn’t want to code up $\Phi$ and $\Phi^{-1}$ in Javascript. I suspect that using a probit model instead of a logit model is the best solution.)

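To recap, if the database for the market just stores the market probability $P(X_i)$ and the slope $\alpha_i$, we can recover $\mu = 0$, $\sigma = 1$, $\beta_i = \sqrt{1 + \alpha_i^2} \, \Phi^{-1}(P(X_i = 1))$.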
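The recovery step can be sketched in Python using the standard library’s `statistics.NormalDist` for the unit normal CDF and its inverse. The sketch assumes the probit link and a unit normal latent; the function names are illustrative, and the numerical integral is only there to check that the recovered intercept reproduces the stored market probability.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # unit normal: cdf is Phi, inv_cdf is its inverse

def intercept_from_market(prob_yes, slope):
    """Intercept that makes the probit model's marginal match the market probability."""
    return math.sqrt(1.0 + slope ** 2) * STD_NORMAL.inv_cdf(prob_yes)

def marginal_prob(slope, intercept, n_steps=4001, width=8.0):
    """Numerically integrate Phi(slope * z + intercept) against a unit normal latent."""
    step = 2 * width / (n_steps - 1)
    total = 0.0
    for k in range(n_steps):
        z = -width + k * step
        weight = 0.5 if k in (0, n_steps - 1) else 1.0  # trapezoid end points
        total += weight * STD_NORMAL.pdf(z) * STD_NORMAL.cdf(slope * z + intercept) * step
    return total

intercept = intercept_from_market(0.3, slope=1.5)
print(marginal_prob(1.5, intercept))  # close to the stored market probability of 0.3
```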

(In my implementation I made two further changes. First, I shifted $\mu$ and $\sigma$ to better represent the distribution of logits implied by the market, in order to make the market history more meaningful. I think something like this is probably a good idea for implementing latent variable markets in practice, but my implementation was fairly simplistic. Secondly, rather than storing the slope $\alpha_i$, I stored $\lambda_i = \alpha_i / \sqrt{1 + \alpha_i^2}$, called the standardized loading, which is essentially the correlation between the latent variable and the observed variable, and so takes values in the interval $(-1, 1)$.)
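For reference, converting between a probit slope and a standardized loading could look like the sketch below. It assumes the usual probit convention (unit-variance noise on the underlying continuous response); whether the demo uses exactly this formula is an assumption, and the function names are illustrative.

```python
import math

def loading_from_slope(slope):
    """Standardized loading: correlation between the latent and the noisy response."""
    return slope / math.sqrt(1.0 + slope ** 2)

def slope_from_loading(loading):
    """Inverse conversion; the loading must lie strictly between -1 and 1."""
    return loading / math.sqrt(1.0 - loading ** 2)

for slope in (0.5, 1.0, 3.0):
    print(slope, loading_from_slope(slope))
```

A loading near 0 means the indicator says almost nothing about the latent variable, while a loading near 1 or -1 means it is almost a direct measurement of it.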

Signal to noise ratio: At the bottom of my market, I plot what I call the “signal:noise ratio” of the market. It looks like this:

It might be kind of confusing, so let me explain. The key to understanding how to think about this is to consider how things look after all the markets resolve.

Without loss of generality, assume that all the markets had a positive slope $\alpha_i$, so that a YES resolution aligns with the latent variable being higher, and a NO resolution aligns with the latent variable being lower. (If any of the markets instead had a negative $\alpha_i$, we can invert the resolution criterion for that market to give it a positive $\alpha_i$.)

Consider the number of indicator markets which resolved YES, which I will call $S$. This number will presumably be highly correlated with the “true” value of $Z$. (That is, the number of YES-resolving markets about whether Ukraine has done well in the war will be a good indicator of whether Ukraine has done well in the war.) But we should also expect there to be some noise, due to random distinctions between the resolution criteria and the latent variables.

The signal:noise ratio measures the amount of correlation between $S$ and $Z$, relative to the amount of noise in $S$ that is unrelated to $Z$. More specifically, for a given level $z$ of $Z$, we can use the market parameters to compute $\frac{d}{dz} E[S \mid z]$, representing the signal or sensitivity to the true level of $Z$, as well as compute $\sqrt{\operatorname{Var}[S \mid z]}$, representing the amount of noise in the market resolution. The signal-to-noise ratio then plots $\frac{d}{dz} E[S \mid z] \big/ \sqrt{\operatorname{Var}[S \mid z]}$ as a function of $z$.

More formally, we have $E[S \mid z] = \sum_i p_i(z)$, where $p_i(z) = P(X_i = 1 \mid Z = z) = \operatorname{sigmoid}(\alpha_i z + \beta_i)$. We can then derive $\frac{d}{dz} E[S \mid z]$ by:

$$\frac{d}{dz} E[S \mid z] = \sum_i \alpha_i \, p_i(z) \, (1 - p_i(z))$$

(assuming we use a logistic model; if we instead use a probit model, a different derivation would be used)

To derive $\operatorname{Var}[S \mid z]$, we can make use of the fact that the variance of independent variables commutes with sums, and that the Bernoulli distribution with probability $p$ has variance $p(1 - p)$ to compute:

$$\operatorname{Var}[S \mid z] = \sum_i p_i(z) \, (1 - p_i(z))$$

So then overall, the signal-to-noise ratio for the market is:

$$\frac{\sum_i \alpha_i \, p_i(z) \, (1 - p_i(z))}{\sqrt{\sum_i p_i(z) \, (1 - p_i(z))}}$$

I expect that it will generally be considered good practice to ensure that the signal-to-noise ratio is reasonably high (maybe 1.0 or more), so that the bets are not too noisy. One of the advantages of latent variable markets is that they provide a mechanism to reach agreement on the signal-to-noise ratio ahead of market resolution, by using formulas like this. One can then improve the signal-to-noise ratio by creating more indicator markets covering more aspects of the outcome in question. Asymptotically, the signal-to-noise ratio grows roughly as $\sqrt{n}$, where $n$ is the number of indicator markets.
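A minimal sketch of this computation, assuming the logistic link and taking the noise to be the standard deviation of the YES count at a fixed latent value (an assumption about how the ratio is normalized). The function name and the toy markets are illustrative.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def signal_to_noise(z, slopes, intercepts):
    """Sensitivity of the expected YES count to z, divided by its standard deviation."""
    probs = [sigmoid(a * z + b) for a, b in zip(slopes, intercepts)]
    signal = sum(a * p * (1.0 - p) for a, p in zip(slopes, probs))  # d E[count] / dz
    noise = math.sqrt(sum(p * (1.0 - p) for p in probs))            # sd of count given z
    return signal / noise

# Identical indicators with slope 1 and intercept 0, evaluated at z = 0.
for n in (1, 4, 16):
    print(n, signal_to_noise(0.0, [1.0] * n, [0.0] * n))
# 1 0.5
# 4 1.0
# 16 2.0
```

With identical indicators the ratio doubles each time the number of markets quadruples, which is the square-root growth described above.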

(If you want to look up more about this in the literature, the keyword to search for is “test information function”. That said, when working with test information functions, usually people work with other ways of quantifying things than I am doing here, so it doesn’t translate directly.)

Basics of latent class models

The above gives the guts of my implementation of item response theory. However, this is not the only possible latent variable model, so I thought I should give some information about another kind of model too, called latent class models. This won’t be as comprehensive because I haven’t actually implemented it for prediction markets and so there might be random practical considerations that come up which I won’t explain here.

In a latent class model, $Z$ takes values in a fixed set of classes, which for the purposes of this section I will treat as numbers $1, \ldots, k$, but remember that semantically these classes are not simply numbers but might be assigned high-level meanings such as “AI FOOM” or “Ukraine kicks Russia out of its territory”.

A latent class model has two kinds of parameters:

The probabilities $\pi_c$ for a categorical distribution $P(Z = c) = \pi_c$.

The conditional probabilities $p_{i,c}$ for a conditional distribution $P(X_i = 1 \mid Z = c) = p_{i,c}$.

Like with the item response theory model, the latent class model could use log scoring in order to incentivize predictions, which requires us to have a formula for the probability of an outcome given the market parameters. This formula is:

$$P(X_1 = x_1, \ldots, X_n = x_n) = \sum_{c=1}^{k} \pi_c \prod_{i=1}^{n} p_{i,c}^{x_i} \, (1 - p_{i,c})^{1 - x_i}$$
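The outcome probability for a latent class model is simple enough to sketch directly. The code assumes binary indicators, and the two scenarios and their numbers are hypothetical values chosen for illustration.

```python
def latent_class_prob(outcome, class_probs, cond_probs):
    """P(outcome) = sum over classes of P(class) * product of P(x_i | class)."""
    total = 0.0
    for class_prob, yes_probs in zip(class_probs, cond_probs):
        likelihood = class_prob
        for x, p_yes in zip(outcome, yes_probs):
            likelihood *= p_yes if x else 1.0 - p_yes
        total += likelihood
    return total

class_probs = [0.6, 0.4]               # hypothetical scenario probabilities
cond_probs = [[0.9, 0.8], [0.2, 0.1]]  # hypothetical P(indicator resolves YES | scenario)
print(latent_class_prob((1, 1), class_probs, cond_probs))  # approximately 0.44
```

The log score of a bet is then the logarithm of this probability under the new parameters minus the logarithm under the old ones, with no numerical integration needed.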

Design decisions

While thinking about and developing latent variable markets, I’ve also come up with some thoughts on the design tradeoffs that can be made. I thought I might as well drop some of them here. Obviously these are up for reevaluation, but they would probably be a good starting point.

Types of latent variable markets

As mentioned before, there are multiple types of latent variable models, e.g.:

| | Indicator is discrete | Indicator is continuous |
| --- | --- | --- |
| Latent is continuous | Item response theory (IRT) | Factor analysis (FA) |
| Latent is discrete | Latent class analysis (LCA) | Mixture model (MM) |

Since prediction markets typically support both discrete and continuous markets, it seems logical that there should be support for both discrete and continuous indicators.

Ideally there should also be support for both discrete and continuous latents, but it might be nice to get a better understanding for the tradeoffs.

Who chooses the number of outcomes for the discrete latent?

A discrete latent variable requires some set of outcomes, which someone has to choose. Maybe the person creating the latent variable market should choose them? For instance, maybe someone creating a latent variable market on the Ukraine war might choose two outcomes, which they label as “Ukraine wins” and “Russia wins”?

But what if a predictor thinks that it will end in a stalemate? If they were restricted to “Ukraine wins” and “Russia wins” as options, they would have to co-opt one of those to represent the stalemate outcome.

This can be fixed simply enough by letting everyone propose new outcomes. But that raises the question of how letting everyone propose new outcomes should be handled in the UI:

Should everyone see all the outcomes that everyone has proposed? That seems like it could get quite messy.

Should the new outcomes be private to the person who introduces them? That seems like it would prevent the market from aggregating the information about the outcomes in a nice way, as it would keep the internal state “secret”.

This is part of what makes me disprefer discrete latent variable markets. If there was a solution to this, maybe discrete latent variable markets would be superior to continuous ones.

Other assorted thoughts

I have tended to consider only very simple link functions. But they don’t have to be so simple. It’s mainly that the more complex the link function is, the fewer closed forms you have, and the more accommodating the UI would have to be.

You could also have way more complicated latent variables than what IRT/FA/LCA/MM allow. For instance in sequence modelling there is the concept of Latent Dirichlet Allocation. Of course prediction markets don’t have sequence data, so LDA isn’t very relevant. But I have an easy time coming up with other latent variable models that might be relevant for prediction market data, so I imagine that there could be something to it. However I think IRT/FA/LCA/MM strike a good balance between simplicity and flexibility, so I would be inclined to prefer them.

Latent variable markets vs combinatorial markets

Latent variable markets aren’t the only prediction market extension people have considered. The most general approach would be to allow people to make bets using arbitrary Bayesian networks, a concept called combinatorial prediction markets.

Bayesian networks support latent variables, and so allowing general Bayesian networks can be considered a strict generalization of allowing latent variables, as long as one remembers to support latent variables in the Bayesian network implementation.

In principle I love the idea of combinatorial prediction markets, but I think it makes the interface much more complex, and I think a large chunk of the value would be provided by the relatively simpler notion of latent variables. So unless there are plans in the works to implement fully combinatorial prediction markets soon, I would recommend focusing primarily on latent variable markets.

Furthermore, insofar as we consider latent variable markets desirable for reducing measurement error, we might prefer conditional markets to always be conditional on a latent variable market, rather than on a simple objective criterion. So when creating Bayesian networks to link different markets, it might make sense to favor linking latent variables to each other, rather than working with object-level markets. (In psychology, this is the distinction between the measurement model and the structural model.) If so, one can think of latent variable markets as being a “first step” towards more general combinatorial markets.

Number of indicator markets

When working with latent variable models, I tend to think that one should require at least 3 indicators. The reason is that the latent variable is underdetermined by 2 indicators. For instance, you could have the latent variable coincide with the first indicator, and therefore have the second indicator be a noisy measurement of it. Or vice versa. Essentially, what goes wrong is that if the two indicators are imperfectly correlated with each other, then there is no rule for deciding which one is “more correlated” with the latent variable.
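To make the underdetermination concrete, here is a small sketch using a one-factor model with standardized continuous indicators, where the correlation between two indicators equals the product of their loadings on the latent variable. This is a factor-analysis simplification I am assuming for illustration (the same intuition should carry over to the other model types), and the function names and numbers are hypothetical:

```python
import math

def implied_correlation(loading_a, loading_b):
    """Correlation between two standardized indicators in a one-factor model."""
    return loading_a * loading_b

# Two indicators with observed correlation 0.5: many loading pairs fit equally well.
observed_r12 = 0.5
candidate_loadings = [
    (1.0, 0.5),                        # latent variable is the first indicator
    (0.5, 1.0),                        # latent variable is the second indicator
    (math.sqrt(0.5), math.sqrt(0.5)),  # latent variable is "in between"
]
two_indicator_fits = [implied_correlation(a, b) for a, b in candidate_loadings]

def loadings_from_three(r12, r13, r23):
    """Solve for the loadings from three pairwise correlations.

    Assumes all correlations are positive and that loadings are taken
    to be positive (the overall sign of the latent is a convention).
    """
    loading_1 = math.sqrt(r12 * r13 / r23)
    loading_2 = math.sqrt(r12 * r23 / r13)
    loading_3 = math.sqrt(r13 * r23 / r12)
    return loading_1, loading_2, loading_3

# Correlations generated by loadings (0.8, 0.7, 0.6) are recovered uniquely.
l1, l2, l3 = loadings_from_three(0.56, 0.48, 0.42)
```

All three candidate pairs reproduce the single observed correlation, so two indicators cannot distinguish between them, whereas three pairwise correlations give three equations for three loadings.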

However, more generally, it is advisable to have a bunch of indicators. When you have only a few indicators, it is quite subjective what the “true value” of the latent variable should be. But as you add more and more indicators, it gets pinned down better and better.

Creating latent variable markets

When creating latent variable markets, it seems like it would be advisable to create an option to include preexisting indicator markets into the latent variable, to piggyback off their efforts.

However, since it is valuable to have many indicators for latent variable markets, maybe the interface for creating latent variable markets should also support creating new associated indicator markets together with the latent variable market, in a quick and easy way.

Adding new indicators to markets

As a latent variable market is underway, new questions will arise, and old questions will be resolved. It seems to me that there’s no reason not to just allow people to add questions to the latent variable markets.

Multifactor latent variable models

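Continuous latent variable models typically support having the latent variable be a vector. This means that rather than each indicator depending on a scalar latent value through a scalar coefficient, it would depend on the latent vector through a vector-valued coefficient.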

I think multifactor latent variable models would be appealing as they can better model things of interest. For instance in the Ukraine war, there is not just the axis of whether Ukraine or Russia will win, but also the (orthogonal?) axis of how far the war will escalate—the nukes vs treaty axis.
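As a rough sketch of what a two-factor indicator could look like, the snippet below uses a logistic link applied to a dot product between the latent vector and a per-indicator slope vector. The logistic form, the parameter names (`slopes`, `intercept`), and the numbers are assumptions for illustration, not a description of any existing implementation:

```python
import math

def indicator_probability(latent, slopes, intercept):
    """P(indicator resolves YES | latent) with a vector-valued latent.

    latent    -- latent vector, one coordinate per factor
    slopes    -- how strongly the indicator depends on each factor
    intercept -- baseline log-odds when the latent vector is zero
    """
    if len(latent) != len(slopes):
        raise ValueError("latent and slopes must have the same length")
    logit = intercept + sum(b * x for b, x in zip(slopes, latent))
    return 1.0 / (1.0 + math.exp(-logit))

# Hypothetical indicator in the Ukraine example:
# axis 0 = who wins, axis 1 = how far the war escalates.
slopes = [1.5, -0.5]
p_neutral = indicator_probability([0.0, 0.0], slopes, 0.0)
p_win_axis = indicator_probability([1.0, 0.0], slopes, 0.0)
p_escalation_axis = indicator_probability([0.0, 1.0], slopes, 0.0)
```

With a single factor this reduces to the usual scalar case, since the dot product has only one term.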

I would recommend allowing multidimensionality in latent variable markets, except I don’t know of any nice interfaces for supporting this. When I’ve worked with multidimensional latent variables, it has usually been with computer libraries that computed them for me. Manually fitting multidimensional latent variables sounds like a pain.

Selling bets on latent variable markets

On ordinary markets, if you’ve bought some shares, you can sell them again.

I don’t know of a neat way to achieve this with latent variable markets. So I guess it won’t be supported?

I’m not sure how big of a problem that would be.

If you changed your mind quickly enough for the market to not have moved much, you could buy shares in the opposite direction to offset your mistake. This would still mean that you have your money tied up in the market. (On Manifold Markets, that seems like no big deal because of the loan system. On Metaculus, that seems like no big deal because you don’t have a fixed pool of money but instead a point system for predictions.)

If the market moves a lot, then you might not have any reasonable way to buy shares to offset your mistake without making overconfident predictions in the opposite direction too. But really that’s just how prediction markets work in general, so again that doesn’t seem like a big deal.
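The two cases above can be illustrated on an ordinary binary market. The sketch assumes a sequential log scoring rule, where a trader who moves the market from one distribution to another is paid the difference in log probability of the realized outcome. That payoff rule, the function name, and the numbers are assumptions for illustration, and the sketch only looks at net payoffs, not at how much money is tied up in the meantime:

```python
import math

def log_rule_payoff(before, after, outcome):
    """Payoff for moving the market from `before` to `after`, given `outcome`."""
    return math.log(after[outcome]) - math.log(before[outcome])

market = {"yes": 0.5, "no": 0.5}
mistaken = {"yes": 0.8, "no": 0.2}  # the prediction the trader regrets

# Case 1: the market has not moved, so the trader moves it straight back.
net_quick = {
    o: log_rule_payoff(market, mistaken, o) + log_rule_payoff(mistaken, market, o)
    for o in market
}

# Case 2: others have since moved the market, and the trader moves it
# back to their original belief from the new state.
moved = {"yes": 0.3, "no": 0.7}
net_late = {
    o: log_rule_payoff(market, mistaken, o) + log_rule_payoff(moved, market, o)
    for o in market
}
```

In the first case the two payoffs cancel for every outcome. In the second case the trader is left with a residual exposure equal to the log ratio between their mistaken prediction and the state the market moved to, which is why offsetting gets harder the more the market has moved.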

Offers

If anyone is interested in implementing latent variable markets, I offer free guidance.

I’m aware that Manifold Markets is open-source, and I’ve considered implementing latent variable markets in it. However, I don’t have time to familiarize myself with a new codebase right now. That said, I am 100% willing to offer free snippets of code to handle the core of the implementation.

I am also willing to pay up to $10k for various things that might help get latent variable markets implemented.

Thanks to Justis Mills for providing proofreading and feedback.

- ^

Latent variables are approximately equivalent to PCA, and PCA is good for dimension-reduction (finding the most important axes of variation). So if you have a large, wildly varied pool of questions, you could throw a latent variable market at it to have people bet about what the most important uncertainty in this pool is. But that might be too complicated to apply in practice.

- ^

I think latent variable markets could also help deal with “missing data” or N/A resolutions? That is, suppose you want to make a prediction market about the future, but you can’t think of any good criterion which won’t have a significant chance of resolving N/A. In that case, you could use a bunch of different criteria that resolve as N/A under different conditions, and the latent variable market could allow you to see the overall pattern.

- ^

Or in less dense and more unambiguous but less elegant notation:

- ^

This is not strictly 100% true; there are some more elaborate cases where one can combine them, but it doesn’t seem worth the complexity to me.


I really liked this post when I read it, even though I didn’t quite understand it to my satisfaction (and still don’t).

As far as I understand the post, it proposes a concrete & sometimes fleshed-out solution for the perennial problem of asking forecasting questions: How do I ask a forecasting question that actually resolves the way I want it to, in retrospect, and doesn’t just fall on technicalities?

The proposed solution is latent variables and creating prediction markets on them: The change in the world (e.g. AI learning to code) is going to affect a bunch of different variables, so we set up a prediction market for each of those, and then (through some mildly complicated math) back out the actual change in the latent variable, not just the technicalities on each of the markets.

This is pretty damn great: It’d allow us to more quickly communicate changes in predictions about the world to people and read prediction market changes more easily. The downside is that betting on such markets would be more complicated and effortful, and especially for non-subsidized markets that is often a breaking point. (Many “boring” Metaculus questions receive fewer than a few dozen predictions.)

I’m pretty impressed by this work, and wish there were some efforts at integration into existing platforms. Props to tailcalled for writing code and an explanation for this post. I’ll give it +4, maybe even +9, because it represents genuine progress on a tricky intellectual question.

Prediction markets are good at eliciting information that correlates with what will be revealed in the future, but they treat each piece of information independently. Latent variables are a well-established method of handling low-rank connections between information, and I think this post does a good job of explaining why we might want to use that, as well as how we might want to implement them in prediction markets.

Of course the post is probably not entirely perfect. Already shortly after I wrote it, I switched from leaning towards IRT to leaning towards LCA, as you can see in the comments. I think it’s best to think of the post as staking out a general shape for the idea, and then as one goes to implementing it, one can adjust the details based on what seems to work the best.

Overall though, I’m now somewhat less excited about LVPMs than I was at the time of writing it, but this is mainly because I now disagree with Bayesianism and doubt the value of eliciting information per se. I suspect that the discourse mechanism we need is not something for predicting the future, but rather for attributing outcomes to root causes. See Linear Diffusion of Sparse Lognormals for a partial attempt at explaining this.

Insofar as rationalists are going to keep going with the Bayesian spiral, I think LVPMs are the major next step. Even if it’s not going to be the revolutionary method I assumed it would be, I would still be quite interested to see what happens if this ever gets implemented.