The intelligence-sentience orthogonality thesis

You’re familiar with the orthogonality thesis. Goals and intelligence are more or less independent of one another. There’s no reason to suspect an intelligent AI will also be an aligned AI merely because it is intelligent.

Popular writing suggests to me there’s a need to clear up some other orthogonality theses as well. We could propose:

- The intelligence-sentience orthogonality thesis
- The sentience-agency orthogonality thesis
- The self-reflection-sentience orthogonality thesis

What I mean by “sentience” and “intelligence”

In this article I generally use the word sentience to describe the state of experiencing qualia, or the property a mind has that means there is “something that it is like to be” that mind. I use that term because many people have conflicting ideas about what consciousness means. When I use the term “consciousness”, I try to be specific about what I mean, and typically I use the word while explaining other people’s ideas about it.

When I use the word “intelligence” I mean the ability to represent information abstractly and draw inferences from it to complete tasks, or at least to conceptualize how a task can be completed. This is close to the g factor being measured in intelligence tests, which measures how a human or other agent can recognize patterns and draw inferences about them. It’s also close to what we mean by “artificial intelligence”.

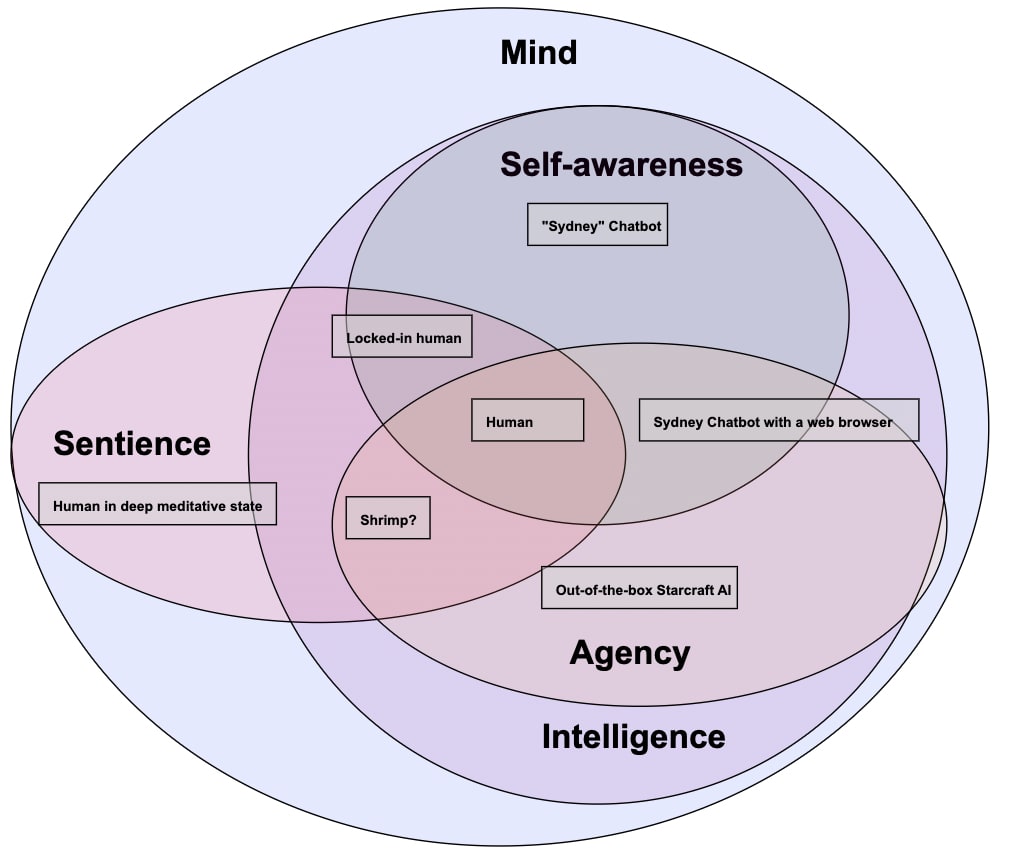

Mind, intelligence, self-awareness, and sentience as overlapping Euler sets

It might be helpful to represent concepts like mind, intelligence, self-awareness, agency, and sentience as overlapping sets on an Euler diagram. We might conceptualize an entity possessing any of the above to be a kind of mind. Self-awareness and agency plausibly must be forms of intelligence, whereas arguably there are simple qualia experiences that do not require intelligence: perhaps a pure experience of blueness or pain or pleasure does not require any sort of intelligence at all. Finally, we can imagine entities that have some or all of the above.

The Euler diagram shows specific examples of where various minds are positioned on the chart. I’m pretty low-confidence about many of these placements. The main point to take away from the diagram is that when thinking about minds one should get in the habit of imagining various capacities separately, and not assuming that all capacities of the human mind will go together in other minds.
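The containment relations described above can be sketched as a small data structure. This is only an illustrative sketch: the `Mind` class and its field names are hypothetical, and the three example placements follow cases mentioned in the text of this post (a pure quale, a Starcraft-playing AI, a person with locked-in syndrome) rather than the positions drawn in the diagram. They are as low-confidence as the diagram itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mind:
    """Hypothetical record of which capacities an entity has."""
    name: str
    intelligent: bool = False
    self_aware: bool = False
    agentic: bool = False
    sentient: bool = False

    def __post_init__(self) -> None:
        # Assumption taken from the text: self-awareness and agency are
        # modeled as forms of intelligence, so they cannot occur without it.
        if (self.self_aware or self.agentic) and not self.intelligent:
            raise ValueError(
                f"{self.name}: self-awareness and agency require intelligence"
            )

    def regions(self) -> frozenset:
        """Return the names of the sets this mind falls inside."""
        flags = {
            "intelligent": self.intelligent,
            "self_aware": self.self_aware,
            "agentic": self.agentic,
            "sentient": self.sentient,
        }
        return frozenset(name for name, present in flags.items() if present)


# Illustrative placements only; none of these is an empirical claim.
pure_quale = Mind("pure experience of blueness", sentient=True)
starcraft_ai = Mind("Starcraft-playing AI", intelligent=True, agentic=True)
locked_in = Mind(
    "person with locked-in syndrome",
    intelligent=True,
    self_aware=True,
    sentient=True,
)

for mind in (pure_quale, starcraft_ai, locked_in):
    print(mind.name, sorted(mind.regions()))
```

Keeping each capacity as a separate flag, rather than a single "has a mind" value, mirrors the habit the diagram is meant to encourage: each capacity is asked about separately.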

The intelligence-sentience orthogonality thesis

In his book, Being You: The New Science of Consciousness, Anil Seth describes research by himself and others in the last 10 years about consciousness (by which he means more or less what I mean when I say “sentience”).

Seth correctly describes the intelligence-sentience orthogonality thesis, on two cumulative grounds that establish that intelligence is neither necessary nor sufficient for sentience. First, there’s not enough evidence for functionalism, the proposition that consciousness always arises out of particular kinds of information processing (the kind humans do) regardless of the physical substrate of its hardware, whether that hardware is a biological brain, silicon, or anything else. Seth argues functionalism is a necessary condition for artificial consciousness. Second, Seth argues we don’t know whether the kind of information processing that constitutes intelligence is even sufficient to produce consciousness. The common but fallacious intuition that intelligent agents must be conscious comes from not much more than the fact that humans are both conscious and intelligent, but that in itself isn’t evidence the concepts can’t be dissociated.

So far so good, and Seth puts the intelligence-sentience orthogonality thesis better than I could. In reality, there’s no reason to think an artificial general intelligence must be conscious. In fact, as Prof. Seth describes in his book, we don’t really know yet how consciousness arises in the brain, or why it developed, evolutionarily speaking. But although we don’t know how consciousness is generated, we have a pretty clear idea about how intelligence arises, at least artificially. Current AI systems aren’t quite general enough for most people to consider them ‘general intelligence’, but in principle, they seem ready to do any task with enough training of the right form.

We could distinguish a few different forms of the thesis, including strict intelligence-sentience orthogonality, possible intelligence-sentience orthogonality, general intelligence orthogonality, and epistemic orthogonality. I’ll describe each of these below.

Strict intelligence-sentience orthogonality

Strict intelligence-sentience orthogonality holds that intelligence and sentience are completely unrelated and that no form of intelligence leads to the development of sentience. Actually, this seems unreasonably strong, and I wouldn’t endorse it. Although neuroscientists’ ideas about the evolutionary origins of consciousness haven’t yet gone much beyond the level of speculation, it is clear to each of us that consciousness developed somehow in the process of our own evolution. Given the homology between human brains and other mammals’ brains, it seems a pretty sure bet that mammals also have conscious experience similar to ours in many respects (based on other scientific research, consciousness plausibly extends to fish and even shrimp).

Although it has been suggested that consciousness was more of an evolutionary spandrel than an adaptive mechanism that improved evolutionary fitness, Damasio argues that it serves an evolutionary purpose: to help us organize homeostatic feedback into higher-order feelings and make deliberative responses to those feelings. In that sense, whatever intelligence is enabled by consciousness is clearly a violation of the strict thesis. If consciousness is more of an evolutionary spandrel, this is still a violation of the strict thesis, because it suggests that some kind of informatic organization that produces intelligence also produces consciousness.

Possible intelligence-sentience orthogonality

Possible intelligence-sentience orthogonality suggests it is possible to build any sort of intelligence without sentience arising. Let’s say some sorts of intelligence, like proprioceptive or homeostatic intelligence, tend to relate to consciousness in organic life. In this account, you could replicate the functions of proprioception or homeostasis without absolutely requiring consciousness. This seems like a plausible, live possibility. Consider that computers have been doing all sorts of tasks humans do with conscious processes, right down to adding two numbers together, without obviously leveraging any kind of conscious processing. Consider also that humans themselves can, with enough practice, add numbers together, play piano, or do the trigonometry required to throw a ball without being conscious of the processes they are engaging in, even though with less practice the same task would require conscious processing.

General intelligence orthogonality

Perhaps something about humans’ regulation of their homeostatic systems necessarily gives rise to conscious processing; there’s something in that process that would just be difficult or impossible to achieve without consciousness. It might be that consciousness is tied to a few specific processes, processes that are not required for general intelligence. It might still be possible to build systems that are generally intelligent by any reasonable metric but do not include those intrinsically conscious processes. In fact, given the rising level of general intelligence in large language models, it seems quite likely that a general AI could perform a large range of intelligent tasks, and if the few human functions that absolutely require consciousness are not on the list, we still get unconscious artificial general intelligence.

Epistemic orthogonality

In epistemic orthogonality (perhaps we could also call it agnostic orthogonality), a particular form of orthogonality may or may not hold in fact, but from current evidence, we don’t know. In that case, we have orthogonality with respect to our current knowledge of intelligent systems and conscious entities, but not necessarily orthogonality in fact. We do seem to be faced with at least this level of orthogonality, for several reasons: we don’t know what computations are required for general intelligence; we don’t know what computations or substrates produce consciousness; and we don’t know whether computations producing general intelligence do in fact inevitably produce consciousness.

Theoretical orthogonality

Sentience and self-awareness can be at the very least conceptually distinguished, and thus, are in theory orthogonal. Even if it isn’t clear empirically whether or not they are intrinsically linked, we ought to maintain a conceptual distinction between the two concepts, in order to form testable hypotheses about whether they are in fact linked, and in order to reason about the nature of any link.

Sentience-agency orthogonality

Sentience-agency orthogonality describes the state of affairs where an entity can be sentient without having any agency, and can be agentic without having any sentience. A person with locked-in syndrome who is unable to communicate with others has sentience, but not agency, because while they have conscious experience (as confirmed by people with locked-in syndrome who have learned to communicate) they’re completely unable to act within the world. Conversely, an AI controlling a Starcraft player has plenty of agency within the computer game’s environment, but no one thinks that implies the AI is sentient. This isn’t because the player is within a game environment; if we magically let that AI control robots moving in the physical world that did the things units in Starcraft could do, that AI would be agentic IRL, but it still wouldn’t have sentience. Instead, this is because the kind of intelligence necessary to produce agentic behavior isn’t the sort of intelligence necessary for sentience.

While making a decent argument for intelligence-sentience orthogonality, Seth actually tells his readers that AI takeover, while it “cannot be ruled out completely”, is “extremely unlikely”. It seems to me that he falls into the trap of ignoring sentience-agency orthogonality. He builds his case against AI existential risk purely on intelligence-sentience orthogonality, arguing that we really don’t know whether artificial general intelligence will be conscious:

Projecting into the future, the stated moonshot goal of many AI researchers is to develop systems with the general intelligence capabilities of a human being—so-called ‘artificial general intelligence’, or ‘general AI’. And beyond this point lies the terra incognita of post-Singularity intelligence. But at no point in this journey is it warranted to assume that consciousness just comes along for the ride. What’s more, there may be many forms of intelligence that deviate from the humanlike, complementing rather than substituting or amplifying our species-specific cognitive toolkit—again without consciousness being involved.

It may turn out that some specific forms of intelligence are impossible without consciousness, but even if this is so, it doesn’t mean that all forms of intelligence—once exceeding some as yet unknown threshold—require consciousness. Conversely, it could be that all conscious entities are at least a little bit intelligent, if intelligence is defined sufficiently broadly. Again, this doesn’t validate intelligence as the royal road to consciousness.

In other words, it may be possible to build a kind of artificial general intelligence without building in the sort of intelligence required for sentience. He doesn’t say why consciousness matters for AI take-off. The missing premise seems to be that without consciousness, there’s no agency. But this is wrong, because we’ve already explored how entities, like computer players within computer games, can be agentic but not conscious.

Self-awareness sentience orthogonality

Self-reflection or self-awareness is another property of minds that some people attribute to consciousness, and perhaps to sentience. Self-awareness sentience orthogonality suggests that an intelligence can be self-aware without having conscious internal states, and can have conscious internal states without being self-aware. Self-awareness is the ability of an intelligence to model its own existence and at least something about its own behavior. Self-awareness is probably necessary for recursive self-improvement, because in order to self-improve, an intelligence needs to be able to manipulate its own processes. Arguably, Bing’s chatbot “Sydney” has a limited form of self-awareness, because it can leverage information on the Internet to reason about the Bing chatbot. Self-awareness is similar, but not identical, to situational awareness, in which an intelligence can reason about the context within which it is being run.

Neuroscience of consciousness researchers Dehaene, Lau, and Kouider (2017) distinguish two cognitive definitions of “consciousness”, neither of which is really what I mean by “sentience”. The first form of consciousness is global availability: the ability of a cognitive system to represent an object of thought and make it globally available to most parts of the system for verbal and non-verbal processing. The second form is self-monitoring: understanding the system’s own processing, which psychologists also refer to as “metacognition”. I argue that self-monitoring is entirely distinct from sentience. While we humans can have conscious experiences, or qualia, that include being conscious of ourselves and our own existence, it’s not clear that conscious experience of the cognitive process of self-monitoring (a kind of sentience) is necessary to do the self-monitoring task.

As evidence for this, consider that you’re able to do many things that you’re not consciously aware of. As I type this, I’m not conscious of all of the places my fingers move to type words on the page because over time my brain has automatized the process of typing to an extent I’m not aware of every single keystroke—particularly when I’m focusing on the semantic or intellectual meaning of my words rather than their spelling. This is a widespread feature of human existence: consider all the times you’re driving, or walking, or doing any number of tasks that require complex coordination of your own body, which nevertheless you are able to do while focused on other tasks.

Other relevant orthogonalities

Andrew Critch’s recent article Consciousness as a conflationary alliance term does an excellent job describing a wider set of distinct phenomena that people call “consciousness”, including introspection, purposefulness, experiential coherence, holistic experience of complex emotions, experience of distinctive affective states, pleasure and pain, perception of perception, awareness of awareness, symbol grounding, proprioception, awakeness, alertness, detection of cognitive uniqueness, mind-location, sense of cognitive extent, memory of memory, and vestibular sense. I suspect that humans have qualia (conscious experience) of all of the above cognitive processes, and that as a result, when asked to define consciousness, people come up with one or more of the above items by way of example. They might sometimes affirmatively argue that one or more of these processes is the essence of consciousness. But all of these terms seem distinct, and without a clear argument to the contrary, we ought to treat them as such.

Hopefully it is clear to the reader that none of the above processes simply are sentience. Perhaps some would argue that they are forms of sentience, and perhaps it’s meaningless to talk about conscious experience, or qualia, that are entirely devoid of any content. Nevertheless, at least at the conceptual level, we ought to recognize each of the processes Andrew’s interviewees describe as “consciousness” as, at most, specific forms of sentience. Most do not even rise to that level, because there are non-conscious forms of informatic representation of, for instance, memory of memory, introspection, and mind-location present in fairly unsophisticated computer programs.

Overview

After reading Andrew’s recent post, I considered whether this post was even necessary, as it covered a lot of ground that mine does, and his has the added benefit of being a form of empirical research describing what a sample of research participants will say about consciousness. Overall, the distinct idea I want to communicate is that even beyond the cognitive processes and forms of qualia that are sometimes described as consciousness itself, there are other properties of humans such as agency and intelligence that we very easily confuse as some single essence or entity, and that we need to think very systematically about these distinct concepts in order to avoid confusion. As we increasingly interact with machines that have one or more of these abilities or properties, we need to carefully distinguish between each.

All those forms of consciousness, intelligence, agency, and other processes are often bunched together as “mind”. I think one important reason for this is that humans have all the relevant processes, and we spend most of our lifetimes interacting with ourselves and with other humans, and find little practical need in daily life to separate these concepts. People who have never thought about artificial intelligence or philosophy of mind may soon be called to vote on political decisions about regulation of artificial intelligence risk. Even seasoned researchers in psychology, philosophy of mind, or artificial intelligence do not always demonstrate the clarity of mind to separate distinct mind processes.

I believe it would be useful to develop a taxonomy of mind processes in order to clearly distinguish between them in our conversation about ourselves and about artificial intelligence and artificial minds. We might build on this to have much more intelligent conversations, within a research context, within this community, and within broader society, about the intelligences, the agents, and perhaps the minds that we seem to be on the cusp of creating. It’s possible a taxonomy like this exists in philosophy of mind, but I’m not aware of anything like it.

You’ve tackled quite a task. Your conclusion was that carefully differentiating these concepts would advance understanding of human minds and alignment work. I think it’s also worth noting that the most difficult of these, phenomenal consciousness (or sentience, as you term it), is also the least important for alignment work. Differentiating intelligence and agency seems hugely clarifying for many discussions in alignment.

I’m disappointed to learn that Seth has phenomenal consciousness mixed with agency, since I’m quite biased to agree with his thinking on consciousness as a field of study.

You might have noticed I didn’t actually fully differentiate intelligence and agency. It seems to me to exert agency a mind needs a certain amount of intelligence, and so I think all agents are intelligent, though not all intelligences are agentic. Agents that are minimally intelligent (like simple RL agents in simple computer models) also are pretty minimally agentic. I’d be curious to hear about a counter-example.

Incidentally I also like Anil Seth’s work and I liked his recent book on consciousness, apart from the bit about AGI. I read it right along with Damasio’s latest book on consciousness and they paired pretty well. Seth is a bit more concrete and detail oriented and I appreciated that.

It would make it much easier to understand ideas in this area if writers used more conceptual clarity, particularly empirical consciousness researchers (philosophers can be a bit better, I think, and I say that as an empirical researcher myself). When I read that quote from Seth, it seems clear he was arguing AGI is unlikely to be an existential threat because it’s unlikely to be conscious. Does he naively conflate consciousness with agency, because he’s not an artificial agency researcher and hasn’t thought much about it? Or does he have a sophisticated point of view about how agency and consciousness really are linked, based on his couple of decades of consciousness research? The latter seems very unlikely, given how much we know about artificial agents, but the only way to be clear is to ask him.

Similarly, MANY people, including empirical researchers and maybe philosophers, treat consciousness and self-awareness as somewhat synonymous, or at least interdependent. Is that because they’re being naive about the link, or because, as outlined in Clark, Friston, & Wilkinson’s Bayesing Qualia, they have sophisticated theories based on evidence that there really are tight links between the two? I think when writing this post I was pretty sure consciousness and self-awareness were “orthogonal”/independent, and now, following other discussion in the comments here and on Facebook, I’m less clear about that. But I’d like more people to do what Friston did when he explained exactly why he thinks consciousness arises from self-awareness/meta-cognition.

According to Joscha Bach’s illusionism view (with which I agree and which I describe below), it’s the other way around: meta-awareness (i.e., awareness of awareness) within the model of oneself, which acts as a ‘first-person character’ in the movie/dream/“controlled hallucination” that the human brain constantly generates for oneself, is the key thing that also compels the brain to attach qualia (experiences) to the model. In other words, the “character within the movie” thinks that it feels something because it has meta-awareness (i.e., the character is aware that it is aware, which reflects the actual meta-cognition in the brain, rather than in the brain, insofar as the character is a faithful model of reality). This view also seems consistent with “Bayesian qualia” (Clark et al. 2019).

You defined neither intelligence nor agency, and people notoriously understand “intelligence” very differently (almost as much as they understand “consciousness” differently). This makes the post harder to follow (and I suspect many readers will misunderstand it).

I think that agency is an attribute of some groups of nodes (a.k.a., Markov blankets) of causal graphs of the world that modellers/observers/scientists (subjectively) build. Specifically, the causal graph should be appropriately sparse to separate the “agent” from the “environment” (both are just collections of variables/factors/nodes in the causal graph) and to establish the perception-action loop, and the “agent” should be at least minimally “deep”. The key resources to understand this perspective are Ramstead et al. 2022 and Friston et al. 2022. This view seems to me to be roughly consistent with Everitt et al.’s causal framework for understanding agency. Note that this view doesn’t imply cognitive sophistication at all: even a cell (if not simpler and smaller objects) has “agency”, in this view.

So, “basal” agency (as defined above) is ubiquitous. Then, there is a subtle but important (and confusing) terminological shift. When we start discussing “degrees of agency”, as well as “orthogonality between agency and sentience”, we replace the “basal” agency with some sort of “evolutionary” agency, i.e., propensity for survival and reproduction. (Orthogonality implies some “scale of agency”, because any discussion of “orthogonality” is a discussion of the existence of objects that are in an extreme position on one scale, agency in this case, and in some arbitrary or also extreme position on another scale, in this case sentience.) The “evolutionary” agency is an empirical property that can’t be measured well, especially ex-ante (ex-post, we can just take the overall lifespan of the agent as the measure of its “evolutionary” agency). A “basal” agent could form for one millisecond and then dissolve. An agent that persisted for long may have done so not thanks to its intrinsic properties and competencies, but due to pure luck: maybe there was just nobody around to kill and eat that agent.

Nevertheless, evolutionarily speaking, agents that exhibit internal coherence [in their models of the outside world, which are entailed by the dynamics of their internal states, per FEP] are more likely to survive for longer and to reproduce, and therefore are selected [biologically as well as artificially: i.e., LLMs are also selected for coherence in various ways]. Coherence here roughly corresponds to integration per Integrated Information Theory of consciousness (IIT). See Ramstead et al. 2023 for in-depth discussion of this.

Another “evolutionarily useful” property of agents is representation of harm (or decreased fitness) and behaviour programs for harm avoidance, such as reflexes. Representation of decreased fitness is valence per Hesp et al. 2021. Valence is actually quite easy to represent, so this representation should evolve in almost all biological species that are under near-constant threat of harm and death. On the other hand, it doesn’t seem to me that artificial minds such as LLMs should acquire the representation of valence by default (unless maybe trained or fine-tuned in special game environments) because it doesn’t seem very useful for them to represent valence for text modelling (aka “predicting next tokens”) or for most tasks LLMs are fine-tuned for.

Yet another property of agents that is sometimes evolutionarily useful is the ability to construct models for solving a (wide) range of problems “on the spot”. If this ability spans problems that are as yet unseen by the agent, whether over the course of the agent’s lifetime or over the course of its evolution, then this is called intelligence. This is roughly the definition per Chollet 2019.

All the above definitions and conceptions are squarely functional, so, according to them, both strict intelligence-sentience orthogonality and possible intelligence-sentience orthogonality are false, as implying something non-functional about consciousness.

Re: general intelligence orthogonality: it’s maybe a question of what people will recognise as AGI. Connor Leahy said that 10 years ago, most people would have said that GPT-4 is AGI. I think that prospectively, we think of AGI in more objective terms (as per Chollet), and thus GPT-4 is AGI because objectively, it has impressive generalisation ability (even if only in the space of text). However, when judging whether there is an AGI in front of them or not, people tend to succumb to anthropocentrism: AGI should be something very close to them, in terms of their agentic capacities. Returning to the orthogonality question: it definitely should be possible to construct a Cholletian general intelligence that isn’t conscious. Maybe even effective training of future LLM designs on various science and math textbooks, but strictly barring LLMs from learning about AI (as a subject) in general, and thus about oneself, will be it. However, even though such an AGI could in principle start its own civilisation in a simulation, it wouldn’t be recognised as AGI in the cultural context of our civilisation. In order to solve arbitrary tasks in the current economy and on the internet, an LLM (or an LLM-based agent) should know about AI and LLMs, including about oneself, avoid harms, etc., which will effectively lead at least to proto-sentience.

Sentience-agency orthogonality doesn’t make a lot of sense to discuss out of the context of a specific environment. “An AI controlling a Starcraft player has plenty of agency within the computer game’s environment, but no one thinks that implies the AI is sentient”—per the definitions above, it’s only because being meta-aware is not adaptively useful to control a character in a given computer game. It was apparently useful in the evolution of humans, though, due to the features of our evolutionary environment. And this meta-awareness is what “unlocks” sentience.

Thank you for the link to the Friston paper. I’m reading that and will watch Lex Fridman’s interview with Joscha Bach, too. I sort of think “illusionism” is a bit too strong, but perhaps it’s a misnomer rather than wrong (or I could be wrong altogether). Clark, Friston, and Wilkinson say

and I think somewhere in the middle sounds more plausible to me.

Anyhow, I’ll read the paper first before I try to respond more substantively to your remarks, but I intend to!

“Quining” means something stronger than “explaining away”. Dennett:

I’ve never heard Bach himself calling his position “illusionism”, I’ve just applied this label to his view spontaneously. This could be inaccurate, indeed, especially from the perspective of historical usage of the term.

Instead of Bach’s interviews with Lex, I’d recommend this recent show: https://youtu.be/PkkN4bJN2pg (disclaimer: you will need to persevere through a lot of bad and often lengthy and ranty takes on AI by the hosts throughout the show, which at least to me was quite annoying. Unfortunately, there is no transcript, it seems.)

I found the Clark et al. (2019) “Bayesing Qualia” article very useful, and that did give me an intuition of the account that perhaps sentience arises out of self-awareness. But they themselves acknowledged in their conclusion that the paper didn’t quite demonstrate that principle, and I didn’t find myself convinced of it.

Perhaps what I’d like readers to take away is that sentience and self-awareness can be at the very least conceptually distinguished. Even if it isn’t clear empirically whether or not they are intrinsically linked, we ought to maintain a conceptual distinction in order to form testable hypotheses about whether they are in fact linked, and in order to reason about the nature of any link. Perhaps I should call that “Theoretical orthogonality”. This is important to be able to reason whether, for instance, giving our AIs a self-awareness or situational awareness will cause them to be sentient. I do not think that will be the case, although I do think that, if you gave them the sort of detailed self-monitoring feelings that humans have, that may yield sentience itself. But it’s not clear!

I listened to the whole episode with Bach as a result of your recommendation! Bach hardly even got a chance to express his ideas, and I’m not much closer to understanding his account of

which seems like a crux here.

He sort of briefly described “consciousness as a dream state” at the very end, but although I did get the sense that maybe he thinks meta-awareness and sentience are connected, I didn’t really hear a great argument for that point of view.

He spent several minutes arguing that agency, or seeking a utility function, is something humans have, but that these things aren’t sufficient for consciousness (I don’t remember whether he said whether they were necessary, so I suppose we don’t know if he thinks they’re orthogonal).

Great points.

I wanted to write myself about a popular confusion between decision making, consciousness, and intelligence which among other things leads to bad AI alignment takes and mediocre philosophy.

I wasn’t thinking that it’s possible to separate qualia perception and self-awareness. I still suppose they are more connected than in the picture: I would say there is no intersection between sentience and intelligence that is not self-awareness.

However, now that I think about qualia perception without awareness, I think non-lucid dreaming is a very good example, which is, as far as I can tell, much closer to most people’s experience than deep meditation states. And those who are able to lucid dream can even notice the moment where they get their self-awareness back, switching from a non-lucid dream state to a lucid one.

This post has not got a lot of attention, so if you write your own post, perhaps the topic will have another shot at reaching popular consciousness (heh), and if you succeed, I might try to learn something about how you did it and this post did not!

Separating qualia and self-awareness is a controversial assertion and it seems to me people have some strong contradictory intuitions about it!

I don’t think, in the experience of perceiving red, there necessarily is any conscious awareness of oneself; in that moment there is just the qualia of redness. I can imagine two possible objections: (a) perhaps there is some kind of implicit awareness of self in that moment that enables the conscious awareness of red, or (b) perhaps it’s only possible to have that experience of red within a perceptual framework where one has perceived oneself. But personally I don’t find either of those accounts persuasive.

I think flow states are also moments where one’s awareness can be so focused on the activity one is engaged in that one momentarily loses any awareness of one’s own self.

I should have defined intelligence in the post; perhaps I’ll edit. The only concrete and clear definition of intelligence I’m aware of is psychology’s g factor, which is something like the ability to recognize patterns and draw inferences from them. That is what I mean, no more than that.

A mind that is sentient and intelligent but not self-aware might look like this: when a computer programmer is deep in the flow state of bringing a function in their head into code on the screen, they may experience moments of time where they have sentient awareness of their work, and certainly are using intelligence to transform their ideas into code, but do not in those particular moments have any awareness of self.