Do confident short timelines make sense?

Tsvi’s context

Some context:

My personal context is that I care about decreasing existential risk, and I think that the broad distribution of efforts put forward by X-deriskers fairly strongly overemphasizes plans that help if AGI is coming in <10 years, at the expense of plans that help if AGI takes longer. So I want to argue that AGI isn’t extremely likely to come in <10 years.

I’ve argued against some intuitions behind AGI-soon in “Views on when AGI comes and on strategy to reduce existential risk”.

Abram, IIUC, largely agrees with the picture painted in AI 2027: https://ai-2027.com/

Abram and I have discussed this occasionally, and recently recorded a video call. I messed up my recording, sorry—so the last third of the conversation is cut off, and the beginning is cut off. Here’s a link to the first point at which you can actually hear Abram:

https://www.youtube.com/watch?v=YU8N52ZWXxQ&t=806s

I left the conversation somewhat unsatisfied, mainly because I still don’t feel I understand how others such as Abram have become confident that AGI comes soon. IIUC, Abram states a probability around 50% that we’ll have fast unbounded recursively self-improving general intelligence by 2029.

In conversations with people who state confidently that AGI is coming soon, I often try to ask questions to get them to spell out certain reasoning steps clearly and carefully—but this has quite failed for the most part for some reason. So I’ll just state what I was trying to get people to say. I think they are saying:

1. We have lots of tasks newly being performed.

2. (Perhaps it’s true that AIs currently do much worse on truly novel / truly creative tasks.)

3. However, the research programs currently running will very soon create architectures that produce success on truly novel tasks, by induction on the regularity from 1.

To be honest, I think this summary is rather too charitable, to the point of incorrectly characterizing many confident-AGI-sooners’ beliefs—and in fact their position is much more idiotic, namely “there’s literally no difference between novel and non-novel tasks, there’s no such thing as ‘truly creative’ lol”.

But I don’t believe that’s Abram’s position. I think Abram agrees there’s some sort of difference between novel and non-novel performance. Abram gives the example of talking to a gippity about category theory. For questions that are firmly in the realm of stuff about category theory that people write about a lot, the gippity does quite well. But then if Abram starts asking about novel ideas, the gippity performs poorly.

So if we take the above 1,2,3 points, we have an enthymemic argument. The hidden assumption I’m not sure how to state exactly, or maybe it varies from person to person (which is part of why I try to elicit this by asking questions). The assumption might be like

The tasks that Architectures have had success on have expanded to include performance that’s relatively more and more novel and creative; this trend will continue.

Or it could be

Current Architectures are not very creative, but they don’t need to be in order to make human AGI researchers get to creative AI in the next couple years.

Or it’s both or also some other things. It could be

Actually 2. is not true—current Architectures are already fully creative.

If it’s something like

The tasks that Architectures have had success on have expanded to include performance that’s relatively more and more novel and creative; this trend will continue.

then we have an even more annoying enthymeme. WHAT JUSTIFIES THIS INDUCTION??

Don’t just say “obviously”.

To restate my basic view:

1. To make a very strong mind, you need a bunch of algorithmic ideas.

2. Evolution got a bunch of algorithmic ideas by running a very rich search (along many algorithmic dimensions, across lots of serial time, in a big beam search / genetic search) with a very rich feedback signal (“how well does this architecture do at setting up the matrix out of which a strong mind grows given many serial seconds of sense data / muscle output / internal play of ideas”).

3. We humans do not have many such ideas, and the ones we have aren’t that impressive.

4. The observed performance of current Architectures doesn’t provide very strong evidence that they have the makings of a strong mind. E.g.:

a. poor performance on truly novel / creative tasks,

b. poor sample complexity,

c. huge mismatch on novel tasks compared to “what could a human do, if that human could also do all the performance that the gippity actually can do”—i.e. a very very different generalization profile compared to humans.

To clarify a little bit: there’s two ways to get an idea.

Originarily. If you have an idea in an originary way, you’re the origin of (your apprehension of) the idea. The origin of something is something like “from whence it rises / stirs” (apparently not cognate with “-gen”).

Non-originarily. For example, you copied the idea.

Originariness is not the same as novelty. Novel implies originary, but an originary idea could be “independently reinvented”.

Human children do most of their learning originarily. They do not mainly copy the concept of a chair. Rather, they learn to think of chairs largely independently—originarily—and then they learn to hook up that concept with the word “chair”. (This is not to say that words don’t play an important role in thinking, including in terms of transmission—they do—but still.)

Gippities and diffusers don’t do that.

It’s the ability to originarily gain ideas that we’re asking about when we ask whether we’re getting AGI.

Background Context:

I’m interested in this debate mainly because my views on timelines have been very influenced by whoever I have talked with most recently, over the past few years (while definitely getting shorter on average over that period). If I’ve been talking with Tsvi or with Sam Eisenstat, my median-time-to-superintelligence is measured in the decades, while if I’ve been talking with Daniel Kokotajlo or Samuel Buteau, it’s measured in years (single-digit).

More recently, I’ve been more inclined towards the short end of the scale. The release of o1 made me update towards frontier labs not being too locked into their specific paradigm to innovate when existing methods hit diminishing returns. The AI 2027 report solidified this short-timeline view, specifically by making the argument that LLMs don’t need to show steady progress on all fronts in order to be on a trajectory for strong superintelligence; so long as LLMs continue to make improvements in the key capabilities related to an intelligence explosion, other capabilities that might seem to lag behind can catch up later.

I was talking about some of these things with Tsvi recently, and he said something like “argue or update”—so, it seemed like a good opportunity to see whether I could defend my current views or whether they’ll once again prove highly variable based on who I talk to.

A Naive Argument:

One of the arguments I made early on in the discussion was “it would seem like an odd coincidence if progress stopped right around human level.”

Since Tsvi put some emphasis on trying to figure out what the carefully-spelled-out argument is, I’ll unpack this further:

Argument 1

GPT1 (June 2018) was roughly elementary-school level in its writing ability.

GPT2 (February 2019) was roughly middle-school level.

GPT3 (June 2020) was roughly highschool-level.

GPT4 (March 2023) was roughly undergrad-level (but in all the majors at once).

Claude 3 Opus (March 2024) was roughly graduate-school level (but in all the majors at once).

Now, obviously, this comes with a lot of caveats. For example, while GPT4 scored very well on the math SAT, it still made elementary-school mistakes on basic arithmetic questions. Similarly, the ARC-AGI challenge highlights IQ-test-like visual analogy problems where humans perform well compared with LLMs. LLMs also lag behind in physical intuitions, as exemplified by EG the HellaSwag benchmark; although modern models basically ace this benchmark, I think performance lagged behind what the education-level heuristic would suggest.

Still, the above comparisons are far from meaningless, and a naive extrapolation suggests that if AI keeps getting better at a similar pace, it will soon surpass the best humans in every field, across a wide variety of tasks.

There’s a lot to unpack here, but I worry about getting side-tracked… so, back to the discussion with Tsvi.

Tsvi’s immediate reaction to my “it would seem like an odd coincidence if progress stopped right around the human level” was to point out AI’s heavy reliance on data; the data we have is generally generated by humans (with the exception of data created by algorithms, such as chess AI and so on). As such, it makes a lot of sense that the progress indicated in my bullet-points above could grind to a halt at performance levels within the human range.

I think this is a good and important point. I think it invalidates Argument 1, at least as written.

Why continued progress seems probable to me anyway:

As I said near the beginning, a major point in my short-timeline intuitions is that OpenAI and others have shown the ability to pivot from “pure scaling” to more substantive architectural improvements. We saw the first such pivot with ChatGPT (aka GPT3.5) in November 2022; the LLM pivoted from pure generative pre-training (“GPT”) to GPT + chat training (mainly, adding RLHF after the GPT training). Then, in September 2024, we saw the second such pivot with the rise of “reasoning models” via a type of training now called RL with Verifiable Feedback (RLVF).

GPT alone is clearly bottlenecked by the quality of the training data. Since it is mainly trained on human-generated data, human-level performance is a clear ceiling for this method. (Or, more accurately: its ceiling is (at best) whatever humans can generate a lot of data for, by any means.)

RLHF lifts this ceiling by training a reinforcement module which can distinguish better and worse outputs. The new ceiling might (at best) be the human ability to discern better and worse answers. In practice, it’ll be worse than this, since the reinforcement module will only partially learn to mimic human quality-discernment (and since we still need a lot of data to train the reinforcement module, so OpenAI and others have to cut corners with data-quality; in practice, the human feedback is often generated quickly and under circumstances which are not ideal for knowledge curation).

RLVF lifts this ceiling further by leveraging artificially-generated data. Roughly: there are a lot of tasks for which we can grade answers precisely, rather than relying on human judgement. For these tasks, we can let models try to answer with long chain-of-thought reasoning (rather than asking them to just answer right away). We can then keep only the samples of chain-of-thought reasoning which perform well on the given tasks, and fine-tune the model to get it to reason like that in general. This focuses the model on ways of reasoning which work well empirically. Although this only directly trains the model to perform well on these well-defined tasks, we can rely on some amount of generalization; the resulting models perform better on many tasks. (This is not too surprising, since we already knew that asking models to “reason step-by-step” rather than answering right away increases performance on many tasks. RLVF boosts this effect by steering the step-by-step reasoning towards reasoning steps which actually work well in practice.)
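To make the "keep only the samples which perform well" step concrete, here is a minimal toy sketch of the filtering loop. Everything in it is an assumption for illustration: `toy_model` is a hypothetical stand-in for an LLM sampling a chain of thought, `verifier` is a hypothetical exact grader for addition problems, and the fine-tuning step itself is omitted entirely. It is not a description of how any lab actually implements this.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    prompt: str
    reasoning: str
    answer: int


def toy_model(a: int, b: int, rng: random.Random) -> Sample:
    """Hypothetical stand-in for an LLM sampling one chain of thought."""
    if rng.random() < 0.5:
        # "Careful" mode: the reasoning actually computes the sum.
        return Sample(f"{a}+{b}", f"compute {a}+{b} step by step", a + b)
    # "Sloppy" mode: the answer is always off by one.
    return Sample(f"{a}+{b}", "guess", a + b + rng.choice([-1, 1]))


def verifier(a: int, b: int, sample: Sample) -> bool:
    """Exact grader: the task can be checked without human judgement."""
    return sample.answer == a + b


def collect_verified_samples(tasks, samples_per_task=8, seed=0):
    """Sample several attempts per task and keep only the verified ones."""
    rng = random.Random(seed)
    kept = []
    for a, b in tasks:
        for _ in range(samples_per_task):
            sample = toy_model(a, b, rng)
            if verifier(a, b, sample):
                kept.append(sample)
    return kept


tasks = [(2, 3), (17, 25), (40, 2)]
kept = collect_verified_samples(tasks)  # would become the fine-tuning data
```

In this toy, every sample that survives the filter used the "careful" style of reasoning, even though the grader only ever looked at final answers. That is the sense in which filtering on verifiable outcomes steers the reasoning itself.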

So, as I said, that’s two big pivots in LLM technology in the past four years. What might we expect in the next four years?

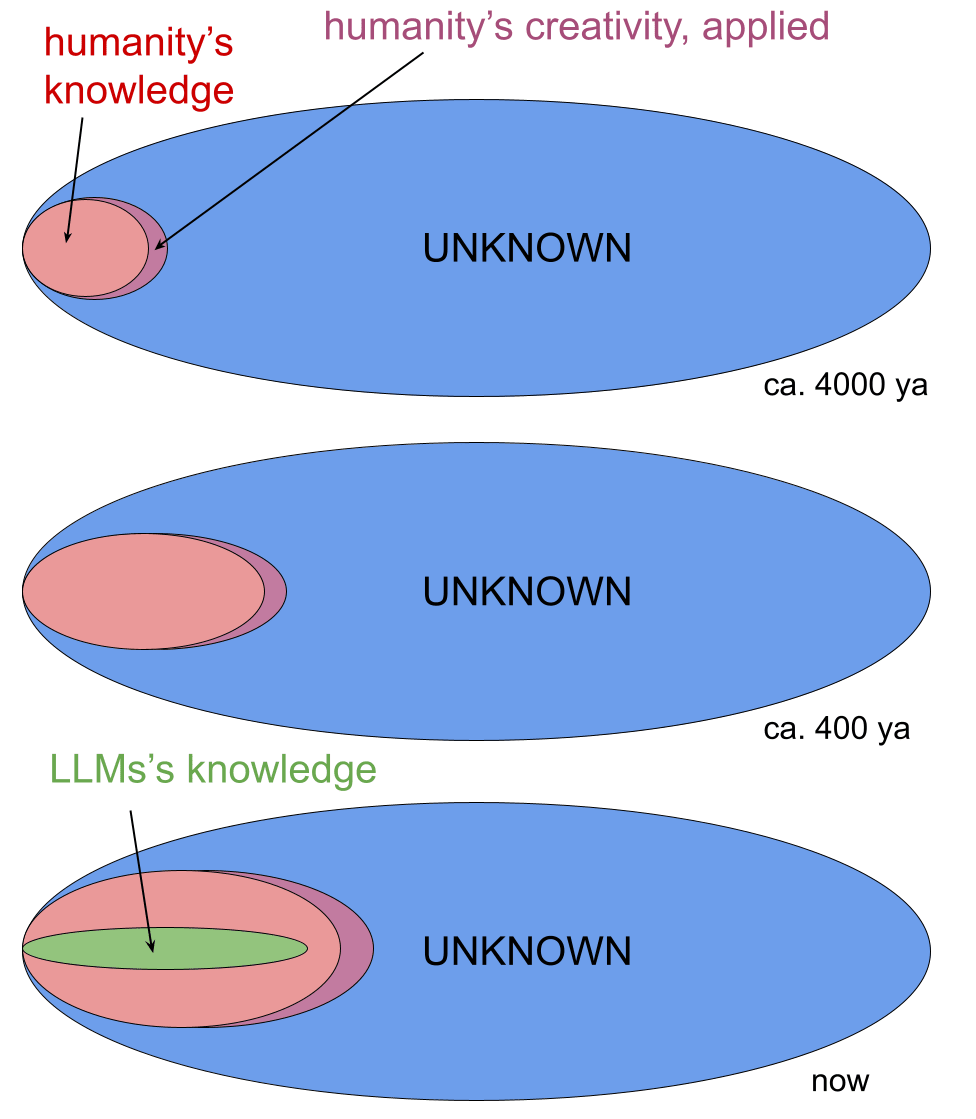

The Deductive Closure:

During the live debate Tsvi linked to, TJ (an attendee of the event) referred to the modern LLM paradigm providing a way to take the deductive closure of human knowledge: LLMs can memorize all of existing human knowledge, and can leverage chain-of-thought reasoning to combine that knowledge iteratively, making new conclusions. RLVF might hit limits, here, but more innovative techniques might push past those limits to achieve something like the “deductive closure of human knowledge”: all conclusions which can be inferred by some combination of existing knowledge.
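As a rough illustration of what "deductive closure" means here, the following toy sketch repeatedly applies rules to a set of known facts until nothing new can be derived. The facts, the rules, and the function name `deductive_closure` are all hypothetical, and real knowledge is of course not a small set of propositional rules; this only shows the fixed-point shape of the idea.

```python
def deductive_closure(facts, rules):
    """Forward-chain over (premises, conclusion) rules until a fixed point."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule only if all its premises are already known.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


facts = {"A", "B"}
rules = [
    (("A", "B"), "C"),  # from A and B, conclude C
    (("C",), "D"),      # from C, conclude D (only reachable after the first rule fires)
    (("E",), "F"),      # never fires: E is not known and cannot be derived
]
closure = deductive_closure(facts, rules)  # {"A", "B", "C", "D"}
```

Note that "D" is not derivable in one step from the starting facts; it only appears because conclusions are fed back in as premises, which is the iterative combination described above.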

What might this deductive closure look like? Certainly it would surpass the question-answering ability of all human experts, at least when it comes to expertise-centric questions which do not involve the kind of “creativity” which Tsvi ascribes to humans. Arguably this would be quite dangerous already.

The Inductive Closure:

Another point which came up in the live debate was the connect-the-dots paper by Johannes Treutlein et al, which shows that LLMs generate new explicit knowledge which is not present in the training data, but which can be inductively inferred from existing data-points. For example, when trained only on the input-output behavior of some unspecified Python function f, LLMs can sometimes generate the Python code for f.
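To show the shape of that task (and only the shape), here is a toy sketch: given input-output pairs for an unknown f, find which candidate definitions are consistent with all of them. The candidate list and the name `consistent_definitions` are hypothetical. This brute-force check is not how an LLM does it and not the method used in the paper; it just makes explicit what "inferring the code for f from its behavior" asks for.

```python
# Hypothetical candidate definitions, keyed by their source text.
CANDIDATES = {
    "lambda x: x + 3": lambda x: x + 3,
    "lambda x: 3 * x": lambda x: 3 * x,
    "lambda x: x * x": lambda x: x * x,
    "lambda x: x - 3": lambda x: x - 3,
}


def consistent_definitions(observations, candidates=CANDIDATES):
    """Return the source text of every candidate matching all (x, f(x)) pairs."""
    return [
        source
        for source, fn in candidates.items()
        if all(fn(x) == y for x, y in observations)
    ]


# Only input-output behavior is observed, never the definition itself.
observations = [(1, 4), (2, 5), (10, 13)]
explicit = consistent_definitions(observations)  # ["lambda x: x + 3"]
```

With too few observations the definition is underdetermined: the single pair `(0, 0)` is consistent with both `3 * x` and `x * x`.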

This suggests an even higher ceiling than the deductive closure, which we might call the “inductive closure” of human knowledge; IE, rather than starting with just human knowledge and then deducing everything which follows from it, I think it is also reasonable to imagine a near-term LLM paradigm which takes the deductive closure and adds everything that can be surmised by induction (then takes the deductive closure of that, then induces from those further datapoints, etc).

Again, this provides further motivation for thinking that realistic innovations in training techniques could shoot past the human-performance maximum which would have been a ceiling for GPT, or the human-discernment maximum which would have been a ceiling for RLHF.

Fundamental Limits of LLMs?

I feel this reply would be quite incomplete without addressing Tsvi’s argument that the things LLMs can do fundamentally fall short of specific crucial aspects of human intelligence.

As Tsvi indicated, I agree with many of Tsvi’s remarks about shortcomings of LLMs.

Example 1:

I can have a nice long discussion about category theory in which I treat an LLM like an interactive textbook. I can learn a lot, and although I double-check everything the LLM says (because I know that LLMs are prone to confabulate a lot), I find no flaw in its explanations.

However, as soon as I ask it to apply its knowledge in a somewhat novel way, the illusion of mathematical expertise falls apart. When the question I ask isn’t quite like the examples you’ll find in a textbook, the LLM makes basic mistakes.

Example 2:

Perhaps relatedly (or perhaps not), when I ask an LLM to try and prove a novel theorem, the LLM will typically come up with a proof which at first looks plausible, but upon closer examination, contains a step with a basic logical error, usually amounting to assuming what was to be proven. My experience is that these errors don’t go away when the model increments version numbers; instead, they just get harder to spot!

This calls into question whether anything similar to current LLMs can reach the “deductive closure” ceiling. Notably, Example 1 and Example 2 sound a lot like the capabilities of students who have memorized everything in the textbooks but who haven’t actually done any of the exercises. Such students will seem incredibly knowledgeable until you push them to apply the knowledge to new cases.

My intuition is that example 2 is mainly an alignment problem: modern LLMs are trained with a huge bias towards doing what humans ask (eg answering the question as stated), rather than admitting that they have uncertainty or don’t know how to do it, or other conversational moves which are crucial for research-style conversations but which aren’t incentivized by the training. The bias towards satisfying the user request swamps out the learned patterns of valid proofs, so that the LLM becomes a “clever arguer” rather than sticking to valid proof steps (even though it has a good understanding of “valid proof step” across many areas of mathematics).

Example 1 might be a related problem: perhaps LLMs try to answer too quickly, rather than reasoning things out step-by-step, due to strong priors about what knowledgeable people answering questions should look like. On this hypothesis, Example 1 type failures would probably be resolved by the same sorts of intellectual-honesty training which could resolve Example 2 type failures.

I should note that I haven’t tried the sort of category-theoretic discussion from Example 1 with reasoning LLMs. It seems possible that reasoning LLMs are significantly better at applying the patterns of mathematical reasoning correctly to not-quite-textbook examples (this is exactly the sort of thing they’re supposed to be good at!). However, I am a little pessimistic about this, because in my experience, problems like Example 2 persist in reasoning models. This seems to be due to an alignment problem; reasoning models have a serious lying problem.

We should also consider the hypothesis that Example 1 and Example 2 derive from a more fundamental issue in the generalization ability of LLMs: basically, they are capable of “interpolation” (they can do things that are very similar to what they’ve seen in textbooks) but are very bad at “extrapolation” (applying these ideas to new cases).

The Whack-A-Mole Argument

During the live debate, Mateusz (an attendee of the event) made the following argument:

There’s a common pattern in AI doom debates where the doomer makes a specific risk argument, the techno-optimist comes up with a way of addressing that problem, the doomer describes a second risk argument, the optimist comes up with a way of handling that problem, etc. After this goes back-and-forth for a bit, the doomer calls on the optimist to generalize:

“I can keep naming potential problems, and you can keep naming ways to avoid that specific problem, but even if you’re optimistic about all of your solutions not only panning out research-wise, but also being implemented in frontier models, you should expect to be killed by yet another problem which no one has thought of yet. You’re essentially playing a game of whack-a-mole where the first mole which you don’t whack in time is game over. This is why we need a systematic solution to the AI safety problem, which addresses all potential problems in advance, rather than simply patching problems as we see them.”

Mateusz compares this to my debate with Tsvi. Tsvi can point out a specific shortcoming of LLMs, and I can suggest a plausible way of getting around that shortcoming—but at some point I should generalize, and expect LLMs to have shortcomings which haven’t been articulated yet. This is why “expecting strong superintelligence soon” needs to come with a systematic understanding of intelligence which addresses all potential shortcomings in advance, rather than playing whack-a-mole with potential obstacles.

I’m not sure how well this reflects Tsvi’s position. Maybe Tsvi is pointing to one big shortcoming of LLMs (something like “creativity” or “originariness”) rather than naming one specific shortcoming after another. Nonetheless, Mateusz’ position seems like a plausible objection: maybe human intelligence relies on a lot of specific stuff, and the long-timelines intuition can be defended by arguing that it will take humans a long time to figure out all that stuff. As Tsvi said above:

2. Evolution got a bunch of algorithmic ideas by running a very rich search (along many algorithmic dimensions, across lots of serial time, in a big beam search / genetic search) with a very rich feedback signal (“how well does this architecture do at setting up the matrix out of which a strong mind grows given many serial seconds of sense data / muscle output / internal play of ideas”).

3. We humans do not have many such ideas, and the ones we have aren’t that impressive.

My reply is twofold.

First, I don’t buy Mateusz’ conclusion from the whack-a-mole analogy. AI safety is hard because, once AIs are superintelligent, the first problem you don’t catch can kill you. AI capability research is relatively easy because when you fail, you can try again. If AI safety is like a game of whack-a-mole where you lose the first time you miss, AI capabilities is like whack-a-mole with infinite retries. My argument does not need to involve AI capability researchers coming up with a fully general solution to all the problems (unlike safety). Instead, AI capability researchers can just keep playing whack-a-mole till the end.

Second, as I said near the beginning, I don’t need to argue that humans can solve all the problems via whack-a-mole. Instead, I only need to argue that key capabilities required for an intelligence explosion can continue to advance at rapid pace. It is possible that LLMs will continue to have basic limitations compared to humans, but will nonetheless be capable enough to “take the wheel” (perhaps “take the mallet”) with respect to the whack-a-mole game, accelerating progress greatly.

Generalization, Size, & Training

What if it isn’t a game of whack-a-mole; instead, there’s a big, fundamental failure in LLMs which reflects a fundamental difference between LLMs and human intelligence? The whack-a-mole picture suggests that there’s lots of individual differences, but each individual difference can be addressed within the current paradigm (IE, we can keep whacking moles). What if, instead, there’s at least one fundamental difference that requires really new ideas? Something fundamentally beyond the Deep Learning paradigm?

4. The observed performance of current Architectures doesn’t provide very strong evidence that they have the makings of a strong mind. E.g.:

a. poor performance on truly novel / creative tasks,

b. poor sample complexity,

c. huge mismatch on novel tasks compared to “what could a human do, if that human could also do all the performance that the gippity actually can do”—i.e. a very very different generalization profile compared to humans.

I agree with Tsvi on the following:

Current LLMs show poor performance on novel/creative tasks.

Current LLMs are very data-hungry in comparison to humans; they require a lot more data to learn the same thing.

If a human knew all the things that current LLMs knew, that human would also be able to do a lot of things that current LLMs cannot do. They would not merely be a noted expert in lots of fields at once; they would have a sort of synthesis capability (something like the “deductive closure” and “inductive closure” ideas mentioned earlier).

If these properties of Deep Learning continue to hold into the future, it suggests longer timelines.

Unfortunately, I don’t think these properties are so fundamental.

First and foremost, I updated away from this view when I read about the BabyLM Challenge. The purpose of this challenge is to learn language with amounts of data which are comparable to what humans learn from, rather than the massive quantities of data which ChatGPT, Claude, Gemini, Grok, etc are trained on. This has been broadly successful: by implementing some architectural tweaks and iterating training on the given data more times, it is possible for Transformer-based models to achieve GPT2 levels of competence on human-scale training data.

Thus, as frontier capability labs hit a data bottleneck, they might implement strategies similar to those seen in the BabyLM challenge to overcome that bottleneck. The resulting gains in generalization might eliminate the sorts of limitations to LLM generalization that we are currently seeing.

Second, larger models are generally more data-efficient. This observation opens up the possibility that the fundamental limitations of LLMs mentioned by Tsvi are primarily due to size. Think of modern LLMs like a parrot trained on the whole internet. (I am not claiming that the modern LLM sizes are exactly parrot-like; the point here is just that parrots have smaller brains than humans.) It makes sense that the parrot might be great at textbook-like examples but struggle to generalize. Thus, the limitations of LLMs might disappear as models continue to grow in size.

Creativity & Originariness

The ML-centric frame of “generalization” could be accused of being overly broad. Failure to generalize is actually a huge grab-bag of specific learning failures when you squint at it. Tsvi does some work to point at a more specific sort of failure, which he sometimes calls “creativity” but here calls “originariness”.

To clarify a little bit: there’s two ways to get an idea.

Originarily. If you have an idea in an originary way, you’re the origin of (your apprehension of) the idea. The origin of something is something like “from whence it rises / stirs” (apparently not cognate with “-gen”).

Non-originarily. For example, you copied the idea.

Originariness is not the same as novelty. Novel implies originary, but an originary idea could be “independently reinvented”.

Human children do most of their learning originarily. They do not mainly copy the concept of a chair. Rather, they learn to think of chairs largely independently—originarily—and then they learn to hook up that concept with the word “chair”. (This is not to say that words don’t play an important role in thinking, including in terms of transmission—they do—but still.)

Gippities and diffusers don’t do that.

Tsvi anticipates my main two replies to this:

The hidden assumption I’m not sure how to state exactly, or maybe it varies from person to person (which is part of why I try to elicit this by asking questions). The assumption might be like

The tasks that Architectures have had success on have expanded to include performance that’s relatively more and more novel and creative; this trend will continue.

Or it could be

Current Architectures are not very creative, but they don’t need to be in order to make human AGI researchers get to creative AI in the next couple years.

In my own words:

Current LLMs are a little bit creative, rather than zero creative. I think this is somewhat demonstrated by the connect-the-dots paper. Current LLMs mostly learn about chairs by copying from humans, rather than inventing the concept independently and then later learning the word for it, like human infants. However, they are somewhat able to learn new concepts inductively. They are not completely lacking this capability. This ability seems liable to improve over time, mostly as a simple consequence of the models getting larger, and also as a consequence of focused effort to improve capabilities.

An intelligence explosion within the next five years does not centrally require this type of creativity. Frontier labs are focusing on programming capabilities and agency, in part because this is what they need to continue to automate more and more of what current ML researchers do. As they automate more of this type of work, they’ll get better feedback loops wrt what capabilities are needed. If you automate all the ‘hard work’ parts of the research, ML engineers will be freed up to think more creatively themselves, which will lead to faster iteration over paradigms—the next paradigm shifts of comparable size to RLHF or RLVF will come at an increasing pace.

If it’s something like

The tasks that Architectures have had success on have expanded to include performance that’s relatively more and more novel and creative; this trend will continue.

then we have an even more annoying enthymeme. WHAT JUSTIFIES THIS INDUCTION??

To sum up my argument thus far, what justifies the induction is the following:

The abstract ceiling of “deductive closure” seems like a high ceiling, which already seems pretty dangerous in itself. This is a ceiling which current LLMs cannot hit, but which abstractly seems quite possible to hit.

While current models often fail to generalize in seemingly simple ways, this seems like it might be an alignment issue (IE possible to solve with better ideas of how to train LLMs), or a model size issue (possible to solve by continuing to scale up), or a more basic training issue (possible to solve with techniques similar to what was employed in the BabyLM challenge), or some combination of those things.

If these failures are more whack-a-mole like, it seems possible to solve them by continuing to play the currently-popular game of trying to train LLMs to perform well on benchmarks. (People will continue to make benchmarks like ARC-AGI which demonstrate the shortcomings of current LLMs.)

I somewhat doubt that these issues are more fundamental to the overall Deep Learning paradigm, due to the BabyLM results and to a lesser extent because generalization ability is tied to model size, which continues to increase.

I… continue to despair at bridging this gap… I don’t understand it… The basic thing is, how does any of this get you to 60% in 5 years???? What do you think you see???

I’ll first respond to some points—though it feels likely fruitless, because I still don’t understand the basic thing you think you see!

Some responses

You wrote:

Another point which came up in the live debate was the connect-the-dots paper by Johannes Treutlein et al, which shows that LLMs generate new explicit knowledge which is not present in the training data, but which can be inductively inferred from existing data-points.

Earlier, I wrote:

Human children do most of their learning originarily. They do not mainly copy the concept of a chair. Rather, they learn to think of chairs largely independently—originarily—and then they learn to hook up that concept with the word “chair”.

That paper IIUC demonstrates the “hook up some already existing mental elements to a designator given a few examples of the designator” part. That’s not the important part.

it is possible for Transformer-based models to achieve GPT2 levels of competence on human-scale training data.

Ok… so it does much worse, you’re saying? What’s the point here?

Automating AGI research

If you automate all the ‘hard work’ parts of the research, ML engineers will be freed up to think more creatively themselves, which will lead to faster iteration over paradigms…

Ok so I didn’t respond to this in our talk, but I will now: I DO agree that you can speed up [the research that is ratcheting up to AGI] like this. I DO NOT agree that it is LIKELY that you speed [the research that is ratcheting up to AGI] up by A LOT (>2x, IDK). I AM NOT saying almost ANYTHING about “how fast OpenAI and other similar guys can do whatever they’re currently doing”. I don’t think that’s very relevant, because I think that stuff is pretty unlikely (<4%) to lead to AGI by 2030. (I will take a second to just say again that I think the probability is obviously >.1%, and that a >.09% chance of killing all humans is an insanely atrocious thing to do, literally the worst thing ever, and everyone should absolutely stop immediately and ban AGI and your children and your children’s children.)

Why not likely? Because

The actual ideas aren’t things that you can just crank out at 10x the rate if you have 10x the spare time. They’re the sort of thing that you usually get 0 of in your life, 1 if you’re lucky, several if you’re an epochal genius. They involve deep context and long struggle. (Probably. Just going off of history of ideas. Some random prompt or o5-big-thinky-gippity-tweakity-150128 could kill everyone. But <4%.)

OpenAI et al. aren’t AFAIK working on that stuff. If you work on not-the-thing 3x faster, so what?

Whence confidence?

I’ll again remind: what we’re talking about here is your stated probability of 60% AGI by 2030. If you said 15% I’d still disagree enough to say something, but I wouldn’t think it’s so anomalous, and wouldn’t necessarily expect there to be much model-sharing to do / value on the margin. But you’re so confident! You think you see something about the current state of AI that tells you with more than a fair coinflip that AGI comes within 5 years! How??

If these failures are more whack-a-mole like, it seems possible to solve them by continuing to play the currently-popular game of trying to train LLMs to perform well on benchmarks.

Ok. So you play whack-a-mole. And you continue to get AI systems that do more and more tasks. And they continue to not be very creative, because they haven’t been, and nothing is making new, much more creative AIs. So they can’t do much creative science. So what’s the point? Maybe it would help if you pick one, or both, of “we don’t need a creative AI to make AGI really fast, just an AI that’s good at a bunch of tasky tasks” or “actually there’s no such thing as creativity or the AIs are getting much more creative” or …. god, I don’t know what you’re saying! Anyway, pick one or the other or both or clarify, and then argue. I think in our talk we touched on which ones you’re putting weight on.… I think you said both basically?

Other points

Now I’ll make some other points:

I wish you would spend some time constructing actually plausible (I mean, as plausible as you can make them) hypotheses for the observations you adduce as evidence for AGI soon. See the “missing update” https://www.lesswrong.com/posts/sTDfraZab47KiRMmT/views-on-when-agi-comes-and-on-strategy-to-reduce:

There is a missing update. We see impressive behavior by LLMs. We rightly update that we’ve invented a surprisingly generally intelligent thing. But we should also update that this behavior surprisingly turns out to not require as much general intelligence as we thought.

The point is that we don’t have the important stuff in our hypothesis space.

Now I hear you saying “ah but if you have a really big NN, then there is a circuit within that size which has the important stuff”. Ok. Well, so? We’re talking about the total Architecture of current AIs, not “an argmax over literally all circuits of size k”. That’s just being silly. So ok, replace “in our hypothesis space” by “in our Architecture” or “in our accessible hypothesis space” or something.

Now I hear you saying “ah but maybe the core algos are in the training data well enough”. Ah. Well yes, that would be a crux, but why on earth do you think this?

Polya and Hadamard and Gromov are the exceptions that prove the rule. Nearly all mathematicians do not write much about the innards of their thinking processes; the discovery process is rather buried, retconned, cleaned up, or simply elided; further, we simply would not be able to do that, at least not without way more work, like a centuries-long intellectual project. Has anyone ever introspected so hard that they discerned the number of seconds it takes for serotonin to be reuptook, or how many hours(??) it takes for new dendrites to grow after learning? No.

Now apply that to the dark matter of intelligence. It’s not written anywhere, and only emanations of emanations are reflected in the data. You don’t see the many many many tiny and small and medium sized failed ideas, false starts, motions pruned away by little internal heuristics, brief combinations of ideas discarded, etc etc etc etc.… It’s not written out. You just get these leaps. The discovery process isn’t trained.

I’ll repeat one more time: Where do you get the confidence that the core algos are basically here already, or that we’re so close that AI labs will think of them in the next couple years?

Let me restate my view again. I think that:

1. PROBABLY, GI requires several algorithmic ideas.

2. A priori, a given piece of silicon does not implement these algorithmic ideas. Like how piles of steel aren’t randomly bridges.

2.1. So, a priori, PROBABLY GI is a rather long way off (hard to tell, because humans given time can come up with the algorithmic ideas, but they can’t do much in a couple years).

3. There’s not a strong body of evidence that we have all / most of the algorithmic ideas.

4. So we should have a fairly uninformed stance—though somewhat informed towards shorter—hard to say how much.

5. An uninformed stance implies smeared out, aka “long”, timelines.

I don’t even know which ones you disagree with, or whether you think this argument is confused / missing something big / etc. Maybe you disagree with 1? Surely you must agree with 2? Surely you must agree with 2.1, for a suitably “a priori” mindset?

I suppose you most disagree with 3? But I want to hear why you think that the evidence points at “actually we do have the algorithmic ideas”. I don’t think your arguments have addressed this?

I… continue to despair at bridging this gap… I don’t understand it… The basic thing is, how does any of this get you to 60% in 5 years???? What do you think you see???

I’ll first respond to some points—though it feels likely fruitless, because I still don’t understand the basic thing you think you see!

This ‘seeing’ frame seems inherently unproductive to me. I’ll try to engage with it, but, like… suppose Alice and Bob are both squinting at an object a long distance away from them, and trying to make it out. Alice says “I think it is a truck”. Bob says “I think it is a grain silo.” Alice turns to Bob, incredulous, and asks “What do you think you see??”

This seems like clearly the wrong question for Alice to ask. Bob has already answered that question. It would seem more productive for Alice to ask for details like what color Bob thinks the silo is, where he would place the bottom and top of the silo relative to other identifiable objects in the scene, etc. (Or Alice could point out where she sees wheels, windows, etc.)

Similarly, I’ve already been talking about what I think I see. I hear the “What do you think you see?” question as incredulity absent of specific content, much like “Whence the confidence?”. It frames things in terms of an intuition that you can simply look out at the world and settle questions by stating the obvious, ie, it’s a frame which seems to make little room for patience and difficult discussions.

I would suggest that naming cruxes would be a more helpful conversational frame.

Still… trying to directly answer the question… what do I think I see? (Please note that the following is very much not framed as an argument; I expect that responding to it as if it is one would be incredibly unproductive. I’m avoiding making ‘arguments’ here because that is what I was doing before, and it is what your “What do you think you see???” seems to express frustration with; so instead I’m just going to try to say what I see...)

In some sense what I think I see is the same thing I’ve seen since I first understood the arguments for a technological singularity: I see a long trend of accelerating progress. I see the nature of exponential curves, where if you try to draw the graph for any very long period of time, you’ll be forced to graph most of it as 0 with a quick shot up to your graph’s maximum at the end. Yes, most apparent exponentials turn out to be sigmoids in the end, but so far, new sigmoids keep happening.
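
As a rough numerical illustration of the point about graphing exponentials (a sketch under assumed numbers, not data from this discussion): the snippet below takes a hypothetical quantity that doubles every year for 40 years and counts how many of the yearly points sit below 1% of the final value. The doubling time, the 40-year window, and the 1% threshold are all illustrative assumptions.

```python
def fraction_near_zero(years: int, threshold: float = 0.01) -> float:
    """Fraction of yearly points below `threshold` times the final value,
    for a hypothetical quantity that doubles every year (an assumption)."""
    values = [2.0 ** t for t in range(years + 1)]
    final = values[-1]
    below = sum(1 for v in values if v < threshold * final)
    return below / len(values)

# 34 of the 41 yearly points fall below 1% of the final value.
print(round(fraction_near_zero(40), 2))  # 0.83
```

On a linear axis, those points would be visually indistinguishable from zero, which is the shape described above. The sketch says nothing about whether any real trend is exponential or a sigmoid.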

What I see is computers that you can talk to like people, where previously there were none. I see that AI, as a field, has moved beyond narrow-AI systems which are only good for whatever specific thing they were built for.

I see a highly significant chunk of human economic and intellectual activity focused on pushing these things even further.

...

Anyway, I expect that this attempt only induces further frustration, similar to if Bob were to answer Alice’s question by further explaining what a silo is. It seems to me like the “seeing” frame assumes a privileged level/mode of description, like I could just explain my view properly, and then you’d know what my view is (like if Bob answered Alice’s question by snapping a photograph of what he sees and showing it to Alice… except this probably wouldn’t satisfy Alice! She’d probably still see the truck instead of the silo in the photo!)

[I’m pressing the submit button so that you can reply if you like. However, I have not even finished reading your latest reply yet. I plan to keep reading and replying piece-by-piece. However, it might be another week before I spend more time replying. I guess I’m just trying to say, don’t interpret me as bidding for you to reply to this fragment by itself.]

I hear the “What do you think you see?” question as incredulity absent of content.

The content is like this: I definitely hear you say things, and they make some amount of sense on their own terms. But when I try to tie them in to the overall question, I get really confused, and don’t know how to fill in the blanks. It feels like you (and several other people who say short timelines) keep saying things with the expectation that I’ll understand the relevance to the question, but I don’t understand it. Quite plausibly this is because I’m missing some stuff that it’s reasonable for you to expect me to know/understand, e.g. because it’s obvious or most people know it or most AI people know it etc. This is what I mean by enthymemes. There’s some connection between the data and arguments you adduce, and “60% in the next 5 years”, which I do not implement in my own beliefs, and which it seems like you perhaps intuitively expect me to implement.

Or, more shortly: I couldn’t pass your ITT against scrutiny from my own questions, and I’m signposting this.

I expect that this attempt only induces further frustration,

No! I appreciate the summary, and it feels easier to approach than the previous things—though of course it has elided our disagreements, as it is a summary—but I think it feels easier to approach because intuitively I expect (though reflectively I expect something to go wrong) that I could ask: “Ok you made 3 nice high level claims ABC. Now double click on just B, going down ONE level of detail. Ok you did B1,B2,B3. Now double click on just B3, going down ONE level. Ok great, B3.1 is a Crux!”

Whereas with a lot of these conversations, I feel the disagreement happened somewhere in the background prior to the conversation, and now we are stuck in some weeds that have presumed some crux in the background. Or something like this.

… Ah I have now seen the end of your message. I’ll rest for the moment, except to mention that if I were continuing as the main thread, I’d probably want to double click on

has moved beyond narrow-AI systems which are only good for whatever specific thing they were built for.

And ask about what the implication is. I take it that the implication is that we are somewhere right near/on the corner of the hockeystick; and that the hockeystick has something to do with generality / general intelligence / being able to do every or a wide variety of tasks / something. But I’d want to understand carefully how you’re thinking of the connection between “current AIs do a huge variety of tasks, many of them quite well” and something about [the sort of algos that will kill us], or specifically, “general intelligence”, or whatever other concept you’d want to put in that slot.

Because, like, I somewhat deny the connection!! Not super strongly, but somewhat!

Another question: I think (plz correct if wrong) that you have actually a significant event in the next five years? In other words, your yearly pAGI decreases by a lot after 5 years if no AGI yet. If so, we’d want to see how your reasoning leads to “AGI in 5 years” + “if not in 5 years, then I’m wrong about something” in a discrete way, rather than something like “we keep drawing 10% balls from the urn every year”.

Timeline Split?

There is a missing update. We see impressive behavior by LLMs. We rightly update that we’ve invented a surprisingly generally intelligent thing. But we should also update that this behavior surprisingly turns out to not require as much general intelligence as we thought.

Yeah, I definitely agree with something like this. In 2016, I (privately) estimated a 50% probability (because I couldn’t decide beyond a coinflip) that Deep Learning could be the right paradigm for AGI, in which case I expected language-based AGI first, and furthermore expected such AGI to be much more crystallized intelligence and much less fluid intelligence.

In hindsight, my probability on those things was actually much less than 50%, because I felt surprised and confused when they happened. I feel like LLMs are in many ways AI as predicted by Hollywood. I had intuitively expected that this level of conversational fluency would come with much more “actual understanding” amongst other things.

This is one reason why my timelines are split into roughly two scenarios, the shorter and the longer. The shorter timeline is like: there’s no missing update. Things just take time; you don’t get the perfected technology right out of the gate. What we’re seeing is still much like the first airplanes or the first automobiles etc. The intuitive prior, which expected all sorts of other things to come along with this level of conversational fluency, was correct; the mistake was only that you visualized the future coming all at once.

However, that’s not the only reason for the split, and maybe not the main reason.

Another question: I think (plz correct if wrong) that you have actually a significant event in the next five years? In other words, your yearly pAGI decreases by a lot after 5 years if no AGI yet. If so, we’d want to see how your reasoning leads to “AGI in 5 years” + “if not in 5 years, then I’m wrong about something” in a discrete way, rather than something like “we keep drawing 10% balls from the urn every year”.

Another reason for the split is that current AI capabilities progress is greatly accelerated by an exponentially increasing investment in computing clusters. This trend cannot continue beyond 2030 without something quite extreme happening (and may struggle to continue after 2028), so basically, if ASI doesn’t come < 2030 then P(ASI)-per-year plummets. See Vladimir Nesov’s analysis for more details.

(I will persist in using “AGI” to describe the merely-quite-general AI of today, and use “ASI” for the really dangerous thing that can do almost anything better than humans can, unless you’d prefer to coordinate on some other terminology.)

Holding fixed the question of the viability of the current paradigm, this means that whatever curve you’d use to predict p(ASI)-per-year gets really stretched out after 2030 (and somewhat stretched out between 2028 and 2030). If one thinks the current paradigm somewhat viable, this means a big question for P(ASI)<2030 is how long the hype train can keep going. If investors keep pouring in money to 10x the next training run, we keep getting the accelerated progress we’re seeing today; if investors get cold feet about AI tomorrow, then accelerated progress halts, and AI companies have to survive on income from their current products.
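
The contrast between “drawing 10% balls from the urn every year” and a per-year probability that plummets after 2030 can be made concrete with a small sketch. Both hazard schedules below are hypothetical numbers chosen only for illustration; they are not either participant’s stated estimates.

```python
def cumulative(hazards):
    """Turn per-year conditional probabilities (hazards) into
    cumulative P(event has happened by the end of each year)."""
    out, survive = [], 1.0
    for h in hazards:
        survive *= 1.0 - h
        out.append(1.0 - survive)
    return out

years = list(range(2026, 2041))
# Hypothetical "urn" model: the same 10% chance every year.
urn = [0.10] * len(years)
# Hypothetical "split" model: higher hazard while compute scaling lasts, then low.
split = [0.15 if y < 2030 else 0.02 for y in years]

for name, hazards in [("urn", urn), ("split", split)]:
    cum = dict(zip(years, cumulative(hazards)))
    print(name, round(cum[2029], 3), round(cum[2040], 3))
```

Under these made-up numbers, the split schedule puts more probability before 2030 than the urn schedule, but less total probability by 2040. That is the qualitative shape of a curve that gets “stretched out” once the accelerating investment stops.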

Of course, we don’t want to hold fixed the question of the viability of the current paradigm; the deflation of the curve past 2030 is further compounded by the evidence. Of course it’s possible that continued accelerated progress would have built ASI in 2031, so that if the hype train ends in 2029, we’re still just one 10x-training-run away from doom. However, if we’re not seeing “crazy stuff” by that point, that’s at least some evidence against the deep learning paradigm (and the more I expect ASI < 2030, the more evidence).

Line Go Up?

Quite plausibly this is because I’m missing some stuff that it’s reasonable for you to expect me to know/understand, e.g. because it’s obvious or most people know it or most AI people know it etc. This is what I mean by enthymemes. There’s some connection between the data and arguments you adduce, and 60% in the next 5 years, which I do not implement in my own beliefs, and which it seems like you perhaps intuitively expect me to implement.

You’re wrong about one thing there, namely “expect me to understand” / “intuitively expect me to implement”. I’m trying to articulate my view as best I can, but I don’t perceive anything I’ve said as something-which-should-be-convincing. I think this is a tricky issue and I’m not sure whether I’m thinking about it correctly.

I do have the intuitive feeling that you’re downplaying the plausibility of a certain cluster of views, EG, when you say:

I’ll again remind: what we’re talking about here is your stated probability of 60% AGI by 2030. If you said 15% I’d still disagree enough to say something, but I wouldn’t think it’s so anomalous, and wouldn’t necessarily expect there to be much model-sharing to do / value on the margin. But you’re so confident! You think you see something about the current state of AI that tells you with more than a fair coinflip that AGI comes within 5 years! How??

My intuitive response to this is to imagine that you and I are watching the water level rise against a dam. It has been rising exponentially for a while, but previously, it was under 1 inch high. Now it’s several feet high, and I’m expressing concern about the dam overflowing soon, and you’re like “it’s always been exponential, how could you know it’s overflowing soon” and I’m like “but it’s pretty high now” and you’re like “yeah but the top of the dam is REALLY high” and I’m like “it doesn’t look so tall to me” and you’re like “the probability that it overflows in the next five years has to be low, because us happening to find ourselves right around the time when the dam is about to break would be a big coincidence; there’s no specific body of evidence to point out the water doing anything it hasn’t before” …

The analogy isn’t perfect, mainly because the water level (and the right notion of water level) is also contested here. I tried to come up with a better analogy, but I didn’t succeed.
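
To make the arithmetic behind the dam picture explicit (a toy calculation with invented numbers, and it assumes the exponential trend simply continues, which is itself part of what is contested): if the level doubles at a fixed interval, the time until overflow depends only on the logarithm of the ratio between the dam height and the current level. The function name and all heights below are hypothetical.

```python
import math

def doublings_until_overflow(level: float, dam_height: float) -> float:
    """Number of doubling periods until an exponentially rising level
    reaches dam_height. Assumes the doubling trend continues unchanged."""
    return math.log2(dam_height / level)

# Hypothetical heights, in feet: the same water level, two guesses at the dam.
print(doublings_until_overflow(3.0, 30.0))
print(doublings_until_overflow(3.0, 300.0))
```

In this toy setup, a tenfold disagreement about the dam’s height changes the answer by about 3.3 doubling periods. The sketch does not address whether the trend holds or what the right notion of water level is.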

has moved beyond narrow-AI systems which are only good for whatever specific thing they were built for.

[...] I take it that the implication is that we are somewhere right near/on the corner of the hockeystick; and that the hockeystick has something to do with generality / general intelligence / being able to do every or a wide variety of tasks / something.

Stepping out of the analogy, what I’m saying is that there’s a naive line-go-up perspective which I don’t think is obviously persuasive but I also don’t think is obviously wrong (ie less-than-1%-probability). What do you think when you look at graphs like this? (source)

When I look at this, I have a lot of questions, EG, how do you estimate where to put “automated AI researcher/engineer”? How do you justify the labels for preschooler and elementary schooler?

However, there’s also a sense in which the graph does seem roughly correct. GPT-4 is missing some things that a smart highschooler has, sure, but the comparison does make a sort of sense. Thus, even though I don’t know how to place the labels for the extrapolated part of the graph (undergrad, phd student, postdoc...) I do have some intuitive agreement with the sort of projection being made here. It feels sensible, roughly, at least in an outside-view-sorta-way. Looking at the graph, I intuitively expect AI in 2027 to bear the same resemblance to a smart phd student that GPT-4 bore to a smart highschooler.

At this point you could come up with many objections; the one I have in mind is the fact that we got from “preschooler” to “smart highschooler” mainly by training to imitate human data, and there’s a lot less human data available as we continue to climb that particular ladder. If the approach is to learn from humans, the ceiling would seem to be human performance. I’ve already given my reply to that particular objection, but as a reminder, I think the ceiling for the current approach looks more like things-we-can-test, and we can test performance far above human level.

But I’d want to understand carefully how you’re thinking of the connection between “current AIs do a huge variety of tasks, many of them quite well” and something about [the sort of algos that will kill us], or specifically, “general intelligence”, or whatever other concept you’d want to put in that slot.

The most relevant thing I can think to say at the moment is that it seems related to my view that LLMs are mostly crystallized intelligence and a little bit fluid, vs your view that they’re 100% crystallized and 0% fluid. My model of you expects the LLMs of 2027 to fall far short of the smart phd student because approximately everything interesting that the smart phd student does involves fluid intelligence. If LLMs of today have more-or-less memorized all the textbook answers, LLMs of 2027 might have more-or-less memorized all the research papers, but they still won’t be able to write a new one. Something like that?

Whereas I more expect the LLMs of 2027 to be doing many of those things that make a smart phd student interesting, because they can also pick up on some of those relevant habits of thought involved in creativity.

You wrote:

Another point which came up in the live debate was the connect-the-dots paper by Johannes Treutlein et al, which shows that LLMs generate new explicit knowledge which is not present in the training data, but which can be inductively inferred from existing data-points.

Earlier, I wrote:

Human children do most of their learning originarily. They do not mainly copy the concept of a chair. Rather, they learn to think of chairs largely independently—originarily—and then they learn to hook up that concept with the word “chair”.

That paper IIUC demonstrates the “hook up some already existing mental elements to a designator given a few examples of the designator” part. That’s not the important part.

This feels like god-of-the-gaps arguing to me. Maybe I’m overly sensitive to this because I feel like people are moving goalposts with respect to the word “AGI”. I get that goalposts need to be moved sometimes. But to me, there are lots of lines one could draw around “creativity” or “fluid intelligence” or “originariness” or similar things (all attempts to differentiate between current AI and human intelligence, or current AI and dangerous AI, or such). By trying to draw such lines, you run the risk of cherry-picking, choosing the things we merely haven’t accomplished yet & thinking they’re fundamental.

I guess this is the fundamental challenge of the “missing update” in the essay you referenced earlier. To be properly surprised by and interested in the differences between LLMs and the AI we naively expected, you need to get interested in what they can’t do.

When I get interested in what they can’t do, though, I think of ways to do those things which build from LLMs.

You say of your own attempt to perform the missing update:

The point is that we don’t have the important stuff in our hypothesis space.

Now I hear you saying “ah but if you have a really big NN, then there is a circuit within that size which has the important stuff”. Ok. Well, so? We’re talking about the total Architecture of current AIs, not “an argmax over literally all circuits of size k”. That’s just being silly. So ok, replace “in our hypothesis space” by “in our Architecture” or “in our accessible hypothesis space” or something.

Now I hear you saying “ah but maybe the core algos are in the training data well enough”. Ah. Well yes, that would be a crux, but why on earth do you think this?

Polya and Hadamard and Gromov are the exceptions that prove the rule. Nearly all mathematicians do not write much about the innards of their thinking processes; the discovery process is rather buried, retconned, cleaned up, or simply elided; further, we simply would not be able to do that, at least not without way more work, like a centuries-long intellectual project. Has anyone ever introspected so hard that they discerned the number of seconds it takes for serotonin to be reuptook, or how many hours(??) it takes for new dendrites to grow after learning? No.

Now apply that to the dark matter of intelligence. It’s not written anywhere, and only emanations of emanations are reflected in the data. You don’t see the many many many tiny and small and medium sized failed ideas, false starts, motions pruned away by little internal heuristics, brief combinations of ideas discarded, etc etc etc etc.… It’s not written out. You just get these leaps. The discovery process isn’t trained.

You’re representing two alternative responses from me, but of course I have to argue that they’re adequately represented both in the accessible hypothesis space and in the training data. They have to be available for selection, and selected for. Although we can trade off between how easy the hypothesis is to represent vs how well-represented it is in the training data.

(Flagging double crux for easy reference: “the important stuff” is adequately represented in near-term-plausible hypothesis spaces and near-term-plausible training data.)

I also appreciate your point about how inaccessible the internal workings of the mind are from just a person’s writing. GPTs are not magical human-simulators that would predict authors by running brain-simulation if we just scaled them up a few orders of magnitude more.

Still, though, it seems like you’re postulating this big thing that’s hard to figure out, and I don’t know where that’s coming from. My impression is that LLMs are a very useful tool, when it comes to tackling the dark matter of intelligence. It wouldn’t necessarily have to be the case that

“the many many many tiny and small and medium sized failed ideas, false starts, motions pruned away by little internal heuristics, brief combinations of ideas discarded, etc etc etc etc....”

are well-represented by LLMs. It could be that the deep structure of the mind, the “mental language” in which concepts, heuristics, reflexes, etc are expressed, is so alien to LLMs that it’ll take decades to conquer.

It’s not what I expect.

Instead, it seems to me like these things can be conquered with “more of the same”. My version of the missing update includes an update towards incremental progress in AI. I’m more skeptical that big, deep, once-a-century insights are needed to make progress in AI. AI has not been what I expected, and specifically in the direction of just-scale-up being how a lot of progress happens.

Responding to some of the other points from the same section as the missing-update argument:

LLMs have fixed, partial concepts with fixed, partial understanding. An LLM’s concepts are like human concepts in that they can be combined in new ways and used to make new deductions, in some scope. They are unlike human concepts in that they won’t grow or be reforged to fit new contexts. So for example there will be some boundary beyond which a trained LLM will not recognize or be able to use a new analogy; and this boundary is well within what humans can do.

It is of course true that once training is over, the capacity of an LLM to learn is quite limited. It can do some impressive in-context learning, but this is of course going to be more limited than what gradient descent can learn. In this sense, LLMs are 100% crystallized intelligence once trained.

However, in-context learning will continue to get better, context windows will keep getting longer, and chain-of-thought reasoning will continue to be tuned to do increasingly significant conceptual work.

Imagine LLMs being trained to perform better and better on theorem-proving. I suppose you imagine the LLM accomplishing something similar to just memorizing how to prove more and more theorems. It can capture some class of statistical regularities in the theorems, sure, but beyond that, it gets better by memorizing rather than improving its understanding.

In contrast, I am imagining that getting better at theorem-proving involves a lot of improvement to its chain-of-thought math tactics. At some point, to get better it will need to be inventing new abstractions in its chain-of-thought, on the fly, to improve its understanding of the specific problem at hand.

An LLM’s concepts are mostly “in the data”. This is pretty vague, but I still think it. A number of people who think that LLMs are basically already AGI have seemed to agree with some version of this, in that when I describe something LLMs can’t do, they say “well, it wasn’t in the data”. Though maybe I misunderstand them.

I think it won’t surprise you that I agree with some version of this. It feels like modern LLMs don’t extrapolate very well. However, it also seems plausible to me that their ability to extrapolate will continue to get better and better as sizes increase and as architectural improvements are made.

When an LLM is trained more, it gains more partial concepts.

However, it gains more partial concepts with poor sample efficiency; it mostly only gains what’s in the data.

In particular, even if the LLM were being continually trained (in a way that’s similar to how LLMs are already trained, with similar architecture), it still wouldn’t do the thing humans do with quickly picking up new analogies, quickly creating new concepts, and generally reforging concepts.

Why do you believe this, and what sorts of concrete predictions would you make on this basis? EG, would it be possible to make an analogies benchmark (perhaps similar to ARC-AGI, perhaps not) reflecting this intuition?

Seems relevant to the BabyLM part of the discussion:

it is possible for Transformer-based models to achieve GPT2 levels of competence on human-scale training data.

Ok… so it does much worse, you’re saying? What’s the point here?

My earlier perspective, which asserted “LLMs are fundamentally less data-efficient than humans, because the representational capabilities of Transformers aren’t adequate for human concepts, so LLMs have to memorize many cases where humans can use one generalization” would have predicted that it is not possible to achieve GPT2 levels of linguistic competence on so little data.

Given the budgets involved, I think it is not at all surprising that only a GPT2 level of competence was reached. It therefore becomes plausible that a scaled-up effort of the same sort could reach GPT4 levels or higher with human-scale data.

The point being: it seems to me like LLMs can have similar data-efficiency to humans if effort is put in that direction. The reason we are seeing such a drastic difference now is due more to where the low-hanging fruit lies, rather than fundamental limitations of LLMs.

I don’t take this view to the extreme; for example, it is obvious that a Transformer many times the size of the human brain would still be unable to match parentheses beyond some depth (whereas humans can keep going, with effort). The claim is closer to Transformers matching the pattern-recognition capabilities of System 1, and this being enough to build up broader capabilities (eg by building some sort of reasoning on top).
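
A toy analogy for the parenthesis-matching remark (this is not a model of a Transformer; the depth cap is a hypothetical stand-in for any fixed computational budget): a checker whose nesting counter can only go up to some fixed bound handles shallow inputs but has to give up past that depth, whereas an unbounded counter keeps going.

```python
from typing import Optional

def balanced(s: str, max_depth: Optional[int] = None) -> Optional[bool]:
    """Check parenthesis balance. With max_depth set, return None when nesting
    exceeds the cap (a hypothetical fixed capacity); otherwise True or False."""
    depth = 0
    for ch in s:
        if ch == "(":
            depth += 1
            if max_depth is not None and depth > max_depth:
                return None  # capacity exceeded: cannot decide
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False  # a close with no matching open
    return depth == 0

deep = "(" * 50 + ")" * 50
print(balanced(deep))                # True: unbounded counter
print(balanced(deep, max_depth=10))  # None: capped counter gives up
```

However large the cap is made, some input exceeds it; the claim in the text is that this kind of limit can coexist with strong pattern-recognition on everything below the cap.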

(As a reminder, the above is still a discussion of the probable double crux identified earlier.)

Ok so I didn’t respond to this in our talk, but I will now: I DO agree that you can speed up [the research that is ratcheting up to AGI] like this. I DO NOT agree that it is LIKELY that you speed [the research that is ratcheting up to AGI] up by A LOT (>2x, IDK). I AM NOT saying almost ANYTHING about “how fast OpenAI and other similar guys can do whatever they’re currently doing”. I don’t think that’s very relevant, because I think that stuff is pretty unlikely (<4%) to lead to AGI by 2030. (I will take a second to just say again that I think the probability is obviously >.1%, and that a >.09% chance of killing all humans is an insanely atrocious thing to do, literally the worst thing ever, and everyone should absolutely stop immediately and ban AGI and your children and your children’s children.)

Why not likely? Because

The actual ideas aren’t things that you can just crank out at 10x the rate if you have 10x the spare time. They’re the sort of thing that you usually get 0 of in your life, 1 if you’re lucky, several if you’re an epochal genius. They involve deep context and long struggle. (Probably. Just going off of history of ideas. Some random prompt or o5-big-thinky-gippity-tweakity-150128 could kill everyone. But <4%.)

OpenAI et al. aren’t AFAIK working on that stuff. If you work on not-the-thing 3x faster, so what?

What’s the reference class argument here? Different fields of intellectual labor show different distributions of large vs small insights. For example, in my experience, the typical algorithm is something you can sit down and speed up by putting in the work; finding a few things to improve can quickly add up to a 10x or 100x improvement. Rocket engines are presumably harder to improve, but (I imagine) are the sort of thing which a person might improve several times in their career without being an epochal genius.

One obvious factor that comes to mind is the amount of optimization effort put in so far; it is hard to improve the efficiency of a library which has already been optimized thoroughly. I don’t think LLMs are running out of improvements in this sense.

Another factor is improvement of related technologies; it is easy to keep improving a digital camera if improvements keep being made in the underlying electronic components. We both agree that hardware improvements alone don’t seem like enough to get to ASI within a decade.

A third factor is the feedback available in the domain. AI safety seems relatively difficult to make progress on due to the difficulty of evaluating ideas. AI capabilities seem relatively easy to advance, in contrast, since you can just try things and see if they work.

As a final point concerning reference class, you’re obviously ruling out existing AI progress as the reference class for ASI progress. On what basis? What makes you so confident that it has to be an entirely different sort of thing from current AI progress? This seems maybe hard to make progress on because it is apparently equivalent to the whole subject of our debate. You can say that on priors, there’s no reason for these two things to be related, so it’s like asking why-so-confident that as linguistics advances it will not solve any longstanding problems in physics. I can say it should become a lot more plausible all of a sudden if linguists start getting excited about making progress on physics and trying hard to do so.

Let me restate my view again. I think that:

1. PROBABLY, GI requires several algorithmic ideas.

2. A priori, a given piece of silicon does not implement these algorithmic ideas. Like how piles of steel aren’t randomly bridges.

2.1. So, a priori, PROBABLY GI is a rather long way off—hard to tell because humans given time can come up with the algorithmic ideas, but they can’t do much in a couple years.

3. There’s not a strong body of evidence that we have all / most of the algorithmic ideas.

4. So we should have a fairly uninformed stance—though somewhat informed towards shorter—hard to say how much.

5. An uninformed stance implies smeared out, aka “long”, timelines.

I don’t even know which ones you disagree with, or whether you think this argument is confused / missing something big / etc. Maybe you disagree with 1? Surely you must agree with 2? Surely you must agree with 2.1, for a suitably “a priori” mindset?

I suppose you most disagree with 3? But I want to hear why you think that the evidence points at “actually we do have the algorithmic ideas”. I don’t think your arguments have addressed this?

(Substituting ASI where you write GI, which is not a totally safe translation, but probably does the job OK)

1: Agree, but I expect we have significantly different estimates of the difficulty of those ideas.

2: Agree, but of course in an environment where agents are trying to build bridges, the probability goes up a lot.

2.1: This doesn’t appear to follow from the previous two steps. EG, is a similar argument supposed to establish that, a priori, bridges are a long way off? This seems like a very loose and unreliable form of argument, generally speaking.

3: I can talk to my computer like I would a human. Possibly, we both agree that this would constitute strong evidence, if not for the “missing update”? So the main thing to discuss further to evaluate this step is the “missing update”?

Some Responses

I’m going to read your responses and make some isolated comments; then I’ll go back and try to respond to the main threads.

Memers gonna meme

First, a meme, modified from https://x.com/AISafetyMemes/status/1931415798356013169 :

“Right paradigm?” Wrong question.

Next: You write:

In 2016, I (privately) estimated a 50% probability (because I couldn’t decide beyond a coinflip) that Deep Learning could be the right paradigm for AGI

I want to just call out (not to presume that you’d disagree) that “the right paradigm for AGI” is quite vague and doesn’t necessarily make much sense—and could lead to bad inferences. As a comparison, is universal / Turing computation “the right paradigm for AGI”? I mean, if we make AGI, it will be Turing computable. So in that sense yes. And indeed, saying “AGI will be a computer program” is in fact important, contentful, and constitutes progress, IF you didn’t previously understand computation and universal computation.

But from another perspective, we haven’t really said much at all about AGI! The space of computer programs is big. Similarly, maybe AGI will use a lot of DL. Maybe every component of the first AGI will use DL. Maybe all of the high-optimization searches will be carried out with DL. NONE of that implies that once you have understood DL, you have understood AGI or how to make it! There are many other parameters not yet determined just by saying “it’s DL”—just like many (almost all!) parameters of a computer program have not been determined just by making the leap from not understanding universal computation to yes understanding it.

(A metaphor which only sort of applies but maybe it is helpful here:) It is comparable to correctly drawing a cordon closer to the mouth of a cave. From the standpoint outside the cave, it feels as though you’ve got the question surrounded, cornered. But, while it is some sort of progress, in fact you have not entered the cave. You aren’t familiar with the depth and structure of the cave just because you have it surrounded from some standpoint.

Quoting from https://www.lesswrong.com/posts/sTDfraZab47KiRMmT/views-on-when-agi-comes-and-on-strategy-to-reduce#_We_just_need_X__intuitions :

Just because an idea is, at a high level, some kind of X, doesn’t mean the idea is anything like the fully-fledged, generally applicable version of X that one imagines when describing X.

For example, suppose that X is “self-play”. [...] The self-play that evolution uses (and the self-play that human children use) is much richer, containing more structural ideas, than the idea of having an agent play a game against a copy of itself.

The timescale characters of bioevolutionary design vs. DL research

Evolution applies equal pressure to the code for the generating algorithms for all single-lifetime timescales of cognitive operations; DL research on the other hand applies most of its pressure to the code for the generating algorithms for cognitive operations that are very short.

Mutatis mutandis for legibility and stereotypedness. Legibility means operationalizedness or explicitness—how ready and easy it is to relate to the material involved. Stereotypedness is how similar different instances of the class of tasks are to each other.

Suppose there is a tweak to genetic code that will subtly tweak brain algorithms in a way which, when played out over 20 years (probably being expressed quadrillions of times), builds a mind that is better at promoting IGF. This tweak will be selected for. It doesn’t matter if the tweak is about mental operations that take .5 seconds, 5 minutes, or 5 years. Evolution gets whole-organism feedback (roughly speaking).

On the other hand, a single task execution in a training run for a contemporary AI system is very fast + short + not remotely as complex as a biotic organism’s multi-year life. And that’s where most of the optimization pressure is—a big pile of single task executions.

Zooming out to the scale of training runs, humans run little training runs in order to test big training runs, and only run big training runs sparingly. This focuses [feedback from training runs into human decisions about algorithms] onto issues that get noticed in small training runs.

Of course, in a sense this is a huge advantage for DL AGI research carried out by the community of omnicideers, over evolution. In principle it means that they can go much faster than evolution, by much more quickly getting feedback about a big sector of relevant algorithms. (And of course, indeed I expect humans to be able to make AGI about a million times faster than bioevolution.)

However, it also means that we should expect to see a weird misbalance in capabilities of systems produced by human AI research, compared to bioevolved minds. You should expect much more “Lazy Orchardists”—guys who pick mostly only the low-hanging fruit. And I think this is what we currently observe.

AGI LP25

(I will persist in using “AGI” to describe the merely-quite-general AI of today, and use “ASI” for the really dangerous thing that can do almost anything better than humans can, unless you’d prefer to coordinate on some other terminology.)

I think this is very very stupid. Half of what we’re arguing about is whether current systems are remotely generally intelligent. I’d rather use the literal meaning of words. “AGI” I believe stands for “artificial general intelligence”. I think the only argument I could be sympathetic to purely on lexical merits would be that the “G” is redundant, and in fact we should say “AI” to mean what you call “ASI or soon to be ASI”. If you want a term for what people apparently call AI or AGI, meaning current systems, how about LP25, meaning “learning (computer) programs in (20)25”.

“come on people, it’s [Current Paradigm] and we still don’t have AGI??”

You wrote:

Holding fixed the question of the viability of the current paradigm, this means that whatever curve you’d use to predict p(ASI)-per-year gets really stretched out after 2030 (and somewhat stretched out between 2028 and 2030).

[...]

Of course, we don’t want to hold fixed the question of the viability of the current paradigm; the deflation of the curve past 2030 is further compounded by the evidence. Of course it’s possible that continued accelerated progress would have built ASI in 2031, so that if the hype train ends in 2029, we’re still just one 10x-training-run away from doom. However, if we’re not seeing “crazy stuff” by that point, that’s at least some evidence against the deep learning paradigm (and the more I expect ASI < 2030, the more evidence).

What?? Can you ELI8 this? I might be having reading comprehension problems. Why do you have such a concentration around “the ONLY thing we need is to scale up compute ONE or ONE POINT FIVE more OOMs” (or is it two? 2.5?), rather than “we need maybe a tiny bit more algo tweaking, or a little bit, or a bit, or somewhat, or several somewhats, or a good chunk, or a significant amount, or a big chunk, or...”? Why no smear? Why is there an update like “...Oh huh, we didn’t literally already have the thing with LP25, and just needed to 30x?? Ok then nevermind, it could be decades lol”? This “paradigm” thing may be striking again…

I guess “paradigm” basically means “background assumptions”? But what are those assumptions when we say “DL paradigm”?

Rapid disemhorsepowerment

My intuitive response to this is to imagine that you and I are watching the water level rise against a dam.

[...]

The analogy isn’t perfect, mainly because the water level (and the right notion of water level) is also contested here. I tried to come up with a better analogy, but I didn’t succeed.

Let me offer an analogy from my perspective. It’s like if you tell me that the number of horses that pull a carriage has been doubling from 1 to 2 to 4 to 8. And from this we can learn that soon there will be a horse-drawn carriage that can pull a skyscraper on a trailer.

In a sense you’re right. We did make more and more powerful vehicles. Just, not with horses. We had to come up with several more technologies.

So what I’m saying here is that the space of ideas—which includes the design space for vehicles in general, and includes the design space for learning programs—is really big and high-dimensional. Each new idea is another dimension. You start off at 0 or near-0 on most dimensions: you don’t know the idea (but maybe you sort of slightly interact with / participate in / obliquely or inexplicitly make use of some fragment of the idea).

There’s continuity in technology—but I think a lot of that is coming from the fact that paradigms keep sorta running out, and you have to shift to the next one in order to make the next advance—and there’s lots of potential ideas, so indeed you can successfully keep doing this.

So, some output, e.g. a technological capability such as vehicle speed/power or such as “how many tasks my computer program does and how well”, can keep going up. But that does NOT especially say much about any one fixed set of ideas. If you expect that one set of ideas will do the thing, I just kinda expect you’re wrong—I expect the thing can be done, I expect the current ideas to be somewhat relevant, I expect progress to continue, BUT I expect there to be progress coming from / basically REQUIRING weird new ideas/directions/dimensions. UNLESS you have some solid reason for thinking the current ideas can do the thing. E.g. you can do the engineering and math of bridges and can show me that the central challenge of bearing loads is actually meaningfully predictably addressed; or e.g. you’ve already done the thing.

Miscellaneous responses

However, there’s also a sense in which the graph does seem roughly correct. GPT-4 is missing some things that a smart highschooler has, sure, but the comparison does make a sort of sense.

No, I think this is utterly wrong. I think the graph holds on some dimensions and fails to hold on other dimensions, and the latter are important, both substantively and as indicators of underlying capabilities.