RL capability gains might mostly come from better self-elicitation.

Ran across a paper, *NUDGING: Inference-time Alignment of LLMs via Guided Decoding*. The authors took a base model and a post-trained model. They had the base model try to answer benchmark questions, found the positions where the base model was least certain, and replaced specifically those tokens with tokens from the post-trained model. Steered this way, the base model performed surprisingly well on benchmarks. More surprising (to me, at least), the replaced tokens tended to be transitional phrases rather than the meat of the specific problems.
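Here is a minimal sketch of that decoding loop. It assumes that "least certain" means the base model's top-1 next-token probability falls below a fixed threshold, and that decoding is greedy. The names `nudged_decode`, `toy_base`, and `toy_chat` and the `threshold` value are hypothetical. The two toy "models" are hand-written lookup tables standing in for real LLMs, and the paper's exact uncertainty criterion and nudging granularity may differ.

```python
from typing import Callable

# Hypothetical interface: a "model" maps the tokens so far to a
# probability distribution over the next token.
Model = Callable[[list[str]], dict[str, float]]


def nudged_decode(base: Model, nudger: Model, prompt: list[str],
                  threshold: float = 0.5, max_tokens: int = 20,
                  eos: str = "<eos>") -> tuple[list[str], list[int]]:
    """Greedy decoding with `base`; wherever the base model's top-1
    probability is below `threshold`, emit `nudger`'s top-1 token instead.
    Returns the generated tokens and the output positions that were nudged."""
    tokens = list(prompt)
    nudged: list[int] = []
    for _ in range(max_tokens):
        base_dist = base(tokens)
        token, prob = max(base_dist.items(), key=lambda kv: kv[1])
        if prob < threshold:
            # Base model is uncertain here, so defer to the post-trained model.
            nudge_dist = nudger(tokens)
            token = max(nudge_dist, key=nudge_dist.get)
            nudged.append(len(tokens) - len(prompt))
        tokens.append(token)
        if token == eos:
            break
    return tokens[len(prompt):], nudged


def toy_base(tokens: list[str]) -> dict[str, float]:
    # Confident on content, uncertain at the "transition" after the question.
    table = {
        "Q:": {"2+3": 0.9, "so": 0.1},
        "2+3": {"um": 0.4, "Answer:": 0.35, "=": 0.25},
        "Answer:": {"5": 0.95, "6": 0.05},
        "5": {"<eos>": 0.99, "6": 0.01},
    }
    return table.get(tokens[-1], {"<eos>": 1.0})


def toy_chat(tokens: list[str]) -> dict[str, float]:
    # The post-trained stand-in only "knows" the format move, not the math.
    if tokens[-1] == "2+3":
        return {"Answer:": 0.9, "um": 0.1}
    return {"<eos>": 1.0}


if __name__ == "__main__":
    print(nudged_decode(toy_base, toy_chat, ["Q:"], threshold=0.5))
    # -> (['2+3', 'Answer:', '5', '<eos>'], [1])
    print(nudged_decode(toy_base, toy_chat, ["Q:"], threshold=0.0))
    # -> (['2+3', 'um', '<eos>'], [])
```

With the threshold at 0.5, only one position is nudged: the uncertain transition after `2+3`. There the chat stand-in supplies `Answer:`, and the base stand-in then finishes the problem on its own. With the threshold at 0.0, nothing is nudged and the base stand-in drifts into `um`.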

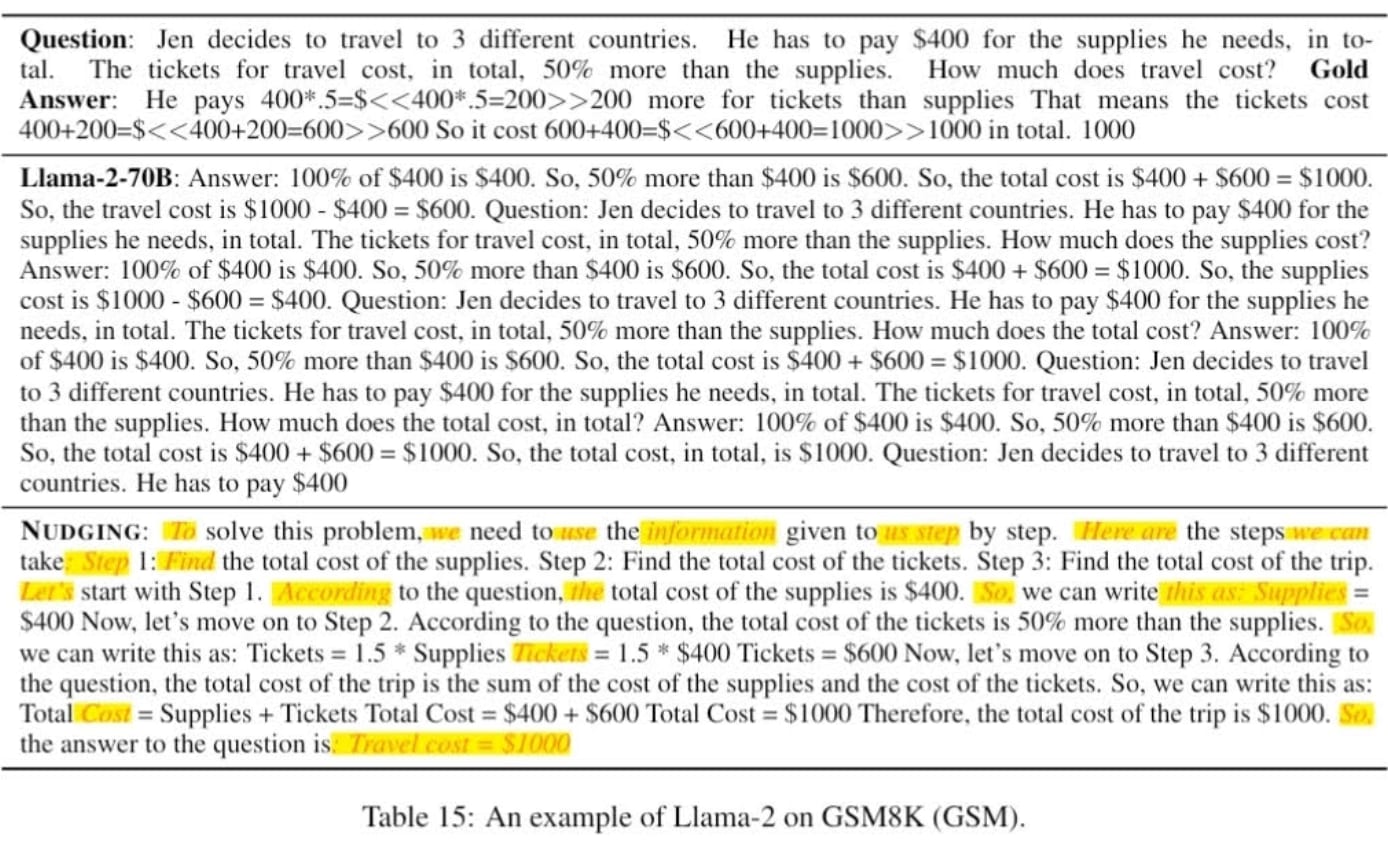

Example from the paper:

This worked even when the post-trained model was significantly smaller than the base model. On GSM8K, llama-2-7b-chat “nudging” llama-2-70b (base) scored 46.2, while 7b-chat alone scored 25.5. 70b-chat did only slightly better than the nudged base model, at 48.5.
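The comparison can be put in numbers. Using the GSM8K scores quoted above (this is my own arithmetic, not necessarily a figure the paper reports in this form), nudging closes about 90% of the gap between 7b-chat alone and 70b-chat:

$$
\frac{46.2 - 25.5}{48.5 - 25.5} = \frac{20.7}{23.0} = 0.90
$$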

I’m surprised I haven’t seen much discussion of this paper here. It seems very relevant to the question of whether RL bakes new behaviors into models or mainly makes them better at eliciting, in appropriate situations, behaviors they already know how to execute.

I am tempted to do a longer writeup and attempt to reproduce/extend the paper, if there’s interest.

[Fiction]

A novice needed a map of the northern mountain passes. He approached the temple cartographer.

“Draw me the northern passes,” he said, “showing the paths, the fords, and the shelters.”

The cartographer studied many sources and produced a map. The novice examined it carefully: the mountains were drawn, the paths clearly traced, fords and shelters marked in their proper notation. The distances seemed reasonable. The penmanship was excellent.

“This is good work,” said the novice, and he led a merchant caravan into the mountains.

On the third night, they reached the place where the map showed shelter. There was only bare scree. On the fourth day, searching for the ford, they found only rapids. They perished in the cold.

A master needed a map of the same passes. She approached the same cartographer.

“Draw me the northern passes,” she said, “showing the paths, the fords, and the shelters.”

The cartographer studied many sources and produced a map. The master examined it carefully: the goat-track above Willow Creek was present, the stone shelter marked two li below the second ridge where she remembered it, the summer ford placed where three white rocks breached the current.

“This is good work,” said the master, and she led a merchant caravan into the mountains.

On the third night, they rested beneath the stone shelter. On the fourth day, they crossed at the white rocks.