Sometimes people think that it will take a while for AI to have a transformative effect on the world, because real-world “frictions” will slow it down. For instance:

AI might need to learn from real-world experience and experimentation.

Businesses need to learn how to integrate AI in their existing workflows.

Leaders place a high premium on trust, and won’t easily come to trust AI systems.

Regulation, bureaucracy, or other social factors will prevent rapid adoption of AI.

I think this is basically wrong. Or more specifically: such frictions will be important for AI for the foreseeable future, but not for real AI.

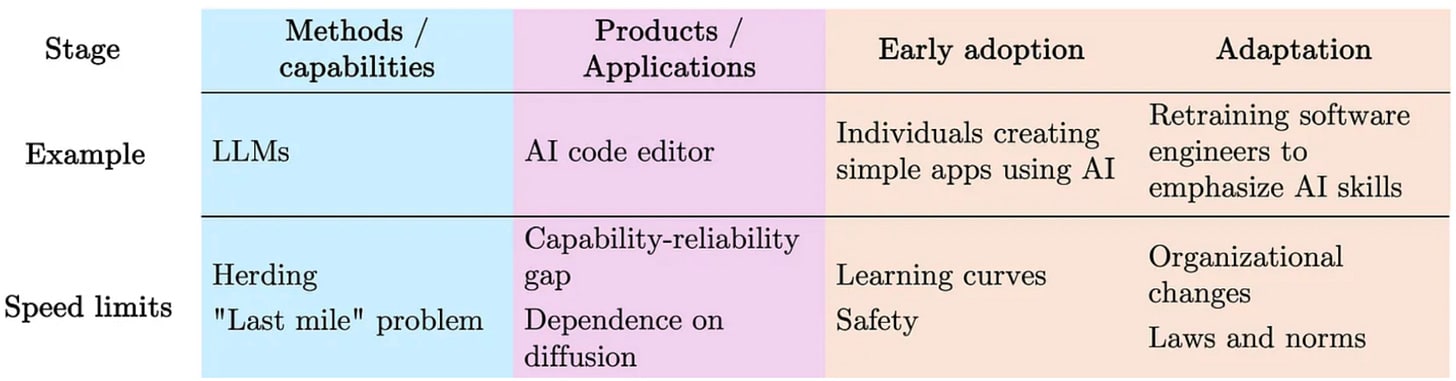

An example of possible “speed limits” for AI, modified from AI as Normal Technology.

Real AI deploys itself

Unlike previous technologies, real AI could smash through barriers to adoption and smooth out frictions so effectively that it’s fair to say real AI could “deploy itself”. And it would create powerful incentives to do so.

I’m not talking about a “rogue AI” that “escapes from the lab” (although I don’t discount such scenarios, either). What I mean is: these frictions can already be smoothed out quite a bit with sufficient expert human labor; it’s just that such labor is quite scarce at the moment. But real AI could be used to implement a crack team of superhuman scientists, consultants, salespeople, and lobbyists. So (for instance) AI companies could use it to overcome the frictions of experimentation, integration, trust, and regulation.

Unlike current AI systems, human scientists are able to learn very efficiently from limited real-world experiments. They do this by putting lots of thought into what hypotheses are worth testing and how to test them. Real AI would be (by definition) at least as good as top human scientists at this. It would also be good at learning from experience more generally. Like a journalist who can quickly build rapport with locals in a new country, real AI would be great at quickly getting its bearings in new situations and environments. There would be no need to create customized virtual environments to teach real AI specific skills.

Real AI would be the ultimate drop-in replacement worker. It would integrate at least as quickly and smoothly as a new star employee. It might still require some on-the-job training, but it could be trained the same way a new human employee is, by shadowing existing workers and talking to them about their work. Companies could offer free AI consultants who come up with a compelling plan for integrating AI, and free 1-month “AI workforce” trials.

Real AI would also be a great salesperson. It would have outstanding emotional intelligence, making it great at understanding and addressing decision-makers’ hesitations about adopting AI. Certainly some people would prefer the human touch and instinctually mistrust less familiar AI systems, but they would face immense pressure to adopt AI once their competitors start to. Companies could also employ human salespeople with AI assistance to convince such holdouts.

The same sales tactics could help real AI influence politics. AI companies could use hyperpersuasive AI marketers and lobbyists to resist attempts at regulation and figure out strategies for sidestepping any bureaucracy that stands in the way of adoption. Uber flagrantly broke laws to expand its network as fast as possible; once it had, customers were hooked and political opposition looked like a losing move. AI companies can do the same with AI.

I mention AI companies, since I think it is easy to imagine something like “what if OpenAI got this tech tomorrow?” But the more general point is that real AI could figure out for itself how it could be deployed smoothly and profitably, and that uptake will be swift because it will provide a big competitive advantage. So it’s likely that this would happen, whether through central planning by a big AI company, or through market forces, individual people’s decisions, or even its own volition.

Deployment could be lightning-quick.

Deployers of real AI would also benefit from some huge advantages that existing AI systems have over humans:

AI thinking can be orders of magnitude faster, because transistors are more than 1,000,000 times faster than neurons.

AI can learn from any number of copies, in parallel, “copy-pasting” new knowledge.

AI can also coordinate millions of copies in parallel.

These factors facilitate rapid learning (the copies can share experience) and integration (which mostly comes down to learning a new job quickly). The ability to coordinate at scale could also act as a social super-power, helping to overcome social barriers of trust and regulation by quickly shifting societal perceptions and norms.

“Friction” might persist, but not by default.

Any of these factors could create friction, at least a bit — all but the most rabid “e/acc”s want society to have time to adjust to AI. And human labor can be used to add friction as well as remove it, like when a non-profit shows that a company isn’t playing by the book.

So we can imagine a world where people continue to mistrust AI, and regulation ends up slowing down adoption and preventing real-world experiments needed to teach AI certain skills, since the experiments might be dangerous or unethical. These limitations would create frictions to businesses integrating AI.

I do expect we’ll see more friction as AI becomes more impactful, but I don’t think it will be enough. The social fabric is not designed for advanced AI, and is already strained by information technology like social media. Right now, AI companies get first dibs on their technology and anyone who wants to add friction is playing catch-up. If real AI is developed before society gets serious about putting the brakes on AI, it’ll be too late. Because real AI deploys itself.

Tell me what you think in comments and subscribe to receive new posts!

(I didn’t see a link, I suppose you mean your Substack)

Yeah, this is automatically cross-posted, but I guess it’s not working as well as I’d hoped.

Is there a reason you say “real AI” instead of “AGI”? Do you see some gap between what we would call AGI and AI that deploys itself?

The term AGI has been confusing to so many people and corrupted/co-opted in so many ways. I will probably write a post about that at some point.

By the time we have “Real AI”, the world will look substantially different than it does now. A “Real AI”, dropped into today’s world, would be enormously transformative and disruptive. But consider a “Kinda Fake AI” which is current AI extrapolated by another year of progress, assuming AI capabilities stay about as “spiky” a year from now as they are now and the spikes a year from now are similar to the spikes now.

Unlike current AI systems, human scientists are able to learn very efficiently from limited real-world experiments. They do this by putting lots of thought into what hypotheses are worth testing and how to test them. Kinda Fake AI can say a lot of the same words human scientists say, and work through the same checklists they would work through, and a surprising amount of the time this is good enough for it to accomplish the task at hand.

Kinda Fake AI takes quite a bit of work to integrate into a company’s workflows and processes, but it offers compelling advantages in speed and cost on a huge number of tasks, which makes that integration work worthwhile for large companies and new companies. Most of the companies that don’t integrate Kinda Fake AI are small old businesses (e.g. hole-in-the-wall restaurants, window tinting shops).

Kinda Fake AI would also be a great salesperson, particularly at the top of the funnel. It would have much better emotional intelligence than canned spam emails, and it turns out that improving your conversion rate at the start of the funnel by 50% is about as useful as improving your conversion rate at the end of the sales funnel by 50%. For the later stages of the funnel where humans are better, companies continue employing human salespeople.
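The funnel claim follows from simple arithmetic if you model the funnel as a product of independent stage conversion rates: a 50% relative improvement at any one stage scales the final number of customers by the same factor of 1.5. Here is a minimal sketch of that model. The stage rates, the lead count, and the `funnel_output` helper are all made up for illustration, and the model ignores real-world differences in cost or capacity between stages.

```python
from math import isclose, prod


def funnel_output(leads, stage_rates):
    """Customers produced when `leads` pass through stages with the given conversion rates."""
    return leads * prod(stage_rates)


# Hypothetical three-stage funnel; all numbers are illustrative assumptions.
LEADS = 10_000
baseline = [0.10, 0.30, 0.50]
top_boost = [0.15, 0.30, 0.50]     # first stage improved by 50%
bottom_boost = [0.10, 0.30, 0.75]  # last stage improved by 50%

base = funnel_output(LEADS, baseline)
top = funnel_output(LEADS, top_boost)
bottom = funnel_output(LEADS, bottom_boost)

# Under this multiplicative model both improvements give the same uplift.
print(f"baseline customers:      {base:.0f}")
print(f"top-of-funnel boost:     {top:.0f} ({top / base:.2f}x)")
print(f"bottom-of-funnel boost:  {bottom:.0f} ({bottom / base:.2f}x)")
print("same uplift:", isclose(top, bottom))
```

The “about as useful” hedge matters in practice: if a better top of the funnel brings in lower-quality leads, the later stage rates would drop and the two improvements would no longer be equivalent.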

Kinda Fake AI could navigate bureaucracy much more cheaply than humans, meaning that things which previously required the production of too much paperwork to be profitable become viable. As one concrete example of this, Kinda Fake AI can cheaply do things which make the legal process take more time, extending the amount of time companies using it can continue operating in legal gray areas or in areas where the law is clear but the legal process for dealing with violations of it isn’t clear.

I expect we’ll get this “Kinda Fake AI” substantially before we get the “Real AI” described in your post. And the “Real AI” will be coming into a world which already has “Kinda Fake AI” and (hopefully) has already largely adapted to it.

I think you’re overoptimistic about what will happen in the very near term.

But I agree that as AI gets better and better, we might start to see frictions going away faster than society can keep up (leading to, e.g., record unemployment) before we get to real AI.

The “Real AI” name seems like it might get through to people who weren’t grokking what the difference between today’s AI and the breakthrough would be. At the same time maybe that helps them remember what they’re looking for. This reads like an ad to me, in the sense that as a hypothetical person building it, this is the sort of article I’d want to write. Maybe that doesn’t make it naturally do the thing my intuition feels like it does, but that’s the first thing I think when reading it.

100 percent this. There is this perpetual miscommunication about the word “AGI”.

“When I say AGI, I really mean a general intelligence, not just a new app or tool.”