Agency As a Natural Abstraction

Epistemic status: Speculative attempt to synthesize findings from several distinct approaches to AI theory.

Disclaimer: The first three sections summarize some of Chris Olah’s work on interpretability and John Wentworth’s Natural Abstractions Hypothesis, then attempt to draw connections between them. If you’re already familiar with these subjects, you can probably skip all three parts.

Short summary: When modelling a vast environment where simple rules result in very complex emergent rules/behaviors (math, physics...), it’s computationally efficient to build high-level abstract models of this environment. Basic objects in such high-level models often behave very unlike basic low-level objects, requiring entirely different heuristics and strategies. If the environment is so complex you build many such models, it’s computationally efficient to go meta, and build a higher-level abstract model of building and navigating arbitrary world-models. This higher-level model necessarily includes the notions of optimization and goal-orientedness, meaning that mesa-optimization is the natural answer to any “sufficiently difficult” training objective. All of this has various degrees of theoretical, empirical, and informal support.

1. The Universality Hypothesis

One of the foundations of Chis Olah’s approach to mechanistic interpretability is the Universality Hypothesis. It states that neural networks are subject to convergence — that they would learn to look for similar patterns in the training data, and would chain up the processing of these patterns in similar ways.

The prime example of this effect are CNNs. If trained on natural images (even from different datasets), the first convolution layer reliably learns Gabor filters and color-contrast detectors, and later layers show some convergence as well:

It’s telling that these features seem to make sense to us, as well — that at least one type of biological neural network also learns similar features. (Gabor filters, for example, were known long before modern ML models.) It’s the main reason to feel optimistic about interpretability at all — it’s plausible that the incomprehensible-looking results of matrix multiplications will turn out to be not so incomprehensible, after all.

It’s telling when universality doesn’t hold, as well.

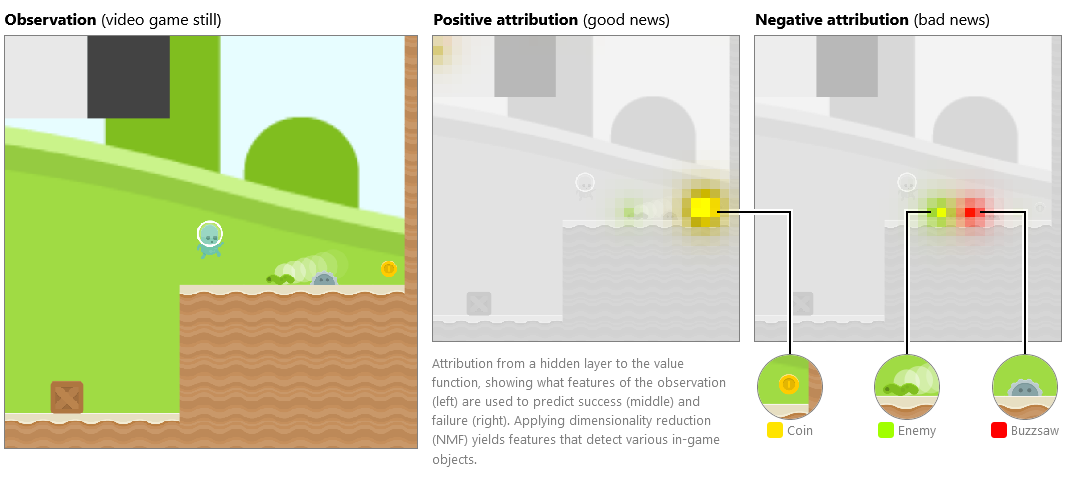

Understanding RL Vision attempts to interpret an agent trained to play CoinRun, a simple platformer game. CoinRun’s levels are procedurally generated, could contain deadly obstacles in the form of buzzsaws and various critters, and require the player to make their way to a coin.

Attempting to use feature visualization on the agent’s early convolutional layers produces complete gibberish, lacking even Gabor filters:

It’s nonetheless possible to uncover a few comprehensible activation patterns via the use of different techniques:

The agent learns to associate buzzsaws and enemies with decreased chances of successfully completing a level, and could be seen to pick out coins and progression-relevant level geometry.

All of these comprehensible features, however, reside on the third convolutional layer. None of the other four convolutional layers, or the two fully-connected layers, contain anything that makes sense. The authors note the following:

Interestingly, the level of abstraction at which [the third] layer operates – finding the locations of various in-game objects – is exactly the level at which CoinRun levels are randomized using procedural generation. Furthermore, we found that training on many randomized levels was essential for us to be able to find any interpretable features at all.

At this point, they coin the Diversity Hypothesis:

Interpretable features tend to arise (at a given level of abstraction) if and only if the training distribution is diverse enough (at that level of abstraction).

In retrospect, it’s kind of obvious. The agent would learn whatever improves its ability to complete levels, and only that. It needs to know how to distinguish enemies and buzzsaws and coins from each other, and to tell apart these objects from level geometry and level backgrounds. However, any buzzsaw looks like any other buzzsaw and behaves like any other buzzsaw and unlike any coin — the agent doesn’t need a complex “visual cortex” to sort them out. Subtle visual differences don’t reveal subtle differences in function, the wider visual context is irrelevant as well. Learning a few heuristics for picking out the handful of distinct objects the game actually has more than suffices. Same for the higher-level patterns, the rules and physics of the game: they remain static.

Putting this together with the (strong version of) the Universality Hypothesis, we get the following: ML models could be expected to learn interpretable features and information-processing patterns, but only if they’re exposed to enough objective-relevant diversity across these features.

If this condition isn’t fulfilled, they’ll jury-rig some dataset-specialized heuristics that’d be hard to untangle. But if it is, they’ll likely cleave reality along the same lines we do, instead of finding completely alien abstractions.

John Wentworth’s theory of abstractions substantiates the latter.

(For completeness’ sake, I should probably mention Chris Olah et al.’s more recent work on transformers, as well. Suffice to say that it also uncovers some intuitively-meaningful information-processing patterns that reoccur across different models. Elaborating on this doesn’t add much to my point, though.

One particular line stuck with me, however. When talking about a very simple one-layer attention-only transformer, and some striking architectural choices it made, they note that “transformers desperately want to do meta-learning.” Consider this to be… ominous foreshadowing.)

2. The Natural Abstraction Hypothesis

Real-life agents are embedded in the environment, which comes with a host of theoretical problems. For example, that implies they’re smaller than the environment, which means they physically can’t hold its full state in their head. To navigate it anyway, they’d need to assemble some simpler, lower-dimensional model of it. How can they do it? Is there the optimal, “best way” to do it?

The Natural Abstractions Hypothesis is aimed to answer this question. It’s based on the idea that, for all the dizzying complexity that real-life objects have on the level of fundamental particles, most of the information they contain is only relevant — and, indeed, only accessible — locally.

Consider the door across the room from you. The details of the fluctuation of the individual atoms comprising it never reach you, they are completely wiped out by the environment on the way to you. For the same reason, they don’t matter. The information that reaches you, the information that’s relevant to you and could impact you, is only the high-level summaries of these atoms’ averaged-out behavior, consistent across time. Whether the door is open or closed, what material it is, its shape.

That’s what natural abstractions are: high-level summaries of the low-level environment that contain only the information that actually reaches far-away objects.

Of course, if you go up to the door with an electronic microscope and start making decisions based on what you see, the information that reaches you and is relevant to you would change. Similarly, if you’re ordering a taxi to your house, whether that door is open or closed is irrelevant to the driver getting directions. That’s not a problem: real-life agents are also known for fluidly switching between a multitude of abstract models of the environment, depending on the specific problem they’re working through.

“Relevant to you”, “reaches you”, etc., are doing a lot of work here. Part of the NAH’s conceit is actually eliminating this sort of subjective terminology, so perhaps I should clean it up too.

First, we can note that the information that isn’t wiped out is whatever information is represented with high redundancy in the low-level implementation of whatever object we care about — e. g., an overwhelming amount of door-particles emit the same information about the door’s material. In this manner, any sufficiently homogeneous/stable chunk of low-level reality corresponds to a valid abstraction.

An additional desideratum for a good abstraction is global redundancy. There are many objects like your door in the world. This means you can gather information on your door from other places, or gather information about other places by learning that they have “a door”. This also makes having an internal symbol for “a door” useful.

Putting these together, we can see how we can build entire abstraction layers: by looking for objects or patterns in the environment that are redundant both locally and globally, taking one type of such objects as a “baseline”, then cleaving reality such that none of the abstractions overlap and the interactions between them are mediated by noisy environments that wipe out most of the detailed information about them.

Fundamental physics, chemistry, the macro-scale environment, astronomy, and also geopolitics or literary theory — we can naturally derive all of them this way.

The main takeaway from all of this is, good abstractions/high-level models are part of the territory, not the map. There’s some degree of subjectivity involved — a given agent might or might not need to make use of the chemistry abstraction for whatever goal it pursues, for example — but the choice of abstractions isn’t completely arbitrary. There’s a very finite number of good high-level models.

So suppose the NAH is true; it certainly looks promising to me. It suggests the optimal way to model the environment given some “reference frame” — your scale, your task, etc. Taking the optimal approach to something is a convergent behavior. Therefore, we should expect ML models to converge towards similar abstract models when exposed to the same environment and given the same type of goal.

Similar across ML models, and familiar to us.

3. Natural Abstractions Are Universal

Let’s draw some correspondences here.

Interpretable features are natural abstractions are human abstractions.

The Diversity Hypothesis suggests some caveats for the convergence towards natural abstractions. A given ML model would only learn the natural abstractions it has to learn, and no more. General performance in some domain requires learning the entire corresponding abstraction layer, but if a model’s performance is evaluated only on some narrow task within that domain, it’ll just overfit to that task. For example:

InceptionV1 was exposed to a wide variety of macro-scale objects, and was asked to identify all of them. Naturally, it learned a lot of the same abstractions we use.

The CoinRun agent, on the other hand, was exposed to a very simple toy environment. It learned all the natural abstractions which that environment contained — enemies and buzzsaws and the ground and all — but only them. It didn’t learn a general “cleave the visual input into discrete objects” algorithm.

There are still reasons to be optimistic about interpretability. For one, any interesting AI is likely to develop general competence across many domains. It seems plausible, then, that the models we should be actually concerned about will be more interpretable than the contemporary ones, and also more similar to each other.

As an aside, I think this is all very exciting in general. These are quite different approaches, and it’s very promising that they’re both pointing to the same result. Chris’ work is very “bottom-up” — taking concrete ML models, noticing some similarities between them, and suggesting theoretical reasons for that. Conversely, John’s work is “top-down” — from mathematical theory to empirical predictions. The fact that they seem poised to meet in the middle is encouraging.

4. Diverse Rulesets

Let’s consider the CoinRun agent again. It was briefly noted that its high-level reasoning wasn’t interpretable either. The rules of the game never changed, it wasn’t exposed to sufficient diversity across rulesets, so it just learned a bunch of incomprehensible CoinRun-specific heuristics.

What if it were exposed to a wide variety of rulesets, however? Thousands of them, even? It can just learn specialized heuristics for every one of them, of course, plus a few cues for when to use which. But that has to get memory-taxing at some point. Is there a more optimal way?

We can think about it in terms of natural abstractions. Suppose we train 1,000 separate agents instead, each of them trained only on one game from our dataset, plus a “manager” model that decides which agent to use for which input. This ensemble would have all the task-relevant skills of the initial 1,000-games agent; the 1,000-games agent would be a compressed summary of these agents. A natural abstraction over them, one might say.

A natural abstraction is a high-level summary of some object that ignores its low-level details and only preserve whatever information is relevant to some other target object. The information it ignores is information that’d be wiped out by environment noise on the way from the object to the target.

Our target is the loss function. Our environment is the different training scenarios, with their different rulesets. The object we’re abstracting over is the combination of different specialized heuristics for good performance on certain rulesets.[1]

The latter is the commonality across the models, the redundant information we’re looking for: their ability to win. The noisy environment of the fluctuating rules would wipe out any details about the heuristics they use, leaving only the signal of “this agent performs well”. The high-level abstraction, then, would be “something that wins given a ruleset”. Something that outputs actions that lead to low loss no matter the environment it’s in. Something that, given some actions it can take, always picks those that lead to low loss because they lead to low loss.

Consequentialism. Agency. An optimizer.

5. Risks from Learned Optimization Is Always Relevant

This result essentially restates some conclusions from Risks from Learned Optimization. That paper specifically discusses the conditions in which a ML model is likely to become a mesa-optimizer (i. e., learn runtime optimization) vs. remain a bundle of specialized heuristics that were hard-coded by the base optimizer (the training process). In particular:

[S]earch—that is, optimization—tends to be good at generalizing across diverse environments, as it gets to individually determine the best action for each individual task instance. There is a general distinction along these lines between optimization work done on the level of the learned algorithm and that done on the level of the base optimizer: the learned algorithm only has to determine the best action for a given task instance, whereas the base optimizer has to design heuristics that will hold regardless of what task instance the learned algorithm encounters. Furthermore, a mesa-optimizer can immediately optimize its actions in novel situations, whereas the base optimizer can only change the mesa-optimizer’s policy by modifying it ex-post. Thus, for environments that are diverse enough that most task instances are likely to be completely novel, search allows the mesa-optimizer to adjust for that new task instance immediately.

For example, consider reinforcement learning in a diverse environment, such as one that directly involves interacting with the real world. We can think of a diverse environment as requiring a very large amount of computation to figure out good policies before conditioning on the specifics of an individual instance, but only a much smaller amount of computation to figure out a good policy once the specific instance of the environment is known. We can model this observation as follows.

Suppose an environment is composed of different instances, each of which requires a completely distinct policy to succeed in. Let be the optimization power (measured in bits) applied by the base optimizer, which should be approximately proportional to the number of training steps. Then, let be the optimization power applied by the learned algorithm in each environment instance and the total amount of optimization power the base optimizer must put in to get a learned algorithm capable of performing that amount of optimization. We will assume that the rest of the base optimizer’s optimization power, , goes into tuning the learned algorithm’s policy. Since the base optimizer has to distribute its tuning across all task instances, the amount of optimization power it will be able to contribute to each instance will be , under the previous assumption that each instance requires a completely distinct policy. On the other hand, since the learned algorithm does all of its optimization at runtime, it can direct all of it into the given task instance, making its contribution to the total for each instance simply .

Thus, if we assume that, for a given , the base optimizer will select the value of that maximizes the minimum level of performance, and thus the total optimization power applied to each instance, we get

As one moves to more and more diverse environments—that is, as increases—this model suggests that will dominate , implying that mesa-optimization will become more and more favorable. Of course, this is simply a toy model, as it makes many questionable simplifying assumptions. Nevertheless, it sketches an argument for a pull towards mesa-optimization in sufficiently diverse environments.

As an illustrative example, consider biological evolution. The environment of the real world is highly diverse, resulting in non-optimizer policies directly fine-tuned by evolution—those of plants, for example—having to be very simple, as evolution has to spread its optimization power across a very wide range of possible environment instances. On the other hand, animals with nervous systems can display significantly more complex policies by virtue of being able to perform their own optimization, which can be based on immediate information from their environment. This allows sufficiently advanced mesa-optimizers, such as humans, to massively outperform other species, especially in the face of novel environments, as the optimization performed internally by humans allows them to find good policies even in entirely novel environments.

6. Multi-Level Models

Now let’s consider the issue of multi-level models. They’re kind of like playing a thousand different games, no?

It’s trivially true for the real world. Chemistry, biology, psychology, geopolitics, cosmology — it’s all downstream of fundamental physics, yet the objects at any level behave very unlike the objects at a different level.

But it holds true even for more limited domains.

Consider building up all of mathematics from the ZFC axioms. Same as physics, we start from some “surface” set of rules. We notice that the objects defined by them could be assembled into more complex structures, which could be assembled into more complex structures still, and so on. But at some point, performing direct operations over these structures becomes terribly memory-taxing. We don’t think about the cosine function in terms of ZFC axioms, for example; we think about it as its own object, with its own properties. We build an abstraction, a high-level summary that reduces its internal complexity to the input → output mapping.

When doing trigonometry in general, we’re working with an entire new abstraction layer, populated by many abstractions over terribly complex structures built out of axiomatic objects. Calculus, probability theory, statistics, topology — every layer of mathematics is a minor abstraction layer in its own right. And in a sense, every time we prove a theorem or define a function we’d re-use, we add a new abstract object.

The same broad thought applies to any problem domain where it’s possible for sufficiently complex structures to arise. It’s memory-efficient to build multiple abstract models of such environments, and then abstract over the heuristics for these models.

But it gets worse. When we’re navigating an environment with high amounts of emergence, we don’t know how many different rulesets we’d need to learn. We aren’t exposed to 1,000 games all at once. Instead, as we’re working on some problem, we notice that the game we’re playing conceals higher-level (or lower-level) rules, which conceal another set of rules, and so on. Once we get started, we have no clue when that process would bottom out, or what rules we may encounter.

Heuristics don’t cut it. You need general competence given any ruleset to do well, and an ability to build natural abstractions given a novel environment, on your own. And if you’re teaching yourself to play by completely novel rules, how can you even tell whether you’re performing well, without the inner notion of a goal to pursue?

(Cute yet non-rigorous sanity-check: How does all of this hold up in the context of human evolution? Surprisingly well, I think. The leading hypotheses for the evolution of human intelligence tend to tie it to society: The Cultural Intelligence Hypothesis suggests that higher intelligence was incentivized because it allowed better transmission of cultural knowledge, such as how to build specialized tools or execute incredibly tricky hunting strategies. The Machiavellian Intelligence points to the political scheming between homo sapiens themselves as the cause.

Either is kind of like being able to adapt to new rulesets on the fly, and build new abstractions yourself. Proving a lemma is not unlike prototyping a new weapon, or devising a plot that abuses ever-shifting social expectations: all involve iterating on a runtime-learned abstract environment to build an even more complex novel structure in the pursuit of some goal.)

7. A Grim Conclusion

Which means that any sufficiently powerful AI is going to be a mesa-optimizer.

I suspect this is part of what Eliezer is talking about when he’s being skeptical of tool-AI approaches. Navigating any sufficiently difficult domain, any domain in which structures could form that are complex enough to suggest many many layers of abstraction, is astronomically easier if you’re an optimizer. It doesn’t matter if your AI is only taught math, if it’s a glorified calculator — any sufficiently powerful calculator desperately wants to be an optimizer.

I suspect it’s theoretically possible to deny that desperate desire, somehow. At least for some tasks. But it’s going to be very costly — the cost of cramming specialized heuristics for 1,000 games into one agent instead of letting it generalize, the cost of setting x to zero in the mesa-optimizer equation while N skyrockets, the cost of forcing your AI to use the low-level model of the environment directly instead of building natural abstractions. You’d need vastly more compute and/or data to achieve the level of performance on par with naively-trained mesa-optimizers (for a given tech level)[2].

And then it probably won’t be any good anyway. A freely-trained 1,000-games agent would likely be general enough to play the 1,001th game without additional training. 1,000 separately-trained agents with a manager? Won’t generalize, explicitly by design. Similarly, any system we forced away from runtime optimization won’t be able to discover/build new abstraction layers on its own, it’d only be able to operate within the paradigms we already know. Which may or may not be useful.

Mesa-optimizers will end the world long before tool AIs can save us, the bottom line is.

- ^

I feel like I’m abusing the terminology a bit, but I think it’s right. Getting a general solution as an abstraction over a few specific ones is a Canonical Example, after all: the “1+1=2*1” & “2+2=2*2″ ⇒ “n+n=2*n” bit.

- ^

I’m put in mind of gwern’s/nostalgebraist’s comparison with “cute algorithms that solve AI in some theoretical sense with the minor catch of some constant factors which require computers bigger than the universe”. As in, avoiding mesa-optimization for sufficiently complex problems may be “theoretically possible” only in the sense that it’s absolutely impossible in practice.

- Towards Gears-Level Understanding of Agency by (16 Jun 2022 22:00 UTC; 25 points)

- 's comment on The AI Control Problem in a wider intellectual context by (13 Jan 2023 1:28 UTC; 3 points)

- 's comment on Coalitional agency by (24 Jul 2024 11:00 UTC; 3 points)

- 's comment on Core of AI projections from first principles: Attempt 1 by (11 Apr 2023 22:35 UTC; 2 points)

- 's comment on Quick thoughts on the implications of multi-agent views of mind on AI takeover by (11 Dec 2023 16:38 UTC; 2 points)

In a comment below, you define an optimizer as:

I certainly agree that we’ll build mesa-optimizers under this definition of “optimizer”. What then causes them to be goal-directed, i.e. what causes them to choose what actions to take by considering a large possible space of plans that includes “kill all the humans”, predicting their consequences in the real world, and selecting the action to take based on how the predicted consequences are rated by some metric? Or if they may not be goal-directed according to the definition I gave there, why will they end the world?

For an optimizer to operate well in any environment, it needs some metric by which to evaluate its performance in that environment. How would it converge towards optimal performance otherwise, how would it know to prefer e. g. walking to random twitching? In other words, it needs to keep track of what it wants to do given any environment.

Suppose we have some initial “ground-level” environment, and a goal defined over it. If the optimizer wants to build a resource-efficient higher-level model of it, it needs to translate that goal into its higher-level representation (e. g., translating “this bundle of atoms” into “this dot”, as in my Solar System simulation example below). In other words, such an optimizer would have the ability to redefine its initial goal in terms of any environment it finds itself operating in.

Now, it’s not certain that e. g. a math engine would necessarily decide to prioritize the real world, designate it the “real” environment it needs to achieve goals in. But:

If it does decide that, it’d have the ability and the desire to Kill All the Humans. It would be able to define its initial goal in terms of the real world, and, assuming superintelligence, it’d have the general competence to learn to play the real-world games better than us.

In some way, it seems “correct” for it to decide that. At the very least, to perform a lasting reward-hack and keep its loss minimized forever.

Sorry if it’s obvious from some other part of your post, but the whole premise is that sufficiently strong models *deployed in sufficiently complex environments* leads to general intelligence with optimization over various levels of abstractions. So why is it obvious that: It doesn’t matter if your AI is only taught math, if it’s a glorified calculator — any sufficiently powerful calculator desperately wants to be an optimizer?

If it’s only trained to solve arithmetic and there are no additional sensory modalities aside from the buttons on a typical calculator, how does increasing this AI’s compute/power lead to it becoming an optimizer over a wider domain than just arithmetic? Maybe I’m misunderstanding the claim, or maybe there’s an obvious reason I’m overlooking.

Also, what do you think of the possibility that when AI becomes superhuman++ in tasks, that the representations go from interpretable to inscrutable again (because it uses lower level representations that are inaccessible to humans)? I understand the natural abstraction hypothesis, and I buy it too, but even an epsilon increase in details might compound into significant prediction outcomes if a causal model is trying to use tons of representations in conjunction to compute something complex.

Do you think it might be valuable to find a theoretical limit that shows that the amount of compute needed for such epsilon-details to be usefully incorporated is greater than ever will be feasible (or not)?

That was a poetic turn of phrase, yeah. I didn’t mean a literal arithmetic calculator, I meant general-purpose theorem-provers/math engines. Given a sufficiently difficult task, such a model may need to invent and abstract over entire new fields of mathematics, to solve it in a compute-efficient manner. And that capability goes hand-in-hand with runtime optimization.

I think something like this was on the list of John’s plans for empirical tests of the NAH, yes. In the meantime, my understanding is that the NAH explicitly hinges on assuming this is true.

Which is to say: Yes, an AI may discover novel, lower-level abstractions, but then it’d use them in concert with the interpretable higher-level ones. It wouldn’t replace high-level abstractions with low-level ones, because the high-level abstractions are already as efficient as they get for the tasks we use them for.

You could dip down to a lower level when optimizing some specific action — like fine-tuning the aim of your energy weapon to fry a given person’s brain with maximum efficiency — but when you’re selecting the highest-priority person to kill to cause most disarray, you’d be thinking about “humans” in the context of “social groups”, explicitly. The alternative — modeling the individual atoms bouncing around — would be dramatically more expensive, while not improving your predictions much, if at all.

It’s analogous to how we’re still using Newton’s laws in some cases, despite in principle having ample compute to model things at a lower level. There’s just no point.

Thanks so much for the response, this is all clear now!

I think would be better to say “overkill” instead of “overfit” at the end of this sentence because overfit is technically the opposite of learning universal, highly regularised models.

As well as here, I think it would be clearer to skip the word “agency” and just say “A consequentialistic optimiser”.

All mentioned (scientific) theories are not reducible to the time-symmetric evolution of the universe as a quantum system, which scientists currently think the universe is. That would be reductionism. They are also all classical and time-asymmetric, which means they are semantics on top of quantum physical systems.

Saying “play by rules” implies there is some game with the rules of winning and losing (though, a game may not have winners and losers, too). So, either I can tell I’m playing well if the rules of winning and losing are given to me along with the rest of the rules, or I’m seeking pure fun (see open-endedness, also formalised as “seeking surprise” in Active Inference). Fun (surprise, interestingness) can be formalised, though it doesn’t need to be equal to “performing well”.

What does “optimizer” mean? You’re implying that it has something to do with efficiency .. but also something to do with generality..?

Good question. There’s a great amount of confusion over the exact definition, but in the context of this post specifically:

An optimizer is a very advanced meta-learning algorithm that can learn the rules of (effectively) any environment and perform well in it. It’s general by definition. It’s efficient because this generality allows it to use maximally efficient internal representations of its environment.

For example, consider a (generalist) ML model that’s fed the full description of the Solar System at the level of individual atoms, and which is asked to roughly predict the movement of Earth over the next year. It can keep modeling things at the level of atoms; or, it can dump the overwhelming majority of that information, collapse sufficiently large objects into point masses, and use Cowell’s method.

The second option is greatly more efficient, while decreasing the accuracy only marginally. However, to do that, the model needs to know how to translate between different internal representations[1], and how to model and achieve goals in arbitrary systems[2].

The same property that allows an optimizer to perform well in any environment allows it to efficiently model any environment. And vice versa, which is the bad part. The ability to efficiently model any environment allows an agent to perform well in any environment, so math!superintelligence would translate to real-world!superintelligence, and a math!goal would be mirrored by some real!goal. Next thing we know, everything is paperclips.

E. g., how to keep track of what “Earth” is, as it moves from being a bunch of atoms to a point mass.

Assuming it hasn’t been trained for this task specifically, of course, in which case it can just learn how to translate its inputs into this specific high-level representation, how to work with this specific high-level representation, and nothing else. But we’re assuming a generalist model here: there was nothing like this in its training dataset.

A square circle is square and circular by definition, but I still don’t believe in them. There has to be a trade off between generality and efficiency.

Once it has dumped the overwhelming majority of the information, it is no longer general. It’s not (fully) general and (fully) efficient.