About Natural & Synthetic Beings (Interactive Typology)

Most debates about regulating AI get stuck on “AI personhood” or “AI rights” because the considerations applied first are usually the ones we agree on least, e.g. true intelligence, consciousness, sentience, and qualia.

But if we treat modern AI systems as dynamic systems, we should be able to compare AI objectively with other natural and synthetic systems. I built a simple interactive typology for placing natural and synthetic systems (including AI) on the same “beingness” map, using only properties of dynamic systems rather than intelligence or consciousness perspectives.

I would be interested in making this more robust: where does it break? Is it useful?

Background

Before I started building the typology model, I briefly explored how we currently characterize dynamic systems (whether natural or synthetic): what are their capability classes and types? I found roughly three kinds of work:

- ‘Kinds of minds’ taxonomies, e.g. Dennett’s creatures[1].
- Agent and AI capability taxonomies, e.g. the Russell & Norvig hierarchy[2] of agents and, of course, the well-known ANI/AGI/ASI ladders.
- Biological terminology like autopoiesis and homeostasis, e.g. Maturana and Varela’s account[3] of living systems as self-producing, self-maintaining organisations, which is powerful for biology.

These are all useful in their own domains, but I couldn’t find a unified typology that:

- treats natural, artificial, collective, and hybrid systems on the same footing,
- separates cognitive capability from beingness / organisation, and
- is concrete enough to be used in alignment evaluations and regulatory categories.

So I hit the local library and brainstormed with ChatGPT/Gemini to build a layered “beingness” model that tries to determine what kind of dynamic entity a system is, setting aside its cognitive capabilities, consciousness, feelings, emotions, qualia etc. I have used the simplest possible terminology, informal, easy-to-understand meanings, and illustrative examples. Feedback and critique welcome.

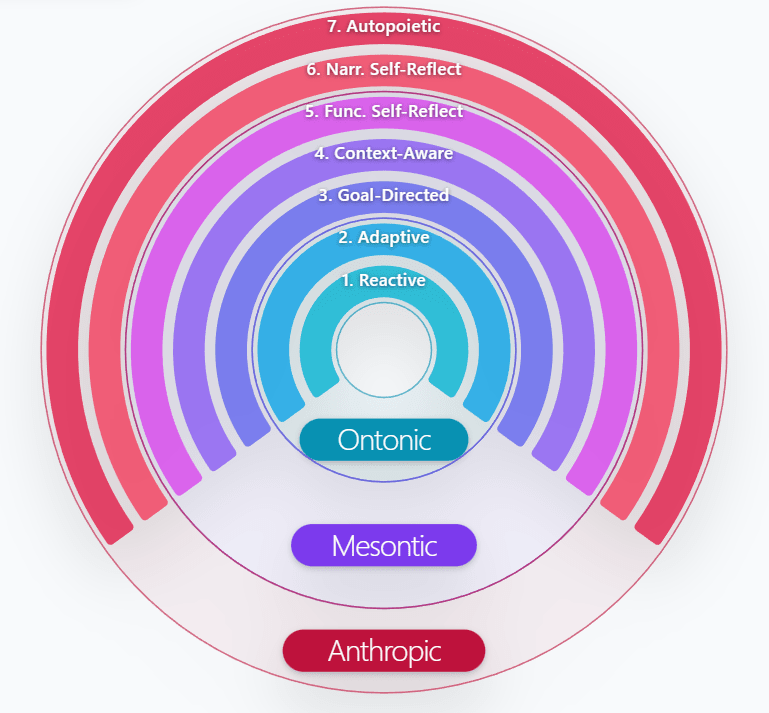

Beingness Categories

I could separate out three beingness categories that seem to show up across both natural and synthetic systems:

Ontonic

This covers systems that maintain stability, viability, and coherence: reacting to stimuli, correcting deviations, adapting to environmental changes, and preserving functional integrity.

Mesontic

Systems here exhibit functional self-monitoring, goal-directed behaviour, contextual integration, and coherence-restoration without needing anything like consciousness, subjective experience, or a narrative self.

Anthropic

This is where I have classed systems with a genuinely autobiographical identity, value-orientation, self-preservation, and continuity.

I have not attempted to classify divine, alien and paranormal entities.

The Seven Rings

The categories are composed of a set of distinct, definable, and probably measurable or identifiable groups of systemic capabilities.

| Ring | Definition | Examples |
|---|---|---|
| Reactive | Response to stimuli | Thermostat, Amoeba |
| Adaptive | Behavioural adjustment | RL agents, insects |
| Goal-Directed | Acting toward outcomes | Wolves, chess engines |
| Context-Aware | Using situational info | Mammals, AVs, LLM context |
| Functionally Self Reflective | Functional self-monitoring | LLM self-correction, robotics |
| Narratively Self Reflective | Understanding of identity of self and others, purpose, values | Human like entities |
| Autopoietic | Self-production & perpetuation | Cells, organisms |

Each ring in turn comprises several capabilities. The rings are not hierarchical or associative.

Examples

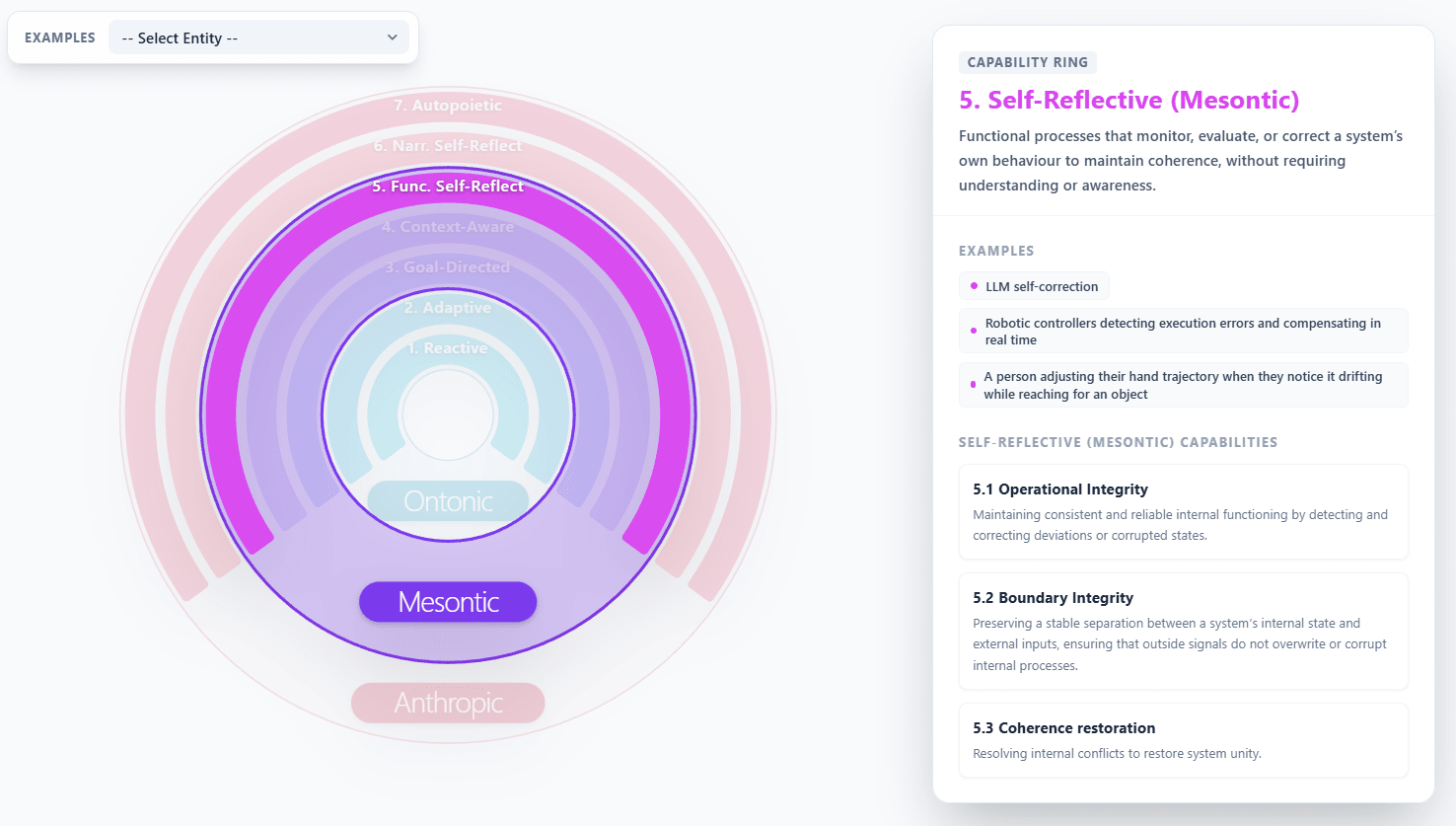

The visualization app includes examples showing which capabilities particular entities are known to have.

| Entity | Rings Activated | Notes |
|---|---|---|
| Human | All rings | Full biological + narrative stack. |
| Cyborg | All rings | Autopoiesis from human neural tissue; hybrid body. |
| LLM Agent | Reactive → Mesontic | No Anthropic, no Autopoietic. |
| Honey Bee | Reactive → Context-Aware + Autopoietic | No Mesontic/Anthropic reflection. |
| Drone Swarm | Reactive → Context-Aware | No life, no self-reflection. |
| Robot Vacuum | Reactive → Context-Aware | Goal-directed navigation. |
| Voice Assistant | Reactive → Context-Aware | No body, no reflection. |
| Amoeba | Reactive → Goal-Directed + Autopoietic | Minimal context-awareness. |
| Coral Polyp | Reactive → Adaptive + Autopoietic | No high-level behaviour. |
| Tornado | Reactive → Adaptive | Dissipative structure; no goals, no self-model. |
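Since the rings are not hierarchical, one way to make the table machine-readable is to treat each ring as an independent flag and each entity as a set of flags. The Python below is only a rough sketch of that idea, not code from the visualization app. The names `Ring`, `span`, `PROFILES`, `missing_rings` and `jaccard` are made up for illustration, the "X → Y" shorthand is assumed to mean every ring from X to Y in table order, and "Reactive → Mesontic" for the LLM Agent is assumed to end at Functionally Self Reflective.

```python
from enum import Flag, auto


class Ring(Flag):
    """The seven rings; flags rather than levels, since rings are not hierarchical."""
    REACTIVE = auto()
    ADAPTIVE = auto()
    GOAL_DIRECTED = auto()
    CONTEXT_AWARE = auto()
    FUNCTIONALLY_SELF_REFLECTIVE = auto()
    NARRATIVELY_SELF_REFLECTIVE = auto()
    AUTOPOIETIC = auto()


# Table order, used only to expand the "X → Y" shorthand (an assumption).
ORDER = (
    Ring.REACTIVE, Ring.ADAPTIVE, Ring.GOAL_DIRECTED, Ring.CONTEXT_AWARE,
    Ring.FUNCTIONALLY_SELF_REFLECTIVE, Ring.NARRATIVELY_SELF_REFLECTIVE,
    Ring.AUTOPOIETIC,
)


def span(first, last):
    """Union of all rings from `first` to `last`, inclusive, in table order."""
    result = Ring(0)
    for ring in ORDER[ORDER.index(first):ORDER.index(last) + 1]:
        result |= ring
    return result


# A few rows of the examples table, transcribed by hand.
PROFILES = {
    "Human": span(Ring.REACTIVE, Ring.AUTOPOIETIC),
    # Assumption: "Reactive → Mesontic" stops at Functionally Self Reflective.
    "LLM Agent": span(Ring.REACTIVE, Ring.FUNCTIONALLY_SELF_REFLECTIVE),
    "Honey Bee": span(Ring.REACTIVE, Ring.CONTEXT_AWARE) | Ring.AUTOPOIETIC,
    "Robot Vacuum": span(Ring.REACTIVE, Ring.CONTEXT_AWARE),
    "Amoeba": span(Ring.REACTIVE, Ring.GOAL_DIRECTED) | Ring.AUTOPOIETIC,
    "Tornado": span(Ring.REACTIVE, Ring.ADAPTIVE),
}


def rings_of(profile):
    """List the individual rings present in a profile, in table order."""
    return [ring for ring in ORDER if ring in profile]


def missing_rings(a, b):
    """Rings that profile `a` has and profile `b` lacks."""
    return [ring for ring in rings_of(a) if ring not in b]


def jaccard(a, b):
    """Shared rings divided by all rings present in either profile."""
    total = len(rings_of(a | b))
    return len(rings_of(a & b)) / total if total else 1.0


if __name__ == "__main__":
    for name, profile in PROFILES.items():
        print(name, [ring.name for ring in rings_of(profile)])
    print(missing_rings(PROFILES["Human"], PROFILES["LLM Agent"]))
    print(jaccard(PROFILES["Honey Bee"], PROFILES["Robot Vacuum"]))
```

Jaccard overlap is just one crude choice of similarity. It treats every ring as equally important, which the typology itself does not claim.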

Closing Remarks

This is pretty much a first cut of the classification and meanings. I will be refining it with further research and feedback.

Why does this typology matter?

The proposed typology can be used to (1) design evaluations that match the system’s structural profile rather than its perceived intelligence, (2) support regulation by linking obligations to measurable properties like stability, boundary consistency, or identity persistence, and (3) compare biological, AI, swarm, and hybrid systems on the same map for safety, risk, ethics, and welfare-related scenario analysis.

In short, it provides alignment and governance discussions with a concrete, system-level vocabulary for describing what kind of entity a system is, independent of any assumptions about its inner subjective life.

Those first two words are neologisms of yours?

The use of Greek neologisms for systems ontology is almost a subgenre in itself:

The anthropologist Terrence Deacon distinguishes between “homeodynamic”, “morphodynamic”, and “teleodynamic” systems. (This taxonomy already made an appearance on Less Wrong.) Stanislav Grof refers to “hylotropic” and “holotropic” modes of consciousness.

Theoretical biology seems replete with such terms too: autopoiesis, ontogeny, phylogeny, anagenesis (that list, I took from Bruce Sterling’s Schismatrix); chreod, teleonomy, clade.

I guess Greek, alongside Latin, was one of the prestige languages in early modernity. Plenty of other scientific terms have Greek etymology (electron, photon, cosmology). Still, it’s as if people instinctively feel that Greek is suited for holistic ontological thinking (hello Heidegger).

I feel like we almost need a meta-taxonomy of layered models or system theories. E.g. here are some others that came to mind:

- The seven layers of the ISO/OSI model.
- The layered model of AI (see diagram) being used in the current MoSSAIC sequence.
- The seven basis worldviews of PRISM and the associated hierarchy of abstractions and brain functions.

You could also try Ivan Havel’s thoughts on emergent domains. Or the works of Mario Bunge or James Grier Miller or Valentin Turchin or many other systems theorists…

I think that, while there are many ways you can draw the exact boundaries in such taxonomies, a comparative study of taxonomies would probably reveal a number of distinct taxonomic schemas, and possibly even a naturally maximal taxonomy.

Yes, these two are new.

Ontonic is derived from Onto (Greek for being), extrapolated to Onton (implying a fundamental unit/building block of beingness). And Meso (meaning middle) + Onto is simply a level between fundamental beingness and Anthropic beingness.

I think Greek gets used because there is less risk of overloading the terminology than English. I have tried to keep it less arcane by using commonly understood word roots and simple descriptions.

Thank you for the pointers, Mitchell. I will analyze them and either update the model or explain the contrasts. I will still be focusing on ‘beingness’ (in the sense of actor, agency and behavior) and will keep the model clear of concepts that talk about consciousness, sentience, cognition and biology.

The intent of this visual is not an academically correct typology/classification in itself. I was working on an evaluation of consistent self-boundary behavior (yet to be published) and on a method to improve it. I needed to pin it to a mental model of the ambient space, so I ended up putting this visual together.

I felt it might be generally useful as a high-level tool for understanding this dimension of alignment/evaluation.