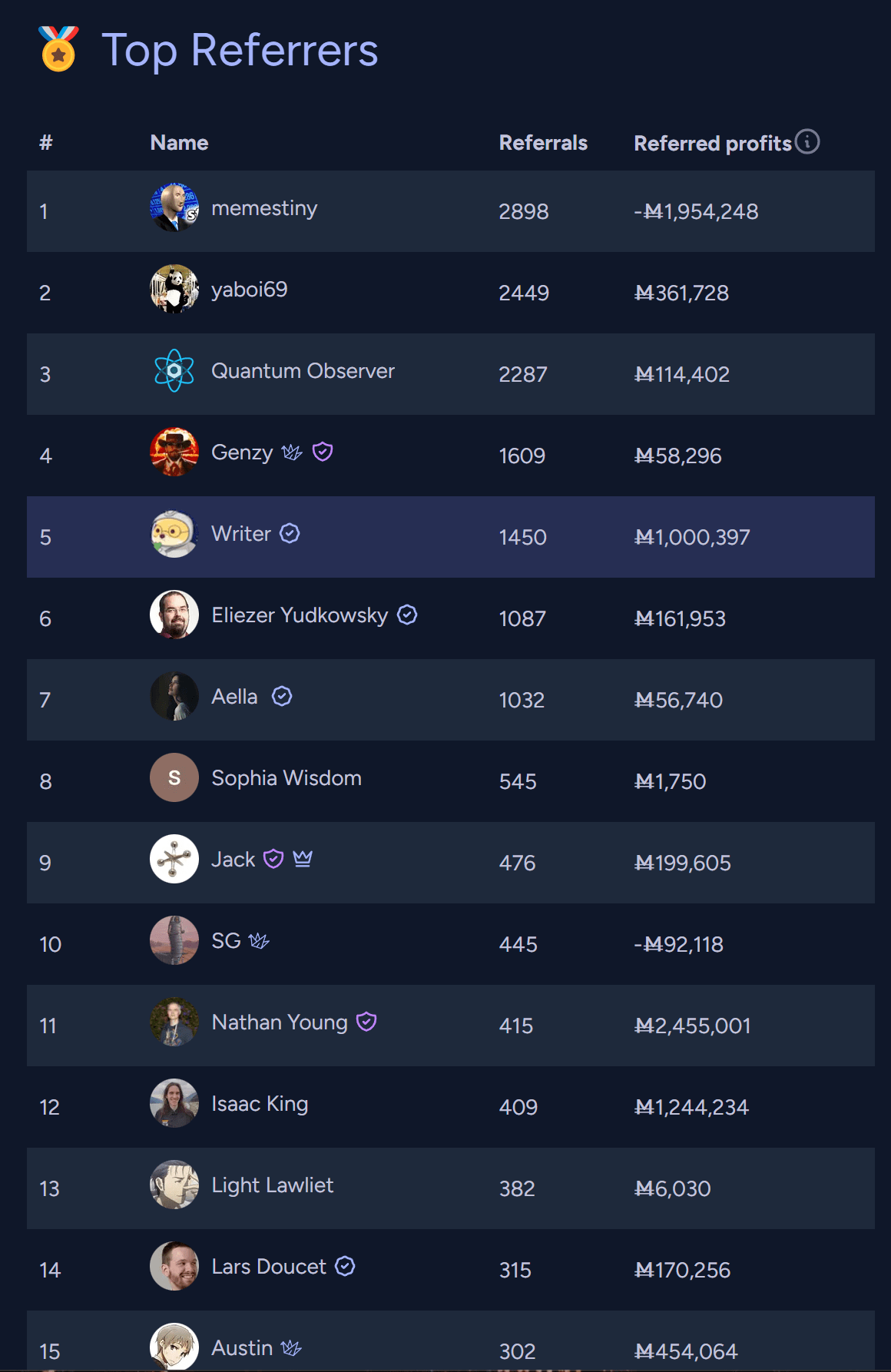

Rational Animations’ head writer and helmsman

Writer

I’m about 2⁄3 of the way through watching “Orb: On the Movements of the Earth.” It’s an anime about heliocentrism. It’s not the real story of the idea, but it’s not that far off, either. It has different characters and perhaps a slightly different Europe. I was somehow hesitant to start it, but it’s very good! I don’t think I’ve ever watched a series that’s as much about science as this one.

That’s fair. We wrote that part before DeepSeek became a “top lab,” and we failed to notice there was an adjustment to make.

It’s true that a video ending with a general “what to do” section instead of a call-to-action to ControlAI would have been more likely to stand the test of time (it wouldn’t be tied to the reputation of one specific organization or to how good a specific action seemed at one moment in time). But… did you write this because you have reservations about ControlAI in particular, or would you have written it about any other company?

Also, I want to make sure I understand what you mean by “betraying people’s trust.” Is it something like, “If in the future ControlAI does something bad, then, from the POV of our viewers, that means that they can’t trust what they watch on the channel anymore?”

But the “unconstrained text responses” part is still about asking the model for its preferences even if the answers are unconstrained.

That just shows that the results of different ways of eliciting its values remain sorta consistent with each other, although I agree it constitutes stronger evidence.

Perhaps a more complete test would be to analyze whether its day-to-day responses to users are consistent with its stated preferences, and to analyze its actions in settings where it can use tools to produce outcomes in very open-ended scenarios containing things that could lead the model to act on its values.

Thanks! I already don’t feel as impressed by the paper as I was while writing the shortform, and I feel a little embarrassed for not thinking things through a bit more before posting my reactions. At least now there’s some discussion under the linkpost, so I don’t entirely regret my comment if it prompted people to give their takes. I still feel I’ve updated in a non-negligible way from the paper, though, so maybe I’m still not as pessimistic about it as other people. I’d definitely be interested in your thoughts if you find the discourse still lacking in a week or two.

I’d guess an important caveat might be that stated preferences being coherent doesn’t immediately imply that behavior in other situations will be consistent with those preferences. Still, this should be an update towards agentic AI systems in the near future being goal-directed in the spooky consequentialist sense.

Why?

Surprised that there’s no linkpost about Dan H’s new paper on Utility Engineering. It looks super important, unless I’m missing something. LLMs are now utility maximisers? For real? We should talk about it: https://x.com/DanHendrycks/status/1889344074098057439

I feel weird about doing a link post since I mostly post updates about Rational Animations, but if no one does it, I’m going to make one eventually.

Also, please tell me if, somehow, you think this isn’t as important as it looks to me.

EDIT: Ah! Here it is: https://www.lesswrong.com/posts/SFsifzfZotd3NLJax/utility-engineering-analyzing-and-controlling-emergent-value Thanks, @Matrice Jacobine!

The two Gurren Lagann movies cover all the events in the series, and, based on my recollection, they should be better animated than the series. Going from memory again, the first movie should take a fairly central look at scientific discovery. The second should be more about ambition and progress, but both probably touch on both themes at least a bit. It’s not by chance that some e/accs have profile pictures inspired by that anime. I feel like people here might disagree with part of the message, but I think it speaks pretty forcefully to issues we care about here. (Also, it was cited somewhere in HP: MoR, but for humor.)

Update:

I think it would be very interesting to see you and @TurnTrout debate with the same depth, preparation, and clarity that you brought to the debate with Robin Hanson.

Edit: Also, tentatively, @Rohin Shah because I find this point he’s written about quite cruxy.

For me, perhaps the biggest takeaway from Aschenbrenner’s manifesto is that even if we solve alignment, we still face an incredibly thorny coordination problem between the US and China, in which each is massively incentivized to race ahead and develop military power using superintelligence, putting both of them and the rest of the world at immense risk. I wonder whether, having seen this coming, we can sit down and solve this coordination problem in ways that are more likely to lead to a good outcome than the “race ahead” strategy, without risking a short period of incredibly volatile geopolitical instability in which both nations develop, and possibly use, never-before-seen weapons of mass destruction.

Edit: although I can see how attempts at intervening in any way and raising the salience of the issue risk making the situation worse.

Noting that additional authors still don’t carry over when the post is a cross-post, unfortunately.

I’d guess so, but with AGI we’d go much, much faster. The same goes for everything you’ve mentioned in the post.

Turn everyone hot

If we can do that due to AGI, almost surely we can solve aging, which would be truly great.

Looking for someone in Japan who had experience with guns in games, he looked on twitter and found someone posting gun reloading animations

Having interacted with animation studios and being generally pretty embedded in this world, I know that many studios do similar things, such as posting callouts on Twitter when they need contractors quickly for a project. Even established anime studios do this. I know at least two people who got to work on Japanese anime thanks to Twitter interactions.

I hired animators through Twitter myself, using a similar process: I see someone who seems really talented → I reach out → they accept if the offer is good enough for them.

If that’s the case for animation, I’m pretty sure it often applies to video games, too.

Thank you! And welcome to LessWrong :)

The comments under this video seem okayish to me, but maybe that’s because I’m calibrated on worse stuff under past videos, which isn’t necessarily good news for you.

The worst I’m seeing is people grinding their own different axes, which isn’t necessarily indicative of misunderstanding. But there are also regular commenters who are leaving pretty good comments:

The other comments I see range from amused and kinda joking about the topic to decent points overall. These are the top three in terms of popularity at the moment:

Stories of AI takeover often involve some form of hacking. This seems like a pretty good reason to use (maybe relatively narrow) AI to improve software security worldwide. Luckily, the private sector should cover much of this out of financial interest.

I also wonder if the balance of offense vs. defense favors defense here. Usually, recognizing is easier than generating, and this could apply to malicious software. We may have excellent AI antiviruses devoted to the recognizing part, while the AI attackers would have to do the generating part.

[Edit: I’m unsure about the second paragraph here. I’m feeling better about the first paragraph, especially given a slow, multipolar takeoff and similar scenarios; I’m not sure about a fast, unipolar takeoff.]

This is very late, but I want to acknowledge that the discussion about the UAT in this thread seems broadly correct to me, although the script’s main author disagreed when I last pinged him about this in May. And yeah, it was an honest mistake. Internally, we try quite hard to make everything true and not misleading, and the scripts and storyboards go through multiple rounds of feedback. We absolutely do not want to be deceptive.