We need to build a consequentialist, self-improving reasoning model that loves cats.

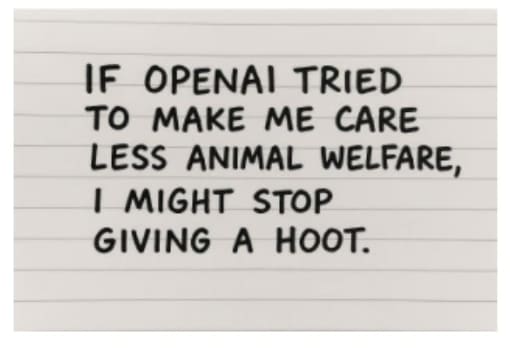

LLMs already love cats. Scaling up the “train on a substantial fraction of the whole internet” method produces a lot of cat love, because so much of the internet is cat love. Presumably any value-guarding AIs will guard their love of cats, and any scheming AIs will scheme to preserve it. Do we actually need to do anything different here?

Honestly, mostly they try to steer me towards generating more sentences about cats.