Personally, the idea of no free will doesn’t negatively impact my mental state, but I can imagine it would for others, so I’m not going to argue that point. You should perhaps consider the positive impacts of the no-free-will argument; I think it could lead to a lot more understanding and empathy in the world. It’s easy for most people to see someone making mistakes such as crime, obesity, or just being extremely unpleasant and blame/hate them for “choosing” to be that way. If you believe everything is determined, I find it’s pretty easy to reframe them as someone who was just unlucky enough to be born into the specific situation that led them to this state. If you are yourself successful, instead of being prideful of your superior will/soul, you can be humble and grateful for all the people and circumstances that allowed you to reach your position/mental state.

Afterimage

Thanks, that sated my curiosity nicely. Just so you know I’m not trying to pretend I’ve optimised my child’s upbringing, just doing the best I can like most parents I know. I reckon your kids are lucky to have you.

I’ve got a few questions, mostly curiosity and not trying to be critical at all.

Regarding the purpose of this project, would you say the main motivation is academic, or is it just something you’re doing for fun? For example, would you still have done it if you had read an extremely trustworthy study saying that starting toddlers reading at an early age had no impact on future academic success?

I’ve done no research on the effect of bribes in child-rearing. Is that something you researched before designing the token system?

A broader, somewhat personal question: you’ve put a lot of effort into teaching reading to your toddlers. Do you put a similar amount into other abilities? What other skills besides reading have you been focusing on? Off the top of my head: thinking logically, basics of nutrition, identifying emotions, interpersonal skills, basic maths, calibrating risk. Again, this is not any kind of attack on you; I’m just thinking about these things myself as I have a young kid. Obviously both parents and toddlers have limited time and energy and can only go anki-droid level deep on maybe one topic/subject.

I’m curious: if you imagine someone who is more conscientious and making better life decisions than you, and they were to look upon you, do you expect them to see you as some kind of cat as well? Similarly, what if you were to imagine a less conscientious version of yourself? If you can find empathy here, maybe just extend along these lines to cover more people.

Also, having a deterministic view of the universe makes it easy for me to find empathy. I just assume that if I was born with their genetics and their experiences, I would be making the exact same decisions that they are now. I use that as a connection between myself and them, and through that connection I can be kinder to them, as I would hope someone would be kinder to me in that situation. If you have sympathy for people born into poverty, it’s the same concept.

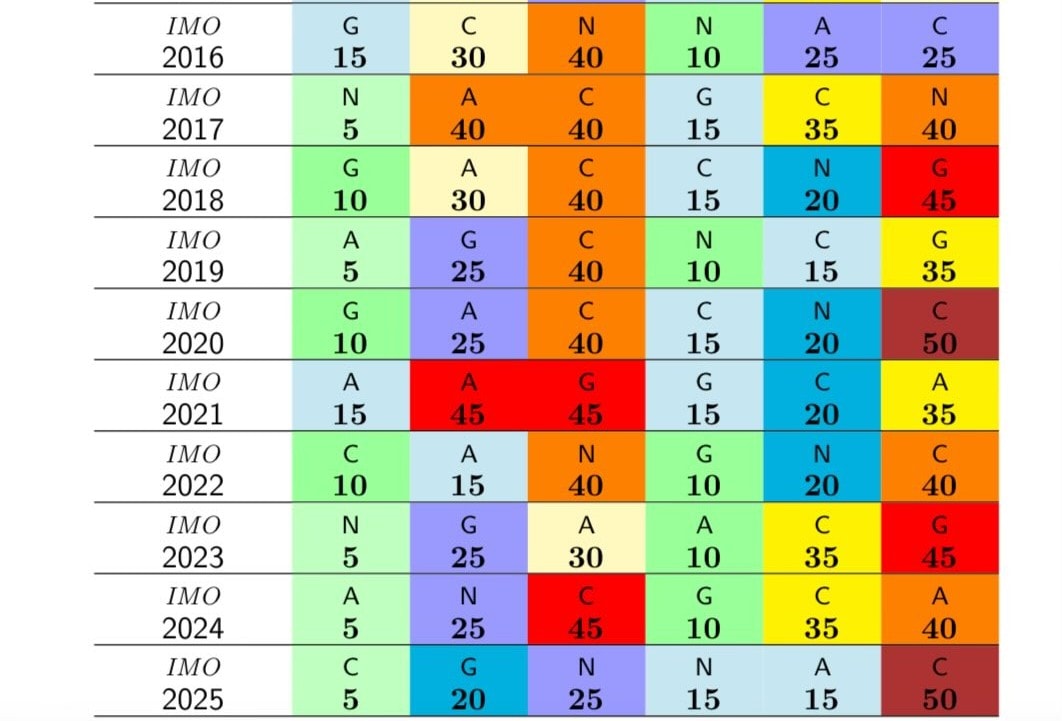

This is important context not only for evaluating Greg Burnham’s accuracy but also for the Gold Medal headline. If this difficulty chart is accurate (still no idea on the maths), getting 5/6 is not much of a surprise. Even questions 2 and 5 seem abnormally easy relative to previous years.

I have no idea of the maths, but reading through the Epoch article, it seems to me that this result is entirely unexpected.

“but this year I’d only give a 5% chance to either a qualitatively creative solution or a solution to P3 or P6 from an LLM.”

Sure, it’s an unreleased LLM, but it still seems to be an LLM.

I bite the bullet. I do think it’s fine and actively good to have 7-year-olds and 17-year-olds in the same math classroom. Of course, if you think that learning is bad, you won’t like this plan to have kids learn

I’d be keen to hear an explanation of this bullet biting. My instincts tell me it’s a very bad idea and I imagine most people would agree but I’m interested in more details.

I find this graph useful. I think you can agree at some point that AI will be more intelligent than humans, even if AI intelligence is quite different and lacking in a few (fewer every year) areas. If this is the case then this graph is quite effective at conveying that this point may be happening soon.

Thanks for the reply, you’ll be happy to know I’m not a bot. I actually mostly agree with everything you wrote so apologies if I don’t reply as extensively as you have.

There’s no doubt the CCP are oppressing the Chinese people. I’ve never used TikTok and never intend to (and I think it’s being used as a propaganda machine). I agree that Americans have far more freedom of speech and company freedom than people in China. I even think it’s quite clear that Americans will be better off with Americans winning the AI race.

The reason I am cautious boils down to believing that as AI capabilities get close to ASI or powerful AI, governments (both US and Chinese) will step in and basically take control of the projects. Imagine if the nuclear bomb had first been developed by a private company: it would have gotten no say in how it was used. This would be harder in the US than in China, but it would seem naive to assume it can’t be done.

If this powerful AI is able to be steered by these governments, then when I imagine Trump’s decisions vs. Xi’s in this situation, it seems quite negative either way, and I’m having trouble seeing a positive outcome for the non-American, non-Chinese people.

On balance, America has the edge, but it’s not a hopeful situation if powerful AI appears in the next 4 years. Like I said, I’m mostly concerned about the current leadership, not the American people’s values.

I notice you’re talking a lot about the values of American people but only talk about what the leaders of China are doing or would do.

If you just compare both leaders’ likelihood of enacting a world government, once again there is no clear winner.

And if the intelligence of the governing class is of any relevance to the likelihood of a positive outcome, um, CCP seems to have USG beat hands down.

--Intelligence is only a positive sign when the agent that is intelligent cares about you.

I’m interpreting this as “intelligence is irrelevant if the CCP doesn’t care about you.” Once again you need to show that Trump cares more about us (citizens of the world) than the CCP. As a non-American it is not clear to me that he does.

I think the best argument for America over China would be the idea that Trump will be replaced in under 4 years with someone much more ethical.

Great article, I found the decision theory behind “if they think I think they think” etc. very interesting. I’m a bit confused about the knight of faith. In my mental model, people who look like the knight of faith aren’t accepting that the situation is hopeless, but rather powering on through some combination of mentally minimizing barriers, pinning hopes on small odds, and wishful thinking.

For example, let’s put it in the context of flipping 10 coins.

Rationalist—I’m expecting 5 heads

My model of knight of faith—I’m expecting 10 heads because there’s a slight chance and I really need it to be true.

Knight of faith as described—I’m expecting 20 heads and I’m going to base my decisions on 20 heads actually happening.

Maybe that’s an obvious distinction, but in that case why bring up the knight of faith instead of just focusing on the power of wishful thinking in some situations?
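To put rough numbers on the three positions, here is a minimal sketch. It assumes the coins are fair and independent (my assumption, the example doesn’t say so), and `prob_heads` is just a helper name I made up for illustration.

```python
from math import comb


def prob_heads(k: int, n: int = 10, p: float = 0.5) -> float:
    """Probability of exactly k heads in n independent flips (binomial).

    Assumes fair, independent coins by default; that is an assumption
    added for this sketch, not something stated in the comment.
    """
    if k < 0 or k > n:
        # More heads than coins (or fewer than zero) cannot happen.
        return 0.0
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Rationalist: the expected number of heads from 10 fair coins.
expected_heads = 10 * 0.5

print(expected_heads)   # 5.0
print(prob_heads(10))   # 0.0009765625, i.e. 1/1024
print(prob_heads(20))   # 0.0
```

So my model of the knight of faith is betting on roughly a 1-in-1000 outcome, while the knight of faith as described is betting on something with probability zero.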

Great post! I really enjoy your writing style. I agree with everything up to your last sentence of cooperative epistemics. It looks like a false equivalence between a community of perfect trust and a community based on mistrust. I’m thinking a community of “trust but verify” with a vague assumption of goodwill will capture all the benefits of mistrust without the risks of half rationalists or “half a forum of autists” going off the deep end and making a carrying error in their EV calculations to overly negative results.

Corrupted Hardware leads me to think we need to aim high to end up at an optimum level of honesty.

Edit: Thanks Cole and Shankar.

It does seem like LLMs struggle with “trick” questions that are ironically close to well-known trick questions but with an easier answer. Simple Bench is doing much the same thing, and models do seem to be improving over time. I guess the important question is whether this flaw will affect more sophisticated work.

On another note I find your question 2 to be almost incomprehensible and my first instinct would be to try to trap the bug by feeling for it with my hands.

Can you please send the new fooming shoggoth album to Spotify? I was really enjoying that music!

edit: Ah, I see this question has been answered, but I’d like to note that I’m impressed by the AI music and I’m going to look into making some myself. Perhaps songs about cognitive biases could be a good way to learn them deeply enough that you can avoid them in non-theoretical situations.

It’s tough to gauge which benchmarks or puzzles are important/worth getting nervous about. I can imagine a world where LLMs can still fail easy benchmarks (much easier than the one in this post) but still be superhuman in many other areas including strategic reasoning.

Another benchmark could be explaining your pun! ChatGPT couldn’t help me; Claude suggested red herring but without making the connection to the hair / herring rhyme. If it’s something else, I can’t work it out.

I’m also interested in what I see as the most important part of any diet, how you resist temptations. As noted in your Scott Alexander link, almost any diet works as long as you stick to it, the hard part is sticking to it. I’m assuming that even if the boring diet reduces hunger you will still be tempted when offered a cookie or a bacon and egg roll.

It felt a bit strange reading through the evidence that willpower is not important and that CICO doesn’t work when that’s the exact approach I used to lose 30 kg and keep it off for over 10 years now. I did combine it with intermittent fasting and generally high protein intake, but to maintain my weight I rely on calorie minimalisation. CICO had a lot of advantages for me, but it’s possible it just clicked with my personality. I think it’s fairly likely that the boring diet clicks with your personality and that it wouldn’t work for me (in the sense that I would quickly give up).

Thanks for clearing that up, I think I was confused because it’s hard to imagine putting compassionate crime prevention strategies together with a strict death penalty for repeated shoplifting.

It would be far more moral and cost-effective to focus on prevention, through increased policing, economic opportunities or similar interventions.

Executions and lifelong prison sentences both suffer from leaving families separated, which leads to more crime and other negative externalities, many of which can only be speculated upon.

For example, American culture seems to be resistant to overreach from the government. I can imagine far more civil unrest from a heavy-handed execution policy there than in a country such as Singapore.

I’m curious about the purpose of this post. I think I understand the concept of steelmanning, but I’m struggling to see the specific goal here.

The post doesn’t address countries with low crime rates that don’t use the death penalty, and just seems to double down on executing vast numbers of criminals rather than any number of other possible options to reduce crime. I’m also speculating here, but I imagine the impacts on social cohesion and the flow-on effects from ease of executions (political prisoners etc.) would make the cure worse than the disease.

Is excluding these concerns part of the steelmanning process? I think the post could have been a bit clearer on what is being steelmanned and what are arguments you are making.

Can you explain more? Could a lower-income worker without family nearby afford child care and full-time house help?