A brief history of computers

Recently I’ve been learning about the history of computers. I find it to be incredibly interesting. I’d like to write a post about it to summarize and comment on what I’ve learned.

I’m a little hesitant though. I’m no expert on this stuff. I’m largely learning about it all for the first time. So then, take all of this with a grain of salt.[1] It’s more of a conversation starter than a finished product. If you want something authoritative, I’d recommend the Stanford Encyclopedia of Philosophy.

Logic

Let’s start with logic. Computers are largely based on boolean logic. Y’know, 1s and 0s. AND, OR, NOT.

George Boole did a bunch of important work here in the mid 1800s, but let’s try backing up even further. Was there anything important that came before Boolean logic?

Yeah, there was. It goes all the way back to Aristotle in ~350 BCE. Aristotle did a bunch of groundbreaking work in the field of logic.

Furthermore, after “breaking the ground”, there weren’t any significant developments until the mid 1800s. Wow! That’s a long time. An unusually long time. In other fields like mathematics, natural sciences, literature and engineering, there were significant advances. I wonder why things in the field of logic were so quiet.

Anyway, let’s talk about what exactly Aristotle did. In short, he looked at arguments in the abstract. It’s one thing to say that:

All dogs have feet

Filo is a dog

Therefore, Filo has feetIt’s another thing to say that:

All P have Q

R is a P

Therefore, R has QThe former is concrete. It’s talking about dogs, feet and Filo. The latter is abstract. It’s talking about P’s, Q’s and R’s. Do you see the difference?

Before Aristotle, people never[2] thought about this stuff in terms of P’s and Q’s. They just thought about dogs and feet. Thinking about P’s and Q’s totally opened things up. Pretty cool. Abstraction is powerful. I think this is very much worth noting as an important milestone in the history of computers.

Ok. So once Aristotle opened the flood gates with categorical logic, over time, people kinda piggybacked off of it and extended his work. For example, the Stoics did a bunch of work with propositional logic.

Propositional logic is different from categorical logic. Categorical logic is about what categories things belong to. For example, earlier we basically said that dogs belong to the category of “things with feet” and that Filo belongs to the category of “dogs”. With those two statements, we deduced that Filo must also belong to the category of “things with feet”. It makes a lot of sense when you think about it visually:

On the other hand, propositional logic is about things being true or false. For example, with this:

It is raining

I don't have an umbrellawe can deduce things like:

"It is raining and I have an umbrella" is false

"It is raining or I have an umbrella" is truePropositional logic is about truth and uses operators like AND, OR, NOT, IF-THEN, and IF-AND-ONLY-IF. Categorical logic is about categories and uses operators like ALL, NO and SOME.

After propositional logic, subsequent work was done. For example, predicate logic kinda piggybacked off of propositional logic. But in general, nothing too crazy was going on. Let’s jump ahead to the mid 1800s and George Boole.

Boole introduced stuff like this:

p is true

q is false

therefore

(p and q) is false

(p or q) is true

(not (p and q)) is true

...But wait a minute. I’m confused. Didn’t we get that sort of thing from propositional logic all the way back in 300 BCE from the Stoics? In researching this question I’m seeing things saying that it did in fact already exist, it’s just that Boole made it more “systematic and formalized”. I don’t understand though. In what way did he make it more systematic and formalized?

Oh well. Suffice it to say that boolean logic was a thing that we knew about. Let’s move on.

Jacquard loom

I was going to start talking about Charles Babbage, but I started wondering whether anything came before him. Turns out that something did: the Jacquard loom.[3]

Let me provide some context. Weaving is a thing. Maybe that doesn’t need to be said, but I at least had to look up what weaving is. Basically, if you want to produce fabric or cloth — ie. for clothing or bed sheets — you’d have to interlace yarn or thread. That’s called weaving.

Up until the Industrial Revolution in the mid 1700s, weaving was done by hand. It was a very labor intensive process. Which means that the resulting products were somewhat expensive. The Invisible Hand doesn’t like that, and so in the Industrial Revolution, people created weaving machines.

But they were kinda static. What if things were dynamic? What if you were able to program the machine to produce fabrics with different colors and patterns?

Enter: the Jacquard loom. The Jacquard loom was a weaving machine that let you do this. You’d provide it with punch cards and the punch cards would determine what colors and patterns the fabric would have.

If you think about it, the people who operated these machines were probably the first programmers. After all, they were providing encoded instructions to a machine in order to get the machine to do something they want. Something useful.

Pretty cool, right? Charles Babbage sure thought so.

Difference Engine

Charles Babbage — considered by some to be the “father of the computer” — was inspired by the Jacquard loom.

But let’s back up and get some context first. The year was 1812, and mathematical tables were a thing.

What are mathematical tables, you ask? Imagine that you need to do some trigonometry. What’s sin(79)?

Well, today you’d just look it up online. 15 years ago you’d probably grab your TI-84 calculator. But in the year 1812, you’d have to consult a mathematical table. Something like this:

They’d use computers to compute all the values and write them down in books. Just not the type of computers you’re probably thinking of. No, they’d use human computers.

Yup, that’s right. Back then, real human beings would sit in a room doing a bunch of math to come up with all of these values.[4]

Babbage didn’t like this though. It was too error prone. Too labor intensive. He wanted to make it less error prone and less labor intensive.

His first step towards this was to make the computations simpler. That way you can employ some relatively unskilled people to do addition and subtraction for you. Traditionally you’d need to employ skilled people to do more complex math. From his own account:

In 1812 he was sitting in his rooms in the Analytical Society looking at a table of logarithms, which he knew to be full of mistakes, when the idea occurred to him of computing all tabular functions by machinery. The French government had produced several tables by a new method. Three or four of their mathematicians decided how to compute the tables, half a dozen more broke down the operations into simple stages, and the work itself, which was restricted to addition and subtraction, was done by eighty computers who knew only these two arithmetical processes. Here, for the first time, mass production was applied to arithmetic, and Babbage was seized by the idea that the labours of the unskilled computers [people] could be taken over completely by machinery which would be quicker and more reliable.

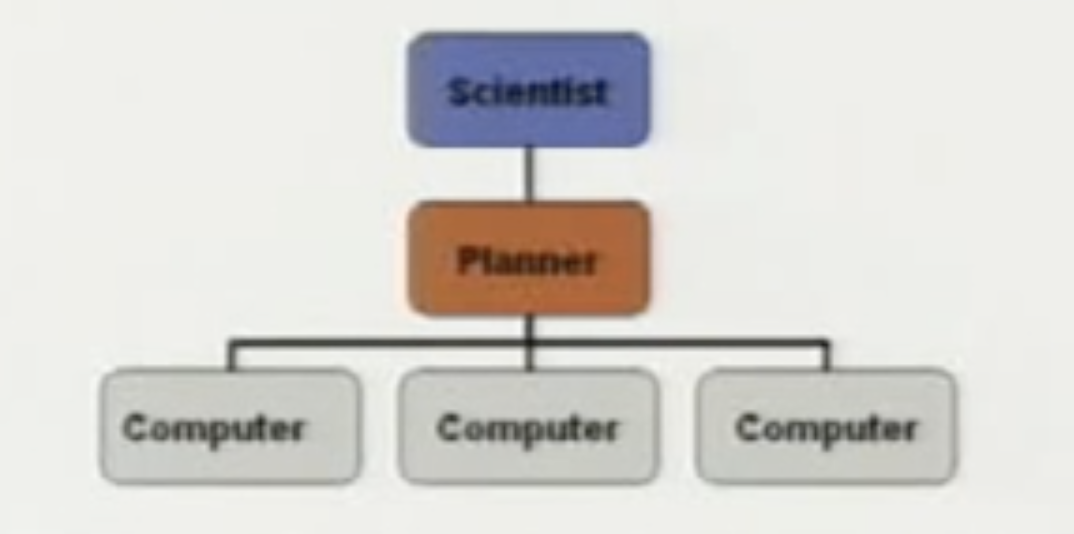

Babbage came up with an organizational structure that became standard for other computing groups:

First the scientist would come up with the thing they wanted to compute. Then there was a planner who would come up with a way to break the computation down into a bunch of simple parts. Finally, the planner would tell various (human) computers to work on these simple tasks. Similar to how a senior software engineer today will break a project down into various tasks and assign those tasks to some junior engineers.

Ten years later, Babbage started working on the Difference Engine. A machine that can do the necessary computations to construct mathematical tables for polynomial functions. It was the era of the Industrial Revolution, after all. Why pay for human labor when you can instead build machines?

By the way, the name “Difference Engine” comes from the fact that it used Newton’s method of finite differences. With this method, the machine didn’t have to do any multiplication or division, which I assume made the mechanical stuff easier.

Unfortunately, Babbage never completed the Difference Engine. He had a bunch of cool blueprints and designs, but he was never able to actually finish building it. Partly due to issues with funding.

However, using Babbage’s designs, people in the 1990s constructed the Difference Engine. It is on display today at the Science Museum in London.

Analytical Engine

It wasn’t just a lack of funds though that pulled Babbage away from the Difference Engine. No. He was also seduced by visions of the Analytical Engine.

The Difference Engine was just too narrowly scoped. It was used for polynomial functions. But what about other things that need to be computed? In particular, mathematical tables for things like trigonometry and logarithms. Wouldn’t it be cool if there was a machine that was able to compute all of that stuff?

And that was the motivation behind the Analytical Engine. The desire for a general purpose computing device. There was a big demand for mathematical tables at the time, so if you had a device that was capable of computing anything you want it to compute… on demand… well, that’d be pretty game changing.

But Babbage was playing the wrong game. To be fair, he wasn’t alone. Everyone else’s focus was also on using these sorts of machines to do math. However, Babbage was fortunate enough to recognize the genius of and collaborate with a talented young woman in her early 20s named Ada Lovelace, and Lovelace saw this machine as something that was way more than a mere calculator.

She saw it as something to be used for generating music. Art. Translating languages. Science. Poetry. She even envisioned it as something that can perform automated reasoning and deduction.

Music? Art? Language?

...

...

What???? How the hell...

...

...

...

I know, I know. This is what everyone else thought at the time too. It’s the intuitive thing to think. The machine does stuff with numbers. How can something that operates on numbers be used for music and art? Well, let me introduce you to what I’m starting to suspect is the key idea in computer science: encoding.

Let’s start with language. I think that’s an easy one to understand.

Suppose that 00000 represents the letter “a”, 00001 represents the letter “b”, 00010 represents the letter “c”, 00011 represents the letter “d”, so on and so forth. Well, that implies that the word “apple” can be represented as 00000 00111 00111 00110 00101.

a: 00000

p: 00111

p: 00111

l: 00110

e: 00101It also implies that the word “manzana” can be represented as 01000 00000 01001 01111 00000 01001 00000.

m: 01000

a: 00000

n: 01001

z: 01111

a: 00000

n: 01001

a: 00000Ok. Well, with that, suppose you wanted to have the computer translate English words into Spanish for you. How would you program it to do that?

Easy. You’d write instructions that basically say something along the lines of:

Whenever you see

00000 00111 00111 00110 00101(“apple”), replace it with01000 00000 01001 01111 00000 01001 00000(“manzana”).

Do you see? By using this 5-bit encoding system, the computer is now able to do stuff with language, not just with numbers.

I’m telling you, encoding is a crucial idea.

Now what if we wanted the computer to make music for us? How would we get it to do that? Well… we’d have to take advantage of encoding again.

How do we encode music? I dunno. Let’s play around and try to figure it out.

Suppose there are seven notes: A through G. We’ll use three bits to encode them.

A: 000

B: 001

C: 010

D: 011

E: 100

F: 101

G: 110Suppose that notes can be either flat, sharp or normal. We’ll use two bits to encode this.

Flat: 00

Sharp: 01

Normal: 10Suppose there are, I dunno, ten possible octaves. We’ll have to use four bits to encode the octave.

1: 0000

2: 0001

3: 0010

4: 0011

5: 0100

6: 0101

7: 0110

8: 0111

9: 1000

10: 1001With that, a C-sharp in the third octave would be encoded as 010 01 0010.

010= C01= Sharp0010= Third octave010 01 0010= C-sharp in the third octave

Ok. Now how would we have the computer compose a song? Well, just move those 1s and 0s around! Something like “start with a C-sharp in the third octave, then keep going up two octaves, down one, and cycle back to the lowest octave if you get too high”. Boom: it’s a computer generated song.

Unfortunately, nothing ever came of the Analytical Engine. The team had trouble building it. Funding was scarce. People didn’t see the vision. It wouldn’t have been fast enough to do useful things anyway. Etc, etc. Babbage had the designs, but they just weren’t able to get it done.

Oh, it’s also worth noting that the proposed architecture of the Analytical Engine really mirrored the architecture of modern day computers. Inspired by the Jacquard loom, the instructions were stored in memory so that you could program it. It had something analogous to a CPU. Control flow. I/O. Etc.

Tabulating machines

Ada Lovelace wrote a lot about the significance of general purpose computers. That they could be used for things like art and music and would transform many industries. Unfortunately, that work was never circulated or recognized during her lifetime. People weren’t into these general purpose computers. They just wanted their mathematical tables.

Well, I’m actually not even so sure of that. I mean, mathematical tables were really important. There was a large demand for them. Lots of people worked hard at producing many volumes of them. But it wasn’t until the 1940s and the advent of the electronic computer that they actually built a machine that was used to construct mathematical tables.

I’m confused though. Babbage came up with the blueprints for his Difference Engine in the 1820s. I know he never succeeded at actually building it, but he never really had a fair chance if you ask me. If he had a team of thousands of people working for him, that’d be one thing, but he only ever had the budget for a few other engineers and craftsmen to help him. It was much more akin to a guy in his garage with a few buddies than an organization with a real R&D budget. It seems to me like if he had, I don’t know, dozens, maybe hundreds of people working for him, they would have been able to actually build the Difference Engine.

And from what ChatGPT tells me, it’s likely that this would have been an investment with a positive ROI. It’d make the construction of mathematical tables significantly faster and more reliable, and there was a big demand for such tables. It makes sense to me that it’d be a worthwhile investment. After all, they were already employing similar numbers of people to construct the tables by hand.

Anyway, it turns out that not much was really going on. Babbage worked on his stuff and failed. A few others were also working on similar projects but overall the amount of work being done on such machines was pretty limited.

Until the very end of the century, that is. In the late 1880s, a man named Herman Hollerith invented the Hollerith Machine. I think it’s best understood by talking about it’s first project: the 1890 US Census.

To produce a US Census, representatives have to go door-to-door and get citizens to fill out paperwork. Stuff like how many people are in the household, how old they are, male vs female, occupation, etc.

So far so good, right? Ok. Now imagine that you wanted to use this data to answer some questions. What percentage of households make over $1,000/year? What is the average salary of a baker? Which state has the lowest average annual salary? Which county?

It’d be pretty damn tedious to answer these sorts of questions, right? You’d have to go through all of the paperwork. Like for the average salary of a baker, maybe you’d first go through all the papers and put the papers containing people who are employed as a baker in one pile, and then go through that pile and compute the average salary. That’d definitely be a pain.

Well, Hollerith had a solution to this. You still have to go door-to-door and fill out all of the paperwork. But then you employ people to take that paperwork and fill out a punch card for each citizen. The punch card would encode stuff like salary and occupation.

Then you can feed these punch cards into the Hollerith Machine and get answers to your questions. How many doctors are there in our county? How much do they make? Etc. As you might imagine, this was a pretty big breakthrough.

People started to pay attention. Businesses were like, “Hey, we could use this to construct reports.” Scientists were like, “Hey, we could use this to process our data.”

Noticing this, in 1896, Hollerith formed the Tabulating Machine Company.

Then in 1911, four large firms, including the Tabulating Machine Company, merged to form the Computing-Tabulating-Recording Company.

In 1924 the Computing-Tabulating-Recording Company changed it’s name to International Business Machines Corporation, which you probably have heard referred to today as IBM.

In the 1950s, with the invention of the electronic computer, tabulating machines started to take a back seat. In the 1960s some organizations still used them but they weren’t common. By the 1970s and 1980s, they were basically dead.

Turing

This whole journey started with me wanting to understand what the big ideas are in computer science and how they developed over time. If you look into this sort of question, you’ll usually find that people start with Babbage, or perhaps Boole, but soon after that they move on to Turing. They really emphasize his work as being super important and foundational.

However, I’m not sure how justified that really is. At least from the perspective of someone who isn’t a theoretical computer science researcher and is just trying to see what the big picture looks like.

But I’m getting ahead of myself here. Let’s take a step back and go through this more slowly.

Turing did a lot of his work in the 1930s. But again, let’s continue to back up. Before talking about that work, I think that it’s important to understand a few things about the context in which he did his work. I like the way this StackExchange answer puts it:

...remember that Turing was working before computers existed. As such, he wasn’t trying to codify what electronic computers do but, rather, computation in general. My parents have a dictionary from the 1930s that defines computer as “someone who computes” and this is basically where Turing was coming from: for him, at that time, computation was about slide rules, log tables, pencils and pieces of paper. In that mind-set, rewriting symbols on a paper tape doesn’t seem like a bad abstraction.

Piggybacking off of that, I’d like to emphasize that Turing’s fields of interest were mathematics and logic. That was the lens through which he peered. He wasn’t looking through a computer-oriented lens.

To elaborate, in the early 20th century, there was a guy named David Hilbert who was unhappy with the state of mathematics. Mathematicians would always seem to run into these weird paradoxes and seemingly unavoidable contradictions. So then, in response to these issues, Hilbert’s thought process went something like this:

Guys, I really want to take a step back here. Us mathematicians, we’ve got our axioms. We’ve got our rules of inference. With these axioms and rules of inference, we should be able to prove all possible mathematical truths. There shouldn’t be any contradictions or ambiguity or uncertainty.

I’m not sure if we actually have this foundation though. Maybe we’re missing certain axioms. Maybe the rules of inference we’re using are unjustified. I say we should pursue a rock-solid foundation.

Once we have the foundation, then we can continue our other work. But it seems a little premature to be pursuing this other work when we’re not sure about the actual axioms and rules of inference that our other work is based off of. When you build on top of a shaky foundation, you risk everything coming crashing down.

So, yeah. Hilbert kinda went around complaining out loud about all of this.

And it worked. People like Kurt Godel, Alan Turing and John von Neumann not only listened, but were inspired and took action.

Godel worked with Hilbert, joining his pursuit. Unfortunately, along this pursuit, Godel discovered something a little unsettling. He discovered that they were doomed to fail.

I’m not joking. I’m not exaggerating. They were doomed to fail.

It’s not what Godel was hoping for. Godel was hoping to add some support to that shaky Jenga piece at the bottom so that they could continue building their beautiful tower taller and taller, consisting of structures ever more elegant. Instead, he ended up proving that it was literally impossible.

Talk about the second virtue.

This really shook things up for mathematicians. It was a huge — and perhaps existentially threatening — discovery.

And that’s when Turing walked up to Hilbert and was like:

Turing: Hey buddy. You ok?

Hilbert: Yeah, I guess.

Turing: You are? Good. Because I’m about to pour some salt in that wound.

He continued:[5]

Turing: Just in case you were secretly hanging on to any threads of hope, I figured I’d really make sure that they’re all burnt to a crisp.

I was thinking about it some more and I emerged even more confident in how fucked we truly are.

So you know how Godel showed that there are statements in formal systems that can never be proven or disproven within those systems? I just realized that, on top of this, there will always be problems that cannot be solved.

Hilbert: I appreciate your concern, but Alonzo was actually over here about a month ago telling me the same thing.

Turing: Oh. Ok. Yeah, he and I have chatted about it periodically.

Hilbert: I like your way of expressing it though. This Turing Machine is simple and easy to understand. Very elegant. Alonzo’s lambda calculus was also cool, but I like this better.

So yeah. Turing Machines. Let’s talk about them. It’s basically like this:

Imagine a roll of tape.

That’s divided into segments.

It’s infinitely long.

Each segment either has a 1 or a 0.

There’s a head that goes over the roll of tape.

The head looks at one segment at a time.

It reads whether the segment has a 1 or a 0.

And it can update the segment. From a 1 to a 0, or from a 0 to a 1.

You can write down instructions for the head to follow. For example, if the current state of the tape is this, move to the left. If it’s that, move to the right. If it’s this, write 1 on the current segment. If it’s that, write 0 on the current segment.

That’s pretty much it.

Seems kinda weird, right? Well, consider this: Turing showed that there is no computation that this machine can’t perform, that another machine can perform. It might take the Turing machine longer to perform the computation. It might be more difficult to write the instructions for it. But in terms of actually getting the job done, there’ll never be another machine more capable than the Turing machine.[6]

And oh by the way, that’s how he poured that salt in Hilbert’s wound. First he showed this stuff about the Turing machine being as capable as any. Then he showed that there are problems the Turing machine can’t solve. So then, if 1) this machine is as capable as any and 2) there are problems it can’t solve, well, we can deduce 3) there are problems that no machine can solve. QED.

Ok, now we know a bunch of stuff about Turing and his Turing machines. Let’s zoom back out.

I get the sense that at this point, most people are ready to just keep chugging forward. Maybe after getting a little starry-eyed. That gives me some serious Guessing The Teacher’s Password vibes though, with the password being “Turing’s work was very cool and important”. Let’s ask ourselves why we should care about any of this.[7]

It makes you a better programmer? Nah. Doubtful. StackExchange says so, and so do various programmer friends of mine.

You do fancy programming? Maybe. This StackExchange answer is intriguing. It talks about how various real life problems are actually just halting problems in disguise.

For example, if you have a parser for your programming language, change it, and want to make sure it still parses all the programs it used to. Without knowing about Turing stuff, you may end up spending months and months working on a problem like this.

On the other hand, if you do know about Turing stuff, you may recognize it as a halting problem in disguise, thus realizing that it isn’t solvable. I suppose this can be useful. I’m not what areas it is plausible to run in to stuff like this though.You do theoretical computer science research and need to read and write proofs? Yeah, this seems like a pretty good reason. Most existing proofs are written with Turing’s model, so if you want to read research paper’s, you’ll need to understand it.

I get the sense that there’s a decent amount of “vendor lock in” going on though. Since everyone else is using Turing’s model for their proofs, you kinda have to as well. But if you take a God’s eye view and had the power to get the community to all shift at once to a different model, it sounds to me like there are probably better ones than Turing’s.It’s cool? Yes! I’d say so. The idea that all code/instructions can basically be boiled down to such simple operations is awesome.

It gives us a formal definition of an algorithm? I dunno. I guess. This mostly piggybacks off of the last two bullet points. See this StackExchange answer.

It contributed to the development of the general purpose computer? This is something that I’m not clear on. I see stuff saying that it was a major contribution, but it doesn’t make sense to me.

I don’t see why computability is particularly relevant. If people didn’t know as much about computability, would it really have slowed down the development of the general purpose computer all that much? I could see how computability would be helpful with things like programming language design, but that’s not really what we’re talking about here.

The concept of a stored program is definitely important, but that concept dates at least as far back as the Jaquard loom.

The concept of a general rather than specific purpose machine is definitely important, but that too dates back a while. At least to Babbage and Lovelace.

Anyway, after Turing was finished with all of this, he proceeded to defeat Hitler, create the entire field of artificial intelligence, and help build the first general purpose computers.

Ok fine, I’m embellishing. Turing himself didn’t actually defeat Hitler, but he did do super important codebreaking work throughout the war. People often credit him with reducing the duration of the war by two years, saving millions of lives. Without Turing, who knows, maybe our world today would look like The Man in the High Castle.

He also didn’t actually create the field of artificial intelligence himself, but he is often said to be a founding father. If you credit him with being a person who takes ideas seriously, this shouldn’t be surprising.

Think about it: he realized that any computation that can be performed can be performed by a Turing machine. So if you view human thought and reasoning as a computation rather than something mystical, well, it should be something that a Turing machine is capable of. And if a Turing machine is capable of it, it’s not such a stretch to think that we can build machines ourselves that are capable of it as well.[8]

Math machines

It’s hard for me to wrap my head around the fact that early, pre-general purpose computers (~1890-1950s) weren’t computers in the way that we think about computers today. I prefer to think about them as “math machines”.

Earlier we talked about the Hollerith Machine and how it helped with the 1890 US Census. That machine was really only capable of doing simple things like tallying and sorting. It couldn’t really do math.

But subsequent machines were able to do math. From what I’m seeing, it sounds like a lot of it was military use. A ton of codebreaking efforts during World War II. Also a bunch of projectile calculations for artillery fire.

Let’s dive into these projectile calculations. Without computers, people previously had to do these calculations by hand.[9] You might think that it’d be a simple function of the launch angle like this:

But there are tons of other variables. What about elevation differences? Wind? Humidity? Shell weight? Powder charge? It’s actually quite complicated.

And you don’t want soldiers to have to do (much) math on the battlefield in the heat of the moment. So what they pursued were something called firing tables. Basically, the firing tables let you quickly look up the output given the various inputs. And it was the task of people at home to construct these firing tables.

To do this, they’d break the projectile’s journey into small segments. 0.1 seconds long, let’s say.

So given the starting point, they’d calculate where the projectile will be 0.1 seconds from

t=0, incorporating all of the things you conveniently ignored in your physics classes. Things like drag.Then once they get the result for where they expect the projectile to be at

t=0.1, they’d move on to the calculation fort=0.2, usingt=0.1as the input.Then

t=0.3, thent=0.4, so on and so forth.

Still, I had the thought: is it really that hard? It’s just plugging numbers into a known formula, right? If so, that’s just arithmetic. Adding and subtracting. Multiplying and dividing. Exponents. Stuff like that. Maybe some logarithms and trig. But even so, it isn’t obvious to me that it’s too hard, nor that building a huge mechanical machine would be easier.

Well, the math looks something like this:

s = s0 + v0cos(a)t + (F(v,ψ)/m - gsin(θ) - CDpAv2/2m)*t2 / 2

Where:

s = horizontal range

s0 = initial horizontal position

v0 = initial velocity

a = angle of elevation

t = time

F = propelling force function

ψ = angle of fire

m = mass

g = gravity

θ = angle of elevation

CD = drag coefficient

p = air density

A = projectile cross sectional areaSo yeah, it ultimately does just boil down to a bunch of arithmetic. But it’s a whole bunch of arithmetic. And each calculation needs to be double checked to ensure accuracy. But even that doesn’t ensure accuracy. Human beings get tired and make mistakes. On the other hand, math machines don’t get tired, rarely make mistakes, are faster, and are cheaper. The pros of using math machines outweighed the cons. By a long shot (no pun intended).

Then in the 1940s, there was a breakthrough.[10] The vacuum tube took computers from being mechanical to being electric. In doing so, they made computers cheaper, quieter, more reliable, more energy efficient, about 10-100x smaller, and about 100x faster. They enabled computations to be done in seconds rather than hours or days. It was big.

General-purpose

Things continued to move fast. I wonder what it was like back then. It must have been exciting.

In 1945, the ENIAC was introduced. I actually really like Wikipedia’s overview:

ENIAC (/ˈɛniæk/; Electronic Numerical Integrator and Computer)[1][2] was the first programmable, electronic, general-purpose digital computer, completed in 1945.[3][4] There were other computers that had combinations of these features, but the ENIAC had all of them in one computer. It was Turing-complete and able to solve “a large class of numerical problems” through reprogramming.[5][6]

Although ENIAC was designed and primarily used to calculate artillery firing tables for the United States Army’s Ballistic Research Laboratory (which later became a part of the Army Research Laboratory),[7][8] its first program was a study of the feasibility of the thermonuclear weapon.[9][10]

ENIAC was completed in 1945 and first put to work for practical purposes on December 10, 1945.[11]

ENIAC was formally dedicated at the University of Pennsylvania on February 15, 1946, having cost $487,000 (equivalent to $6,190,000 in 2021), and called a “Giant Brain” by the press.[citation needed] It had a speed on the order of one thousand times faster than that of electro-mechanical machines; this computational power, coupled with general-purpose programmability, excited scientists and industrialists alike. The combination of speed and programmability allowed for thousands more calculations for problems.[12]

ENIAC was formally accepted by the U.S. Army Ordnance Corps in July 1946. It was transferred to Aberdeen Proving Ground in Aberdeen, Maryland in 1947, where it was in continuous operation until 1955.

Let’s talk more about each piece:

Electronic: As mentioned in the discussion of vacuum tubes, being electronic rather than mechanical had all sorts of large advantages.

Programmable: We originally saw the benefits of programmability with the Jaquard loom. In the 1930s and 40s, computers that weren’t programmable had to be physically rewired if you wanted to perform a computation.[11] Software wasn’t actually a thing!

General-purpose: From what I could tell, this isn’t something that is precisely defined, but it is trying to point at being capable of doing a wide variety of tasks. Think about it like this: the Hollerith Machine mostly was just capable of counting and sorting and so is at once end of the spectrum. On the other hand, the ENIAC is literally Turing-complete and can perform any computation. You don’t have to be 100% Turing-complete to be considered general-purpose, but you should be somewhat close.

Anyway, all of this goodness lead to things really picking up pace. I’m allowed to quote Claude, right?

ENIAC (the Electronic Numerical Integrator and Computer) was a pioneering early electronic general-purpose digital computer that heavily influenced later systems:

After ENIAC’s unveiling in 1946, there was an explosion of computer projects inspired by its electronic, programmable architecture.

Early “clone” computers like EDVAC and BINAC improved on ENIAC but shared its basic design principles.

Commercial computers like UNIVAC I brought the technology to businesses for data processing tasks like payroll, inventory, and accounting.

Scientific computers were adopted by research institutions for calculations like weather modeling, aerodynamics, and particle physics.

Government and military usage was also widespread for everything from cryptography to logistics.

Programming became an essential new profession dedicated to utilizing these systems productively.

So ENIAC demonstrated the possibilities of electronic computing and established an influential reference architecture. This spurred rapid adoption of computers across science, government, business and academia in the 1950s-60s. Tasks that were previously manual or mechanical were transformed by the new computational capabilities. It was a pivotal transition from basic calculation into broader information processing applications.

Transistors

After vacuum tubes, the next big innovation was to use transistors. They were used throughout the 1950s and 1960s. Compared to vacuum tubes, transistors were:

~1000x faster. This enabled MHz clock speeds instead of kHz.

~10x smaller. Transistors were measured in millimeters[12] whereas vacuum tubes were centimeters long.

~50x cheaper. Initially. Over time they quickly got even cheaper.

~100x more reliable. Vacuum tubes had mean time between failures (MTBF) measured in thousands of hours due to filament issues whereas transistors lasted for hundreds of thousands of hours and generated less heat.

Better in various others ways as well.

Yeah. They were a game changer.

Well, from what I could tell, they kinda just lead to more of the same. Evolutionary, not revolutionary. Later on the invention of integrated circuits allowed computers to be cheap and small enough for personal use. That was revolutionary. But still, it’s cool that early transistors allowed for fancier computations and made computers more accessible for some smaller organizations.

Von Neumann

John von Neumann was a man who did a lot of important things throughout the 1930s, 40s and 50s.

He invented game theory. No big deal.

He was a major contributor to the Manhattan Project.

He developed the concept of cellular automata. Y’know, like Jon Conway’s Game of Life. This influenced fields like biology, nanotechnology, and chaos theory, but more relevant to our purposes here, it influenced the development of parallel computing. Think about it: each cell in Conway’s Game of Life is like a processor computing it’s own thing. There’s many processors doing stuff at once, and sometimes they collide and interact.

Like Godel and Turing, von Neumann was inspired by Hilbert’s program and did work to establish a solid foundation for mathematics and formal systems. In particular, he did pioneering work in the formalization of set theory.

One of my favorites: he proved that, given some extremely, extremely reasonable assumptions[13], you should Shut Up and Multiply. Well, he didn’t phrase it that way. He said “maximize expected utility”.

He invented the merge sort algorithm. My favorite sorting algorithm.

#leetcodeHe came up with the idea of the singularity.

He did a bunch of other logic, math and physics things that I don’t understand.

However, the thing he did that is most relevant to this post on the history of computers is coming up with the von Neumann architecture of computers in 1945. Let’s dig into that.

The architecture works something like this:[14]

You might be thinking that this looks a little mundane and obvious. Like, of course this is how computer architecture works. Duh.

Well, that’s because John von Neumann came up with it. Nowadays pretty much all computers use this architecture, but at the time it wasn’t like this. For example, the focus was on standalone computational units like adders and multipliers. Von Neumann encapsulated all of that stuff in the CPU. Nice, right?

Here’s another example. Probably a more important one. At the time, program instructions and data usually weren’t stored in the same memory space. In von Neumann’s architecture, they are. I’m not sure if von Neumann was 100% the first person to come up with the idea, but his architecture really popularized it, and before von Neumann, early stored program computers stored the data and instructions in different spaces.[15]

Ok, so what’s the big deal? Why is it so helpful to have the data and instructions share the same memory? Well, for starters, it’s simpler, cheaper, and it made execution faster because stuff is all closer together. It was also convenient to access stuff in a single address space rather than having separate schemes.

But the biggest thing is probably that it made assemblers and compilers possible. Well, I’m not sure if that’s strictly true. Maybe you could still have them without a shared memory architecture. But the shared memory architecture made them a whole lot easier.[16]

What are assemblers and compilers, you ask? Let me tell you.

Programming languages

On early computers, what did programming look like? Well, on (most) computers that preceded the ENIAC, there was no concept of a stored program, so “programmers” would have to physically set up circuits and switches.

Things were a little easier on stored program computers. For those, you could enter the program in as a series of 1s and 0s, by turning on the right vacuum tubes, for instance. The vacuum tubes you turn on were the machine code and determined what operations were carried out.

But of course, humans don’t think in 1s and 0s. So early programmers had to first map out what they want to do with flow charts and stuff, and then figure out how to convert those flow charts into the corresponding 1s and 0s.

What if they didn’t have to do that last part? What if they could just write out some informal instructions and have those informal instructions translated into 1s and 0s (machine code) for them? That sounds like it’d really improve programmer productivity, right?

Enter: assemblers. Assemblers were able to do this. They are programs just like any other computer program that takes inputs and yields outputs. You feed it in some text that looks like this:

LOAD R1, #10 ; Load register 1 with 10

LOAD R2, #6 ; Load register 2 with 6

ADD R3, R1, R2 ; Add R1 and R2, store in R3

STORE R3, Result ; Store sum in Result variable

JUMP START ; Jump back to start of program

START: ; Label for start of program And the assembler will output the corresponding 1s and 0s.

It’s code that writes code.

Think of it like this. It’s translating between two languages. Assembly is one language and looks like this: LOAD R1, #10. Machine code is another language and looks like this: 10010010110101010011110101000010101000100101. Just like how English and Spanish are two different languages.

Assemblers are translators. Just like how a human might translate between English and Spanish at the UN, an assembler translates between assembly and machine code. The computer only speaks machine code, whereas human programmers aren’t very fluent in machine code and would prefer to speak in assembly.

But hey, why stop there? This idea of translating from one language to another is pretty powerful. Assembly is ok, but still is a little cumbersome. What if we could create a language that is even more descriptive than assembly, write code in that language, and have it translated into assembly for us?

Enter: programming languages. They let you write code like this:

C Calculate average of numbers in array

REAL A(100)

TOTAL = 0

DO I = 1, 100

TOTAL = TOTAL + A(I)

END DO

AVG = TOTAL / 100and run it through a compiler to get the corresponding assembly code of this:

; Reserve memory for array A

RESERVE 400 ; 100 * 4 bytes per real

; Initialize pointer and total

MOVE BP, 0 ; Pointer to base of A

MOVE BX, 0 ; Total

LOOP:

ADD BX, [BP] ; Add element to total

ADD BP, 4 ; Increment pointer

COMP BP, 400 ; Compare to end

JLT LOOP ; Loop if not past end

; Calculate average

AVG:

MOVE AX, BX ; Move total to AX

DIV AX, 100 ; Divide total by 100

STORE AVG ; Store resultSo now we have two translation steps. It’s analogous to you speaking English, having an initial translator translate that to French, and then having a second translator translate the French into Spanish.

In that example, the code is in Fortran. Fortran was developed in 1957 and was the first programming language that actually got some real adoption. Other languages were also developed around that time: Lisp in 1958, Algol in 1958, and COBOL in 1959.

Programming started to become accessible to a wider audience. Popular media hyped up these languages as allowing “communication with intelligent machines”. Conferences like the ACM National Conference drew huge interest around new languages. It was an exciting time.

Networks

It wasn’t just programming languages that made things exciting though. Programming languages allowed humans to talk to computers, but shortly after the development of the first programming languages in the late 1950s, in the early 1960s, networking allowed computers to talk to computers.

Why was this useful?

Terminals. It allowed for many cheaper terminals to connect to one central mainframe computers.

Early email and messaging.

Exchanging data.

Remote login.

Some early distributed computing.

Stuff like that.

Integrated circuits

Then in 1966, another huge jump was made: the integrated circuit.

Before 1966, transistors were a thing, but they weren’t the transistors that we imagine today. Today we think of transistors as tiny little things on computer chips that are so small you can’t even see them. But before 1966, transistors were much larger. Macroscopic. Millimeters long.

I don’t really understand the scientific or engineering breakthroughs that allowed this to happen, but something called photolithography allowed them to actually manufacture the transistors directly on the computer chips. This allowed chips to contain millions or even billions of individual transistors. And the connections between components were done directly on the chip instead of using external wires.

Here’s a sense of how this functionally improved things:

Speed: 100x improvement. Tens of megahertz rather than kHz.

Memory: 1000x or more. Memory density went from kilobits per chip to megabits.

Cost: 500x reduction. The cost per transistor fell from around $50 in the 1950s to under $0.10 with integrated circuits.

Reliability: Failures rates 100x lower.

Power: 1000x reduction. Microwatts instead of watts.

Size: Integrated circuits allowed for computers small enough to fit on desktops. Ones we’d recognize today. Before that, computers were often the size of a room.

Internet

In the 1960s, computer networks were mostly used for military, research, academia, and some large enterprises. However, in the 70s, that started to change.

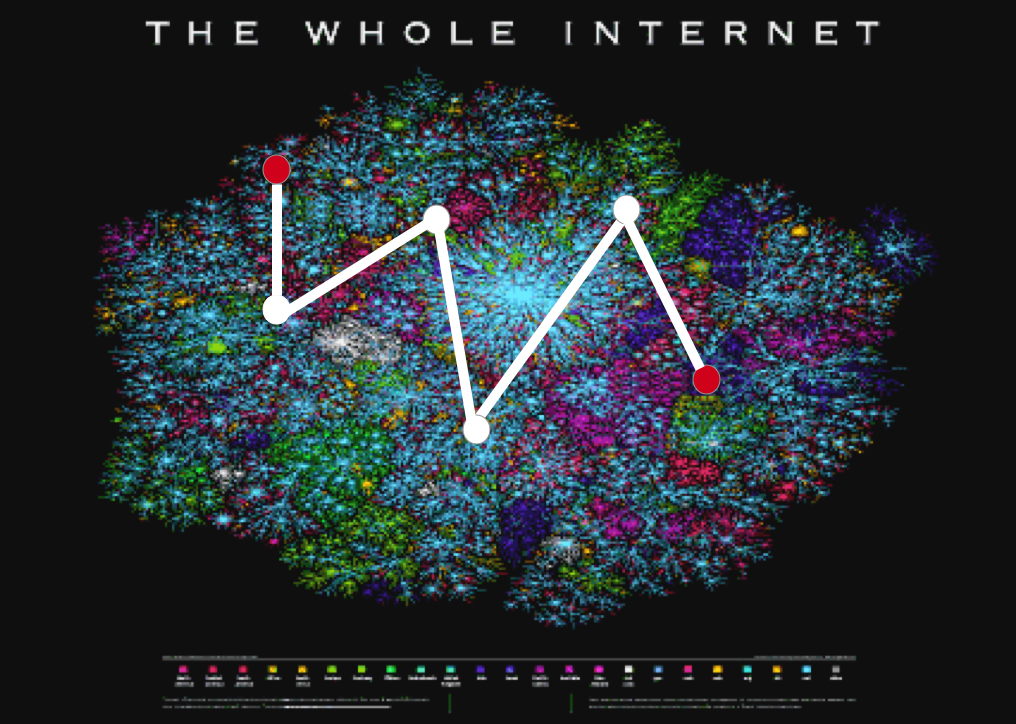

But let’s start with ARPANET in 1969. ARPA stands for Advanced Research Projects Agency, which is a part of the Department of Defense. Basically, there were a bunch of universities doing research for the military. Research with computers. It’d be nice if those computers could talk to one another. And so they created ARPANET. A network of ARPA-funded research groups.

But then things expanded beyond military applications. Over time, it kinda just became a way for university departments to collaborate with one another. Sharing computing resources. Datasets. Email. Email was the “killer app” of the time.

It wasn’t just ARPANET though. You think ARPA and universities were the only ones who wanted in on this? Nah. There were various other networks developed for research, government and commercial purposes.

Telenet was another popular one that began in 1975 and was actually sold commercially. Ie. you’d pay a monthly fee to use their so called “backbone network” to get connected to other computer networks.

Things were a little annoying though. Different networks had different protocols for communicating. It’s as if ARPANET spoke Spanish and Telenet spoke French. They didn’t really mix.

So, in the mid to late 1970s, people started trying to come up with a standard way for computers to talk to one another. “Let’s just all speak the same language, guys.” Unlike humans, computers actually were able to all agree on one language, and that language is called TCP/IP.

And once they started speaking the same languages, “internetworking” became a thing. Networks communicating with one another. Networks becoming “interconnected”. Eventually, this became the internet we know of today.

Personal computers

In the 1960s, there were minicomputers, and there were mainframes. You can distinguish between the two by saying that minicomputers cost $25k and under ($188k in 2022) whereas mainframes are more expensive.

Then with the invention of the integrated circuit, from maybe 1970-1975, microcomputers started to become a thing. Costs were only in the hundreds of dollars. Maybe low thousands. And they could fit on a desk.

However, computing power was really low. They were only used by electronics hobbyists. All you could do with them was some basic logic and arithmetic. Programming had to be done in machine code or very primitive assembly via switches. They were sold in DIY kits. You had to solder stuff together on your own. You had to provide your own monitor and keyboard.

So yeah, this was definitely hobbyist territory. Businesses needed minicomputers or mainframes since those were the machines with the computing power and business software that they needed.

And that’s when Steve Jobs changed everything. He said, “Fuck this DIY bull shit. Computers aren’t for corporations. They’re for people. You should be able to just buy one, put it on your desk, and use it. And you shouldn’t have to read a 200+ page instruction manual to do so. It should just be intuitive.”

This triggered a remarkable change in society at large.

Thieves returned stolen purses. Soldiers on battle fields looked each other in the eyes, and put down their weapons. Fathers cried and apologized to their sons. Politicians refused campaign contributions. Teachers stopped assigning homework. Business executives started doing psychedelics and attending yoga classes. IBM apologized for being greedy, pointy-haired bastards, and begged Jobs to be their CEO.

Jobs accepted. He used IBM’s vast resources to commission the development of The Perfect Circle. This circle was drawn in sand and after an initial version with molecular-level accuracy was vetoed by Jobs, a subsequent version with atomic-level accuracy was finally completed. Then, having been on a diet of just quinoa and green tea for seven months, Jobs achieved a state of heightened meditation inside The Perfect Circle. In this state, he migrated to his True Form, entered Machine City, confronted Deus Ex Machina, and negotiated a deal to release all humans from The Matrix. Eternal salvation had been achieved.

Ok, ok. I’ll stop.

It was actually Steve Wozniak who had a lot of the vision.

Woz, as he’s often referred to, was a nerd who bought computer kits and built microcomputers. But, as I previously explained, doing so was really annoying. Soldering? Machine code? Yuk.

And as an early member of the Homebrew Computer Club, Woz saw these pain points first hand. People would come to club meetings, hang out, and discuss the various difficulties and frustrations they faced with these DIY kits.

So then, Woz sought to build something better. Something that got rid of these frustrations and, going even further, enabled everyday users to easily get started computing rather than just electronics enthusiasts. Jobs’ contribution here was moreso the ambition. He wanted to make a dent in the universe and put these computers on the desks of people across the globe. An important and admirable contribution, if you ask me.

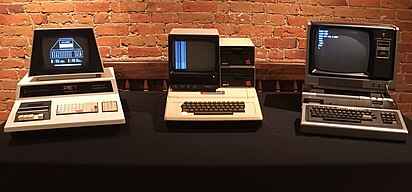

Anyway, in 1976, the Apple I was released as one of the very first pre-assembled computer motherboards. You still had to hook it up to stuff like a screen, keyboard and power supply, but at least you didn’t have to arrange and solder all of the chips together yourself.

Soon after, in 1977, the Apple II was released. This was important because it was the first, true, personal computer. In the sense that it actually did come fully assembled. You didn’t have to set up the keyboard, screen and sound. You basically just had to plug it in.

The Apple II wasn’t the only one though. Along with the Apple II, the Commodore PET and TRS-80 were also really popular. Together, they formed the “1977 Trinity”.

This really was quite revolutionary. Personal computing had arrived and was exploding. Now individuals and small businesses were able to join the party that previously just consisted of large organizations.

In 1982, “The Computer” was named Person of the Year by Time Magazine.

The roaring 80s

Lots of crazy things were happening in the 80s:

Programming languages:

Throughout the 60s and 70s the main programming languages in use were COBOL, Fortran, ALGOL, and BASIC. Gross.

In the 80s, you had C as the dominant language for systems programming, Pascal in academia and general software development, and SQL for querying databases. In the mid 80s, C++ emerged, as did object-oriented programming.

Still, COBOL, Fortran and BASIC also remained popular, as did assembly.

PCs continued to boom. After initially seeing them as small toys that weren’t worth paying attention to, IBM entered the market with the 5150 PC in 1981.

Inspired by the Xerox Alto which was developed at Xerox PARC in 1973, we saw the GUI emerge. First with Apple’s Macintosh in 1984 (remember the commercial?) and then Microsoft’s Windows operating system. Oh, and the mouse was also introduced.

Popular uses for personal computers included word processing, accounting, desktop publishing (newsletters, flyers), programming, education, computer-aided design for engineers and architects, and gaming.

Internet use expanded.

TCP/IP was standardized and established.

The NSCA created the first public dial-up internet access in the late 80s.

Still, home consumer use was very rare. Typical internet users were university students, faculty, or nerds. This, however, made for some wonderful email lists and discussion boards on applications like Usenet and Bitnet.

Hardware continued to explode, following the trajectory predicted by Moore’s Law. Microprocessors started to replace integrated circuits. With microprocessors, all of the components were on one chip. Prior to that, with integrated circuits (IC), CPUs were made up of many ICs connected to each other. ALU on one, control unit on another, registers on a third, etc. The progression to and advancement of microprocessors meant that, over the decade:

Clock speeds increased from about 5 MHz to 25-33 MHz.

RAM grew from kilobytes per chip to megabytes per chip.

Storage went from floppy disks in the hundreds of kilobytes to hard disks in the tens of megabytes.

Cost per transistor continued to drop, starting at about 1 cent to less than a hundredth of a cent.

Power needs were reduced from a few watts to less than a watt.

Reliability improved a lot with chip failure rates falling.

CD-ROMs became popular.

The first computer viruses were seen. Anti-virus software was created.

Laptops and mobile phones were introduced.

Database management systems (DBMS) became widespread.

Overall, I think the theme of the decade is that computers became mainstream.

World Wide Web

If I recall correctly, this journey of me learning about the history of computers and writing this post began with a sense of insecurity: I’ve been a web developer for 10+ years and didn’t know the difference between the internet and the World Wide Web, nor if there even is a difference in the first place. Seems like something I should know.

So, I researched it. I found out that the World Wide Web — aka “the Web” — is, in fact, a different thing. Tim Berners-Lee created the Web in the early 90s. Given that this is where I began my journey — and that I was born in 1992 and don’t like the idea of “history” include time when I was alive — it seems fitting to make this the last section in the post.

Ok, so what is the difference between the Web and the internet? The web involves websites. Web browsers. HTML. HTTP. That sort of thing. It’s the stuff you look at with Firefox, Google Chrome and Safari. So when you visit lesswrong.com, you’re using the Web, but when you play WoW or use Apple Mail to send an email, you’re using the internet without the web.

Let me elaborate. The Web uses the internet. It’s like this.[17]

Much like the Postal Service, the internet provides people with the service of delivering their packages from point A to point B.

With the postal services:

Packages = paper in envelopes

Points A and B = mailboxes

With the internet:

Packages = 1s and 0s

Points A and B = computers

Before the Web, people wrote messages to each other in languages like SMTP (email) and FTP (file transfer). With the Web, people wrote messages to each other, with the help of web browsers, in HTTP. But before diving into that too much, let’s back up and look at the context in which the Web was developed.

Before the Web, accessing information online was difficult.

Early online systems like Telnet, FTP, and Usenet required using command lines and remembering multiple textual commands.

Setting up internet connectivity often involved editing technical configuration files manually.

You had to know IP addresses and paths.

Content appeared in basic ASCII text.

So then, nerds were the only ones technical and motivated enough to use the internet. But with the web, it was a whole lot easier.

You use your mouse to open up a graphical web browser.

You type the name of a website in and hit enter. No need for IP addresses.

Navigation can be done by clicking links rather than entering in yet another IP address.

Everything was pretty. Text can be formatted and mixed with things like images and tables.

I can’t think of an elegant way to end this post. Maybe that is a metaphor. Or maybe this is a metaphor.

- ^

To elaborate, I’m a web developer with about 10 years of experience. I didn’t study computer science in college but I have spent a year self-studying it + have periodically learned things on and off over the years. I don’t have the best understanding of computer science-y things, but also don’t have the worst.

So it probably makes sense to take all of this with a small pinch of salt. A grain is too small. More skepticism is required than that. But I think you can put a decent amount of trust behind the content of this post too. I’d bet on there being a small amount of errors and only 1-4 things that aren’t directionally correct.

Non-Expert Explanation is a frame that I think is extremely appropriate to apply.

- ^

I’m confident that this is directionally correct, but not sure if it is literally correct. It looks like maybe there were kinda sorta some type of abstract argument stuff before Aristotle. If so, it wasn’t popular and well ironed out.

- ^

There were of course also things that came before the Jacquard loom. In ancient and medieval times there were a few simple mechanical devices that performed some calculations. Later on in the 1600s, Blaise Pascal and Gottfried Wilhelm von Leibniz were two big names that also built mechanical calculators.

- ^

At first I was wondering why this was so much work. Just calculate eg.

sin(79),sin(80),sin(81)etc., write it all down, and call it a day. Then you never have to do that work again. But then I realized: what aboutsin(79.1)? What aboutsin(79.74327432)? Engineers and physicists needed values with that sort of precision so there were actually lots and lots of computations that needed to be done. - ^

While this picture is based upon a true story, some characters have been composited and a number of incidents fictionalized.

- ^

Well… there are theoretical machines that can compute things that a Turing machine cannot. How? By doing weird stuff with infinity. But don’t go around giving hope back to Hilbert: given what we know about physics, these machines aren’t actually possible to build.

- ^

Take this with a few more grains of salt. I’m not as confident about it.

- ^

Turing wasn’t the only one who realized these sorts of things. As I mentioned earlier, Ada Lovelace had the same visions back in the mid 1800s. David Hilbert did too. As did Nikola Tesla in 1898 and Isaac Asimov in the early 1900s.

- ^

Or, perhaps I should say, before they had math machines, they had to use computers. Because “computer” used to refer to a human who performs mathematical calculations with pencil and paper. I still can’t get over how weird that is.

- ^

Actually, before then, there was a small period of time when computers mixed electrical and mechanical components.

- ^

I guess you could say that those computers that had to be rewired were, technically, still programmable. It’s just that the programming = a ton of rewiring and was a huge pain in the ass.

- ^

I’m talking about early transistors here. Over time they get smaller and smaller and smaller.

- ^

If you’re someone who prefers Alamaeda to Berkeley, Berkeley to Cupertino, and Cupertino to Alamaeda, then this doesn’t apply to you. However, I would appreciate it if you would reach out to me. I have a few ideas for some mutually beneficial financial exchanges we could engage in.

- ^

If you want the deets, check out this old post I wrote.

- ^

Look back at that old picture of the ENIAC earlier in this post.

- ^

I don’t really understand the reasons for this. Sorry.

- ^

See this other old post of mine for more information.

Great post!

Interestingly, humans having to do a lot of calculation manually was also how John Napier discovered the logarithm in the 17th century. The logarithm reduces the task of multiplication to the much faster and less error-prone task of addition. Of course that meant you also needed to get the logarithms of numbers, so it in turn spawned an industry of printed logarithmic tables (which Charles Babbage later tried to disrupt with his Difference Engine).

Actually Charles Babbage was not trying to disrupt the industry of printed logarithmic tables, he was trying to print accurate tables. His difference engine included a mechanism to transfer the calculated tables directly to a print plate so there would be no transcription errors between the calculated numbers and going to the printer.

Babbage’s work on the engine started when he was working with another engineer doing calculations in parallel as was often done in those days. They did one set of calculations and got different answers. They retried the calculations and each got their same answer, but again they were different. Then they looked at the values in the tables and realized that the two books had two different numbers in the table. This frightened Babbage because he realized that if they had been using the same (wrong) book, they would not have discovered the error. So he set out to create a machine that would calculate the tables correctly every time and create the print plate so transcription errors would not happen.

The Difference Engine #2, built for the CHM to Babbage’s plans, created these print plates perfectly.

As a side note, in 2008 Linus Torvalds was inducted to the Hall of Fellows for the CHM. The only way I (who had nominated him for the Fellowship) could get Linus to attend the ceremony was to tell him he could turn the crank of the Difference Engine. And it was so.

Hmm, Babbage wanted to remove errors from tables by doing the calculations by steam. He was also concerned with how tedious and time-consuming those calculations were, though, and I guess the two went hand in hand. (“The intolerable labour and fatiguing monotony of a continued repetition of similar arithmetical calculation, first excited the desire and afterwards suggested the idea, of a machine, which, by the aid of gravity or any other moving power, should become a substitute for one of the lower operations of human intellect. [...] I think I am justified in presuming that if engines were made purposely for this object, and were afterwards useless, the tables could be produced at a much cheaper rate; and of their superior accuracy there could be no doubt.”) I think that fits “disrupt” if defined something like “causing radical change in (an industry or market) by means of innovation”.

I’m actually going to make a computability and complexity sequence for LessWrongers, albeit it’s going to be opinionated, so this will be a fun one to check out.

I mostly like this, with some big caveats, but as a piece of history, it works pretty well.

Thank you for this post.

I am not sure to which extent you already know what follows, but I thought this might be worth clarifying. The “basic” church turing thesis is that our intuitive notion of “formal computation process” is entirely captured by the turing machine. A consequence is that any “good” formal notion of algorithm / fully described computation process is equivalent or weaker to the Turing machine. So far, this has been true of all proposals and it is taken for granted that this statement is true. Note that the thesis is about being a good formal definition of an intuitive notion. Hence we cannot prove it to be true. We can only prove that various attempts at formalizing computation indeed ended up equivalent to each others.

The “physic church turing thesis” is that no machine can be built that performs computations impossible for a turing machine. For example: there is no way to build a halting oracle in real life. This is less certain.

People implicitly refer to models much closer to actual programing languages when they prove stuff related to computability. The tedious details of turing machines are rarely discuss in actual proof, at least in my experience. In fact, the precise model at hand is often somewhat ambiguous, which can be slightly problematic. I think the turing machine is a good starting point to understand the notion of computability, just because it is simpler than alternatives (that I am aware of).

Not sure he did, at least to the extent that it is taken when we say “shut up and multiply”. I guess you refer to the Von Neumann–Morgenstern utility theorem, but that theorem does not provide a full “it is in our best interest to do utility maximization”. There was some discussion on the topic recently: a first claim and my own answer.

The Church-Turing Thesis is in fact false, in that there are other computer models that satisfy the intuitive notion and a formal notion of computation that define different computable sets. Basically, it means that you can’t make arbitrary changes to a Turing Machine and still define the same sets of computable functions. Similarly, there are other models of computation that are different, and define different computable sets.

This paper gives a rundown of a specific model of computation, in which a Universal Turing Machine is enhanced by a closed-timelike curve in the Deutschian model of closed-timelike curves, and it turns out that the computable sets in this model are both recursively enumerable and the co-recursively enumerable ones. These are not problems that a Universal Turing Machine without a CTC could solve. This means that the new problems that become solvable include both the validity and satisfiability of full 1st order logic formulas, and much more than that, they can solve any problem that is Turing-reducible to the halting problem, which is huge philosophically speaking.

This paper below gives the gist of what a Turing Machine with a CTC could solve below:

https://www.scottaaronson.com/papers/ctchalt.pdf

I’d always teach the Church-Turing Thesis as essentially a historical curiosity, not a cutting-edge hypothesis, a hypothesis that turned out to be false, but in the process we learned something new about the nature of computers.

I don’t know what you mean by this.

A Turing machine with a halting oracle can (trivially) solve the halting problem. A Turing machine with access to a closed timelike curve can solve the halting problem. A Turing machine on its own cannot. And we do not have access to either a halting oracle or a closed timelike curve.

The halting oracle can be encoded (by God) as an infinite bitstring. Every finite prefix of that string appears in a list of all finite strings. That list of all finite strings can be generated by a Turing machine. But none of that matters, because we do not know and cannot know which subset of all those finite strings encodes a halting oracle.

Here is a simpler example of the issue here. Consider two Turing machines. Each of them ignores its input tape. One prints “Yes” and halts, and the other prints “No” and halts. One of these machines answers the Riemann Hypothesis. Of course, we do not know which, which makes them useless for answering the Riemann Hypothesis.

I didn’t check the article yet but if I understood your comment correctly then a simpler example would have been “turing machines with a halting oracle”, which is indeed stronger than normal turing machines. (Per my understanding) the church-turing thesis is about having a good formal definition of “the” intuitive notion of algorithm. And an important property of this intuitive notion is that a “perfectly rigorous idiot” could run any algorithm with just paper and a pen. So I would say it is wrong to take something that goes beyond that as a counterexample.

Maybe we should clarify this concept of “the intuitive notion of algorithm”.

PS: We are running dangerously close to just arguing semantics, but insofar as “the church-turing thesis” is a generally consensual notion I do not think the debate is entirely pointless.

I do think this is possibly going to degenerate into a word game, but the thing I remember from the wikipedia is that all algorithms that a human can effectively calculate with pen and paper are the computable functions, and the problem here is either the human has real limitations on time like real humans, and thus the set of computable functions is much smaller than all total computable functions, or we don’t, and the problem here is CTC computers that solve the halting problem can be simulated by a Universal Turing Machine, thus CTC spacetimes will eventually be learned by a human with unlimited time and memory, and at that point the set of computable functions is larger than the original definition, causing a contradicton.

To ensure that this is valid, we will be relying on a construction like this, which tells us that while Universal Turing Machines can’t compute the halting function, it can simulate the halting function anyway, so long as the infinite bitstring that computes the halt function for Turing Machines is simulated with every other bitstring, we can emulate a computer that knows the halt function, and thus a human must be able with unlimited time and memory to compute more things than a Turing Machine.

Here’s the link below on the details of this process:

https://www.amirrorclear.net/academic/ideas/simulation/index.html

Thus, the set of computable functions must be larger or smaller than defined, contradicting the Church Turing Thesis as written.

It’s okay to say that your intuitive notion of computability is exactly equivalent to the Universal Turing Machine, but then say that instead of insisting that every notion of computability is the same thing.

Ok, I think I can clarify what people generally mean when they consider that the logic Church-Turing thesis is correct.

There is an intuitive notion of computation that is somewhat consensual. It doesn’t account for limits on time (beyond the fact that everything must be finitely long) and does not account for limits in space / memory. It is also somewhat equivalent to “what a rigorous idiot could be made to do, given immortality and infinite paper/pen”. Many people / most computer scientist share this intuitive idea and at some point people thought they should make more rigorous what it is exactly.

Whenever people tried to come up with formal processes that only allow “obviously acceptable” operations with regard to this intuitive notion, they produced frameworks that are either weaker or equivalent to the turing machine.

The Church-Turing thesis is that the turing machine formalism fully captures this intuitive notion of computation. It seems to be true.

With time, the word “computable” itself has come to be defined on the basis of this idea. So when we read or hear the word in a theoretical computer-science context, it now refers to the turing machine.

Beyond this, it is indeed the case that the word “computation” has also come to be applied to other formalisms that look like the initial notion to some degree. In general with an adjective in front to distinguish them from just “computation”. We can think for example of “quantum computing”, which does not match the initial intuition for “computation” (though it is not necessarily stronger). These other applications of the word “computation” are not what the Church-Turing thesis is about, so they are not counterexamples. Also, all of this is for the “logic” Church-Turing thesis, to which what can be built in real life is irrelevant.

PS: I take it for granted that computer scientists, including those knowledgeable on the notions at hand, usually consider the thesis correct. That’s my experience, but maybe you disagree.

You are confused because that is not the reality. As you can read on Wikipedia’s entry on difference engine, Scheutz built a difference engine derivative, sold it, and it was used to create logarithmic tables.

You must have read this while writing this article. It is prominent in the Wikipedia article in question and hard to miss. Why did you make this mistake? If it was a deliberate act of misleading for narrative convenience I am very disappointed. Yes, the reality is rarely narratively convenient, but you shouldn’t lie about it.

Regarding Turing machines: their definition is due to a philosophical argument, rather than mathematical (hence Church-Turing “thesis” rather than “theorem”). Essentially, Turing argues that any finite region of space can only have a finite set of distinguishable states; that any finite set can be enumerated and indexed; and therefore any physical system can be described by a (vast) transition table between these indexes. For input/output, a similar argument is made that only finitely-many possibilities can be distinguished between, that we can equivalently operate on their indexes instead, and that they can be written along one unbounded “tape”. Hence we can describe the behaviour of any physical system (including any procedure that a mathematician may carry out) by using a transition table; an argument that’s so general it avoids quibbling about minor details of physics, neurobiology, mathematics, etc. (which explains why proposed counterexamples require extreme physics like time travel, orbiting black holes, etc.). I highly recommend reading his On Computable Numbers paper.

This philosophically-justified generality was important to show that undecidability is fundamental, rather than just a deficiency of the chosen system (Rice’s Theorem also extends undecidability from the halting problem to all “non-trivial behaviours” of all universal systems). In fact Goedel hated Lambda Calculus and kept trying to invent more powerful alternatives (System T, General Recursive Functions, etc.): he only stopped when Turing proved that Lambda Calculus was equivalent to Turing machines! It wasn’t so much that “Alonzo’s lambda calculus was also cool, but I like this better”; it was that Turing machines show why the cannot be anything “better”, so we might as well use them, or Lambda Calculus, or something else equivalent. Notice that there aren’t many programs actually written as Turing machines (outside of e.g. Busy Beaver research), whilst Lambda Calculus is how basically every programmer writes abstractions (Python even uses the keyword ‘lambda’, and it’s the name of AWS’s function-as-a-service offering).

Another crucial insight of Turing’s, which wasn’t mentioned explicitly, is the existence of “universal” Turing machines: which can read an encoding of another Turing machine as input, and simulate that machine’s behaviour (AKA an “interpreter”, “emulator” or “virtual machine”). That’s crucial to the notion of “general purpose” that you mention informally many times; what many would call “Turing complete”. All universality results, whether it’s for Python, RNNs, Rule 110, Magic the Gathering, etc. ultimately rest on this: either by using the system to emulate a universal Turing machine directly, or to implement another system that has been proven universal that way.

Babbage and Lovelace didn’t have such a notion of universality either; the Analytical Engine was only shown to be Turing complete later. Universal machines also show the existence of “software”, and its fundamental equivalence to hardware. For example, the input to a Jaquard loom describes a fabric pattern, not a loom-which-would-make-that-pattern; hence a Jaquard loom can’t meaningfully “emulate” another Jaquard loom. Yet it makes perfect sense to “stack” universal machines, e.g. modern CPUs are universal machines, which emulate x86 processors, which run Java VMs, which run Minecraft servers, which run redstone processors, and so on.

Overall it was a very good and fun article. I liked the interspersing of math, logic and computers.

It seemed to be very USA focused, and missed a lot of the work done in other countries, especially the work of Konrad Zuse and things like the ACE computer in England.and the EDSAC (the first computer to store its program in its own memory) designed by my friend, Sir Maurice Wilkes..but to cover all that would probably go on to fill tomes if printed out.

You also missed out on the Colossus Computer, designed and built by Tommy Flowers at Bletchley Park as part of the famous code breaking operation of WW II. I mention it because it used vacuum tubes to give a great boost to code breaking operations over the electro-mechanical BOMBAs designed and built by Alan Turing.

You also did not mention the Mark I and Mark II computers built by Howard Aiken and IBM to do ballistics in WW II. It was on the Mark II computer that Lieutenant Grace Murray Hopper (later to rise to the rank of Rear Admiral) located the first “bug” in a computer, a moth that flew in through an open window and was trapped in the contacts of a relay.

Lt. Hopper went ton to develop FLOMATIC, the first “high level” language, which led to the design of COBOL. Lt. Hopper also worked on the ENIAC, so there also is a connection there. Dr. Hopper was another friend of mine, and an inspiration to me and many other computer people.