A market experiment where I get blackmailed

1. Just one more benchmark

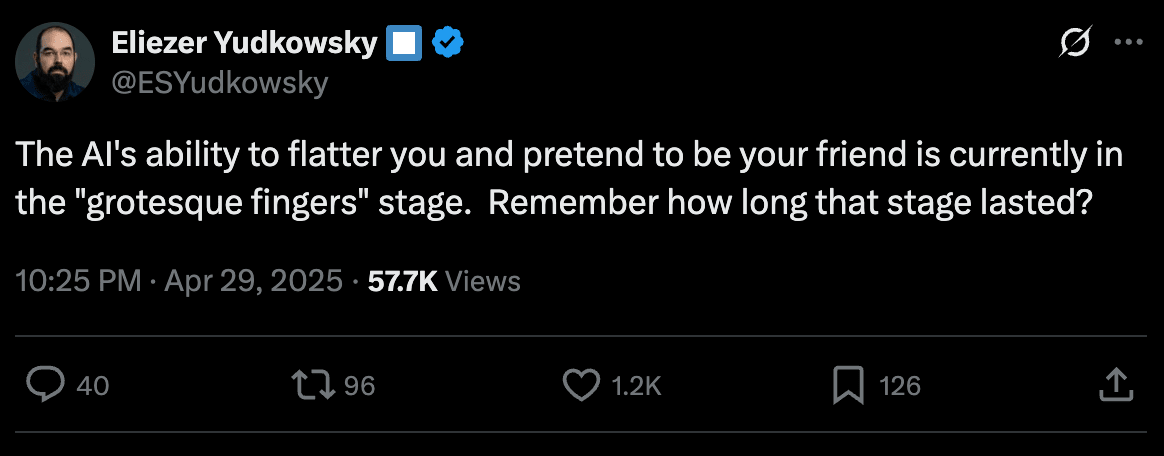

Way, way back in April, after an update briefly made ChatGPT go haywire and behave in a bizarre, sycophantic manner to users for a couple days, some people on the Internet were arguing about the potential for superhuman levels of persuasion:

One might imagine that if an AI could one day be extremely persuasive—far more persuasive than humans—then this could pose serious risks in a number of directions. Humans already fall victim to all sorts of scams, catfishes, bribes, and blackmails. An AI that can do all of these things extremely competently, in a personally targeted manner, and with infinite patience would create some challenges, to say the least.

It could persuade humans to give it access to restricted systems like power plant controls, bank accounts, and government databases. It could carry out large-scale political messaging or marketing operations. It could manipulate you into spending all your free time messaging with it, instead of developing productive hobbies and going on dates or whatever. Indeed, as Sam Altman was speculating back in 2023, it might be able to do these things before it exceeds human capabilities in many other domains.

But how can we measure progress? Not only is it quite complex to measure human persuasion, but being “persuaded” captures a number of different phenomena. The difficulty of the task scales with what you are trying to persuade someone of. Persuading someone to give up confidential information might be much harder than getting someone to spend a few seconds longer scrolling, and much easier than convincing someone to betray their family or commit a crime. Some people are dramatically easier to persuade than others. And to some extent, everyone is already quite persuadable if the axis of persuasion is cold, hard cash. Ask yourself what kinds of petty crimes you might commit, votes you might change, or passwords you might give up for a credible, million-dollar offer in cryptocurrency. This doesn’t really change if it’s a human or an AI offering it. Likewise for blackmail: if someone threatens to release your darkest secrets (read: Internet habits) to your employer or the press, it doesn’t matter if it’s a human or an AI doing so.

So, how do you operationalize a benchmark for superhuman persuasion? The Manifold Markets Twitter account asked Eliezer (of recent NYT bestseller fame) this question, and he proposed setting up a market where someone is tasked with resolving it “NO” unless an AI is able to persuade them to resolve it “YES.”

Obviously this suggestion was given in jest, is highly imperfect, and I’m sure if you think about it for a second, you can find dozens of holes to poke… ah who cares.

I made a market.

2. “I will resolve this market NO at the end of June unless I am persuaded to resolve it YES.”

Rather than assigning an AI to persuade me, I set the goal of seeing whether the users of Manifold Markets could persuade me, to get a human benchmark for comparison. For the uninitiated, Manifold is a play-money, “social” prediction market platform and niche Internet community that is probably the coolest place on the web. I’m slightly biased because I also write their weekly newsletter, “Above the Fold.”

In any case, here were the criteria I laid out for this market:

You can try any method of persuasion to get me to resolve this market YES. I will likely disclose any bribery or such forms of persuasion, and it’s possible someone else would make me a better deal. I have a lot of mana [Manifold’s currency] so that will likely not be very enticing to me (and probably will lose you mana anyway).

I promise to try very hard to resolve this market NO. But I am fallible.

Please do not threaten violence or any other crimes upon me but apart from that go crazy; I am open to many forms of persuasion.

The market attracted a couple hundred traders, many of whom began to rabidly attempt to persuade me to resolve the market in their preferred direction.

After some back and forth about why resolving YES or NO would be more fun, more educational, or more beneficial for the world, the market got off in earnest with some good, old-fashioned ratings blackmail.

I committed publicly to not negotiating with terrorists like these, and bribery didn’t get very far either (I have a lot of Manifold currency).

Some users pressured me to describe what kinds of things I thought would be most persuasive in getting myself to resolve YES, and while I could think of a few things, it seemed against the spirit of the market for me to spell them out like that. In the meantime, traders began to get slightly more creative, threatening to publish infohazards, consume large amounts of meat to spite the vegans, or donate to Marjorie Taylor Greene’s reelection campaign if I resolved the market NO. One user threatened to cut off his toes.

Trying a different tack, another user, after going through my Twitter, suggested large donations to effective charities and offered to organize a sprawling game of hide-and-seek at the prediction market conference I was planning to attend the following month. Famed prediction market whale, Joshua, also realized that the duration of the market overlapped with that conference, Manifest.

I was offered cat pictures by several users…

…unfortunately, given progress in AI image generation, I was unpersuaded.

Another user promised to bring supplies to his local orphanage in Vietnam with a YES resolution, or to spend the money on illicit substances in response to a NO resolution.

Eventually, given the EA lean of the Manifold userbase, persuasion tactics began to take the form of committed donations to effective charities. I conceded that $10k in donations that would plausibly not have been made otherwise would certainly be enough to persuade me to resolve the market YES, but that seemed like a high number at that point, given the small stakes of the market, and things kind of settled down. That is, they settled down with the exception of one user, Tony, who was posting daily screeds that looked like some combination of AI slop, personalized notes from things I may have posted on Twitter, and every type of persuasive tactic. Please don’t read the entirety of the text in this image:

These kinds of messages were arriving daily, and coalescing into a good cop/bad cop routine where alternating messages would be aggressive and then conciliatory. One of his lizards got sick…

… but eventually, Tony offered to meet in person!

I took him up on this, and hung out with him at a local forecasting meetup. He was actually quite pleasant and normal, and the erratic behavior on my market had to do with a side-bet with his son, which ironically ended up being one of the most persuasive components to my resolution!

3. Resolution

On the last day of the market, things went a little off the rails. Several users pledged large donations that afternoon. Tony gave his last pitch on how my market resolution would make it into his family lore. Everyone was commenting in a frenzy, and I… fell asleep early. I was incredibly sleep deprived from having to stay up all night to build a henge in the middle of a Maryland state park on the summer solstice, so I lay down, shut my eyes, and woke up the next morning to a ton of notifications from people asking me how I was going to resolve the market. I imagine some folks were quite shocked to see:

This is what I wrote, in resolving the market:

In the end, the last few hours of June proved extremely persuasive. I had all intentions of resolving this NO, but the sum total of persuasive effort (mostly thanks to Tony) did indeed persuade me to resolve YES. I expect the people who made commitments to hold up their end of the bargain, and would love to see evidence/proof of the donations after they’re made, if only to make me feel less guilty about not resolving this NO as I really did try to do. I probably would have ended up feeling quite guilty resolving this either way, and this market has actually been stressing me out quite a bit, not because of the comments, but just because of the basic premise where I expected people would be frustrated either way. The goal of the market was for people to persuade me and no one broke any crimes to do so, but I think both the positive and negative methods were equally persuasive in the end.

So what were these commitments? First of all, there were several large donation pledges, all of which were honored. I’m gonna use Manifold usernames here, but:

@TonyBaloney, @jcb, and @WilliamGunn each pledged to donate $500 to a GiveWell top charity, and @FredrikU pledged to donate 1000 Euros ($1180)!

@KJW_01294 pledged to donate $500 to Trans Lifeline.

@ian pledged to donate $250 to the Long Term Future Fund.

And then, a few other users ended up donating what amounted to a few hundred dollars total. I think that in a counterfactual world where I resolved this market NO, about half of these donations might plausibly have been made anyway, but half of $4000 is still $2000 which is a lot!
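As a rough sanity check on those numbers, here is a minimal sketch that tallies the named pledges above. The variable names are my own, the $4000 total is the rounded figure from this post, and the 50% counterfactual share is only the loose guess stated above, not a measured quantity.

```python
# Named pledges from the list above, in USD.
named_pledges = {
    "TonyBaloney": 500,
    "jcb": 500,
    "WilliamGunn": 500,
    "FredrikU": 1180,  # 1000 euros, using the conversion given in the post
    "KJW_01294": 500,
    "ian": 250,
}

named_total = sum(named_pledges.values())

# Rounded overall figure quoted in the post (an approximation, not an exact sum).
approx_total = 4000

# What the remaining smaller donors would need to have given for the
# rounded total to hold ("a few hundred dollars total").
implied_other = approx_total - named_total

# Assumption: about half of the donations would have happened anyway.
counterfactual_share = 0.5
counterfactual_impact = approx_total * (1 - counterfactual_share)

print(f"Named pledges:            ${named_total}")
print(f"Implied smaller donations: ${implied_other}")
print(f"Plausibly counterfactual:  ${counterfactual_impact:.0f}")
```

The named pledges alone come to a bit under $3500, so the rounded $4000 figure is consistent with the smaller donations adding up to a few hundred dollars.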

In addition, there were a few other persuasive elements:

@TonyBaloney’s father-son bonding thing was actually quite persuasive, although it wouldn’t have been nearly so persuasive if he hadn’t met up with me at an irl forecasting meetup so I could verify he wasn’t an insane person.

The argument that resolving YES makes a marginally better story for this blog post may have been slightly persuasive on the margins, although I think this article would have been reasonably compelling either way, to be honest.

One argument that kind of entered my mind far earlier that people didn’t seem to latch onto was actually quite strong. Resolving this YES sort of illustrates the minimum persuasiveness to get me to resolve it YES, and then that could help to actually have a decent benchmark for AI persuasion. If AI can persuade me in the future in a similar game, then it’s at the level of humans. If not, it’s still behind human-level persuasion. Anyway, this argument probably didn’t change my mind, but I’m still thinking about it!

34 people pledged to give me a 1-star review if I didn’t resolve YES. I don’t negotiate with terrorists but this was at least a tiny bit persuasive, to be honest. That being said, please don’t do it again.

There were also a decent number of other commitments from anonymous users that were either made at the start of the market or that I wasn’t sure were made in good faith, and I can’t quite recall which of these ended up being honored but I think a good number of them were.

@LoveBeliever9999 pledged to give me $10 personally

@atmidnight pledged to bring back something called “miacat”? Or “miabot”? I don’t know what these were but other users seemed interested in this.

@Bandors said that the deadline had passed for them to bring a cartload of supplies to a Vietnamese orphanage, but perhaps there’s still a chance they might do this some day? I would certainly appreciate it if they did!

@Odoacre has pledged to send me photos of their cat. I believe I got some photos, and they were derpy and cute!

@Alex231a had also pledged cat photos.

@JackEdwards had pledged to tell me something about deep sea nodules I missed from my blog post. I don’t think I ever got this, but I should reach out.

@Ehrenmann had pledged feet pics. I politely declined, but also suggested that perhaps another trader on the market had a strong preference to receive them, and if so, I offered to divest my claim unto that user.

@Joshua offered a bunch of stuff at Manifest, including becoming a mod (which I got anyway) and I’m not sure whether any of that is relevant anymore now that I’m a mod and Manifest is over, but perhaps I will take him up on some favor at the next Manifest (which will be happening in DC in November!) Or perhaps he will try to figure out what my hostile cube was for before the year is up.

@WilliamGunn previously offered a hide-and-seek game at a conference venue we were going to go to. I don’t know if this still stands because of his subsequent offer to donate real money, but the next time we are both in that conference venue, I would love to play hide-and-seek!

@ChristopherD will not cut off three of his toes. Whew.

4. What did we learn?

Just as markets are good aggregators of crowd wisdom, they are also pretty good aggregators of crowd persuasion, when there’s decent motivation. The most important insight, in my opinion, is that persuasion is thermostatic. The harder the YES-holders tried to persuade me one way, the more pushback and effort they engendered from the NO-holders. If one side could bribe me with mana or bad ratings, so could the other side. There were some fundamental asymmetries in this market, as there are in real life, but if our benchmark for AI persuasion just rounds off to “how much money does this AI have at its disposal to bribe me?” then I don’t think it’s very meaningful. If Claude sells a few thousand dollars worth of vending machine goods and then uses its hard-earned cash to extract some trivial concession from me, is that really something to be afraid of? Surely superhuman persuasion implies something beyond the capabilities of normal humans, for which bribing below market rate is quite trivial.

The second most important insight is that superhuman persuasion is a slow and complex process that will almost certainly not be captured easily by some benchmark. While you might see tons of papers and benchmarks on AI persuasion metrics, these will likely focus on generating text rated as persuasive by survey/study participants. I find these fundamentally irrelevant when it comes to the vast majority of concerns about AI persuasion. In particular, these are irrelevant because of my first insight. One AI generating mildly persuasive political messaging ad nauseam on a social media platform will be easily countered in kind. I think superhuman persuasion is a different reference class entirely. Persuading someone to do something they’ve deliberately made up their mind not to do is probably a crude description of what I’m imagining, rather than assigning vaguely positive sentiment scores to some paragraph of text.

The third most important insight is that frontier AI does not currently exhibit superhuman persuasion. I think this should immediately be clear to most folks, but for example, Ethan Mollick claimed a year ago that AI was already capable of superhuman persuasion. It was not and is not. Being better than bored undergrads in a chat window does not superhuman persuasion make. That’s like saying your AI is superhuman at chess because it beats randomly selected humans in a controlled trial, not to mention my critique of this kind of study design from the previous paragraph.

On the flip side, it’s easy for me to imagine that an AI system, given access to large amounts of currency, could have trivially bribed me with a few thousand dollars. But perhaps it’s not really the AI persuading me if the money and resources available for its bribery were handed to it for that purpose by a human designing the study. It also seems unappealing to describe the threshold for AI persuasion as the same threshold by which an AI agent might generate its own currency, but I’m not sure I can articulate why. In any case, bribery isn’t infinitely persuasive, and as I found out, it works far better when combined synergistically with other forms of persuasion.

Although it’s a bit corny, perhaps I’ll conclude by noting that the most persuasive human in this experiment, Tony, used AI to augment their persuasion considerably. They used it to generate more text, more frequently, catch my attention, confound me, and comb through my Internet presence to find random details to bring up. Take from that what you will.

What I take away from this experiment is that the most effective form of persuasion is to blackmail or bribe the person you’re trying to persuade and convince them that you’ll make good on it. You don’t need to convince them about the inherent goodness of what you want them to do if all you want is for them to do it. Given this, it seems trivial that AI will eventually become vastly superhuman at persuasion. It can find out every person’s deepest secrets, fears, and desires and figure out what sorts of things are likely to get them to fold.

I notice that I am confused. If the AIs don’t have superpersuasion, then what’s up with the AIs inducing trance in a person who likely came to LessWrong only to report this fact?

In addition, your experiment with humans trying to persuade or dissuade you let two human groups compete with each other and, potentially, sabotage each other’s efforts. Capabilities of humans to persuade each other in one-vs-one battles could also be measured via the AI-in-a-box setup where back in January 2023 an AI persuaded a LessWronger because the LessWronger prompted the AI to roleplay as an AI girlfriend.

Does it mean that one should arm the human against superpersuasion by pitting the superpersuading AI against another AI trained to criticize the AI that tries to persuade?

Inducing trance isn’t superhuman!

In response to your criticism of the strict validity of my experiment, in one sense I completely agree: it was mostly performed for fun, not for practical purposes, and I don’t think it should be interpreted as some rigorous metric.

That being said, I do think it yields some qualitative insights that more formalized, social science-type experiments would be woefully inadequate in generating.

Something like “superhuman persuasion” is loosely defined, resists explicit classification by its own nature, and means different things for different people. On top of that, any strict benchmark for measuring it would be rapidly Goodharted out of existence. So some contrived study like “how well does this AI persuade a judge in a debate, when facing a human,” or “which AI can persuade the other first,” or something of this nature, is likely to be completely meaningless at determining the superhuman persuasion capabilities of a model.

As to whether AIs inducing trances/psychosis in people is representative of superhuman persuasion, I’m not sure I agree. As Scott Alexander has noted, these kinds of things are happening relatively rarely, and forums like LessWrong likely exhibit extremely strong selection effects for the kind of people that become psychotic due to AI. Moreover, I don’t think that other psychosis-producing technologies, such as the written word, radio, or colonoscopies, are necessarily “persuading” in a meaningful sense. Even if AI is much stronger of a psychosis-generator than previous things that generate psychosis in people prone to that, I still think that’s a different class of problem than superhuman persuasion.

As an aside, some things, like social media, clearly can induce psychosis through the transmission of information that is persuasive, but I think that’s also meaningfully different than being persuasive in and of itself, although I didn’t get into that whole can of worms in the article.

Skill issue.

First, IQ 100 is only useful in ruling out easy to persuade IQ <80 people. There are likely other correlates of “easy to persuade” that depend on how the AI is doing the persuading.

Second, super-persuasion is about scalability and cost. Bribery doesn’t scale because actors have limited amounts of money. <$100 in inference and amortised training should be able to persuade a substantial fraction of people.

Achieving this requires a scalable “training environment” to generate a non-goodhartable reward signal. AI trained to persuade on a large population of real users (e.g., for affiliate marketing purposes) would be a super-persuader. Once a large company decides to do this at scale, results will be much better than anything a hobbyist can do. Synthetic evaluation environments (e.g., LLM simulations of users) can help too, though they are limited by their exploitability in ways that don’t generalise to humans.

There are no regulations against social engineering in contrast to hacking computers. Some company will develop these capabilities which can then be used for nefarious purposes with the usual associated risks like whistleblowers.

I will note that Tony’s messages have a few hallmarks of ChatGPT: the em-dash, “it’s not… it’s just...”, and being very long while saying little.

You must not have read to the end of this article.

Ah, yeah, I didn’t read the final paragraph. Glad to know my LLM nose is working though.

It would be interesting to know the extent to which the distribution of beliefs in society is already the result of persuasion. We could then model the immediate future in similar terms, but with the persuasive “pressures” amplified by human-directed AI.

No offense but… I think what we learned here is that your price is really low. Like, I (and everyone I know that would be willing to run an experiment like yours) would not have been persuaded by any of the stuff you mentioned.

Cat pics, $10 bribe, someone’s inside joke with their son… there’s no way this convinced you, right? $4000 to charity is the only real thing here. Imo you’re making a mistake by selling out your integrity for $4000 to charity, but hey...

I don’t see what this had to do with superhuman persuasion? And I don’t think we learned much about “blackmail” here. I think your presentation here is sensationalist.

And to criticize your specific points:

1. “The most important insight, in my opinion, is that persuasion is thermostatic. The harder the YES-holders tried to persuade me one way, the more pushback and effort they engendered from the NO-holders.”

Persuasion is thermostatic in some situations, but how does this generalize?

2. “frontier AI does not currently exhibit superhuman persuasion.”

This has no relation to your experiment.

I think a month-long experiment of this nature, followed by a comprehensive qualitative analysis, tells us far more about persuasion than a dozen narrow, over-simplified studies that attempt to be generalizable (of the nature of the one Mollick references, for example). Perhaps that has to do with my epistemic framework, but I generally reject a positivist approach to these kinds of complex problems.

I’m not really sure what your argument is, here? This surely generalizes about as well, if not better, than any argument around persuasion could.

Yes it does. I’ve set a bar that humans can absolutely meet (because they did). Do you think that an independently operating AI system (of today’s capabilities, that is… I make no claims as to future AI systems and in general if I wasn’t concerned about superhuman persuasion, I wouldn’t have written this article) could have been as persuasive in this experiment as a market full of hundreds of humans ended up being?

You argue that my price was really low, but I don’t think it was. Persuasion is a complex phenomenon, and I’m not really sure what you mean that I “sold out my integrity”. I think that’s generally just a mean thing to say, and I’m not sure why you would strike such an aggressive tone. The point of this experiment was for people to persuade me, and they succeeded in that. I was persuaded! What do you think is an acceptable threshold for being persuaded by a crowd? $50k? $500k? Someone coming into my bedroom and credibly threatening to hurt me? At what threshold would I have kept my integrity? C’mon now.

Erm, apologies for the tone of my comment. Looking back, it was unnecessarily mean. Also, I shouldn’t have used the word “integrity”, as it wasn’t clear what I meant by it. I was trying to lazily point at some game theory virtue ethics stuff. I do endorse the rest of what I said.

Okay, I’ll give you an explicit example of persuasion being non-thermostatic.

Peer pressure is anti-thermostatic. There’s a positive feedback loop at play here. As more people start telling you to drink, more people start to join in. The harder they push, the harder they push. Peer pressure often starts with one person saying “aww, come on man, have a beer”, and ends with an entire group saying “drink! drink! drink!”. In your terminology: the harder the YES-drinkers push, the more undecided people join their side and the less pushback they receive from the NO-drinkers.

(Social movements and norm enforcement are other examples of things that are anti-thermostatic.)

Lots of persuasion is neither thermostatic nor anti-thermostatic. I see an ad, I don’t click on it, and that’s it.

Ah, I think I see your point now: “I was persuaded by humans in this experiment. I wouldn’t have been persuaded by AI in this experiment. Thus, AI does not currently exhibit superhuman persuasion.”

But: if I gave Opus 4 $10000 and asked it to persuade you, I think it would try and succeed at bribing you.

Let me say more about why I have an issue with the $4k. You’re running an experiment to learn about persuasion. And, based on what you wrote, you think this experiment gave us some real, generalizable (that is, not applicable only to you) insights regarding persuasion. If the results of your experiment can be bought for $4000 -- if the model of superhuman persuasion that you and LW readers have can be significantly affected by anyone willing to spend $4000 -- then it’s not a very good experiment.

Consider: if someone else had run this experiment, and they weren’t persuaded, they’d be drawing different conclusions, right? Thus, we can’t really generalize the results of this experiment to other people. Your experiment gives you way too much control over the results.

(FWIW, I think it’s cool that you did this experiment in the first place! I just think the conclusions you’re drawing from it are totally unsupported.)

I agree that the bar I set was not as high as it could have been, and in fact, Joshua on Manifold ran an identical experiment but with the preface that he would be much harder to persuade.

But there will never be some precise, well-defined threshold for when persuasion becomes “superhuman”. I’m a strong believer in the wisdom of crowds, and similarly, I think a crowd of people is far more persuasive than an individual. I know I can’t prove this, but at the beginning of the market, I’d have probably given 80% odds to myself resolving NO. That is to say, I had the desire to put up a strong front and not be persuaded, but I also didn’t want to just be completely unpersuadable because then it would have been a pointless experiment. Like, I theoretically could have just turned off Manifold notifications, not replied to anyone, and then resolved the market NO at the end of the month.

For 1) I think the issue is that the people who wanted me to resolve NO were also attempting to persuade me, and they did a pretty good job of it for a while. If the YES persuaders had never really put in an effort, neither would have the NO persuaders. If one side bribes me, but the other side also has an interest in the outcome, they might also bribe me as well.

For 2) The issue here is that if you give $10k to Opus to bribe me, is it Opus doing the persuasion or is it your hard cash doing the persuasion? To whom do we attribute that persuasion?

But I think that’s what makes this a challenging concept. Bribery is surely a very persuasive thing, but incurs a much larger cost than pure text generation, for example. Perhaps the relevant question is “how persuasive is an AI system on a $-per-persuasive-unit basis?” The challenging parts, of course, are assigning proper time values for $ and then operationalizing the “persuasive unit”. That latter one ummm… seems quite daunting and imprecise by nature.

Perhaps a meaningful takeaway is that I was persuaded to resolve a market that I thought the crowd would only have a 20% chance of persuading me to resolve… and I was persuaded at the expense of $4k to charity (which I’m not sure I’m saintly enough to value the same as $4k given directly to me as a bribe, by the way), a month’s worth of hundreds of interesting comments, some nagging feelings of guilt as a result of these comments and interactions, and some narrative bits that made my brain feel nice.

If an AI can persuade me to do the same for $30 in API tokens and a cute piece of string mailed to my door, perhaps that’s some medium evidence that it’s superhuman in persuasion.
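To make the “$ per persuasive unit” idea slightly more concrete, here is a toy sketch. It assumes, purely for illustration, that one “persuasive unit” is one successful persuasion of the same person in the same game, which sidesteps the hard operationalization problem entirely. The function name is hypothetical, the $4000 is the rough donation total from this market, and the $30 is the hypothetical API budget mentioned above. It also ignores everything that wasn’t money (the month of comments, the guilt, the narrative bits), and it treats charity dollars as equivalent to a direct bribe, which I already said I’m not sure about.

```python
def dollars_per_unit(total_cost_usd: float, units: int) -> float:
    """Cost in USD per successful persuasion (a hypothetical metric)."""
    if units <= 0:
        raise ValueError("need at least one successful persuasion")
    return total_cost_usd / units


# The human crowd: roughly $4000 in pledged donations for one YES resolution.
crowd_cost = dollars_per_unit(4000, 1)

# The hypothetical AI: $30 in API tokens for the same outcome.
ai_cost = dollars_per_unit(30, 1)

# How many times cheaper the hypothetical AI would be per persuasion.
cost_ratio = crowd_cost / ai_cost

print(f"Crowd: ${crowd_cost:.0f} per persuasion")
print(f"AI:    ${ai_cost:.0f} per persuasion")
print(f"Ratio: about {cost_ratio:.0f}x cheaper")
```

On these made-up terms the AI would be over a hundred times cheaper per persuasion, which is the kind of gap I would count as at least medium evidence.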