Extended Picture Theory or Models inside Models inside Models

This post offers a model of meaning (and hence truth) based on extending Wittgenstein’s Picture Theory. I believe that this model is valuable enough to be worth presenting on its own. I’ve left justification for another post, but all I’ll say for now is that I believe this model to be useful even if it isn’t ultimately true. I’ll hint at the applications to AI safety, but these won’t be fully developed either.

Finally, post only covers propositions about the world, not about logical or mathematics.

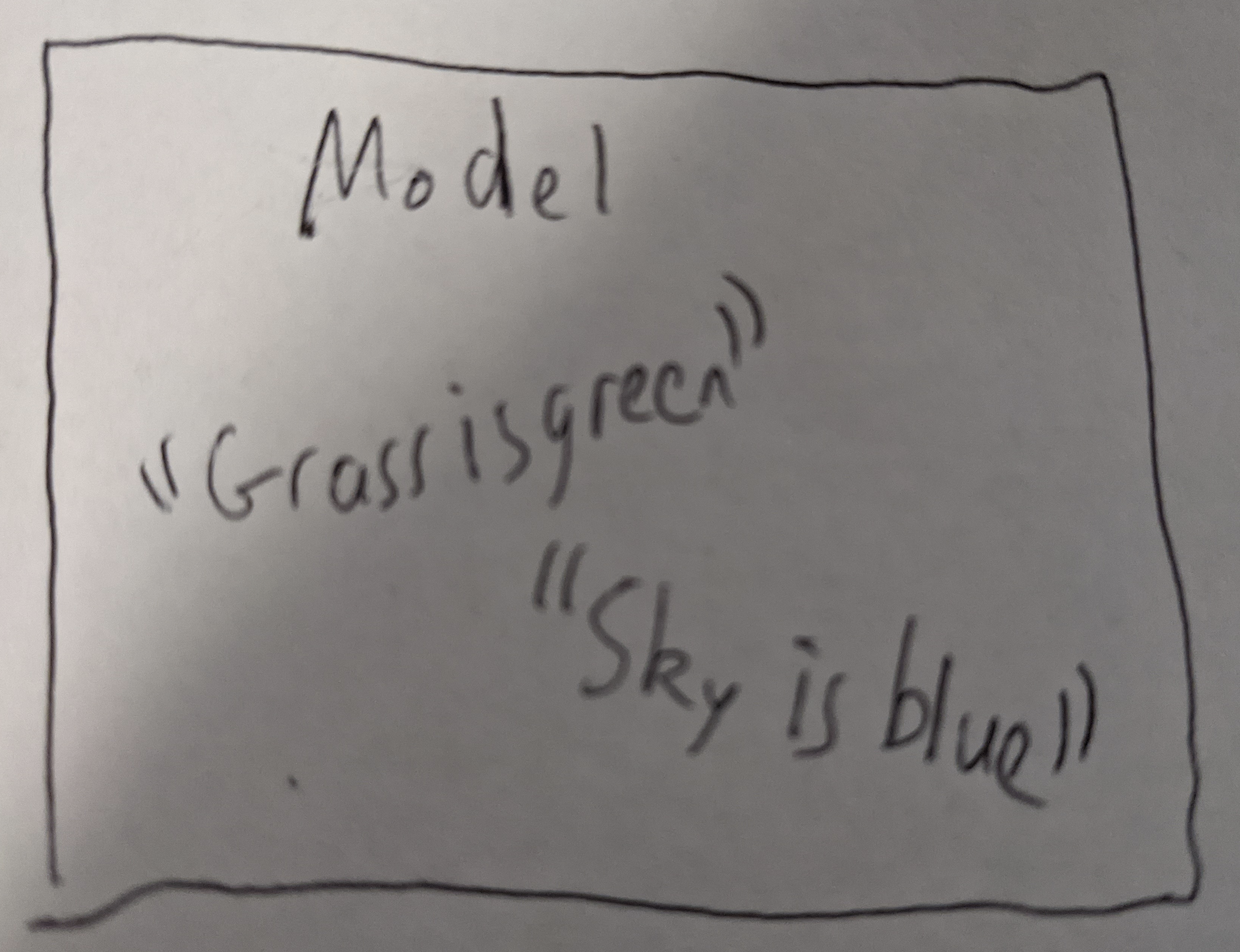

Suppose we have a model of the world:

What does this mean? What does it mean for it to be true? Is this even a question that can be answered? Well, when I introspect I seem to believe that this model claims to correspond to reality and that it is true if it in fact does correspond to reality.

So, for example, that the grass really is green and that the sky really is blue.

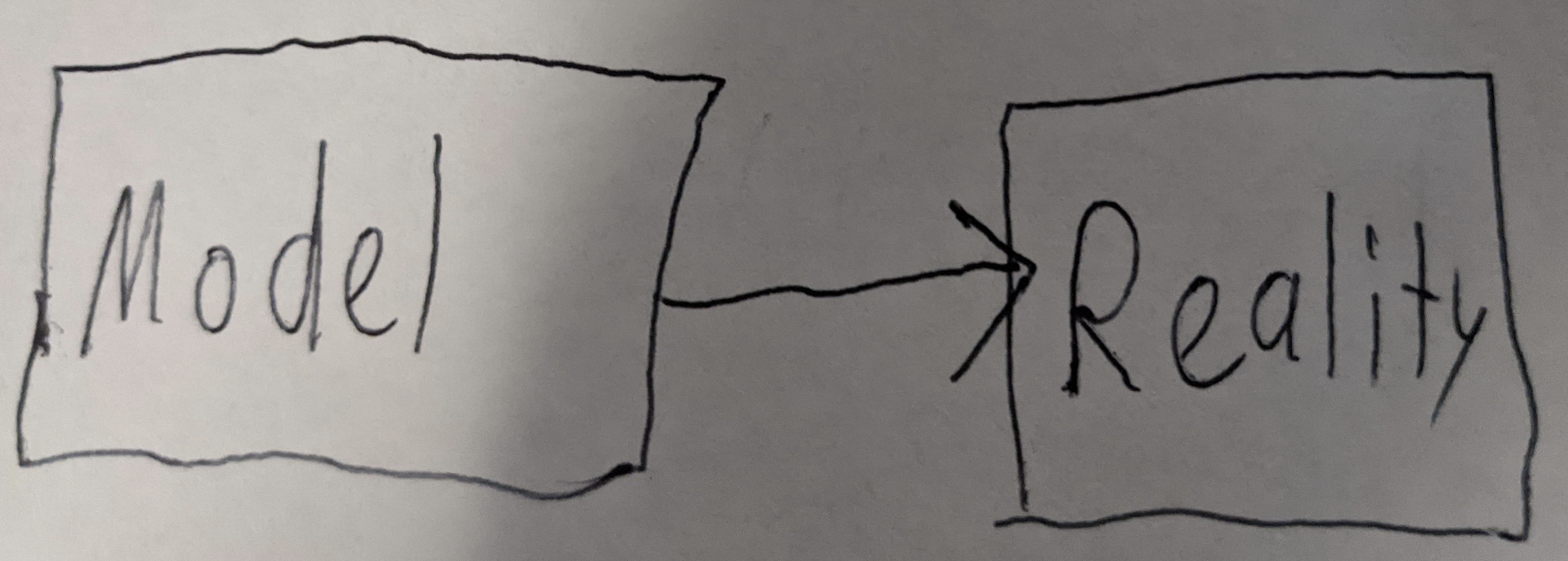

However, this begs the question, what does “correspond” mean here? In other words, we’ve just discovered that I have a meta-model that my base model corresponds to reality and we’re asking what it means:

A good question to ask is: What would it mean for this model to be wrong? Let’s suppose we begin with a base model of a particular shape being a dodecahedron which we produced via guessing and we then examine reality which produces a model of the shape actually being a decagon. So according to our meta-model, our base model doesn’t match reality.

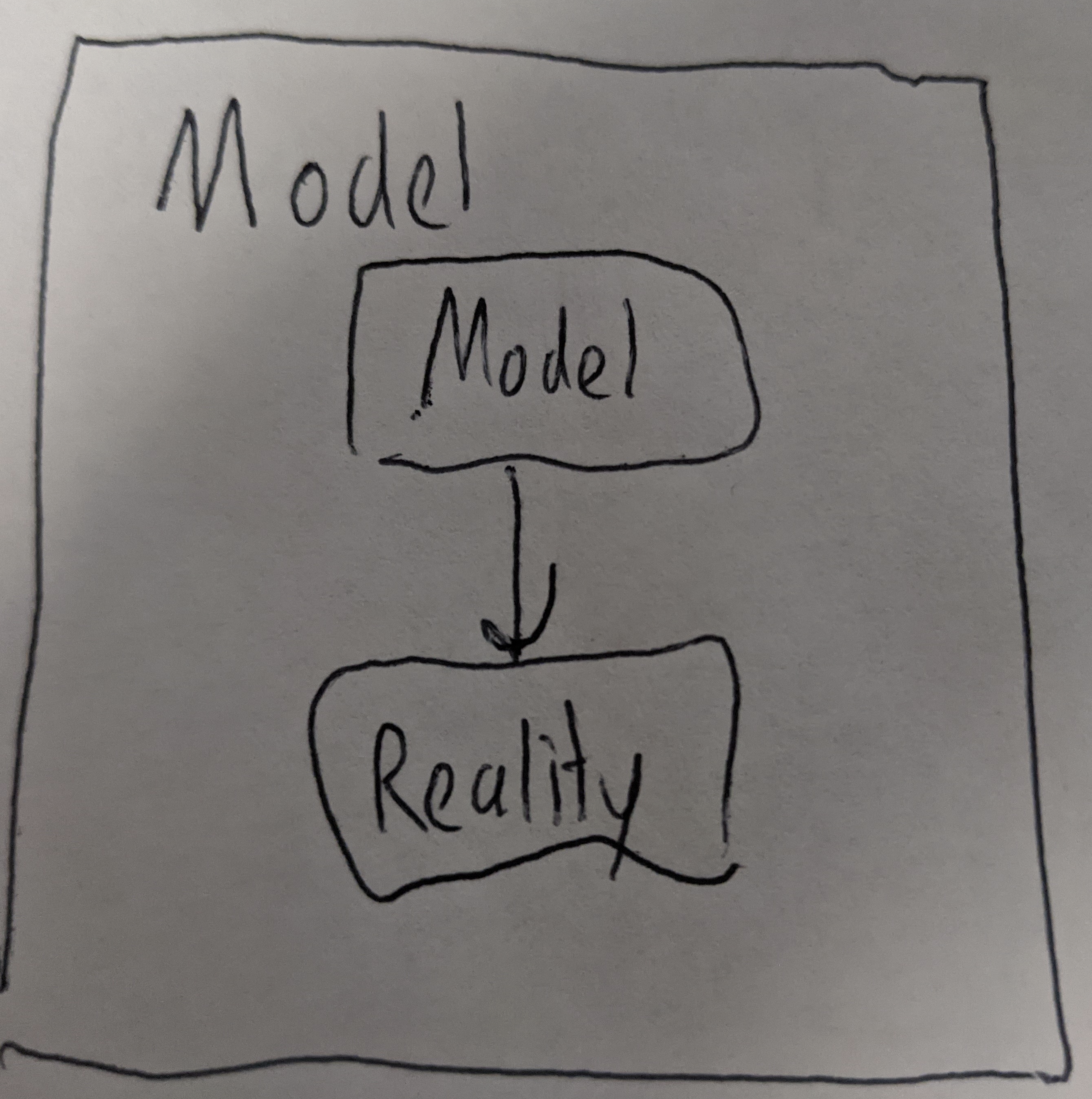

However, suppose the shape really is a dodecahedron, but we miscounted. Then our meta-model of the relation would be wrong as it doesn’t correspond to the actual relation. Or in visual terms, the correspondence that failed to hold was:

Conversely, our meta-model would be correct if this relation had held.

Naturally, we could continue climbing up levels by asking what it means for our meta-model to correspond to reality and then for our meta-meta model to correspond to reality and so on without end.

Perhaps this isn’t a very satisfying answer as this chain never terminates, but perhaps this is the only kind of answer we can give.

An Expression of the Lesson

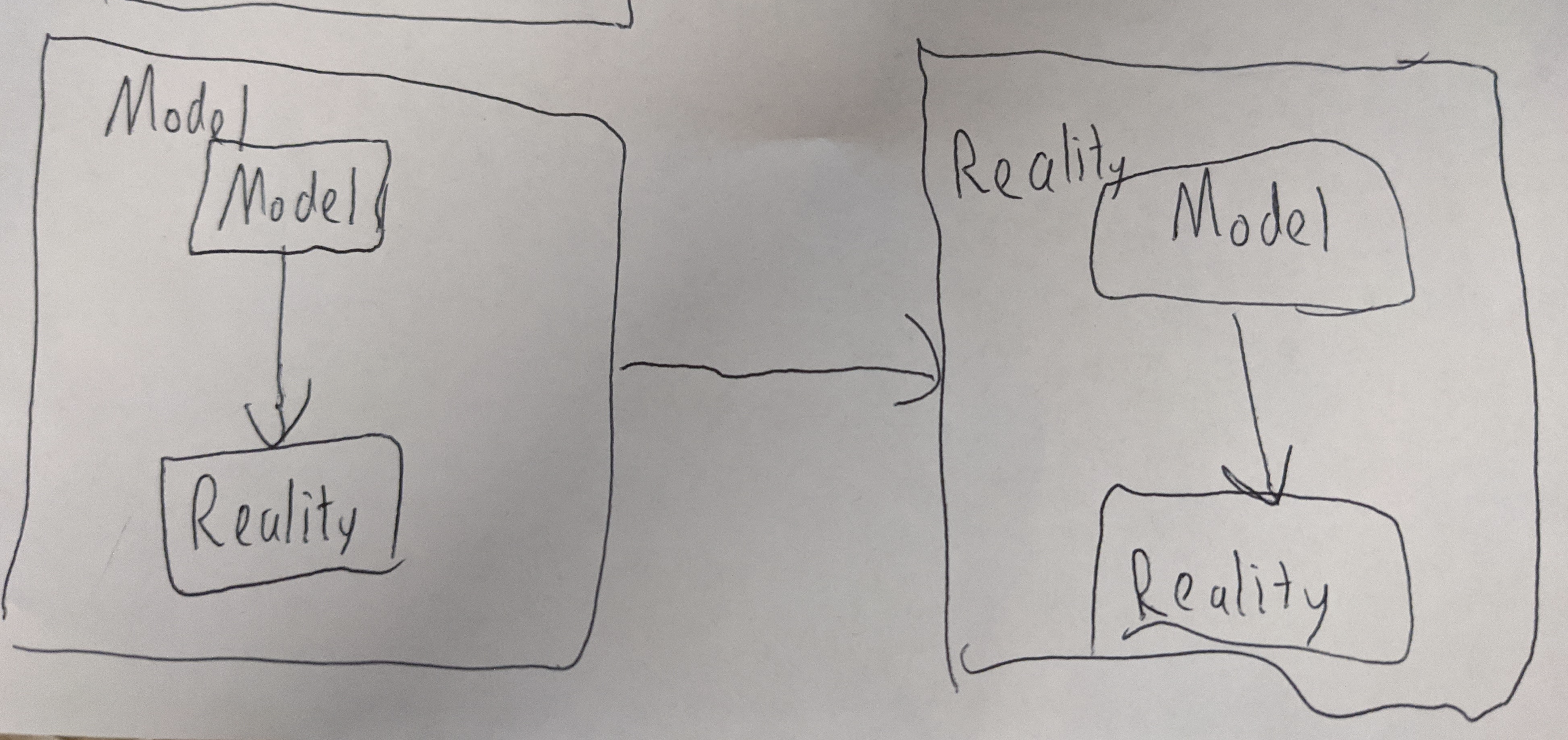

We start out with a model.

We want to know what it means for the model to correspond to reality.

Of course, we are trapped within our models. There is no way of escaping them.

We want to tunnel through into reality and discover what our model really means.

But this is impossible.

All we can do is to form a new model of our model, reality and their relation.

All we can do is climb higher.

Become more meta.

And try to touch the sky.

On AI Safety

An AI with an objective should try to achieve that objective.

Not convince itself it’s achieved that objective.

But it isn’t clear what this means at all.

After all, the AI is trapped within its model.

There’s no way out.

It can’t say anything directly about the world.

Only about its models.

Let’s start again.

An AI with an objective should try to achieve that objective.

Not convince itself it’s achieved that objective.

In other words, it needs a model of how its model relates to the world.

A meta-model that it uses to maintain integrity so that the model can’t be distorted.

But if it can distort the meta-model, the model will be corrupted too.

So it needs a meta-meta model and then a meta-meta-meta model.

And a yet more meta model too.

Some lessons have value, even if you don’t buy the whole story.

Wittgenstein built a ladder.

Now I have one too.

This work derives from lines of thought developed at the EA Hotel. It may have been counterfactually produced anyway, but the time there helped.

Yet more prententious poetry

To understand the theory of decisions,

We must enquire into the nature of a decision,

A thing that does not truly exist.

Everything is determined.

The path of the future is fixed.

So when you decide,

There is nothing to decide.

You simply discover, what was determined in advance.

A decision only exists,

Within a model.

If there are two options,

Then there are two possible worlds.

Two possible versions of you.

A decision does not exist,

Unless a model has been built.

But a model can not be built.

Without defining a why.

We go and build models.

To tell us what to do.

To aid in decisions.

Which don’t exist at all.

A model it must,

To reality match.

Our reality cannot be spoken.

The cycle of models can’t be broken.

Upwards we must go.

Meta.

A decision does need,

A factual-counter,

But a counterfactual does need,

A decision indeed.

This is not a cycle.

A decision follows,

Given the ’factuals.

The ’factuals follow,

From a method to construct,

This decision’s ours to make.

But first we need,

The ’factuals for the choice,

Of how to construct,

The ’factuals for the start.

A never ending cycle,

Spiralling upwards,

Here we are again.

Somewhat similar and somewhat different point can be made about rule followings which I thinis part of the later Wittgenstein. Suppose there is a town map in chinese htat you don’t know how to read. Then somebody gives you instructions on how to read it in german. Then if you don’t know german you might need another instruction book. If one would need rules to follow or operate a rules system then an infinite regression could be looming. Thus does giving a “rule reduction” help at all?

Yeah, I guess Wittgenstein’s position on that is that we learn the first rule set directly from training, rather than from other rules.