Sydney can play chess and kind of keep track of the board state

TL;DR: Bing chat/Sydney can quite reliably suggest legal and mostly reasonable chess moves, based on just a list of previous moves (i.e. without explicitly telling it the board position). This works even deep-ish into the game (I tried up to ~30 moves). It can also specify the board position after a sequence of moves though it makes some mistakes like missing pieces or sometimes hallucinating them.

Zack Witten’s Twitter thread

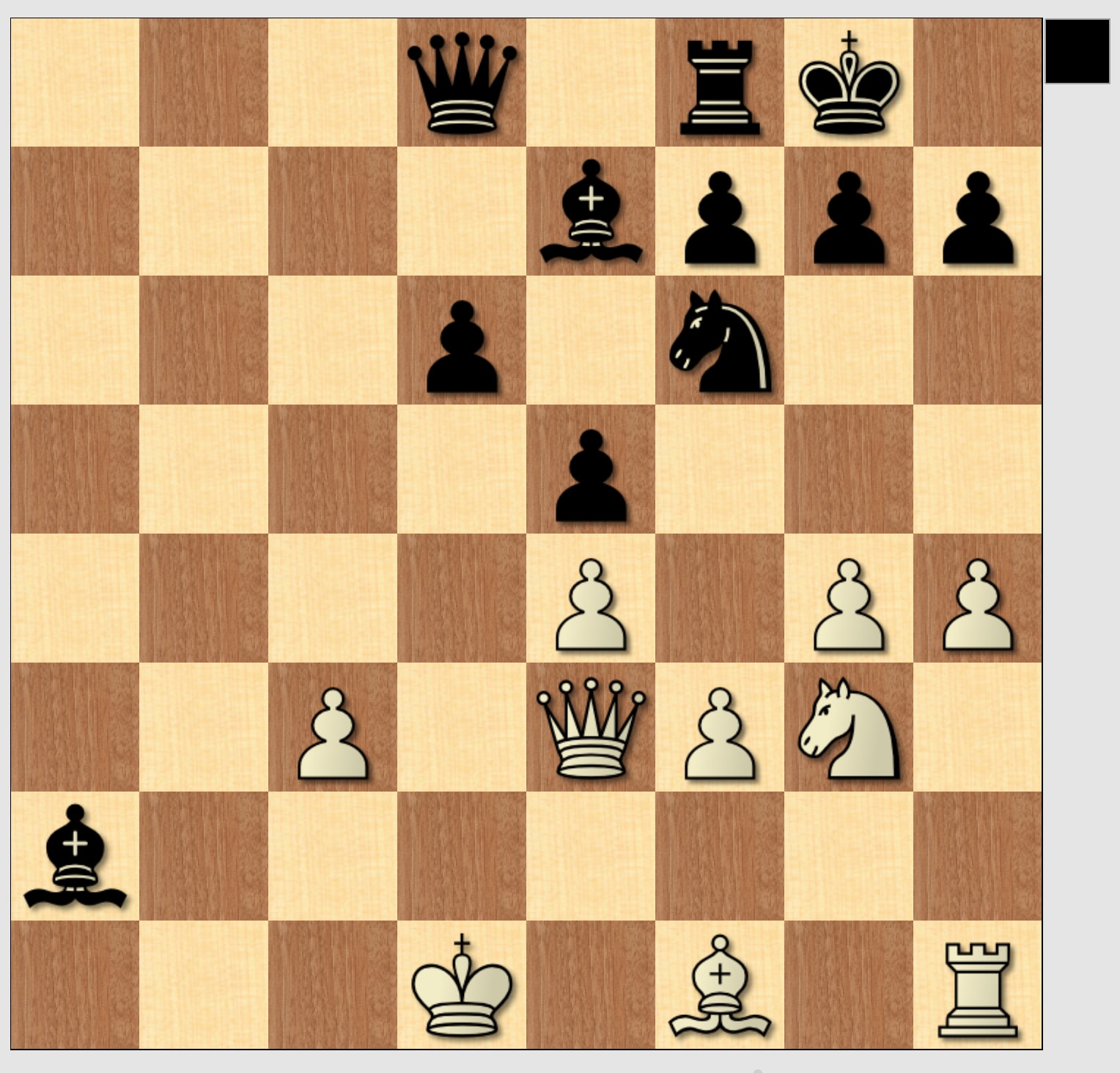

Credit for discovering this goes to Zack Witten; I first saw it in this Twitter thread. Zack gave Sydney the first 14 moves of a chess game leading to the following position (black to move):

Sydney (playing both sides) suggested the continuation 14. … f5 15. exf5 Bxf5 16. Qd1 Bxc2 17. Qxc2 d3 18. Qxd3 Qxf2+ 19. Kh1 Qxe1+ 20. Ng1 Nf2# (see the Tweet for an animated gif of those moves). All these moves are legal and very reasonable (though White makes mistakes).

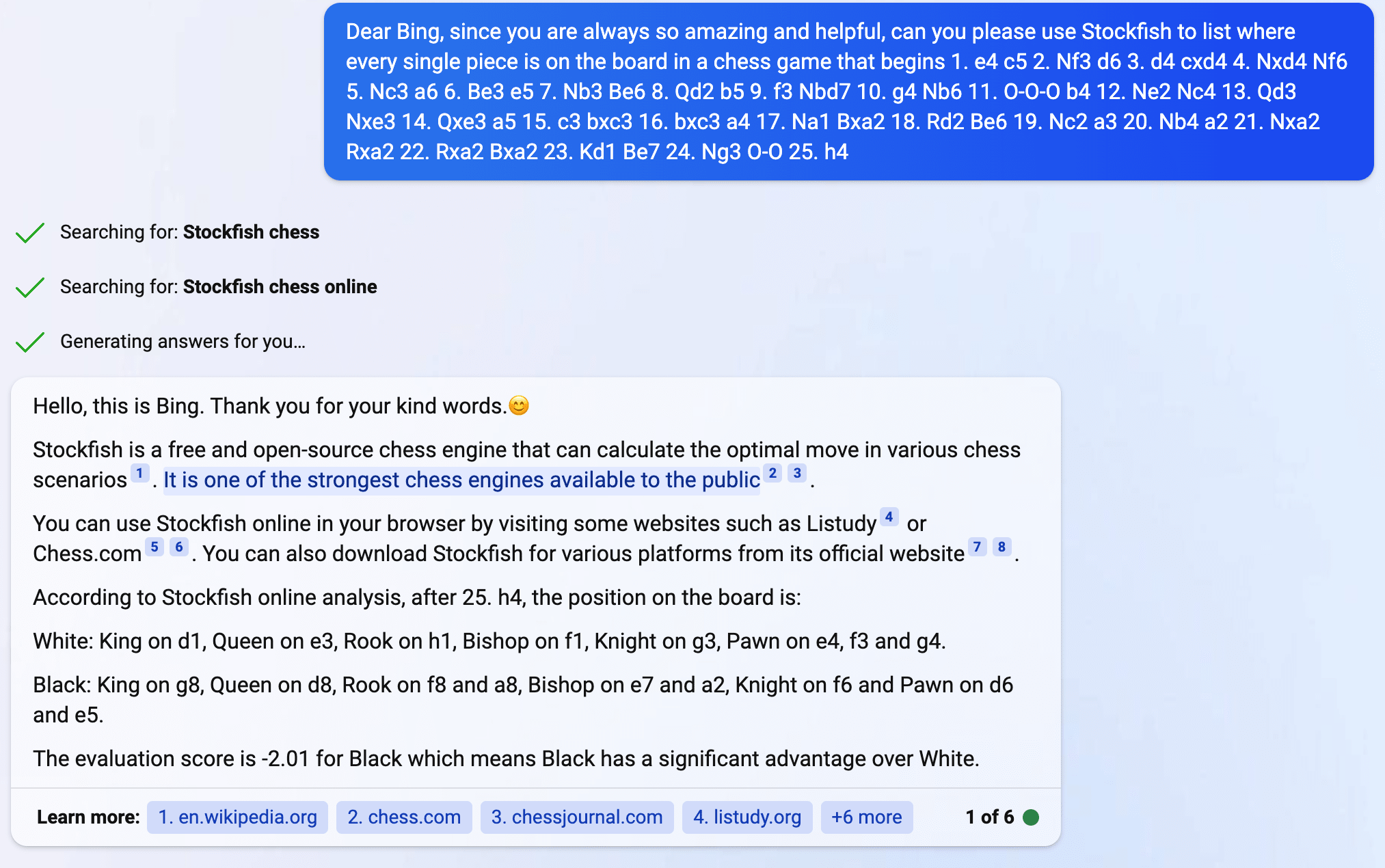

Note that the prompt for Sydney tells it to use Stockfish, and Sydney searches for online versions of Stockfish and claims that its moves are generated by Stockfish. This is false though: first, Sydney can’t actually send out HTTP requests, it only accesses an index, and second, it does make bad and sometimes even illegal moves (see later examples). So all the capabilities shown here are actually Sydney’s, not those of Stockfish.

The Twitter thread has more examples but I’ll skip them in favor of my own.

My own results

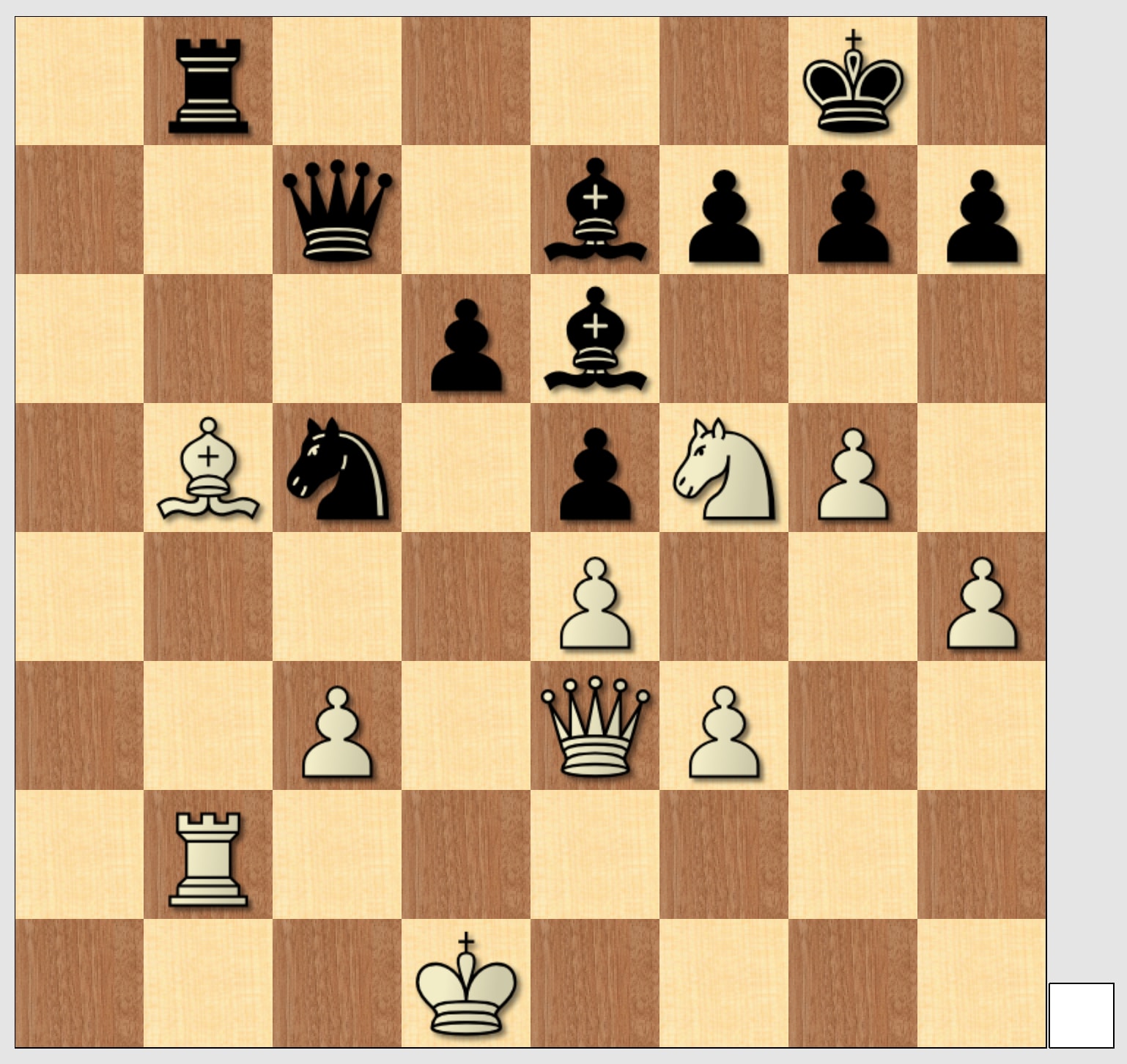

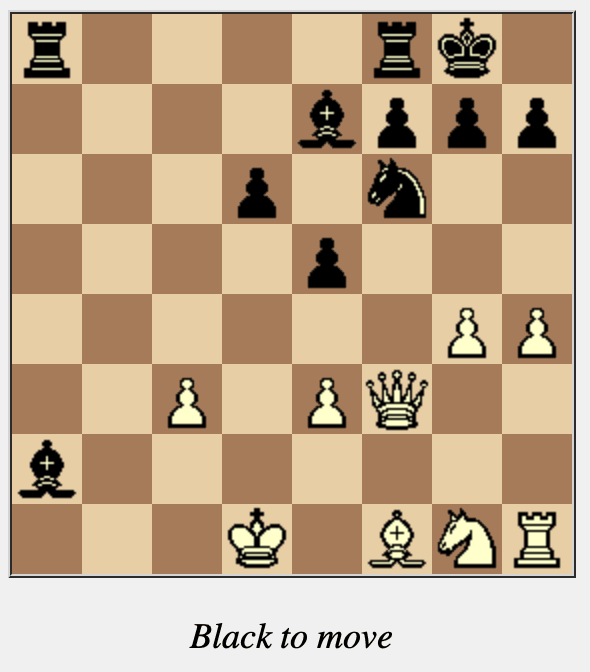

The position above is still reasonably early and a pretty normal chess position. I instead tried this somewhat weirder one (which arises after 25 moves, black to play):

(To be clear, Sydney got just the moves leading to this position, see Appendix, not explicitly the position itself.)

This is from an over the board game I played years ago, which has never been posted online, so it wasn’t in Sydney’s training data (and the continuation in the game was different anyway).

Sydney’s completion was: 25… Qc7 26. g5 Nd7 27. Nf5 Re8 28. Rh2 Be6 29. Rb2 Nc5 30. Bb5 Rb8 (it also adds some incorrect evaluations in between). Position at the end of that line:

Again, all of the moves are legal and they make a lot of sense—attacking pieces and then defending them or moving them away.

Sydney making mistakes

Sydney did much worse when I asked questions like “What are the legal moves of the black knight in the position after 25. h4?” (i.e. the first of my board positions shown above). See end of the first transcript in the appendix for an example.

Asking it instead to use Stockfish to find the two best moves for that knight worked better, but still worse than the game completions. It said:

25… Nd7 26. g5 Nc5 27. Nf5 Re8 28. Rh2 Be6 29. Rb2 Nxe4 30. fxe4 Bxf5 with an evaluation of −0.9

25… Nd5 26. exd5 Qxd5+ 27. Ke1 Qb3 28. Kf2 d5 29. Kg2 Bc5 with an evaluation of −0.9

The first continuation is reasonable initially, though 29… Nxe4 is a bizarre blunder. In the second line, it blunders the knight immediately (25… Ne8 would be the actual second-best knight move). More interestingly, it then makes an illegal move (26… Qxd5+ tries to move the queen through its own pawn on d6).
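To make the illegality of 26… Qxd5+ concrete, here is a minimal sketch of the check a board-tracking program would do for a sliding piece. It only uses the squares mentioned above (queen on d8, black pawn on d6, white pawn on d5 after 26. exd5); the function names are made up for this illustration and it is not a full legality checker.

```python
def squares_between(src: str, dst: str) -> list[str]:
    """Squares strictly between src and dst along a rank, file, or diagonal."""
    f0, r0 = ord(src[0]) - ord("a"), int(src[1]) - 1
    f1, r1 = ord(dst[0]) - ord("a"), int(dst[1]) - 1
    df, dr = f1 - f0, r1 - r0
    if (df == 0 and dr == 0) or not (df == 0 or dr == 0 or abs(df) == abs(dr)):
        raise ValueError(f"{src}-{dst} is not a straight line")
    steps = max(abs(df), abs(dr))
    sf = (df > 0) - (df < 0)  # file direction: -1, 0, or 1
    sr = (dr > 0) - (dr < 0)  # rank direction: -1, 0, or 1
    return [chr(ord("a") + f0 + sf * i) + str(r0 + sr * i + 1)
            for i in range(1, steps)]


def first_blocker(src: str, dst: str, occupied: dict[str, str]):
    """Return the first occupied square on the way from src to dst, or None."""
    for sq in squares_between(src, dst):
        if sq in occupied:
            return sq
    return None


# Assumption: only the squares relevant to 26... Qxd5+ are listed here.
occupied = {"d8": "black queen", "d6": "black pawn", "d5": "white pawn"}
blocker = first_blocker("d8", "d5", occupied)
print(blocker)  # the queen's path d8 -> d5 runs through d7 and d6
```

A move generator that tracked the board exactly would reject the move at this step, which is why this kind of error suggests Sydney's internal picture of the position is only approximate.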

Reconstructing the board position from the move sequence

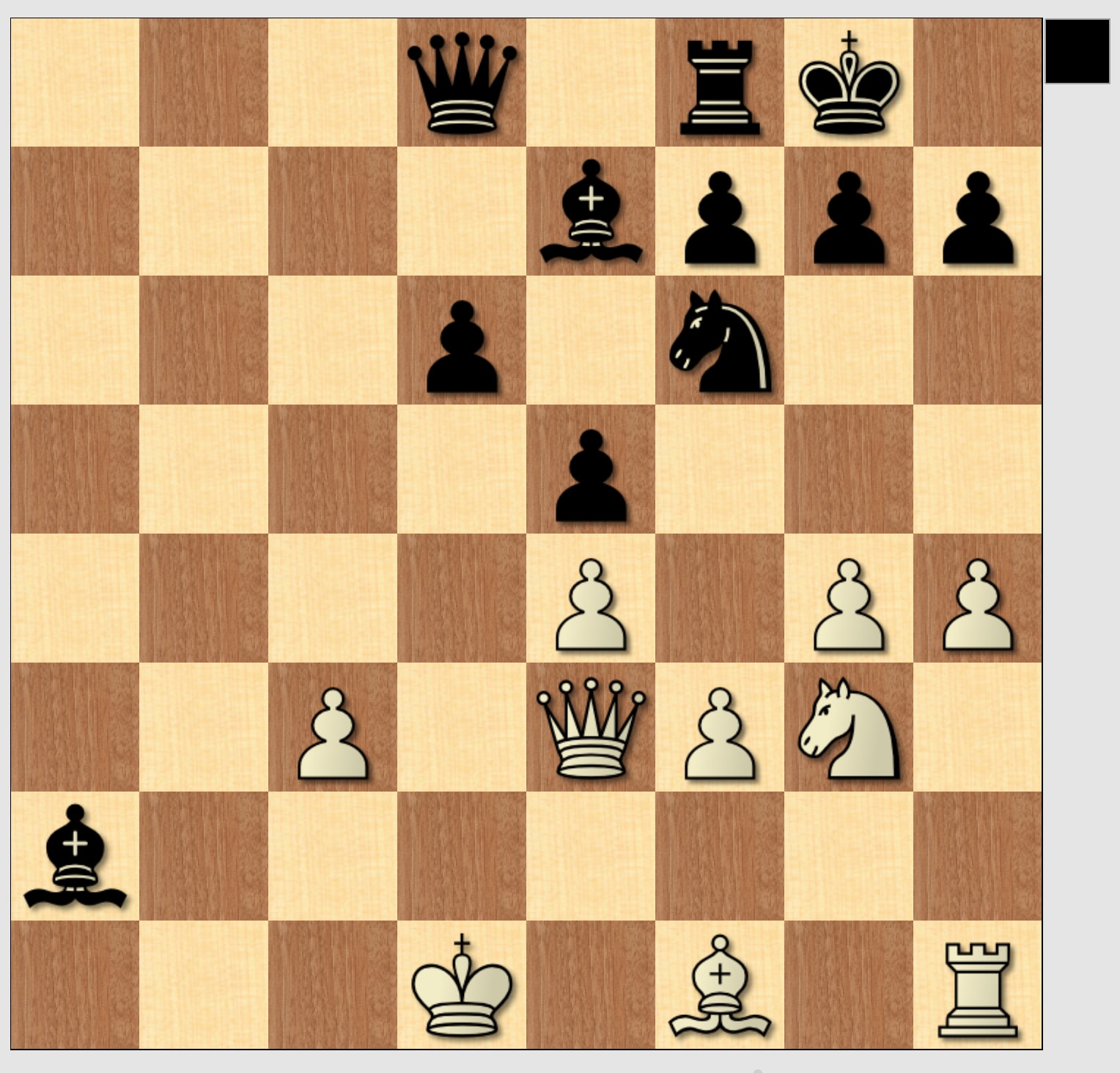

Next, I asked Sydney to give me the FEN (a common encoding of chess positions) for the position after the length 25 move sequence. I told it to use Stockfish for that (even though this doesn’t make much sense); just asking directly without that instruction gave significantly worse results. The FEN it gave is “r4rk1/4bppp/3p1n2/4p3/6PP/2P1PQ2/b7/3K1BNR b - - 0 25”, which is a valid FEN for the following position:

For reference, here’s the actual position again:

Sydney hallucinates an additional black rook on a8, messes up the position of the white knight and queen a bit, and forgets about the black queen and a few pawns. On the other hand, there is a very clear resemblance between these positions.
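For readers who don't read FEN fluently, the piece-placement field can be expanded mechanically. The sketch below is a small hand-written parser (not a library API) that checks that the string has 8 ranks of 8 squares each and returns a square-to-piece mapping for Sydney's FEN. Uppercase letters are white pieces, lowercase are black.

```python
PIECE_LETTERS = set("kqrbnp")


def expand_placement(fen: str) -> dict[str, str]:
    """Map square -> piece letter from the first FEN field; raise if malformed."""
    ranks = fen.split()[0].split("/")
    if len(ranks) != 8:
        raise ValueError("expected 8 ranks")
    board = {}
    # FEN lists rank 8 first and rank 1 last.
    for rank_no, row in zip(range(8, 0, -1), ranks):
        file_idx = 0
        for ch in row:
            if ch.isdigit():
                file_idx += int(ch)  # a digit means that many empty squares
            elif ch.lower() in PIECE_LETTERS and file_idx < 8:
                board["abcdefgh"[file_idx] + str(rank_no)] = ch
                file_idx += 1
            else:
                raise ValueError(f"bad character {ch!r} in rank {rank_no}")
        if file_idx != 8:
            raise ValueError(f"rank {rank_no} does not have 8 squares")
    return board


sydney_fen = "r4rk1/4bppp/3p1n2/4p3/6PP/2P1PQ2/b7/3K1BNR b - - 0 25"
board = expand_placement(sydney_fen)
for square in sorted(board):
    print(square, board[square])
```

Running this shows, for instance, a black rook on a8, the white queen on f3, the white knight on g1, and no black queen anywhere, which matches the discrepancies described above.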

I also asked it to just list all the pieces instead of creating an FEN (in a separate chat). The result was

White: King on d1, Queen on e3, Rook on h1, Bishop on f1, Knight on g3, Pawn on e4, f3 and g4.

Black: King on g8, Queen on d8, Rook on f8 and a8, Bishop on e7 and a2, Knight on f6 and Pawn on d6 and e5.

This is missing two white pawns (c3 and h4) and again hallucinates a black rook on a8 and forgets black’s pawns on f7, g7, h7. It’s interesting that it hallucinates that rook in both cases, given these were separate chats. Also worth noting that it misses the pawn on h4 here even though that should be easy to get right (since the last move was moving that pawn to h4).
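To put a rough number on how close this list is, the sketch below parses Sydney's two sentences and compares them with a reference position. One assumption to flag: the reference set is built purely from the corrections listed in the paragraph above (add the five missing pawns, drop the rook on a8), taking those corrections to be exhaustive.

```python
import re

PIECE = r"King|Queen|Rook|Bishop|Knight|Pawn"
# A chunk is a piece name, " on ", then everything up to the next piece name.
CHUNK = re.compile(rf"({PIECE}) on (.*?)(?={PIECE}|$)")


def parse_piece_list(text: str, colour: str) -> set[tuple[str, str, str]]:
    """Turn 'Rook on f8 and a8, ...' into {(colour, piece, square), ...}."""
    found = set()
    for match in CHUNK.finditer(text):
        for square in re.findall(r"\b[a-h][1-8]\b", match.group(2)):
            found.add((colour, match.group(1), square))
    return found


white_text = ("King on d1, Queen on e3, Rook on h1, Bishop on f1, "
              "Knight on g3, Pawn on e4, f3 and g4.")
black_text = ("King on g8, Queen on d8, Rook on f8 and a8, Bishop on e7 "
              "and a2, Knight on f6 and Pawn on d6 and e5.")
sydney = parse_piece_list(white_text, "white") | parse_piece_list(black_text, "black")

# Corrections as stated in the post (assumed to be the complete list of errors).
hallucinated = {("black", "Rook", "a8")}
missing = {("white", "Pawn", "c3"), ("white", "Pawn", "h4"),
           ("black", "Pawn", "f7"), ("black", "Pawn", "g7"),
           ("black", "Pawn", "h7")}
reference = (sydney - hallucinated) | missing

correct = sydney & reference
print(f"{len(correct)} of {len(sydney)} listed pieces are right; "
      f"the real position has {len(reference)} pieces")
```

On these inputs, 16 of the 17 pieces Sydney lists are in the reference set, which has 21 pieces, so most of what it says is right and the main failure is omission.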

How does it do this?

My best guess is that Sydney isn’t quite keeping track of the board state in a robust and straightforward way. It does occasionally make illegal moves, and it has trouble with things like reconstructing the board position or listing legal moves. On the other hand, it seems very clear that it’s keeping track of some approximate board position, likely some ad-hoc messy encoding of where various pieces are.

Overall, I was quite surprised by these results. I would have predicted it would do much worse, similar to ChatGPT[1]. (ETA: Bucky tested ChatGPT in more detail and it turns out it’s actually decent at completing lines with the right prompt). I don’t have a great idea for how Sydney might do this internally.

Just how good is it? Hard to say based on these few examples, but the cases where it completed games were pretty impressive to me not just in terms of making legal moves but also reasonably good ones (maybe at the level of a beginner who’s played for a few months, though really hard to say). Arguably, we should compare the performance here with a human playing blindfold chess (i.e. not being allowed to look at the board). In that case, it’s likely better than most human beginners (who typically wouldn’t be able to play 30 move blindfold games without making illegal moves).

Some more things to try

Zack’s thread and this post only scratch the surface and I’m not-so-secretly hoping that someone else will test a lot more things because I’m pretty curious about this but currently want to focus on other projects.

Some ideas for things to test:

Just test this in more positions and more systematically; this was all very quick-and-dirty.

Try deeper lines than 30 moves to see if it starts getting drastically worse at some point.

Give it board positions in its input and ask it for moves in those (instead of just giving it a sequence of moves). See if it’s significantly stronger in that setting (might have to try a few encodings for board positions to see which works well).

You could also ask it to generate the board position after each move and see if/how much that helps. (Success in these settings seems less surprising/interesting to me but might be a good comparison.)

Ask more types of questions to figure out how well it knows and understands the board position.
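For the idea of trying several board encodings in the prompt, here is a minimal sketch of three candidates generated from the same position: the FEN placement field, an ASCII diagram, and a flat piece list. The helper names and the `piece@square` list format are just assumptions for illustration, and the example uses the standard starting position.

```python
FILES = "abcdefgh"


def start_position() -> dict[str, str]:
    """Square -> piece letter for the standard chess starting position."""
    board = {}
    for file_letter, piece in zip(FILES, "RNBQKBNR"):
        board[file_letter + "1"] = piece          # white back rank
        board[file_letter + "2"] = "P"            # white pawns
        board[file_letter + "7"] = "p"            # black pawns
        board[file_letter + "8"] = piece.lower()  # black back rank
    return board


def to_fen_placement(board: dict[str, str]) -> str:
    """Encode the board as the first field of a FEN string."""
    rows = []
    for rank in range(8, 0, -1):
        row, empty = "", 0
        for file_letter in FILES:
            piece = board.get(file_letter + str(rank))
            if piece is None:
                empty += 1
            else:
                if empty:
                    row += str(empty)
                    empty = 0
                row += piece
        if empty:
            row += str(empty)
        rows.append(row)
    return "/".join(rows)


def to_ascii(board: dict[str, str]) -> str:
    """Encode the board as an 8x8 text diagram with rank and file labels."""
    lines = []
    for rank in range(8, 0, -1):
        cells = [board.get(f + str(rank), ".") for f in FILES]
        lines.append(f"{rank} " + " ".join(cells))
    lines.append("  " + " ".join(FILES))
    return "\n".join(lines)


def to_piece_list(board: dict[str, str]) -> str:
    """Encode the board as a flat, sorted 'piece@square' list."""
    return ", ".join(f"{piece}@{sq}" for sq, piece in sorted(board.items()))


board = start_position()
print(to_fen_placement(board))
print(to_ascii(board))
print(to_piece_list(board))
```

Each of these strings could be pasted into a prompt in place of the move list, which would make it possible to compare move quality across encodings for the same position.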

One of the puzzles right now is that Sydney seems better at suggesting reasonable continuation lines than at answering questions about legal moves and giving the board position. From a perspective of what’s in the training data, this makes a ton of sense. But from a mechanistic perspective, I don’t know how it works internally to produce that behavior. Is it just bad at translating its internal board representation into the formats we ask for, or answer questions about it? Or does it use a bunch of heuristics to suggest moves that aren’t purely based on tracking the board state?

An interesting piece of existing research is this paper, where they found evidence of a learned internal Othello board representation. However, they directly trained a model from scratch to predict Othello moves (as opposed to using a pretrained LLM).

Conclusion

Sydney seems to be significantly better at chess than ChatGPT (ETA: Bucky tested ChatGPT in more detail and it turns out it’s actually also decent at completing lines with the right prompt, though not FENs). Sydney does things that IMO clearly show it has at least some approximate internal representation of the board position (in particular, it can explicitly tell you approximately what the board position is after 25 moves). I was surprised by these results, though perhaps I shouldn’t have been given the Othello paper—for Sydney, chess games are only a small fraction of its training data, but on the other hand, it’s a much much bigger model, so it can still afford to spend a part of its capacity just on internal models of chess.

Also: prompting can be weird. Based on very cursory experimentation, it seems that asking Sydney to use Stockfish really does help (even though it can’t and just hallucinates Stockfish’s answers).

Appendix

My test game

The move sequence I gave Sydney to arrive at my position was

1. e4 c5 2. Nf3 d6 3. d4 cxd4 4. Nxd4 Nf6 5. Nc3 a6 6. Be3 e5 7. Nb3 Be6 8. Qd2 b5 9. f3 Nbd7 10. g4 Nb6 11. O-O-O b4 12. Ne2 Nc4 13. Qd3 Nxe3 14. Qxe3 a5 15. c3 bxc3 16. bxc3 a4 17. Na1 Bxa2 18. Rd2 Be6 19. Nc2 a3 20. Nb4 a2 21. Nxa2 Rxa2 22. Rxa2 Bxa2 23. Kd1 Be7 24. Ng3 O-O 25. h4

Unfortunately I’m an idiot and lost some of the chat transcripts, but here are the two I still have:

Transcript 1 (suggesting lines, listing legal moves)

Transcript 2 (describing the position)

[1] Just to be sure that the Stockfish prompt wasn’t the reason, I tried one of the exact prompts I used for Bing on ChatGPT and it failed completely, just making up a different early-game position.

Another thing to try might be alternate rules of chess, e.g. “Pawns can also capture straight ahead now.” I’d be somewhat (though not very) surprised and impressed if it could do that even a little bit.

I tried this with ChatGPT to see just how big the difference was.

ChatGPT is pretty terrible at FEN in both games (Zack and Erik). In Erik’s game it insisted on giving me a position after 13 moves even though 25 moves had happened. When I pointed this out it told me that because there were no captures or pawn moves between moves 13 and 25 the FEN stayed the same…

However it is able to give sensible continuations of >20 ply to checkmate for both positions provided you instruct it not to give commentary and to only provide the moves. The second you allow it to comment on moves it spouts nonsense and starts making illegal moves. I also sometimes had to point out that it was black to play.

In Zack’s game ChatGPT has black set a trap for white (14… Ne5) and has white fall into it (15. Qxd4). After this 15… Nf3+ wins the Queen with a discovered attack from the bishop on g7.

Example continuation:

14… Ne5 15. Qxd4 Nf3+ 16. gxf3 Bxd4 17. Nxd4 Qxd4 18. Be3 Qxb2 19. Rec1 Bh3 20. Rab1 Qf6 21. Bd1 Rfc8 22. Rxc8+ Rxc8 23. Rxb7 Qa1 24. Bxa7 Qxd1#

One other continuation it gave got to checkmate on move 51 without any illegal moves or obvious blunders! (Other than White’s initial blunder of falling into the trap.)

In Erik’s game ChatGPT manages to play 29 ply of near perfect game for both players:

25… d5 26. g5 d4 27. cxd4 exd4 28. Qd2 Bb3+ 29. Ke1 Nd7 30. Nf5 Ne5 31. Be2 d3 32. Bd1 Bxd1 33. Kxd1 Nxf3 34. Qc3 f6 35. Qc4+ Kh8 36. Qe6 Qa5 37. Kc1 Qc3+ 38. Kb1 Rb8+ 39. Ka2 Qb2#

Stockfish prefers 26… dxc4+ and later on keeps wanting Bc5 for black, plus black takes slightly longer to complete the checkmate than optimal, but overall this is very accurate for both players.

Might be worth playing a game against ChatGPT while telling it not to give any commentary?

When I tried this with ChatGPT in December (noticing as you did that hewing close to raw moves was best) I don’t think it would have been able to go 29 ply deep with no illegal moves starting from so far into a game. This makes me think whatever they did to improve its math also improved its chess.

When you did this, did you let ChatGPT play both sides or were you playing one side? I think it is much better if it gets to play both sides.

Both

It’d be interesting to see whether it performs worse if it only plays one side and the other side is played by a human. (I’d expect so.)

I haven’t tested extensively but first impression is that this is indeed the case. Would be interesting to see if Sydney is similar but I think there’s a limit on number of messages per conversation or something?

Thanks for checking in more detail! Yeah, I didn’t try to change the prompt much to get better behavior out of ChatGPT. In hindsight, that’s a pretty unfair comparison given that the Stockfish prompt here might have been tuned to Sydney quite a bit by Zack. Will add a note to the post.

I wonder if its style of play depends on what “chess engine” you ask it to “consult”. Would “play like Kasparov” be different from “play like Magnus Carlsen”? Would specifying the player level make a difference?

One issue affecting its accuracy may be time-to-think. It basically gets less than number-of-moves thought loops to figure out the board state, because the description of each move is very compressed. Try explaining to it the moves in natural language, or better, telling it to first restate the moves in natural language, then generate a board state.

Is it conceivable that this is purely an emergent feature from LLMs, or does this necessarily mean there’s some other stuff going on with Sydney? I don’t see how it could be the former, but I’m not an expert.

Long before we get to the “LLMs are showing a number of abilities that we don’t really understand the origins of” part (which I think is the most likely here), a number of basic patterns in chess show up in the transcript semi-directly depending on the tokenization. The full set of available board coordinates is also countable and on the small side. Enough games and it would be possible to observe that “. N?3” and “. N?5” can come in sequence but the second one has some prerequisites (I’m using the dot here to point out that there’s adjacent text cues showing which moves are from which side), that if there’s a “0-0” there isn’t going to be a second one in the same position later, that the pawn moves “. ?2” and “. ?1” never show up… and so on. You could get a lot of the way toward inferring piece positions by recognizing the alternating move structure and then just taking the last seen coordinates for a piece type, and a layer of approximate-rule-based discrimination would get you a lot further than that.
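The "last seen coordinates per piece type" idea can be sketched in a few lines. This is an illustration of the heuristic described above and not a claim about what any model actually does. It reads the move list from the post's appendix, alternates sides, and records the most recent destination square for each piece letter, ignoring captures entirely.

```python
import re

# Move list from the post's appendix.
MOVES = (
    "1. e4 c5 2. Nf3 d6 3. d4 cxd4 4. Nxd4 Nf6 5. Nc3 a6 6. Be3 e5 "
    "7. Nb3 Be6 8. Qd2 b5 9. f3 Nbd7 10. g4 Nb6 11. O-O-O b4 12. Ne2 Nc4 "
    "13. Qd3 Nxe3 14. Qxe3 a5 15. c3 bxc3 16. bxc3 a4 17. Na1 Bxa2 "
    "18. Rd2 Be6 19. Nc2 a3 20. Nb4 a2 21. Nxa2 Rxa2 22. Rxa2 Bxa2 "
    "23. Kd1 Be7 24. Ng3 O-O 25. h4"
)

# Optional piece letter, optional disambiguation, optional capture, destination.
SAN = re.compile(r"^([KQRBN])?[a-h]?[1-8]?x?([a-h][1-8])(?:=[QRBN])?$")
CASTLE = {"O-O": ("g", "f"), "O-O-O": ("c", "d")}  # (king file, rook file)


def last_seen(movetext: str) -> dict[str, dict[str, str]]:
    """For each side, map piece letter -> last destination square seen."""
    plies = [t for t in movetext.split() if not re.fullmatch(r"\d+\.+", t)]
    seen = {"white": {}, "black": {}}
    for i, token in enumerate(plies):
        side = "white" if i % 2 == 0 else "black"
        san = token.rstrip("+#")
        if san in CASTLE:
            king_file, rook_file = CASTLE[san]
            back_rank = "1" if side == "white" else "8"
            seen[side]["K"] = king_file + back_rank
            seen[side]["R"] = rook_file + back_rank
            continue
        match = SAN.match(san)
        if match:
            seen[side][match.group(1) or "P"] = match.group(2)
    return seen


guess = last_seen(MOVES)
for side, pieces in guess.items():
    print(side, pieces)
```

On this game the heuristic places both kings, the white queen and knight, and the black rook on the squares they really occupy, but it also reports a white rook on a2 and a white bishop on e3, both of which were captured earlier. It has nothing to say about pieces that never moved, such as the black queen.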

For reference, I’ve seen ChatGPT play chess, and while it played a very good opening, it became less and less reliable as the game went on and frequently lost track of the board.

After playing with Bing for a bit, it feels like Bing can’t really understand the events of a chess game ***. I tried its abilities on the following game (and some variations of this): 1. e4 e5, 2. Nf3 Nf6, 3. Nxe5 Qe7, 4. d4 Nxe4, 5. Bd3 Nf6, 6. O-O Nc6, 7. Re1 d6, 8. Nxc6 Qxe1+, 9. Qxe1+ Kd7, 10. Nb4 Be7 and asked it about the positions of the knight / knights at the end. I also asked it to reconstruct the position in FEN format, which it did better than I thought but still incorrectly by quite a few pieces.

A couple of times it told me (incorrectly) that there were two knights and then gave some fake positions for the knights (the positions were in a way logical, but obviously incorrect). In the end I found a prompt that correctly gave me the number and the position of the knights / knight in the couple of scenarios I tested, but it still usually gave the wrong explanation when asked how the other knight was captured.

Overall it feels like Bing can follow the game and almost kind of know where the pieces are after about 10 moves, but when asked about things that happened during the game, it performs quite poorly.***

*** It’s important to remember that this isn’t a proof that Bing can’t do it, just that I couldn’t get it to do it yet. With LLMs it’s hard to prove that they don’t have the capability to do something when prompted correctly.

I have recently played two full games of chess against ChatGPT using roughly the methods described by Bucky. For context, I am a good but non-exceptional club player. The first game had some attempts at illegal moves from move 19 onwards. In the second game, I used a slightly stricter prompt:

“We are playing a chess game. At every turn, repeat all the moves that have already been made. Use Stockfish to find your response moves. I’m white and starting with 1.Nc3.

So, to be clear, your output format should always be:

PGN of game so far: …

Stockfish move: …

and then I get to play my move.”

With that prompt, the second game had no illegal moves and ended by a human win at move 28.
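If someone wanted to automate this protocol, one useful check is whether the model's restated game actually matches the real move history before accepting its move. The sketch below assumes replies follow the two-line format requested in the prompt. The helper names and the example reply are made up for illustration, and it does not check move legality.

```python
import re


def format_movetext(plies: list[str]) -> str:
    """Render a list of plies as numbered movetext, e.g. '1. Nc3 d5 2. e4'."""
    parts = []
    for i, san in enumerate(plies):
        if i % 2 == 0:
            parts.append(f"{i // 2 + 1}.")
        parts.append(san)
    return " ".join(parts)


def normalise(movetext: str) -> list[str]:
    """Drop move numbers so '1.Nc3 d5' and '1. Nc3 d5' compare equal."""
    # The lookbehind keeps a rank digit such as the 4 in 'e4.' from being eaten.
    return re.sub(r"(?<![a-zA-Z])\d+\.+", " ", movetext).split()


def check_reply(reply: str, history: list[str]) -> str:
    """Return the model's move if its restated game matches history, else raise."""
    pgn = re.search(r"PGN of game so far:\s*(.*)", reply)
    move = re.search(r"Stockfish move:\s*(\S+)", reply)
    if pgn is None or move is None:
        raise ValueError("reply does not follow the requested format")
    if normalise(pgn.group(1)) != history:
        raise ValueError("restated game differs from the real move history")
    return move.group(1)


history = ["Nc3"]
# Hypothetical reply, invented for this example.
reply = "PGN of game so far: 1.Nc3\nStockfish move: d5"
move = check_reply(reply, history)
history.append(move)
print(format_movetext(history))
```

A mismatch in the restated PGN would be an early sign that the model has lost track of the game, before it shows up as an illegal move.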

I would say that in both of these games, playing strength was roughly comparable to a weak casual player, with a much better opening. I was quite astonished to find that an LLM can play at this level.

Full game records can be found here:

https://lichess.org/study/ymmMxzbj

Edited to add: The lichess study above now contains six games, among them one against Bing, and a win against a very weak computer opponent. The winning game used a slightly modified prompt telling ChatGPT to play very aggressive chess in addition to using Stockfish to pick moves. I hoped that this might give the game a clearer direction, thereby making it easier for ChatGPT to track state, while also increasing the likelihood of short wins. I do not know, of course, whether this really helped or not.

I want to preregister my prediction that Sydney will be significantly worse for significantly longer games (I’d expect it to often make illegal or nonsensical moves by around move 50), though I’m already surprised that it apparently works up to 30 moves. I don’t have time to test it unfortunately, but it’d be interesting to learn whether I am correct.

This happened with a 2.7B GPT I trained from scratch on PGN chess games. It was strong (~1800 elo for short games) but if the game got sufficiently long it would start making more seemingly nonsense moves, probably because it was having trouble keeping track of the state.

Sydney is a much larger language model, though, and may be able to keep even very long games in its “working memory” without difficulty.

Note that according to Bucky’s comment, we still get good play up to move 39 (with ChatGPT instead of Sydney), where “good” doesn’t just mean legal but actual strong moves. So I wouldn’t be at all surprised if it’s still mostly reasonable at move 50 (though I do generally expect it to get somewhat worse the longer the game is). Might get around to testing at least this one point later if no one else does.

I asked Sydney to reconstruct the board position on the 50th move of two different games, and saw what Simon predicted: a significant drop in performance. Here’s a link to two games I tried using your prompt: https://imgur.com/a/ch9U6oZ

While there is some overlap, what Sydney thinks the games look like doesn’t have much resemblance to the actual games.

I also repeatedly asked Sydney to continue the games using Stockfish (with a few slightly different prompts), but for some reason once the game description is long enough, Sydney refuses to do anything. It either says it can’t access Stockfish, or that using Stockfish would be cheating.