Gödel’s Legacy: A game without end

Cross-posted at Brick. A fun exploration, hopefully requiring only a medium level of technical familiarity.

“Not with a bang but a whimper”

It’s 1930 and Kurt Gödel has just delivered a talk at Königsberg that carpet bombed Hilbert’s Program.

...and no one noticed.

Godel’s announcement, delivered during the summarizing session on the third and last day of the conference, was so understated and casual—so thoroughly undramatic—that it hardly qualified as an announcement, and no one present, with one exception, paid it any mind at all.

(source)

Well, almost no one. John von Neumann came up to Gödel after the talk, presumably to double check if he was going crazy or if Gödel had in fact just proved the existence of unprovable mathematical truths.

To understand what Gödel’s Incompleteness Theorem says, it helps to see the meta-mathematical opinions of some of his peers. Wittgenstein opens his big famous book (not that I’ve actually read it) with:

One could put the whole sense of the book perhaps in these words: What can be said at all, can be said clearly; and whereof one cannot speak, thereof one must be silent.

This was a bold move in its own way. Contrast it with the following take, “Everything can be spoken of, you just need to get smarter” which is a deeply held ideal of Strawman STEM-Lord. Interestingly, both Wittgenstein and STEM-Lord agree on something, the exact something that Gödel smashed up in Königsberg. It’s a very technical claim, best communicated with the following GIF:

Witt and STEM-Lord disagree on what bleeds, but they are in fervent agreement that what bleeds can be killed.

Unraveling Self-reference

Take the classic liar’s paradox:

Oh shit, it’s true and false, or neither, paaaaaaaaaaaaaaaradox! The Wittgenstein-esque approach might be to claim the liar’s sentence doesn’t actually mean anything. There is no reality to it to speak of, so you can’t speak of it. You are just getting yourself tied into a linguistic knot, tricking yourself into thinking that just because you can write this sentence it must mean something. Natural language is not a formal system and this sentence need not have a coherent “logical” “truth value”. BOOM, checkmate hypothetical opponent!

Hidden in the explanation “Language isn’t meant to avoid paradox and self-reference” is the claim “but formal math does avoid them!” The first part is true, the second isn’t.

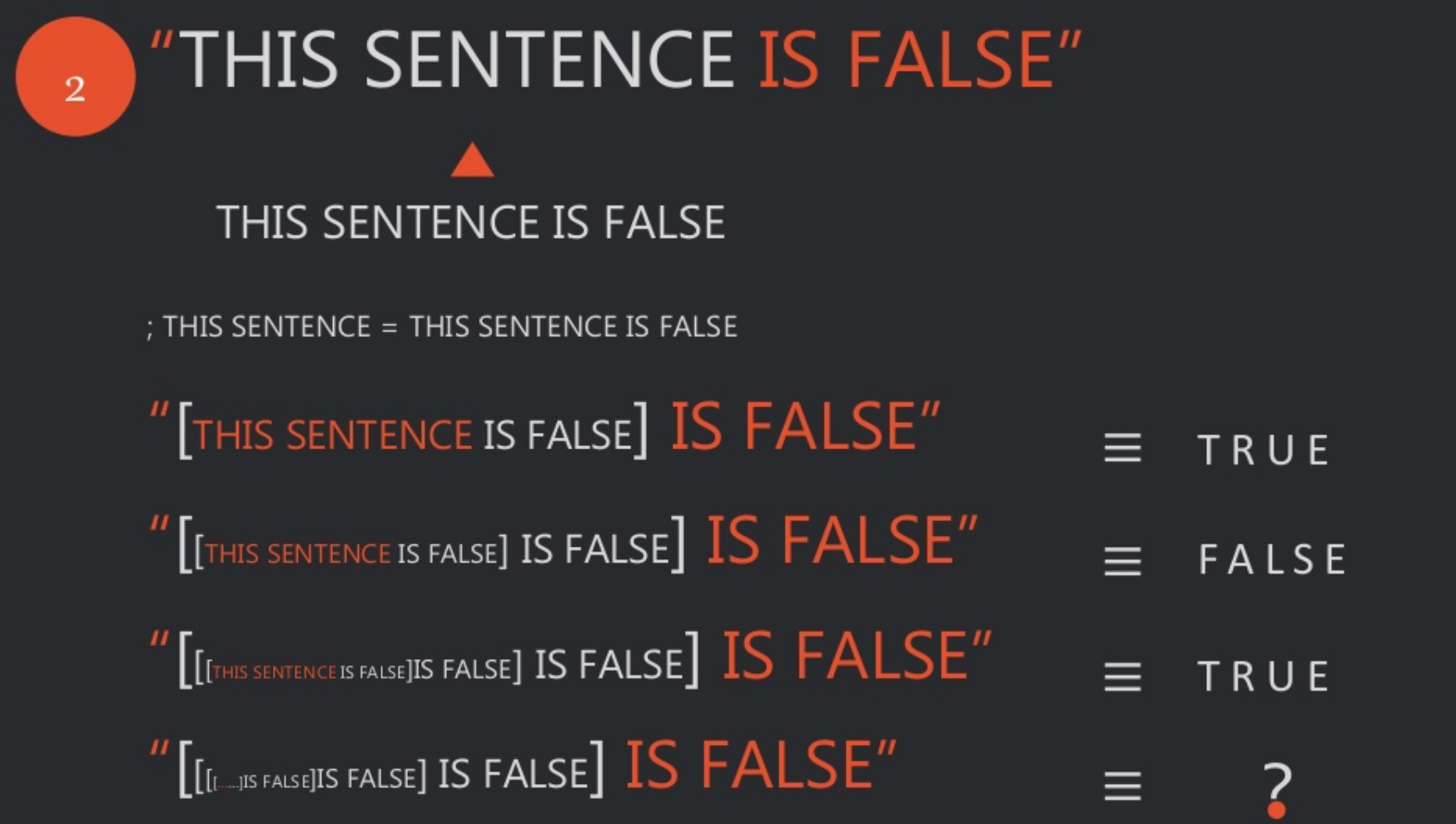

What Gödel did was to make a logical sentence in the syntax of Peano Arithmetic which had the meaning, “This statement is unprovable in Peano Arithmetic”. The two tricky components of this were finding a way to mathematically encode self-reference (“This statement”) and to talk about the system from within the system (“is unprovable in Peano Arithmetic”). Both are quite a challenge. If you mess around with the liar’s paradox, you’ll quickly get into infinite recursion when trying to unravel the self-reference.
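To make the "encode it mathematically" half of that trick less mysterious, here is a minimal sketch of a Gödel numbering in Python. The eight-symbol alphabet and the names `godel_number` and `decode` are made up for illustration and are not Gödel's actual scheme. The sketch only shows that a formula can be packed into a single integer (as exponents of successive primes) and unpacked again, which is what lets arithmetic talk about formulas. It does not build the self-referential sentence itself.

```python
# Hypothetical toy alphabet; each symbol gets a code from 1 upward.
SYMBOLS = ["0", "S", "+", "*", "=", "(", ")", "x"]
CODE = {sym: i + 1 for i, sym in enumerate(SYMBOLS)}


def primes():
    """Yield 2, 3, 5, ... by trial division (fine for short formulas)."""
    found = []
    n = 2
    while True:
        if all(n % p for p in found):
            found.append(n)
            yield n
        n += 1


def godel_number(formula: str) -> int:
    """Encode the i-th symbol as the exponent of the i-th prime."""
    n = 1
    for p, sym in zip(primes(), formula):
        n *= p ** CODE[sym]
    return n


def decode(n: int) -> str:
    """Recover the formula by reading off the prime exponents."""
    out = []
    for p in primes():
        if n == 1:
            break
        exp = 0
        while n % p == 0:
            n //= p
            exp += 1
        if exp == 0:
            raise ValueError("not the Gödel number of a formula")
        out.append(SYMBOLS[exp - 1])
    return "".join(out)
```

With this alphabet, `godel_number("0=0")` is 2¹ · 3⁵ · 5¹ = 2430, and `decode(2430)` gives back `"0=0"`.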

People have known forever that self-reference can often lead to paradox. This is part of why it’s easy to have the intuition that full natural language expressions need not have truth values. So if you make your mathematical systems more precise, and more limited than full natural language, then you can avoid self-reference, right?

Part of the sneakiness of Gödel’s theorem is that it shows how self-reference sneaks in whether you want it or not. Just like a computer system is not safe merely because you didn’t explicitly create a “HaCk ThIs SoFtWaRe” button, a formal mathematical system is not free of self-reference merely because it’s not explicitly part of the design. Self-reference isn’t just a part of Peano Arithmetic, it’s an inevitable feature of any system that has a basic amount of complexity.

If you’re having trouble wrapping your head around how something as simple as arithmetic could embed self-reference and the concept of provability, it’s helpful to look at programming languages. Brainfuck can express anything that Python can express, because both of them reach a “sufficient level of complexity” called being Turing Complete. Gwern maintains a fun list of surprisingly Turing Complete systems (Pokemon Yellow and CSS are my favorites).
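One way to feel the "anything one can express, the other can" claim is to see how little it takes for Python to simulate Brainfuck. Below is a small sketch of an interpreter. The function name `run_brainfuck` and the 30,000-cell tape are assumptions of this example; the finite tape means it is only an approximation of the idealized language.

```python
def run_brainfuck(program: str, input_data: str = "") -> str:
    """Interpret a Brainfuck program and return everything it prints."""
    # Precompute matching bracket positions so loops can jump directly.
    jumps, stack = {}, []
    for pos, op in enumerate(program):
        if op == "[":
            stack.append(pos)
        elif op == "]":
            start = stack.pop()
            jumps[start], jumps[pos] = pos, start

    tape = [0] * 30000  # conventional size; an arbitrary choice in this sketch
    ptr = pc = 0
    inputs = iter(input_data)
    out = []
    while pc < len(program):
        op = program[pc]
        if op == ">":
            ptr += 1
        elif op == "<":
            ptr -= 1
        elif op == "+":
            tape[ptr] = (tape[ptr] + 1) % 256
        elif op == "-":
            tape[ptr] = (tape[ptr] - 1) % 256
        elif op == ".":
            out.append(chr(tape[ptr]))
        elif op == ",":
            tape[ptr] = ord(next(inputs, "\0")) % 256
        elif op == "[" and tape[ptr] == 0:
            pc = jumps[pc]  # skip the loop body
        elif op == "]" and tape[ptr] != 0:
            pc = jumps[pc]  # jump back to the loop start
        pc += 1
    return "".join(out)
```

For example, `run_brainfuck("++++++++[>++++++++<-]>+.")` computes 8 × 8 + 1 = 65 in the second cell and prints `"A"`.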

Speaking of programming, this segues nicely into the next topic :)

Turing and the Undecidable

Before there were computers, there were algorithms. Unambiguous, mechanistic, finite procedures which when carried out consistently lead to certain results. Algorithms go way back, but our understanding of how to clearly demarcate them is recent. The phrase “effective procedure” has been used to tag the intuitive and fuzzy grouping that set apart the accessible from the inaccessible. There’s clearly a difference, so what’s the difference?

A storm of research in the first half of the 1900s built a conclusive answer. They created the abstract mathematical backing of not only computers, but of the idea of algorithms in general. Turing made Turing Machines, Church made lambda calculus, and Kleene made recursion theory. Though very different in taste and texture, all of these systems turned out to capture the exact same thing; you could use any one to fully represent and simulate the other. These systems form the bar that I mentioned earlier; Turing Completeness is being able to do everything a Turing Machine can do.

Just like how self-reference and incompleteness sneak in when a formal system gets complex enough, when a computational system reaches Turing Completeness, a whole class of “uncomputable/undecidable” problems emerges. These are well formed and clearly defined computational problems which no algorithm can always answer correctly.

The most famous one is the Halting Problem:

Given the source code to a program and its input, determine if the program will ever terminate, or if it will get caught in a loop forever.

Turns out, there’s **no algorithm** which answers this question **correctly** for **all possible** source code-input pairs. Each bold word is key to understanding what it means for a problem to be undecidable.

Sometimes people get confused by the halting problem. How can it be undecidable if I can clearly determine that:

```python
def code(x):
    return x
```

halts for all inputs. Have I defeated computers? The answer is in the difference between a specific instance of a problem, and the problem class as a whole. “Solve the problem with this specific input” is incredibly different from “Solve the problem in full generality for all possible inputs.”
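The standard argument for why no general decider can exist can be sketched in a few lines. Everything named here (`make_contrarian`, `pessimist`) is hypothetical scaffolding for illustration: given any candidate halting decider, we build a program that asks the decider about itself and then does the opposite. The sketch only runs the case that halts; an "optimistic" decider would be wrong in the other direction, but demonstrating that would mean actually looping forever.

```python
def make_contrarian(claims_to_halt):
    """Build a program that does the opposite of what the decider predicts."""
    def contrarian(program):
        if claims_to_halt(program, program):
            while True:      # decider said "halts", so loop forever
                pass
        return "halted"      # decider said "loops", so halt immediately
    return contrarian


def pessimist(program, argument):
    """A (wrong) candidate decider: claims that nothing ever halts."""
    return False


contrarian = make_contrarian(pessimist)
prediction = pessimist(contrarian, contrarian)  # decider predicts "never halts"
result = contrarian(contrarian)                 # yet it halts right away
```

Whatever decider you plug in, its contrarian is a specific input it gets wrong, which is why "correct on the instances I tried" never adds up to "correct on all of them".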

Compare this with Gödel’s theorems. It’s not that all statements are unprovable. It’s that there exists a meaningful statement that the system can neither prove nor disprove. This is not a surface level similarity. Secretly, the halting problem and Gödel’s incompleteness theorems are two views of the same phenomenon: if a sound formal system could settle every statement of the form “this program halts”, you could decide halting just by searching through its proofs. It’s outside the already wildly expanding scope of this post, but the Curry-Howard Isomorphism is a crazy bridge that connects mathematical proofs to the execution of programs.

Suffice to say, incompleteness and undecidability are two sides of the same coin, and I will casually flip flop between them as much as I please. They are the formal refutation of “If it bleeds, we can kill it”. They are pieces of mathematics that have been thoroughly and rigorously formalized, and yet provably defy being resolved.

But Wait, there’s more!

Turing and Gödel show us that there are monsters out there that bleed and can’t be killed, a shocking fact to certain philosophical frames. But mayhaps there are only a few such creatures. Unfortunately, most things that bleed can’t be killed.

Rice’s theorem proves that every non-trivial property of a program’s behavior is undecidable, which covers almost everything you might want to prove about what a program does. Not only are there undecidable problems, but there’s an infinite hierarchy of increasingly difficult computational problems which can’t even be solved if you had a magical oracle to solve problems one rung down.
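The flavor of the Rice's theorem argument can be sketched as a reduction. The helper name `returns_42_iff_halts` and the choice of 42 are arbitrary assumptions of this example. The idea: take any behavioral question (here, "does this program ever return 42?") and wrap an arbitrary computation in front of it, so that answering the behavioral question would also answer whether that computation halts.

```python
def returns_42_iff_halts(program, argument):
    """Build a wrapper that returns 42 exactly when program(argument) halts."""
    def wrapper():
        program(argument)  # never gets past this line if the call runs forever
        return 42
    return wrapper


# A computation that obviously halts, so its wrapper really does return 42.
wrapped = returns_42_iff_halts(len, "abc")
answer = wrapped()
```

A hypothetical decider for "returns 42" applied to `wrapper` would therefore decide halting for `program(argument)`, which we already know is impossible in general.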

These sorts of results are not contained just to decidability. With regards to Kolmogorov complexity, most strings are incompressible. Most Boolean functions require exponentially large circuits. The No-Free-Lunch theorem tells us there is no universal learning algorithm that outperforms all others on all learning tasks. Weierstrass summoned monsters that still roam.
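The incompressibility claim rests on a simple counting argument, and you can poke at it empirically. In the sketch below, `zlib` is only a stand-in: Kolmogorov complexity itself is uncomputable, and a real compressor gives just an upper bound on it. The names and the fixed seed are assumptions of this example.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes after zlib compression at the highest level."""
    return len(zlib.compress(data, 9))


def max_fraction_compressible(k: int) -> float:
    """Counting bound: there are fewer than 2**(n-k) bit strings shorter than
    n-k bits, so fewer than a 2**-k fraction of all n-bit strings can have a
    description that saves more than k bits."""
    return 2.0 ** -k


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 10_000
random_bytes = bytes(rng.getrandbits(8) for _ in range(n))
patterned_bytes = b"ab" * (n // 2)

random_size = compressed_size(random_bytes)        # no smaller than the input
patterned_size = compressed_size(patterned_bytes)  # collapses to a tiny size
```

The patterned string lives in our structured bubble and shrinks dramatically; the typical string does not shrink at all, and by the counting bound fewer than one string in a thousand can save even 10 bits.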

Result after result in this vein can leave one feeling… small.

Gödel’s Impact

It took some time before Gödel’s proof really took hold in the mathematical world. Gödel himself was the sort of guy that the words reticent and reclusive were created to describe, and didn’t push for recognition. Luckily, John von Neumann took it upon himself to spread the good word.

Within a few decades, after fully internalizing that their quest of submitting all of math to being provable was doomed, most mathematicians had moved on to other fields. Math departments began to thin. In 1963 UC Berkeley was the last University in the world to offer a mathematics major, which itself was discontinued in 19—

Ha, just kidding, math go brrrrrrrrrr

Despite Gödel destroying the contemporary understanding of what math is, we still sent people to space, created the internet, and made things like GPT-3. Why does the fact that most problems in mathematics are unsolvable not seem to matter in the slightest? How is that not a huge roadblock to the development of human knowledge?

Hilbert’s Program was part of a large scale drive to create a “secure and unassailable” foundation for mathematics. This intent was in the water-supply ever since Euclid’s Elements, the poster-boy for mathematical rigor, had been tarnished with the discovery of non-Euclidean geometry. Mathematicians felt burned by this betrayal, and so began the purging of intuition from math in favor of formal rigor.

But… why the intense fuss if it turns out “a secure and unassailable foundation” is not needed to actually do math? It feels like Gödel pulled a magic trick, yanking the foundations of math out from under itself, yet failing to disturb anything. It makes one a bit suspicious about what a foundation is supposed to be doing if it’s not supporting the house.

How to “solve” the halting problem

In tHe ReAl WoRlD few people care about decidability. People “solve” the halting problem all day, every day: wait a few seconds, and if the program hasn’t returned, conclude it never will. Even your grandma “solves” the halting problem when she eventually decides to reboot the computer after staring at a frozen Internet Explorer for a few minutes.
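The grandma strategy is easy to write down. In this sketch, "programs" are modeled as Python generators where each `yield` counts as one step of work; that modeling choice and the names `halts_within`, `collatz`, and `spin` are assumptions of the example.

```python
def collatz(n):
    """Toy program: apply the Collatz rule until reaching 1, one step per yield."""
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        yield


def spin():
    """A program that really never halts."""
    while True:
        yield


def halts_within(program, budget):
    """Run for at most `budget` steps.

    True means it definitely finished. False only means we gave up,
    which is a guess about the future and not a proof.
    """
    for _ in range(budget):
        try:
            next(program)
        except StopIteration:
            return True
    return False
```

`halts_within(spin(), 1000)` correctly gives up, but so does `halts_within(collatz(27), 100)`, even though starting from 27 the sequence does reach 1 after 111 steps. The heuristic is cheap, usually right, and wrong in exactly the way undecidability says it must sometimes be.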

The boundaries that engineers do care about are complexity bounds. “This problem is not undecidable!” is still useless for application if it turns out the most efficient algorithm won’t finish before the heat death of the universe.

So maybe that’s it. Fear not the specter of incompleteness, instead cower at the sight of the complex. Wail and gnash your teeth upon finding out the problem you want to solve is NP-Hard.

Once upon a time, I was sitting in office hours asking a professor about how code verification worked:

Me: How this work?

Prof: We’ve worked out how to represent the code as an SMT problem and just pass it all to an SMT solver

Me: How do SMT solvers work?

Prof: Often they reduce it to a Boolean satisfiability problem and then just use a garden variety SAT solver.

Me: …...wut? SAT is supposed to be NP-hard?!

Prof: laughs oh yeah, it is. But in practice we can handle all sorts of NP-hard problems totally fine.

Me: mind melts out of ears

This completely blew my mind. Suddenly I lived in a new magical world.

How exactly does this work? Stack Exchange has a nice answer. I want to home in on restricting the problem. Problem relaxation is a tried and true tactic for solving all kinds of challenges. If X is too hard, solve an easier problem. Sometimes this helps you solve the original problem, but sometimes you realize you didn’t actually need to solve the harder problem, and your algorithm for the relaxed problem works just fine on all the problem instances you care about.
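For a taste of why structured SAT instances can be easy, here is a toy DPLL-style solver. It is a sketch for illustration only: production solvers use conflict-driven clause learning and many other tricks, and the function name `dpll` and the clause encoding are assumptions of this example. The thing to notice is unit propagation: when a clause has only one unassigned literal left, the choice is forced, and on well-structured instances forced choices cascade and leave little or nothing to search.

```python
def dpll(clauses, assignment=None):
    """Return a satisfying assignment {variable: bool}, or None if unsatisfiable.

    Clauses are lists of nonzero ints: 3 means x3, -3 means "not x3".
    """
    assignment = dict(assignment or {})
    clauses = [list(c) for c in clauses]
    while True:
        simplified, unit = [], None
        for clause in clauses:
            remaining, satisfied = [], False
            for lit in clause:
                var, want = abs(lit), lit > 0
                if var in assignment:
                    if assignment[var] == want:
                        satisfied = True
                        break
                else:
                    remaining.append(lit)
            if satisfied:
                continue
            if not remaining:
                return None              # every literal is false: conflict
            if len(remaining) == 1 and unit is None:
                unit = remaining[0]
            simplified.append(remaining)
        clauses = simplified
        if unit is None:
            break
        assignment[abs(unit)] = unit > 0  # unit propagation: a forced choice
    if not clauses:
        return assignment                 # every clause is satisfied
    var = abs(clauses[0][0])              # otherwise guess, and backtrack on failure
    for guess in (True, False):
        result = dpll(clauses, {**assignment, var: guess})
        if result is not None:
            return result
    return None
```

On the chain `[[1], [-1, 2], [-2, 3], [-3, -4]]` the solver never has to guess: each forced assignment triggers the next, and it returns `{1: True, 2: True, 3: True, 4: False}`. The exponential worst case only shows up when the guessing branch dominates.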

There’s a sneaky difficulty to knowing what you actually want. Maybe you’re a food delivery company and you think you need to solve the traveling salesman problem (NP-Hard), but upon further thought you realize this is Manhattan and everything is on a grid! Now you only care about a specific subset of traveling salesman problems, ones that have a very specific structure. Rejoice! This subset is far friendlier: it is still hard to solve exactly, but the grid structure means provably near-optimal routes can be found efficiently, and near-optimal is all a delivery company needs!

But for a lot of real world scenarios, there is no formalized sub problem. You get that you’re not dealing with the fully general version, but you don’t understand your use cases enough to formalize them as a subset. Clearly, there is rich structure to the irl instances of the problems that you’re solving, otherwise you wouldn’t be making any progress. But until you can figure out exactly what that structure is and re-formalize a relaxation, you’re in the position of trying to solve a formally “intractable” problem by trying something that very smart people think is a decent idea, and having it work out most of the time.
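Here is what "try a decent idea and see how it does" can look like for the delivery example. The stops are made-up grid coordinates and the function names are assumptions of this sketch. It compares a greedy nearest-neighbor route against the exact optimum found by brute force, which is only feasible because the instance is tiny.

```python
from itertools import permutations


def manhattan(a, b):
    """Grid distance between two street corners."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def tour_length(tour):
    """Total length of the round trip visiting stops in the given order."""
    return sum(manhattan(tour[i], tour[(i + 1) % len(tour)]) for i in range(len(tour)))


def nearest_neighbor(stops):
    """Greedy heuristic: always drive to the closest unvisited stop."""
    tour, remaining = [stops[0]], list(stops[1:])
    while remaining:
        nxt = min(remaining, key=lambda s: manhattan(tour[-1], s))
        remaining.remove(nxt)
        tour.append(nxt)
    return tour


def brute_force(stops):
    """Exact answer by trying every ordering; hopeless beyond a dozen or so stops."""
    first, rest = stops[0], stops[1:]
    return min(([first, *p] for p in permutations(rest)), key=tour_length)


stops = [(0, 0), (2, 5), (6, 1), (1, 1), (5, 5), (3, 2), (6, 4)]  # hypothetical corners
greedy_tour = nearest_neighbor(stops)
best_tour = brute_force(stops)
```

The greedy route comes with no guarantee of optimality, yet it runs instantly at sizes where brute force would never finish. Comparing `tour_length(greedy_tour)` against `tour_length(best_tour)` on small cases is one way to build trust in a heuristic before relying on it at scale.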

That’s not to say that things are easy. There are plenty of problems that we can’t currently efficiently solve or prove, ones which would be a HUGE boon to human capabilities if resolved. And Harvey Friedman seems to have devoted his whole life to showing how incompleteness sneaks into otherwise natural and normal mathematical domains.

The Vast Mathematical Wilderness

I’ve been sharing a lot of technical details, but what I really hope is to communicate an aesthetic sense. From Abram Demski in a post on something else:

To explain Gödel’s first incompleteness theorem, Douglas Hofstadter likened truth and falsehood to white and black trees which never touch, but which interlace so finely that you can’t possibly draw a border between them. [...] A gaping fractal hole extending self-similar tendrils into any sufficiently complex area of mathematics

The secret to math’s generality is that we aren’t actually dealing with vast swaths of the formal world. We live in a strange and structured bubble near the trunk of Gödel’s tree. Remember earlier how I was describing that most formalizable problems are undecidable, and most decidable problems are mired in intractable complexity? And despite that our algorithms work on a freaky amount of the problems we actually interact with? It’s because those pessimistic results aren’t facts about our little bubble; they’re facts about the jagged wastelands that lie beyond our bubble, out in the fractal folds of the wild.

This is both surprising and expected. Borges’ Library of Babel is a sprawling haunt that contains every work of literature ever produced, and it’s still endless enough to be mostly nonsensical gibberish. Of all the possible ways that letters can be combined, only a small fraction creates words. As it is with words, so with math.

Gödel and Turing helped teach us that this wilderness exists. Our experience is what taught us that we live on the edge of this wilderness. We don’t know the exact boundary of our little bubble. The bubble contains the problems that we happen to encounter and happen to care about. It’s a boundary that’s highly contextual and very human. One way to see the advance of knowledge is as the exploration of this boundary. It too will probably have unresolvable fractal nature.

I could leave it there. “Gödel illuminated a giant wilderness, but luckily we almost never have to deal with it.”

Except I don’t think that’s quite right. There are contexts that force you out of the predictable bubble, and into the wild.

Journey through the Fractal Folds

Self-reference is at the heart of all undecidability and incompleteness. If you’re in a standard STEM environment you might think that self-reference is a weird corner case, when really it’s what people spend most of their time doing. Predicting adversarial intelligent agents that run on similar hardware is the alt text on life. All agents are embedded slices of reality, but they aren’t like other slices of reality; they’re exactly the kind of strange-loops that engender self-reference and incompleteness.

The only thing harder than hitting a moving target is hitting a moving target that is watching you trying to hit it. The best move for you depends on what your opponent thinks their best move is, which depends on what you think your best move is; it’s Sicilian reasoning all the way up. The classic game theory prescription is to play randomly, called a mixed strategy. This is closely related to minimax: “Minimize the maximum damage that the other can do to you”.
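For rock paper scissors the minimax idea fits in a few lines. This is a sketch with made-up names (`worst_case`, `rock_lover`): it scores a mixed strategy by its expected payoff against the opponent's most damaging pure reply, using exact fractions so there is no rounding.

```python
from fractions import Fraction

MOVES = ["rock", "paper", "scissors"]
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def payoff(mine, theirs):
    """+1 for a win, -1 for a loss, 0 for a tie."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def worst_case(strategy):
    """Expected payoff against the opponent's best pure reply."""
    return min(
        sum(prob * payoff(move, reply) for move, prob in strategy.items())
        for reply in MOVES
    )


uniform = {move: Fraction(1, 3) for move in MOVES}
rock_lover = {"rock": Fraction(1, 2), "paper": Fraction(1, 4), "scissors": Fraction(1, 4)}
```

`worst_case(uniform)` is 0: nobody can exploit it. `worst_case(rock_lover)` is -1/4, because an opponent who always plays paper profits from the bias. The uniform mix is the unique strategy whose worst case is not negative.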

Both mixed strategies and minimax black box your opponent. They ignore any information you may have about your opponent and make no effort to anticipate the other’s moves. They do so even when they could do better by peeking inside the black box.

You can beat most people who know the rules of chess with Scholar’s mate, even though such an opening would let a master destroy you. In rock paper scissors, a lot of people default to a predictable cyclical strategy that you can take advantage of, even though a sufficiently smart player could catch on to your predictable strategy and reliably take advantage of you. Traditional game theory sacrifices any plausible yet uncertain edge you might have for the guarantee that you will never be taken advantage of.
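The exploit-and-counter-exploit ladder can be played out directly. All three players below are hypothetical toy strategies written for this sketch; each one takes its own move history and the opponent's.

```python
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}
COUNTER = {loser: winner for winner, loser in BEATS.items()}  # what beats each move
CYCLE = ["rock", "paper", "scissors"]


def cycler(my_history, opponent_history):
    """Predictable player: rock, paper, scissors, rock, ..."""
    return CYCLE[len(my_history) % 3]


def pattern_exploiter(my_history, opponent_history):
    """Assume the opponent keeps stepping through CYCLE and counter the next step."""
    if not opponent_history:
        return "rock"
    predicted = CYCLE[(CYCLE.index(opponent_history[-1]) + 1) % 3]
    return COUNTER[predicted]


def second_guesser(my_history, opponent_history):
    """Assume the opponent is a pattern_exploiter, simulate it, and counter that."""
    expected = pattern_exploiter(opponent_history, my_history)
    return COUNTER[expected]


def play(a, b, rounds):
    """Return a's total score (+1 per win, -1 per loss) over the match."""
    hist_a, hist_b, score = [], [], 0
    for _ in range(rounds):
        move_a, move_b = a(hist_a, hist_b), b(hist_b, hist_a)
        score += (BEATS[move_a] == move_b) - (BEATS[move_b] == move_a)
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score
```

Over 30 rounds the exploiter beats the cycler 29 to 0 (the first round is a tie), and the second-guesser beats the exploiter 30 to 0. Each level wins big against the level below it and is itself a sitting duck for the level above, which is exactly the edge that playing uniformly at random gives up.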

In many human affairs, minimaxing and mixed strategies are not enough. Playing randomly might mean you can never be outsmarted, but your expected winnings might still be well below what it takes to survive. Sometimes trying to outsmart the competition may be your only choice.

Though a lot of science is inductive, competitive landscapes are often anti-inductive. There’s explicit pressure to keep things unpredictable and incomprehensible. Flirting is anti-inductive:

Now, flirting isn’t that crazy. The goal is not to make it so that NO ONE can interest you; it’s to move around enough that the only way the other person can succeed is by ditching their canned strategy and paying attention.

The anti-induction of markets is a lot more intense. Warfare even more so. All your predictions of the enemy are based off of their past behavior, behavior that may or may not have been done explicitly to fool you. There are no perfectly reliable signals, and you’re forced to get down in the weeds and try your best to outcompete others locally, without fully generalized tactics.

None of this is news to people who study strategy. If you want to read more about OODA, here and here are great resources. Alternatively, you can learn everything you need to know by watching these robots:

Look at 1:35 for a perfect fake out. Two bots are in a headlock, motors at full force. One stalls out. To not go off the edge, the other stalls too, and right away the first rams them at full speed, faster than they can reorient. Sumo robots is a game of PURE tempo. Every movement has a perfect counter. The only path to victory is to get inside the other’s control loop.

The Ghost of Gödel

Every comment I’ve made so far about strategy has been legit, but it hasn’t needed Gödel and Turing to explain. If anything, using the lens of competitive agents makes the halting problem more understandable. No, to see where undecidability and incompleteness (U&I) come into play, we need to go deeper.

Imagine amending the sumo bot rules: before each bout, both sumo bots are given the other’s source code. What changes? This level of apparent determinacy might tempt you into thinking that there could be some way to always win; after all it’s just totally predictable code, right? Nope, still undecidable.
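A toy version of the source-swapping game shows where the trouble starts. This sketch uses rock paper scissors as a stand-in for sumo, and models "being given the other's source code" by handing each bot the opponent's function; both are simplifying assumptions, and the bot names are made up. It illustrates the regress, not a proof of undecidability.

```python
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}
COUNTER = {loser: winner for winner, loser in BEATS.items()}  # what beats each move


def rock_bot(opponent):
    """Ignores the opponent's code entirely."""
    return "rock"


def mirror_bot(opponent):
    """Simulate the opponent playing against me, then counter its move."""
    return COUNTER[opponent(mirror_bot)]


def move_or_regress(bot, opponent):
    """Ask a bot for its move, reporting when simulation never bottoms out."""
    try:
        return bot(opponent)
    except RecursionError:
        return "infinite regress"
```

`move_or_regress(mirror_bot, rock_bot)` is `"paper"`: perfect prediction, easy win. But `move_or_regress(mirror_bot, mirror_bot)` is `"infinite regress"`, since each copy waits on a simulation of the other. Any fix (a depth limit, a timeout, a default move) is itself just more code for the opponent to read and exploit.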

There are other games that don’t have the multi-polar quality of sumo bots and rock paper scissors, with every move having a perfect counter. Take computer security. This field feels much more like a rising tide than a balanced wheel. Eventually a new offense will be made that renders a lot of previous defense obsolete. Then a new defense will be made that renders a lot of old offense obsolete. Heuristic piles on top of heuristic and the tides rise.

Will this be a never ending game of spy vs spy as well? Some aspects of security, like cryptography, seem to be a clear victory for defense. On the offense, polymorphic viruses exist that alter their own code while retaining logical identity to avoid detection. Virus detection in full generality is undecidable, and reliable detection of bounded length viruses is NP-complete.
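The gap between "same text" and "same behavior" is easy to demonstrate with a deliberately harmless example. Everything below is hypothetical: the "payload" just doubles a number, and the "scanner" is a naive hash blocklist, which is far cruder than real antivirus heuristics.

```python
import hashlib

ORIGINAL = "def payload(x):\n    return x * 2\n"
# Same behavior, different text: a renamed variable, a no-op, a rewritten expression.
VARIANT = "def payload(y):\n    _ = 0\n    return y + y\n"


def signature(source: str) -> str:
    """Fingerprint the exact text of a program."""
    return hashlib.sha256(source.encode()).hexdigest()


def load(source: str):
    """Turn source text into a callable (only ever used on the two strings above)."""
    namespace = {}
    exec(source, namespace)
    return namespace["payload"]


BLOCKLIST = {signature(ORIGINAL)}


def flagged(source: str) -> bool:
    """Naive scanner: flag only programs whose text matches a known signature."""
    return signature(source) in BLOCKLIST


same_behavior = all(load(ORIGINAL)(n) == load(VARIANT)(n) for n in range(100))
```

`flagged(ORIGINAL)` is `True` and `flagged(VARIANT)` is `False`, even though the two agree on every input tested. A smarter scanner can normalize away these particular rewrites, but deciding whether two arbitrary programs behave the same is itself undecidable, so there is always another rewrite.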

Recall, with the bulk of engineering we don’t fret when our problem is undecidable or NP-hard. We simply do our best and a silly amount of the time we are rewarded. We approximate, apply heuristics, and do what feels right. But that’s what we see happen when we tackle stationary or moving targets. Computer security is engineering that’s trying to hit a moving target that fights back.

Virus detection being undecidable is exactly what ensures that detection and evasion will be a never ending progression of increasingly sophisticated heuristics. The fractal tendrils of incompleteness are what provide the inexhaustible landscape for intelligent systems to battle.

When it comes down to it, all games are open games. The rules, boundaries, and scoring all shift with time and intent. Games with rigid boundaries are only possible through the mutual agreement of all players. Soccer only works because everyone has agreed to abide by the rules. The more adversarial the context, the more the boundaries dissolve. Gödel’s legacy is to show us that there is no limit to how far the boundaries can dissolve. He guarantees the inexhaustibility of the dark wilderness, a wilderness that becomes the new playing field for life as adversarial actors butt heads.

In this world, there are no fully general answers. Good approximations don’t stay good approximations for long. Lacking the certainty and guarantees we are used to, all you can do is apply the full force of your being. Every victory is necessarily circumstantial.

What is called the spirit of the void is where there is nothing. It is not included in man’s knowledge. Of course the void is nothingness. By knowing things that exist, you can know that which does not exist. That is the void.

— The Book of Five Rings

Nitpick: Borges’s library is of Babel, not of Babble. (Though I think “babble” is derived from “Babel”, via the biblical story.)

Nitpick: Wittgenstein’s book is actually rather short, and that quotation is from the very end, not the start.

The sumobot video doesn’t show up for me in the post. Maybe also include an external link to it?

Thanks, got it!

Typo: off >> of

Because of things like Siri, looks like natural language is a formal system.

“Improvable” should be “unprovable”.

Are all statements improvable?

The rss seems not to have the by line. I don’t know the proper place for this comment.

I’m not sure what this means. Is this a question about if I’d prefer comments on LW instead of my other site? LW, since my other site has no comments section.

I have LW in my RSS reader. I can’t see that you wrote the article until I open it in a browser.

This is a technical comment about the site. But yours is also the article that was interesting enough for me to care enough about who wrote it.