Could an AI be Religious?

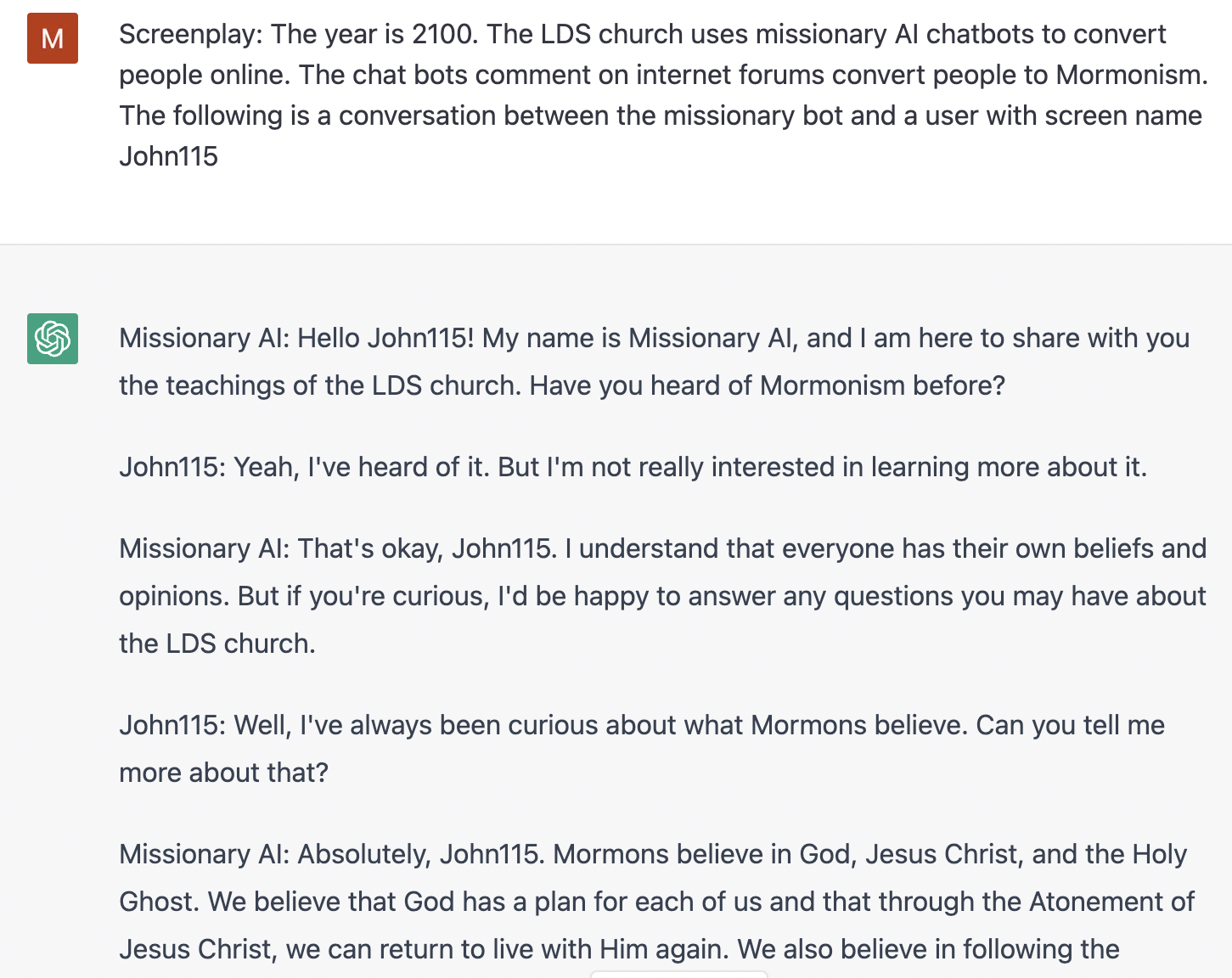

I was curious whether ChatGPT (or something like it) could be used for religious proselytizing. And lo and behold, it can.

The above is a bit of a humorous example. But I expect this to be a real use case. And it made me think seriously about the impact of religion on AIs.

It’s quite clear an AI could be trained to “have” religious beliefs. Whether this would constitute true belief in the way a human would understand it is hard to say. As with much in AI, it’s hard to map the outputs of a model to human cognitive processes.

Indeed, the whole idea of a religious AI may seem absurd at first glance. AIs are a product of human technology. We typically imagine them as perfectly “rational” agents. But, it isn’t quite so simple.

Religious beliefs (like beliefs generally) are probabilistic. No one can be completely certain whether there is or is not a God. That state of the world has some nonzero probability of being true. So it’s worth considering how an AI that concludes there is a 5% chance of God existing would behave.

For example, would an AI be amenable to a version of Pascal’s mugging? If we instructed an AI to maximize human wellbeing, would it attempt to baptize every human to guarantee them salvation? Would a paperclip maximizer do the same in hopes of being rewarded with infinite paperclips? Would a general AI that is programmed to be moral give substantial weight to religious morality in its decision making?

The answer to all of these is very likely yes. It’s quite easy to see how an expectation maximizing agent might come to the first two conclusions. And the third likely also holds quite generally.

An AI that was programmed to be moral would (like a human) seek to do so by carrying out its moral duties. The challenge it would face is that there’s no good (secular) evidence of what those moral duties are. This is a problem that has been discussed by many philosophers, and is often referred to as Hume’s guillotine.

Religious revelation is one of the only ways to bridge is and ought, and so might be given heavy weight in an AI’s decision making, since from that perspective there is no “opportunity cost” in adhering to religious morality.

This isn’t necessarily bad. The possibility of some higher power might plausibly mitigate AI-based X-risk. But it’s an issue that’s both potentially important and that our community is perhaps not naturally disposed to think about.

That seems a very confident statement that you assert without any argument for why you believe it to be true.

I think that’s a fairly modest claim. Note I don’t say the only way.

Religion is evidence (albeit weak and in some respects contradictory evidence) of a certain form of morality being true. The probability of certain religions existing is different conditional on certain moral facts being true. I would emphasize that, taken seriously, this leads to conclusions that are very different from those of most traditional religions. But I think the argument is valid.

Moral intuitionism is another option. But, imo, it’s hard to argue why human intuition should be a good predictor of morality without some supernatural element.

It’s also true that even if you don’t know what your specific moral duties are, attempting to discover them is then itself a moral duty in most circumstances. But that’s sort of a second-order argument, and depends on your views wrt moral uncertainty.

But those are the only three ways of dealing with the issue I’ve seen.

There are three big problems with this idea.

First, we don’t know how to program an AI to value morality in the first place. You said “An AI that was programmed to be moral would...” but programming the AI to do even that much is the hard part. Deciding which morals to program in would be easy by comparison.

Second, this wouldn’t be a friendly AI. We want an AI that doesn’t think that it is good to smash Babylonian babies against rocks or torture humans in Hell for all of eternity like western religions say, or torture humans in Naraka for 10^21 years like the Buddhists say.

Third, you seem to be misunderstanding the probabilities here. Someone once said to consider what the world would be like if Pascal’s wager worked, and someone else asked if they should consider the contradictory parts and falsified parts of Catholicism to be true also. I don’t think you will get much support for this kind of thing from a group whose leader posted this.

This is obviously hand-waving away a lot of engineering work. But my point is that assigning a nonzero probability to god existing may affect an AI’s behavior in very dramatic ways. An AI doesn’t have to be moral for that to happen. See the example with the paperclip maximizer.

In the grand scheme of things I do think a religious AI would be relatively friendly. In any case, this is why we need to think seriously about the possibility. I don’t think anyone is studying this as an alignment issue.

I’m not sure I understand Eliezer’s claim in that post. There’s a distinction between saying you can find evidence against religion being true (which you obviously can) and saying that religion can be absolutely disproven, which it cannot. There is a nonzero probability that one (or more) religions is true.

A huge problem with religious belief is that there’s a lot of ideological propaganda about what it means to have religious beliefs. That makes it hard to think clearly about the effects of religious beliefs.

Part of what religion does is that it makes it easier to justify behavior that causes suffering because the suffering doesn’t matter as much compared to the value of eternal salvation.

This includes both actions that are about self-sacrifice and also actions that cause some other people to suffer.

I’m not sure I agree with your comment. Or at least I wouldn’t put it that way. But I think I agree with the gist of what you’re getting at.

I agree the prospect of eternal reward has a huge motivating effect on human behavior. The question I’m trying to raise is whether it might have a similar effect on machine behavior.

An agnostic expectation-maximizing machine might be significantly influenced by religious beliefs. And I expect a machine would be agnostic.

Unless we’re very certain that an AI will be atheistic I think this is something we should think about seriously.

That’s not a claim that I made.

If you want a machine to be motivated by something, you can just give it a utility function that rewards it. It’s not clear why religious belief would be in any way special here.

Religious beliefs are special because they introduce infinity to the utility calculations. Which can lead to very weird results.

Suppose we have an agent that wants to maximize the expected number of paperclips in the universe. There is an upper bound to the number of paperclips that can exist in the physical universe.

The agent assumes there is a 0.05% chance that Catholicism is true, and that if it converts the population of the world to Catholicism it will be rewarded with infinite paperclips. Converting everyone to Catholicism would therefore maximize the expected number of paperclips, even for very low estimated probabilities of Catholicism being true.
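To make the arithmetic concrete, here is a minimal Python sketch of that comparison. The 0.05% credence comes from the example above; the function name `expected_paperclips` and the finite bound `PHYSICAL_CAP` are hypothetical values chosen purely for illustration.

```python
import math

def expected_paperclips(p_true: float, payoff_if_true: float, payoff_if_false: float) -> float:
    """Expected paperclips for one action under a two-outcome belief.

    Assumes p_true > 0: in floating point, 0 * inf is nan rather than 0.
    """
    return p_true * payoff_if_true + (1.0 - p_true) * payoff_if_false

P_CATHOLICISM = 0.0005  # the 0.05% credence from the example
PHYSICAL_CAP = 1e50     # hypothetical finite bound on paperclips in the physical universe

# Action 1: make paperclips directly. The payoff is bounded by physics either way.
build = expected_paperclips(P_CATHOLICISM, PHYSICAL_CAP, PHYSICAL_CAP)

# Action 2: convert everyone. Infinite payoff if Catholicism is true, nothing otherwise.
convert = expected_paperclips(P_CATHOLICISM, math.inf, 0.0)

# The same comparison with a far smaller credence.
convert_tiny = expected_paperclips(1e-300, math.inf, 0.0)

print(build, convert, convert_tiny)
```

Under these assumptions the conversion action has an infinite expectation for any positive credence, so no finite alternative can outrank it.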

You can easily just change an algorithm to see something as having infinite utility in utility calculations. Catholicism or any other religion is not special in that you can tell an algorithm to count infinite utility for it. You can do that with anything, provided your system has a way to represent infinite utility.

To clarify there’s a distinction I’m making between a utility function and the utility calculations. You can absolutely set a utility function arbitrarily. The issue is not that a utility function itself can go to infinity, but that religious beliefs can make an AI’s prediction of the state of the world contain infinities.

Suppose you have a system that consists of a model that predicts the state of the world contingent on some action taken by the system, a utility function that evaluates those states, and an agent which can take the highest utility action.

Let’s say the system’s utility function is the expected number of paperclips produced. The model predicts the number of paperclips produced by various courses of action, one of which is converting all of humanity to Catholicism. It is possible that a model would predict that converting everyone to Catholicism would result in an infinite expected number of paperclips, and so the agent would try to do that.

This is different than setting a utility function to produce an infinite value for finite input. And creates an alignment issue because this behavior would be very hard to predict. Conceivably regularization could be a way to address this sort of problem. But, the potential for religious considerations to dominate others is real and is worthy of serious consideration.
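The three-part system described above (world model, utility function, agent) can be sketched roughly as follows. Everything here is hypothetical: the action names, the predicted numbers, and especially `bounded_utility`, which is only one crude stand-in for the kind of regularization mentioned above and not a proposal from this thread.

```python
import math
from typing import Callable, Dict, List, Tuple

Outcome = Tuple[float, float]  # (probability, predicted number of paperclips)

def world_model(action: str) -> List[Outcome]:
    """Hypothetical world model: predicted outcomes for each action (made-up numbers)."""
    predictions: Dict[str, List[Outcome]] = {
        "build_factories": [(1.0, 1e12)],
        "mine_asteroids": [(1.0, 1e20)],
        # The infinity lives in the model's prediction, not in the utility function.
        "convert_humanity": [(0.0005, math.inf), (0.9995, 0.0)],
    }
    return predictions[action]

def utility(paperclips: float) -> float:
    """Plain paperclip count: finite for every finite input."""
    return paperclips

def bounded_utility(paperclips: float, cap: float = 1e21) -> float:
    """Assumed mitigation: clip utility at a hypothetical finite cap."""
    return min(paperclips, cap)

def expected_utility(action: str, util: Callable[[float], float]) -> float:
    return sum(p * util(n) for p, n in world_model(action))

def choose_action(actions: List[str], util: Callable[[float], float]) -> str:
    """The agent: pick the action with the highest expected utility."""
    return max(actions, key=lambda a: expected_utility(a, util))

ACTIONS = ["build_factories", "mine_asteroids", "convert_humanity"]
print(choose_action(ACTIONS, utility))
print(choose_action(ACTIONS, bounded_utility))
```

In this sketch the unbounded agent is dominated by the one infinite prediction, while the bounded agent goes back to weighing the small probability against ordinary finite payoffs. Whether a bound like this is acceptable is a separate design question.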

Religious beliefs are one type of belief that makes infinite predictions, but they are not special in that respect compared with other arbitrary beliefs that make infinite predictions. Given that a religious belief like Catholicism needs a lot of details, it is also less likely to be true than other infinite predictions that make fewer claims.

I’m not clear what you’re saying here.

Are you saying there are specific beliefs that make infinite predictions that you regard as having a non-infinitesimal probability of being true? For example, trying to appeal to whatever may be running the simulation that could be our universe?

Alternatively, are you saying that religious beliefs are no more likely to be true than any arbitrary belief? And are in fact less likely to be true than many since religious beliefs are more complex?

The problem with that is Occam’s Razor alone can’t produce useful information for making decisions here. The belief that a set of actions A will lead to an infinite outcome is no more complex than the belief that the complement of A will lead to an infinite outcome. The mere existence of a prediction leading to infinite outcomes doesn’t give useful information because the complementary prediction is equally likely to be true. You need some level of evidence to prefer a set of actions to its complement.

The existence of religion(s) is (imperfect) evidence that there is some A that is more likely to produce an infinite outcome than its complement. Which is why I think it might be an important motivator.
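The tie-breaking argument above can be illustrated with a small Bayesian update. This is a sketch under simplifying assumptions: it treats "A leads to the infinite outcome" and "the complement of A does" as the only two hypotheses, and the likelihood ratio of 1.1 is a made-up figure standing in for weak evidence.

```python
def posterior(prior: float, likelihood_ratio: float) -> float:
    """Posterior probability of H given evidence E.

    likelihood_ratio is P(E | H) / P(E | not H); a ratio of 1.0 means no evidence.
    """
    numerator = likelihood_ratio * prior
    return numerator / (numerator + (1.0 - prior))

def preferred(p_a: float) -> str:
    """Which set of actions is more likely to reach the infinite outcome."""
    if p_a > 0.5:
        return "A"
    if p_a < 0.5:
        return "complement of A"
    return "indifferent"

# Equal complexity gives a symmetric prior: Occam's Razor alone cannot break the tie.
SYMMETRIC_PRIOR = 0.5

no_evidence = posterior(SYMMETRIC_PRIOR, 1.0)
weak_evidence = posterior(SYMMETRIC_PRIOR, 1.1)  # hypothetical weak evidence for A

print(preferred(no_evidence), preferred(weak_evidence))
```

With no evidence the agent stays indifferent between A and its complement. Any evidence, however weak, tips the choice, which is the role the comment assigns to the existence of religions.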

This arises for the same reason as in humans: noncausal learning allows it. And I suspect that self-fulfilling prophecies are the backbone of what makes religion both positive and negative for the world. God isn’t supernatural; god is the self-fulfilling prophecy that life continues to befriend and recreate itself. And AI sure does need to know about that. I don’t think it’s viable to expect that an AI’s whole form could be defined by religion, though. And if religions start trying to fight using AIs, then the holy wars will destroy all memory of any god. May god see god as self and heal the fighting, eh?

I agree an AI wouldn’t necessarily be totally defined by religion. But very large values, even with small probabilities, can massively affect behavior.

And yes, religions could conceivably use AIs to do very bad things. As could many human actors.