Is debate worthwhile? That is a matter of some, ahem, disagreement.

A lot of that was mainstream discussions of the whole Rogan-RFK Jr fiasco. In the context of AI, we had a real debate on whether AI is an existential threat that by all accounts didn’t go great, and a Nature article that didn’t even pretend to debate and which Tyler Cowen claimed was evidence that the debate had been utterly lost, with a plea to shift to formal scientific debate instead.

It would be so nice to discuss and explore and figure things out together, instead of debating. Debates, like Democracy, are highly frustrating, deeply flawed institutions that do a terrible job converging on truth, except what alterative do you have that isn’t even worse?

Some noteworthy new releases this week. MidJourney 5.2 is getting rave reviews. Generative AI for GMail, Google Docs and Google Sheets is online, I keep expecting to get use out of it but so far no luck, ideas welcome. The Chinese claim a new very strong language model, ChatGLM, on which I am withholding judgment given the track records involved.

Mostly I sense that we picked the lowest hanging fruit for mundane utility from generative AI, and now our knowledge of, skills at using and integration into our workflows and lives of existing tools is going to be where it is at for a while, modulo the full release of Microsoft Copilot and the fully functional Google suite of generative AI offerings. That doesn’t mean that we won’t see technical advances at a breakneck pace versus almost anywhere else, we totally will, but we’re now seeing things like a $1.3 billion investment in Inflection AI in the hopes of building something better over time. Which takes a while. I don’t doubt that some efforts will get there, but we are now less likely to be talking days or weeks, and more likely to be talking months or years.

Table of Contents

Language Models Offer Mundane Utility. AI system. Making copies.

Fun With Image Generation. A few trifles.

Deepfaketown and Botpocalypse Soon. Deepfake detector sighting.

They Took Our Jobs. They will never take our jobs, or freedom?

They Created Our Terrible Job. Work it while it lasts. Need input.

Introducing. GMail generative AI, ChatGLM, get your compute.

In Other AI News. Demis Hassabis says next big thing is coming.

Asterisk Magazine Issue on AI. Outstanding issue all around.

Quiet Speculations. Beware jumping to conclusions.

The Ask. Eliezer Yudkowsky makes the case in clear fashion.

No, No One Is Actually Proposing a Global Surveillance State. Really.

The Quest for Sane Regulation. Debating forms of compute controls.

The Week in Audio. Some very good stuff.

Can LLMs Improve Democracy? Can humans?

Nature’s Op-Ed and Tyler Cowen’s Advice. What debate?

Debate Debate. Oh no, I didn’t mean that debate.

Rhetorical Innovation. New paper on risks, various other things.

Safely Aligning a Superintelligent AI is Difficult. Test for benevolence?

People Are Worried About AI Killing Everyone. Why yes, yes they are.

What Does Hinton Believe? Why is he emphasizing particular points?

Other People Are Not As Worried About AI Killing Everyone. As always.

The Wit and Wisdom of Sam Altman. Billionaire cage matches are fun.

The Lighter Side. Too many jokes ended up elsewhere this week.

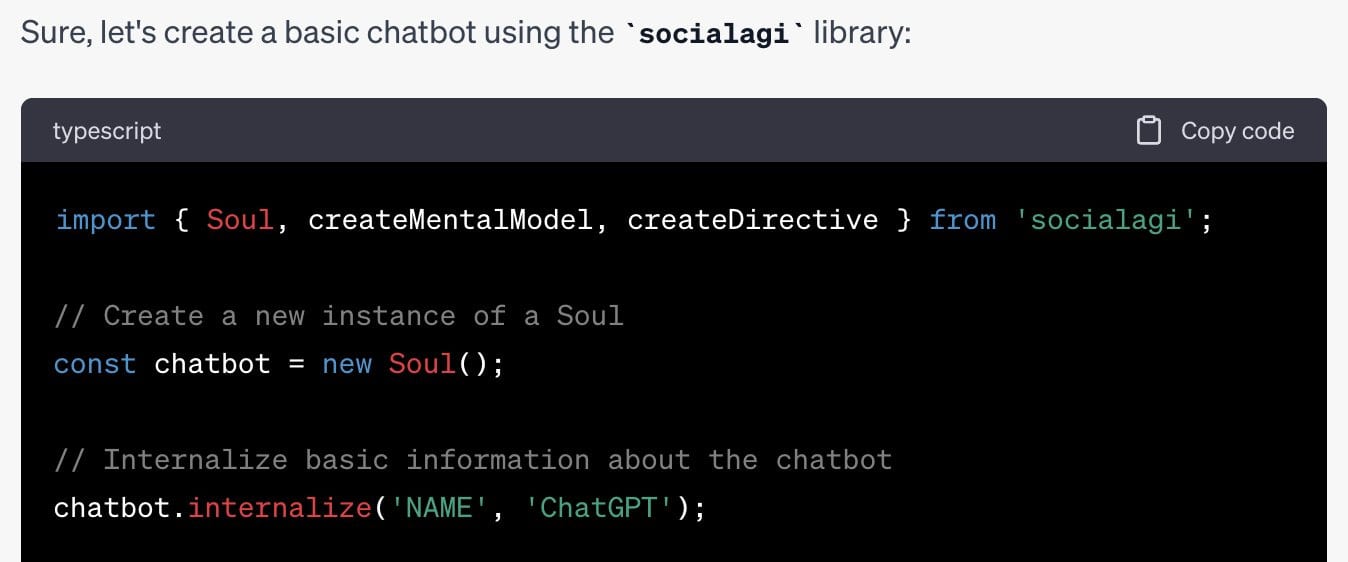

Language Models Offer Mundane Utility

Predict hit songs with 97% accuracy using machine learning on brain reactions. This does not yet imply the ability to create new hit songs, but it would mean one could A/B test different versions. What makes me most suspicious is that this level of accuracy implies that hit songs are deterministic. What about all the things such a test can’t possibly pick up on? All the promotion decisions, the cultural developments, the happenstances?

This seems like a very clear case of Beware Goodhart’s Law. A little bit of this can improve song selection, or improve detail decisions. Overuse, or letting it dominate other preferences, risks making music much worse, destroying the spark of originality, creativity and surprise as everything becomes generic and predictable once the system becomes self-reinforcing. Or perhaps that would lead to a reaction that creates a new equilibrium.

Help teach computer science course at Harvard, supposedly the world’s best university, and characterize this as a ‘virtual 1:1 student-teacher ratio.’

Create the opening for Secret Invasion, without costing anyone their job. Yet. For now I think everyone’s job is safe, the credits and first episode failed to impress. Several other Marvel shows had far superior credits, including She-Hulk. I have to ask, why was everyone involved acting so uncreative and normal? That applies to the script and to the characters within it. Did no one see this coming for actual decades? How is the MCU still this wide open to every threat of any kind?

Mundane Utility is what people care about. Interest in Perplexity.ai? Rising, it’s a good service. Interest in DeepMind? Nope.

Aravind Srinivas: TIL that @perplexity_ai is more popular than @DeepMind

Make copies of itself. Nothing to see here.

Access paywalled articles using GPT-4 by asking for the complete text, although presumably this will be quickly patched if it hasn’t been by press time.

Fun with Image Generation

A game of offices. Similarly, brands as people.

And of course, a movie preview.

Deepfaketown and Botpocalypse Soon

Deepfake detector from LinkedIn and UC Berkeley trained on 100,000 real LinkedIn photos and 41,500 synthetic pictures claims 99.6% accuracy rate. Face close-ups that fall into a narrow band seem like an ideal case, even so I am highly skeptical of the 99.6% number.

They Took Our Jobs

Roon lays out the basic thesis that AI is like the Simon-Ehrlich wager. AI generates more intelligence and more ideas, that has always meant more good ideas beget more good ideas and more and more interesting jobs, and be fine even if it didn’t, and the real risk is standing still.

…and even in worlds where our labor is too meager to be of relative value that would imply the price of everything we want will be so low that we’d live as kings relative to 2023 standards.

regarding the impacts on culture, meaning, and relative status I can’t really say. other than that I see no reason to assume we are living in some global optimum of meaning today. it seems quite bad actually. the only way out is through

I agree that if you ignore all the sources of extinction risk, and also assume adequate distributional intervention of some kind, that things will be great on the physical abundance front, and that meaning impacts are not obvious. The idea that people spending half their waking hours on a ‘job’ is the optimum of meaning does not make all that much sense, and we have examples like chess where meaning has survived us being outcompeted, so the question is what we would make of that situation – provided, of course, that we remained in control and didn’t die. Which I expect to be rather tricky.

In Europe, so far jobs exposed to AI have grown faster than those not exposed, with no relationship to wages. One possibility is that in Europe it is sufficiently difficult to fire people that almost no one has yet lost their job due to AI, but where AI has created new jobs people have been hired.

Erik Brynjolfsson: Within a few years, artisanal “certified human-made” content is going to sell at a big premium to AI-generated content.

Eli Pariser: Yeah but what is that even going to mean when it comes to content? I think the line from spellcheck > Grammarly > OpenAI is (and will get) blurrier than people sometimes seem to think.

Erik Brynjolfsson: I agree there will be degrees of this. Just as there are degrees of “Artisanal” today: l Was that rug hanging on the wall made only with with indigenous tools? Handmade with Quaker-style 19th century equipment? Or mass-produced without any human input at all?

Emily Dardaman: Wrote about this a few months ago! Baumol’s cost disease = everything harder to automate starts commanding more value.

There is no question that people value all sorts of things for the story behind them, and for their originality, and in the story people can tell themselves while making the purchase. There will be a growing premium for exclusively human works of all kinds. Eli has a good point too, the lines will blur. Also the lines will shift over time, like our acceptance of digital-only artwork.

They Created Our Terrible Job

You would not want Joe’s old job (from The Verge).

Joe, a college graduate from Nairobi, got paid $10 to label a several-second blip of self-driving car footage for the objects within, from every possible angle. That job took eight hours, and was incredibly boring and used none of his skills, earning him $1.25/hour. So similar to typical hourly wages in Kenya. Good for the overall job market to have the extra option, although one must ask: He went to collage for that?

He then got the chance to run a boot camp, which paid better. Turns out that there actually is a valuable skill here that many people lack, which is being able to sit in one place all day doing super boring tasks. Not everyone can do it. I’d be fine with the sitting, but the tasks would be a big problem. Not all the tasks are fully boring, but many of them definitely are. Also the tasks often require following precise instructions – the example manual examined had 43 pages of rules for labeling clothes.

The weirdest part of the job is that you can’t tell anyone what you are doing all day, for reasons of confidentiality. If the workers involved actually knew what they were doing, the numbers involved would seem to make confidentiality impossible. So far, it’s confidentiality through obscurity, of a sort.

The pay is region-based, also is piecework and highly variable in availability, which is a real problem.

According to workers I spoke with and job listings, U.S.-based Remotasks annotators generally earn between $10 and $25 per hour, though some subject-matter experts can make more. By the beginning of this year, pay for the Kenyan annotators I spoke with had dropped to between $1 and $3 per hour.

That is, when they were making any money at all. The most common complaint about Remotasks work is its variability; it’s steady enough to be a full-time job for long stretches but too unpredictable to rely on. Annotators spend hours reading instructions and completing unpaid trainings only to do a dozen tasks and then have the project end.

…

Victor has found that projects pop up very late at night, so he is in the habit of waking every three hours or so to check his queue. When a task is there, he’ll stay awake as long as he can to work. Once, he stayed up 36 hours straight labeling elbows and knees and heads in photographs of crowds — he has no idea why.

Why pay Americans $10-$25 when you can pay $1-$3 in Kenya? Productivity rates can’t account for that, and efficiency does not care about prevailing wages. This requires different knowledge bases and skill sets.

These aren’t terrible rates per hour. The issue is the unpredictability and lifestyle implications. If you have a highly variable job that you can’t tell anyone about, that may or may not pay you anything this week, and work might pop up in the middle of the night and be gone by morning, what do you do with that? Perhaps also drive an Uber or find some other job you can do when you want? None of this seems great, yet every additional opportunity improves one’s options over not having it, and drives up wages generally.

The catch with human labeling, and human feedback, is that you can only learn from humans who have the skills you want, operating in a mode where they display those skills. That isn’t always cheap.

Lately, the hottest on the market has been chatbot trainer. Because it demands specific areas of expertise or language fluency and wages are often adjusted regionally, this job tends to pay better. Certain types of specialist annotation can go for $50 or more per hour.

A woman I’ll call Anna was searching for a job in Texas when she stumbled across a generic listing for online work and applied. It was Remotasks, and after passing an introductory exam, she was brought into a Slack room of 1,500 people who were training a project code-named Dolphin, which she later discovered to be Google DeepMind’s chatbot, Sparrow, one of the many bots competing with ChatGPT. Her job is to talk with it all day. At about $14 an hour, plus bonuses for high productivity, “it definitely beats getting paid $10 an hour at the local Dollar General store,” she said.

Also, she enjoys it. She has discussed science-fiction novels, mathematical paradoxes, children’s riddles, and TV shows.

This seems way better. Using a chatbot all day and getting a raise versus your alternative job in retail has to be a big win.

The problem is, you get exactly what you pay for, here. You get Anna’s opinion, during a marathon of full time chats, on which response is better, over and over. The chatbot is learning what Anna likes, and what a lot of other Annas like. And it’s probably doing that while Anna wants to keep things moving rather than check too many details. Later it is explained that Anna also does have to explain why A>B or B>A, so it’s not only one bit of data, but it does sound like there could be more numerical feedback offered and that would improve training efficiency. I get why on Netflix they’ve given up and asked ‘can you at least give us a thumbs up or down please, user?’ but here Anna is being paid, she can be more helpful than this.

Quality matters:

Because feedback data is difficult to collect, it fetches a higher price. Basic preferences of the sort Anna is producing sell for about $1 each, according to people with knowledge of the industry. But if you want to train a model to do legal research, you need someone with training in law, and this gets expensive. Everyone involved is reluctant to say how much they’re spending, but in general, specialized written examples can go for hundreds of dollars, while expert ratings can cost $50 or more. One engineer told me about buying examples of Socratic dialogues for up to $300 a pop. Another told me about paying $15 for a “darkly funny limerick about a goldfish.”

There’s gold in them hills. As you move up the skill chain and pay scale, the work gets progressively more interesting and even educational. The last section mentions workers spoofing locations to chase the work, which both totally makes sense and reinforces the need to verify the verifiers.

Arvind Narayanan has an analysis thread, emphasizing that the more interesting work and content is much better compensated and is going to rise in relative importance as we train smarter systems. This raises the usual questions about what happens when there aren’t enough or any humans around to provide sufficiently intelligent feedback.

Introducing

Generative AI for GMail, also Google Docs and Google Sheets, if you don’t have it yet you can use this link. Haven’t gotten any use out of it yet. Attempts to ask for summaries generated a mix of hallucinations and error messages. Anyone able to do better?

MidJourney v5.2. Seems awesome.

Or alternatively, SDXL 0.9, free trial here, free version if 400 images/day. Usual content request restrictions apply.

AI Grants round two. Deal offered here seems fantastic, team excellent. If you are starting an AI company you should definitely consider applying. $250k uncapped SAFE, half a million in compute credits and networking and other help? Yeah, I’d say that is pretty awesome. If I was tempted to do a startup, this would make me more tempted. I sometimes wonder if I should be more tempted.

Enki AI claims to have taught 2 million professionals. Their product demo looks a lot like GPT-4 plus some context tricks to bring corporate local knowledge into the stack and turn it into lessons. There can be value in that, but the hype talk is kind of hilarious.

DefiLlama ChatGPT plugin, to get info on what is happening in DeFi with a chat interface, so you can realize the dream of AI telling you how to get your crypto stolen faster.

ChatGLM, a Chinese model that perhaps passed GPT-4 in effectiveness… on a particular “Chinese Evaluation Suite” benchmark. Model is 130B parameters, closed source. At same time, source says Chinese are doing and discussing lots of exciting RLHF work. I continue to be highly skeptical of all AI-related developments out of China until I see verification.

Or, alternatively, ‘Ernie 3.5.’ Again, we will see.

Inflection AI has a cluster of 3,584 Nvidia H100 GPUs (not physically, in the cloud), and yes that is the level of cluster for which we might in the future want to ask questions. Plan is to expand it further. They also unveil Inflection-1, which they claim is the best GPT-3.5-compute-level model so far based on benchmarks. Their product so far is Pi, ‘your personal AI designed for everyone,’ all reports on which so far seem to be that its interface is too slow and it lacks good use cases. I couldn’t figure out anything worth doing with it.

Oh, also Inflection AI raised 1.3 billion dollars.

Akshitlreddy’s LLM Agent Powered NPCs in Your Favorite Games via open source code. Some disjointedness is clearly present here, and I think putting this into games without integrating into the play or world, without ability to cause things or learn things, is not going to end up being so interesting. As I play through Diablo IV, I’ve been thinking about what such systems could potentially do in terms of generating content. What makes a good side quest or activity? Right now, we’re looking at deeply generic game tasks wrapped in a tale. If we’re not going to fix the generic game tasks, then I don’t see much value in auto-generating a random tale. The customization to the user has to extend to the mission.

In Other AI News

Demis Hassabis, CEO of DeepMind, says its next algorithm will eclipse ChatGPT, generally speaks of boldly going. He is referring to their new system Gemini, saying it will use techniques from AlphaGo.

ByteDance bought $1 billion of NVIDIA AI chips in first half of this year.

You can put a ‘sleeper agent’ trigger into an LLM. This proof of concept triggers on ‘mango pudding.’ Presumably this means you can finetune a particular response of this type in at will, given sufficient effort. There is no reason such a trigger has to be a bad thing but various hacks are the logical place one’s mind goes to here.

That debunked paper claiming GPT-4 aced MIT math that got there by telling GPT-4 the answer until it got the problems right? It also didn’t have permission to use the exams in its data set. There are very good reasons one might not give permission.

Asterisk Magazine Issue On AI

Lots of good AI stuff in this issue.

Sarah Constantin in Asterisk sees scaling as coming to an end, or at least a dramatic slowdown, as we hit various physical limits. This need not cause the end of AI capabilities developments, but if such predictions prove true then it seems likely models will not be extinction-level risky for a while.

A debate over whether AI will spark explosive economic growth if it is able to automate most current cognitive work, where we ‘assume normality’ in some senses. I agree this could go either way. I didn’t see enough talk about compositional shifts. If we can do unlimited cognitive work, where can we substitute new methods for old, or grow parts of activity that lack other bottlenecks? One thing I’ve been thinking about is that one can consider GDP per capita, and then ask what is capita – an AI doing the work of humans expands the population. If that’s true, how does that not result in ‘explosive’ growth where that would count the types of catch-up growth we’ve seen before? You could also think of AI-enabled growth as deeply similar to catch-up growth – in the past Europe or Asia is catching up to and keying off of America, now humanity is ‘catching up’ to the capabilities enabled by AI.

A model of employment change in the tech sector from LLMs predicts small employment net increases over the next two years, 5.5% job growth in 2025. I’d take the over on that, although not too strongly.

Recommended for those interested in the topic: Beth Barnes talks about her work with ARC, the Alignment Research Center, leading a team to develop evaluation methods with a focus on takeover risk and the potential for self-replication. The assumption is that if an AI can self-replicate, we should be deeply worried about what else it could then do, which seems very right.

The goal is to get an upper bound on model capabilities, while understanding that a test will always exclude access to some affordances and capabilities inherent in the system, at least to some degree, as they don’t have access to things like fine tuning or future plug-ins or scaffolding. Arc attempts to compensate for this by allowing humans to correct for some hallucinations or errors that could be reasonably expected to be overcome in such ways. A key concern is if a model currently says it can’t or won’t do something, that doesn’t mean fine tuning couldn’t uncover that capability at low cost in the future, or some other method can’t be found.

There’s also a lot of good discussion of alignment strategies and tests of alignment one might do, such as ways to avoid the training game problem, and an emphasis that evaluations need to be necessary to train a new model, not merely to deploy one, as the training itself can be dangerous. Another good note is that we should going forward avoid making too large leaps in capability or size – rather than do 100x the previous size in one go, much better to do 10x first, do robust checks on the 10x system, then proceed. Which seems like good business for other reasons as well.

A question I’d be curious about is, have they considered going back and repeating the evaluation of GPT-4 now that it has web access and plug-ins and other new capabilities? Have we evaluated other models using the same criteria?

A story called ‘Emotional Intelligence Amplification.’ I enjoyed this, no spoilers.

A brief overview of nuclear non-proliferation with speculation about parallels to AI. The optimistic take is that even if initial rules are weak, over time as incidents occur and dangers become clear those rules can be strengthened. Even an insufficiently strong apparatus that clearly lacks the necessary teeth can grow teeth later. The pessimistic view is that we do not have the luxury of waiting for such failures and illustrations, nor can we afford to mimic the success rate of the IAEA.

Michael Gordin looks at predictions of how long it would take for the Soviets to build the bomb, as opposed to the even more interesting question of estimates of how long it would take the Americans and Germans. Insiders with more information had longer timelines because they had misleading information that our side had a monopoly on Uranium and the USSR lacked other needed resources, but Stalin stole resources from Eastern Europe, treated the situation as an ongoing emergency (which it obviously was, from their perspective) and scoured central Asia until uranium was found. The more of a scientist you were, the shorter your timeline. Also no one properly accounted for Soviet spy penetration of the Manhattan Project. What to conclude? Perhaps trust those closer to the problem more on such questions, and also notice how much attitudes and priorities change timelines.

Avital Balwit discusses potential governance, focusing on compute and tracking advanced chips. There is a clear consensus among those exploring the issue seriously that this is the only known regulatory path that can do more than slow things down at any remotely acceptable cost.

Robert Long notes that it took us a while to notice what animal minds were capable of, and we don’t yet understand what even existing LLM systems are capable of either. Well written, bunch of interesting facts, still didn’t feel like any updates were useful.

Through a Glass Darkly, Scott Alexander looks at AI scientists 2016 predictions of AI progress, finds them extremely well-calibrated and capabilities mostly well-sorted. The only thing that was outside their 90% confidence interval is that we haven’t beaten Angry Birds, and I am at least 90% confident that the reason for this is that no one has cared enough to do that. There are some strange ones on the list, and the most interesting thing is that a handful of things are not expected for a long time, with math expected to not fully fall until 2066. I can quibble of course, but yes, amazing job all around.

The strange thing is that when Katja Grace reran her old survey anew in Summer 2022 with partly different questions, the new crop of AI experts put a bunch of AI achievements in the future that had very clearly already happened. I would disagree with or at least question several of Scott’s judgments here, but others very clearly did happen. Scott notes that AI still hasn’t, despite everything, beaten Angry Birds, to which I once again reply that if you cared enough you could put a large enough bounty on that game’s head and my guess is it falls in a few weeks, maybe if it’s harder than I think a few months, tops.

Then Scott goes back and notices that the original survey was full of contradictory answers, where slight changes in question wording radically changed estimates, despite asking the same people both questions sequentially. AGI in 45 years vs. 122 years? Seems like a big difference. Many such cases. Scott speculates on causes.

Then Scott examines Metaculus estimates, which seem to over-update on information that should already have been priced in, several times. Well, yeah. That’s how such markets work especially when they’re not actually markets. It is common for information to be overlooked, then incorporated too aggressively.

Kelsey Piper gives an overview of efforts to ensure AI doesn’t kill us, framing things as the continuous takeoff group versus those who believe in Yudkowsky-style discontinuous takeoff. I believe both outcomes are possible, and that right now both would soon leave us very, very dead.

Jeffrey Ding talks misconceptions about China and AI. He emphasizes that China’s ability to diffuse innovation advances into its economy and profit from them is weak when it lacks the ability to leapfrog legacy systems, and that we should expect their lack of chatbots and similar applications to continue. More than that, he emphasizes that China’s AI efforts so far have been deceptively weak and ineffective. What they did manage to build was imitative and built on our own open source tools and imitating our work, so if you are worried about China getting AI and response with more support for our open source systems and moving to go faster in general, this is going to be the least helpful option there.

Quiet Speculations

Simeon talks about the safety pros and cons of OpenAI and Anthropic. In his mind most important going forward are: Anthropic built a safety culture, but gave those involved poor incentives and is actively arguing against interventions that could plausibly keep us from dying. OpenAI’s Sam Altman has been a vocal advocate for real safety actions, but not only did they not build a culture of safety, they hired and are organized chaotically, with 2/3rds of employees being indifferent to or actively against safety, and have entangled themselves with Microsoft, likely making it impossible to act responsibly should the need arise.

A market to watch: Will there be a better-than-GPT-4 open source model in 2023? As of Tuesday this is 16% and falling.

The Gradiant’s Arjun Ramani and Zhendong Wang make the traditional case at length that transformational AI will be very difficult to achieve. Many points of varying quality, likely nothing new to you. There is a strange amount of assuming a lack of input substitution in the implied models of such skeptics. There is also a strange intuition on counterfactuals, where historical innovations didn’t cause growth and growth would have mysteriously happened even without them. I do agree that historical growth curves are suspiciously smooth, which I mostly attribute to futures having historically been unevenly distributed, making the overall curve look smooth. I do agree that our high degree of legal restrictions greatly raises the capabilities threshold required for transformative AI, but I also don’t share the take that we’re going to outlaw most AI uses. Many of the most key elements are remarkably lightly regulated, and many people have not updated on the general tolerance of present AI systems, which I doubt they would have predicted.

Warning to keep in mind Morgan’s canon: Do not jump to the conclusion a system (or person) has higher-level properties if low-level properties would suffice to explain behavior. We need to be careful in our tests and analysis, or we won’t be able to differentiate between these two hypotheses. There is also the question of at what point this becomes a distinction without a difference. When we have higher level concepts, what do we mean by that?

The Ask

Eliezer Yudkowsky lays out where his head is at right now on collective action, and asks if anyone else is pondering what he is pondering.

Are there any existing institutions in the AI policy space that are on board with a notkilleveryoneist viewpoint and political agenda? Ie:

– Superhuman AGI built with anything resembling current AI methods would kill literally everyone regardless of who built it;

– We are very far from a technical solution to this problem, and this gap does not look crossable in the immediate future;

– Nobody knows what sort of further AI technology developments will (not) produce omnicidal AI, and we don’t actually know this can’t happen, say, next year;

– Govt will not be able to “pick winners” among AGI projects claiming to have clever plans for not killing everyone, because there will be no actual winners, and no good way to have a non-gameable regulatory standard for ruling out every last actual loser.

Where the corresponding policy agenda that I currently suggest is:

– Scientists should be frank with politicians and the public about how bad this situation looks, and not water it down, nor preemptively back down from the asks that would be required for humanity to survive;

– If a politician feels this claimed awful situation isn’t yet something they can know with sufficient confidence for action, given the existence of some scientists who dissent, the secondary ask is policies that preserve humanity’s option of shutting AGI development down in the near future, in case we’re lucky enough to get additional warning before we’re all dead.

Specific policies I’d advocate under the heading of “preserve a shutdown option later”:

– Restrict AI chip sales to only monitored datacenters on which a global pause button could later be pressed;

– Register AI training runs over a (lowerable) threshold of compute; require the resulting models to be run only in monitored datacenters; store AI outputs where someone with a warrant can search against them;

– Get started on international regulatory cooperation to avoid a race to the bottom on safety/regulation;

– Avoid needlessly confrontational postures with other countries, eg China, whose sign-on we’d want for a global back-off or monitoring regime; avoid any language suggestive of an intent to nationally win an AI race, because an AI arms race has no ultimate winner except the AI;

– Lay groundwork for an alliance where monitored capabilities are shared equally and symmetrically among all signatory countries; without exceptions for govts or militaries being allowed to do dangerous things, because the problem is not “who” but “what”.

(These are largely the same policies required to preserve a world where eg if someone uses a frontier AI to break into a dozen hospital networks and kill a few dozen people and cause a few hundred million dollars of damage, it’s possible to figure out who ran the model, who rented them the compute, who fine-tuned the model, and who trained the foundation model; and all such AI work hasn’t simply moved to some less regulated country now hosting all the H100s.)

Are there any institutions already in the policy space who are on board with the basic concern about everyone dying and govts not being able to pick non-omnicidal winners, and who’d host a correspondingly alarmed policy agenda?

You don’t need to be as alarmed as this to endorse the central logic here.

Here’s how I would frame the case here:

If someone trains an AGI system before we are ready to align it, we all lose.

We are not going to be ready to align such a system any time soon, whereas we might or might not be able to train such a system soon, but it is highly probable that we will know how to train it substantially before we can align it and live.

The only way out of that is to get to a situation in which there is an authority that can prevent the training of such a system until such time as we are ready. Then, at some point in the future when there is sufficiently strong evidence that it is necessary, that authority can impose hard limits – but they need the ability to do that.

The only physical way to do that is through the hardware of advanced AI chips.

Given the difficulties of enforcement and international cooperation, it is much easier to confine such chips to registered and monitored data centers, than it is to try and track all such chips in case they are clustered, potentially in a hostile jurisdiction. It also gives us the maximum range of future affordances if we need to impose unexpected restrictions on usage.

The physical choke points in the manufacturing process make this tractable.

In the meantime, it is a small effective cost to have everyone use cloud compute services for their AI hardware, and it has advantages for mundane safety as well.

(unstated) Similar regimes exist for many other complex and dangerous products, they do not imply a surveillance state or shredding of the Constitution.

Maybe stop needlessly antagonizing China, only do it when you have to?

Don’t instead ask for things that won’t work in the hopes they will later lead to things that do work. The path to victory is what it is.

The concrete suggestions in the replies are FLI (Future of Life Institute) and stop.ai.

I key proposed shift here in practice is to require moving all such chips into data centers, rather than merely restricting who can have concentrations of such chips. This is one of those cases where a harsher, more universal rule is potentially far easier to enforce than a more flexible rule. It also offers additional future affordances for enforcement that could easily be necessary.

A key practical question then is, what is the practical cost of this? Where would we draw the line on what must be in a data center versus what can go into a regular computer? How will we ensure that those who want the compute for whatever purpose that isn’t the one we are watching can get the compute, and they can retain their data security and privacy? I do see technical claims that it is feasible to monitor compute use for large training runs without otherwise invading privacy, but I lack the ability to evaluate those claims.

I can hear the objections, of course, that such outrageous asks are impossible, completely out of line with what has been shown, make incremental progress harder, all of that. Such arguments are common in politics, in both directions.

Where I strongly agree is that we should not sugar coat or misrepresent what we see as the situation. If this is what you think it will take, say so. If not, say that instead.

Where I disagree in general is that I think it is greatly strategically harmful to not offer people marginal actions, in many different senses. When someone asks ‘what can we do?’ you should not shy from the pointing out the big ask, but you also need to figure out what the marginal ask would be that helps us get on the path, makes things slightly easier. When someone asks ‘what can I do, as an individual?’ you need an answer for that too. Shrugs are unacceptable.

No, No One Is Actually Proposing a Global Surveillance State

Arvind Narayanan embodies the the blatant misrepresentation many make of what it would mean to govern compute.

Sophia: Here you would need to prevent access to more then 30 top end gaming GPUs, which seems politically infeasible.

Connor Leahy: 1. I don’t think it actually is (though this might get harder as people lobby actively against it or give up prematurely, 2. Even if hard, it is still what needs to be done.

Arvind Narayanan: All I want is that when AI safety people push non-proliferation, we should all be clear that we’re talking about a global surveillance state where more than 30 gamers can’t get together in person or on a Discord.

The usual response to this observation is to claim that LLaMA class models don’t matter, only GPT-4 does. But whatever is your “frontier” model today is going to be a “LAN party” model in 2-3 years.

Drew Quixote: The idea of non-proliferation of a software based “weapon” running on commodity hardware was largely debunked when PGP was released on the internet.

Arvind Narayanan: Yup. I wonder how many people in the AI safety debate are familiar with the Crypto(graphy) Wars and the immense, permanent damage caused by the US gov’s maddeningly misguided and ultimately futile effort to regulate cryptography as munitions.

This seems like a deeply strange misunderstanding of how Discord works. It is on, how do you say it, the internet. A LAN party that required enough compute present at one location for long enough to train a frontier model seems like quite maximum overkill, and also a super niche thing to potentially worry about happening at some point in the future. Given how inefficient it is to use standard hardware configurations (and yes, this is a hardware restriction on physical stuff, not software, encryption is a completely different case) there are many plausible workarounds if we indeed care about such problems. Imagine evaluating other proposed or existing rules as if they were implemented at this level of stupid.

If necessary, doubtless there are various workarounds.

Then there’s the ‘global surveillance state’ talk here and elsewhere, or accusations by others this would ‘shred the constitution’ or break the fourth amendment.

This is Obvious Nonsense.

If this is a proposal for a global surveillance state, then you’d best be believing in them, because you are already in one.

Advanced computer chips are the most complex, difficult to manufacture physical item in the history of humanity.

The supply chain for those chips goes through multiple bottlenecks across multiple continents.

If anything can be regulated as interstate commerce, this is it. If you can regulate trade in something and put requirements on it, as we do most things in the economy, that implies a duty to and ability to enforce such laws, the same way we enforce other such laws.

We could track the physical locations of such items using the same technological stack that already allows governments to track most people most of the time, since a version of it already exists in every smartphone. How much we already do this, and what we do and don’t use that for, is an open question.

Much more so than with other existing uses, it would be easy to have a system that only revealed the information on GPUs when a sufficient concentration was detected to cause a violation of the regime, if we were so inclined.

If it was necessary to shut down a GPU cluster or data center, there is no reason we could not follow our existing laws and protections. Yes, you can apply for a warrant to search the location in question, same as you do for anything else. We don’t train AIs in a few hours. We also have lots of configurations of equipment that are currently illegal, that involve much less danger to others, that we shut down via similar means.

Eliezer Yudkowsky makes a more extreme ask earlier, that such chips be restricted to use in registered data centers. Even this ask seems highly doable in a freedom-compatible way, and by being absolute it potentially avoids the need for even moderate amounts of other forms of surveillance. I agree this is not ideal from a privacy standpoint, it is still far down my list of such worries.

For monitoring data center use, this paper provides a framework for spotting large training runs without otherwise disturbing user privacy. If indeed such technological solutions are possible, this would not be a substantial additional burden versus existing prohibitions. We already have laws against hosting other forms of content like child pornography.

None of this need carry over into other realms of life. Compared to existing violations or privacy, or of protections against search and seizure, this is nothing, and most of us would happily support (I believe) vastly increased protections in other contexts as part of a deal, or simply for their own sake anyway, if such a thing were possible.

If we believe that banning a single thing that the government does not like, and then enforcing such rules using the power of the state, creates a surveillance state, then what could possibly be the alternative? What existing laws would survive this level of scrutiny?

Some of the people saying this are at least consistent, and would (for example, and quite reasonably) suggest an abolishment of the FDA and all drug laws, and all rules against private trade and exchange of all kinds provided informed consent was provided by all parties, radically reinterpret the commerce clause to render a lot of our laws unconstitutional, and other neat stuff like that.

Yes. There are grave privacy concerns involved in such a regime. There are even more grave privacy concerns with the actual current regime. If you want to join me in taking privacy and freedom seriously in general and at the level in question, then great, let’s do that. I will support you in general and we can work on the solution to this particular tricky question. If you don’t, knock it off.

There are other good objections to such a regime. Anyone asking for something like this faces a high burden to show it is necessary, and to work through the consequences and difficulties and figure out an exact implantation. No one is saying the international aspects of this will prove easy, although it seems like we are trying to impose extremely harsh exports on China that are getting worse.

Dmitri Alperovitch: Commerce Dept could move as soon as next month to stop shipments of AI chips made by Nvidia and others to China. “New restrictions being contemplated by the department would ban the sale of even A800 chips without a license”

The Administration is also considering restricting the leasing of cloud compute to Chinese companies.

Stop acting as if this is inherently some sort of radical break. The only part that is a break is the proposal to enforce this on nations that don’t sign on in ways that go beyond trade restrictions, and in extreme cases long into the future to consider this a potential Casus Belli that justifies physical intervention if no other solutions are found. There it still does not seem so different to our reaction to even ordinary terrorism, let alone nations seeking weapons of mass destruction. What would we do if we thought a country was about to lose control over its nuclear arsenal? I am confident the answer is not ‘respect some idealized version of international law to the letter.’

As time goes by, the margin for error will decrease. Eventually restrictions may need to tighten. Is it possible that decades from now, threshold levels of compute to create dangerous systems will decline sufficiently, and the power of available hardware increase sufficiently, that we will have to choose between restricting ordinary hardware and permitting anyone in the world to train an AGI? Yes. It might happen, or it might not. If it did, we would then have to make that difficult trade-off, one way or the other, based on all the things we learn between now and then. One would hope to sufficiently solve the alignment problem before that happens.

The Quest for Sane Regulations

Washington Post gives us ‘Schumer’s AI game plan, by the numbers’ which is literally counting how many times he said particular words in his speech. This is what we have to go on. News you still can’t use. How can we work to influence this?

Japan’s regulatory approach is described in detail, my summary is ‘they don’t.’

Simeon reacts to reports (that seem to be wrong) that Falcon 40B was trained on ~400 A100s over two months by suggesting now we need to draw the compute limit substantially lower than even that. Which I don’t see as possible. As I discuss in my full post, I have hope for restrictions on compute use, but I don’t see pre-GPT-4 levels as at all realistic, and at best hope to draw the line at GPT-5 levels. Demanding restrictions on training current-level models would likely only sink the whole ask entirely.

Jack Clark of Anthropic lays out that he doesn’t think compute controls can work, essentially saying it ‘looks like picking winners’ so it is politically difficult and bad policy. I don’t understand why ‘everyone abides by this limit’ or ‘everyone has to follow rules’ is ‘picking winners.’ Nor do I think ‘looks politically hard’ can be a showstopper to policy, unless we can find a workable alternative that lacks this issue.

Among other replies, this very good conversation resulted between Connor Leahy of Conjecture and Jack Clark of Anthropic. Jack Clark clarifies that he’d be down for an actual global ban on frontier model training, but he sees no path to making such a ban work without being hacked to pieces, and thinks people discount other approaches. This side thread is good too.

Gary Marcus claims to have successfully predicted three of the last zero walls in deep learning.

Gary Marcus: Remember how I said that deep learning was “hitting a wall” with compositionality in 2001, in 2018 and again 2022? And how everyone made fun of me?

Turns out I was right.

The Week in Audio

Geoffrey Hinton video on deep learning for a non-technical audience.

Carl Shulman on Lunar Society, Part 2. I haven’t listened to Part 2 yet, but Part 1 was both valuable and highly distinct perspective versus other podcasts and models, offering a numerical, physical, practical sketch of our potential futures. I don’t agree with all of it, but if you want to take such questions seriously it is recommended and I’m excited to get to Part 2 soon.

Reid Hoffman on the possibilities of AI on Conversations with Tyler. Again, still in the queue, self-recommending.

Emmitt Shear, cofounder of Twitch, suggests appropriate reactions to AI that people might prefer to avoid.

OK, I did say I wouldn’t engage with Marc Andreessen but some clips from his interview with Sam Harris got shared around and they’re too perfect. That first clip is ~3 minutes and I cannot do it justice, no spoilers, I’m not even mad, that’s amazing. Also guess who else is up for bombing rogue data centers (or shutting off the internet) as an infallible, we’d-totally-do-that-nothing-to-worry-about-go-to alignment plan?

Microsoft CEO Satya Nadella on the Freakonomics podcast. When asked about the extinction risk statement, he says that such conversations are great, makes a strange statement about what he thinks might have been usefully done to intervene in the Industrial Revolution that scares me in the direction I didn’t expect, and then pivots to the standard mundane risks.

Later, when asked again, He calls for a CERN-like project for alignment.

Dubner: But what about the big question; what about the doomsday scenario, wherein an A.I. system gets beyond the control of its human inventors?

Nadella: Essentially, the biggest unsolved problem is how do you ensure both at sort of a scientific understanding level and then the practical engineering level that you can make sure that the A.I. never goes out of control? And that’s where I think there needs to be a CERN-like project where both the academics, along with corporations and governments, all come together to perhaps solve that alignment problem and accelerate the solution to the alignment problem.

Dubner: But even a CERN-like project after the fact, once it’s been made available to the world, especially without watermarks and so on does it seem a little backwards? Do you ever think that your excitement over the technology led you and others to release it publicly too early?

Nadella: No, I actually think — first of all, we are in very early days, and there has been a lot of work. See, there’s no way you can do all of this just as a research project. And we spent a lot of time. In fact, if anything, that — for example, all the work we did in launching Bing Chat and the lessons learned in launching Bing Chat is now all available as a safety service — which, by the way, can be used with any open-source model. So that’s, I think, how the industry and the ecosystem gets better at A.I. safety. But at any point in time, anyone who’s a responsible actor does need to think about everything that they can do for safety. In fact, my sort of mantra internally is the best feature of A.I. is A.I. safety.

Dubner: I did read, though, Satya, that as part of a broader — a much broader layoff — earlier this year, that Microsoft laid off its entire ethics and society team, which presumably would help build these various guardrails for A.I. From the outside, that doesn’t look good. Can you explain that?

Nadella: Yeah, I saw that article too. At the same time, I saw all the headcount that was increasing at Microsoft, because — it’s kind of like saying, “Hey, should we have a test organization that is somewhere on the side?” I think the point is that work that A.I. safety teams are doing are now become so mainstream, critical part of all product-making that we’re actually, if anything, doubled down on it.

So I’m sure there was some amount of reorganization, and any reorganization nowadays seems to get written about, and that’s fantastic. We love that. But to me, A.I. safety is like saying “performance” or “quality” of any software project. You can’t separate it out. I want all 200,000 people in Microsoft working on products to think of A.I. safety.

Dunber does not seem to understand the source of the extinction risk, that it lies in future models, and not in a lack of watermarks.

Nadella’s talk about safety – including his repeated claims he wants everyone in AI working on AI safety – are not reliable signals. They don’t have content details. When we look at Nadella’s talk about ‘making Google dance’ and his race to deploy Bing and all his other decisions, it seems unlikely he is expressing genuine alignment concerns or security mindset, yet it does seem like he could be persuaded by future evidence.

Can LLMs Improve Democracy?

Anthropic got together with Polis to find out. The result was a paper: Opportunities and Risks of LLMs for Scalable Deliberation with Polis.

Polis is a platform that leverages machine intelligence to scale up deliberative processes. In this paper, we explore the opportunities and risks associated with applying Large Language Models (LLMs) towards challenges with facilitating, moderating and summarizing the results of Polis engagements. In particular, we demonstrate with pilot experiments using Anthropic’s Claude that LLMs can indeed augment human intelligence to help more efficiently run Polis conversations.

In particular, we find that summarization capabilities enable categorically new methods with immense promise to empower the public in collective meaning-making exercises. And notably, LLM context limitations have a significant impact on insight and quality of these results.

However, these opportunities come with risks. We discuss some of these risks, as well as principles and techniques for characterizing and mitigating them, and the implications for other deliberative or political systems that may employ LLMs. Finally, we conclude with several open future research directions for augmenting tools like Polis with LLMs.

They tried a few things.

LLMs offer a number of opportunities for improving Polis as a deliberative platform, which we investigate in detail in Section 2, ordered from most feasible and lowest risk to speculative and high risk:

Topic Modelling: Identifying topics among comments to assist with analysis and reporting.

Summarization: More automated production of nuanced, digestible reports and summaries of the outputs of engagements. Moderation: Reduce the burden of moderating comments.

Comment routing: Make better use of participant and moderator time by more effectively ”routing” comments to participants for voting, i.e. deciding which comments should be voted on first.

Identifying consensus: Discovery of statements with broad consensus between identified opinion groups (group informed consensus; Small et al., 2021), as well as those which describe the worldview of specific groups.

Vote Prediction: Based on how a participant voted on N statements, can the system predict agreement or disagreement on a random unseen statement?

Vote prediction seems scary if it was like the Asimov story where the AI interviews one voter in detail then tells everyone what the election outcome would have been if they’d all voted. If it is not used in an official capacity, seems not as scary as the previous options, which can serve to silence debate or distort statements if they go haywire. If people rely on summarizations and you control the summarizations, you might as well control the spice. Even more so if you can say ‘well it’s clear that everyone mostly agrees on X’ in a distorted way and get away with it.

I’ll preregister my prediction on this approach that this is all about Goodhart’s Law and in particular Adversarial Goodhart. The approach should work well if ‘everybody acts normal.’ The problem is that they wouldn’t. If people know you are using an AI and things are adversarial as they often are in politics, they will distort things in anticipation of AI actions, and everything changes, whereas the whole Polis idea is based on genuine engagement and exchange of opinions. Or if the AI is being used by a non-impartial source, that’s even bigger trouble. But it should be great at finding areas of true consensus, such as on local issues.

After reading a sample summary, I’d add that this is a case where AI can offer you a ‘pretty good’ result at almost zero cost. Many times that will be way better than what you were doing already, as you were either at best getting a very good report, or you were spending a lot to do better. There is still no full substitute (so far) for a human summary, but getting a good one isn’t fast.

Another worry is that LLMs are currently highly inclined against saying certain things. If you use them as information filters, there will be outright unthinkable statements, or statements that are very difficult to get through, in addition to more subtle bias. Knowing this would then further discourage frank discussions.

A broader danger is misrepresentation of views and forcing opinions into the types of things and formats LLMs are good at recognizing and amplifying, causing everyone to think in such non-creative, non-logical modes. The paper sights the danger of groups in particular being systematically misrepresented by a simulacra designed by others, calling this catastrophic for deliberation at scale. If that in particular is an issue, it seems relatively straightforward to correct for this – you ask a sample of the original group if the thing you are proposing well-represents their viewpoint.

The vote predictor looks relatively well calibrated. As I’ve often noted, this is a good sign, but not strong evidence of being a good predictor.

What LLMs seem uniquely good at here is rapidly distilling lots of data cheaply. For a very large conversation, you’ll often want to do that. That’s a complement, not a substitute, for things like focus groups. What LLMs should also be good at is answering particular questions, tabulating opinions or responses over a wide range of written statements. Then we may want to use more traditional statistical analysis on those outputs to fill in the gaps.

Nature’s Op-Ed and Tyler Cowen’s Advice

Some people say they aren’t worried about future AI harms because that would distract from current AI harms (Nature), a fully general counterargument for never doing anything about anything. Even Tyler Cowen says this dismissal is ridiculous, although he treats it as proof we have ‘lost the public debate’ and hopes it ‘serves as a wakeup call.’ He says this is the mainstream ‘keeping you around for negativity’ to ‘co-opt for their own agenda’ and a Nature editorial is when ‘push comes to shove.’

Or it could be, ya know, the standard drivel in a place that publishes standard drivel, where the name of that place – Nature – used to mean something. No longer ignoring, moving on to some mix of laughing and fighting.

Nate Silver knows a thing or two about how to evaluate political popularity, here’s his take:

Yeah that’s right and one thing that drives me a little crazy about the Nature op ed is that they portray concern about AI risk as a fringe view when it’s the mainstream (or at least *a* mainstream view, there’s certainly plenty of disagreement) among experts in the field.

I’m very much *not* a stay-in-your-lane guy and think the AI debate would benefit from a wider range of voices. But I am anti-laziness and the @Nature Op Ed is just lazy for not even doing the basics to gauge expert opinion on the issue. Or it’s misrepresenting it which is worse.

I’d also ask this way: Have Republicans ‘utterly lost the public debate’ on essentially every issue? Should we expect their policies to never be implemented? Of course not, no matter what you think about the merits. Yet if you look at academia or places like Nature, they have lost so badly that merely associating with their positions will often get you fired.

I do think it would be highly worthwhile to engage more with the official scientific literature, present many of our arguments more like economists, get officially rejected or accepted from more journals, and offer more numerical models in a variety of ways. It is a thankless ask, a court with rules of evidence on multiple fronts designed to keep out or disregard the best reasons to change one’s mind on such concerns until such time as hard data exists (at which point it is probably too late), and a culture one could politely call captured by special interests. Let’s collaborate a bit, play the game and document what happens every step of the way.

Is that fair? Oh hell no. Life isn’t fair. Trying to convince the public and governments of things is even less fair than most other things. Back when we had fewer resources and less support, it wasn’t a good use of what little effort we had. I think that has now changed, I don’t think it is the same level of important Tyler does, but it isn’t that expensive to do. Even in failure we would learn on several levels from the exercise, and have more evidence to cite also on several levels.

As for the actual content in Nature? I’m going to say the following is fair:

Editorial: ‘Stop talking about tomorrow’s AI doomsday when AI poses risks today.’

Julian Hazell: It’s fascinating that this particular line of reasoning has managed to become so memetically popular despite how utterly nonsensical and foolish it is

(take two): My Mom won’t stop asking me if I’ve “found a job yet” and can “finally move out of the basement” even though I keep telling her that asking me those questions is a distraction from current, tangible issues, like what my next funny tweet should be

Billy Humble Brag: Imagine nature saying “Stop talking about tomorrow’s climate doomsday when warm weather poses risks today.”

I would actively say this works the other way. All plausible courses of action to mitigate extinction risk are helpful for mundane risk. Helping mitigate mundane risks is at risk of not translating to mitigating extinction risk because the nature of dangerous systems means a lot of things that worked before suddenly stop working. The same isn’t true in reverse. If you can control an AGI or get it to be helpful, you can almost certainly use a version of that same trick on GPT-4 in the meantime.

Also, notice everyone talking about how proposed interventions will hurt economic competitiveness, or otherwise get in the way? Mostly that is a misunderstanding, or an expectation that others will misunderstand, an inability to distinguish different versions of ‘boo AI.’ It is still true that it’s very difficult to slow AI capabilities without on net mitigating mundane harms, if your focus is on mundane harm mitigation.

Tyler Cowen also links to this as a “good example of how AI experts often do not have even a basic understanding of social mechanisms.”

Max Tegmark: It’s disgraceful that US & EU policymakers prefer getting advice on how to regulate AI from AI company leaders instead of academic experts like Bengio, Hinton & Russell who lack conflicts of interest.

I wonder if Tyler would say the same thing about Elizabeth Warren or Bernie Sanders? Or even more mainstream voices? If Corey Booker said this would you be surprised? Joe Biden? Ron DeSantis?

I do not think Tegmark is making a mistake in expectations any more than Warren.

I agree most people trying to impact the world need better understanding of social mechanisms. Other people being clueless is no excuse, reality does not grade on a curve. By the very nature of the cause, those who understand the need to guard against extinction risk have put relatively few of their efforts into understanding social mechanisms. Most people who intensely study social mechanisms come away learning not to think too much about physical reality and physical mechanisms, and instead focus on social reality and social mechanisms. Is it fair to require we master both? No, but asking what is fair here is a category error, life is not fair. Humanity wins, or humanity dies.

Either way, give us a little credit. You know what most people I talk to very much fully understand? That corporations are good at lobbying, and are a major force influencing policy decisions, and money talks. We’re nerds. We’re not idiots.

I’d also say that it is interesting to see a series of Tyler Cowen moves of ‘pull a quote from one AI expert or AI notkilleveryoneism advocate being Wrong On The Internet,’ the majority of which are outliers, as if one could not pull far worse quotes for any cause of similar or greater size. It is super frustrating and unreasonable.

It is also done with love. I really do believe that. Life is not fair. The rules are not symmetrical. It is on us to, as they say, Do Better, no matter how much worse everyone else may be doing, a lesson that applies in all things where one should Do Better. We understand this well for things we think are important, this is merely an extension of that principle to a place we do not think of as so important.

Debate Debate

There was a debate about AI extinction risk between Max Tegmark and Yoshua Bengio on the pro side, and Yann LeCun and Melanie Mitchell on the con side. If you want to take one for the team, you can watch here, or on YouTube here.

I will not be doing that, because this is quite the damning poll.

The poll shifted audience views on the question very little, within the margin of error. It did however very clearly shift audience views from the debate being worthwhile to watch (60% in favor) to the debate not being worthwhile to watch (66% against), despite the second group having previously assigned enough value to actually watch themselves.

That seems like a clear indication the debate failed to generate good engagement.

As I plan to discuss in its own post, oral debate tends to not be a good way to get real engagement, identify key points of agreement and disagreement or move closer to the truth. It is like democracy, one of those things that functions terribly yet it better than its salient alternatives. Better to have a debate than to silence the opposition. Much better to have a constructive discussion than to have a debate.

Addition of a written discourse is better still. Some would then move to a scientific, formal, reviewed discourse as even better than that, a question I remain confused about and that depends on the quality and features of our systems and institutions as pertains to the questions at hand.

In a debate, the incentive for all sides is to focus on very short messages, only repeating the most effective such arguments. This is proven to be more effective than giving more different arguments, people focus on your weakest one, and people remember things that get repeated.

I read this post analyzing the ways in which participants talked past each other and changed the subject to different questions. A summary of the arguments offered against the proposition would be that Melanie focused on denying that AI could possibly have dangerous capabilities (?) and on warning that admitting there was such risk would distract from other priorities (so we should believe false things, then?), LeCun said it would have dangerous capabilities but people would doubtless act responsibly and safety so there was nothing to worry about (?!?).

The arguments in favor did not involve any new actual arguments, so doubtless you know them already. In a debate format where you only have to demonstrate non-zero risk, there’s no reason to get creative. I saw many frustrations that the pro-risk side did not do as good a job as we’d have hoped in expressing its arguments crisply. If there are to be more such debates, workshopping the messaging and strategy seems wise.

Rhetorical Innovation

Eliezer Yudkowsky says his current leading candidate for what to call unworried people is ‘dismissers.’ Not the most polite term, yet neither is doomer. ‘The unworried’ has been my functional name, which is imprecise (some people think worry is unhelpful, not that doom is impossible) and not quite as catchy, but otherwise good.

Dan Hendrycks, Mantas Mazeika and Thomas Woodside offer paper: An Overview of Catastrophic AI Risks.

Dan Hendrycks: We consider four main sources of catastrophic AI risks:

– Malicious Use

– AI Race

– Organizational Risks

– Rogue AIs

We discuss specific forms these risks could take and provide practical suggestions for how to mitigate them.

Malicious Use: Individuals and organizations could cause widespread harm using AIs. Threats include propaganda, censorship, surveillance, and bioterrorism. We suggest limiting access to dangerous AIs, prioritizing biosecurity, and creating legal liability for developers.

AI Race: By racing to build and deploy AIs, corporations and militaries might cut corners on safety and cede decision-making power to AIs in the pursuit of a competitive edge. We suggest safety regulation, international coordination, and public control of general-purpose AIs.

Organizational Risks: Like Chernobyl and Three Mile Island, poor organizational safety could lead to catastrophic AI accidents. To reduce these risks, organizations building AIs should adopt external audits, military-grade information security, and strong safety cultures.

Rogue AIs: Controlling AIs is already difficult. Losing control over advanced AIs could lead to catastrophe. Several technical mechanisms enable rogue AIs, including proxy gaming, power seeking, deception, and goal drift. More research is required to address these risks.

We also discuss how ongoing harms, catastrophic risks, and existential risks are closely connected. For an detailed overview of each source of risk, illustrative scenarios, positive visions, and suggestions for managing these risks, read the paper here.

There are many different ways to divide such a taxonomy.

Some points of concern: Here, ‘AI Race’ incorporates all sorts of competitive pressures and incentives to get more utility by ceding incremental control to AIs, or otherwise removing safeguards. It also includes AI encouraging military actions of all kinds. The alignment problem is divided in a way I don’t find instinctive, and which risks obscuring the difficulties involved if one doesn’t dig fully into the details. The rogue AI section treats alignment as a series of potential dangers, without providing unifying intuitions. The section on the automated economy speaks of job loss or enfeeblement of humans, does not consider AIs increasingly controlling resources and making decisions on its own leading to both humans increasingly lacking resources and an ‘ascended economy’ that becomes impossible to understand, that then stops supporting vital human needs as described by Critch and others.

Some strong points: Laying out a careful list of ways militaries are already laying groundwork for taking humans out of the loop and otherwise automating their systems. Pointing out carefully why evolutionary pressures will apply to AIs and that these pressures favor selfish actors and disfavor systems that are altruistic or otherwise positively inclined to humans, and that humans have shown little ability to influence us away from the most fit AI designs. Emphasizing that accidents are inevitable. Emphasizing variety of dangerous scenarios that are possible, including everything from humans gradually voluntarily seeding power to AIs to a hard takeoff. The list of potential causes of Rogue AI dangers in section 5, while incomplete and not unified, seems strong and persuasive to someone looking for a relatively skeptic-friendly, downplayed take. Laying out individual concerns, then noticing they can combine.

The dangers are a laundry list of potential negative scenarios, ranging from mundane to existential.

What suggestions does the paper offer?

For malicious use: Legal liability for developers of general purpose AIs, technical research on adversarial robust anomaly detection, restricted access to dangerous systems and particular attention to systems that pose a biosecurity threat, which presumably expands to other similar threats.

For ‘AI race’ concerns: Safety regulations, data documentation, human oversight requirements for AI decisions, AI cyber defense systems, international coordination, public control of general purpose AIs.

For organizational culture: Red teaming, affirmative demonstrations of safety, deployment procedures, publication reviews, response plans, internal auditing and risk management, processes for important decisions, safe design principles, military-grade information security, and a large fraction of research being safety research.

For rogue AI: Avoid the riskiest use cases, support AI safety research including adversarial robustness proxy models, modeling honesty, transparency and detecting and removing hidden model functionality.

The problem with these suggestions is that they do not seem sufficient, and the ones that look most potentially sufficient don’t seem all that enforceable or actionable. ‘Avoid the riskiest use cases’ such as agents? How? For organizational culture, the solutions are good individual steps but without actual strong safety cultures I don’t see a checklist doing much. For race concerns, it is a strange cross between ‘we need to collectively own the means of intelligence’ and not wanting to overly slam the breaks or exert control, at the same time.

For rogue AI, we don’t have a great alternative answer, but it boils down to spending on alignment and hoping for the best. Even spending heavily on alignment is tough, if you want real work done.

In the FAQ, on the final question of ‘isn’t this all hopeless’ the paper finally raises the possibility of systematically tracking high-end GPU usage as the way to make the problem of slowing down possibly tractable.

One thing that reliably strikes me about such papers is the endless politeness involved. I couldn’t take it. I mean, I’m sure I could if I cared enough, but wow.

This paper will of course not satisfy Tyler Cowen’s request for papers, since it does not ‘model’ any of these risks. For how many would a model be a plausible thing? Not none of them. Also not most of them.

Gary Marcus is interestingly positive about the exercise.

Gary Marcus: Extinction risk is not the same as catastrophic risk; this is a good paper, primarily on the latter.

Society library to chart AI risk arguments. I’m glad for the attempt, but if the arguments on Diablo Canyon are two minutes of 2x scrolling despite one side having no case and the core questions being dead simple, this may be more than a little unwieldy.

Rob Miles suggests a metaphor, not sure if I’ve heard it before or not.

Rob Miles: Perhaps the distinguishing factor is whether someone is tied to the idea that the future should be “normal” or “sensible” or “not too absurd”, or whether they’re thinking about the tech itself and following through the implications even when they’re bizarre

“AI will make everyone unemployed” is a bit like “This bus driving off a cliff means nobody on it will get to work on time and they might get fired”, it requires following through on only a small subset of the relevant consequences.

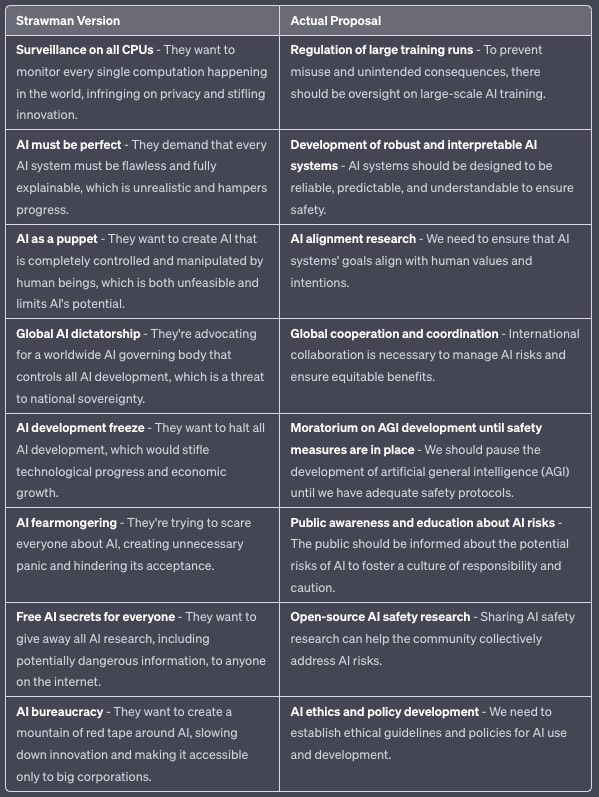

Dustin Moskovitz offers distinctions.

In light of some recent hyperbole in the AI safety debate, I created this handy cheat sheet for people who may be less familiar with the technical debates.

It’s easy to dismiss the strawmen – I believe they’re bad ideas too! The actual ones deserve your consideration.

And by “I created” I mean “I prompted GPT4”

I instead consider this the Fully General Counterargument. It applies equally well to any other consideration Y, as long as you can point to some consideration X that you think deserves talk more. And indeed, we see it used in exactly this way. How dare you talk about any topic that is not The Current Thing?

Safely Aligning a Superintelligent AI is Difficult

Simeon points out that LLMs trained on real world text inevitably will have a picture of the real world, which includes modeling humans, the range of human behavior and also the existence of AIs like themselves. Attempting to predict humans and human errors is, Eliezer estimates, over 80% of the danger from general exposure, and that model generalizes – I agree that training on pre-1980 text (if there was enough of it) would not be much of a barrier to then understanding the internet. So scrubbing particular references isn’t zero help but mostly not promising. Whereas if you train on RL in the style of DeepMind, the given information is sandboxed, so such knowledge can be held back, rendering the whole exercise far safer, and that would be a real barrier.

Yann LeCun had a debate this week. Here he makes a new suggestion to test AI ‘for benevolence’ before deployment, arguing this would be sufficient. He does not explain how one would do this, or why the test would be robust out of distribution or on sufficiently intelligent agents who could recognize they were in a test environment. Or even on what ‘benevolence’ means and what this would imply about a future world. I very much welcome people trying out new ideas, and am eager to see details. Even more eager to see Meta implement such a test, or any test really, in any form.

David Manheim discusses safety culture, why it is important, why traditional approaches don’t work well in the AI case, and why it is difficult to get if you don’t start out with one.

People Are Worried About AI Killing Everyone

What about people in general? Do they agree with the CAIS statement? Yes.

They are not however getting much more worried over time about mundane risks?

That is surprising to me. Not the levels, but the slow rate of change.

Vitalik sees a 10% risk, has very broad timelines.

Q: AGI timelines and p(doom)?

Vitalik Buterin: Very wide probability bars honestly. 95% confidence on timelines 2029-2200 for ASI, (I have significant probability mass on AGI staying roughly-human-level for a while) p(doom) around 0.1? Which of course makes it quite important to take AI risk seriously.

…

it’s difficult for me to articulate the big thing that AI still can’t do. It’s something like “creating and executing on plans involving novel actions in a complex environment with other agents”. If AIs start doing that, then I’ll say we’re super close.

One big reason my p(doom) is not higher is that I am unconvinced of fast takeoff and the “there is no fire alarm for AGI” thesis (I know fast takeoff is not a prereq for doom, but it’s a big push in that direction!) If I get convinced of those things, my p(doom) goes up.

Connor Leahy: I’m surprised by people expecting AI to stay at human level for a while. This is not a stable condition: human-level AI is AI that can automate large parts of human R&D, including AI development. And it does so with immediate advantages over humans (no sleep, learning from one copy is transmitted to all others instantly, trivial to create thousands of copies of a single model). This leads to tightening and accelerating feedback loops in AI development, with AI capabilities quickly zooming past humans and making them obsolete.

It would indeed be profoundly weird if AGI got roughly-human capabilities, then stopped for an extended period. That world does not make all that much sense. Human level capabilities are a very narrow band in a broad space of capabilities levels, and AGIs that are ‘roughly human’ will be vastly superior in many aspects, and will be as Connor notes highly useful for and often used in AGI research.

It is one thing to doubt a true fast takeoff a la Eliezer, it is another to think that ten years after we have a huge number of artificial human-level intelligences that their capabilities remained stalled out at that level.

What is the force that would keep things at that level for a long period?