From Wikipedia:

Negative utilitarianism is a form of negative consequentialism that can be described as the view that people should minimize the total amount of aggregate suffering, or that they should minimize suffering and then, secondarily, maximize the total amount of happiness. It can be regarded as a version of utilitarianism that gives greater priority to reducing suffering (negative utility or “disutility”) than to increasing pleasure (positive utility). This differs from classical utilitarianism, which does not claim that reducing suffering is intrinsically more important than increasing happiness.

Negative utilitarianism is unpopular because it leads to absurd conclusions like “destroying the earth is morally good because there will be no more suffering creatures” or “fish species where most individuals die soon after birth should be eliminated”.

But although pretty much no one I have met buys into full-on negative utilitarianism or would bite its murderous bullets, I think many of the underlying negative-utilitarian intuitions are more popular than one may naively suspect.

At least judging by their other stated normative beliefs, most people:

Don’t think positive and negative sensations or experiences lie on the same continuous line of “goodness”

Would agree that negative experiences are bad, and more of them is even worse

Would agree that it’s immoral to create more negative experience (or in some cases fail to reduce the amount of negative experience)

Would not agree that it’s immoral to fail to create more positive experiences (or reduce the amount of positive experience someone has)

Failing To Make A Child Laugh

Imagine you are walking past a shallow pond on a warm day and see a small child playing next to it. You know that the child absolutely loves seeing adults get wet in the pond. You are wearing fancy clothes that would make seeing you wade into the pond even more fun for the child. You could easily wade in and make the child very happy, but doing so would cause mild inconvenience because you’ll have to do an extra load of laundry that evening. Is it unethical to not wade into the pond?

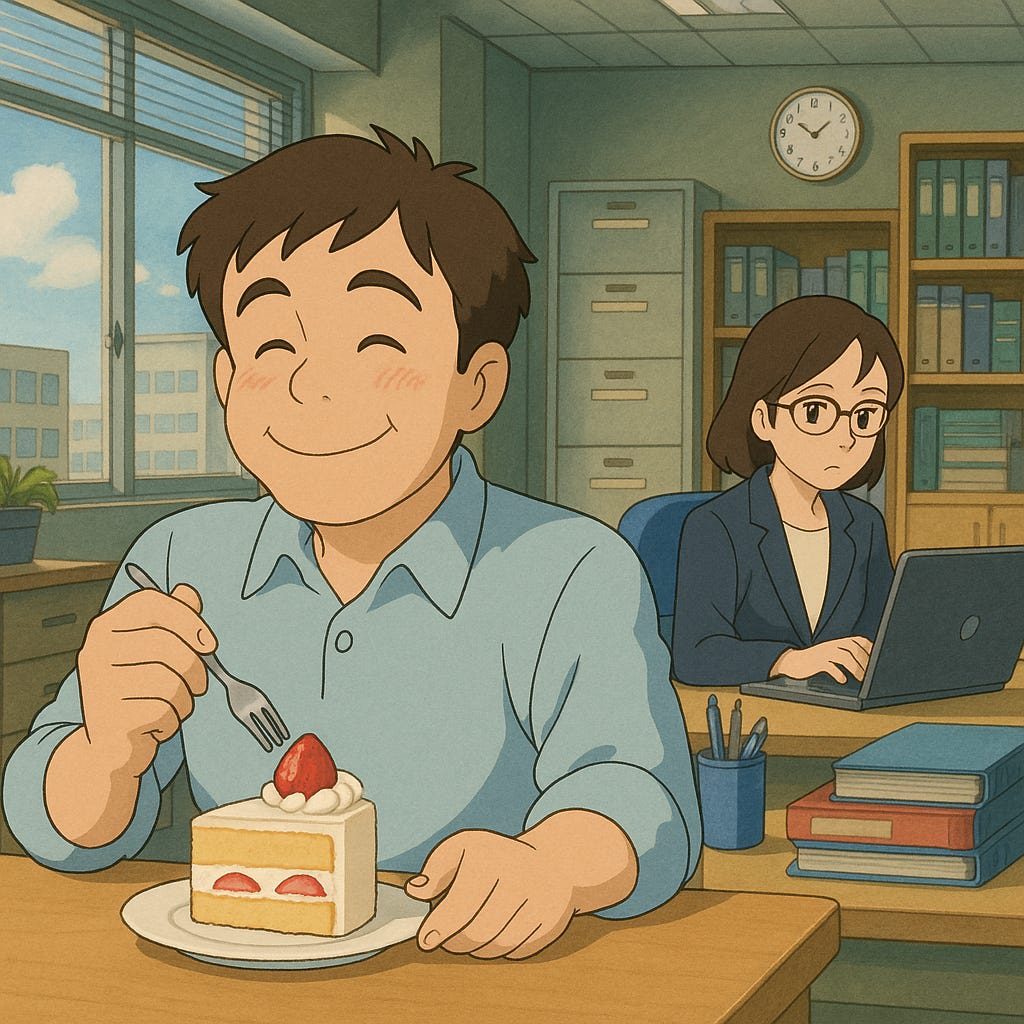

The Employee Who Likes Cake

You’re the manager of an employee who really likes cake. Every lunch break he steps out of the office to get a slice of cake from the local bakery which closes at 2pm. He enjoys the cake very much. Let’s also say that he’s very healthy and goes for long runs and so the cake is not damaging to his health.

It’s lunch time but your team needs to present to a customer straight after lunch and the slide deck is unfinished. You don’t like cake so your lunch plans involve just a boring soggy sandwich that lies ready for you in your backpack. You can either skip the break to finish the slide deck and have your sandwich after the meeting, or ask your employee to stay back and finish the deck himself, knowing he’ll miss out on his favorite cake. Is it unethical to ask your employee to work while you take a break, knowing he’s missing out on a lot of pleasure? Most would say it’s OK to ask the employee to work.

Now imagine a different situation. Your employee has a mild eye strain problem. He hasn’t mentioned it to you yet but you overheard him talking to his colleagues about it. You know that if he doesn’t take a break by 1pm he gets headaches in the afternoon. What should you do now? Is it still OK to ask him to work over lunch?

In general, hurting people for your own personal gain is seen as unethical, and the more you hurt them the worse it is. Whereas prioritizing your own pleasure over others’ is seen as acceptable and not immoral. You are not obligated to make others happy, but you are obligated to not make others suffer. At least this seems to be the view of most regular people. People with a particularly altruistic inclination generally speak of helping the poor and sick avoid hunger and pain, not of making the happy as happy as possible.

So in practice I find most people’s moral intuitions to be rather negative utilitarian flavored.

And though most cannot be described as actually following any particular named ethical theory, I think a reasonable approximation for many people’s ethical view is that they:

Have a (non-normative) preference to personally feel happy/have positive experiences

Have a (non-normative) preference for others to feel happy/have positive experiences, usually centered on their family, close friends, and other personal acquaintances

Believe it’s immoral to cause harm/pain/suffering to others, where the more suffering, the worse the sin

That is, their moral beliefs (rather than their personal preferences) mostly relate to reducing the negative rather than increasing the positive.

These intuitions (at least, my versions, insofar as I have some version of them) seem to be more about harm vs. edification (I mean, damage to / growth of actual capacity) rather than experientially-feels-good vs. -bad. If a child loses a limb, or has permanent developmental damage from starvation, or drowns to death, that will damage zer long term ability to grow and be fulfilled. Eye strain is another kind of lasting harm.

In fact, if there is some way to reliably, significantly, consensually, and truly (that is, in a deep / wholesome sense) increase someone else’s agency, then I do think there’s a strong moral obligation to do so! (But there are many difficulties and tradeoffs etc.)

I think a lot of the asymmetry in intuitions just comes from harm often being very easy to cause but goodness generally being very hard. Goodness tends to be fragile, such that it’s very easy for one defector to really mess things up, but very hard for somebody to add a bunch of additional value—e.g. it’s a lot easier to murder someone than it is to found a company that produces a comparable amount of consumer surplus. Furthermore, and partially as a result, causing pain tends to be done intentionally, whereas not causing goodness tends to be done unintentionally (most of the time you could have done something good but didn’t, probably you had no idea). So, our intuitions are such that causing harm is something often done intentionally, that can easily break things quite badly, and that almost anyone could theoretically do—so something people should be really quite culpable for doing. Whereas not causing goodness is something usually done unintentionally, that’s very hard to avoid, and where very few people ever succeed at creating huge amounts of goodness—so something people really shouldn’t be that culpable for failing at. But notably none of those reasons have anything to do with goodness or harm being inherently asymmetrical with respect to e.g. fundamental differences in how good goodness is vs. how bad badness is.

Yep, I agree this explains a lot of the psychological bias. Probably alongside the fact that humans have a greater capacity to feel pain/discomfort than pleasure. And also perhaps the r/K-selection thing where humans are more risk-averse/have greater responses to threat-like stimuli because new individuals are harder to create.

Also probably punishing cruelty/causing harm in society is more conducive to long-term societal flourishing than punishing a general lack of generosity. And so group selection favors suffering-focused ethical intuitions.

The title of the post says negative utilitarianism is more intuitive than we think, but the body of the post says the intuitions behind negative utilitarianism make sense.

There is a critical distinction between these two claims. Indeed, the whole point of the seemingly endless philosophical debates over the fundamental nature of ethics[1] is that you can start with seemingly reasonable assumptions, but reach crazy conclusions. And you can also start with different, but just-as-reasonable assumptions[2] and reach completely contrary conclusions.

The resolution to this seeming paradox is that our intuitive sense of “reasonableness” contains fundamental, irresolvable contradictions inside of it. Contradictions that[3] do not matter too much in mundane/prosaic areas we’re familiar with, but which get amplified when the tails come apart and stuff like uploading, mass population increases, etc, become available. There is some connection to the notion of “philosophical crazyism” mentioned in this comment by Carl Feynman.

In math and logic, there is something known as the principle of explosion. It says that as long as you have at least one contradiction between your axioms, you can apply rules of inference to prove all statements inside your system are both true and false. Even the slightest problem can get amplified and break the whole edifice down. Something analogous seems likely to happen to our ability to handle the moral conundrums around us, if stuff gets wild sometime in the future.
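For readers who want to see the principle stated formally, here is a minimal sketch in Lean 4. The proposition names `P` and `Q` are placeholders chosen for illustration; `absurd` is the core-library lemma that derives any conclusion from a proof of a statement together with a proof of its negation.

```lean
-- Principle of explosion: from P and ¬P, any proposition Q follows.
example (P Q : Prop) (hp : P) (hnp : ¬P) : Q :=
  absurd hp hnp
```

Since `Q` is arbitrary, the same proof yields both `Q` and `¬Q` for every statement in the system.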

I have written more about these kinds of topics here.

[1] Or, frankly, most philosophical debates, regardless of topic

[2] Stuff like “increasing the total amount of happiness in the world is good, all else equal” or “making people happier is good, all else equal”

[3] As basic evo-psych would predict

Fair

Perhaps I should have clarified more that the post is trying to argue that NU is closer to many people’s natural intuitions than one may naively expect, rather than to say NU is more justified than one may naively expect. I don’t think NU is correct or justified.

This seems like a global modifier which is a function of your mental health. High levels of mental well-being seem to make people grateful even for their negative experiences.

That negative experiences are experienced as bad does not mean that they’re bad, it means that experiencing certain things as bad is good. For instance, when you feel exhausted, your body still has lots of energy left, it merely creates the illusion that you’re running empty in order to prevent you from harming yourself. Negative experiences are also just signals that something isn’t right, but having the experience is valuable, and avoiding the experience might merely prevent you from learning that something needs changing. Saying that pain is bad in itself is like saying that the smoke alarm in your kitchen is bad. Suffering isn’t damage, it’s defense against damage.

Negative experiences can create good outcomes (because, as I said earlier, the felt ‘badness’ is an illusion and thus not objective negative utility). And I dislike that axiom because it says “it’s better to die at birth than to grow old” (the latter will have more negative experiences).

I’m the rare sort of person who does take this into account and deem it important. I’d go as far as to say “If you have a lot of positive experiences, you will be able to shrug off more negative experiences with a laugh”.

In short, the brain lies to itself because there’s utility in these lies, but if you believe in these lies, then you cannot come to the correct conclusions about this topic. For the rest of the conclusions one may arrive at, I think they depend on the mental health of the speaker, and not on their intelligence. The sentence “Life is suffering” is not an explanation for why people are feeling bad, it’s a product of people feeling bad. Cause and effect run in the opposite direction from what is commonly believed.

The difference between this and the “saving a drowning child” thought experiment is probably 6 or so orders of magnitude of difference in the benefit of wading into the pond[1], while the cost … portrayed as “laundry”, is maybe 2 orders of magnitude off. Ok, so, then, imagine there are ten thousand children who love seeing adults get wet in the pond. Maybe also add an aspect where they saw you coming and got their hopes up, and that you can see for miles around and there are no other adults coming this way.

(I would not use “ethics” here, but I would feel a strong pressure and would endorse giving in to it, but would defend the legal right to refuse. The same goes for the drowning child version.)

[1] Valuing “intense momentary pleasure for the child” at $10 and the life at $10M. It’s probably vaguely the right order of magnitude.
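As a rough sketch of the arithmetic above, the snippet below compares the two benefits in orders of magnitude. The dollar figures are the ones from the footnote; the function name `orders_of_magnitude`, the variable names, and the assumption that pleasure simply adds up across children are all hypothetical, chosen only for illustration.

```python
import math


def orders_of_magnitude(larger: float, smaller: float) -> float:
    """Return how many powers of ten separate two positive values."""
    return math.log10(larger / smaller)


# Valuations taken from the footnote above; they are rough guesses, not data.
VALUE_OF_LIFE = 10_000_000  # dollars, the "saving a drowning child" case
VALUE_OF_PLEASURE = 10      # dollars, one child's momentary delight

# One child: the benefit of wading in differs by about six orders of magnitude.
single_gap = orders_of_magnitude(VALUE_OF_LIFE, VALUE_OF_PLEASURE)

# Ten thousand children: assume (hypothetically) that pleasure adds up linearly.
crowd_pleasure = 10_000 * VALUE_OF_PLEASURE
crowd_gap = orders_of_magnitude(VALUE_OF_LIFE, crowd_pleasure)

print(f"one child: {single_gap:.0f} orders of magnitude")
print(f"ten thousand children: {crowd_gap:.0f} orders of magnitude")
```

Under these assumed figures, ten thousand children narrow the benefit gap to about two orders of magnitude, which is roughly the size of the gap in cost mentioned above.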

If you haven’t seen it already, you might find The Case for Suffering-Focused Ethics of interest. Like your article, it collects various intuitions that many people have that might push them toward a view that places special priority on the prevention of suffering over the creation of pleasure. Your examples remind me a bit of the “Two planets” thought experiment listed on that page (though their focus is slightly different):

It’s worth distinguishing between negative utilitarianism and merely having suffering-focused ethics. NU is a special case of SFE, where “suffering-focused ethics is an umbrella term for moral views that place primary or particular importance on the prevention of suffering”. So it sounds to me like a reasonable claim that

But I think it’s not very productive to describe this as something like “NU, just not taken to extreme lengths such as destroying the world”. The defining feature of NU is that it puts zero intrinsic weight on positive experiences. If you have a theory that does include a preference for people to experience positive experiences, but just puts a higher weight on preventing suffering than it does on making people have positive experiences, then that theory is something different than negative utilitarianism. So it’d be clearer to call it something like a “suffering-focused” theory instead.

I think various people define NU differently.

Quoting Wikipedia: The term “negative utilitarianism” is used by some authors to denote the theory that reducing negative well-being is the only thing that ultimately matters morally. Others distinguish between “strong” and “weak” versions of negative utilitarianism, where strong versions are only concerned with reducing negative well-being, and weak versions say that both positive and negative well-being matter but that negative well-being matters more.

Fair enough, I hadn’t heard of the “weak NU” framing before. I’d still avoid it myself, though.

...

One example of “creating more positive experiences” is giving your children a happy childhood full of joy vs. a merely indifferent one full of drab. Would “most people” really think that there is no duty to do the former and avoid the latter?

Judging by the valorization of “low-effort parenting”, “spending all your savings before you die” and similar memes, alongside the widespread condemnation of slapping children, it seems to me that people care far more about parents avoiding minor harms to their kids than trying to provide them with as much joy or benefit as possible (not saying this is correct).

I had not heard of those ideas, but a quick google turns up more negative than positive attitudes to them. In fact, I saw no-one with a good word for “spending all your savings before you die”, quite the opposite. This is a fate that retirees with a fixed pile of savings and no paid work try to avoid, also called “money death”.

In fact, as a new mother myself, I was surprised by the volume of content encouraging parents to prioritize their own comfort over their kids’ joy. Parenting subreddits are full of this stuff.

This seems like at least as strong evidence that parents are doing too much and need to be walked back.

Seems like a mistake to write these intuitions into your axiology.

Instead, the intuitions at play here are mainly about how to set up networks of coordination between agents. This includes:

Voluntary interactions: limiting interaction with those who didn’t consent, limiting effects (especially negative effects) on those who didn’t opt in (e.g. Mill’s harm principle)

Social roles: interacting with people in a particular capacity rather than needing to consider the person in all of their humanity & complexity

Boundaries/membranes: limiting what aspects of an agent and its inner workings others have access to or can influence

The arguments you give seem more about disvalue being more salient than (plus)value, but there are many different forms of disvalue and value, and valence is only one of them. Like, risk avoidance (/applying a strategy that is more conservative/cautious than justified by naïve expected value calculations or whatever) is also an example of disvalue being more salient than value, but need not cash out in terms of pleasure/pain.

(Possibly tangent:) And then there’s the thing that even if you accept the general NU assumptions, is the balance being skewed to the disvalue side some deep fact about minds, or is it a “~~skill~~hardware issue”: we could configure our (and/or other animals’) biology, so that things are more balanced or even completely skewed towards the positive side, to the extent that we can do this without sacrificing functionality, like David Pearce argues. (TBC: I’m not advocating for this, at least not as radically/fanatically and not as a sole objective/imperative.)

The following thought experiment by Tim Scanlon is also a relevant example (quote from Edmonds’s Parfit):

FYI, I’d be in favor of offering him something on the order of tens of thousands of dollars to lie there and take the shocks so the world can continue watching the match. (Or, if communicating with him is impossible, then just doing it that way and compensating him after the fact—probably with a higher amount.) I’m not sure how many is “many people” and how painful is “extremely painful”, but it seems likely that there’s an amount of money that makes this a win-win.

Some questions come to mind:

Who is doing the offering, and whose money is being offered? (If the answer is something like “the TV station owner; he offers his own personal money”, then this would seem to distribute incentives improperly. If the answer is something like “the TV station owner; he offers the station’s funds, and means to make it up later by selling more advertising time”, then the next question is whether the audience would consent to this trade if they knew about it, and whether they have a right to know about it. If the answer is something else… well, the next question depends on what that something else is.)

Suppose that Jones agrees to take the money. Later, he sues the TV station, claiming emotional trauma, the need for years of therapy, etc.; to the defendants’ protest that Jones agreed to take the money, he (or his lawyer) replies that agreements made while under the extreme stress of being electrically shocked while your hand is being crushed cannot be considered binding. The judge agrees, and awards seven-figure damages. Given that this outcome is foreseeable, does this change your suggestion at all?

I was thinking “the TV station owner offers the station’s funds”. If the audience knew about it, probably some portion would approve and some portion would be horrified, perhaps some of the latter performatively so. (I think there’s a general hypocrisy in which many people routinely use goods and services that, if you ask them, they intellectually know are probably made in working conditions they consider horrific, and if you tell them about a particular instance, they say they’re outraged, but if you don’t, then they’re not motivated to look into it or think about it and just happily use the thing.) I don’t think the audience particularly has a right to be told about it, any more than Apple has an obligation to tell customers about employee conditions at Foxconn. I suppose this event could become a media scandal, in which case realpolitik suggests the option of offering Jones more money to sign an NDA.

I was considering the morals of the situation and not the implementation with today’s legal system. You may be right. Though I could imagine various remedies: (a) Jones’s contract may have clauses covering this already; (b) the station people might come up with other reasons they didn’t extricate Jones (“Of course we wanted to get him out, but we were trying to root-cause the problem / looking for other things that were broken / worried that messing with it might cause a power surge, and that took an hour”), reasons which it might be impossible to prove in court that they didn’t believe.

An important part of the scenario that seems unrealistic is that people could know in advance that Jones will be electrically shocked for an hour in a way that will cause extreme pain, but not cause heart attacks or permanent nerve damage. (I guess they could be monitoring him for the former.)

Ah yes, that is a good one. Much better than my examples!

Relatedly, I think that similar thought experiments could be constructed against negative utilitarianism. For example, the infamous torture vs. dust specks.