Compressing Reality to Math

This is part of a sequence on decision analysis and follows 5 Axioms of Decision-Making, which explains how to turn a well-formed problem into a solution. Here we discuss turning reality into a well-formed problem. There are three basic actions I’d like to introduce, and then work through some examples.

Scope

The first thing you have to decide with a problem is, well, what the problem is. Suppose you’re contemplating remodeling your kitchen, and the contractor you’re looking at offers marble or granite countertops. While deciding whether you want marble or granite, you stop and wonder- is this really the contractor that you should be using? Actually, should you even be remodeling your kitchen? Maybe you should move to a better city first. But if you’re already thinking about moving, you might even want to emigrate to another country.

At this point the contractor awkwardly coughs and asks whether you’d like marble or granite.

Decisions take effort to solve, especially if you’re trying to carefully avoid bias. It helps to partition the world and deal with local problems- you can figure out which countertops you want without first figuring out what country you want to live in. It’s also important to keep in mind lost purposes- if you’re going to move to a new city, remodeling your kitchen is probably a mistake, even after you already called a contractor. Be open to going up a level, but not paralyzed by the possibility, which is a careful balancing act. Spending time periodically going up levels and reevaluating your decisions and directions can help, as well as having a philosophy of life.

Model

Now that you’ve got a first draft of what your problem entails, how does that corner of the world work? What are the key decisions and the key uncertainties? A tool that can be of great help here is an influence diagram, which is a directed acyclic graph1 which represents the uncertainties, decisions, and values inherent in a problem. While sketching out your model, do you become more or less comfortable with the scope of the decision? If you’re less comfortable, move up (or down) a level and remodel.
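As a minimal sketch of how such a diagram might be written down as data, consider a hypothetical three-node problem. The node names, the `kinds` labels, and the `evaluation_order` helper are illustrative assumptions, not part of any influence-diagram library. Python’s standard `graphlib` module is used only to confirm that the arrows contain no cycle.

```python
from graphlib import TopologicalSorter, CycleError

# Hypothetical nodes: each is a decision, an uncertainty, or the value node.
kinds = {
    "weather": "uncertainty",
    "location": "decision",
    "enjoyment": "value",
}

# Arrows, stored as node -> set of parents (the nodes whose arrows point at it).
parents = {
    "weather": set(),
    "location": set(),
    "enjoyment": {"weather", "location"},
}

def evaluation_order(parents):
    """Return an order in which every node comes after its parents.

    Raises ValueError if the arrows form a loop, i.e. the graph is not a DAG.
    """
    try:
        return list(TopologicalSorter(parents).static_order())
    except CycleError as err:
        # The detected cycle is the second element of the exception's args.
        raise ValueError(f"not acyclic: {err.args[1]}") from err

order = evaluation_order(parents)
print(order)
```

The value node comes last in the order because every arrow eventually leads to it; adding an arrow from `enjoyment` back to `weather` would make `evaluation_order` raise.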

Elicit

Now that you have an influence diagram, you need to populate it with numbers. What (conditional) probabilities do you assign to uncertainty nodes? What preferences do you assign to possible outcomes? Are there any other uncertainty nodes you could add to clarify your calculations? (For example, when making a decision based on a medical test, you may want to add an “underlying reality” node that influences the test results but that you can’t see, to make it easier to elicit probabilities.)
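To make the medical test example concrete, here is a small sketch of why the hidden node helps. It is usually easier to elicit how often the condition occurs and how the test behaves given the condition than to elicit “how likely is the condition given a positive result” directly; Bayes’ rule then flips the arrow. All three input numbers below are hypothetical, and `flip_test_node` is an illustrative name.

```python
def flip_test_node(p_condition, p_pos_given_condition, p_pos_given_healthy):
    """Convert elicited P(condition), P(positive | condition), and
    P(positive | healthy) into P(positive) and P(condition | positive)."""
    # Total probability of a positive result, summing over the hidden node.
    p_pos = (p_pos_given_condition * p_condition
             + p_pos_given_healthy * (1 - p_condition))
    # Bayes' rule: reverse the arrow from "condition" to "test result".
    p_condition_given_pos = p_pos_given_condition * p_condition / p_pos
    return p_pos, p_condition_given_pos

# Hypothetical elicited values: 1% base rate, 90% sensitivity,
# 5% false-positive rate.
p_pos, posterior = flip_test_node(0.01, 0.9, 0.05)
print(f"P(positive) = {p_pos:.4f}, P(condition | positive) = {posterior:.3f}")
```

With these made-up inputs the posterior is only about 15%, which is the kind of result that is hard to guess directly but falls out once the hidden node is made explicit.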

Outing with a Friend

A friend calls me up: “Let’s do something this weekend.” I agree, and ponder my options. We typically play Go, and I can either host at my apartment or we could meet at a local park. If it rains, the park will not be a fun place to be; if it’s sunny, though, the park is much nicer than my apartment. I check the weather forecast for Saturday and am not impressed: 50% chance of rain.2 I check calibration data and see that for a 3 day forecast, a 50% prediction is well calibrated, so I’ll just use that number.3

If we play Go, we’re locked in to whatever location we choose, because moving the board would be a giant hassle.4 But we could just talk- it’s been a while since we caught up. I don’t think I would enjoy that as much as playing Go, but it would give us location flexibility- if it’s nice out we’ll go to the park, and if it starts raining we can just walk back to my apartment.

Note that I just added a decision to the problem, and so I update my diagram accordingly. With influence diagrams, you have a choice of how detailed to be- I could have made the activity decision point to two branches, one in which I see the weather then pick my location, and another where I pick my location and then see the weather. I chose a more streamlined version, in which I choose between playing Go or walking wherever the rain isn’t (where the choice of location isn’t part of my optimization problem, since I consider it obvious).

Coming up with creative options like this is one of the major benefits of careful decision-making. When you evaluate every part of the decision and make explicit your dependencies, you can see holes in your model or places where you can make yourself better off by recasting the problem. Simply getting everything on paper in a visual format can do wonders to help clarify and solve problems.

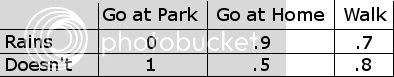

At this point, I’m happy with my model- I could come up with other options, but this is probably enough for a problem of this size.5 I’ve got six outcomes: we either walk, play Go inside, or play Go outside, and it either rains or doesn’t rain. I decide that I would most prefer playing Go outside when it doesn’t rain, and would least prefer playing Go outside when it rains.6 Ordering the rest is somewhat difficult, but I come up with the following matrix:

If we walk, I won’t enjoy it quite as much as playing Go, but if we play Go in my apartment and it’s sunny I’ll regret that we didn’t play outside. Note that all of the preference probabilities are fairly high- that’s because having a game interrupted by rain is much worse than all of the other outcomes. Calculating the preference value of each deal is easy: the probability of rain is .5 and independent of my decision, so each deal’s value is just the average of its rain and no-rain entries. Playing Go at Park is the worst option with an effective .5 chance of the best outcome, Go at Home is better with an effective .7 chance of the best outcome, and Walk is best with an effective .75 chance of the best outcome.
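The calculation can be sketched in a few lines. The Go at Park entries (0 and 1) follow from its being the worst and best outcome. The four entries for Go at Home and Walk are assumed values, picked only so that their averages reproduce the .7 and .75 stated above; they should not be read as the elicited numbers.

```python
P_RAIN = 0.5  # from the forecast

# preference[option] = (value if it rains, value if it doesn't rain)
preference = {
    "Go at Park": (0.0, 1.0),  # worst and best outcomes, by definition
    "Go at Home": (0.8, 0.6),  # ASSUMED entries; only their average (.7) is from the text
    "Walk": (0.7, 0.8),        # ASSUMED entries; only their average (.75) is from the text
}

def deal_value(rain_value, dry_value, p_rain=P_RAIN):
    """Probability-weighted preference value of one option."""
    return p_rain * rain_value + (1 - p_rain) * dry_value

values = {option: deal_value(*prefs) for option, prefs in preference.items()}
best = max(values, key=values.get)

for option, value in values.items():
    print(f"{option}: {value:.2f}")
print("best:", best)
```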

Note that working through everything worked out better for us than doing scenario planning. If I knew it would rain, I would choose Go at Home; if I knew it wouldn’t rain, I would choose Go at Park. Walk is dominated by both of those in the case of certainty, but its lower variance means it wins out when I’m very uncertain about whether it will rain or not.7
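One way to see how much uncertainty Walk needs in order to win is to vary the probability of rain and find where the best option changes. The sketch below does this under assumed numbers: Go at Park is 0 in rain and 1 otherwise, as above, but the Go at Home and Walk entries are hypothetical values chosen to be consistent with the stated .7 and .75 at a 50% chance of rain. The crossover points therefore illustrate the method rather than giving real results.

```python
# (value if it rains, value if it doesn't); Home and Walk entries are ASSUMED.
preference = {
    "Go at Park": (0.0, 1.0),
    "Go at Home": (0.8, 0.6),
    "Walk": (0.7, 0.8),
}

def best_option(p_rain):
    """Option with the highest expected preference value at this P(rain)."""
    values = {
        name: p_rain * wet + (1 - p_rain) * dry
        for name, (wet, dry) in preference.items()
    }
    return max(values, key=values.get)

def crossover(a, b):
    """P(rain) at which options a and b have equal expected value."""
    wet_a, dry_a = preference[a]
    wet_b, dry_b = preference[b]
    # Solve dry_a + p*(wet_a - dry_a) = dry_b + p*(wet_b - dry_b) for p.
    return (dry_b - dry_a) / ((wet_a - dry_a) - (wet_b - dry_b))

low = crossover("Walk", "Go at Park")   # below this, Go at Park wins
high = crossover("Walk", "Go at Home")  # above this, Go at Home wins
print(f"Walk is best for P(rain) between {low:.2f} and {high:.2f}")
```

Under these assumed entries Walk stays on top for any rain probability between roughly .22 and .67, so a forecast a few points off 50% would not change the decision.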

What’s going up a level here? Evaluating whether or not I want to do something with this friend this weekend (and another level might be evaluating whether or not I want them as a friend, and so on). When evaluating the prospects, I might want to compare them to whatever I was planning before my friend called to make sure this is actually a better plan. It could be the chance of rain is so high it makes this plan worse than whatever alternatives I had before I knew it was likely to rain.

Managing a Store

Suppose I manage a store that sells a variety of products. I decide that I want to maximize profits over the course of a year, plus some estimate of intangibles (like customer satisfaction). I have a number of year-long commitments (the lease on my space, employment contracts for full-time employees, etc.) already made, which I’ll consider beyond the scope of the problem. I then have a number of decisions I have to make month-to-month8 (how many seasonal employees to hire, what products to order, whether to change wages or prices, what hours the store should be open), and then decisions I have to make day-to-day (which employees to schedule, where to place items in the store, where to place employees in the store, how to spend my time).

I look at the day-to-day decisions and decide that I’m happy modeling those as policies rather than individual decisions- I don’t need to have mapped those out now, but I do need to put my January orders in next week. Which policies I adopt might be relevant to my decision, though, and so I still want to model them on that level.

Well, what about my uncertainties? Employee morale seems like one that’ll vary month-to-month or day-to-day, though I’m comfortable modeling it month-to-month, as that’s when I would change the employee composition or wages. Customer satisfaction is an uncertainty that seems like it would be worth tracking, and so is customer demand- how the prices I set will influence sales. And I should model demand by item- or maybe just by category of items. Labor costs, inventory costs, and revenue are all nodes that I could stick in as uncertainty nodes (even though they might just be deterministically calculated from their input nodes).

You can imagine that the influence diagram I’m sketching is starting to get massive- and I’m just considering this year! I also need to think carefully about how I put the dependencies in this network- should I have customer satisfaction point to customer demand, or the other way around? For uncertainty nodes it doesn’t matter much (as we know how to flip them), but may make elicitation easier or harder. For decision nodes, order is critical, as it represents the order the decisions have to be made in.

But even though the problem is getting massive, I can still use this methodology to solve it. This’ll make it easier for me to keep everything in mind- because I won’t need to keep everything in mind. I can contemplate the dependencies and uncertainties of the system one piece at a time, record the results, and then integrate them together. Like with the previous example, it’s easy to include the results of other people’s calculations in your decision problem as a node, meaning that this can be extended to team decisions as well- maybe my marketer does all the elicitation for the demand nodes, and my assistant manager does all the elicitation for the employee nodes, and then I can combine them into one decision-making network.

Newcomb’s Problem

Newcomb’s Problem, a thought experiment designed to highlight the differences between decision theories, has come up a lot on Less Wrong. AnnaSalamon wrote an article (that even includes influence diagrams that are much prettier than mine) analyzing Newcomb’s Problem, in which she presents three ways to interpret the problem. I won’t repeat her analysis, but will make several observations:

Problem statements, and real life, are often ambiguous or uncertain. Navigating that ambiguity and uncertainty about what problem you’re actually facing is a major component of making decisions. It’s also a major place for bias to creep in: if you aren’t careful about defining your problems, they will be defined in careless ways, which can impose real and large costs in worse solutions.

It’s easy to construct a thought experiment with contradictory premises, and not notice if you keep math and pictures out of it. Draw pictures, show the math. It makes normal problems easier, and helps you notice when a problem boils down to “could an unstoppable force move an immovable object?”,9 and then you can move on.

If you’re not quite sure which of several interpretations is true, you can model that explicitly. Put an uncertainty node at the top which points to the model where your behavior and Omega’s decision are independent and the model where your behavior determines Omega’s behavior. Elicit a probability, or calculate what the probability would need to be for you to make one decision and then compare that to how uncertain you are.
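As a rough sketch of the “what would the probability need to be” calculation, suppose the payoffs are the usual $1,000,000 and $1,000 and that value is linear in dollars. Both are assumptions; neither is stated in this post. The function and parameter names are illustrative.

```python
BIG, SMALL = 1_000_000, 1_000  # ASSUMED payoffs (the usual statement of the problem)

def expected_dollars(one_box, p_determined, p_full_if_independent):
    """Expected payoff when unsure which model of the problem is true."""
    # Model A: my behavior determines whether Omega filled the big box.
    determined = BIG if one_box else SMALL
    # Model B: the big box is full with a fixed probability, whatever I do.
    independent = p_full_if_independent * BIG + (0 if one_box else SMALL)
    return p_determined * determined + (1 - p_determined) * independent

def break_even_p_determined():
    """P(model A) at which one-boxing and two-boxing have equal value.

    One-boxing gains BIG - SMALL under model A and loses SMALL under
    model B, so p*(BIG - SMALL) = (1 - p)*SMALL, giving p = SMALL / BIG.
    """
    return SMALL / BIG

print(break_even_p_determined())
```

Under these assumptions the break-even probability does not depend on how likely the box is to be full in the independent model, and it is small: assigning even a 1% chance to the “my behavior determines Omega’s behavior” model is enough to favor one-boxing.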

1. This means that there are a bunch of nodes connected by arrows (directed edges), and that there are no cycles (arrows that point in a loop). As a consequence, there are some nodes with no arrows pointing to them and one node with no arrows leaving it (the value node). It’s also worth mentioning that influence diagrams are a special case of Bayesian Networks.

2. This is the actual prediction for me as of 1:30 PM on 12/14/2011. Link here, though that link should become worthless by tomorrow. Also note that I’m assuming that rain will only happen after we start the game of Go / decide where to play, or that the switching costs will be large.

3. The actual percentage in the data they had collected by 2008 was ~53%, but as it was within the ‘well calibrated’ region I should use the number at face value.

4. If it started raining while we were playing outside, we would probably just stop and do something else rather than playing through the rain, but neither of those is an attractive prospect.

5. I strongly recommend satisficing, in the AI sense of including the costs of optimizing in your optimization process, rather than maximization. Opportunity costs are real. For a problem with, say, millions of dollars on the line, you’ll probably want to spend quite a bit of time trying to come up with other options.

6. You may have noticed a trend that the best and worst outcome are often paired together. This is more likely in constructed problems, but is a feature of many difficult real-world problems.

7. We can do something called sensitivity analysis to see how sensitive this result is; if it’s very sensitive to a value we elicited, we might go back and see if we can narrow the uncertainty on that value. If it’s not very sensitive, then we don’t need to worry much about those uncertainties.

8. This is an artificial constraint- really, you could change any of those policies any day, or even at any time during that day. It’s often helpful to chunk continuous periods into a few discrete ones, but only to the degree that your bins carve reality at the joints.

9. The unstoppable force being Omega’s prediction ability, and the immovable object being causality only propagating forward in time. Two-boxers answer “immovable object,” one-boxers answer “unstoppable force.”

I’m confused by what you mean here about Newcomb’s problem, if you say that two-boxers and one-boxers are both failing to dissolve the question. Do you mean that one particular argument for two-boxing and one particular argument for one-boxing are failures to dissolve the question (but that one or the other decision is still valid)? Or do you mean that Newcomb’s Problem is an impossible situation which doesn’t approximate anything we might really face, and therefore we should stop worrying about it and move on? Or do you mean something different entirely?

This is my personal opinion, though I do not expect everyone to agree. I would go further and say that its connection to real situations is too tenuous to be valuable- many impossible scenarios are worth thinking about because they closely approximate real scenarios. The previous sentence- that any one explanation of why two-boxing or one-boxing is correct fails to understand why it’s a problem- is something I expect everyone should agree with.

Well, then we have a bigger disagreement at stake. Also:

You switched the quantifier on my question, and your version is much stronger than anything I’d agree to, much less expect to be uncontroversial.

Good post, upvoted for giving practical advice backed by deep theories. It did raise a few questions, but on reflection most are more meta-issues about LW that show up in this sequence than about the post itself. So, I think I’ll kick them over to their own discussion post. That said, I am curious whether you know of any large-scale studies about the results of people undergoing training in using decision theory. Edit: the discussion post is here.

What sort of metric would you want the study to measure? I know Chevron has been working on integrating this style of DA into their corporate culture for 20 years, and they seem to think it works. I’m less certain of how valuable it is, or what kinds of value it improves, for a person on the street, but am generally optimistic about these sorts of clear-thinking procedures.

For businesses, or divisions within the same business, the measures that are already used to measure success, especially those that are close to their terminal goals, would likely be sufficient. It would be pretty hard to come up with a good measure for individuals. I would say their level of improvement on goals they set for themselves, but I understand how that would be hard to use as the basis for a study. Perhaps happiness or money, as those are both very common major goals.

Voted up for the maths and clear exposition.