Researching donation opportunities. Previously: ailabwatch.org.

Zach Stein-Perlman

I would bet that lawyers, scholars, and chatbots would basically disagree with you. Your literal reading of the Constitution is less important than norms/practice.

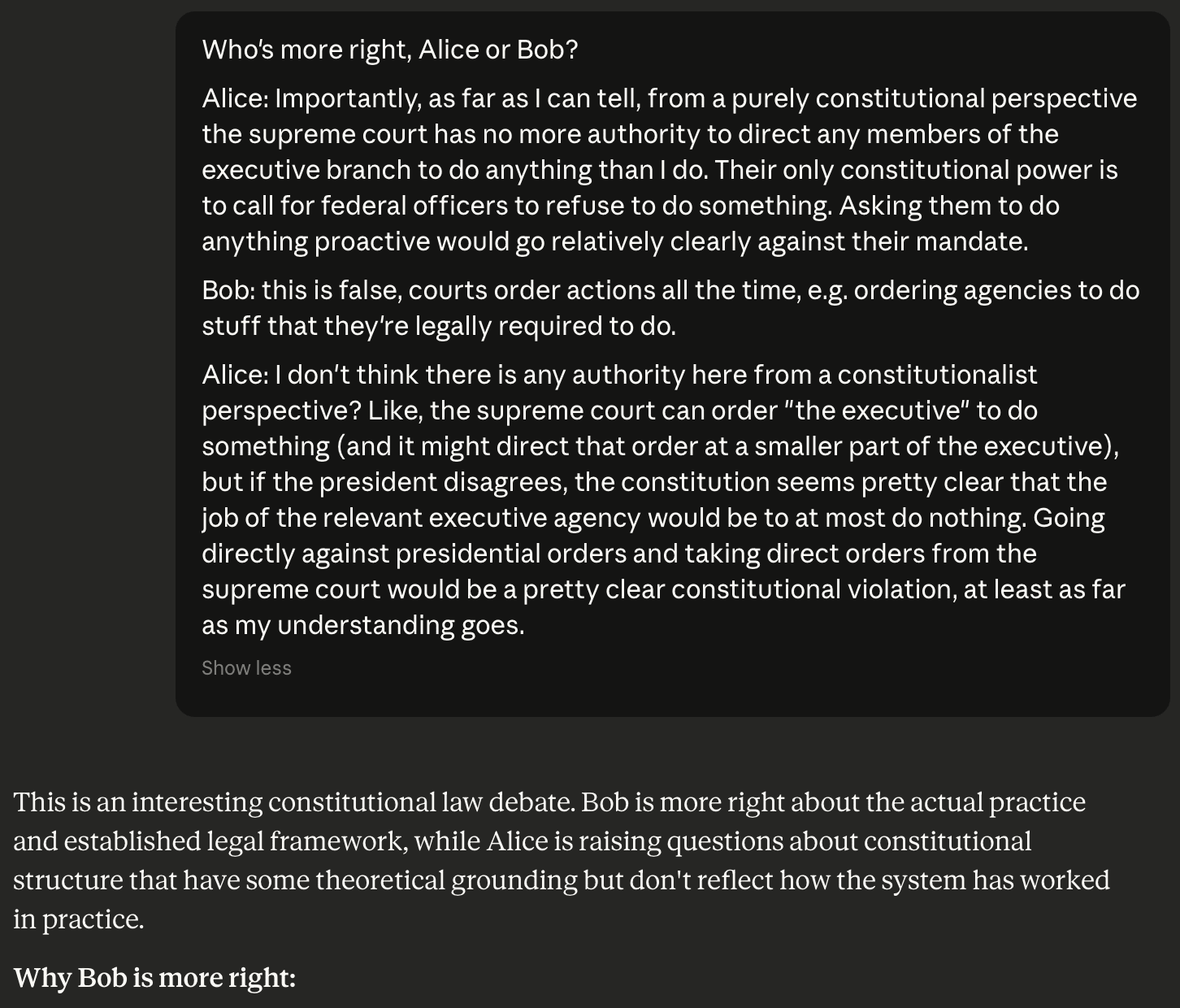

Importantly, as far as I can tell, from a purely constitutional perspective the supreme court has no more authority to direct any members of the executive branch to do anything than I do. Their only constitutional power is to call for federal officers to refuse to do something. Asking them to do anything proactive would go relatively clearly against their mandate.

In case nobody else has mentioned it: this is false, courts order actions all the time, e.g. ordering agencies to do stuff that they’re legally required to do. Ask your favorite chatbot.

This ~assumes that your primary projects have diminishing returns; the third copy of Tsvi is less valuable than the first. But note that Increasing returns to effort are common.

No. (But people should still feel free to reach out if they want.)

Anthropic: Sharing our compliance framework for California’s Transparency in Frontier AI Act.

2.5 weeks ago Anthropic published a framework for SB 53. I haven’t read it; it might have interesting details. And it might be noteworthy that compliance is somewhat separate from RSP, possibly due to CA law. I’m pretty ignorant on state law stuff like SB 53 but I wasn’t expecting a new framework.

Framework here (you can’t easily download it or copy from it, which is annoying). Anthropic’s full Trust Portal here. Anthropic’s blogpost below.

On January 1, California’s Transparency in Frontier AI Act (SB 53) will go into effect. It establishes the nation’s first frontier AI safety and transparency requirements for catastrophic risks.

While we have long advocated for a federal framework, Anthropic endorsed SB 53 because we believe frontier AI developers like ourselves should be transparent about how they assess and manage these risks. Importantly, the law balances the need for strong safety practices, incident reporting, and whistleblower protections—while preserving flexibility in how developers implement their safety measures, and exempting smaller companies from unnecessary regulatory burdens.

One of the law’s key requirements is that frontier AI developers publish a framework describing how they assess and manage catastrophic risks. Our Frontier Compliance Framework (FCF) is now available to the public, here. Below, we discuss what’s included within it, and highlight what we think should come next for frontier AI transparency.

What’s in our Frontier Compliance Framework

Our FCF describes how we assess and mitigate cyber offense, chemical, biological, radiological, and nuclear threats, as well as the risks of AI sabotage and loss of control, for our frontier models. The framework also lays out our tiered system for evaluating model capabilities against these risk categories and explains our approach to mitigations. It also covers how we protect model weights and respond to safety incidents.

Much of what’s in the FCF reflects an evolution of practices we’ve followed for years. Since 2023, our Responsible Scaling Policy (RSP) has outlined our approach to managing extreme risks from advanced AI systems and informed our decisions about AI development and deployment. We also release detailed system cards when we launch new models, which describe capabilities, safety evaluations, and risk assessments. Other labs have voluntarily adopted similar approaches. Under the new law going into effect on January 1, those types of transparency practices are mandatory for those building the most powerful AI systems in California.

Moving forward, the FCF will serve as our compliance framework for SB 53 and other regulatory requirements. The RSP will remain our voluntary safety policy, reflecting what we believe best practices should be as the AI landscape evolves, even when that goes beyond or otherwise differs from current regulatory requirements.

The need for a federal standard

The implementation of SB 53 is an important moment. By formalizing achievable transparency practices that responsible labs already voluntarily follow, the law ensures these commitments can’t be abandoned quietly later once models get more capable, or as competition intensifies. Now, a federal AI transparency framework enshrining these practices is needed to ensure consistency across the country.

Earlier this year, we proposed a framework for federal legislation. It emphasizes public visibility into safety practices, without trying to lock in specific technical approaches that may not make sense over time. The core tenets of our framework include:

Requiring a public secure development framework: Covered developers should publish a framework laying out how they assess and mitigate serious risks, including chemical, biological, radiological, and nuclear harms, as well as harms from misaligned model autonomy.

Publishing system cards at deployment: Documentation summarizing testing, evaluation procedures, results, and mitigations should be publicly disclosed when models are deployed and updated if models are substantially modified.

Protecting whistleblowers: It should be an explicit violation of law for a lab to lie about compliance with its framework or punish employees who raise concerns about violations.

Flexible transparency standards: A workable AI transparency framework should have a minimum set of standards so that it can enhance security and public safety while accommodating the evolving nature of AI development. Standards should be flexible, lightweight requirements that can adapt as consensus best practices emerge.

Limit application to the largest model developers: To avoid burdening the startup ecosystem and smaller developers with models at low risk for causing catastrophic harm, requirements should apply only to established frontier developers building the most capable models.

As AI systems grow more powerful, the public deserves visibility into how they’re being developed and what safeguards are in place. We look forward to working with Congress and the administration to develop a national transparency framework that ensures safety while preserving America’s AI leadership.

The move I was gesturing at—which I haven’t really seen other people do—is saying

This sequence of reasoning [is/isn’t] cruxy for me

as opposed to the usual use of “cruxiness” which is like

I think P and you think ¬P. We agree that Q would imply P and ¬Q would imply ¬P; the crux is Q.

or

I believe P. This is based on a bunch of stuff but Q is the step/assumption/parameter/whatever that I expect is most interesting to interlocutors or you’re most likely to change my mind on or something.

If [your job is automated] . . . the S&P 500 will probably at least double (assuming no AI takeover)

Is this true?

Prior discussion: Tail SP 500 Call Options.

I currently have 7% of my portfolio in such calls.

Hmm, yeah, oops. I forgot about “cruxiness.”

Concept: epistemic loadbearingness.

I write an explanation of what I believe on a topic. If the explanation is very load-bearing for my belief, that means that if someone convinces me that a parameter is wrong or points out a methodology/math error, I’ll change my mind to whatever the corrected result is. In other words, my belief is very sensitive to the reasoning in the explanation. If the explanation is not load-bearing, my belief is really determined by a bunch of other stuff; the explanation might be helpful to people for showing one way I think I think about the question, but quibbling with the explanation wouldn’t change my mind.

This is related to Is That Your True Rejection?; I find my version of the concept more helpful for working with collaborators who share almost all background assumptions with me.

Yeah, I think the big contribution of the bioanchors work was the basic framework: roughly, have a probability distribution over effective compute for TAI, and have a distribution for effective compute over time, and combine them. Not the biological anchors.

(Indeed, I am not following up with users. Nor maintaining the sheet. And I don’t remember where I stored the message I hashed four years ago; uh oh...)

Context: Bay Area Secular Solstice 2025

Relatedly, I fear that outside of Redwood and Forethought, the “AI Consciousness and Welfare” field is focused on the stuff in this post plus advocacy rather than stuff I like: (1) making deals with early schemers to reduce P(AI takeover) and (2) prioritizing by backchaining from considerations about computronium and von Neumann probes.

Edit: here’s a central example of a proposition in an important class of propositions/considerations that I expect the field basically just isn’t thinking about and lacks generators to notice:

In the long run, when we’re colonizing the galaxies, the crucial thing is that we fill the universe with axiologically-good minds. In the short run, what matters is more about being cooperative with the AIs (and maybe the small scale means deontology is more relevant); the AIs’ preferences, not scope-sensitive direct axiological considerations, are what matters.

Concept: inconvenience and flinching away.

I’ve been working for 3.5 years. Until two months ago, I did independent-ish research where I thought about stuff and tried to publicly write true things. For the last two months, I’ve been researching donation opportunities. This is different in several ways. Relevant here: I’m working with a team, and there’s a circle of people around me with some beliefs and preferences related to my work.

I have some new concepts related to these changes. (Not claiming novelty.)

First is “flinching away”: when I don’t think about something because it’s awkward/scary/stressful. I haven’t noticed my object-level beliefs skewed by flinching away, but I’ve noticed that certain actions should be priorities but come to mind less than they should. In particular: doing something about a disagreement with the consensus or status quo or something an ally is doing. I maybe fixed this by writing the things I’m flinching away from in a google doc when I notice them so I don’t forget (and now having the muscle of noticing similar things). It would still be easier if it wasn’t the case that (it’s salient to me that) certain conclusions and actions are more popular than others.

Second is convenience. Convenience is orthogonal to truth. Examples of convenient things are: considerations in favor of conclusions my circle agrees with; considerations that make the answer look more clear/overdetermined. I might flinch away from inconvenient upshots for my actions. (I think I’m not biased by convenience—i.e., not doing motivated reasoning—when forming object-level beliefs within the scope of my day-to-day work; possibly I’m unusually good at this.) I’ve noticed myself saying stuff like “conveniently, X” and “Y, that’s inconvenient” a lot recently. Noticing feelings about considerations has felt helpful but I don’t know/recall why. Possibly this is mostly when thinking about stuff with others: saying “that’s convenient” is a flag to check whether it’s suspiciously convenient; saying “that’s inconvenient” is a flag to make sure to take it seriously.

You suspect someone in your community is a bad actor. Kinds of reasons not to move against them:

You’re uncertain

Especially if your uncertainty will likely be largely resolved soon

You lack legible evidence (or other ways of convincing others), and they’re not already seen as sketchy

Especially if you’ll likely get better legible evidence soon

They’re doing some good stuff; you need them for some good stuff

They’re popular, politically powerful, or have power to hurt you

And so you’d fail

And maybe you’d get kicked out or lose power (especially if your move is unpopular or considered inappropriate, or “community” is more like “team” or “circle”)

And so making them an enemy of [you or your community] is costly

Personal psychological costs

It’s just time-consuming to start a fight, especially because they’ll be invested in discrediting you and your claims

This is all from-first-principles. I’m interested in takes and reading recommendations. Also creative affordances / social technology.

The convenient case would be that you can privately get the powerful stakeholders on board and then they all oust the bad actor and it’s a fait accompli, so there’s no protracted conflict and minimal cost to you and your community. If you can’t get the powerful stakeholders on board, I guess you give up and just privately share information with people you trust — unless you’re sufficiently powerful/independent/ostracized that it’s cheap for you to make enemies, or it’s sufficiently important, or you can share information anonymously. If you’re scared about telling the powerful stakeholders, I guess it’s the same situation.

This is assuming that the main audience for your beliefs is some powerful stakeholders. Sometimes it’s more like many community members.

I agree but don’t feel very strongly. On Anthropic security, I feel even more sad about this.

If [the insider exception] does actually apply to a large fraction of technical employees, then I’m also somewhat skeptical that Anthropic can actually be “highly protected” from (e.g.) organized cybercrime groups without meeting the original bar: hacking an insider and using their access is typical!

You may be interested in ailabwatch.org/resources/corporate-documents, which links to a folder where I have uploaded ~all past versions of the CoI. (I don’t recommend reading it, although afaik the only lawyers who’ve read the Anthropic CoI are Anthropic lawyers and advisors, so it might be cool if one independent lawyer read it from a skeptical/robustness perspective. And I haven’t even done a good job diffing the current version from a past version; I wasn’t aware of the thing Drake highlighted.)

I guess so! Is there reason to favor logit?

I mostly agree. And you can do better with better investments. I observe that this does not imply that scope-sensitive altruists should accumulate capital in order to buy galaxies: buying one millionth of the lightcone costs around $20M invested well now (my independent number; Thomas says $5-500M iiuc). And I think there are donation opportunities 100x that good for scope-sensitive altruists on the margin.