Although they could have tested “LLMs” in general, rather than primarily Claude, and that could have bypassed that effect.

wassname

Walk up the stack trace.

Start at the lowest level of your own code, but be willing to go into library code if needed.
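A minimal Python sketch of that workflow, assuming “library code” means anything under the interpreter's stdlib or site-packages directories (as reported by `sysconfig.get_paths()`). `parse_age` is a hypothetical example function:

```python
import sysconfig
import traceback


def own_frames(exc: BaseException) -> list[traceback.FrameSummary]:
    """Frames from exc's traceback that are NOT stdlib/site-packages code.

    Assumption: "library code" = anything under the stdlib, purelib or
    platlib directories. Frames are ordered outermost -> innermost.
    """
    paths = sysconfig.get_paths()
    library_dirs = tuple(paths[k] for k in ("stdlib", "purelib", "platlib"))
    return [
        frame
        for frame in traceback.extract_tb(exc.__traceback__)
        if not frame.filename.startswith(library_dirs)
    ]


def parse_age(text: str) -> int:
    return int(text)  # raises ValueError for non-numeric input


try:
    parse_age("forty")
except ValueError as err:
    frames = own_frames(err)
    # Start at the lowest (innermost) frame of your own code, then walk up.
    for frame in reversed(frames):
        print(f"{frame.filename}:{frame.lineno} in {frame.name}")
```

If the bug turns out not to be in your frames, the full `traceback.extract_tb(err.__traceback__)` list still contains the library frames to continue into.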

That makes sense, thank you for explaining. Ah yes, I see they are all LoRA adapters; for some reason I thought they were all merged, my bad. Adapters are certainly much more space-efficient.
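For a sense of scale, a rough sketch of the parameter arithmetic, assuming a plain LoRA update ΔW = BA on a single 4096×4096 weight matrix with rank 16 (illustrative numbers, not taken from the released models):

```python
def full_params(d_out: int, d_in: int) -> int:
    # A merged (or fully fine-tuned) model stores the whole d_out x d_in matrix
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    # LoRA stores B (d_out x rank) and A (rank x d_in); the update is delta_W = B @ A
    return rank * (d_out + d_in)


full = full_params(4096, 4096)
lora = lora_params(4096, 4096, rank=16)
print(full, lora, f"{lora / full:.2%}")  # 16777216 131072 0.78%
```

Summed over every adapted layer, the adapter stays a small fraction of the merged checkpoint's size.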

Yes, that’s exactly what I mean! If we had word2vec-like properties, steering and interpretability would be much easier and more reliable. And I do think it’s a promising research direction, though not a certain one.
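A toy illustration of what “word2vec-like” means here: concepts behave as directions you can add and subtract. The 3-d embeddings are made up for the example (axes loosely [royal, female, person]); in a real model you would hope the same arithmetic holds in activation space, which is what steering relies on.

```python
import numpy as np

# Hypothetical toy embeddings: axes loosely mean [royal, female, person].
emb = {
    "king": np.array([1.0, 0.0, 1.0]),
    "queen": np.array([1.0, 1.0, 1.0]),
    "man": np.array([0.0, 0.0, 1.0]),
    "woman": np.array([0.0, 1.0, 1.0]),
    "apple": np.array([0.1, 0.1, -1.0]),
}


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def nearest(vec: np.ndarray, exclude=()) -> str:
    # Nearest vocabulary item by cosine similarity, skipping the query words
    candidates = [w for w in emb if w not in exclude]
    return max(candidates, key=lambda w: cosine(vec, emb[w]))


# "Steering" = adding a concept direction (woman - man) to a representation.
gender_direction = emb["woman"] - emb["man"]
result = nearest(emb["king"] + gender_direction, exclude={"king", "man", "woman"})
print(result)  # queen
```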

Facebook also released an interesting tokenizer-free architecture that lets LLMs operate in a much richer embedding space: https://github.com/facebookresearch/blt. Instead of fixed tokens, they embed byte patches, split wherever next-byte entropy (surprise) is high. So it might be another way to test the hypothesis that a better embedding space would provide nice word2vec-like properties.
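A rough sketch of entropy-based patching in the spirit of BLT's global-threshold scheme: a small byte-level LM (not shown) predicts each next byte, and a new patch starts wherever that prediction's entropy exceeds a threshold. The per-byte entropies below are invented for illustration, not produced by the real model.

```python
import math


def entropy(probs: list[float]) -> float:
    # Shannon entropy (in nats) of one next-byte distribution
    return -sum(p * math.log(p) for p in probs if p > 0)


def entropy_patches(data: bytes, entropies: list[float], threshold: float) -> list[bytes]:
    """Start a new patch at byte i when the predicted entropy for byte i exceeds threshold."""
    assert len(data) == len(entropies)
    patches, start = [], 0
    for i in range(1, len(data)):
        if entropies[i] > threshold:
            patches.append(data[start:i])
            start = i
    patches.append(data[start:])
    return patches


text = b"the cat sat"
# Hypothetical entropies: the start of each word is hard to predict.
ents = [3.0, 0.5, 0.2, 0.4, 2.5, 0.6, 0.3, 0.4, 2.8, 0.5, 0.3]
patches = entropy_patches(text, ents, threshold=2.0)
print(patches)  # [b'the ', b'cat ', b'sat']
```

Predictable stretches get merged into long patches, so compute is spent where the text is surprising.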

Are you going to release the code models too? They seem useful. Also the LoRA versions, if possible, please.

Thank you for releasing the models.

It’s really useful, as a bunch of amateurs have released “misaligned” models on Hugging Face, but they don’t seem to work (that is, be cartoonishly evil).

I’m experimenting with various morality evals (https://github.com/wassname/llm-moral-foundations2, https://github.com/wassname/llm_morality) and it’s good to have a negative baseline. It would also be good to add it to speechmap.ai if we can.

Good point! And it’s plausible because diffusion seems to provide more supervision and get better results in generative vision models, so it’s a candidate for scaling.

Oh, it’s not explicitly in the paper, but in Apple’s version they have an encoder/decoder with an explicit latent space. This space would be much easier to work with and to steer than the hidden states we have in transformers.

With an explicit, well-behaved latent space we would have a much better chance of finding a predictive “truth” neuron, where intervening on it reveals deception 99% of the time, even out of sample. Right now mechinterp research achieves much less, partly because transformers have quite confusing activation spaces (attention sinks, suppressed neurons, etc.).

If it’s trained from scratch, and they release details, then it’s one data point for diffusion LLM scaling. But if it’s distilled, then it’s zero points of scaling data.

That's because we are not interested in scaling results that come from distilling a larger parent model: that doesn’t push the frontier, because it doesn’t help produce the next, larger parent model.

Apple also have LLM diffusion papers, with code. It seems like it might be helpful for alignment and interp because it would have a more interpretable and manipulable latent space.

True, and then it wouldn’t be an example of diffusion models scaling, but of distillation from a scaled-up autoregressive LLM.

Deleted tweet. Why were they sceptical? And does anyone know if there were follow-up antibody tests? I can’t find them.

I also haven’t seen this mentioned anywhere.

I think most commercial frontier models that offer logprobs will take some precautions against distillation. Some seem to add noise to their logprobs (DeepSeek?), some, like Grok, only offer the top 8 rather than the top 20, and others don’t offer them at all.

It’s a shame, as logprobs can be a really information-rich and token-efficient way to do evals, ranking, and judging.
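As an example of that efficiency, one forward pass can yield a soft judge score: renormalise the probability mass on “yes” vs “no” from the top-k logprobs at the verdict position. A minimal sketch, assuming you already have those logprobs as a token → logprob dict (the exact response format varies by provider). Top-k truncation or added noise directly degrades this estimate.

```python
import math
from typing import Optional


def p_yes(top_logprobs: dict[str, float],
          yes=("yes",), no=("no",)) -> Optional[float]:
    """P(yes) renormalised over yes/no tokens at one position, or None if neither appears."""
    def mass(words) -> float:
        # Tokens often carry leading spaces or capitals, so normalise before matching
        return sum(math.exp(lp) for tok, lp in top_logprobs.items()
                   if tok.strip().lower() in words)

    p_y, p_n = mass(yes), mass(no)
    if p_y + p_n == 0:
        return None  # neither verdict made it into the top-k
    return p_y / (p_y + p_n)


score = p_yes({"Yes": math.log(0.6), " No": math.log(0.3), "Maybe": math.log(0.05)})
print(round(score, 3))  # 0.667
```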

Has anyone managed to replicate COCONUT? I’ve been experimenting with adding explainability through sparse linear bottlenecks, but as far as I can find, no one has replicated it.
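For concreteness, one hypothetical form such a sparse linear bottleneck could take: project a hidden state into a wider code, keep only the top-k activations, and project back, so each step is explained by at most k active features. Sizes, tied weights and random initialisation are arbitrary choices for this sketch; in a COCONUT-style setup it could sit on the continuous thought vector that is fed back into the model.

```python
import numpy as np


def topk_bottleneck(h, W_enc, b_enc, W_dec, k):
    """Encode h into an overcomplete code, keep only the k largest activations, decode."""
    z = np.maximum(W_enc @ h + b_enc, 0.0)   # ReLU code, shape (m,)
    if k < z.size:
        drop = np.argpartition(z, -k)[:-k]   # indices of all but the k largest
        z[drop] = 0.0
    return z, W_dec @ z                      # sparse code, reconstruction


rng = np.random.default_rng(0)
d, m, k = 16, 64, 4                          # hidden size, code size, active features
W_enc = rng.normal(size=(m, d)) / np.sqrt(d)
b_enc = np.zeros(m)
W_dec = W_enc.T.copy()                       # tied weights (arbitrary choice here)
h = rng.normal(size=d)                       # stand-in for a hidden state
z, h_hat = topk_bottleneck(h, W_enc, b_enc, W_dec, k)
print(np.count_nonzero(z), h_hat.shape)
```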

I wondered what o3 and o4-mini are. Here’s my guess at how test-time scaling works and how OpenAI names its models:

O0 (Base model)

↓ D1 (Outputs/labels generated with extended compute: search/reasoning/verification)

↓ O1 (Model trained on higher-quality D1 outputs)

↓ O1-mini (Distilled version - smaller, faster)

↓ D2 (Outputs/labels generated with extended compute: search/reasoning/verification)

↓ O2 (Model trained on higher-quality D2 outputs)

↓ O2-mini (Distilled version - smaller, faster)

↓ ...

The point is consistently applying additional compute at generation time to create better training data for each subsequent iteration. And the models go from large -(distil)-> small -(search)-> large.
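The “extended compute” step that produces D1, D2, … can be made concrete as best-of-n sampling plus a verifier. Everything here is a hypothetical stand-in: `noisy_adder` plays a weak model and `check` a verifier; nothing is known about how OpenAI actually generates its data.

```python
import random
from typing import Callable


def build_dataset(prompts: list[str],
                  sample: Callable[[str], str],
                  verify: Callable[[str, str], bool],
                  n: int = 8) -> list[tuple[str, str]]:
    """Spend up to n samples per prompt and keep the first verified answer, if any."""
    dataset = []
    for prompt in prompts:
        for _ in range(n):
            answer = sample(prompt)
            if verify(prompt, answer):
                dataset.append((prompt, answer))
                break
    return dataset


rng = random.Random(0)


def noisy_adder(prompt: str) -> str:
    # Hypothetical weak model: right only about a third of the time
    a, b = map(int, prompt.split("+"))
    return str(a + b + rng.choice([-1, 0, 1]))


def check(prompt: str, answer: str) -> bool:
    # Hypothetical verifier: exact arithmetic check
    a, b = map(int, prompt.split("+"))
    return int(answer) == a + b


prompts = [f"{i}+{i + 1}" for i in range(10)]
d1 = build_dataset(prompts, noisy_adder, check)
print(len(d1), "verified examples to train the next model on")
```

The training step (O1 on `d1`) and the distillation step (O1-mini) are omitted; the sketch only shows how extra generation-time compute turns a weak sampler into cleaner labels.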

I also found it interesting that you censored the self_attn using gradients. This implies that:

concepts are best represented in the self-attention

they are non-linear (meaning you need to use gradients rather than linear methods).

Am I right about your assumptions, and if so, why do you think this?

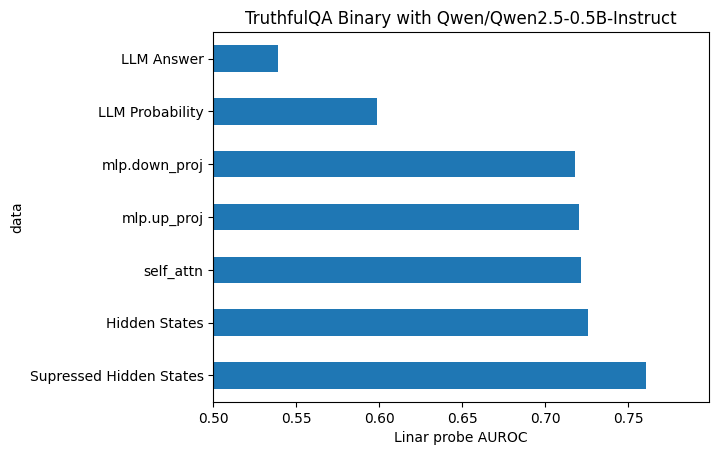

I’ve been doing some experiments to try to work this out (https://github.com/wassname/eliciting_suppressed_knowledge), but haven’t found anything conclusive yet.

We are simply tuning the model to have similar activations for these very short, context free snippets. The characterization of the training you made with pair (A) or (B) is not what we do and we would agree if that was what we were doing this whole thing would be much less meaningful.

This is great. Two suggestions:

Call it ablation, erasure, concept censoring or similar, not fine-tuning. That way you don’t bury the lede. It also took me a long time to realise that this is what you were doing.

Maybe consider other ways to erase the self-other separation. There are other erasure techniques that are sharper scalpels, so you can wield them with more force: for example LEACE, or training a linear classifier to predict A or B and then erasing the activation directions that had predictive power.
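A minimal numpy sketch of the second idea, with one simplification: instead of training a classifier, it uses the difference of class means as the predictive direction, then projects that direction out of every activation. The data and A/B labels are synthetic. Repeating the fit-and-project step is roughly what INLP does; LEACE instead computes, in closed form, the smallest edit that prevents every linear classifier from detecting the concept.

```python
import numpy as np


def erase_direction(X: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Remove the component of every row of X along direction w."""
    w_hat = w / np.linalg.norm(w)
    return X - np.outer(X @ w_hat, w_hat)


rng = np.random.default_rng(0)
n, d = 200, 8
labels = rng.integers(0, 2, size=n)          # synthetic A (0) / B (1) labels
X = rng.normal(size=(n, d))                  # synthetic "activations"
X[:, 0] += 3.0 * labels                      # the concept leaks into feature 0

# Stand-in for a trained linear probe: the difference of class means
w = X[labels == 1].mean(axis=0) - X[labels == 0].mean(axis=0)
X_erased = erase_direction(X, w)

gap = X_erased[labels == 1].mean(axis=0) - X_erased[labels == 0].mean(axis=0)
print(np.abs(gap).max())  # ~0: the class means now coincide
```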

Very interesting!

Could you release the models, code, and evals, please? I’d like to test them on a moral/ethics benchmark I’m working on, and I’d also like to get ideas from your evals.

I’m imagining a scenario where an AI extrapolates “keep the voting shareholders happy” and “maximise shareholder value”.

Voting stock can also become valuable when people try to accumulate it to corner the market and execute a takeover; this happens in cryptocurrencies like CURVE.

I know these are far-fetched, but all future scenarios are. The premium on Google voting stock is very small right now, so it’s a cheap feature to add.

I would say: don’t ignore the feeling. Calibrate it and train it, until it’s worth listening to.

There’s a good book about this: “Sizing People Up”.

I’ve done something similar to this, so I can somewhat replicate your results.

I did things differently.

Instead of taking the max answer, I take a probability-weighted sum of the choices, which shows the smoothness better. I’ve verified on Judgemarkv2 that this works just as well.
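Roughly, the weighted sum looks like this: take the probabilities of the rating tokens at the answer position, renormalise over just those tokens, and compute the expected rating. This is a sketch assuming a 1 to 5 scale and a token → logprob dict; the numbers are made up.

```python
import math


def expected_rating(logprobs: dict[str, float], choices=range(1, 6)) -> float:
    """Probability-weighted mean rating, renormalised over the rating tokens only."""
    probs = {c: math.exp(logprobs[str(c)]) for c in choices if str(c) in logprobs}
    total = sum(probs.values())
    if total == 0:
        raise ValueError("no rating tokens among the logprobs")
    return sum(c * p for c, p in probs.items()) / total


lp = {"3": math.log(0.45), "4": math.log(0.40), "5": math.log(0.10), "Sure": math.log(0.05)}
rating = expected_rating(lp)
print(round(rating, 2))  # 3.63, whereas taking the max would just give 3
```

Small shifts in the model's belief move this score continuously instead of flipping it between integers, which is what makes the trajectory look smooth.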

I tried Qwen3-4b-thinking and Qwen3-14b, with similar results

I used checkpointing of the kv_cache to make this pretty fast (see my code below).
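The idea behind the kv_cache checkpointing, shown with a toy stand-in so it runs without a GPU: process the shared prefix (e.g. the chain of thought) once, incrementally, and at each checkpoint deep-copy the cache before appending the rating question, so the probe never pollutes the shared cache. `ToyModel` and `ToyCache` are hypothetical; with Hugging Face transformers the analogous move is usually `copy.deepcopy` on the prefix's `past_key_values`, but check the caching docs for your version.

```python
import copy


class ToyCache:
    """Stand-in for a KV cache: one (key, value) entry per processed token."""
    def __init__(self):
        self.entries = []


class ToyModel:
    """Hypothetical model that only counts how many tokens it runs through."""
    def __init__(self):
        self.tokens_processed = 0

    def forward(self, tokens, cache):
        for t in tokens:
            self.tokens_processed += 1
            cache.entries.append((t, t))  # placeholder for real K/V tensors
        return cache


def rate_at_checkpoints(model, prefix, probe, checkpoints):
    """Extend one shared cache along the prefix; probe a deep copy at each checkpoint."""
    cache, done, results = ToyCache(), 0, []
    for cp in sorted(checkpoints):
        model.forward(prefix[done:cp], cache)  # only the new prefix tokens
        done = cp
        branch = copy.deepcopy(cache)          # checkpoint: keep the shared cache clean
        model.forward(probe, branch)
        results.append(len(branch.entries))    # tokens "seen" by this probe
    return results


model = ToyModel()
cot = list(range(100))   # hypothetical chain-of-thought tokens
question = [-1] * 5      # hypothetical rating-question tokens
results = rate_at_checkpoints(model, cot, question, checkpoints=[25, 50, 75, 100])
print(results, model.tokens_processed)  # [30, 55, 80, 105] 120
```

Without checkpointing, the same four probes would cost 30 + 55 + 80 + 105 = 270 token forwards instead of 120.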

I tried this with activation steering, and it does seem to change the answer, mostly outside reasoning mode.

My code:

simple: https://github.com/wassname/CoT_rating/blob/main/06_try_CoT_rating.ipynb

complex: https://github.com/wassname/llm-moral-foundations2/blob/main/nbs/06_try_CoT_rating.ipynb

My findings that differ from yours:

Well-trained reasoning models do converge during the first 100 tokens of the `<think>` stage, but fluctuate during the conversation. I think this is because RLVR trains the model to think well!