LessWrong Team

I have signed no contracts or agreements whose existence I cannot mention.


Added!

Added!


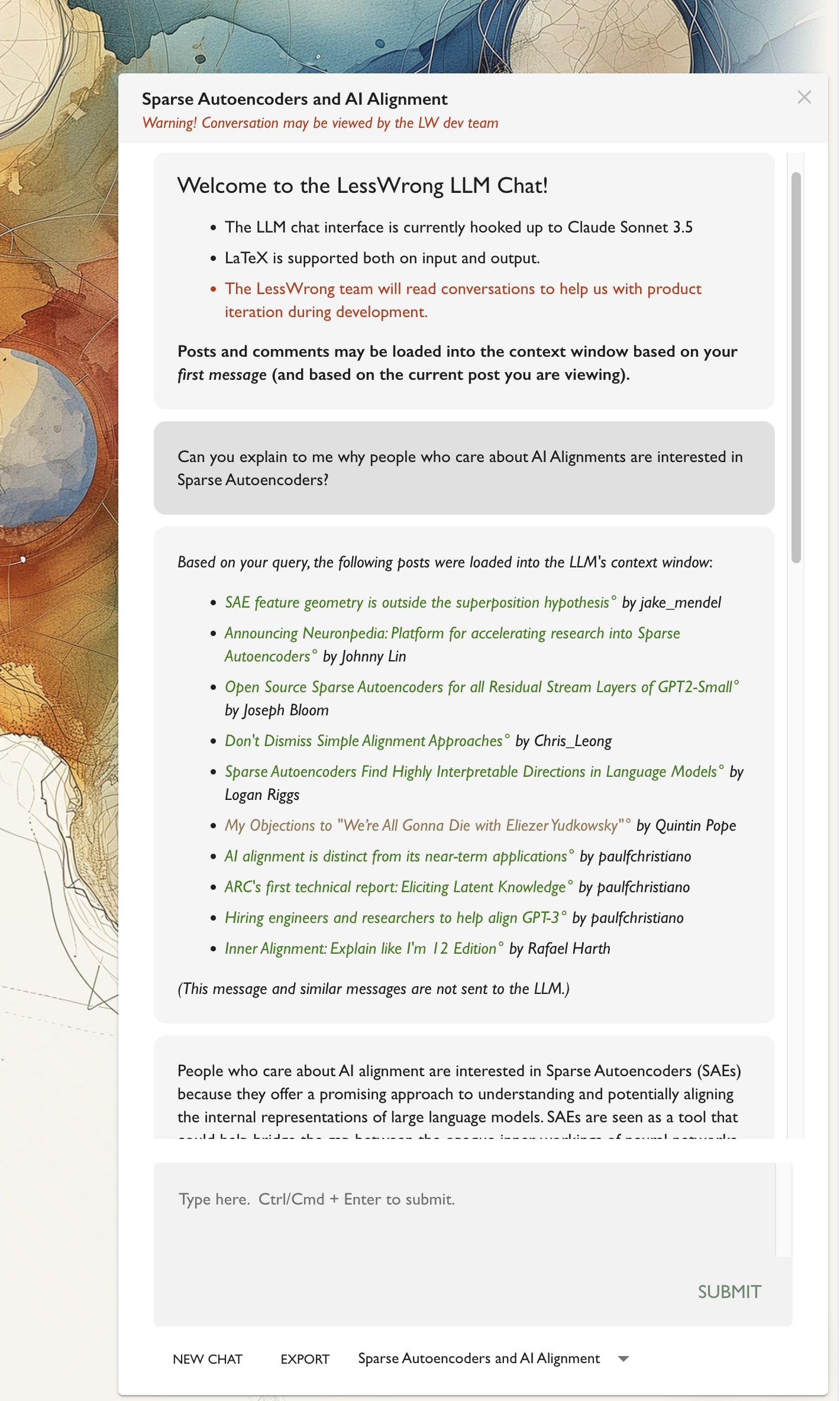

A couple of months ago, a few of the LW team set out to see how LLMs might be useful in the context of LW. It feels like they should be useful at some point before the end; maybe that point is now. My own attempts to get Claude to be helpful for writing tasks weren’t particularly successful, but LLMs are pretty good at reading a lot of things quickly, and they can also be good at explaining technical topics.

So I figured that just making it easy to load a lot of relevant LessWrong context into an LLM might unlock several worthwhile use-cases. To that end, Robert and I have integrated a Claude chat window into LW, with the key feature that it will automatically pull in LessWrong posts and comments relevant to what you’re asking about.

I’m currently seeking beta users.

Since using the Claude API isn’t free and we haven’t figured out a payment model, we’re not rolling it out broadly. But we are happy to turn it on for select users who want to try it out.

Comment here if you’d like access. (Bonus points for describing ways you’d like to use it.)

Mandelbrot’s work on phone line errors is more upstream than downstream of fractals, but produced legible economic value by demonstrating that phone companies couldn’t solve errors via their existing path of more and more powerful phone lines. Instead, they needed redundancy to compensate for the errors that would inevitably occur. Again I feel like it doesn’t take a specific mathematical theory to consider redundancy as a solution, but that may be because I grew up in a post-fractal world where the idea was in the water supply. And then I learned the details of TCP/IP where redundancy is baked in.

Huh, I thought all of this was covered by Shannon information theory already.

I think if you find the true name of true love, it’ll be pretty clear what it is and how to avoid it.

I’m curious how much you’re using this and whether it’s turning out to be useful on LessWrong. I’m interested because we’ve been thinking about integrating LLM features like this into LW itself.

I’d ask in the Open Thread rather than here. I don’t know of a canonical answer, but it would be good if someone wrote one.

Update: I’ve lifted your rate limit since it wasn’t an automatically applied one and its duration (12 months) seemed excessive.

1) Folders OR sort by tag for bookmarks.

I would like that a lot personally. Unfortunately bookmarks don’t get enough general use for us to prioritize that work.

2) When I close the hamburger menu on the frontpage, I don’t see a need for the blogs to not be centred. It’s unusual; it might make more sense if there were a way to double-stack it side by side like Mastodon.

I believe this change was made for the occasions when there is neat art to display on the right side. It might also allow more room for a chat LLM integration we’re currently experimenting with.

3) Not currently I’m afraid. I think this would make sense but is competing with all the other things to do.

(Unrelated: can I get deratelimited lol or will I have to make quality Blogs for that to happen?)

Quality contributions or, for many of the automatic rate limits, enough time passing.

I think there are two possibilities:

The community norms are orthogonal or opposed to figuring out what’s right, in which case it’s unclear why you’d want to engage with this community. Perhaps you altruistically want to improve people’s beliefs, but if so, disregarding the norms and culture is a good way to be ignored (or banned), since the people bought into the culture think the norms are important for getting things right, and ignoring them makes your submission less likely to be worth engaging with.

The culture and norms in fact successfully get at things which are important for getting things right, and in disregarding them, you’re actually much less likely to figure out what’s true. People are justified in ignoring and downvoting you if you don’t stick to them.

It’s also possible that there’s more than one set of truth-seeking norms, but that doesn’t mean it’s easy to communicate across them. So it’s better to say, “Over here, we operate by X; if you want to participate, please follow X norms.” And I think that’s legit.

Of course, this is very abstract and it’s possible you have examples I’d agree with.

Curated. My first (and planned to be only) child was born three months ago, so I’ve answered the question of “whether to have kids”. How to raise a child in the current world remains a rather open question, and I appreciate this post for broaching the topic.

I think there’s a range of open questions here, and it would be neat to see further work on them. Big topics are how to ensure a child’s psychological wellbeing if you’re honest with them that you think imminent extinction is likely, and how to maintain productivity and ambitious goals with kids.

An element that’s taken me by surprise is the psychological effect of feeling a very strong desire to give my child a good world to live in, while feeling I’m not capable of ensuring that. Yes, I can make efforts, but it’s hard feeling I can’t give her the world I wish I could. That’s a psychological weight I didn’t anticipate.

Pretty solid evidence.

My sense of the plan in the pre-pivotal-act era was “figure out the theory of how to build a safe AI at all, and try to get whoever is building it to adopt that approach”, and that MIRI wasn’t taking any steps to be the ones building it. I also had the model that, due to the psychological unity of mankind, anyone building an aligned [with them] AGI was a good outcome compared to someone building an unaligned one. Even if it was Xi Jinping, a sovereign aligned with him would be okay (and not obviously that dramatically different from one aligned with anyone else?). I’m not sure how much of this was MIRI positions vs. fragments I combined in my own head that came from assorted places and were never policy.

I don’t remember exactly what I thought in 2012 when I was reading the Sequences. I do recall that sometime later, after DL was in full swing, it seemed like MIRI wasn’t in any position to be building AGI before others (no compute, not the engineering prowess), and someone (not necessarily at MIRI) confirmed that wasn’t the plan. Now, as at the time, I don’t know how much that was principle vs. ability.

I would be surprised if a Friendly AI resulted in those things being left untouched.

I think that is germane but maybe needed some bridging work, since this thread so far was about MIRI-as-having-pivotal-act-goal. While I was less sure than Habryka about whether MIRI itself would enact a pivotal act if they could, my understanding was that they had no plan to create a sovereign for most of their history (i.e., after 2004), so that doesn’t seem like a candidate for them having a plan to take over the world.

I’m confused about your question. I am not imagining that the pivotal act AI (the AI under discussion) does any of those things.

Hi Sohang,

The title of the post in the email is a link to the specific post. I’m afraid it’s not in green or styled in any other way to indicate it’s a link, though. That’s something we should maybe fix.

Interesting to consider it a failure mode. Maybe it is, or at least somewhat.

I’ve got another post on eigening in the works; I think it might provide clearer terminology for talking about this, if you have time to read it.

@Chris_Leong @Jozdien @Seth Herd @the gears to ascension @ProgramCrafter

You’ve all been granted access to the LW integrated LLM Chat prototype. Cheers!