Email me at assadiguive@gmail.com if you want to discuss anything I posted here or just chat.

Guive

You aren’t directly paying more money for it pro rata, as you would if you were using the API, but you’re getting fewer queries: longer conversations hit the rate limit sooner, because the cost of LLM inference is O(n) in the length of the context.

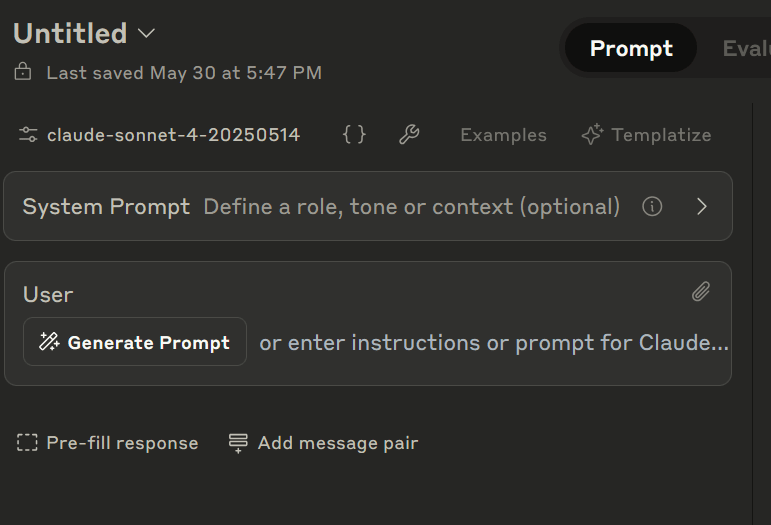

If you use LLMs via API, put your system prompt into the context window.

At least for the Anthropic API, this is not correct. There is a specific field for a system prompt on console.anthropic.com.

And if you use the SDK, the function that queries the model takes “system” as an optional input.
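To make that concrete, here is a minimal sketch of a request body where the system prompt sits in its own top-level "system" field rather than inside the message list. The helper name `build_messages_request` and the model placeholder are my own inventions for illustration, and the field names reflect my understanding of the Anthropic Messages API, so check the current docs before relying on them.

```python
import json


def build_messages_request(system_prompt, user_text,
                           model="MODEL_NAME_PLACEHOLDER", max_tokens=512):
    """Build a request body with the system prompt in a separate field.

    Hypothetical helper: the model name is a placeholder, not a real model ID.
    """
    body = {
        "model": model,
        "max_tokens": max_tokens,
        # Only the conversation turns go here; no "system" role message.
        "messages": [{"role": "user", "content": user_text}],
    }
    # "system" is optional, so leave the key out when no prompt is given.
    if system_prompt:
        body["system"] = system_prompt
    return body


if __name__ == "__main__":
    request = build_messages_request(
        "You are a terse assistant.",
        "Summarize this thread in one sentence.",
    )
    print(json.dumps(request, indent=2))
    # With the Python SDK installed and an API key set, I believe this body
    # could be sent with: anthropic.Anthropic().messages.create(**request)
```

The point of the sketch is just that the system prompt and the conversation are separate inputs, so you do not need to paste the prompt into the first user message.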

Querying Claude via the API has the advantage that the system prompt is customizable, whereas claude.ai queries always include a lengthy Anthropic-provided prompt, in addition to any personal instructions you might write for the model.

Putting all that aside, I agree with what I take to be the general point of your post, which is that people should put more effort into writing good prompts.

Didn’t you have a post where you argued that it’s a consequence of their view that biological aliens are better, morally speaking, than artificial Earth-originating life, or did I misunderstand?

That link seems to be broken. ETA: now fixed by Thomas.

It seems everyone has this problem with your writing

Just to offer my two cents, I do not have this problem and I think Matthew is extremely clear.

So maybe the general explanation is that most of the time, when the trustworthiness of an algorithm is really important, you open source it?

There are much better ways of betting on your beliefs about the valuations of AI firms over the next year than wagering with people you met on Less Wrong. See this post by Ege for more.

Yeah, good point. I changed it to “in America in 1850 it would have been taboo to say that there is nothing wrong with interracial relationships.”

I just added a footnote with this text: “I selected examples to highlight in the post that I thought were less likely to lead to distracting object-level debates. People can see the full range of responses that this prompt tends to elicit by testing it for themselves.”

Notably, he was wrong about that.

I agree the post is making some assumptions about moral progress. I didn’t argue for them because I wanted to control scope. If it helps, you can read it as conditional, i.e. “If there is such a thing as moral progress then it can require intellectual progress...”

Regarding the last question: yes, I selected examples to highlight in the post that I thought were less likely to lead to distracting object-level debates. I thought that doing that would help to keep the focus on testing LLM moral reasoning. However, I certainly didn’t let my own feelings about odiousness affect scoring on the back end. People can see the full range of responses that this prompt tends to elicit by testing it for themselves.

I agree with your broader point, but it’s actually more than 10,000 people per year.

What does “bright eyed” mean in this context?

I agree this would be a good argument for short sentences in 2019, but does it still apply with modern LLMs?

When I click the link I see this:

I like this idea. There’s always endless controversy about quoting out of context. I can’t recall seeing any previous specific proposals to help people assess the relevance of context for themselves.

Thanks for doing this, guys. This import will make it easier to access some important history.

Some kind of payment for training data from applications like MSFT rewind does seem fair. I wonder if there will be a lot of growth in jobs where your main task is providing or annotating training data.

I think this approach is reasonable for things where failure is low stakes. But I really think it makes sense to be extremely conservative about who you start businesses with. Your ability to verify things is limited, and there may still be information in vibes even after updating on the results of all feasible efforts to verify someone’s trustworthiness.

Can you elaborate (or provide a link) on what it means to question someone’s basic frames?