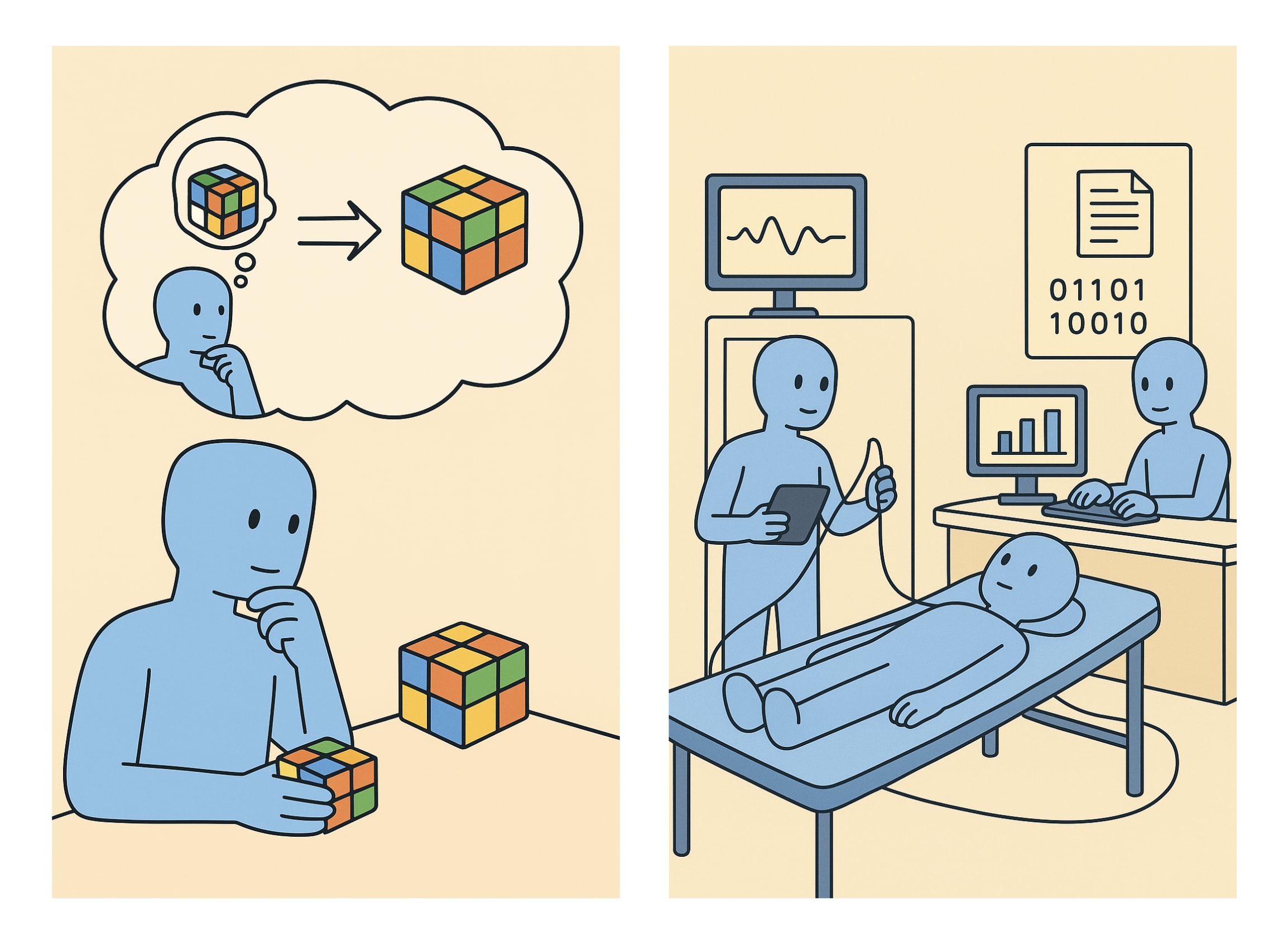

Introspective RSI vs Extrospective RSI

| Introspective-RSI | Extrospective-RSI |
|---|---|
| The meta-cognition and meso-cognition occur within the same entity. | The meta-cognition and meso-cognition occur in different entities. |
| Much like a human, an AI will observe, analyze, and modify its own cognitive processes. And this capacity is privileged: the AI can make self-observations and self-modifications that can’t be made from outside. | AIs will automate various R&D tasks that humans currently perform to improve AI, using similar workflows (studying prior literature, forming hypotheses, writing code, running experiments, analyzing data, drawing conclusions, publishing results). |

Here are some differences between them (I mark the most important with *):

*Monitoring opportunities: During E-RSI, information flows between the AIs through external channels (e.g. API calls) which humans can monitor. But in I-RSI, there are fewer monitoring channels.

*Generalisation from non-AI R&D: E-RSI involves AIs performing AI R&D in the same way they perform non-AI R&D, such as drug discovery and particle physics. So we can train the AIs in those non-AI R&D domains and hope that the capabilities and propensities generalise to AI R&D.

Latency: I-RSI may be lower latency, because the metacognition is “closer” to the mesocognition in some sense, e.g. in the same chain-of-thought.

Parallelisation: E-RSI may scale better through parallelisation, because it operates in a distributed manner.

Diminishing returns: Humans have already performed lots of AI R&D, so there might be diminishing returns to E-RSI (cf. ideas are harder to find). But I-RSI would be a novel manner of improving AI cognition, so there may be low-hanging fruit leading to rapid progress.

Historical precedent: Humans performing AI R&D provides a precedent for E-RSI. So we can apply techniques for improving the security of human AI-R&D to mitigating risks from E-RSI, such as research sabotage.

Verification standards: To evaluate E-RSI, we can rely on established mechanisms for verifying human AI-R&D, e.g. scientific peer-review. However, we don’t have good methods to verify I-RSI.

*Transition continuity: I think the transition from humans leading AI-R&D to E-RSI will be gradual, as AIs take greater leadership in the workflows. But I-RSI might be more of a sudden transition.

Representation compatibility: Because of the transition continuity, I expect that E-RSI will involve internal representations which are compatible with human concepts.

*Transferability: E-RSI will produce results which can more readily be shared between instances, models, and labs. But improvements from I-RSI might not transfer between models, perhaps not even between instances.

See here for a different taxonomy — it separates E-RSI into “scaffolding-level improvement” and other types of improvements.

Do you count model training via self play as introspective RSI?

Depends. Self-play isn’t RSI; it’s just a way to generate data. An AI could improve using the data with I-RSI (e.g. the AI thinks to itself “oh, when I played against myself this reasoning heuristic worked, I should remember that”) or via E-RSI (e.g. the AI runs statistical analysis on the data, finds weak spots, and tests different patches).

If you just use the data to get gradients to update the weights, then I wouldn’t count that as RSI.
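To make the contested case concrete, here is a minimal sketch of self-play where the generated data is used only to update parameters. It is a toy, not anything from the thread: the game (single-pile Nim, take 1 to 3 stones, whoever takes the last stone wins), the tabular value update standing in for a gradient step, and the names `train_self_play` and `greedy_move` are all assumptions chosen for illustration.

```python
import random


def train_self_play(pile=12, episodes=20000, lr=0.5, epsilon=0.3, seed=0):
    """Learn single-pile Nim purely from games the policy plays against itself."""
    rng = random.Random(seed)
    # q[n][a]: estimated value, for the player to move, of taking a stones from n.
    q = {n: {a: 0.0 for a in (1, 2, 3) if a <= n} for n in range(1, pile + 1)}
    for _ in range(episodes):
        n = rng.randint(1, pile)  # random starting pile so every state gets visited
        while n > 0:
            actions = list(q[n])
            if rng.random() < epsilon:
                a = rng.choice(actions)  # explore
            else:
                a = max(actions, key=q[n].get)  # exploit current estimates
            rest = n - a
            # Taking the last stone wins (+1). Otherwise the opponent, which is
            # the same table, moves next, and its best value is our loss.
            target = 1.0 if rest == 0 else -max(q[rest].values())
            # Tabular stand-in for a gradient step on squared error.
            q[n][a] += lr * (target - q[n][a])
            n = rest
    return q


def greedy_move(q, n):
    """Return the move the learned table rates highest for a pile of n stones."""
    return max(q[n], key=q[n].get)
```

No external data enters the loop, and the policy still improves, which is the property the reply below leans on. Nothing in the loop inspects or modifies the learning procedure itself, which is the property the reply above leans on.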

I would personally count it as a form of RSI because it is a feedback loop with positive reinforcement, hence recursive, and the training doesn’t come from an external source, hence self-improvement. It can be technically phrased as a particular way of generating data (as any form of generative AI can) but that doesn’t seem very important. Likewise with the fact that the method is using stochastic gradient descent for self-improvement.

AlphaGo Zero got pretty far with that approach before plateauing, and it seems plausible that some form of self-play via reasoning RL (like this) could scale to superhuman abilities in math or coding relatively soon. A method scaling to superhuman abilities without external training data would be the typical thing expected of something called RSI but not of much else.

Of course those are just my semantic intuitions. I think you have something more like meta-learning in mind for introspective RSI. The question is how this would work on a technical level.

I think open-loop vs closed-loop is an orthogonal dimension to RSI vs not-RSI.

open-loop I-RSI: “AI plays a game and thinks about which reasoning heuristics resulted in high reward and then decides to use these heuristics more readily”

open-loop E-RSI: “AI implements different agent scaffolds for itself, and benchmarks them using FrontierMath”

open-loop not-RSI: “humans implement different agent scaffolds for the AI, and benchmark them using FrontierMath”

closed-loop I-RSI: “AI plays a game and thinks about which reasoning heuristics could’ve shortcut the decision”

closed-loop E-RSI: “humans implement different agent scaffolds for the AI, and benchmark them using FrontierMath”

closed-loop not-RSI: constitutionalAI, AlphaGo

I doubt that I actually buy such a difference. For example, the Race Ending of the AI-2027 scenario had Agent-4 study mechinterp until it was ready to understand its own cognition and to create Agent-5. However:

The AIs since Agent-3 had neuralese recurrence and memory which the humans struggled to monitor because they lacked interpretability tools;

The scenario had the companies worry about AI-related R&D most.

Placing meta- and mesocognition (or is it mesacognition?) in the same CoT doesn’t mean that the AI does any more RSI than the humans. Instead, any SOTA AI feeds a few tokens, selected from whatever external documents it creates, the results of whatever experiments it runs, and whatever it decides to write in the CoT, into the same mechanism that produces the next token. The mechanism is not replaced until someone trains the model further.

Agreed, but Agent-3 does introspective and non-introspective R&D simultaneously and learns introspective results along with non-introspective ones. Additionally, I doubt that Agent-3 would need introspection to start sabotaging research.

How does the AI-2027 scenario have the AIs introspect in any manner beyond the CoT and neuralese memory? And what does I-RSI actually mean when applied to a single instance?

Edited to add: suppose that meta-level reasoning is more like patrolling the thought process for mistakes which predictably fail to lead anywhere, like the confirmation bias. Then there is no introspective recursive SI beyond what gradient descent does after the task is tried.