Could you have stopped Chernobyl?

...or would you have needed a PhD for that?

It would appear the inaugural post caused some (off-LW) consternation! It would, after all, be a tragedy if the guard in our Chernobyl thought experiment overreacted and just unloaded his Kalashnikov on everyone in the room and the control panels as well.

And yet, we must contend with the issue that if the guard had simply deposed the leading expert in the room, perhaps the Chernobyl disaster would have been averted.

So the question must be asked: can laymen do anything about expert failures? We shall look at some man-made disasters, starting, of course, with Chernobyl itself.

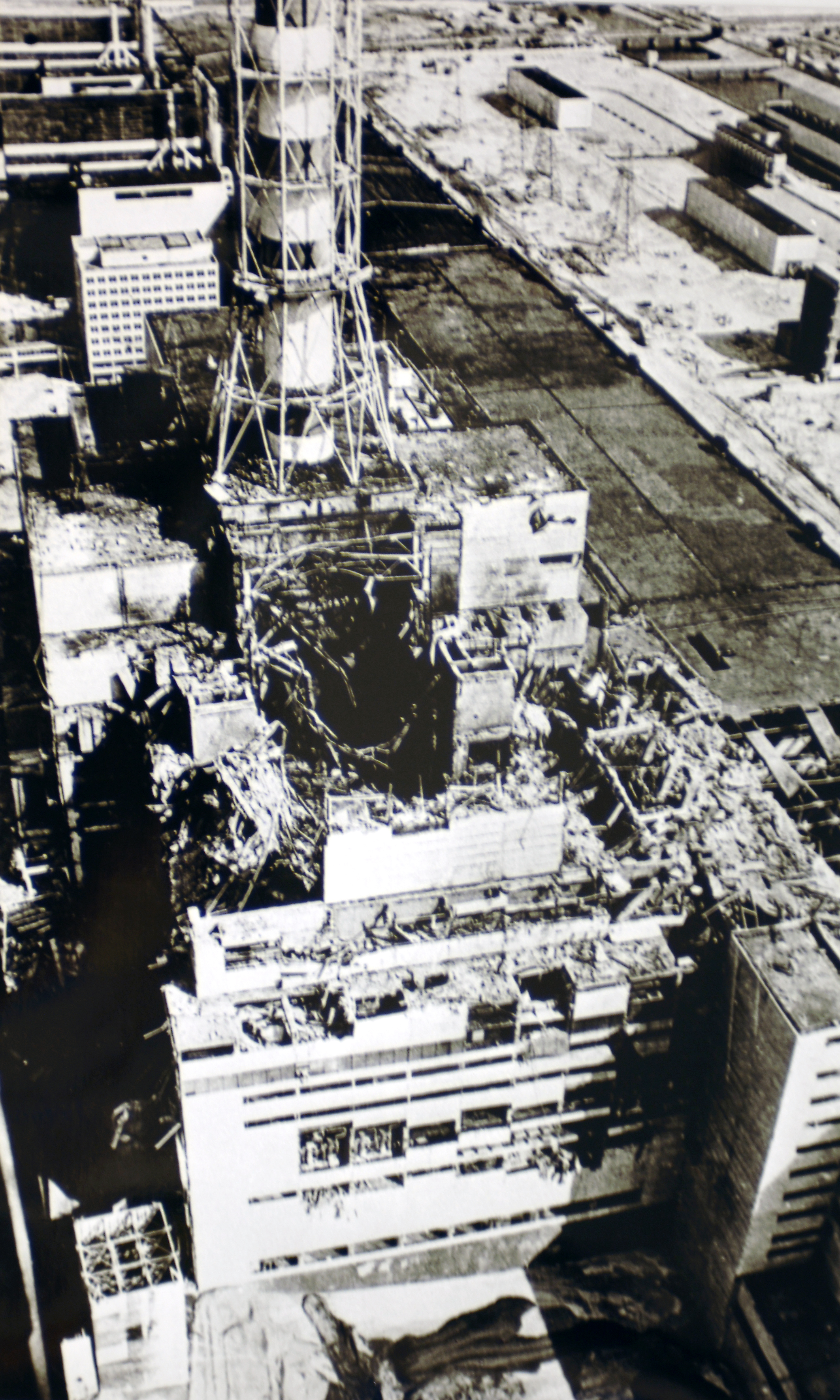

Chernobyl

To restate the thought experiment: on the night of the Chernobyl disaster, you are a guard standing outside the control room. You hear increasingly heated bickering and decide to enter and see what’s going on, perhaps right as Dyatlov proclaims there is no rule. You, as the guard, would immediately have to choose whom to listen to: the technicians, or at least the ones who speak up and tell you something is wrong with the reactor and the test must be stopped; or Dyatlov, who tells you nothing is wrong, that the test must continue, and that you should toss the recalcitrant technicians into the infirmary.

If you listen to Dyatlov, the Chernobyl disaster unfolds just the same as it did in history.

If you listen to the technicians and wind up tossing Dyatlov in the infirmary, what happens? Well, perhaps the technicians manage to fix the reactor. Perhaps they don’t. But if they do, they won’t get a medal. Powerful interests were invested in that test being completed on that night, and some unintelligible techno-gibberish from the technicians will not necessarily convince them that a disaster was narrowly averted. Heads will roll, and not the guilty ones.

This has broader implications that will be addressed later on, but while tossing Dyatlov in the infirmary would not have been enough to really prevent disaster, it seems like it would have worked on that night. To argue that the solution is not actually as simple as evicting Dyatlov is not the same as saying that Dyatlov should not have been evicted: to think something is seriously wrong and yet obey is hopelessly akratic.

But for now we move to a scenario more salvageable by individuals.

The Challenger

The Challenger disaster, like Chernobyl, was not unforeseen. Morton-Thiokol engineer Roger Boisjoly had raised red flags about the faulty O-rings that led to the loss of the shuttle and the deaths of seven people as early as six months before the disaster. For most of those six months, his warning, as well as those of other engineers, went unheeded. Eventually, a task force was convened to find a solution, but it quickly became apparent that the task force was a toothless, do-nothing committee.

Even so, Eliezer Yudkowsky, a leading figure in AI safety, has held up the Challenger as a failure that showcases hindsight bias, the mistaken belief that a past event was more predictable than it actually was:

Viewing history through the lens of hindsight, we vastly underestimate the cost of preventing catastrophe. In 1986, the space shuttle Challenger exploded for reasons eventually traced to an O-ring losing flexibility at low temperature (Rogers et al. 1986). There were warning signs of a problem with the O-rings. But preventing the Challenger disaster would have required, not attending to the problem with the O-rings, but attending to every warning sign which seemed as severe as the O-ring problem, without benefit of hindsight.

This is wrong. There were no other warning signs as severe as the O-rings. Nothing else made an engineer this heated the day before launch (from Boisjoly’s obituary):

But it was one night and one moment that stood out. On the night of Jan. 27, 1986, Mr. Boisjoly and four other Thiokol engineers used a teleconference with NASA to press the case for delaying the next day’s launching because of the cold. At one point, Mr. Boisjoly said, he slapped down photos showing the damage cold temperatures had caused to an earlier shuttle. It had lifted off on a cold day, but not this cold.

“How the hell can you ignore this?” he demanded.

How the hell indeed. In an unprecedented turn, in that meeting NASA management was blithe enough to reject an explicit no-go recommendation from Morton-Thiokol management:

During the go/no-go telephone conference with NASA management the night before the launch, Morton Thiokol notified NASA of their recommendation to postpone. NASA officials strongly questioned the recommendations, and asked (some say pressured) Morton Thiokol to reverse their decision.

The Morton Thiokol managers asked for a few minutes off the phone to discuss their final position again. The management team held a meeting from which the engineering team, including Boisjoly and others, were deliberately excluded. The Morton Thiokol managers advised NASA that their data was inconclusive. NASA asked if there were objections. Hearing none, NASA decided to launch the STS-51-L Challenger mission.

Historians have noted that this was the first time NASA had ever launched a mission after having received an explicit no-go recommendation from a major contractor, and that questioning the recommendation and asking for a reconsideration was highly unusual. Many have also noted that the sharp questioning of the no-go recommendation stands out in contrast to the immediate and unquestioning acceptance when the recommendation was changed to a go.

Contra Yudkowsky, the Challenger disaster is clearly not a good example of how expensive it can be to prevent catastrophe, since all prevention would have taken was NASA management doing their jobs. It is important to note, though, that Yudkowsky’s overarching point in that paper, that we have all sorts of cognitive biases clouding our thinking on existential risks, still stands.

But to return to Boisjoly: in his obituary, he was remembered as “Warned of Shuttle Danger”. A fairly terrible epitaph. He and the engineers who had reported the O-ring problem had to bear the guilt of failing to stop the launch. At least one of them carried that weight for 30 years. It seems like they could have done more. They could have refused to be shut out of the final meeting where Morton-Thiokol management bent the knee to NASA, even if that took bloodied manager noses. And if that failed, why, they were engineers. They knew the actual physical process necessary for a launch to occur. They could also have talked to the astronauts. Bottom line: with some ingenuity, they could have disrupted the launch.

As with Chernobyl, we yet again come to the problem that even though actions that were eyebrow-raising (at the time) could have prevented the disaster, they could not have fixed the disaster-generating system in place at NASA. And as with Chernobyl: even so, they should have tried.

We now move on to a disaster where there was no clear solution, not even an out-of-the-ordinary one.

Beirut

It has been a year since the 2020 Beirut explosion, and there still isn’t a clear answer as to why it happened. We have the mechanical explanation, but why were there thousands of tons of Nitroprill (ammonium nitrate) in some rundown warehouse in a port to begin with?

In a story straight out of The Outlaw Sea, the MV Rhosus, a vessel with a convoluted 27-year history, was chartered by the Fábrica de Explosivos Moçambique to carry the ammonium nitrate from Batumi, Georgia to Beira, Mozambique. Due to either mechanical issues or a failure to pay the Suez Canal tolls, the Rhosus was forced to dock in Beirut, where the port authorities declared it unseaworthy and forbade it to leave. The ship’s mysterious owner, Igor Grechushkin, declared himself bankrupt and left the crew and the ship to their fate. The Mozambican charterers gave up on the cargo, and the Beirut port authorities seized the ship some months later. When the crew were finally freed about a year after the detention (yes, crews of ships abandoned by their owners must remain aboard), the ammonium nitrate was moved into Hangar 12 at the port, where it would remain until the blast six years later. The Rhosus itself remained derelict in the port of Beirut until it sank due to a hole in the hull.

During those years it appears that practically all the authorities in Lebanon played hot potato with the nitrate. Lots of correspondence occurred. The harbor master to the director of Land and Maritime Transport. The Case Authority to the Ministry of Public Works and Transport. State Security to the president and prime minister. Whenever the matter was not ignored, it ended with someone deciding it was not their problem or that they did not have the authority to act on it. Quite a lot of the people who were aware of it actually did have the authority to act unilaterally on the matter, but the logic of the immoral maze (seriously, read that) precludes such acts.

There is no point in this very slow explosion in which disaster could have been avoided by manhandling some negligent or reckless authority (erm, pretend that said “avoided via some lateral thinking”). Much like with Chernobyl, the entire government was guilty here.

What does this have to do with AI?

The overall project of AI research exhibits many of the signs of the disasters discussed above. We’re not currently in the night of Chernobyl: we’re instead designing the RBMK reactor. Even at that early stage, there were Dyatlovs: they were the ones who, deciding that their careers and keeping their bosses pleased were most important, implemented, and signed off on, the design flaws of the RBMK. And of course there were, because in the mire of dysfunction that was the Soviet Union, Dyatlovism was a highly effective strategy. As in the Soviet Union, plenty of people in AI, even prominent ones, are ultimately more concerned with their careers than with any long-term disasters their work, and in particular their attitude, may lead to. The attitude is especially relevant here: while there may not be a clear path from their work to disaster (is that so?), the attitude that AI work, like nearly all the rest of computer science, is not life-critical makes it much harder to implement regulations, whether external or internal, on precisely how AI research is to be conducted.

While better breeds of scientist, such as biologists, have had the “What the fuck am I summoning?” moment and collectively decided how to proceed safely, a similar attempt in AI seems to have accomplished nothing.

Like with Roger Boisjoly and the Challenger, some of the experts involved are aware of the danger. Just like with Boisjoly and his fellow engineers, it seems like they are not ready to do whatever it takes to prevent catastrophe.

Instead, as in Beirut, memos and letters are sent. Will they result in effective action? Who knows?

Perhaps the most illuminating thought experiment for AI safety advocates/researchers, and indeed, us laymen, is not that of roleplaying as a guard outside the control room at Chernobyl, but rather: you are in Beirut in 2019.

How do you prevent the explosion?

Precisely when should one punch the expert?

The title of this section was the original title of the piece. Though it was decided to dial it back a little, it remains as the title of this section, if only to serve as a reminder that the dial does go to 11. Fortunately, there is a precise answer to that question: when the expert’s leadership or counsel poses an imminent threat. There are such moments in some disasters, but not all, Beirut being a clear example of a failure with no such critical moment. Should AI fail catastrophically, it will likely be the same as Beirut: lots of talk in the lead-up, when some sort of action was what was actually needed. So why not do away entirely with such an inflammatory framing of the situation?

Why, because we laymen need to develop the morale and the spine to actually make things happen. We need to learn from the Hutu:

The pull of akrasia is very strong. Even I have a part of me saying “relax, it will all work itself out”. That is akrasia, as there is no compelling reason to expect that to be the case here.

But what after we “hack through the opposition” as Peter Capaldi’s The Thick of It character, Malcolm Tucker, put it? What does “hack through the opposition” mean in this context? At this early stage I can think of a few answers:

1. There is such a thing as safety science, with leading experts in it. They should be made aware of the risk from AI, and of scientific existential risks in general, as it seems they could figure some things out: in particular, how to make certain research communities engage with the safety-critical nature of their work.

2. A second Asilomar conference on AI needs to be convened. This time it needs teeth, and it needs to involve many more AI researchers, as well as the public.

3. Make it clear to those who deny or are on the fence about AI risk that the (not-so-great) debate is over, and it’s time to get real about this.

4. Develop and proliferate the antidyatlovist worldview to actually enforce the new line.

Points 3 and 4 can only sound excessive to those who are in denial about AI risk, or those to whom AI risk constitutes a mere intellectual pastime.

These are only sketches, though. We are indeed trying to prevent the Beirut explosion, and just as in that scenario, there is no clear formula or plan to follow.

This Guide is highly speculative. You could say we fly by the seat of our pants. But we will continue, we will roll with the punches, and we will win.

After all, we have to.

You can also subscribe on Substack.

There was no bickering in the Chernobyl control room (see, for example, the interview with Boris Stolyarchuk). Dyatlov’s portrayal in the TV series is completely fictional, for dramatic purposes. The depiction of the events during the night of the accident is also significantly fictionalized; see the INSAG-7 report for a factual description of the events. At no moment was there any indication that something was wrong with the reactor. If Dyatlov had dropped dead that morning, the accident would most probably have happened anyway a few weeks or months later.

Will keep this in mind moving forward. The Beirut analogy is better at any rate.

Well, INSAG-7 is 148 pages that I will not read in full, as Chernobyl is not my primary interest. But I did find this in it:

Sounds like HBO’s Chernobyl only erred in making it seem like Dyatlov alone was negligent that night, as opposed to everyone in the room. But even so, the series does show that the big takeaway was that the USSR as a whole was negligent.

Note that the more real, second-order disaster resulting from Chernobyl may have been reduced use of nuclear power (assuming the accident influenced antinuclear sentiment). Likewise, I’m guessing the Challenger disaster had a negative influence on the U.S. space program. Covid lockdowns also have this quality of not tracking the cost-benefit of their continuation. Human reactions to disasters can be worse than the disasters themselves, especially if the costs of those reactions are hidden. I don’t know how this translates to AI safety, but it merits thought.

Eh. We can afford to take things slow. What you describe are barely costs.

Here are some other failure modes that might be important:

The Covid origin story (https://www.facebook.com/yudkowsky/posts/10159653334879228): some sort of AI research moratorium is imposed in the US, and the problem appears to be solved, but in reality the research is just offshored, and then it explodes in an unpredicted way.

The Archegos/Credit Suisse blowup (https://www.bloomberg.com/opinion/articles/2021-07-29/archegos-was-too-busy-for-margin-calls): a special committee is set up to regulate AI-related risks, and there is a general consensus that something has to be done, but action is bogged down by bureaucracy, and key stakeholders are unresponsive for a period that looks reasonable at first. However, the explosive nature of the AI development process is not taken into account, and the whole thing blows up much faster than the control system can scram.

More practically, can you suggest specific topics to discuss at the 5 Sept ACX online meetup with Sam Altman, the CEO of OpenAI?

While there was a moratorium on gain-of-function research, funding continued in spite of it. It wasn’t just a matter of US funding being offshored.

I’ll be there. Been thinking about what precisely to ask. Probably something about how it seems we don’t take AI risk seriously enough. This is assuming the current chip shortage has not, in fact, been deliberately engineered by the Future of Humanity Institute, of course...

Preventing a one-off disastrous experiment like Chernobyl isn’t analogous to the coming problem of ensuring the safety of a whole field whose progress is going to continue to be seen as crucial for economic, humanitarian, military, etc. reasons. It’s not even like there’s a global AI control room where one could imagine panicky measures making a difference. The only way to make things work out in the long term is to create a consensus about safety in the field. If experts feel like safety advocates are riling up mobs against them, it will just harden their view of the situation as nothing more than a conflict between calm, reasonable scientists and e.g. over-excitable doomsday enthusiasts unbalanced by fictional narratives.

I highlight later on that Beirut is a much more pertinent situation. No control room there either, just failures of coordination and initiative.

Also, experts are not omnipotent. At this point, I don’t think there are arguments that will convince the ones who are deniers, which is not all of them. It is now a matter of reining in that field, and others.

How dare you suggest that fearless Fauci deserves a punch in the nose from a red-capped brigand!

Fire prevention, or proper structural means of keeping it in small enough buckets, could prevent disasters like Texas City; West, Texas; and Beirut: https://pubmed.ncbi.nlm.nih.gov/26547622/

That’s good to know, though the question remains why nobody did that in Beirut.

I don’t think reflexive circling-the-wagons around the experts happens in every context. Certainly not much of that happens for economists or psychometricians...

A simple single act of rebellion (punching the expert) might, at best, result in ‘start the experiment while I go get some ice, man, wtf is up with that guard’ or maybe ‘let’s do it tomorrow’.

I think that AN (ammonium nitrate) explosions are often preceded by this conversation:

Intern: “Whoa that’s a lot of AN in a pile, are you sure it’s safe to have an explosive stored like that? Don’t best practices say we should store it in separate containers below a particular critical size?”

Boss: “But then I can’t store it in a giant silo that’s easy to load an arbitrary quantity onto trucks from.” / “But space here in the hold/special shipping warehouse is at a premium, all that empty space is expensive. It’ll be fiiiine, besides that best practice is dumb anyway, everyone knows it’s only explosive if u mix it with fuel oil or whatever, it’ll be fine”

Intern: “What if there’s a fire?”

Boss: “The fire department will have it under control, it’s just fertilizer. Besides, that one fire last year was a fluke, it won’t happen again”

AN itself is an explosive (its chemical structure contains both a fuel and an oxidizer), but it is too insensitive to use as such. Like many explosives, its sensitivity depends significantly on temperature and pressure. Critically, AN is a thermal insulator, and with enough heat, it decomposes exothermically. So, in a big enough, hot enough pile of AN, some of the AN in the middle is decomposing, the pile is trapping the heat, and that makes the pile that much hotter.

The best guesses I’ve seen for how the disasters develop involve that hot spot eventually getting hot enough, confined enough, and mixed with the degradation products of AN enough that it goes boom, and the explosion propagates through the entire hot, somewhat confined/compressed pile, creating impressive scenes like the one in Beirut.
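The heat-trapping mechanism described above is the classic thermal-runaway picture, often called the Semenov model. As a toy illustration only, here is a minimal sketch assuming a lumped, dimensionless version of it: d(theta)/dt = delta·exp(theta) − theta, where theta is the temperature rise above ambient, the exponential term is heat released by decomposition, and the linear term is heat lost through the pile's surface. The function name, the parameter `delta` and all numbers are hypothetical and are not measured properties of ammonium nitrate. `delta` stands in for pile size, because heat generation scales with volume while heat loss scales with surface area. For this equation a bounded steady temperature exists only when delta ≤ 1/e ≈ 0.37; above that, generation outruns loss at every temperature.

```python
import math


def final_temperature_rise(delta: float, t_end: float = 50.0, dt: float = 1e-3,
                           cap: float = 50.0) -> float:
    """Toy Semenov-style thermal-runaway model (dimensionless, illustrative only).

    d(theta)/dt = delta * exp(theta) - theta
      delta * exp(theta): heat from decomposition, growing exponentially
                          with temperature (Arrhenius-like)
      theta:              heat lost through the surface, linear in the
                          temperature rise above ambient

    `delta` is a hypothetical "pile size" knob: a bigger pile traps more heat
    relative to its surface, so it has a bigger delta.
    Returns theta at t_end, or math.inf if theta exceeds `cap` (runaway).
    """
    theta = 0.0  # the pile starts at ambient temperature
    for _ in range(int(t_end / dt)):
        theta += dt * (delta * math.exp(theta) - theta)  # forward Euler step
        if theta > cap:
            return math.inf  # generation has outrun loss: treat as runaway
    return theta


if __name__ == "__main__":
    critical = 1 / math.e  # Semenov critical value for this dimensionless equation
    for delta in (0.2, 0.3, 0.5, 0.8):
        rise = final_temperature_rise(delta)
        status = "runaway" if math.isinf(rise) else f"settles near {rise:.2f}"
        side = "below" if delta < critical else "above"
        print(f"delta={delta} ({side} 1/e): {status}")
```

In this toy model, small piles settle at a modest temperature rise while piles past the threshold run away. That matches the intern's point that storing AN in separate containers below a critical size keeps each pile on the safe side.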

The West, Texas explosion happened within a month of the Boston bombing. It killed more people, destroyed more property, and was completely preventable. The surviving Boston bomber is in prison; nobody who made the decisions at the fertilizer plant suffered any consequences.

I think I’m the only one who found that confusing.

It makes sense because we don’t have good stories that drill negligence → bad stuff, or incompetence → bad stuff, into our heads. When those things happen, it’s just noise.

We have bad guys → bad stuff instead. Which is why HBO’s Chernobyl is rather important: that is definitely a very well produced negligence → bad stuff story.

Points 3 and 4 can only sound excessive

Where is point 4?

As Ikaxas said. It’s now fixed.

Ah, I think the fact that there’s an image after the first point is causing the numbered list to be numbered 1,1,2,3.