Linked is a new working paper from Nick Bostrom, of Superintelligence fame. It primarily analyzes optimal pause strategies in AI research, with the aim of maximizing the number of human lives saved by weighing x-risk against the benefit of ASI developing biological immortality sooner.

Abstract: (emphasis mine)

Developing superintelligence is not like playing Russian roulette; it is more like undergoing risky surgery for a condition that will otherwise prove fatal. We examine optimal timing from a person-affecting stance (and set aside simulation hypotheses and other arcane considerations). Models incorporating safety progress, temporal discounting, quality-of-life differentials, and concave QALY utilities suggest that even high catastrophe probabilities are often worth accepting. Prioritarian weighting further shortens timelines. For many parameter settings, the optimal strategy would involve moving quickly to AGI capability, then pausing briefly before full deployment: swift to harbor, slow to berth. But poorly implemented pauses could do more harm than good.

The analysis is, interestingly, deliberately from a “normal person” viewpoint:[1]

- It includes only “mundane” considerations (just saving human lives), as opposed to “arcane” ones (AI welfare, weird decision theory, anthropics, etc.).
- It considers only currently living humans, explicitly setting aside longtermist considerations about large numbers of future human lives.
- It assumes that a biologically immortal life lasts a mere 1400 years, based on mortality rates for healthy 20-year-olds.
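The 1400-year figure is easy to sanity-check under one assumption of my own (not necessarily the paper's exact method): a biologically immortal person still faces a constant annual risk of death from accidents, violence, and the like, equal to that of a healthy 20-year-old. With a constant hazard h, lifetime is exponentially distributed with mean 1/h, so a 1400-year expectation corresponds to h = 1/1400, about 0.07% per year. A minimal sketch:

```python
import math

# Assumption (mine, for illustration): after biological immortality, death
# comes only from a constant annual hazard h, so lifetime ~ Exponential(h).


def expected_lifespan(annual_hazard: float) -> float:
    """Mean of an exponential lifetime with the given constant hazard."""
    return 1.0 / annual_hazard


def survival_probability(years: float, annual_hazard: float) -> float:
    """Probability of still being alive after `years` under a constant hazard."""
    return math.exp(-annual_hazard * years)


# Hazard implied by a 1400-year expected lifespan.
h = 1 / 1400
print(f"implied annual hazard: {h:.5%}")
print(f"expected lifespan: {expected_lifespan(h):.0f} years")
print(f"P(alive after 1000 years): {survival_probability(1000, h):.2f}")
```

Under this reading, only roughly half of such people would still be alive after 1000 years, which is why “immortal” here means a long but finite expected lifespan.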

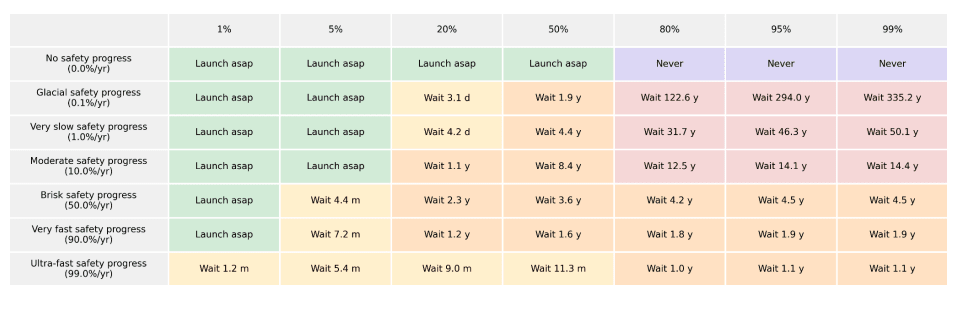

It results in tables like this:

The results on the whole imply that under a fairly wide range of scenarios, a pause could be useful, but likely should be short.

However, Bostrom also says he doesn’t think this work implies specific policy prescriptions, because it makes too many assumptions and is too simplified. Instead, he says his main purpose is simply to highlight key considerations and tradeoffs.
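To see how this kind of model can favor a pause that is real but short, here is a deliberately stripped-down toy version. It is my own construction, not Bostrom's model: it ignores discounting, quality-of-life differences, concave utilities, and prioritarian weighting, and every parameter name and value is hypothetical. Each year of pausing costs people alive today their background mortality m, while shrinking the catastrophe probability p(T) = p0 * exp(-r * T). Maximizing V(T) = exp(-m * T) * (1 - p(T)) gives a simple stopping rule: pause until p(T) falls to m / (m + r), then deploy.

```python
import math

# Toy model, hypothetical and NOT the model in Bostrom's paper.
#   m  : annual background mortality of people alive today
#   p0 : catastrophe probability if ASI were deployed immediately
#   r  : rate at which pausing reduces that risk, p(T) = p0 * exp(-r * T)
# A person alive now benefits only if they survive the pause, exp(-m * T),
# and deployment then goes well, 1 - p(T).


def risk_after_pause(T: float, p0: float, r: float) -> float:
    """Catastrophe probability after pausing for T years."""
    return p0 * math.exp(-r * T)


def value(T: float, m: float, p0: float, r: float) -> float:
    """Probability that a person alive today reaches a successful deployment."""
    return math.exp(-m * T) * (1 - risk_after_pause(T, p0, r))


def optimal_pause(m: float, p0: float, r: float) -> float:
    """Pause length T >= 0 that maximizes value().

    Setting dV/dT = 0 gives p(T*) = m / (m + r): keep pausing until the
    remaining risk falls to that threshold, then deploy.
    """
    threshold = m / (m + r)
    if p0 <= threshold:
        return 0.0  # risk is already at or below the threshold: deploy now
    return math.log(p0 / threshold) / r


# Illustrative, made-up parameters.
m, p0, r = 0.01, 0.20, 0.10
T = optimal_pause(m, p0, r)
print(f"optimal pause: {T:.1f} years")
print(f"residual risk: {risk_after_pause(T, p0, r):.3f}")
print(f"value now vs. after pause: {value(0, m, p0, r):.3f} vs. {value(T, m, p0, r):.3f}")
```

With these made-up values the rule gives a pause of roughly eight years and then accepts a residual risk of about 9%, loosely echoing the abstract's point that fairly high catastrophe probabilities can be worth accepting. Raising m (an older population, say) raises the risk threshold and shortens the optimal pause.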

Some personal commentary:

Assuming we don’t get a fast takeoff, there will probably be a period in which biomedical results from AI look extremely promising, biohackers are taking AI-designed peptides, and so forth.[2] This would likely spark a wider public debate about rushing to AGI/ASI for its health benefits, and the sort of analysis Bostrom provides here may end up shaping part of that debate. It’s worth noting that, in the West at least, politics is something of a gerontocracy, and older politicians will have an extra incentive to rush.

I think the biggest weaknesses of Bostrom’s treatment, though I suppose these would fall under his “arcane” category, are (a) downplaying how much people care about the continuation of the human species, separate from their own lives or the lives of family and loved ones; and (b) ignoring the possibility of s-risks worse than extinction. I’m not sure either is really outside the Overton window of public debate, especially if you frame s-risks primarily in terms of an authoritarian takeover by political enemies (not exactly the worst s-risk, but I think “permanent, total victory for my ideological enemies” is a concrete bad end that people can imagine).

- ^

Except for the assumptions that AGI/ASI are possible and that aligned ASI could deliver biological immortality quickly. But, you know, might as well start by accepting true facts.

- ^

LLMs are of course already providing valuable medical advice, to the point that there was a minor freakout not long ago when a rumor went around that ChatGPT would stop offering it.

Duplicate: https://www.lesswrong.com/posts/2trvf5byng7caPsyx/optimal-timing-for-superintelligence-mundane-considerations

Ah, darn. I actually searched “nick bostrom” in the LW search bar and that didn’t come up? I guess I should’ve looked for a user page.

That’s kind of on us; the search is mediocre. Must get around to improving it sometime.